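Preface

This post uses Hive metadata collection as an example to walk through the workflow and the source code.

Install Hadoop and Hive beforehand so that you have an environment to test with.

Atlas official site: https://atlas.apache.org/#/HookHive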

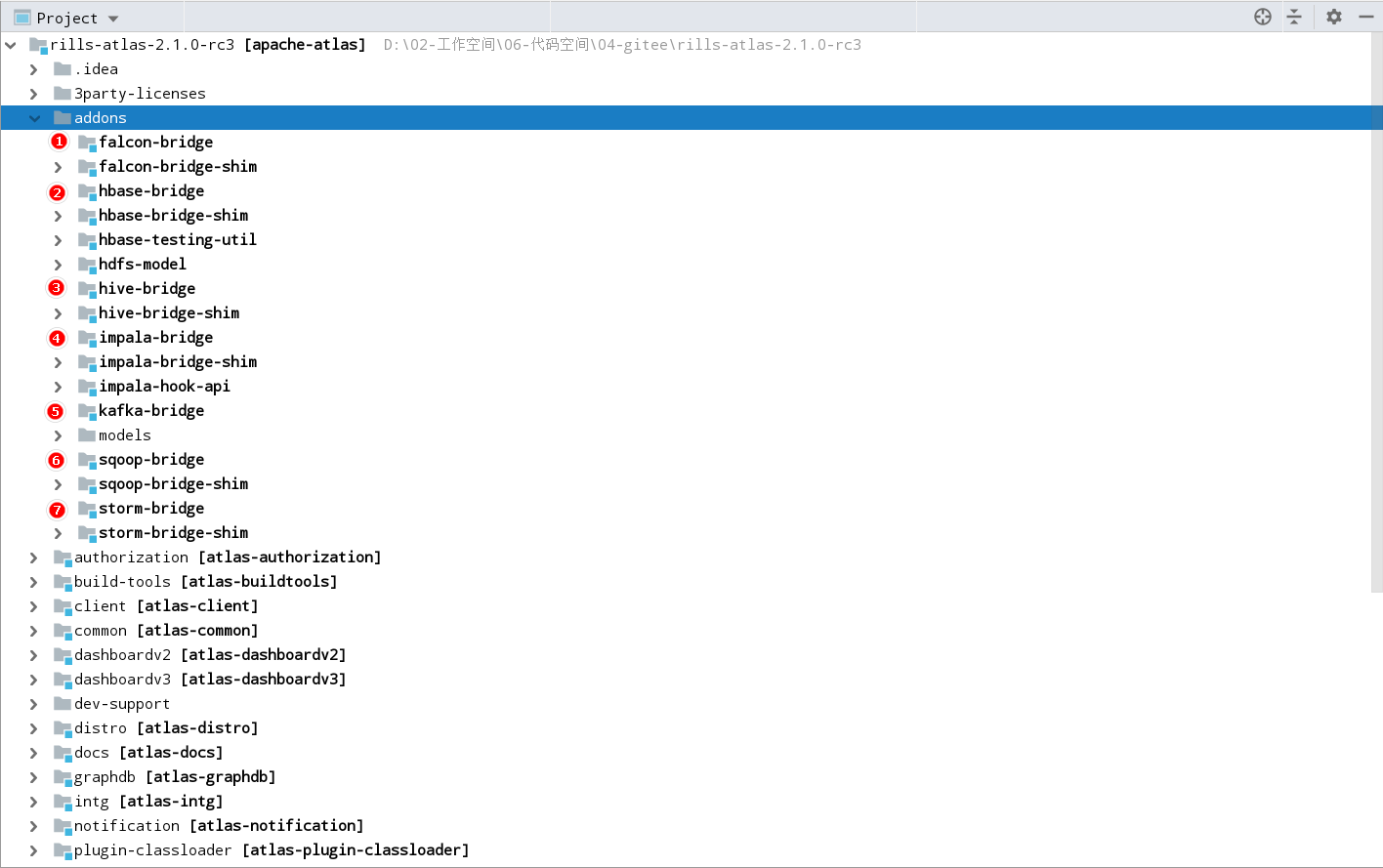

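Metadata sources supported by Atlas

What is a Hive hook?

A hook is a mechanism for intercepting events, messages, or function calls during processing. In that sense, Hive hooks make it possible to extend Hive and integrate external functionality with it.

How the Hive hook works

Hive component -> Atlas Hive hook -> Kafka -> Atlas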
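The Kafka hop in this chain can be checked from the command line. The sketch below is only an illustration: it assumes the hook publishes to a topic named ATLAS_HOOK (the default in Atlas configurations I am aware of, but your atlas-application.properties may override it), and the helper name `has_hook_topic` and the broker address in the usage comment are hypothetical.

```shell
#!/bin/sh
# Reads Kafka topic names from stdin (one per line) and reports whether the
# hook topic is among them.
# ASSUMPTION: ATLAS_HOOK is the topic the Hive hook writes to; pass another
# name as $1 if your configuration differs.
has_hook_topic() {
  topic="${1:-ATLAS_HOOK}"
  if grep -qxF "$topic"; then
    echo "topic present: $topic"
  else
    echo "topic missing: $topic"
    return 1
  fi
}

# Example (hypothetical broker address, adjust to your Kafka setup):
#   kafka-topics.sh --list --bootstrap-server localhost:9092 | has_hook_topic
```

If the topic is missing, the hook has nowhere to publish, so table changes made in Hive would not reach Atlas even when the hook class itself loads correctly.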

Scenario for installing the Hive hook

(1) First, create a database named my_test in Hive;

(2) Install and configure the Hive hook;

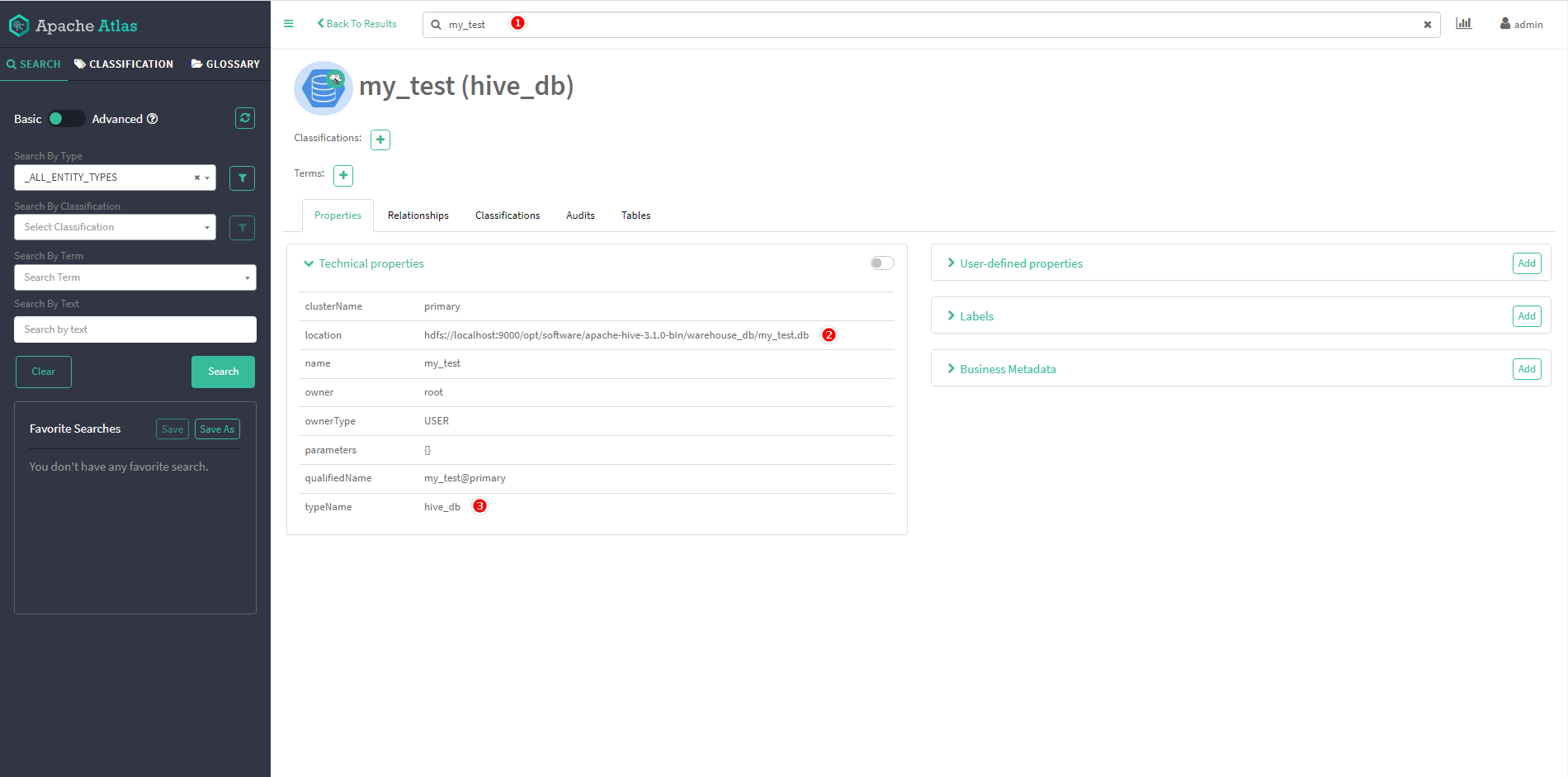

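(3) Run the import script to import Hive's existing metadata, then check in Atlas that the Hive database my_test can be found;

(4) Create a table named user_click_info in the my_test database, then check in Atlas that the Hive table user_click_info can be found.

Steps for installing the Hive hook

(1) Add the following property to hive-site.xml in the Hive installation to configure the Atlas hook: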

<property>

<name>hive.exec.post.hooks</name>

<value>org.apache.atlas.hive.hook.HiveHook</value>

<description>

Comma-separated list of post-execution hooks to be invoked for each statement.

A post-execution hook is specified as the name of a Java class which implements the

org.apache.hadoop.hive.ql.hooks.ExecuteWithHookContext interface.

</description>

</property>
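Before restarting Hive it can help to confirm that the property really ended up in hive-site.xml. The following is a rough sketch, not an XML parser: it assumes the `<name>` element appears before the matching `<value>` element, as in the snippet above, and the function name `has_atlas_hook` is made up for this example.

```shell
#!/bin/sh
# Succeeds when hive-site.xml ($1) lists the Atlas hook class as a value of
# hive.exec.post.hooks. Line-based matching only, so comments or unusual
# formatting in the XML can fool it.
has_atlas_hook() {
  awk '
    /<name>/  { in_prop = ($0 ~ /<name>[ \t]*hive\.exec\.post\.hooks[ \t]*<\/name>/) }
    /<value>/ { if (in_prop && index($0, "org.apache.atlas.hive.hook.HiveHook")) found = 1 }
    END       { exit !found }
  ' "$1"
}

# Example (Hive conf directory as printed in the import log of this post):
#   has_atlas_hook /opt/software/apache-hive-3.1.0-bin/conf/hive-site.xml && echo "hook configured"
```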

(2) Extract apache-atlas-2.1.0-hive-hook.tar.gz in the Atlas directory; this produces two directories, hook and hook-bin:

[root@hm apache-atlas-2.1.0]# pwd

/opt/software/apache-atlas-2.1.0

[root@hm apache-atlas-2.1.0]# tar -zxvf apache-atlas-2.1.0-hive-hook.tar.gz

[root@hm apache-atlas-2.1.0]# ll

total 28

drwxr-xr-x. 2 root root 4096 May 24 13:59 bin

drwxr-xr-x. 5 root root 231 Jun 1 17:09 conf

drwxr-xr-x. 5 root root 65 May 19 01:04 data

-rwxr-xr-x. 1 root root 210 Jul 8 2020 DISCLAIMER.txt

drwxr-xr-x. 8 root root 194 May 18 15:40 hbase

drwxr-xr-x 3 root root 18 Jun 5 22:25 hook

drwxr-xr-x 2 root root 28 Jun 5 22:25 hook-bin

-rwxr-xr-x. 1 root root 14289 Jul 8 2020 LICENSE

drwxr-xr-x. 2 root root 212 Jun 5 19:06 logs

drwxr-xr-x. 7 root root 107 Jul 8 2020 models

-rwxr-xr-x. 1 root root 169 Jul 8 2020 NOTICE

drwxr-xr-x. 3 root root 20 May 18 15:39 server

drwxr-xr-x. 9 root root 201 May 18 11:45 solr

drwxr-xr-x 2 root root 22 May 30 11:05 target

drwxr-xr-x. 4 root root 62 May 18 15:39 tools

[root@hm apache-atlas-2.1.0]#

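(3) Add the following setting to hive-env.sh in the Hive installation to point Hive at the third-party libraries it needs at compile/execution time, such as the Hive hook: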

# Folder containing extra libraries required for hive compilation/execution can be controlled by:

export HIVE_AUX_JARS_PATH=/opt/software/apache-atlas-2.1.0/hook/hive
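A wrong HIVE_AUX_JARS_PATH typically surfaces only later as a class-loading error, so a quick look at the directory is worthwhile. This is a minimal sketch under the assumption that the extracted hook/hive directory contains the hook's jar files somewhere beneath it; `count_hook_jars` is a hypothetical helper name.

```shell
#!/bin/sh
# Prints how many *.jar files exist under the directory given as $1
# (searched recursively). Fails if the directory does not exist.
count_hook_jars() {
  dir="$1"
  [ -d "$dir" ] || { echo "not a directory: $dir" >&2; return 1; }
  find "$dir" -name '*.jar' | wc -l | tr -d ' '
}

# Example, using the path from hive-env.sh above:
#   count_hook_jars /opt/software/apache-atlas-2.1.0/hook/hive
```

A result of 0 would suggest the archive was extracted somewhere else or the path has a typo.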

(4) Copy conf/atlas-application.properties from the Atlas installation directory into the Hive conf directory.

(5) Run the script that synchronizes Hive metadata:
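The copy in step (4) could be scripted roughly as follows. The function name `copy_atlas_conf` is hypothetical; the two example paths are the Atlas and Hive locations that appear in the terminal output of this post.

```shell
#!/bin/sh
# Copies atlas-application.properties from an Atlas installation ($1) into a
# Hive conf directory ($2). Fails if the source file is missing.
copy_atlas_conf() {
  atlas_home="$1"
  hive_conf="$2"
  src="$atlas_home/conf/atlas-application.properties"
  [ -f "$src" ] || { echo "missing: $src" >&2; return 1; }
  cp "$src" "$hive_conf/" && echo "copied to $hive_conf/atlas-application.properties"
}

# Example:
#   copy_atlas_conf /opt/software/apache-atlas-2.1.0 /opt/software/apache-hive-3.1.0-bin/conf
```

The import log further down confirms which copy is picked up: it prints the location it loaded atlas-application.properties from.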

[root@hm hook-bin]# pwd

/opt/software/apache-atlas-2.1.0/hook-bin

[root@hm hook-bin]# ll

total 8

-rwxr-xr-x 1 root root 4304 Jun 5 22:25 import-hive.sh

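# Run the metadata import script (it prompts for the Atlas username and password: admin/admin). This synchronizes the metadata of data that already exists in Hive.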

[root@hm hook-bin]# ./import-hive.sh

(6) The metadata import succeeds (Hive Meta Data imported successfully!!!):

[root@hm hook-bin]#

[root@hm hook-bin]# pwd

/opt/software/apache-atlas-2.1.0/hook-bin

[root@hm hook-bin]# ./import-hive.sh

Using Hive configuration directory [/opt/software/apache-hive-3.1.0-bin/conf]

Log file for import is /opt/software/apache-atlas-2.1.0/logs/import-hive.log

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/software/apache-hive-3.1.0-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/software/hadoop-3.1.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

2023-06-05T22:47:05,338 INFO [main] org.apache.atlas.ApplicationProperties - Looking for atlas-application.properties in classpath

2023-06-05T22:47:05,342 INFO [main] org.apache.atlas.ApplicationProperties - Loading atlas-application.properties from file:/opt/software/apache-hive-3.1.0-bin/conf/atlas-application.properties

2023-06-05T22:47:05,381 INFO [main] org.apache.atlas.ApplicationProperties - Using graphdb backend 'janus'

2023-06-05T22:47:05,381 INFO [main] org.apache.atlas.ApplicationProperties - Using storage backend 'hbase2'

2023-06-05T22:47:05,381 INFO [main] org.apache.atlas.ApplicationProperties - Using index backend 'solr'

2023-06-05T22:47:05,382 INFO [main] org.apache.atlas.ApplicationProperties - Atlas is running in MODE: PROD.

2023-06-05T22:47:05,382 INFO [main] org.apache.atlas.ApplicationProperties - Setting solr-wait-searcher property 'true'

2023-06-05T22:47:05,382 INFO [main] org.apache.atlas.ApplicationProperties - Setting index.search.map-name property 'false'

2023-06-05T22:47:05,382 INFO [main] org.apache.atlas.ApplicationProperties - Setting atlas.graph.index.search.max-result-set-size = 150

2023-06-05T22:47:05,382 INFO [main] org.apache.atlas.ApplicationProperties - Property (set to default) atlas.graph.cache.db-cache = true

2023-06-05T22:47:05,382 INFO [main] org.apache.atlas.ApplicationProperties - Property (set to default) atlas.graph.cache.db-cache-clean-wait = 20

2023-06-05T22:47:05,382 INFO [main] org.apache.atlas.ApplicationProperties - Property (set to default) atlas.graph.cache.db-cache-size = 0.5

2023-06-05T22:47:05,382 INFO [main] org.apache.atlas.ApplicationProperties - Property (set to default) atlas.graph.cache.tx-cache-size = 15000

2023-06-05T22:47:05,382 INFO [main] org.apache.atlas.ApplicationProperties - Property (set to default) atlas.graph.cache.tx-dirty-size = 120

Enter username for atlas :- admin

Enter password for atlas :-

2023-06-05T22:47:11,008 INFO [main] org.apache.atlas.AtlasBaseClient - Client has only one service URL, will use that for all actions: http://localhost:21000

2023-06-05T22:47:11,050 INFO [main] org.apache.hadoop.hive.conf.HiveConf - Found configuration file file:/opt/software/apache-hive-3.1.0-bin/conf/hive-site.xml

2023-06-05T22:47:12,117 WARN [main] org.apache.hadoop.util.NativeCodeLoader - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2023-06-05T22:47:12,289 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - 0: Opening raw store with implementation class:org.apache.hadoop.hive.metastore.ObjectStore

2023-06-05T22:47:12,311 WARN [main] org.apache.hadoop.hive.metastore.ObjectStore - datanucleus.autoStartMechanismMode is set to unsupported value null . Setting it to value: ignored

2023-06-05T22:47:12,318 INFO [main] org.apache.hadoop.hive.metastore.ObjectStore - ObjectStore, initialize called

2023-06-05T22:47:12,318 INFO [main] org.apache.hadoop.hive.metastore.conf.MetastoreConf - Found configuration file file:/opt/software/apache-hive-3.1.0-bin/conf/hive-site.xml

2023-06-05T22:47:12,319 INFO [main] org.apache.hadoop.hive.metastore.conf.MetastoreConf - Unable to find config file hivemetastore-site.xml

2023-06-05T22:47:12,319 INFO [main] org.apache.hadoop.hive.metastore.conf.MetastoreConf - Found configuration file null

2023-06-05T22:47:12,320 INFO [main] org.apache.hadoop.hive.metastore.conf.MetastoreConf - Unable to find config file metastore-site.xml

2023-06-05T22:47:12,320 INFO [main] org.apache.hadoop.hive.metastore.conf.MetastoreConf - Found configuration file null

2023-06-05T22:47:12,520 INFO [main] DataNucleus.Persistence - Property datanucleus.cache.level2 unknown - will be ignored

2023-06-05T22:47:12,911 INFO [main] com.zaxxer.hikari.HikariDataSource - HikariPool-1 - Starting...

2023-06-05T22:47:12,919 WARN [main] com.zaxxer.hikari.util.DriverDataSource - Registered driver with driverClassName=org.apache.derby.jdbc.EmbeddedDriver was not found, trying direct instantiation.

2023-06-05T22:47:13,108 INFO [main] com.zaxxer.hikari.pool.PoolBase - HikariPool-1 - Driver does not support get/set network timeout for connections. (Feature not implemented: No details.)

2023-06-05T22:47:13,115 INFO [main] com.zaxxer.hikari.HikariDataSource - HikariPool-1 - Start completed.

2023-06-05T22:47:13,142 INFO [main] com.zaxxer.hikari.HikariDataSource - HikariPool-2 - Starting...

2023-06-05T22:47:13,143 WARN [main] com.zaxxer.hikari.util.DriverDataSource - Registered driver with driverClassName=org.apache.derby.jdbc.EmbeddedDriver was not found, trying direct instantiation.

2023-06-05T22:47:13,148 INFO [main] com.zaxxer.hikari.pool.PoolBase - HikariPool-2 - Driver does not support get/set network timeout for connections. (Feature not implemented: No details.)

2023-06-05T22:47:13,151 INFO [main] com.zaxxer.hikari.HikariDataSource - HikariPool-2 - Start completed.

2023-06-05T22:47:13,486 INFO [main] org.apache.hadoop.hive.metastore.ObjectStore - Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes="Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order"

2023-06-05T22:47:13,633 INFO [main] org.apache.hadoop.hive.metastore.MetaStoreDirectSql - Using direct SQL, underlying DB is DERBY

2023-06-05T22:47:13,636 INFO [main] org.apache.hadoop.hive.metastore.ObjectStore - Initialized ObjectStore

2023-06-05T22:47:13,823 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-06-05T22:47:13,824 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-06-05T22:47:13,824 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-06-05T22:47:13,825 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-06-05T22:47:13,825 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-06-05T22:47:13,825 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-06-05T22:47:14,508 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-06-05T22:47:14,512 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-06-05T22:47:14,512 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-06-05T22:47:14,513 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-06-05T22:47:14,513 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-06-05T22:47:14,513 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-06-05T22:47:15,484 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - Added admin role in metastore

2023-06-05T22:47:15,486 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - Added public role in metastore

2023-06-05T22:47:15,514 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - No user is added in admin role, since config is empty

2023-06-05T22:47:15,670 INFO [main] org.apache.hadoop.hive.metastore.RetryingMetaStoreClient - RetryingMetaStoreClient proxy=class org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient ugi=root (auth:SIMPLE) retries=1 delay=1 lifetime=0

2023-06-05T22:47:15,691 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - 0: get_all_functions

2023-06-05T22:47:15,694 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore.audit - ugi=root ip=unknown-ip-addr cmd=get_all_functions

2023-06-05T22:47:15,745 INFO [main] org.apache.atlas.hive.bridge.HiveMetaStoreBridge - Importing Hive metadata

2023-06-05T22:47:15,745 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - 0: get_databases: @hive#

2023-06-05T22:47:15,745 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore.audit - ugi=root ip=unknown-ip-addr cmd=get_databases: @hive#

2023-06-05T22:47:15,756 INFO [main] org.apache.atlas.hive.bridge.HiveMetaStoreBridge - Found 2 databases

2023-06-05T22:47:15,756 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - 0: get_database: @hive#default

2023-06-05T22:47:15,756 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore.audit - ugi=root ip=unknown-ip-addr cmd=get_database: @hive#default

2023-06-05T22:47:15,845 INFO [main] org.apache.atlas.AtlasBaseClient - method=GET path=api/atlas/v2/entity/uniqueAttribute/type/ contentType=application/json; charset=UTF-8 accept=application/json status=404

2023-06-05T22:47:17,519 INFO [main] org.apache.atlas.AtlasBaseClient - method=POST path=api/atlas/v2/entity/ contentType=application/json; charset=UTF-8 accept=application/json status=200

2023-06-05T22:47:17,627 INFO [main] org.apache.atlas.AtlasBaseClient - method=GET path=api/atlas/v2/entity/guid/ contentType=application/json; charset=UTF-8 accept=application/json status=200

2023-06-05T22:47:17,636 INFO [main] org.apache.atlas.hive.bridge.HiveMetaStoreBridge - Created hive_db entity: name=default@primary, guid=99c04f6e-e8cf-498e-b113-db9d1d1c281f

2023-06-05T22:47:17,677 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - 0: get_tables: db=@hive#default pat=.*

2023-06-05T22:47:17,678 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore.audit - ugi=root ip=unknown-ip-addr cmd=get_tables: db=@hive#default pat=.*

2023-06-05T22:47:17,709 INFO [main] org.apache.atlas.hive.bridge.HiveMetaStoreBridge - No tables to import in database default

2023-06-05T22:47:17,710 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - 0: get_database: @hive#my_test

2023-06-05T22:47:17,714 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore.audit - ugi=root ip=unknown-ip-addr cmd=get_database: @hive#my_test

2023-06-05T22:47:17,735 INFO [main] org.apache.atlas.AtlasBaseClient - method=GET path=api/atlas/v2/entity/uniqueAttribute/type/ contentType=application/json; charset=UTF-8 accept=application/json status=404

2023-06-05T22:47:18,600 INFO [main] org.apache.atlas.AtlasBaseClient - method=POST path=api/atlas/v2/entity/ contentType=application/json; charset=UTF-8 accept=application/json status=200

2023-06-05T22:47:18,641 INFO [main] org.apache.atlas.AtlasBaseClient - method=GET path=api/atlas/v2/entity/guid/ contentType=application/json; charset=UTF-8 accept=application/json status=200

2023-06-05T22:47:18,641 INFO [main] org.apache.atlas.hive.bridge.HiveMetaStoreBridge - Created hive_db entity: name=my_test@primary, guid=425c3888-3610-462f-965d-4b6ddbe84d3f

2023-06-05T22:47:18,642 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - 0: get_tables: db=@hive#my_test pat=.*

2023-06-05T22:47:18,642 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore.audit - ugi=root ip=unknown-ip-addr cmd=get_tables: db=@hive#my_test pat=.*

2023-06-05T22:47:18,643 INFO [main] org.apache.atlas.hive.bridge.HiveMetaStoreBridge - No tables to import in database my_test

Hive Meta Data imported successfully!!!

[root@hm hook-bin]# ll
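The output above is long, and the lines that matter are the "Created hive_db entity" entries. As a small sketch, they can be pulled out of the import output like this. The helper name `list_imported_dbs` is made up, and it is an assumption that the log file named in the output contains the same lines as the console.

```shell
#!/bin/sh
# Reads import-hive.sh output on stdin and prints the name of every hive_db
# entity that the import reported as created, one per line.
list_imported_dbs() {
  sed -n 's/.*Created hive_db entity: name=\([^,]*\), guid=.*/\1/p'
}

# Example (log path as printed by import-hive.sh above):
#   list_imported_dbs < /opt/software/apache-atlas-2.1.0/logs/import-hive.log
```

For the run shown above, the matching lines carry the names default@primary and my_test@primary.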

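(7) Create a table in Hive. Its information then shows up in Atlas, which shows that changes to Hive metadata are also synchronized to Atlas:

-- Step 1: create the table user_click_info in Hive:

create table user_click_info

(user_id string comment "user ID",

click_time string comment "click time",

item_id string comment "item ID")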

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

LINES TERMINATED BY '\n'

STORED AS TEXTFILE;

-- Step 2: look the table up in Atlas:
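Besides the Atlas web UI, the lookup can be sketched against the Atlas REST API. The example below assumes the basic search endpoint under api/atlas/v2 (the same API prefix that appears in the import log), the entity type name hive_table (by analogy with hive_db in that log), and the localhost:21000 address and admin/admin credentials used earlier. The function names are hypothetical, and no URL encoding is done, so it only suits simple names.

```shell
#!/bin/sh
# Builds the basic-search URL. $1 = Atlas base URL, $2 = entity type name,
# $3 = search text (must not need URL encoding).
atlas_search_url() {
  printf '%s/api/atlas/v2/search/basic?typeName=%s&query=%s\n' "$1" "$2" "$3"
}

# Runs the search with HTTP basic auth. $4 = "user:password".
atlas_search() {
  curl -s -u "$4" "$(atlas_search_url "$1" "$2" "$3")"
}

# Example:
#   atlas_search http://localhost:21000 hive_table user_click_info admin:admin
#   atlas_search http://localhost:21000 hive_db my_test admin:admin
```

If the hook is working, the JSON response for the first call should contain an entity for user_click_info shortly after the CREATE TABLE statement finishes; an empty result would point at the hook, the Kafka topic, or the Atlas consumer.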