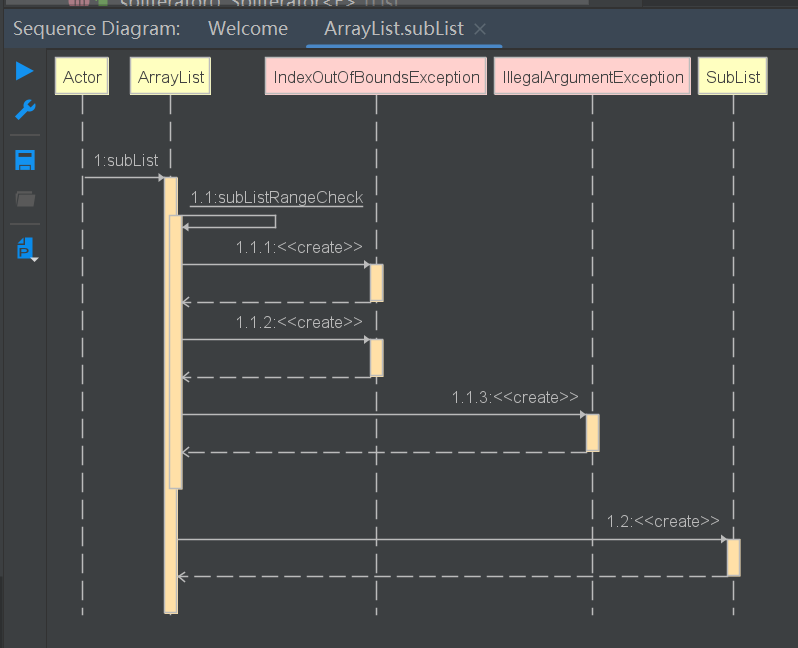

Flow chart

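- Create a MediaStream. The MediaStream receives media data from the client and, at the same time, acts as the video source.

- Create a VideoFrameConstructor. The VideoFrameConstructor registers itself as a sink on the MediaStream, so video data from the MediaStream (which inherits from MediaSource) flows to the VideoFrameConstructor. In other words, the MediaStream is the video source and the VideoFrameConstructor is the receiver.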
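The source/sink relationship described above can be sketched in plain JavaScript. This is only a minimal model under stated assumptions, not the OWT implementation: the classes `SketchMediaSource` and `SketchFrameSink` and the methods `onPacketFromClient` and `deliverVideo` are hypothetical names chosen for illustration. Only the idea of a receiver registering itself as a sink on the source comes from the notes.

```javascript
// Hypothetical stand-in for a media source such as MediaStream.
class SketchMediaSource {
  constructor() {
    this.videoSink = null;
  }
  // The sink registers itself here (compare setVideoSink in section 7.3).
  setVideoSink(sink) {
    this.videoSink = sink;
  }
  // Called when a packet arrives from the client; forwards it to the sink, if any.
  onPacketFromClient(packet) {
    if (this.videoSink) {
      this.videoSink.deliverVideo(packet);
    }
  }
}

// Hypothetical stand-in for a receiver such as VideoFrameConstructor.
class SketchFrameSink {
  constructor() {
    this.received = [];
  }
  bindTransport(source) {
    source.setVideoSink(this);
  }
  deliverVideo(packet) {
    this.received.push(packet);
  }
}

const sketchSource = new SketchMediaSource();
const sketchSink = new SketchFrameSink();
sketchSource.onPacketFromClient('dropped: no sink yet');
sketchSink.bindTransport(sketchSource);
sketchSource.onPacketFromClient('rtp-1');
console.log(sketchSink.received);
```

In this model, data only reaches the receiver after `bindTransport` has run, which is why the order of the steps matters.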

4. new WrtcStream

dist/webrtc_agent/webrtc/wrtcConnection.js

/*

* WrtcStream represents a stream object

* of WrtcConnection. It has media source

* functions (addDestination) and media sink

* functions (receiver) which will be used

* in connection link-up. Each rtp-stream-id

* in simulcast refers to one WrtcStream.

*/

class WrtcStream extends EventEmitter {

/*

* audio: { format, ssrc, mid, midExtId }

* video: {

* format, ssrcs, mid, midExtId,

* transportcc, red, ulpfec, scalabilityMode

* }

*/

// Connection wrtc

// id = mid

constructor(id, wrtc, direction, {audio, video, owner, enableBWE}) {

super();

this.id = id;

this.wrtc = wrtc;

this.direction = direction;

this.audioFormat = audio ? audio.format : null;

this.videoFormat = video ? video.format : null;

this.audio = audio;

this.video = video;

this.audioFrameConstructor = null;

this.audioFramePacketizer = null;

this.videoFrameConstructor = null;

this.videoFramePacketizer = null;

this.closed = false;

this.owner = owner;

if (video && video.scalabilityMode) {

this.scalabilityMode = video.scalabilityMode;

// string => LayerStream

this.layerStreams = new Map();

}

// The stream is set up as sendOnly, so direction is 'in'

if (direction === 'in') {

// 1. wrtc is the Connection; create the MediaStream

wrtc.addMediaStream(id, {label: id}, true);

if (audio) {

...

}

if (video) {

// 2.

// wrtc is the Connection

// wrtc.callBase = new CallBase();

// created when the Connection is created, in dist/webrtc_agent/webrtc/wrtcConnection.js

this.videoFrameConstructor = new VideoFrameConstructor(

this._onMediaUpdate.bind(this), video.transportcc, wrtc.callBase);

// 3. wrtc.getMediaStream returns the addon.MediaStream created in 5.1

this.videoFrameConstructor.bindTransport(wrtc.getMediaStream(id));

// 4.

wrtc.setVideoSsrcList(id, video.ssrcs);

}

} else {

...

}

}

...

}

4.1 Connection.addMediaStream

See section 5: creates the MediaStream and adds it to the WebRtcConnection.

4.2 new addon.VideoFrameConstructor

See section 6: creates the addon.VideoFrameConstructor.

4.3 addon.VideoFrameConstructor.bindTransport

See section 7: assigns the source. The MediaStream becomes the source, and video data flows from the MediaStream to the VideoFrameConstructor.

4.4 Connection.setVideoSsrcList

See section 8.

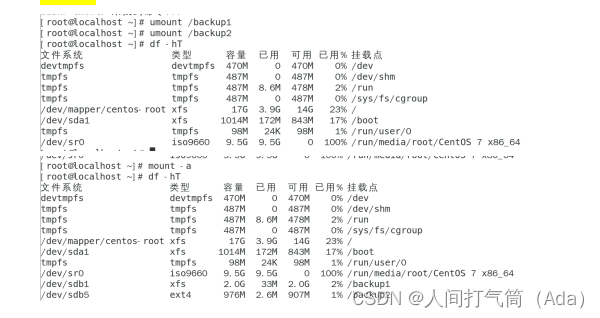

5. Connection.addMediaStream: create the MediaStream and add it to the WebRtcConnection

dist/webrtc_agent/webrtc/connection.js

// id = mid

addMediaStream(id, options, isPublisher) {

// this.id is the transportId (or connection id)

log.info(`message: addMediaStream, connectionId: ${this.id}, mediaStreamId: ${id}`);

if (this.mediaStreams.get(id) === undefined) {

// 1.

const mediaStream = this._createMediaStream(id, options, isPublisher);

// 2. wrtc is the addon.WebRtcConnection

this.wrtc.addMediaStream(mediaStream);

// 3. map that stores mid -> MediaStream

this.mediaStreams.set(id, mediaStream);

}

}

5.1 Connection._createMediaStream: create the addon.MediaStream

dist/webrtc_agent/webrtc/connection.js

// id = mid

_createMediaStream(id, options = {}, isPublisher = true) {

log.debug(`message: _createMediaStream, connectionId: ${this.id}, ` +

`mediaStreamId: ${id}, isPublisher: ${isPublisher}`);

// this.wrtc is the addon.WebRtcConnection

const mediaStream = new addon.MediaStream(this.threadPool, this.wrtc, id,

options.label, this._getMediaConfiguration(this.mediaConfiguration), isPublisher);

mediaStream.id = id;

// here the label is the id

mediaStream.label = options.label;

if (options.metadata) {

// mediaStream.metadata = options.metadata;

// mediaStream.setMetadata(JSON.stringify(options.metadata));

}

mediaStream.onMediaStreamEvent((type, message) => {

this._onMediaStreamEvent(type, message, mediaStream.id);

});

return mediaStream;

}

5.1.1 NAN_METHOD(MediaStream::New)

source/agent/webrtc/rtcConn/MediaStream.cc

NAN_METHOD(MediaStream::New) {

if (info.Length() < 3) {

Nan::ThrowError("Wrong number of arguments");

}

if (info.IsConstructCall()) {

// Invoked as a constructor with 'new MediaStream()'

ThreadPool* thread_pool = Nan::ObjectWrap::Unwrap<ThreadPool>(Nan::To<v8::Object>(info[0]).ToLocalChecked());

// this is class WebRtcConnection in source/agent/webrtc/rtcConn/WebRtcConnection.cc

WebRtcConnection* connection =

Nan::ObjectWrap::Unwrap<WebRtcConnection>(Nan::To<v8::Object>(info[1]).ToLocalChecked());

std::shared_ptr<erizo::WebRtcConnection> wrtc = connection->me;

// wrtc_id=id=mid

std::string wrtc_id = getString(info[2]);

std::string stream_label = getString(info[3]);

bool is_publisher = Nan::To<bool>(info[5]).FromJust();

///

// source/agent/webrtc/rtcConn/MediaStream.cc

// for the MediaStream class relationship diagram, see section 9

MediaStream* obj = new MediaStream();

// source/agent/webrtc/rtcConn/WebRtcConnection.cc

// Share same worker with connection

obj->me = std::make_shared<erizo::MediaStream>(wrtc->getWorker(), wrtc, wrtc_id, stream_label, is_publisher);

// erizo::MediaSink* msink; erizo::MediaStream inherits from erizo::MediaSink

obj->msink = obj->me.get();

// erizo::MediaSource* msource; erizo::MediaStream inherits from erizo::MediaSource

obj->msource = obj->me.get();

obj->id_ = wrtc_id;

obj->label_ = stream_label;

///

ELOG_DEBUG("%s, message: Created", obj->toLog());

obj->Wrap(info.This());

info.GetReturnValue().Set(info.This());

obj->asyncResource_ = new Nan::AsyncResource("MediaStreamCallback");

} else {

// TODO(pedro) Check what happens here

}

}

Log (MediaStreamWrapper):

MediaStreamWrapper - id: 1, message: Created

Take care to distinguish MediaStream (the addon wrapper) from erizo::MediaStream; see section 9.
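The wrapper/core split can be modelled roughly as follows. This is a sketch under assumptions rather than the real classes: `CoreStream` plays the role of erizo::MediaStream and `StreamWrapper` plays the role of the Nan-wrapped MediaStream, and both names are hypothetical. The field names `me`, `msink`, `msource`, `id_` and `label_` mirror the listing in 5.1.1.

```javascript
// Hypothetical model of the inner object (the role played by erizo::MediaStream).
class CoreStream {
  constructor(id, label, isPublisher) {
    this.id = id;
    this.label = label;
    this.isPublisher = isPublisher;
  }
}

// Hypothetical model of the addon wrapper (the role played by the Nan-wrapped MediaStream).
class StreamWrapper {
  constructor(id, label, isPublisher) {
    this.me = new CoreStream(id, label, isPublisher); // owns the core object
    this.msink = this.me;   // same object, viewed as a sink
    this.msource = this.me; // same object, viewed as a source
    this.id_ = id;
    this.label_ = label;
  }
}

const wrapper = new StreamWrapper('1', '1', true);
console.log(wrapper.msink === wrapper.me, wrapper.msource === wrapper.me); // true true
```

The point of the model is that `msink` and `msource` are not separate objects; they are two views of the one core stream that the wrapper holds in `me`.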

5.1.2 addon.MediaStream::MediaStream

source/agent/webrtc/rtcConn/MediaStream.cc

MediaStream::MediaStream() : closed_{false}, id_{"undefined"} {

// handles used for asynchronous callbacks

async_stats_ = new uv_async_t;

async_event_ = new uv_async_t;

uv_async_init(uv_default_loop(), async_stats_, &MediaStream::statsCallback);

uv_async_init(uv_default_loop(), async_event_, &MediaStream::eventCallback);

}

5.1.3 erizo::MediaStream::MediaStream

source/agent/webrtc/rtcConn/erizo/src/erizo/MediaStream.cpp

MediaStream::MediaStream(std::shared_ptr<Worker> worker,

std::shared_ptr<WebRtcConnection> connection,

const std::string& media_stream_id, // media_stream_id = mid

const std::string& media_stream_label,

bool is_publisher) :

audio_enabled_{false}, video_enabled_{false},

media_stream_event_listener_{nullptr},

connection_{std::move(connection)},

stream_id_{media_stream_id},

mslabel_ {media_stream_label},

bundle_{false},

pipeline_{Pipeline::create()},

worker_{std::move(worker)},

audio_muted_{false}, video_muted_{false},

pipeline_initialized_{false},

is_publisher_{is_publisher},

simulcast_{false},

bitrate_from_max_quality_layer_{0},

video_bitrate_{0} {

///

//constexpr uint32_t kDefaultVideoSinkSSRC = 55543;

//constexpr uint32_t kDefaultAudioSinkSSRC = 44444;

setVideoSinkSSRC(kDefaultVideoSinkSSRC);

setAudioSinkSSRC(kDefaultAudioSinkSSRC);

///

ELOG_INFO("%s message: constructor, id: %s",

toLog(), media_stream_id.c_str());

///

// FeedbackSink

source_fb_sink_ = this;

// FeedbackSource

sink_fb_source_ = this;

///

stats_ = std::make_shared<Stats>();

log_stats_ = std::make_shared<Stats>();

quality_manager_ = std::make_shared<QualityManager>();

packet_buffer_ = std::make_shared<PacketBufferService>();

std::srand(std::time(nullptr));

///

audio_sink_ssrc_ = std::rand();

video_sink_ssrc_ = std::rand();

///

rtcp_processor_ = nullptr;

should_send_feedback_ = true;

slide_show_mode_ = false;

mark_ = clock::now();

rate_control_ = 0;

sending_ = true;

}

2023-05-31 16:44:11,153 - INFO: MediaStream - id: 1, role:publisher, message: constructor, id: 1

5.2 NAN_METHOD(WebRtcConnection::addMediaStream): add the MediaStream to the WebRtcConnection

source/agent/webrtc/rtcConn/WebRtcConnection.cc

NAN_METHOD(WebRtcConnection::addMediaStream) {

WebRtcConnection* obj = Nan::ObjectWrap::Unwrap<WebRtcConnection>(info.Holder());

std::shared_ptr<erizo::WebRtcConnection> me = obj->me;

if (!me) {

return;

}

MediaStream* param = Nan::ObjectWrap::Unwrap<MediaStream>(

Nan::To<v8::Object>(info[0]).ToLocalChecked());

// param->me is a (shared) pointer to erizo::MediaStream

auto wr = std::shared_ptr<erizo::MediaStream>(param->me);

me->addMediaStream(wr);

}

About the me member:

source/agent/webrtc/rtcConn/MediaStream.h

class MediaStream : public MediaFilter, public erizo::MediaStreamStatsListener, public erizo::MediaStreamEventListener {

public:

...

std::shared_ptr<erizo::MediaStream> me;

...

}

5.2.1 erizo::WebRtcConnection::addMediaStream

source/agent/webrtc/rtcConn/erizo/src/erizo/WebRtcConnection.cpp

void WebRtcConnection::addMediaStream(std::shared_ptr<MediaStream> media_stream) {

asyncTask([media_stream] (std::shared_ptr<WebRtcConnection> connection) {

boost::mutex::scoped_lock lock(connection->update_state_mutex_);

ELOG_DEBUG("%s message: Adding mediaStream, id: %s", connection->toLog(), media_stream->getId().c_str());

connection->media_streams_.push_back(media_stream);

});

}
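A consequence of wrapping the push in asyncTask is that the stream is not in the list when addMediaStream returns. The sketch below illustrates this, assuming that asyncTask defers the lambda to the connection's worker (the name suggests this, but the excerpt does not show it). `SketchWorker`, `SketchConnection`, `post` and `runPending` are hypothetical names, and the real code also takes a mutex that a single-threaded model does not need.

```javascript
// Hypothetical single-threaded stand-in for a worker that runs queued tasks later.
class SketchWorker {
  constructor() {
    this.tasks = [];
  }
  post(task) {
    this.tasks.push(task);
  }
  runPending() {
    while (this.tasks.length > 0) {
      const task = this.tasks.shift();
      task();
    }
  }
}

class SketchConnection {
  constructor(worker) {
    this.worker = worker;
    this.mediaStreams = [];
  }
  // Mirrors the shape of addMediaStream: the push happens inside a posted task.
  addMediaStream(stream) {
    this.worker.post(() => this.mediaStreams.push(stream));
  }
}

const worker = new SketchWorker();
const connection = new SketchConnection(worker);
connection.addMediaStream({ id: '1' });
const countBeforeRun = connection.mediaStreams.length; // task not executed yet
worker.runPending();
const countAfterRun = connection.mediaStreams.length;
console.log(countBeforeRun, countAfterRun); // 0 1
```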

5.3 mediaStreams

this.mediaStreams = new Map();

//mid->MediaStream

this.mediaStreams.set(id, mediaStream);

The key is id (= mid) and the value is the mediaStream.
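Together with the `=== undefined` check in Connection.addMediaStream, the map makes the call idempotent per mid. A minimal sketch of that pattern follows; `StreamRegistry` and its `created` counter are hypothetical, and the stored value is a plain object rather than an addon.MediaStream.

```javascript
// Hypothetical registry that mirrors the "create only if absent" check in addMediaStream.
class StreamRegistry {
  constructor() {
    this.mediaStreams = new Map(); // mid -> stream
    this.created = 0;
  }
  addMediaStream(id) {
    if (this.mediaStreams.get(id) === undefined) {
      this.created += 1;
      this.mediaStreams.set(id, { id, label: id });
    }
    return this.mediaStreams.get(id);
  }
}

const registry = new StreamRegistry();
const firstStream = registry.addMediaStream('1');
const secondStream = registry.addMediaStream('1'); // no new object is created
console.log(firstStream === secondStream, registry.created); // true 1
```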

6. new addon.VideoFrameConstructor: creation

this._onMediaUpdate.bind(this)

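The function is passed down so that the native layer can call it.

Reference: "Javascript中function函数bind方法实例用法详解" (js教程, PHP中文网), an article on how to use the JavaScript function bind method.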
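Why bind is needed can be shown with a small experiment. The sketch assumes only standard JavaScript semantics; `SketchStream` and `makeNativeLikeConsumer` are hypothetical and merely imitate a native addon that stores a bare function reference and calls it later.

```javascript
class SketchStream {
  constructor(id) {
    this.id = id;
    this.updates = [];
  }
  _onMediaUpdate(info) {
    this.updates.push(`${this.id}:${info}`);
  }
}

// Hypothetical consumer that only keeps a bare function reference,
// similar to how a native addon stores a callback.
function makeNativeLikeConsumer(callback) {
  return { fire: (info) => callback(info) };
}

const stream = new SketchStream('1');

// With bind, `this` inside _onMediaUpdate is the stream.
const boundConsumer = makeNativeLikeConsumer(stream._onMediaUpdate.bind(stream));
boundConsumer.fire('video-info');

// Without bind, `this` is undefined inside the class method and the call throws.
const unboundConsumer = makeNativeLikeConsumer(stream._onMediaUpdate);
let unboundFailed = false;
try {
  unboundConsumer.fire('video-info');
} catch (err) {
  unboundFailed = err instanceof TypeError;
}
console.log(stream.updates, unboundFailed);
```

Class bodies run in strict mode, so a detached method sees `this` as undefined rather than the global object, which is what makes the unbound call fail.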

Open question: wrtc.callBase

6.1 NAN_METHOD(addon.VideoFrameConstructor::New)

source/agent/webrtc/rtcFrame/VideoFrameConstructorWrapper.cc

NAN_METHOD(VideoFrameConstructor::New) {

if (info.IsConstructCall()) {

VideoFrameConstructor* obj = new VideoFrameConstructor();

if (info.Length() <= 3) {

int transportccExt = (info.Length() >= 2) ? Nan::To<int32_t>(info[1]).FromMaybe(-1) : -1;

// the CallBase creation flow is described in detail later

CallBase* baseWrapper = (info.Length() >= 3)

? Nan::ObjectWrap::Unwrap<CallBase>(Nan::To<v8::Object>(info[2]).ToLocalChecked())

: nullptr;

if (baseWrapper) {

// this is the branch that is taken

obj->me = new owt_base::VideoFrameConstructor(baseWrapper->rtcAdapter, obj, transportccExt);

} else if (transportccExt > 0) {

obj->me = new owt_base::VideoFrameConstructor(obj, transportccExt);

} else {

obj->me = new owt_base::VideoFrameConstructor(obj);

}

} else {

VideoFrameConstructor* parent =

Nan::ObjectWrap::Unwrap<VideoFrameConstructor>(

Nan::To<v8::Object>(info[1]).ToLocalChecked());

Nan::Utf8String param2(Nan::To<v8::String>(info[2]).ToLocalChecked());

std::string layerId = std::string(*param2);

int spatialId = info[3]->IntegerValue(Nan::GetCurrentContext()).ToChecked();

int temporalId = info[4]->IntegerValue(Nan::GetCurrentContext()).ToChecked();

obj->me = new owt_base::VideoFrameConstructor(parent->me);

obj->me->setPreferredLayers(spatialId, temporalId);

obj->layerId = layerId;

obj->parent = parent->me;

obj->parent->addChildProcessor(layerId, obj->me);

}

// Are these actually used?

// owt_base::VideoFrameConstructor me

// owt_base::FrameSource* src;

obj->src = obj->me;

// erizo::MediaSink* msink;

obj->msink = obj->me;

///

// info[0].As<Function>() is WrtcStream._onMediaUpdate from the JS side

obj->Callback_ = new Nan::Callback(info[0].As<Function>());

obj->asyncResource_ = new Nan::AsyncResource("VideoFrameCallback");

uv_async_init(uv_default_loop(), &obj->async_, &VideoFrameConstructor::Callback);

obj->Wrap(info.This());

info.GetReturnValue().Set(info.This());

} else {

// const int argc = 1;

// v8::Local<v8::Value> argv[argc] = {info[0]};

// v8::Local<v8::Function> cons = Nan::New(constructor);

// info.GetReturnValue().Set(cons->NewInstance(argc, argv));

}

}
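The argument-count dispatch in the constructor above is easy to misread, so here is a rough JavaScript restatement of the branching only. `describeConstruction` and the `kind` labels are hypothetical; the sketch ignores the native type conversions (for example FromMaybe(-1)) and does not construct anything.

```javascript
// Hypothetical helper: reports which branch of the constructor an argument list would take.
function describeConstruction(args) {
  if (args.length <= 3) {
    const transportccExt = args.length >= 2 ? args[1] : -1;
    const callBase = args.length >= 3 ? args[2] : null;
    if (callBase) {
      return { kind: 'root-with-rtc-adapter', transportccExt };
    }
    if (transportccExt > 0) {
      return { kind: 'root-with-transportcc', transportccExt };
    }
    return { kind: 'root-plain' };
  }
  // More than three arguments: a per-layer child attached to a parent constructor.
  const [, parent, layerId, spatialId, temporalId] = args;
  return { kind: 'layer-child', parent, layerId, spatialId, temporalId };
}

const onUpdate = () => {};
const withCallBase = describeConstruction([onUpdate, 5, { rtcAdapter: {} }]);
const withTransportcc = describeConstruction([onUpdate, 5]);
const plain = describeConstruction([onUpdate]);
const layerChild = describeConstruction([onUpdate, {}, 'L1', 1, 2]);
console.log(withCallBase.kind, withTransportcc.kind, plain.kind, layerChild.kind);
```

The WrtcStream call in section 4 passes three arguments including wrtc.callBase, which corresponds to the first branch.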

Open question: CallBase

Open question: baseWrapper->rtcAdapter, rtc_adapter::RtcAdapter

Open question: obj->src, obj->msink

6.2 owt_base::VideoFrameConstructor::VideoFrameConstructor

source/core/owt_base/VideoFrameConstructor.cpp

VideoFrameConstructor::VideoFrameConstructor(

std::shared_ptr<rtc_adapter::RtcAdapter> rtcAdapter,

VideoInfoListener* vil, uint32_t transportccExtId)

: m_enabled(true)

, m_ssrc(0)

, m_transport(nullptr)

, m_pendingKeyFrameRequests(0)

, m_videoInfoListener(vil)

, m_videoReceive(nullptr)

{

ELOG_DEBUG("VideoFrameConstructor2 %p", this);

m_config.transport_cc = transportccExtId;

assert(rtcAdapter.get());

m_feedbackTimer = SharedJobTimer::GetSharedFrequencyTimer(1);

m_feedbackTimer->addListener(this);

m_rtcAdapter = rtcAdapter;

}

7. NAN_METHOD(VideoFrameConstructor::bindTransport): assigning the erizo::MediaSource

source/agent/webrtc/rtcFrame/VideoFrameConstructorWrapper.cc

The addon.MediaStream argument is the one created in section 5.1.

NAN_METHOD(VideoFrameConstructor::bindTransport) {

VideoFrameConstructor* obj = Nan::ObjectWrap::Unwrap<VideoFrameConstructor>(info.Holder());

owt_base::VideoFrameConstructor* me = obj->me;

// addon.MediaStream inherits from MediaFilter

// msource and msink were assigned in 5.1.1

MediaFilter* param = Nan::ObjectWrap::Unwrap<MediaFilter>(Nan::To<v8::Object>(info[0]).ToLocalChecked());

erizo::MediaSource* source = param->msource;

me->bindTransport(source, source->getFeedbackSink());

}

7.1 addon.MediaFilter,addon.MediaSink,addon.MediaSource

source/agent/webrtc/rtcConn/MediaStream.h

source/core/owt_base/MediaWrapper.h

See section 9.

7.2 erizo::MediaSource.getFeedbackSink

source/agent/webrtc/rtcConn/erizo/src/erizo/MediaDefinitions.h

FeedbackSink* getFeedbackSink() {

boost::mutex::scoped_lock lock(monitor_mutex_);

return source_fb_sink_;

}

It is assigned when the MediaStream is created:

source/agent/webrtc/rtcConn/erizo/src/erizo/MediaStream.cpp

MediaStream::MediaStream(std::shared_ptr<Worker> worker,

std::shared_ptr<WebRtcConnection> connection,

const std::string& media_stream_id,

const std::string& media_stream_label,

bool is_publisher)

...

{

...

source_fb_sink_ = this;

sink_fb_source_ = this;

}

7.3 owt_base::VideoFrameConstructor.bindTransport: register callbacks on the source to receive video data from erizo::MediaStream

source/core/owt_base/VideoFrameConstructor.cpp

void VideoFrameConstructor::bindTransport(erizo::MediaSource* source, erizo::FeedbackSink* fbSink)

{

boost::unique_lock<boost::shared_mutex> lock(m_transportMutex);

// erizo::MediaSource* source actually points to the erizo::MediaStream object.

// erizo::MediaStream inherits from erizo::MediaSource

m_transport = source;

// register this object as the media sink

m_transport->setVideoSink(this);

m_transport->setEventSink(this);

setFeedbackSink(fbSink);

}

setVideoSink

setEventSink

setFeedbackSink
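The three setters establish two directions: media and events flow downstream from the source, while feedback flows back upstream through the feedback sink. The sketch below models this under assumptions: `SketchSource`, `SketchConstructor`, `deliverFeedback` as used here and `requestKeyFrame` are hypothetical illustrations, and the key frame request is only an example of a message that might travel upstream.

```javascript
// Hypothetical source that is also its own feedback sink
// (compare source_fb_sink_ = this in section 7.2).
class SketchSource {
  constructor() {
    this.videoSink = null;
    this.eventSink = null;
    this.feedbackLog = [];
  }
  setVideoSink(sink) {
    this.videoSink = sink;
  }
  setEventSink(sink) {
    this.eventSink = sink;
  }
  getFeedbackSink() {
    return this;
  }
  deliverFeedback(message) {
    this.feedbackLog.push(message);
  }
}

class SketchConstructor {
  constructor() {
    this.transport = null;
    this.fbSink = null;
  }
  // Same order of operations as the bindTransport listing above.
  bindTransport(source, fbSink) {
    this.transport = source;
    source.setVideoSink(this);
    source.setEventSink(this);
    this.fbSink = fbSink;
  }
  // Hypothetical example of feedback travelling upstream.
  requestKeyFrame() {
    if (this.fbSink) {
      this.fbSink.deliverFeedback('key-frame-request');
    }
  }
}

const mediaSource = new SketchSource();
const frameConstructor = new SketchConstructor();
frameConstructor.bindTransport(mediaSource, mediaSource.getFeedbackSink());
frameConstructor.requestKeyFrame();
console.log(mediaSource.feedbackLog);
```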

8. Connection.setVideoSsrcList (open question)

setVideoSsrcList(label, videoSsrcList) {

this.wrtc.setVideoSsrcList(label, videoSsrcList);

}

8.1 NAN_METHOD(WebRtcConnection::setVideoSsrcList)

NAN_METHOD(WebRtcConnection::setVideoSsrcList) {

WebRtcConnection* obj = Nan::ObjectWrap::Unwrap<WebRtcConnection>(info.Holder());

std::shared_ptr<erizo::WebRtcConnection> me = obj->me;

if (!me) {

return;

}

std::string stream_id = getString(info[0]);

v8::Local<v8::Array> video_ssrc_array = v8::Local<v8::Array>::Cast(info[1]);

std::vector<uint32_t> video_ssrc_list;

for (unsigned int i = 0; i < video_ssrc_array->Length(); i++) {

v8::Local<v8::Value> val = Nan::Get(video_ssrc_array, i).ToLocalChecked();

unsigned int numVal = Nan::To<int32_t>(val).FromJust();

video_ssrc_list.push_back(numVal);

}

me->getLocalSdpInfo()->video_ssrc_map[stream_id] = video_ssrc_list;

}
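One detail in the loop above is that each SSRC is read as int32_t and then stored in an unsigned int. SSRCs are 32-bit unsigned values, so about half of them exceed the int32 range. Assuming Nan::To<int32_t> follows the ECMAScript ToInt32 conversion (this is not verified in these notes), the value wraps to a negative number and the unsigned assignment restores the original bit pattern. The same round trip can be reproduced in JavaScript; `viaInt32ThenUnsigned` is a hypothetical helper.

```javascript
// `| 0` applies ToInt32; `>>> 0` reinterprets the same 32 bits as unsigned.
function viaInt32ThenUnsigned(ssrc) {
  const asInt32 = ssrc | 0; // negative for values >= 2^31
  return asInt32 >>> 0;     // back to the original 32-bit value
}

const highSsrc = 3000000000; // larger than 2^31 - 1
const wrapped = highSsrc | 0;
const restored = viaInt32ThenUnsigned(highSsrc);
console.log(wrapped);  // -1294967296
console.log(restored); // 3000000000
```

Under that assumption the conversion is lossless for any valid SSRC, even though the intermediate value looks wrong when logged.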

8.2 WebRtcConnection::getLocalSdpInfo

std::shared_ptr<SdpInfo> WebRtcConnection::getLocalSdpInfo() {

boost::mutex::scoped_lock lock(update_state_mutex_);

ELOG_DEBUG("%s message: getting local SDPInfo", toLog());

forEachMediaStream([this] (const std::shared_ptr<MediaStream> &media_stream) {

if (!media_stream->isRunning() || media_stream->isPublisher()) {

ELOG_DEBUG("%s message: getting local SDPInfo stream not running, stream_id: %s", toLog(), media_stream->getId());

return;

}

std::vector<uint32_t> video_ssrc_list = std::vector<uint32_t>();

if (media_stream->getVideoSinkSSRC() != kDefaultVideoSinkSSRC && media_stream->getVideoSinkSSRC() != 0) {

video_ssrc_list.push_back(media_stream->getVideoSinkSSRC());

}

ELOG_DEBUG("%s message: getting local SDPInfo, stream_id: %s, audio_ssrc: %u",

toLog(), media_stream->getId(), media_stream->getAudioSinkSSRC());

if (!video_ssrc_list.empty()) {

local_sdp_->video_ssrc_map[media_stream->getLabel()] = video_ssrc_list;

}

if (media_stream->getAudioSinkSSRC() != kDefaultAudioSinkSSRC && media_stream->getAudioSinkSSRC() != 0) {

local_sdp_->audio_ssrc_map[media_stream->getLabel()] = media_stream->getAudioSinkSSRC();

}

});

bool sending_audio = local_sdp_->audio_ssrc_map.size() > 0;

bool sending_video = local_sdp_->video_ssrc_map.size() > 0;

bool receiving_audio = remote_sdp_->audio_ssrc_map.size() > 0;

bool receiving_video = remote_sdp_->video_ssrc_map.size() > 0;

if (!sending_audio && receiving_audio) {

local_sdp_->audioDirection = erizo::RECVONLY;

} else if (sending_audio && !receiving_audio) {

local_sdp_->audioDirection = erizo::SENDONLY;

} else {

local_sdp_->audioDirection = erizo::SENDRECV;

}

if (!sending_video && receiving_video) {

local_sdp_->videoDirection = erizo::RECVONLY;

} else if (sending_video && !receiving_video) {

local_sdp_->videoDirection = erizo::SENDONLY;

} else {

local_sdp_->videoDirection = erizo::SENDRECV;

}

return local_sdp_;

}
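The direction logic at the end of getLocalSdpInfo reduces to a small truth table, applied once for audio and once for video. The sketch below restates it; `deriveDirection` and the lowercase string labels are hypothetical stand-ins for the erizo::RECVONLY, erizo::SENDONLY and erizo::SENDRECV values.

```javascript
// sending: the local SSRC map is non-empty; receiving: the remote SSRC map is non-empty.
function deriveDirection(sending, receiving) {
  if (!sending && receiving) {
    return 'recvonly';
  }
  if (sending && !receiving) {
    return 'sendonly';
  }
  return 'sendrecv';
}

const directionTable = [
  deriveDirection(false, true),
  deriveDirection(true, false),
  deriveDirection(true, true),
  deriveDirection(false, false),
];
console.log(directionTable);
```

Note the last case: when neither side has any SSRC, the code falls through to SENDRECV rather than an inactive state.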