Kubernetes made simple: getting started with Ingress

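What NodePort can do, and where it falls short:

Feature: the same port is opened on every node, so the application can be reached through any node as node IP plus that port.

Drawback: each port can only be used once, so every application needs its own port.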

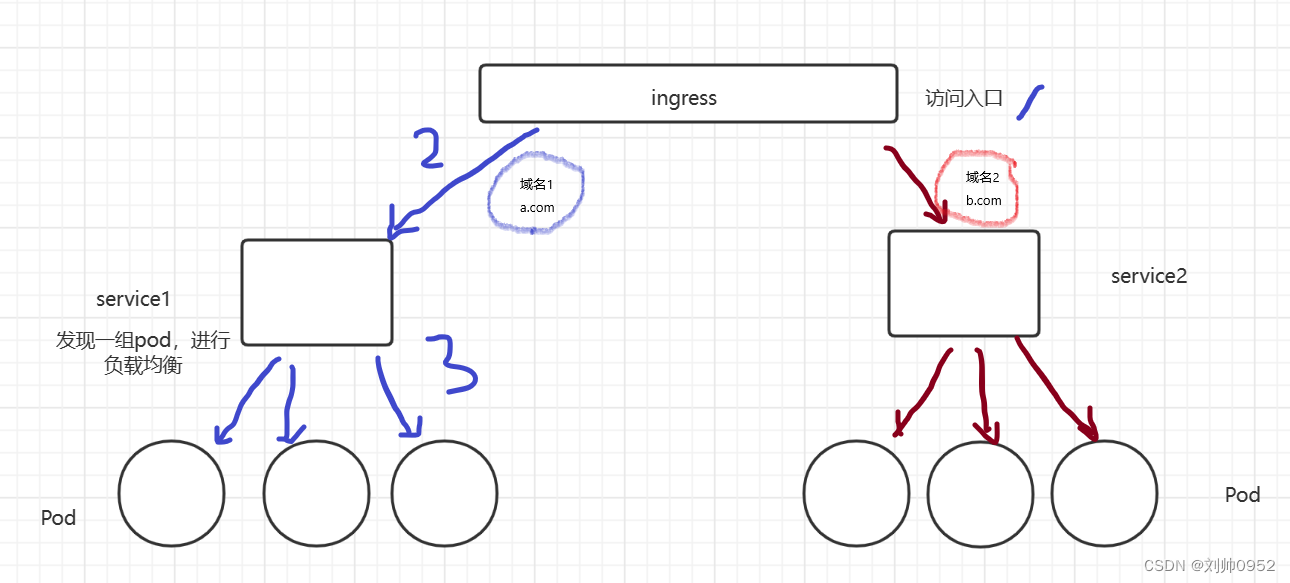
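For comparison, here is a minimal sketch of a NodePort Service written as a manifest. The name `web-nodeport` and port `30080` are illustrative assumptions. `nodePort` must fall inside the cluster's NodePort range, which is 30000-32767 by default. Once one Service claims that port, no other Service in the cluster can reuse it, which is the limitation described above.

```shell
#!/bin/sh
# Sketch: write an example NodePort Service manifest to a file.
# The Service name and port numbers are hypothetical.
# Apply it with: kubectl apply -f nodeport-demo.yaml
cat > nodeport-demo.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: web-nodeport          # hypothetical name
spec:
  type: NodePort
  selector:
    app: web                  # targets the Pods labelled app=web
  ports:
  - port: 80                  # Service (ClusterIP) port inside the cluster
    targetPort: 80            # container port
    nodePort: 30080           # opened on every node, unique cluster-wide
EOF
```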

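Put simply, Ingress routes requests to different Services based on the domain name. If that is not clear yet, keep reading.

Ingress acts as the single entry point for traffic. It forwards each request to the matching Service, and each Service fronts its own group of Pods.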
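To make host-based routing concrete, here is a minimal sketch of one Ingress that sends two host names to two Services. The hosts `web.example.com` and `api.example.com` and the Service `api` are hypothetical. The class annotation mirrors the `ingress.yaml` used later in this article.

```shell
#!/bin/sh
# Sketch: one Ingress, two host names, two backend Services.
# Host names and the Service "api" are hypothetical.
cat > host-routing-demo.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: host-routing-demo
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
  - host: web.example.com       # requests for this host...
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: web           # ...go to Service "web"
            port:
              number: 80
  - host: api.example.com       # requests for this host...
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: api           # ...go to Service "api" (hypothetical)
            port:
              number: 80
EOF
```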

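I am running Kubernetes 1.25. Ingress is sensitive to the Kubernetes version. A YAML file I found online kept failing after deployment, because Kubernetes 1.25 needs version 1.4.0 of the ingress-nginx controller.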

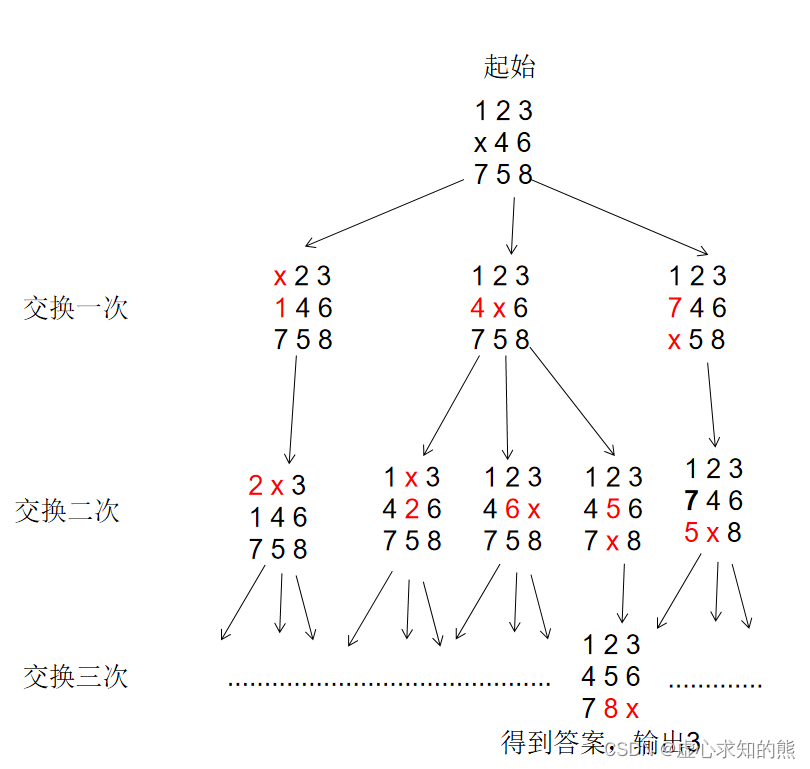

How Ingress connects to Pods:

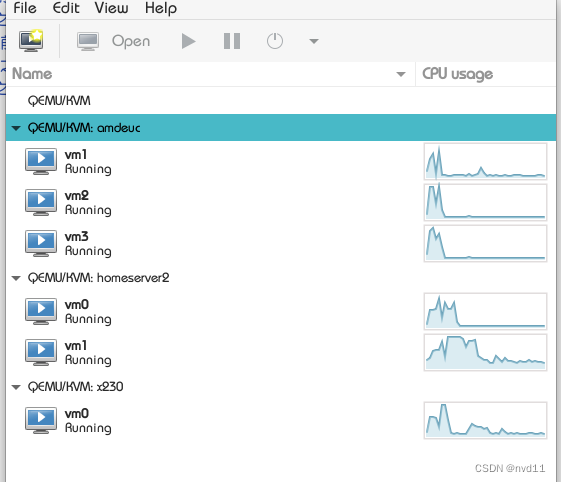

| Hostname | OS | Role | IP | Notes |
|---|---|---|---|---|
| localhost | CentOS 7.5 | Pulls and packages images | 192.168.3.129 | Must have Internet access (your own VM is fine). Pull images with docker, package them, then import them into the k8s cluster |
| k8s-master1 | CentOS 7.5 | k8s-master1 | 10.245.4.1 | k8s master node |
| k8s-node1 | CentOS 7.5 | k8s-node1 | 10.245.4.3 | k8s worker node 1 |
| k8s-node2 | CentOS 7.5 | k8s-node2 | 10.245.4.4 | k8s worker node 2 |

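My environment is an isolated intranet with no image registry. Every image therefore has to be downloaded on an Internet-connected machine, packaged, and imported into the intranet before installation.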

Download link for the materials used in this article:

Link: https://pan.baidu.com/s/1zjq1gr87oU7z2qWtDfuKlg

Extraction code: fidg


First, deploy an nginx application

We deploy nginx because, at the end, we will use Ingress to reach a website by domain name, and this nginx is that website.

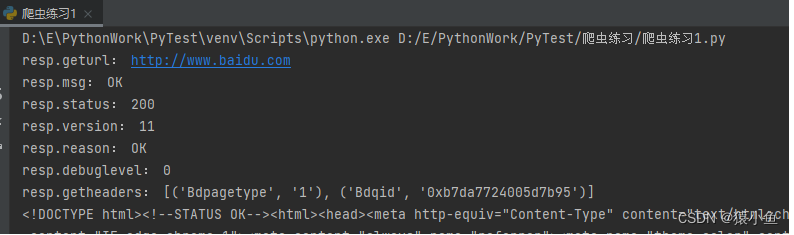

Pull the nginx image on the Internet-connected server

```
[root@localhost ~]# ping www.baidu.com
PING www.a.shifen.com (220.181.38.149) 56(84) bytes of data.
64 bytes from 220.181.38.149 (220.181.38.149): icmp_seq=1 ttl=128 time=7.47 ms
64 bytes from 220.181.38.149 (220.181.38.149): icmp_seq=2 ttl=128 time=8.84 ms
[root@localhost ~]# docker images
REPOSITORY TAG IMAGE ID CREATED SIZE
[root@localhost ~]# docker pull nginx
Using default tag: latest
latest: Pulling from library/nginx
a2abf6c4d29d: Pull complete
a9edb18cadd1: Pull complete
589b7251471a: Pull complete
186b1aaa4aa6: Pull complete
b4df32aa5a72: Pull complete
a0bcbecc962e: Pull complete
Digest: sha256:0d17b565c37bcbd895e9d92315a05c1c3c9a29f762b011a10c54a66cd53c9b31
Status: Downloaded newer image for nginx:latest
docker.io/library/nginx:latest
[root@localhost ~]# docker images
REPOSITORY TAG IMAGE ID CREATED SIZE
nginx latest 605c77e624dd 11 months ago 141MB
[root@localhost ~]# docker save nginx > nginx.tar
[root@localhost ~]# ls nginx.tar
nginx.tar   # this tar is the nginx image we will import into the intranet
```

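Next, copy nginx.tar to the intranet servers and load the image on every node. This environment has no image registry and no Internet access, so importing it this way is the only option.

Download the tar from the Internet-connected machine, then upload it to the intranet servers. There are many ways to do the upload, so I won't demonstrate them here.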

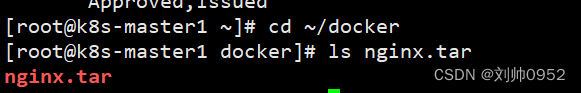

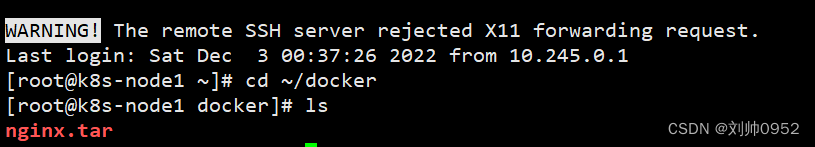

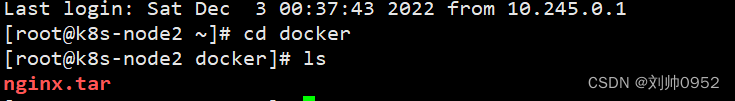

Load the nginx image on each node with `docker load`.

The example below uses k8s-node2. Repeat the same steps on every other node.

```
[root@k8s-node2 ~]# cd docker
[root@k8s-node2 docker]# ls
nginx.tar
[root@k8s-node2 docker]# docker load < nginx.tar
2edcec3590a4: Loading layer [==================================================>] 83.86MB/83.86MB
e379e8aedd4d: Loading layer [==================================================>] 62MB/62MB
b8d6e692a25e: Loading layer [==================================================>] 3.072kB/3.072kB
f1db227348d0: Loading layer [==================================================>] 4.096kB/4.096kB
32ce5f6a5106: Loading layer [==================================================>] 3.584kB/3.584kB
d874fd2bc83b: Loading layer [==================================================>] 7.168kB/7.168kB
Loaded image: nginx:latest
[root@k8s-node2 docker]# docker images | grep nginx
nginx latest 605c77e624dd 11 months ago 141MB
```
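The per-node `docker load` step above can be scripted. The function below is a minimal sketch. It assumes root SSH/scp access from the machine holding the tar to each node. It uses the three node IPs from the host table and the `/root/docker` directory seen in the transcript; both are assumptions you can change. Setting `RUN=echo` prints the commands instead of running them, which is a safe way to review them first.

```shell
#!/bin/sh
# Sketch only: copy an image tar to every node and load it there.
# Assumptions: root SSH/scp access to each node; IPs taken from the host table.
# Dry run: RUN=echo push_and_load nginx.tar   (prints the commands instead)
NODES="10.245.4.1 10.245.4.3 10.245.4.4"
DEST_DIR=${DEST_DIR:-/root/docker}

push_and_load() {
  tar_file=$1
  name=$(basename "$tar_file")
  for node in $NODES; do
    ${RUN:-} ssh "root@$node" "mkdir -p $DEST_DIR"                  # make sure the target dir exists
    ${RUN:-} scp "$tar_file" "root@$node:$DEST_DIR/$name"           # copy the image archive
    ${RUN:-} ssh "root@$node" "docker load -i $DEST_DIR/$name"      # load it into the node's Docker
  done
}
```

Call it once per tar, for example `push_and_load nginx.tar`.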

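Generate the YAML file

```
[root@k8s-master1 ~]# kubectl create deployment web --image=nginx --dry-run -o yaml > nginx.yaml
W1203 09:24:14.117673 15390 helpers.go:555] --dry-run is deprecated and can be replaced with --dry-run=client.
[root@k8s-master1 ~]# ls nginx.yaml
nginx.yaml   # the generated YAML file
```

As the warning says, the bare `--dry-run` flag is deprecated. On current kubectl, write `--dry-run=client` instead.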

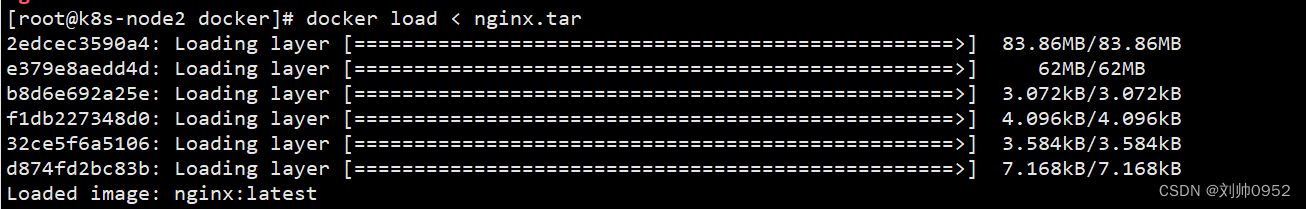

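Edit the YAML file

The main change is the image pull policy. This cluster has no Internet access and no image registry, so it must use the image already loaded on the node (`imagePullPolicy: IfNotPresent`). Without this line, an untagged image such as `nginx` defaults to `Always`, and the pull would fail.

```
[root@k8s-master1 ~]# vi nginx.yaml
[root@k8s-master1 ~]# cat nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: web
  name: web
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: web
    spec:
      containers:
      - image: nginx
        name: nginx
        imagePullPolicy: IfNotPresent   # use the locally loaded image
        resources: {}
status: {}
```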

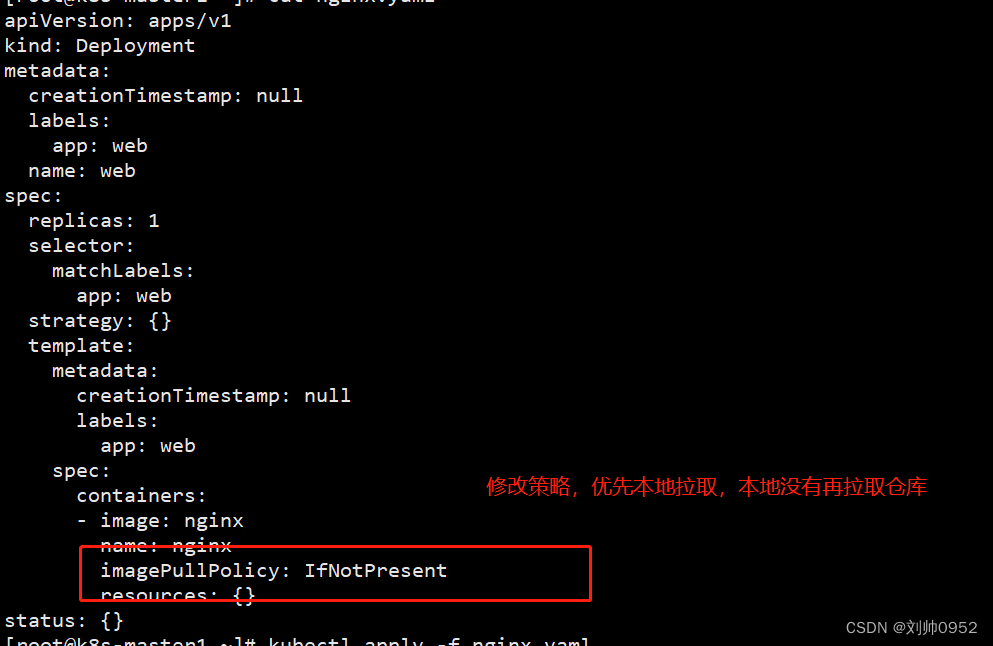
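The reason `IfNotPresent` matters: when `imagePullPolicy` is omitted, Kubernetes picks a default from the image reference. With a digest it uses `IfNotPresent`. With no tag or the tag `latest` it uses `Always`. With any other tag it uses `IfNotPresent`. The function below is only an illustrative sketch of that rule; it is not code taken from Kubernetes.

```shell
#!/bin/sh
# Sketch of the default applied when imagePullPolicy is omitted (illustration only):
#   digest given               -> IfNotPresent
#   no tag, or tag "latest"    -> Always
#   any other tag              -> IfNotPresent
default_pull_policy() (
  ref=$1
  last=${ref##*/}   # keep the last path segment, so a registry port like localhost:5000 is ignored
  tag=
  case $last in
    *@*) echo IfNotPresent; exit 0 ;;   # pinned by digest
    *:*) tag=${last##*:} ;;             # explicit tag
  esac
  if [ -z "$tag" ] || [ "$tag" = latest ]; then
    echo Always
  else
    echo IfNotPresent
  fi
)
```

For example, `default_pull_policy nginx` prints `Always`, which is why the manifest above sets the policy explicitly.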

Create the Pod

```
[root@k8s-master1 docker]# cd
[root@k8s-master1 ~]# kubectl apply -f nginx.yaml
deployment.apps/web created
[root@k8s-master1 ~]# kubectl get pod
NAME READY STATUS RESTARTS AGE
web-59b9bb7664-ppl5h 1/1 Running 0 7s
```

Expose the port

```
[root@k8s-master1 ~]# kubectl expose deployment web --port=80 --target-port=80 --type=NodePort
service/web exposed
```

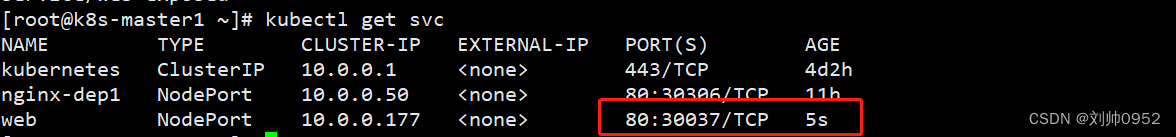

```
[root@k8s-master1 ~]# kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 4d2h
nginx-dep1 NodePort 10.0.0.50 <none> 80:30306/TCP 11h
web NodePort 10.0.0.177 <none> 80:30037/TCP 5s
```

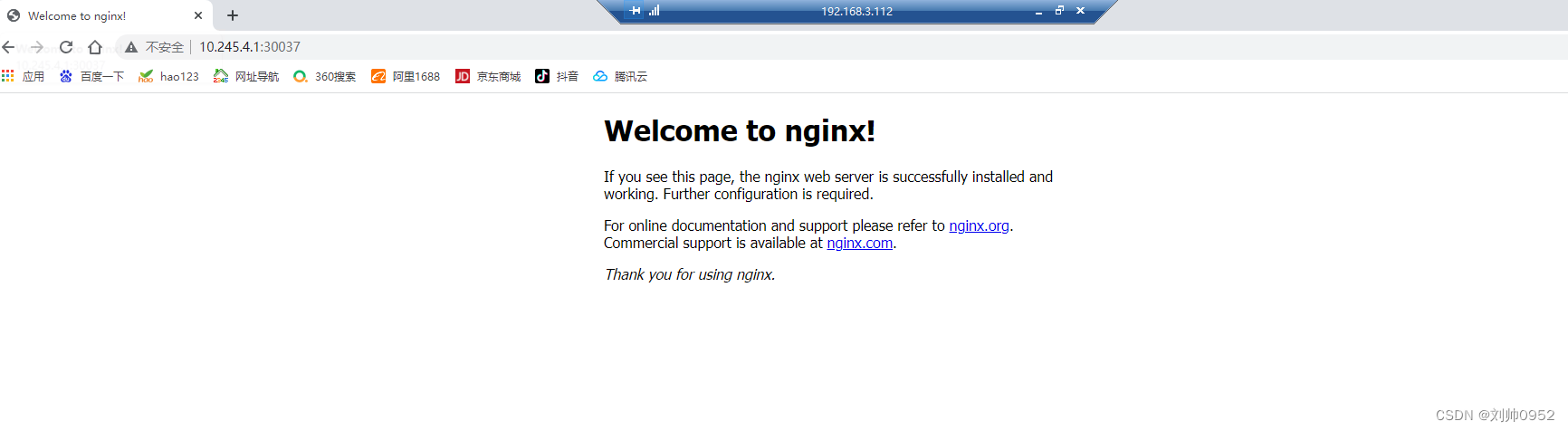

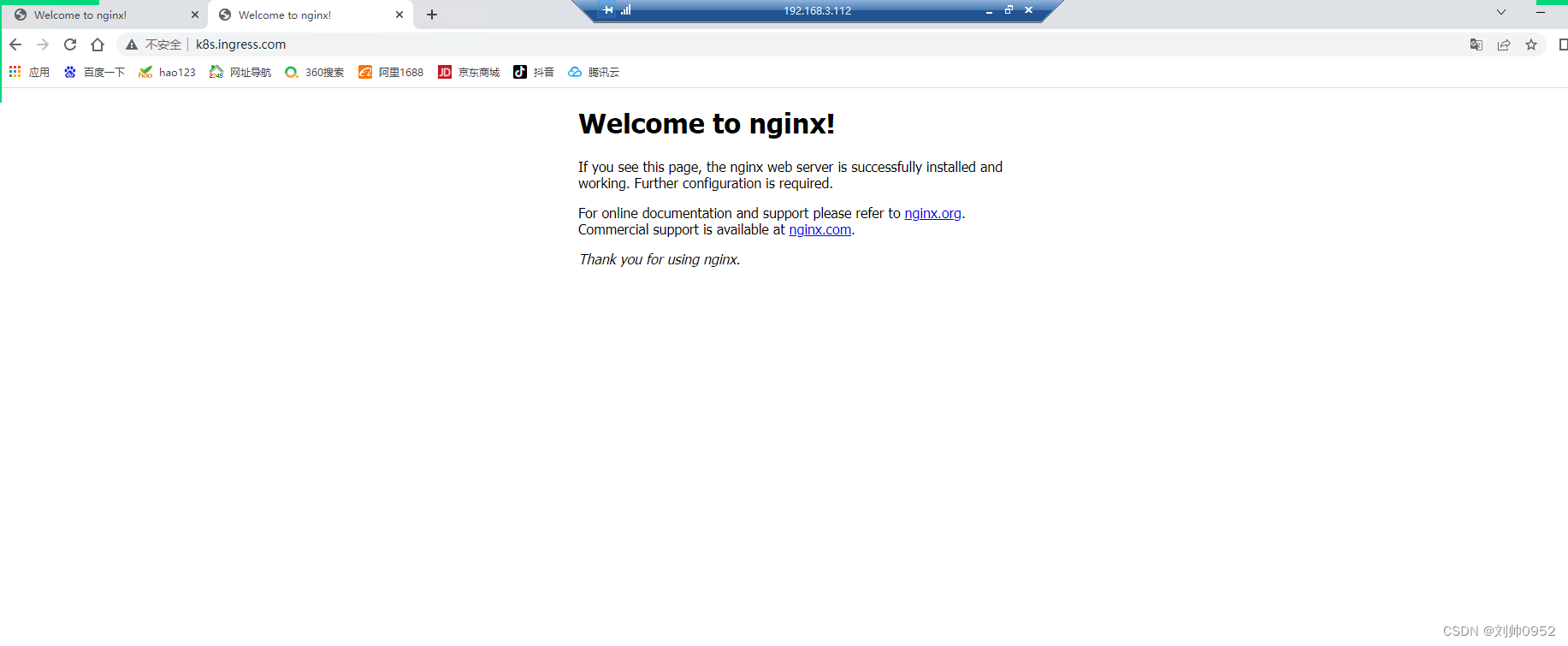

Test access

Open any node IP with the NodePort (30037 here). The page loads normally.

Deploy the ingress controller

Here we use the officially maintained NGINX controller (ingress-nginx).

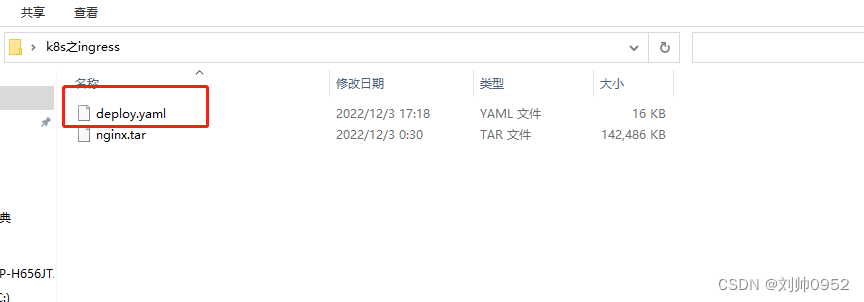

First, the official YAML file.

It can be hard to download from the official URL, so a modified YAML file is provided below. The upstream file is:

https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.3.1/deploy/static/provider/cloud/deploy.yaml

Fetch the YAML file on the Internet-connected server:

```
[root@localhost ~]# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.3.1/deploy/static/provider/cloud/deploy.yaml
--2022-12-03 04:18:00-- https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.3.1/deploy/static/provider/cloud/deploy.yaml
Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.109.133, 185.199.111.133, 185.199.110.133, ...
Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.109.133|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 15543 (15K) [text/plain]
Saving to: ‘deploy.yaml’
100%[==============================================================================================================================>] 15,543 --.-K/s in 0.02s
2022-12-03 04:18:19 (936 KB/s) - ‘deploy.yaml’ saved [15543/15543]
[root@localhost ~]# ls
anaconda-ks.cfg deploy.yaml docker docker-19.03.9.tgz ingress-nginx.yaml nginx.tar
[root@localhost ~]# rm -rf ingress-nginx.yaml
[root@localhost ~]# ls
anaconda-ks.cfg deploy.yaml docker docker-19.03.9.tgz nginx.tar   # deploy.yaml is the file we need
```

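Copy it out and import it into the intranet server

Upload the deploy.yaml downloaded above to the master machine. The first `ls` below is empty; the second one runs after the upload. If the parent `yaml` directory does not exist yet, use `mkdir -p`.

```
[root@k8s-master1 ~]# mkdir yaml/ingress
[root@k8s-master1 ~]# cd yaml/ingress/
[root@k8s-master1 ingress]# ls
[root@k8s-master1 ingress]# ls
deploy.yaml
```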

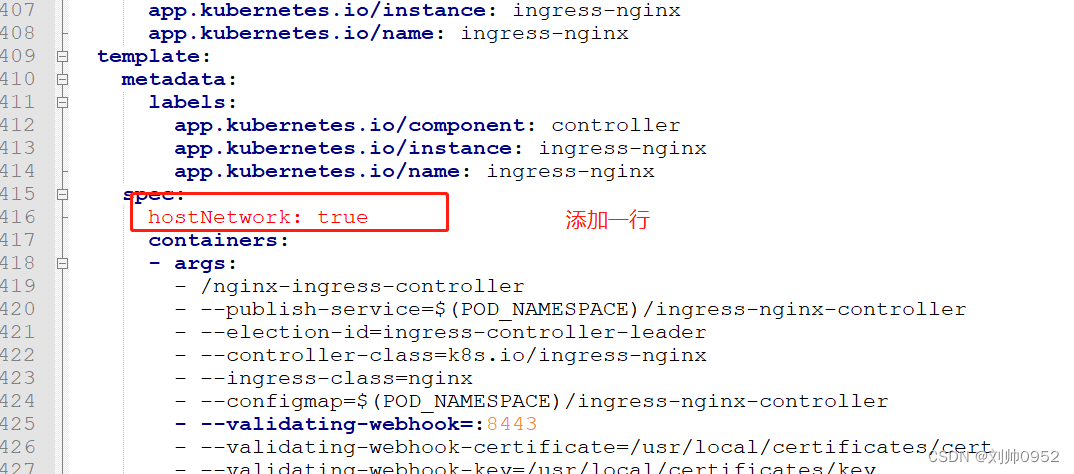

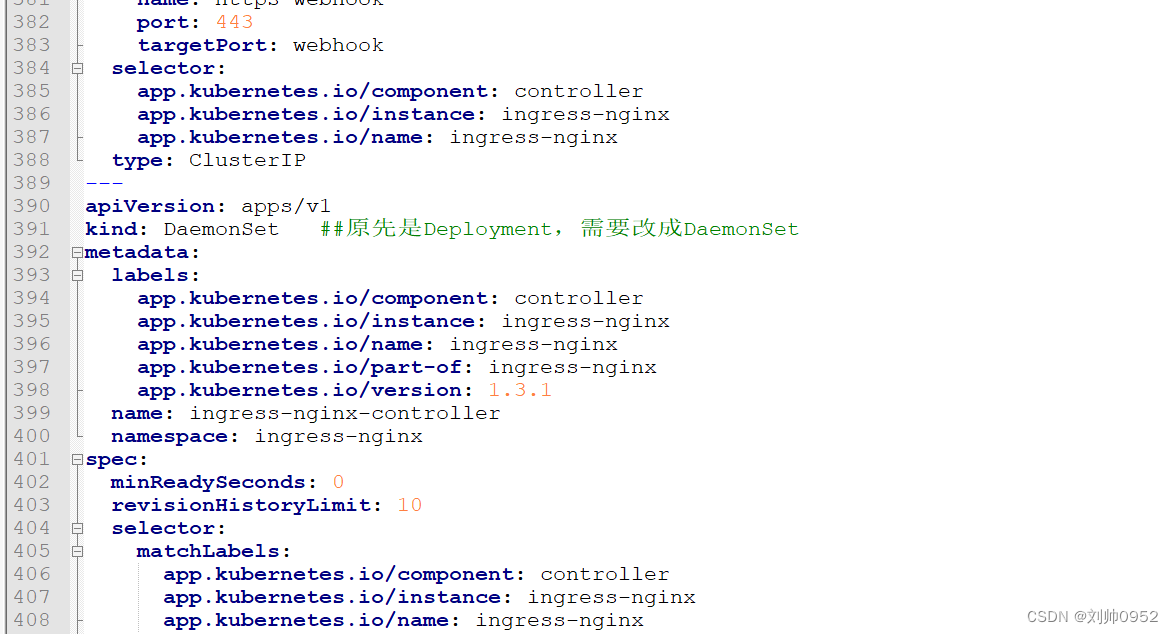

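Make the following changes to deploy.yaml:

hostNetwork

Setting `hostNetwork: true` makes the controller Pod use the node's own network, so the controller listens directly on the node's ports, usually 80 and 443.

DaemonSet

A Deployment may schedule several controller Pods onto the same node, which defeats the purpose of high availability. A DaemonSet runs exactly one Pod per eligible node. If you have the means, you can also put DNS-based load balancing in front of the nodes to spread the load.

Note that the Kubernetes documentation recommends `dnsPolicy: ClusterFirstWithHostNet` for `hostNetwork` Pods that need to resolve in-cluster names; the manifest below keeps the upstream `ClusterFirst`.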
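If you prefer to apply these two edits mechanically rather than by hand, the awk function below is a minimal sketch. It assumes, and you should check with `grep -n` first, that the upstream deploy.yaml contains `kind: Deployment` exactly once, for the controller. It also assumes the controller Pod spec has a line `      dnsPolicy: ClusterFirst` indented six spaces. The output file name is arbitrary.

```shell
#!/bin/sh
# Sketch: turn the controller Deployment into a DaemonSet and add hostNetwork.
# Assumptions (verify with grep -n before use):
#   - "kind: Deployment" appears once, for the controller;
#   - the controller Pod spec has "      dnsPolicy: ClusterFirst" (6-space indent).
to_daemonset_hostnet() {
  awk '
    $0 == "kind: Deployment" { print "kind: DaemonSet"; next }        # swap the workload kind
    $0 == "      dnsPolicy: ClusterFirst" { print "      hostNetwork: true" }  # add the field in the Pod spec
    { print }
  ' "$1" > "$2"
}
```

Usage: `to_daemonset_hostnet deploy.yaml deploy-daemonset.yaml`, then `grep -nE 'kind: DaemonSet|hostNetwork' deploy-daemonset.yaml` to confirm both edits landed where expected.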

Edit deploy.yaml

Final YAML file:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  name: ingress-nginx
---
apiVersion: v1
automountServiceAccountToken: true
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx
  namespace: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - namespaces
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - configmaps
  - pods
  - secrets
  - endpoints
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resourceNames:
  - ingress-controller-leader
  resources:
  - configmaps
  verbs:
  - get
  - update
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - create
- apiGroups:
  - coordination.k8s.io
  resourceNames:
  - ingress-controller-leader
  resources:
  - leases
  verbs:
  - get
  - update
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - create
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
  namespace: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - secrets
  verbs:
  - get
  - create
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - endpoints
  - nodes
  - pods
  - secrets
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
rules:
- apiGroups:
  - admissionregistration.k8s.io
  resources:
  - validatingwebhookconfigurations
  verbs:
  - get
  - update
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
- kind: ServiceAccount
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
- kind: ServiceAccount
  name: ingress-nginx-admission
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
- kind: ServiceAccount
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx-admission
subjects:
- kind: ServiceAccount
  name: ingress-nginx-admission
  namespace: ingress-nginx
---
apiVersion: v1
data:
  allow-snippet-annotations: "true"
kind: ConfigMap
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-controller
  namespace: ingress-nginx
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  externalTrafficPolicy: Local
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - appProtocol: http
    name: http
    port: 80
    protocol: TCP
    targetPort: http
  - appProtocol: https
    name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  type: LoadBalancer
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-controller-admission
  namespace: ingress-nginx
spec:
  ports:
  - appProtocol: https
    name: https-webhook
    port: 443
    targetPort: webhook
  selector:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  type: ClusterIP
---
apiVersion: apps/v1
kind: DaemonSet   # originally Deployment; changed to DaemonSet
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  minReadySeconds: 0
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app.kubernetes.io/component: controller
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/name: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
    spec:
      hostNetwork: true   # added: listen directly on the node's ports 80/443
      containers:
      - args:
        - /nginx-ingress-controller
        - --publish-service=$(POD_NAMESPACE)/ingress-nginx-controller
        - --election-id=ingress-controller-leader
        - --controller-class=k8s.io/ingress-nginx
        - --ingress-class=nginx
        - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
        - --validating-webhook=:8443
        - --validating-webhook-certificate=/usr/local/certificates/cert
        - --validating-webhook-key=/usr/local/certificates/key
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: LD_PRELOAD
          value: /usr/local/lib/libmimalloc.so
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.3.1
        imagePullPolicy: IfNotPresent
        lifecycle:
          preStop:
            exec:
              command:
              - /wait-shutdown
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        name: controller
        ports:
        - containerPort: 80
          name: http
          protocol: TCP
        - containerPort: 443
          name: https
          protocol: TCP
        - containerPort: 8443
          name: webhook
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        resources:
          requests:
            cpu: 100m
            memory: 90Mi
        securityContext:
          allowPrivilegeEscalation: true
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - ALL
          runAsUser: 101
        volumeMounts:
        - mountPath: /usr/local/certificates/
          name: webhook-cert
          readOnly: true
      dnsPolicy: ClusterFirst
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
      - name: webhook-cert
        secret:
          secretName: ingress-nginx-admission
---
apiVersion: batch/v1
kind: Job
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission-create
  namespace: ingress-nginx
spec:
  template:
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.3.1
      name: ingress-nginx-admission-create
    spec:
      containers:
      - args:
        - create
        - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc
        - --namespace=$(POD_NAMESPACE)
        - --secret-name=ingress-nginx-admission
        env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.3.0
        imagePullPolicy: IfNotPresent
        name: create
        securityContext:
          allowPrivilegeEscalation: false
      nodeSelector:
        kubernetes.io/os: linux
      restartPolicy: OnFailure
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 2000
      serviceAccountName: ingress-nginx-admission
---
apiVersion: batch/v1
kind: Job
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission-patch
  namespace: ingress-nginx
spec:
  template:
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.3.1
      name: ingress-nginx-admission-patch
    spec:
      containers:
      - args:
        - patch
        - --webhook-name=ingress-nginx-admission
        - --namespace=$(POD_NAMESPACE)
        - --patch-mutating=false
        - --secret-name=ingress-nginx-admission
        - --patch-failure-policy=Fail
        env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.3.0
        imagePullPolicy: IfNotPresent
        name: patch
        securityContext:
          allowPrivilegeEscalation: false
      nodeSelector:
        kubernetes.io/os: linux
      restartPolicy: OnFailure
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 2000
      serviceAccountName: ingress-nginx-admission
---
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: nginx
spec:
  controller: k8s.io/ingress-nginx
---
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
webhooks:
- admissionReviewVersions:
  - v1
  clientConfig:
    service:
      name: ingress-nginx-controller-admission
      namespace: ingress-nginx
      path: /networking/v1/ingresses
  failurePolicy: Fail
  matchPolicy: Equivalent
  name: validate.nginx.ingress.kubernetes.io
  rules:
  - apiGroups:
    - networking.k8s.io
    apiVersions:
    - v1
    operations:
    - CREATE
    - UPDATE
    resources:
    - ingresses
  sideEffects: None
```

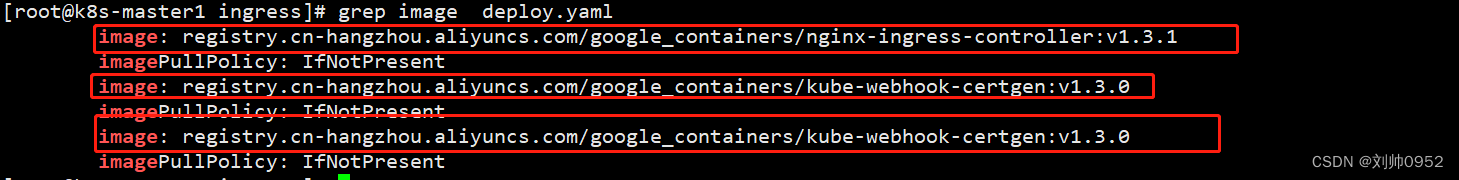

```
[root@k8s-master1 ingress]# grep image deploy.yaml
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.3.1
        imagePullPolicy: IfNotPresent
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.3.0
        imagePullPolicy: IfNotPresent
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.3.0
        imagePullPolicy: IfNotPresent
```

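As usual, pull these images on the Internet-connected server, package them into tar files, upload them to the intranet k8s cluster, and `docker load` them. The process is simple.

It is the same procedure we used for the nginx image above, so I will go through it quickly here. If anything is unclear, refer back to how the nginx image was pulled.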
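With more than one image, it helps to derive the list straight from the manifest. The sketch below prints the unique `image:` values in a YAML file. It also builds a tar file name by replacing `/` with `-` and `:` with `_`, which matches the file names that show up on k8s-node2 later. The naming scheme is an assumption for convenience, not something Docker requires.

```shell
#!/bin/sh
# Sketch: list the unique images referenced by a manifest, and derive a tar
# file name for each ("/" -> "-", ":" -> "_").
list_images() {
  awk '{
    for (i = 1; i < NF; i++)
      if ($i == "image:") { v = $(i + 1); gsub(/"/, "", v); print v; break }
  }' "$1" | sort -u
}

tar_name() {
  printf '%s.tar\n' "$(printf '%s' "$1" | tr ':/' '_-')"
}

# On the Internet-connected machine (needs Docker), for example:
#   for img in $(list_images deploy.yaml); do
#     docker pull "$img" && docker save "$img" > "$(tar_name "$img")"
#   done
```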

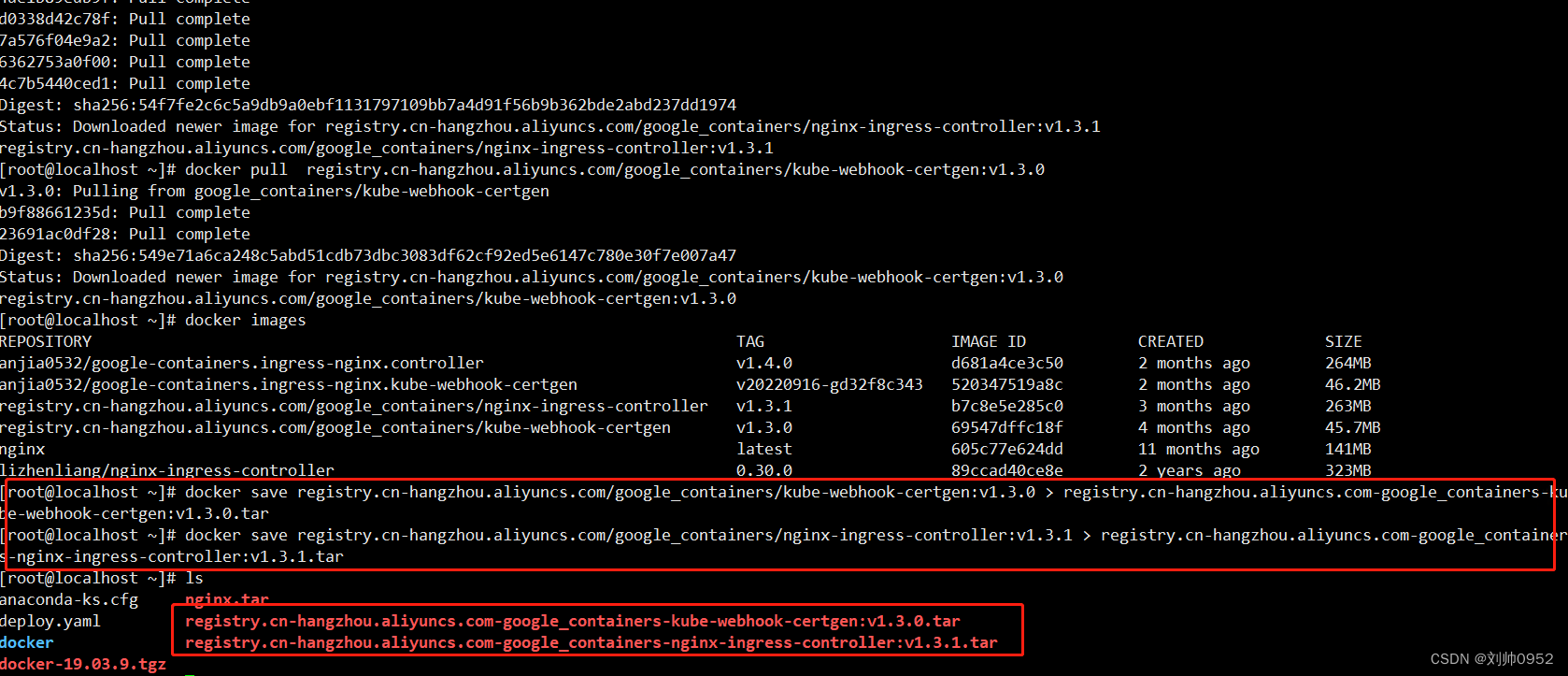

Pull the required images on the Internet-connected server

```
[root@localhost ~]# systemctl start docker
[root@localhost ~]# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.3.1
v1.3.1: Pulling from google_containers/nginx-ingress-controller
213ec9aee27d: Already exists
2e0679428050: Already exists
3bb10086d473: Already exists
a9e78a589ab3: Already exists
a101ab4f42d5: Already exists
4f4fb700ef54: Already exists
dc27c0ef0bf2: Pull complete
3d5f4bb7af2f: Pull complete
8fb78251a937: Pull complete
4de1b89edb9f: Pull complete
d0338d42c78f: Pull complete
7a576f04e9a2: Pull complete
6362753a0f00: Pull complete
4c7b5440ced1: Pull complete
Digest: sha256:54f7fe2c6c5a9db9a0ebf1131797109bb7a4d91f56b9b362bde2abd237dd1974
Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.3.1
registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.3.1
[root@localhost ~]# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.3.0
v1.3.0: Pulling from google_containers/kube-webhook-certgen
b9f88661235d: Pull complete
23691ac0df28: Pull complete
Digest: sha256:549e71a6ca248c5abd51cdb73dbc3083df62cf92ed5e6147c780e30f7e007a47
Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.3.0
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.3.0
[root@localhost ~]# docker images
REPOSITORY TAG IMAGE ID CREATED SIZE
anjia0532/google-containers.ingress-nginx.controller v1.4.0 d681a4ce3c50 2 months ago 264MB
anjia0532/google-containers.ingress-nginx.kube-webhook-certgen v20220916-gd32f8c343 520347519a8c 2 months ago 46.2MB
registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller v1.3.1 b7c8e5e285c0 3 months ago 263MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen v1.3.0 69547dffc18f 4 months ago 45.7MB
nginx latest 605c77e624dd 11 months ago 141MB
lizhenliang/nginx-ingress-controller 0.30.0 89ccad40ce8e 2 years ago 323MB
[root@localhost ~]# docker save registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.3.0 > registry.cn-hangzhou.aliyuncs.com-google_containers-kube-webhook-certgen:v1.3.0.tar
[root@localhost ~]# docker save registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.3.1 > registry.cn-hangzhou.aliyuncs.com-google_containers-nginx-ingress-controller:v1.3.1.tar
[root@localhost ~]# ls
anaconda-ks.cfg nginx.tar
deploy.yaml registry.cn-hangzhou.aliyuncs.com-google_containers-kube-webhook-certgen:v1.3.0.tar
docker registry.cn-hangzhou.aliyuncs.com-google_containers-nginx-ingress-controller:v1.3.1.tar
docker-19.03.9.tgz
```

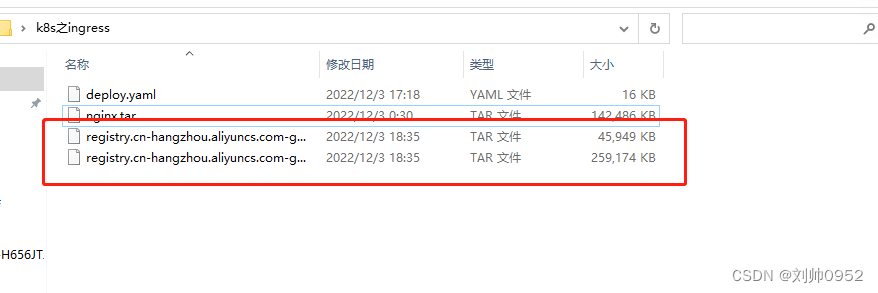

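Copy the tar files to the intranet servers using whatever transfer method works for you. The file names saved above contain `:`, but on k8s-node2 below they appear with `_` instead, presumably renamed during the transfer.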

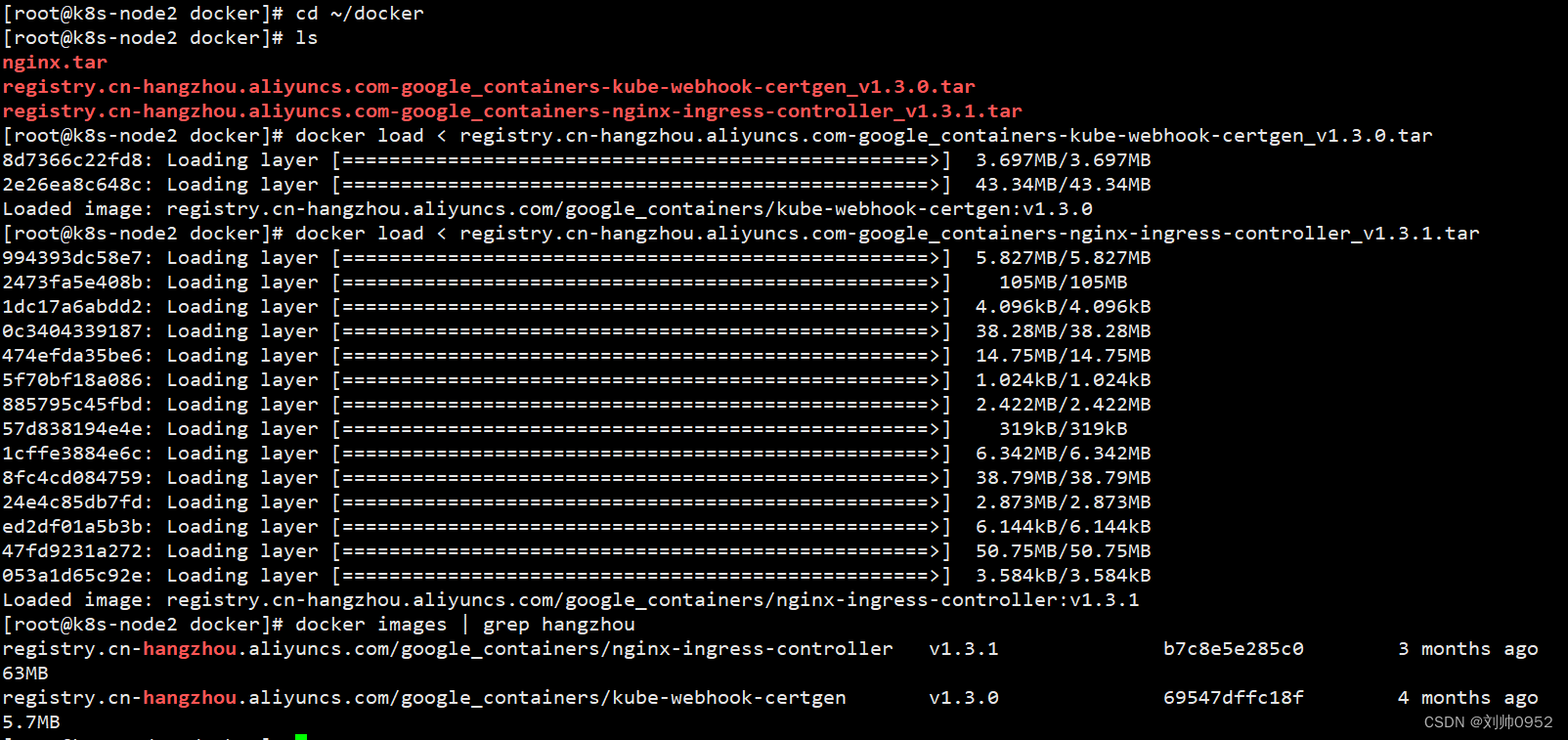

On the intranet side, k8s-node2 is used as the example. Every other node needs the same steps.

```
[root@k8s-node2 docker]# cd ~/docker
[root@k8s-node2 docker]# ls
nginx.tar
registry.cn-hangzhou.aliyuncs.com-google_containers-kube-webhook-certgen_v1.3.0.tar
registry.cn-hangzhou.aliyuncs.com-google_containers-nginx-ingress-controller_v1.3.1.tar
[root@k8s-node2 docker]# docker load < registry.cn-hangzhou.aliyuncs.com-google_containers-kube-webhook-certgen_v1.3.0.tar
8d7366c22fd8: Loading layer [==================================================>] 3.697MB/3.697MB
2e26ea8c648c: Loading layer [==================================================>] 43.34MB/43.34MB
Loaded image: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.3.0
[root@k8s-node2 docker]# docker load < registry.cn-hangzhou.aliyuncs.com-google_containers-nginx-ingress-controller_v1.3.1.tar
994393dc58e7: Loading layer [==================================================>] 5.827MB/5.827MB
2473fa5e408b: Loading layer [==================================================>] 105MB/105MB
1dc17a6abdd2: Loading layer [==================================================>] 4.096kB/4.096kB
0c3404339187: Loading layer [==================================================>] 38.28MB/38.28MB
474efda35be6: Loading layer [==================================================>] 14.75MB/14.75MB
5f70bf18a086: Loading layer [==================================================>] 1.024kB/1.024kB
885795c45fbd: Loading layer [==================================================>] 2.422MB/2.422MB
57d838194e4e: Loading layer [==================================================>] 319kB/319kB
1cffe3884e6c: Loading layer [==================================================>] 6.342MB/6.342MB
8fc4cd084759: Loading layer [==================================================>] 38.79MB/38.79MB
24e4c85db7fd: Loading layer [==================================================>] 2.873MB/2.873MB
ed2df01a5b3b: Loading layer [==================================================>] 6.144kB/6.144kB
47fd9231a272: Loading layer [==================================================>] 50.75MB/50.75MB
053a1d65c92e: Loading layer [==================================================>] 3.584kB/3.584kB
Loaded image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.3.1
[root@k8s-node2 docker]# docker images | grep hangzhou
registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller v1.3.1 b7c8e5e285c0 3 months ago 263MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen v1.3.0 69547dffc18f 4 months ago 45.7MB
```

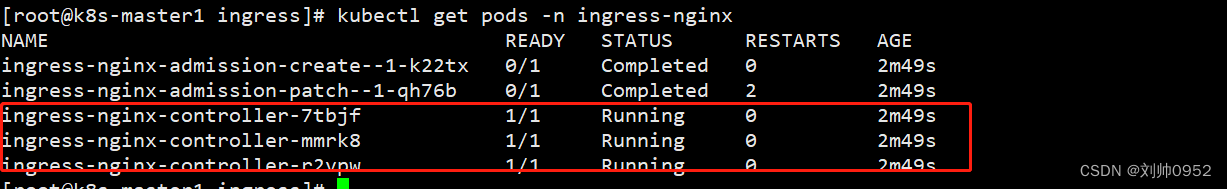

Create the ingress controller

```
[root@k8s-master1 ingress]# kubectl apply -f deploy.yaml
namespace/ingress-nginx created
serviceaccount/ingress-nginx created
serviceaccount/ingress-nginx-admission created
role.rbac.authorization.k8s.io/ingress-nginx created
role.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrole.rbac.authorization.k8s.io/ingress-nginx created
clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created
rolebinding.rbac.authorization.k8s.io/ingress-nginx created
rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
configmap/ingress-nginx-controller created
service/ingress-nginx-controller created
service/ingress-nginx-controller-admission created
daemonset.apps/ingress-nginx-controller created
job.batch/ingress-nginx-admission-create created
job.batch/ingress-nginx-admission-patch created
ingressclass.networking.k8s.io/nginx created
validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created
[root@k8s-master1 ingress]# kubectl get pods -n ingress-nginx
NAME READY STATUS RESTARTS AGE
ingress-nginx-admission-create--1-k22tx 0/1 Completed 0 2m49s
ingress-nginx-admission-patch--1-qh76b 0/1 Completed 2 2m49s
ingress-nginx-controller-7tbjf 1/1 Running 0 2m49s
ingress-nginx-controller-mmrk8 1/1 Running 0 2m49s
ingress-nginx-controller-r2vpw 1/1 Running 0 2m49s
```

Write the rule YAML

The domain name below is a made-up test value; use your own.

```
[root@k8s-master1 ingress]# vi ingress.yaml
[root@k8s-master1 ingress]# cat ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-nginx-test
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  rules:
  - host: k8s.ingress.com
    http:
      paths:
      - backend:
          service:
            name: web
            port:
              number: 80
        path: /
        pathType: Prefix
```

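**Note the annotation `kubernetes.io/ingress.class: "nginx"` on the Ingress object. It tells Kubernetes that this Ingress is to be handled by the ingress-nginx controller. I lost three hours on this.** Without a class, ingress-nginx 1.x ignores the Ingress, because the `nginx` IngressClass in deploy.yaml is not marked as the cluster default. With `networking.k8s.io/v1`, the annotation is deprecated in favor of the `spec.ingressClassName` field; ingress-nginx still honors the annotation.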
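For reference, the same rule selected through `spec.ingressClassName` instead of the annotation looks like the sketch below. `nginx` is the IngressClass created by deploy.yaml. Set one or the other on an Ingress, not both.

```shell
#!/bin/sh
# Sketch: the ingress.yaml rule, selecting the controller via spec.ingressClassName
# instead of the deprecated kubernetes.io/ingress.class annotation.
cat > ingress-classname.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-nginx-test
spec:
  ingressClassName: nginx       # IngressClass "nginx" from deploy.yaml
  rules:
  - host: k8s.ingress.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: web
            port:
              number: 80
EOF
# Apply with: kubectl apply -f ingress-classname.yaml
```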

Create the rule

```
[root@k8s-master1 ingress]# kubectl apply -f ingress.yaml
ingress.networking.k8s.io/ingress-nginx-test created
```

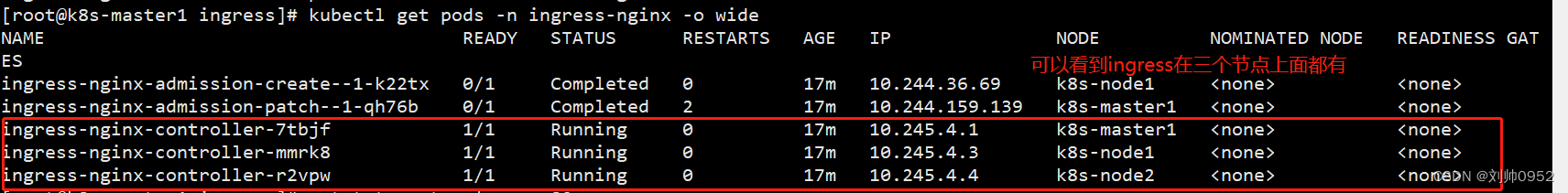

```
[root@k8s-master1 ingress]# kubectl get pods -n ingress-nginx -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
ingress-nginx-admission-create--1-k22tx 0/1 Completed 0 17m 10.244.36.69 k8s-node1 <none> <none>
ingress-nginx-admission-patch--1-qh76b 0/1 Completed 2 17m 10.244.159.139 k8s-master1 <none> <none>
ingress-nginx-controller-7tbjf 1/1 Running 0 17m 10.245.4.1 k8s-master1 <none> <none>
ingress-nginx-controller-mmrk8 1/1 Running 0 17m 10.245.4.3 k8s-node1 <none> <none>
ingress-nginx-controller-r2vpw 1/1 Running 0 17m 10.245.4.4 k8s-node2 <none> <none>
```
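The controller Pods above report the node IPs as their own IPs, which is `hostNetwork` at work, and there is one per node, which is the DaemonSet at work. To check the one-per-node property mechanically, the sketch below counts controller Pods per node from "NAME NODE" pairs on stdin. It assumes the input comes from `kubectl get pods -n ingress-nginx --no-headers -o custom-columns=NAME:.metadata.name,NODE:.spec.nodeName`.

```shell
#!/bin/sh
# Sketch: count ingress-nginx controller Pods per node.
# stdin: "NAME NODE" pairs, e.g. from
#   kubectl get pods -n ingress-nginx --no-headers \
#     -o custom-columns=NAME:.metadata.name,NODE:.spec.nodeName
controllers_per_node() {
  awk '$1 ~ /^ingress-nginx-controller-/ { n[$2]++ }        # skip the admission Job Pods
       END { for (node in n) print node, n[node] }' | sort
}
```

With the DaemonSet in place, every node should be listed once with a count of 1.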

检查监听的端口

[root@k8s-master1 ingress]# ss -antup | grep 80 ####80端口

tcp LISTEN 0 128 10.245.4.1:2380 *:* users:(("etcd",pid=942,fd=5))

tcp LISTEN 0 128 *:80 *:* users:(("nginx",pid=89482,fd=18),("nginx",pid=89476,fd=18))

tcp LISTEN 0 128 *:80 *:* users:(("nginx",pid=89481,fd=10),("nginx",pid=89476,fd=10))

tcp ESTAB 0 0 10.245.4.1:2379 10.245.4.1:52800 users:(("etcd",pid=942,fd=22))

tcp ESTAB 0 0 10.245.4.1:2380 10.245.4.4:52256 users:(("etcd",pid=942,fd=9))

tcp ESTAB 0 0 10.245.4.1:39916 10.245.4.4:2380 users:(("etcd",pid=942,fd=31))

tcp ESTAB 0 0 10.245.4.1:2380 10.245.4.4:51942 users:(("etcd",pid=942,fd=16))

tcp ESTAB 0 0 10.245.4.1:58802 10.245.4.4:2379 users:(("kube-apiserver",pid=652,fd=130))

tcp ESTAB 0 0 10.245.4.1:39918 10.245.4.4:2380 users:(("etcd",pid=942,fd=32))

tcp ESTAB 0 0 10.245.4.1:2380 10.245.4.3:32886 users:(("etcd",pid=942,fd=116))

tcp ESTAB 0 0 10.245.4.1:2380 10.245.4.4:48934 users:(("etcd",pid=942,fd=20))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.4:51680 users:(("kube-apiserver",pid=652,fd=321))

tcp ESTAB 0 0 10.245.4.1:59880 10.245.4.3:2379 users:(("kube-apiserver",pid=652,fd=139))

tcp ESTAB 0 0 10.245.4.1:2380 10.245.4.3:32882 users:(("etcd",pid=942,fd=106))

tcp ESTAB 0 0 10.245.4.1:58580 10.245.4.4:2379 users:(("kube-apiserver",pid=652,fd=17))

tcp ESTAB 0 0 10.245.4.1:59180 10.245.4.4:2379 users:(("kube-apiserver",pid=652,fd=51))

tcp ESTAB 0 0 10.245.4.1:2379 10.245.4.1:53080 users:(("etcd",pid=942,fd=57))

tcp ESTAB 0 0 10.245.4.1:41346 10.245.4.3:2380 users:(("etcd",pid=942,fd=12))

tcp ESTAB 0 0 10.245.4.1:53232 10.245.4.1:2379 users:(("kube-apiserver",pid=652,fd=180))

tcp ESTAB 0 0 10.245.4.1:39930 10.245.4.4:2380 users:(("etcd",pid=942,fd=11))

tcp ESTAB 0 0 10.245.4.1:53032 10.245.4.1:2379 users:(("kube-apiserver",pid=652,fd=80))

tcp ESTAB 0 0 10.245.4.1:59980 10.245.4.3:2379 users:(("kube-apiserver",pid=652,fd=261))

tcp ESTAB 0 0 10.245.4.1:53080 10.245.4.1:2379 users:(("kube-apiserver",pid=652,fd=104))

tcp ESTAB 0 0 10.245.4.1:59380 10.245.4.4:2379 users:(("kube-apiserver",pid=652,fd=285))

tcp ESTAB 0 0 10.245.4.1:2380 10.245.4.3:32906 users:(("etcd",pid=942,fd=117))

tcp ESTAB 0 0 10.245.4.1:2379 10.245.4.1:53280 users:(("etcd",pid=942,fd=90))

tcp ESTAB 0 0 10.245.4.1:41348 10.245.4.3:2380 users:(("etcd",pid=942,fd=10))

tcp ESTAB 0 0 10.245.4.1:53696 10.245.4.1:2379 users:(("kube-apiserver",pid=652,fd=280))

tcp TIME-WAIT 0 0 127.0.0.1:10246 127.0.0.1:37080

tcp ESTAB 0 0 10.245.4.1:2380 10.245.4.4:52254 users:(("etcd",pid=942,fd=8))

tcp ESTAB 0 0 10.245.4.1:52800 10.245.4.1:2379 users:(("kube-apiserver",pid=652,fd=13))

tcp ESTAB 0 0 10.245.4.1:58808 10.245.4.4:2379 users:(("kube-apiserver",pid=652,fd=133))

tcp ESTAB 0 0 10.245.4.1:59808 10.245.4.3:2379 users:(("kube-apiserver",pid=652,fd=27))

tcp ESTAB 0 0 10.245.4.1:2379 10.245.4.1:53220 users:(("etcd",pid=942,fd=80))

tcp ESTAB 0 0 10.245.4.1:41132 10.245.4.3:2380 users:(("etcd",pid=942,fd=114))

tcp ESTAB 0 0 10.245.4.1:53280 10.245.4.1:2379 users:(("kube-apiserver",pid=652,fd=204))

tcp LISTEN 0 128 :::80 :::* users:(("nginx",pid=89481,fd=11),("nginx",pid=89476,fd=11))

tcp LISTEN 0 128 :::80 :::* users:(("nginx",pid=89482,fd=19),("nginx",pid=89476,fd=19))

[root@k8s-master1 ingress]# ss -antup | grep 443 #### port 443

tcp LISTEN 0 128 10.245.4.1:6443 *:* users:(("kube-apiserver",pid=652,fd=8))

tcp LISTEN 0 128 *:443 *:* users:(("nginx",pid=89482,fd=20),("nginx",pid=89476,fd=20))

tcp LISTEN 0 128 *:443 *:* users:(("nginx",pid=89481,fd=12),("nginx",pid=89476,fd=12))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.4:37414 users:(("kube-apiserver",pid=652,fd=270))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.3:56688 users:(("kube-apiserver",pid=652,fd=315))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.4:37412 users:(("kube-apiserver",pid=652,fd=275))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.3:56686 users:(("kube-apiserver",pid=652,fd=18))

tcp ESTAB 0 0 10.245.4.1:42216 10.0.0.1:443 users:(("calico-node",pid=2499,fd=5))

tcp ESTAB 0 0 10.245.4.1:51114 10.0.0.1:443 users:(("nginx-ingress-c",pid=89456,fd=3))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.1:51114 users:(("kube-apiserver",pid=652,fd=322))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.1:42948 users:(("kube-apiserver",pid=652,fd=273))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.1:47234 users:(("kube-apiserver",pid=652,fd=16))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.3:56692 users:(("kube-apiserver",pid=652,fd=317))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.4:54970 users:(("kube-apiserver",pid=652,fd=311))

tcp ESTAB 0 0 10.245.4.1:42952 10.245.4.1:6443 users:(("kubelet",pid=66488,fd=12))

tcp ESTAB 0 0 10.245.4.1:47234 10.245.4.1:6443 users:(("kube-proxy",pid=81162,fd=12))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.1:42944 users:(("kube-apiserver",pid=652,fd=310))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.1:42942 users:(("kube-apiserver",pid=652,fd=271))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.3:51218 users:(("kube-apiserver",pid=652,fd=320))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.4:37418 users:(("kube-apiserver",pid=652,fd=252))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.4:51680 users:(("kube-apiserver",pid=652,fd=321))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.1:42218 users:(("kube-apiserver",pid=652,fd=268))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.4:33624 users:(("kube-apiserver",pid=652,fd=304))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.1:59506 users:(("kube-apiserver",pid=652,fd=253))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.4:33620 users:(("kube-apiserver",pid=652,fd=290))

tcp ESTAB 0 0 10.245.4.1:59506 10.245.4.1:6443 users:(("kube-scheduler",pid=662,fd=11))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.1:42212 users:(("kube-apiserver",pid=652,fd=267))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.3:58624 users:(("kube-apiserver",pid=652,fd=309))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.3:57754 users:(("kube-apiserver",pid=652,fd=254))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.3:58622 users:(("kube-apiserver",pid=652,fd=301))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.4:33622 users:(("kube-apiserver",pid=652,fd=293))

tcp ESTAB 0 0 10.245.4.1:42942 10.245.4.1:6443 users:(("kubelet",pid=66488,fd=24))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.3:58626 users:(("kube-apiserver",pid=652,fd=312))

tcp TIME-WAIT 0 0 127.0.0.1:44438 127.0.0.1:9099

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.4:37426 users:(("kube-apiserver",pid=652,fd=323))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.4:34836 users:(("kube-apiserver",pid=652,fd=313))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.3:58614 users:(("kube-apiserver",pid=652,fd=259))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.1:42216 users:(("kube-apiserver",pid=652,fd=272))

tcp ESTAB 0 0 10.245.4.1:42950 10.245.4.1:6443 users:(("kubelet",pid=66488,fd=40))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.1:60060 users:(("kube-apiserver",pid=652,fd=247))

tcp ESTAB 0 0 10.245.4.1:60060 10.245.4.1:6443 users:(("kube-apiserver",pid=652,fd=278))

tcp ESTAB 0 0 10.245.4.1:42212 10.0.0.1:443 users:(("calico-node",pid=2498,fd=5))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.1:42950 users:(("kube-apiserver",pid=652,fd=324))

tcp ESTAB 0 0 10.245.4.1:42944 10.245.4.1:6443 users:(("kubelet",pid=66488,fd=28))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.1:42952 users:(("kube-apiserver",pid=652,fd=325))

tcp ESTAB 0 0 10.245.4.1:51184 10.245.4.1:6443 users:(("kube-controller",pid=105553,fd=10))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.4:43624 users:(("kube-apiserver",pid=652,fd=249))

tcp ESTAB 0 0 10.245.4.1:42948 10.245.4.1:6443 users:(("kubelet",pid=66488,fd=39))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.3:58618 users:(("kube-apiserver",pid=652,fd=307))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.4:37424 users:(("kube-apiserver",pid=652,fd=318))

tcp ESTAB 0 0 10.245.4.1:6443 10.245.4.1:51184 users:(("kube-apiserver",pid=652,fd=251))

tcp ESTAB 0 0 10.245.4.1:42218 10.0.0.1:443 users:(("calico-node",pid=2497,fd=5))

tcp LISTEN 0 128 :::8443 :::* users:(("nginx-ingress-c",pid=89456,fd=40))

tcp LISTEN 0 128 :::443 :::* users:(("nginx",pid=89481,fd=13),("nginx",pid=89476,fd=13))

tcp LISTEN 0 128 :::443 :::* users:(("nginx",pid=89482,fd=21),("nginx",pid=89476,fd=21))
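A plain `grep 443` also matches every `6443` connection to kube-apiserver, which buries the few lines that matter. As a hedged sketch (the function name `listeners_on_port` is hypothetical, and it assumes the whitespace-separated `ss -antup` layout shown above: Netid, State, Recv-Q, Send-Q, local address:port, peer address:port, then the `users:` field), the Python below keeps only sockets in the LISTEN state whose local port is exactly the one requested:

```python
import re
from typing import List, Tuple


def listeners_on_port(ss_output: str, port: int) -> List[Tuple[str, List[str]]]:
    """Return (local_address, process_names) for LISTEN sockets on exactly `port`."""
    results = []
    for line in ss_output.splitlines():
        fields = line.split()
        # Need at least netid, state, recv-q, send-q, local, peer; skip prompts and other states
        if len(fields) < 6 or fields[1] != "LISTEN":
            continue
        local = fields[4]
        # rpartition works for "10.245.4.1:6443", "*:443" and IPv6 ":::443" alike
        _, _, local_port = local.rpartition(":")
        if local_port != str(port):
            continue
        users = fields[6] if len(fields) > 6 else ""
        # users:(("nginx",pid=...),("nginx",pid=...)) -> ["nginx"]
        names = sorted(set(re.findall(r'\("([^"]+)"', users)))
        results.append((local, names))
    return results


SAMPLE = """\
tcp LISTEN 0 128 10.245.4.1:6443 *:* users:(("kube-apiserver",pid=652,fd=8))
tcp LISTEN 0 128 *:443 *:* users:(("nginx",pid=89482,fd=20),("nginx",pid=89476,fd=20))
tcp ESTAB 0 0 10.245.4.1:51114 10.0.0.1:443 users:(("nginx-ingress-c",pid=89456,fd=3))
tcp LISTEN 0 128 :::8443 :::* users:(("nginx-ingress-c",pid=89456,fd=40))
tcp LISTEN 0 128 :::443 :::* users:(("nginx",pid=89481,fd=13),("nginx",pid=89476,fd=13))
"""

print(listeners_on_port(SAMPLE, 443))  # [('*:443', ['nginx']), (':::443', ['nginx'])]
```

Matching the port field exactly, instead of a substring, is what filters out the `:6443` API-server traffic and the ESTAB connections to `10.0.0.1:443`.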

[root@k8s-master1 ingress]# kubectl get pods -n ingress-nginx -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ingress-nginx-admission-create--1-k22tx 0/1 Completed 0 17m 10.244.36.69 k8s-node1 <none> <none>

ingress-nginx-admission-patch--1-qh76b 0/1 Completed 2 17m 10.244.159.139 k8s-master1 <none> <none>

ingress-nginx-controller-7tbjf 1/1 Running 0 17m 10.245.4.1 k8s-master1 <none> <none>

ingress-nginx-controller-mmrk8 1/1 Running 0 17m 10.245.4.3 k8s-node1 <none> <none>

ingress-nginx-controller-r2vpw 1/1 Running 0 17m 10.245.4.4 k8s-node2 <none> <none>

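Here the DNS name will resolve to just one IP address, but there are three to choose from because this cluster has three nodes: the YAML deploys the controller with a DaemonSet, so every node runs one controller pod, and as the IP column above shows, each controller pod uses its node's own address. If you have the option, you can create DNS records for all of these addresses, which spreads the traffic and reduces the load on any single server.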
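To collect those candidate addresses without reading the table by eye, here is a hedged Python sketch (the function name `controller_endpoints` and the `ingress-nginx-controller-` name prefix are assumptions taken from the listing above). It reads IP and NODE from the end of each row, because the last two columns (`<none> <none>`) are fixed while RESTARTS can contain spaces in some kubectl versions; in a real script, `kubectl ... -o json` would be sturdier than parsing columns.

```python
from typing import List, Tuple


def controller_endpoints(pods_output: str,
                         prefix: str = "ingress-nginx-controller-") -> List[Tuple[str, str]]:
    """Return (node, ip) for each Running pod whose name starts with `prefix`."""
    endpoints = []
    for line in pods_output.splitlines():
        fields = line.split()
        # Header, admission jobs and blank lines are skipped by the name check
        if len(fields) < 9 or not fields[0].startswith(prefix):
            continue
        if fields[2] != "Running":
            continue
        # Row ends with: IP NODE NOMINATED-NODE READINESS-GATES
        ip, node = fields[-4], fields[-3]
        endpoints.append((node, ip))
    return endpoints


PODS = """\
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
ingress-nginx-admission-create--1-k22tx 0/1 Completed 0 17m 10.244.36.69 k8s-node1 <none> <none>
ingress-nginx-admission-patch--1-qh76b 0/1 Completed 2 17m 10.244.159.139 k8s-master1 <none> <none>
ingress-nginx-controller-7tbjf 1/1 Running 0 17m 10.245.4.1 k8s-master1 <none> <none>
ingress-nginx-controller-mmrk8 1/1 Running 0 17m 10.245.4.3 k8s-node1 <none> <none>
ingress-nginx-controller-r2vpw 1/1 Running 0 17m 10.245.4.4 k8s-node2 <none> <none>
"""

print(controller_endpoints(PODS))
# [('k8s-master1', '10.245.4.1'), ('k8s-node1', '10.245.4.3'), ('k8s-node2', '10.245.4.4')]
```

Each returned IP is a node address, so any of them (or all of them, as multiple A records) can be the target of the domain name.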

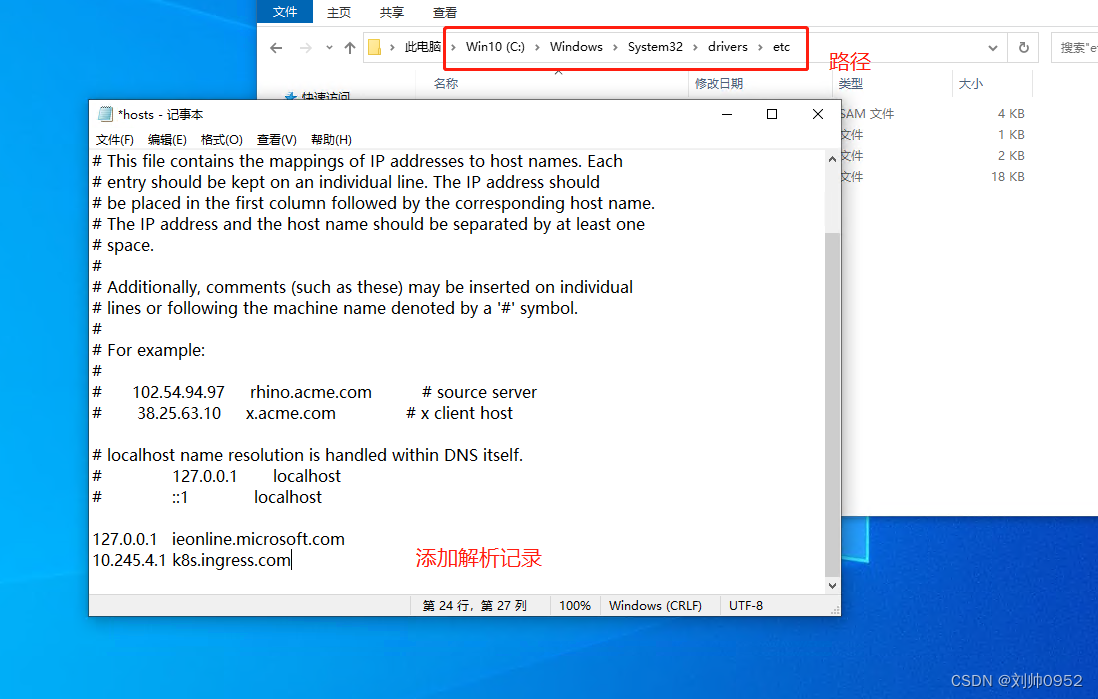

Testing access by domain name

Add a name-resolution record

I don't have a DNS server, so I simulate the record by adding an entry to the hosts file on a Windows machine.

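Note: it is best not to point the record at the master node. I use the master here only because I know exactly what my master is running; otherwise it is easy to run into trouble.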

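The hosts file (C:\Windows\System32\drivers\etc\hosts) is permission-protected, so copy it to the desktop first, edit the copy, and then use it to overwrite the original.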
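Editing the desktop copy can also be scripted. The Python below is a minimal sketch under stated assumptions: the helper names are hypothetical, `nginx.example.com` is a placeholder for whatever host your Ingress rule actually uses, and `10.245.4.3` (k8s-node1) is picked instead of the master, following the advice above. It appends a `<ip> <hostname>` line only if that hostname is not already mapped, ignoring commented-out lines.

```python
import ipaddress
import pathlib

# Standard location of the hosts file on Windows
WINDOWS_HOSTS = r"C:\Windows\System32\drivers\etc\hosts"


def hosts_line(ip: str, *hostnames: str) -> str:
    """Format one hosts-file entry: '<ip>\\t<name> [<name> ...]'."""
    ipaddress.ip_address(ip)  # raises ValueError on a malformed address
    if not hostnames:
        raise ValueError("at least one hostname is required")
    return ip + "\t" + " ".join(hostnames)


def add_entry(hosts_text: str, ip: str, hostname: str) -> str:
    """Return hosts_text with an entry for hostname appended, unless one already exists."""
    for line in hosts_text.splitlines():
        fields = line.split("#", 1)[0].split()  # drop comments before parsing
        if len(fields) >= 2 and hostname in fields[1:]:
            return hosts_text  # already mapped: leave the file unchanged
    if hosts_text and not hosts_text.endswith("\n"):
        hosts_text += "\n"
    return hosts_text + hosts_line(ip, hostname) + "\n"


def update_hosts_copy(path: str, ip: str, hostname: str) -> None:
    """Edit a *copy* of the hosts file in place (assumes a UTF-8/ASCII file)."""
    p = pathlib.Path(path)
    p.write_text(add_entry(p.read_text(encoding="utf-8"), ip, hostname), encoding="utf-8")


print(hosts_line("10.245.4.3", "nginx.example.com"))  # placeholder host name
```

For example, call `update_hosts_copy` on the file pasted to the desktop, then copy it back over the original.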

Access the site with a browser
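The browser test works because the ingress controller chooses the backend from the HTTP Host header, not from the IP that was dialed. The same check can be done without touching the hosts file (the shell equivalent is `curl -H "Host: <domain>" http://<node-ip>/`). Below is a hedged Python sketch: the function names are hypothetical, `nginx.example.com` stands in for your Ingress host, and it assumes plain HTTP on port 80 as seen in the `ss` output. When no rule matches the Host header, ingress-nginx typically answers 404 from its default backend, so the HTTP error code is returned rather than raised.

```python
import urllib.error
import urllib.request


def build_ingress_request(node_ip: str, host: str, path: str = "/",
                          port: int = 80) -> urllib.request.Request:
    """Build a GET aimed at node_ip that carries the Host header the Ingress rule matches."""
    url = f"http://{node_ip}:{port}{path}"
    # urllib sends an explicit Host header instead of deriving one from the URL
    return urllib.request.Request(url, headers={"Host": host}, method="GET")


def fetch_status(node_ip: str, host: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code the ingress controller answers with."""
    req = build_ingress_request(node_ip, host)
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # e.g. 404 when no Ingress rule matches the Host header
```

For instance, `fetch_status("10.245.4.3", "nginx.example.com")` should return 200 once the rule and the nginx Service are in place, while a made-up host name should get a 404.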

Closing words

Hard work is tough and not always joyful, but keep at it, young one!
