Contents

Project Setup

Initializing the three.js Boilerplate

Setting the Environment Texture

Loading the Robot Model

Adding the Light Array

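Today I'm building a small three.js demo to reinforce what I've learned about three.js. It's only in a real project that you learn to apply that knowledge flexibly, so without further ado, let's get started.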

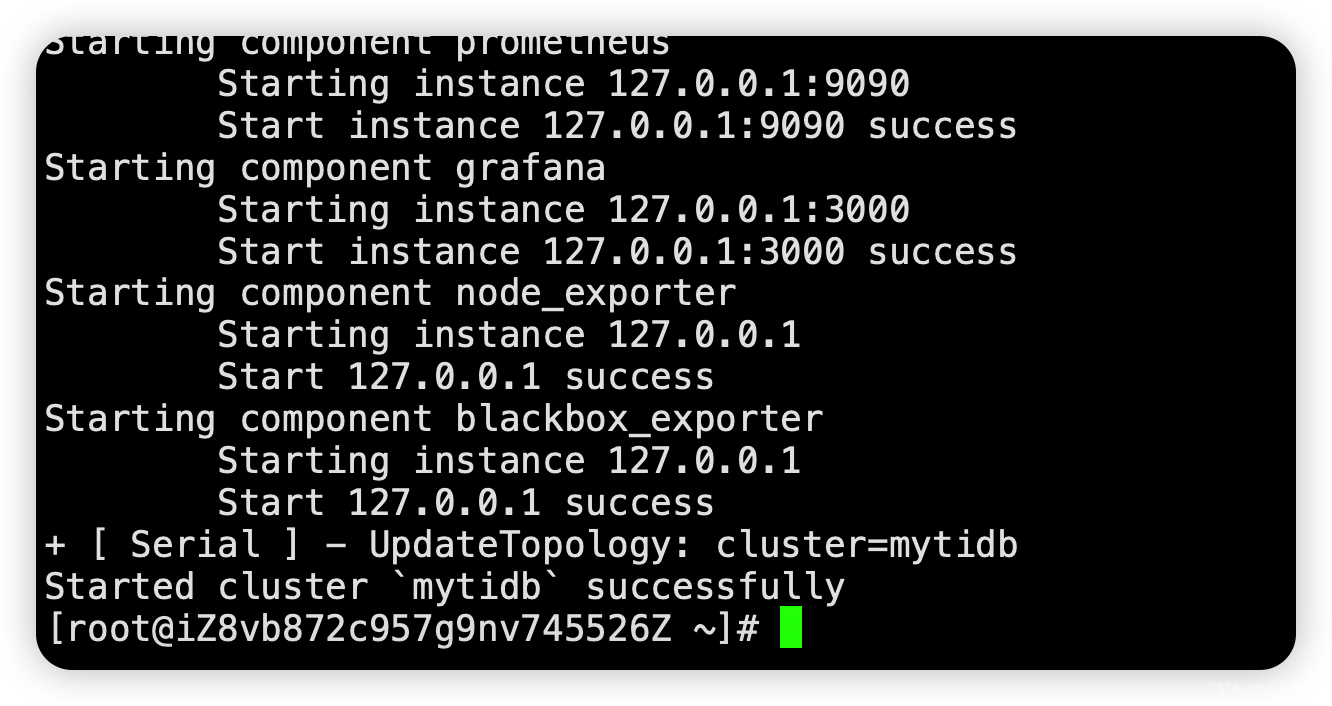

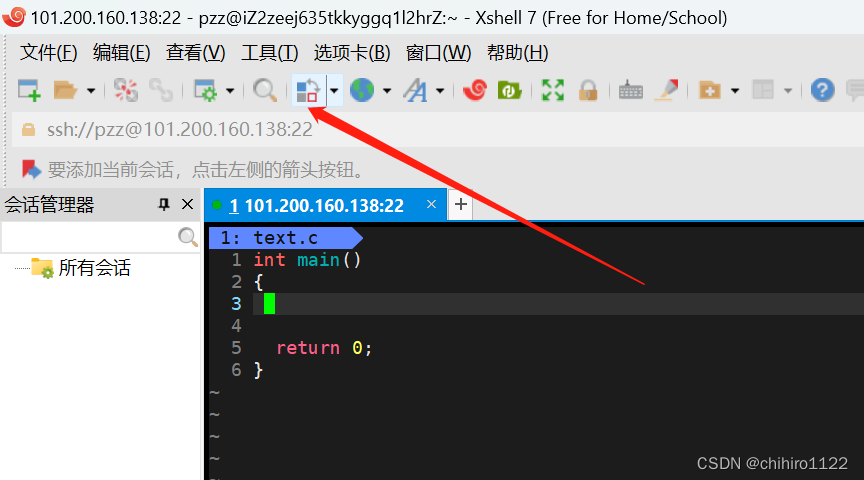

Project Setup

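This example again uses a framework for the three.js project: a Vue project scaffolded with the Vite build tool. If you're not familiar with Vite, see my earlier article on it, "Setting Up and Using Vite Scaffolding". Once the project is created, open it in your editor and run `npm i` in the terminal to install the dependencies. Then run `npm i three`.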

Because this is a Vue 3 project, I put the three.js code in its own component to keep it readable. The root App component then imports that component:

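```vue
<template>
  <!-- Spherical robot -->
  <SphericalRobot></SphericalRobot>
</template>

<script setup>
import SphericalRobot from './components/SphericalRobot.vue';
</script>

<style lang="less">
/* lang="less" needs the less preprocessor installed: npm i -D less */
* {
  margin: 0;
  padding: 0;
}
</style>
```

Initializing the three.js Boilerplate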

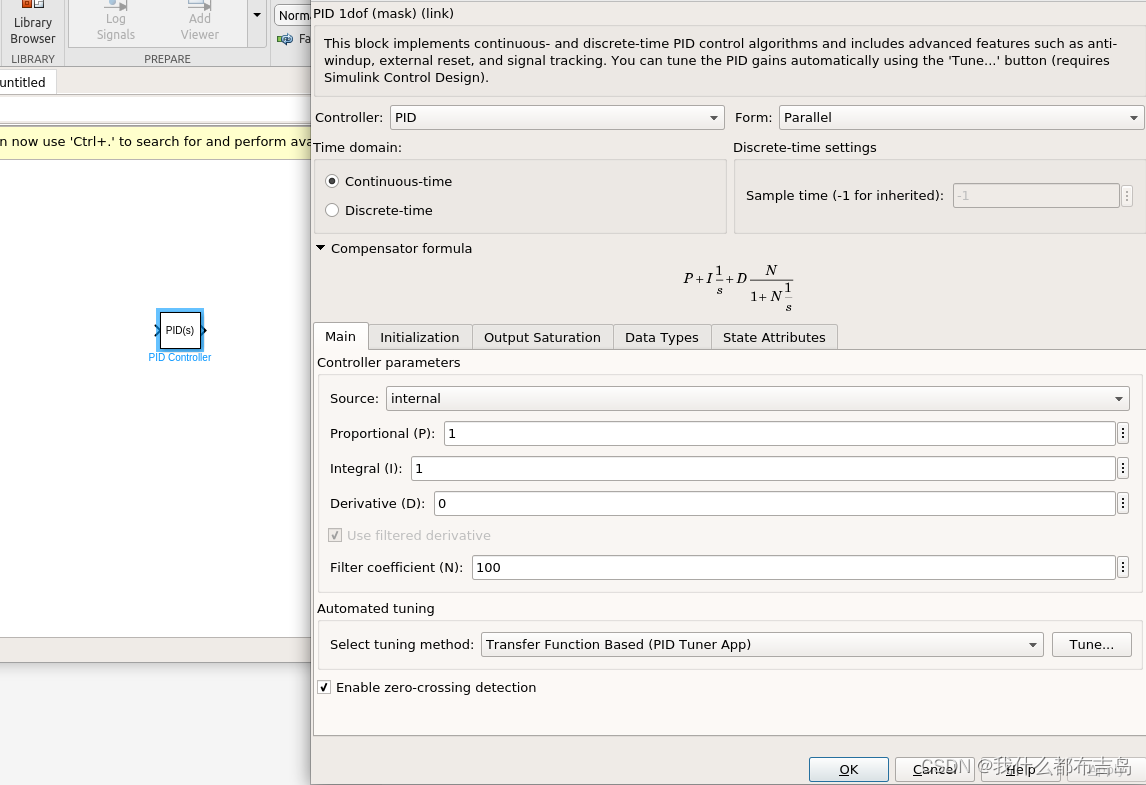

Every three.js scene needs the following basic code.

Import the three library:

```js
import * as THREE from 'three'
```

Initialize the scene:

```js
const scene = new THREE.Scene()
```

Initialize the camera:

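```js
// 75° vertical field of view, window aspect ratio, near and far clipping planes
let camera = new THREE.PerspectiveCamera(75, window.innerWidth / window.innerHeight, 0.1, 1000);
camera.position.set(0, 1.5, 6);
```

Initialize the renderer: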

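```js
let renderer = new THREE.WebGLRenderer({ antialias: true });
renderer.setSize(window.innerWidth, window.innerHeight);
// Render at the device's pixel density for sharper output on high-DPI screens
renderer.setPixelRatio(window.devicePixelRatio);
```

Listen for window resizes, then update the renderer size and the camera's aspect ratio: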

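```js
window.addEventListener("resize", () => {
  renderer.setSize(window.innerWidth, window.innerHeight);
  camera.aspect = window.innerWidth / window.innerHeight;
  // Required after changing aspect, otherwise the old projection keeps being used
  camera.updateProjectionMatrix();
});
```

Set up the render function: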

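```js
// Render loop: schedule the next frame, then draw the scene
function render() {
  requestAnimationFrame(render);
  renderer.render(scene, camera);
}
```

Mount the renderer once the component is in the DOM: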

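```js
import { ref, onMounted } from "vue";
import { OrbitControls } from "three/examples/jsm/controls/OrbitControls.js";

// Bound to <div ref="screenDom"> in the component's template
const screenDom = ref(null);

onMounted(() => {
  // Add orbit controls (drag to rotate, scroll to zoom)
  let controls = new OrbitControls(camera, renderer.domElement);
  // Damping (inertia) only animates if controls.update() is called every frame, e.g. inside render()
  controls.enableDamping = true;
  screenDom.value.appendChild(renderer.domElement);
  render();
});
```

Setting the Environment Texture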

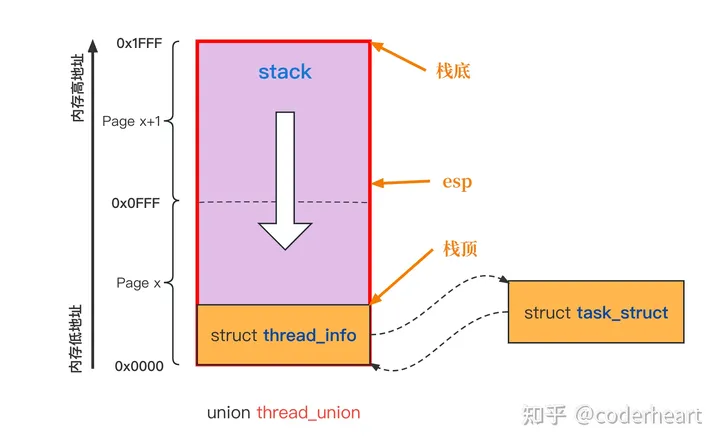

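Here RGBELoader loads an HDR (high dynamic range) image into three.js. A Radiance .hdr (RGBE) file stores light values beyond the 0 to 1 range of ordinary images, and the loader decodes it into a floating-point texture. Setting `texture.mapping` to `THREE.EquirectangularReflectionMapping` marks the image as an equirectangular (360° panorama) texture, and three.js converts it internally into cube-map-based forms for rendering. Assigning it to `scene.background` shows it as the sky. Assigning it to `scene.environment` lets PBR materials, such as the glTF robot's, use it for lighting and reflections, which makes the 3D scene and its objects look higher quality and more realistic.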

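```js
// RGBELoader parses HDR (Radiance .hdr / RGBE) texture data
import { RGBELoader } from 'three/examples/jsm/loaders/RGBELoader.js'

// Create the RGBE loader
let hdrLoader = new RGBELoader();
hdrLoader.load("src/assets/imgs/sky12.hdr", (texture) => {
  // Treat the image as an equirectangular (360° panorama) environment
  texture.mapping = THREE.EquirectangularReflectionMapping;
  // Show it as the visible sky...
  scene.background = texture;
  // ...and use it for image-based lighting and reflections on PBR materials
  scene.environment = texture;
});
```

The result looks like this: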

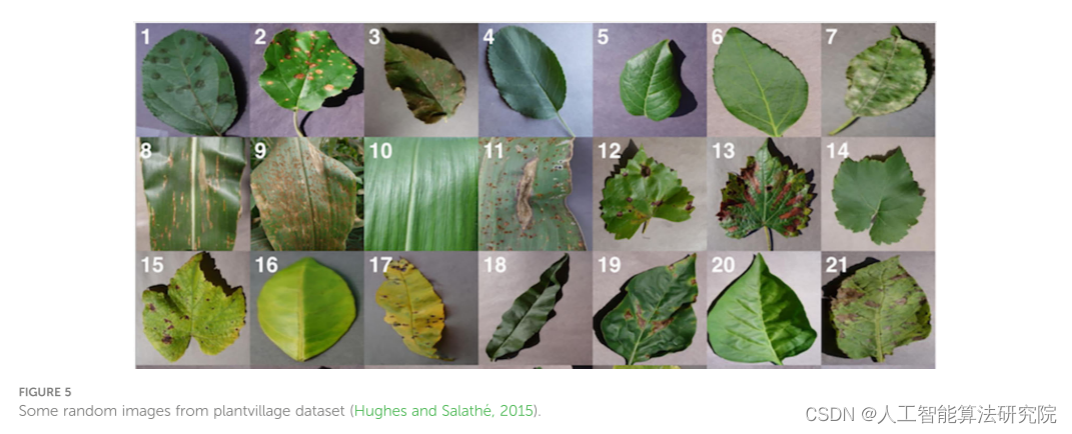

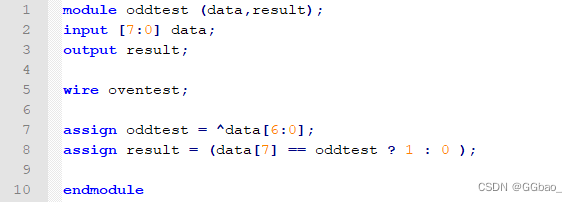
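To make the two ideas in this step concrete, here is a small sketch in plain JavaScript (no three.js needed). The first helper decodes one RGBE pixel into floating-point RGB. The second maps a view direction to a position on an equirectangular panorama.

The RGBE scaling used here (byte × 2^(E−128) / 255, with E = 0 meaning black) is one common convention. The longitude/latitude formula is the standard equirectangular mapping. Treat both as illustrations of the idea rather than three.js's exact internal code, and treat the axis conventions as an assumption.

```javascript
// Sketch only: plain JavaScript helpers, no three.js required.

// Decode one RGBE pixel (R, G, B mantissa bytes plus a shared exponent byte E)
// into linear floating-point RGB: value = byte * 2^(E - 128) / 255.
// An exponent of 0 encodes black.
function rgbeToFloat(r, g, b, e) {
  if (e === 0) return [0, 0, 0];
  const scale = Math.pow(2, e - 128) / 255;
  return [r * scale, g * scale, b * scale];
}

// Map a unit direction (x, y, z) to (u, v) on an equirectangular panorama:
// u follows longitude (angle around the Y axis),
// v follows latitude (angle above or below the horizon).
function equirectUv(x, y, z) {
  const u = Math.atan2(z, x) / (2 * Math.PI) + 0.5;
  const v = Math.asin(Math.min(1, Math.max(-1, y))) / Math.PI + 0.5;
  return [u, v];
}

// A bright pixel: exponent 129 doubles the range, so red goes above 1.0,
// which an 8-bit PNG or JPEG cannot store. Roughly [2, 0.4, 0].
console.log(rgbeToFloat(255, 51, 0, 129));

// Looking straight up samples the top row of the panorama,
// and a horizontal direction samples the middle row.
console.log(equirectUv(0, 1, 0)); // [ 0.5, 1 ]
console.log(equirectUv(1, 0, 0)); // [ 0.5, 0.5 ]
```

The value above 1.0 in the first result is what makes HDR environments useful for lighting. A bright sun or sky region can contribute far more light than a white pixel in an ordinary image.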

Loading the Robot Model

After working through the previous few three.js demos, you should be comfortable loading models by now. It really comes down to four steps.

Step 1: import the loaders for glTF models and for Draco-compressed models:

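```js
// Loads glTF models
import { GLTFLoader } from 'three/examples/jsm/loaders/GLTFLoader.js';
// Decodes Draco-compressed glTF geometry
import { DRACOLoader } from 'three/examples/jsm/loaders/DRACOLoader.js';
```

Step 2: initialize the loaders. `setDecoderPath` points to a folder of Draco decoder files that the dev server must be able to serve. In a Vite project these files are typically copied from `node_modules/three/examples/jsm/libs/draco/gltf/` into `public/draco/gltf/`.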

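```js
// Loader that decompresses Draco geometry
let dracoLoader = new DRACOLoader();
dracoLoader.setDecoderPath("./draco/gltf/");
// Use the JavaScript decoder instead of the default WebAssembly one
dracoLoader.setDecoderConfig({ type: "js" });
let gltfLoader = new GLTFLoader();
// Hand Draco-compressed meshes in the glTF file to the Draco loader
gltfLoader.setDRACOLoader(dracoLoader);
```

Step 3: load the glTF model: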

```js
// Add the robot model
gltfLoader.load("src/assets/model/robot.glb", (gltf) => {
  scene.add(gltf.scene);
});
```

Step 4: add lights to suit the scene:

```js
// Add directional lights
let light1 = new THREE.DirectionalLight(0xffffff, 0.3);
light1.position.set(0, 10, 10);
let light2 = new THREE.DirectionalLight(0xffffff, 0.3);
light2.position.set(0, 10, -10);
let light3 = new THREE.DirectionalLight(0xffffff, 0.8);
light3.position.set(10, 10, 10);
scene.add(light1, light2, light3);
```

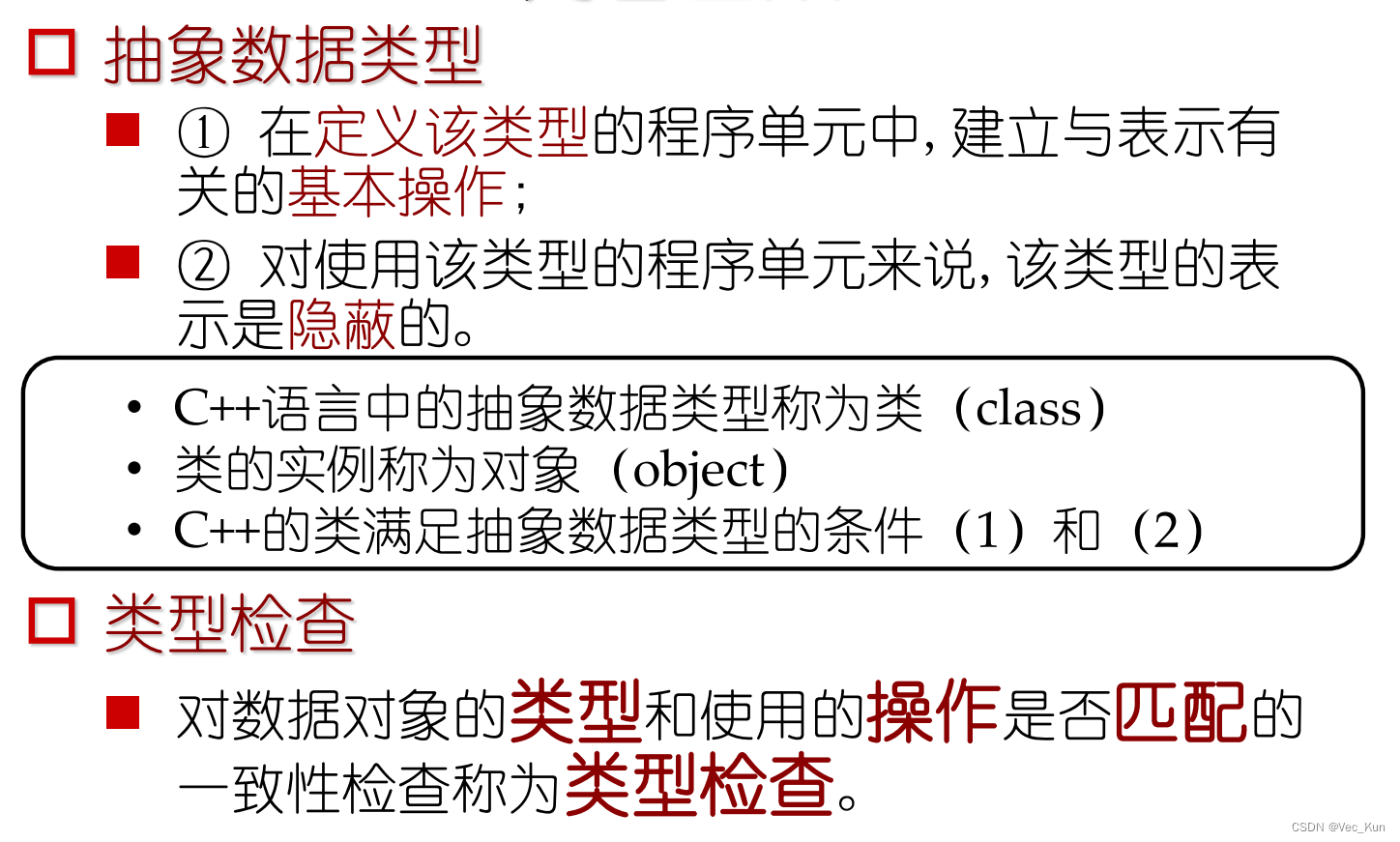
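The three directional lights have different strengths and directions, and their contributions add up on the model. As a rough sketch of how, this code computes a relative diffuse brightness for a given surface normal. It assumes a plain Lambert model, where each light contributes intensity × max(0, N·L), and it ignores light color, material color, and three.js's own BRDF constants. The numbers are therefore relative and are not what three.js outputs.

It uses the light positions (0, 10, 10), (0, 10, −10) and (10, 10, 10). A DirectionalLight shines from its position toward its target, which is the origin by default.

```javascript
// Sketch only: simplified Lambert diffuse lighting from several directional lights.

function normalize([x, y, z]) {
  const len = Math.hypot(x, y, z);
  return [x / len, y / len, z / len];
}

function dot(a, b) {
  return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Sum intensity * max(0, N·L) over all lights. L points from the light's
// target (default: origin) toward its position, i.e. toward the light.
function lambertDiffuse(normal, lights) {
  const n = normalize(normal);
  let total = 0;
  for (const { position, intensity, target = [0, 0, 0] } of lights) {
    const l = normalize([
      position[0] - target[0],
      position[1] - target[1],
      position[2] - target[2],
    ]);
    total += intensity * Math.max(0, dot(n, l));
  }
  return total;
}

// The three lights from the demo
const lights = [
  { position: [0, 10, 10], intensity: 0.3 },
  { position: [0, 10, -10], intensity: 0.3 },
  { position: [10, 10, 10], intensity: 0.8 },
];

console.log(lambertDiffuse([0, 1, 0], lights).toFixed(3)); // 0.886 (top of the robot)
console.log(lambertDiffuse([0, 0, -1], lights).toFixed(3)); // 0.212 (back faces, lit only by the second light)
```

Surfaces facing away from every light receive nothing from this diffuse term. In the demo, the HDR environment set earlier fills in those areas through `scene.environment`.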

Adding the Light Array

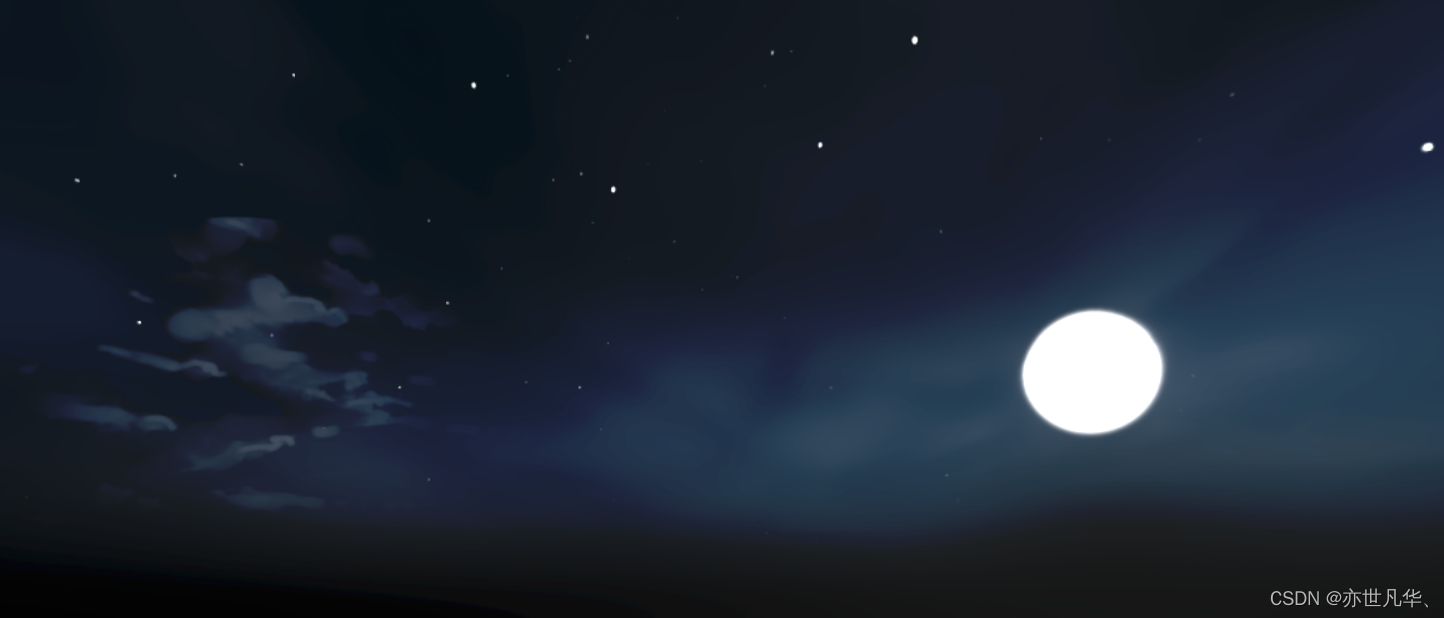

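The light array uses a video texture. `THREE.VideoTexture` wraps an HTML `<video>` element so that its frames can be applied as a texture to a 3D mesh. This is how virtual TVs, screens, and other surfaces that show moving images are usually built, and it works for pre-recorded clips as well as live or streamed video.

The texture picks up new frames automatically while the video is playing. Looping is not automatic: it comes from setting `video.loop = true`. Setting `video.muted = true` lets the browser start playback without a user gesture. Here the same video also serves as the material's `alphaMap`, so dark areas of the video become transparent and only the glowing pattern remains visible.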

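```js
// Add the light array: a video element used as a texture
let video = document.createElement("video");
video.src = "src/assets/zp2.mp4";
video.loop = true;
// Browsers allow muted videos to autoplay
video.muted = true;
// play() returns a Promise that rejects if the browser blocks playback
video.play().catch((err) => console.warn("Video playback was blocked:", err));

let videoTexture = new THREE.VideoTexture(video);
// An 8 x 4.5 (16:9) plane
const videoGeoPlane = new THREE.PlaneGeometry(8, 4.5);
const videoMaterial = new THREE.MeshBasicMaterial({
  map: videoTexture,
  transparent: true,
  side: THREE.DoubleSide,
  alphaMap: videoTexture, // the alpha map controls surface opacity: dark video pixels become transparent
});
const videoMesh = new THREE.Mesh(videoGeoPlane, videoMaterial);
// Lift the plane slightly above the floor and lay it flat
videoMesh.position.set(0, 0.2, 0);
videoMesh.rotation.set(-Math.PI / 2, 0, 0);
scene.add(videoMesh);
```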
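Using the video as its own `alphaMap` is what makes only the bright pattern visible. Below is a sketch of the per-pixel math. It assumes material opacity 1 and three.js's default NormalBlending (out = src × a + dst × (1 − a)), with three.js sampling the green channel of an RGB alpha map. The pixel values are invented for illustration, and the "floor" value merely stands in for whatever is already drawn behind the plane.

```javascript
// Sketch only: compositing a pixel whose color map and alpha map are the same video frame.
// Colors are 0..1 floats; values below are illustrative, not taken from zp2.mp4.

// three.js reads an RGB(A) alpha map's green channel and multiplies it into opacity.
function alphaFromAlphaMap(alphaMapRgb, opacity = 1) {
  return opacity * alphaMapRgb[1];
}

// Standard "over" blending of a source color onto what is already drawn.
function blendNormal(src, dst, a) {
  return src.map((s, i) => s * a + dst[i] * (1 - a));
}

// Stand-in for what is already drawn beneath the video plane
// (a dark reddish value near the reflector's 0x332222 tint)
const floor = [0.2, 0.13, 0.13];

const glowPixel = [0.1, 0.9, 1.0]; // a bright cyan stripe of the light array
const darkPixel = [0.0, 0.0, 0.0]; // the video's black background

// Bright pixel: alpha 0.9, so the glow mostly covers the floor.
console.log(blendNormal(glowPixel, floor, alphaFromAlphaMap(glowPixel)));
// Black pixel: alpha 0, so the floor shows through unchanged.
console.log(blendNormal(darkPixel, floor, alphaFromAlphaMap(darkPixel))); // [ 0.2, 0.13, 0.13 ]
```

The trick works because the video has a black background. A video with a bright background would need a separate mask video or a different blending mode.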

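Next, add a mirror reflection. `Reflector`, from three.js's examples (`three/examples/jsm/objects/Reflector`), renders the scene from a camera mirrored across the mesh's plane into a texture and displays that texture on the mesh. This simulates mirror-like surfaces such as water or a polished floor and adds to the scene's realism. Because the mirroring happens about a single plane, `Reflector` is meant for flat geometry such as a `PlaneGeometry`. The examples folder also provides related classes, such as `Refractor` for refraction effects.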

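```js
import { Reflector } from 'three/examples/jsm/objects/Reflector.js';

// Add a mirror floor
let reflectorGeometry = new THREE.PlaneGeometry(100, 100);
let reflectorPlane = new Reflector(reflectorGeometry, {
  // Resolution of the texture the reflection is rendered into
  textureWidth: window.innerWidth,
  textureHeight: window.innerHeight,
  // Tint applied to the reflection
  color: 0x332222,
});
// Lay the plane flat so it becomes the floor (y = 0)
reflectorPlane.rotation.x = -Math.PI / 2;
scene.add(reflectorPlane);
```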
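A planar reflector works by rendering from a camera mirrored across the reflecting plane. This plain-JavaScript sketch shows only that mirroring step, using the demo's floor plane and camera position. The real `Reflector` also adjusts the projection with an oblique clip plane and renders into a texture, and neither is reproduced here. The helper names are illustrative.

```javascript
// Sketch only: mirroring a point across a plane given by a point on it and a unit normal.

// p' = p - 2 * d * n, where d is the signed distance from p to the plane.
function reflectPoint(p, planePoint, normal) {
  const d =
    (p[0] - planePoint[0]) * normal[0] +
    (p[1] - planePoint[1]) * normal[1] +
    (p[2] - planePoint[2]) * normal[2];
  return p.map((value, i) => value - 2 * d * normal[i]);
}

// Rotate a vector about the X axis (right-handed, angle in radians),
// the same kind of rotation as reflectorPlane.rotation.x.
function rotateX([x, y, z], angle) {
  const c = Math.cos(angle);
  const s = Math.sin(angle);
  return [x, y * c - z * s, y * s + z * c];
}

// PlaneGeometry faces +Z; rotating by -PI/2 about X turns that normal to +Y
// (up to floating-point noise), so the reflector is the floor plane y = 0.
console.log(rotateX([0, 0, 1], -Math.PI / 2));

const groundPoint = [0, 0, 0];
const groundNormal = [0, 1, 0];

// The demo's camera at (0, 1.5, 6) has its mirror image below the floor.
const camera = [0, 1.5, 6];
console.log(reflectPoint(camera, groundPoint, groundNormal)); // [ 0, -1.5, 6 ]
```

Rendering the scene from that mirrored viewpoint and projecting the result onto the floor is what makes the robot and the light array appear reflected.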

That completes the demo. Here is the full code for this example (feel free to message me for the assets):

```vue
<template>
  <div class="canvas-container" ref="screenDom"></div>
</template>

<script setup>
import * as THREE from "three";
import { ref, onMounted, onUnmounted } from "vue";
import { OrbitControls } from "three/examples/jsm/controls/OrbitControls.js";
import { RGBELoader } from "three/examples/jsm/loaders/RGBELoader.js";
import { GLTFLoader } from "three/examples/jsm/loaders/GLTFLoader.js";
import { DRACOLoader } from "three/examples/jsm/loaders/DRACOLoader.js";
import { Reflector } from "three/examples/jsm/objects/Reflector.js";

let screenDom = ref(null);

// Create the scene
let scene = new THREE.Scene();

// Create the camera
let camera = new THREE.PerspectiveCamera(75, window.innerWidth / window.innerHeight, 0.1, 1000);
camera.position.set(0, 1.5, 6);

// Create the renderer
let renderer = new THREE.WebGLRenderer({ antialias: true });
renderer.setSize(window.innerWidth, window.innerHeight);
renderer.setPixelRatio(window.devicePixelRatio); // sharper output on high-DPI screens

// Keep the camera and renderer in sync with the window size
function onResize() {
  camera.aspect = window.innerWidth / window.innerHeight; // update the camera's aspect ratio
  camera.updateProjectionMatrix(); // rebuild the camera's projection matrix
  renderer.setSize(window.innerWidth, window.innerHeight); // resize the renderer
  renderer.setPixelRatio(window.devicePixelRatio); // the pixel ratio can change, e.g. between monitors
}
window.addEventListener("resize", onResize);

let controls = null;
let frameId = 0;

// Render loop
function render() {
  frameId = requestAnimationFrame(render);
  // enableDamping requires controls.update() on every frame
  if (controls) controls.update();
  renderer.render(scene, camera);
}

onMounted(() => {
  // Add orbit controls
  controls = new OrbitControls(camera, renderer.domElement);
  controls.enableDamping = true;
  screenDom.value.appendChild(renderer.domElement);
  render();
});

// Stop the loop and release resources when the component is removed
onUnmounted(() => {
  cancelAnimationFrame(frameId);
  window.removeEventListener("resize", onResize);
  video.pause();
  controls.dispose();
  renderer.dispose();
});

// Create the RGBE loader for the HDR environment
let hdrLoader = new RGBELoader();
hdrLoader.load("src/assets/imgs/sky12.hdr", (texture) => {
  texture.mapping = THREE.EquirectangularReflectionMapping;
  scene.background = texture;
  scene.environment = texture;
});

// Loader that decompresses Draco geometry
let dracoLoader = new DRACOLoader();
dracoLoader.setDecoderPath("./draco/gltf/");
dracoLoader.setDecoderConfig({ type: "js" });
let gltfLoader = new GLTFLoader();
gltfLoader.setDRACOLoader(dracoLoader);

// Add the robot model
gltfLoader.load("src/assets/model/robot.glb", (gltf) => {
  scene.add(gltf.scene);
});

// Add directional lights
let light1 = new THREE.DirectionalLight(0xffffff, 0.3);
light1.position.set(0, 10, 10);
let light2 = new THREE.DirectionalLight(0xffffff, 0.3);
light2.position.set(0, 10, -10);
let light3 = new THREE.DirectionalLight(0xffffff, 0.8);
light3.position.set(10, 10, 10);
scene.add(light1, light2, light3);

// Add the light array (video texture)
let video = document.createElement("video");
video.src = "src/assets/zp2.mp4";
video.loop = true;
video.muted = true;
video.play().catch((err) => console.warn("Video playback was blocked:", err));
let videoTexture = new THREE.VideoTexture(video);
const videoGeoPlane = new THREE.PlaneGeometry(8, 4.5);
const videoMaterial = new THREE.MeshBasicMaterial({
  map: videoTexture,
  transparent: true,
  side: THREE.DoubleSide,
  alphaMap: videoTexture, // dark video pixels become transparent
});
const videoMesh = new THREE.Mesh(videoGeoPlane, videoMaterial);
videoMesh.position.set(0, 0.2, 0);
videoMesh.rotation.set(-Math.PI / 2, 0, 0);
scene.add(videoMesh);

// Add the mirror floor
let reflectorGeometry = new THREE.PlaneGeometry(100, 100);
let reflectorPlane = new Reflector(reflectorGeometry, {
  textureWidth: window.innerWidth,
  textureHeight: window.innerHeight,
  color: 0x332222,
});
reflectorPlane.rotation.x = -Math.PI / 2;
scene.add(reflectorPlane);
</script>

<style lang="less" scoped></style>
```
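The resize handler sets `camera.aspect` and then calls `camera.updateProjectionMatrix()`. A sketch of the standard symmetric perspective matrix shows why the second call matters: the aspect ratio is baked into the matrix, so changing the property alone has no visible effect until the matrix is rebuilt. The sketch assumes zoom = 1 and no view offset, which matches the demo, and uses row-major, OpenGL-style clip-space conventions. It is an illustration, not three.js's source.

```javascript
// Sketch only: symmetric perspective projection matrix (row-major 4x4).
function perspective(fovDeg, aspect, near, far) {
  // Half-height of the near plane, from the vertical field of view
  const top = near * Math.tan((fovDeg * Math.PI) / 360);
  // Half-width follows from the aspect ratio
  const right = top * aspect;
  return [
    [near / right, 0, 0, 0],
    [0, near / top, 0, 0],
    [0, 0, -(far + near) / (far - near), (-2 * far * near) / (far - near)],
    [0, 0, -1, 0],
  ];
}

// The demo's camera settings (fov 75, near 0.1, far 1000) in a 16:9 window,
// and again after the window is resized to a square.
const wide = perspective(75, 1600 / 900, 0.1, 1000);
const square = perspective(75, 900 / 900, 0.1, 1000);

// Only the horizontal scale [0][0] depends on the aspect ratio; the vertical
// scale [1][1] is identical. Skipping updateProjectionMatrix() after changing
// camera.aspect would keep the old [0][0] and distort the image.
console.log(wide[0][0].toFixed(3), square[0][0].toFixed(3));
console.log(wide[1][1] === square[1][1]); // true
```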