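2.2.2 Deploying the Master Node

1. Install docker, kubeadm, kubelet, and kubectl

With the operating system on each virtual machine initialized, the next step is to install docker, kubeadm, kubelet, and kubectl on every node.

In the Kubernetes version used here, Docker is the default container runtime, so install Docker first.

(1) Install Docker

- Download the Docker yum repository file over the network

- Install a pinned version; if no version is given, yum installs the latest one, which may not be compatible with this Kubernetes release

- Enable Docker to start at boot

- Check the Docker version

- Switch the Docker registry to the Alibaba Cloud mirror, from which the required images can be pulled

Docker must be installed on all three nodes.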

# Download the Docker yum repository file

$ wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

# Install a pinned version of Docker

$ yum -y install docker-ce-18.06.1.ce-3.el7

# Enable Docker at boot and start the service

$ systemctl enable docker && systemctl start docker

# Check that Docker was installed successfully

$ docker --version

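# Switch to the Alibaba Cloud registry mirror: many resources, such as those hosted on the official Kubernetes sites, are hard to download from inside China because they are served from abroad, while the Alibaba Cloud mirror carries most of them

$ cat > /etc/docker/daemon.json <<EOF
{
  "registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]
}
EOF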

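# Docker must be restarted after the registry configuration changes

$ systemctl restart docker

# Check that the registry mirror took effect (look for it under "Registry Mirrors")

$ docker info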
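The mirror setup above can be wrapped in a small helper. This is only a minimal sketch, not part of the original steps: the function names `write_daemon_json` and `mirror_in_info` are hypothetical, and the target path is a parameter so the helper can be tried on a scratch file before it touches /etc/docker/daemon.json. It overwrites the file instead of appending to it, so running it more than once still leaves valid JSON.

```shell
#!/bin/sh
# Hypothetical helper: write a daemon.json that contains a single registry mirror.
# $1 = target file, $2 = mirror URL
write_daemon_json() {
    # Overwrite (>) so that repeated runs never leave two JSON objects in the file.
    cat > "$1" <<EOF
{
  "registry-mirrors": ["$2"]
}
EOF
}

# Hypothetical helper: read `docker info` text on stdin and succeed
# if the mirror URL given as $1 appears anywhere in it.
mirror_in_info() {
    grep -qF "$1"
}

# Example (run as root on each node):
#   write_daemon_json /etc/docker/daemon.json "https://b9pmyelo.mirror.aliyuncs.com"
#   systemctl restart docker
#   docker info | mirror_in_info "https://b9pmyelo.mirror.aliyuncs.com" && echo "mirror active"
```

Because this replaces the whole file, it is only appropriate when daemon.json holds no other settings that need to be kept.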

(2) Add the Alibaba Cloud YUM repository

Downloading the Kubernetes packages from the official repository often fails from inside China, so point yum at the Alibaba Cloud mirror to make the later downloads reliable.

$ cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

That completes the preparation.

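(3) Install kubeadm, kubelet, and kubectl

Kubernetes releases frequently, so pin the version here; without a version, yum installs the latest one. Run this on every node.

$ yum -y install kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0

# Enable kubelet to start at boot

$ systemctl enable kubelet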
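Since the three packages are pinned to the same version, it can be worth confirming that each node really ended up on it. The sketch below is an illustration only: `extract_k8s_version` and `all_match_version` are hypothetical helper names, and the example assumes that `kubelet --version` and `kubeadm version -o short` print a string containing `v1.18.0`, as they do for this release.

```shell
#!/bin/sh
# Hypothetical helper: print the first vMAJOR.MINOR.PATCH string found in $1.
extract_k8s_version() {
    printf '%s\n' "$1" | grep -oE 'v[0-9]+\.[0-9]+\.[0-9]+' | head -n 1
}

# Hypothetical helper: $1 is the expected version; succeed only if every
# remaining argument contains exactly that version.
all_match_version() {
    want=$1
    shift
    for line in "$@"; do
        [ "$(extract_k8s_version "$line")" = "$want" ] || return 1
    done
}

# Example (run on each node):
#   all_match_version v1.18.0 "$(kubelet --version)" "$(kubeadm version -o short)" \
#       && echo "kubelet and kubeadm are both v1.18.0"
```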

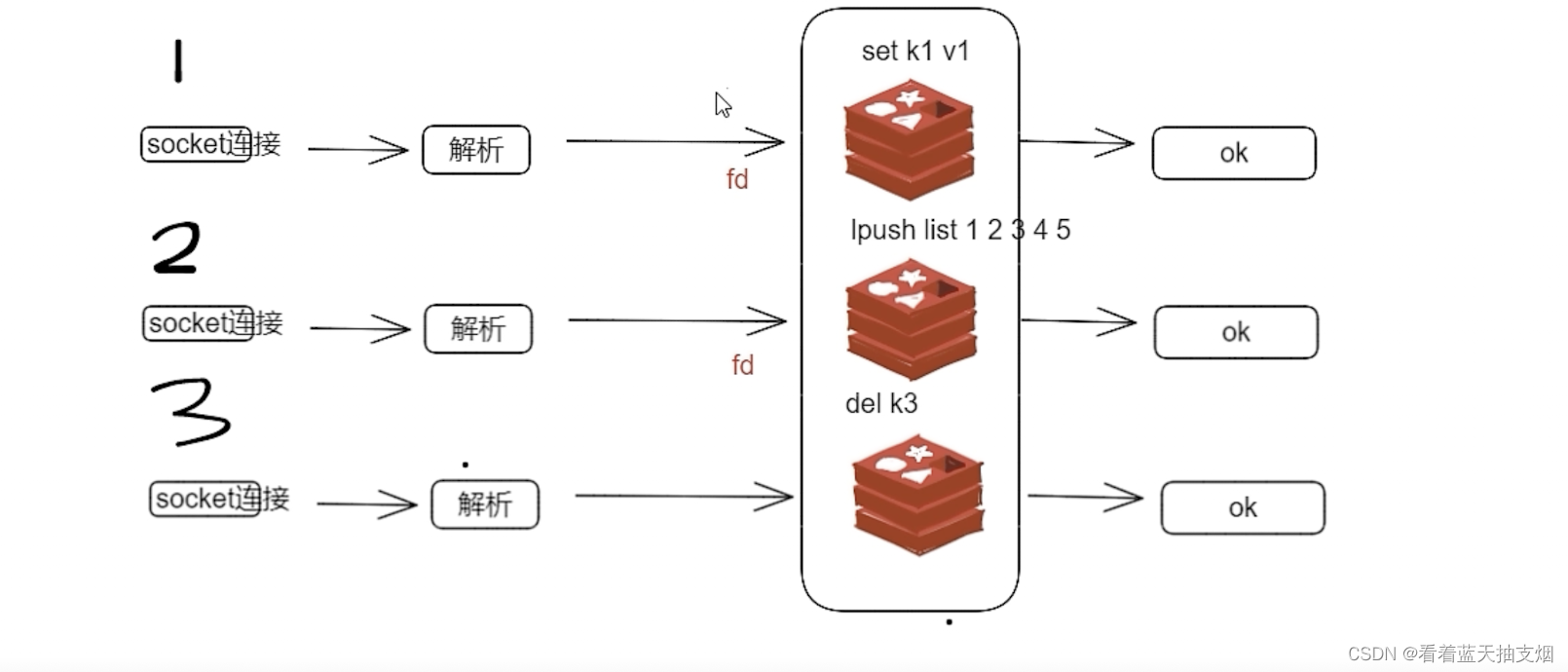

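2. Deploy the Kubernetes Master

Run the initialization on the Master node. The default image registry, k8s.gcr.io, is not reachable from inside China, so point kubeadm at the Alibaba Cloud image repository instead.

# --apiserver-advertise-address: IP address of the Master node, on which the API server is advertised
# --image-repository: pull the control-plane images from the Alibaba Cloud mirror
# --kubernetes-version: version to install
# --service-cidr: IP range for Services; any range works as long as it does not conflict with the node network
# --pod-network-cidr: IP range for Pods; any range works as long as it does not conflict with the node network (10.244.0.0/16 is also the default in the flannel manifest used later)

$ kubeadm init \
  --apiserver-advertise-address=192.168.184.142 \
  --image-repository=registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.18.0 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=10.244.0.0/16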

## The full command on a single line

kubeadm init --apiserver-advertise-address=192.168.184.142 --image-repository=registry.aliyuncs.com/google_containers --kubernetes-version v1.18.0 --service-cidr=10.96.0.0/12 --pod-network-cidr=10.244.0.0/16
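The Service and Pod ranges only need to stay clear of the node network, and that can be checked before running kubeadm init. What follows is a minimal sketch in plain shell arithmetic; `ip_to_int` and `ip_in_cidr` are hypothetical helper names rather than kubeadm features, and the addresses are the ones used in this section.

```shell
#!/bin/sh
# Hypothetical helper: convert a dotted-quad IPv4 address to a 32-bit integer.
# The body runs in a subshell so the IFS change does not leak to the caller.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Hypothetical helper: succeed if address $1 lies inside CIDR block $2
# (for example 10.96.0.0/12).
ip_in_cidr() {
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    bits=${2#*/}
    # Build the netmask: the top $bits bits set, the rest cleared.
    mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
    [ $(( addr & mask )) -eq $(( net & mask )) ]
}

# Example: the advertise address should fall inside neither cluster range.
#   for cidr in 10.96.0.0/12 10.244.0.0/16; do
#       ip_in_cidr 192.168.184.142 "$cidr" && echo "conflict with $cidr"
#   done
```

The check covers a single address against a range; it does not detect two ranges overlapping each other.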

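When initialization finishes, the output includes

Your Kubernetes control-plane has initialized successfully!

which means Kubernetes was initialized successfully. The output also lists the next steps; follow them to set up kubectl: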

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Check that it worked by listing the current nodes

kubectl get nodes

3. Join the Kubernetes Nodes

On each Node, run the kubeadm join command that kubeadm init printed on success; this adds the node to the cluster.

kubeadm join 192.168.184.142:6443 --token nbn09x.6sh2lay2pfs3ebqc \
  --discovery-token-ca-cert-hash sha256:be4b7aba2a55de50ffb0af8e02fc22673a9f783a410d18ab15b34a3aff0f8978

# On the Master node, check that the Nodes joined successfully

kubectl get nodes

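The listing now shows every node in the cluster: the Master and the two worker Nodes. Their status, however, is NotReady, because no network plugin has been installed yet. The nodes stay NotReady until one is deployed (step 4).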
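To pick out the nodes that are not ready without reading the table by eye, the output of kubectl get nodes can be filtered. This is a small sketch: `not_ready_nodes` is a hypothetical helper name, and it assumes the usual column layout with NAME first and STATUS second.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get nodes` output on stdin and print the
# name of every node whose STATUS column is not exactly "Ready".
# The first line is the column header and is skipped.
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example (run on the Master):
#   kubectl get nodes | not_ready_nodes
```

A combined status such as Ready,SchedulingDisabled is also reported by this filter, since it is not exactly Ready.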

By default a token is valid for 24 hours. Once it expires it can no longer be used to join nodes, and a new one has to be created:

kubeadm token create --print-join-command
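When the join command is copied between terminals, a truncated token or hash is an easy mistake to make. The sketch below checks the shape of both values before they are used; `is_bootstrap_token` and `is_ca_cert_hash` are hypothetical helper names, and the patterns follow the format of the values shown above (6 characters, a dot, 16 characters for the token; `sha256:` plus 64 hex digits for the hash). It checks only the format, not whether the token is still valid.

```shell
#!/bin/sh
# Hypothetical helper: succeed if $1 has the shape of a bootstrap token,
# i.e. 6 characters, a dot, then 16 characters, all from [a-z0-9].
is_bootstrap_token() {
    printf '%s\n' "$1" | grep -qE '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Hypothetical helper: succeed if $1 has the shape expected by
# --discovery-token-ca-cert-hash, i.e. "sha256:" followed by 64 hex digits.
is_ca_cert_hash() {
    printf '%s\n' "$1" | grep -qE '^sha256:[0-9a-f]{64}$'
}

# Example:
#   is_bootstrap_token "nbn09x.6sh2lay2pfs3ebqc" || echo "token looks malformed"
```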

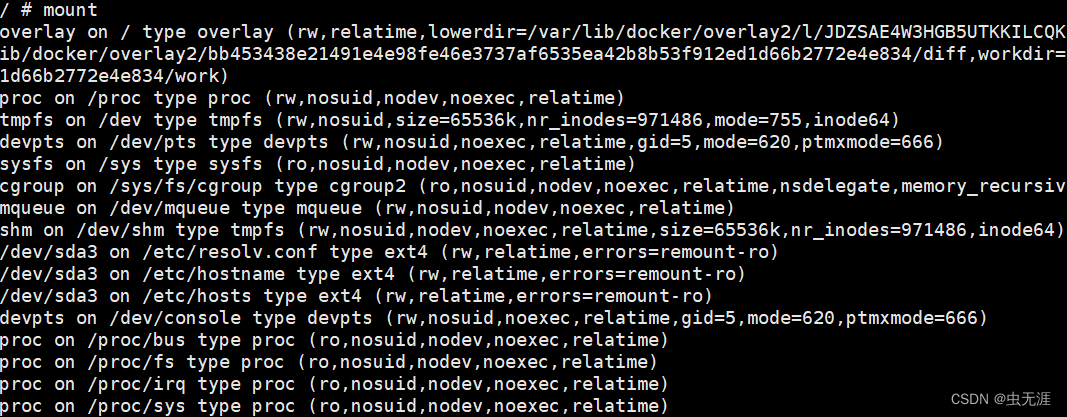

A pitfall hit while deploying Kubernetes: kubeadm init failed with the following error

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get http://localhost:10248/healthz: dial tcp [::1]:10248: connect: connection refused.

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get http://localhost:10248/healthz: dial tcp [::1]:10248: connect: connection refused.

Unfortunately, an error has occurred:

timed out waiting for the condition

This error is likely caused by:

- The kubelet is not running

- The kubelet is unhealthy due to a misconfiguration of the node in some way (required cgroups disabled)

If you are on a systemd-powered system, you can try to troubleshoot the error with the following commands:

- 'systemctl status kubelet'

- 'journalctl -xeu kubelet'

Additionally, a control plane component may have crashed or exited when started by the container runtime.

To troubleshoot, list all containers using your preferred container runtimes CLI.

Here is one example how you may list all Kubernetes containers running in docker:

- 'docker ps -a | grep kube | grep -v pause'

Once you have found the failing container, you can inspect its logs with:

- 'docker logs CONTAINERID'

error execution phase wait-control-plane: couldn't initialize a Kubernetes cluster

To see the stack trace of this error execute with --v=5 or higher

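After a lot of searching and trial and error, the clue turned out to be in the warnings printed at the start of initialization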

W0524 17:53:18.154656 8520 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[init] Using Kubernetes version: v1.18.0

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING Hostname]: hostname "k8smaster" could not be reached

[WARNING Hostname]: hostname "k8smaster": lookup k8smaster on 192.168.184.2:53: no such host

The cause was a typo in the hosts file: the hostname had been written as k8smastr. After correcting it to k8smaster, the installation succeeded.
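A typo like this can be caught before running kubeadm init by checking that the hosts file really contains the machine's hostname. This is a minimal sketch, with `hosts_has_name` as a hypothetical helper name; the file path is a parameter so the check can be tried on a copy first.

```shell
#!/bin/sh
# Hypothetical helper: succeed if hosts-style file $1 maps some address to
# the name $2. Comments are stripped before the names are compared.
hosts_has_name() {
    awk -v name="$2" '
        { sub(/#.*/, "") }                                        # drop comments
        { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }   # field 1 is the address
        END { exit found ? 0 : 1 }
    ' "$1"
}

# Example (run on each node):
#   hosts_has_name /etc/hosts "$(hostname)" || echo "hostname missing from /etc/hosts"
```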

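4. Deploy the CNI network plugin

At this point the required components are installed on the Master, Node1, and Node2, but every node still reports NotReady. Pod networking does not work until a CNI network plugin is deployed, so install one before doing anything else with the cluster.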

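$ wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

The image addresses in the manifest point to registries abroad and may not be reachable; if so, use sed to change them to a registry you can reach, such as Docker Hub.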
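The sed edit mentioned above could look like the sketch below. Everything specific here is an assumption for illustration: `rewrite_image_prefix` is a hypothetical helper name, and which prefix to replace, and with what, depends on the manifest version you downloaded and on the registries reachable from your network.

```shell
#!/bin/sh
# Hypothetical helper: in manifest $1, replace the image prefix $2 with $3.
# Only lines containing "image:" are touched, and the original file is kept
# as a .bak copy. The prefixes must not contain the "#" character, and $2 is
# treated as a regular expression (so "." matches any character).
rewrite_image_prefix() {
    sed -i.bak "/image:/ s#$2#$3#" "$1"
}

# Example (registry.example.invalid is a placeholder, not a real mirror):
#   rewrite_image_prefix kube-flannel.yml "docker.io/flannel/" "registry.example.invalid/flannel/"
#   grep 'image:' kube-flannel.yml
```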

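If the images can be pulled as they are, the manifest can also be applied directly from the URL:

$ kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

If the URL itself is unreachable, create a file named kube-flannel.yml in the current directory, paste the following content into it, and then continue with the installation.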

---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    k8s-app: flannel
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: flannel
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
- apiGroups:
  - networking.k8s.io
  resources:
  - clustercidrs
  verbs:
  - list
  - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: flannel
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: flannel
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    k8s-app: flannel
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
    k8s-app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        image: docker.io/flannel/flannel-cni-plugin:v1.1.2
        #image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.2
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        image: docker.io/flannel/flannel:v0.21.5
        #image: docker.io/rancher/mirrored-flannelcni-flannel:v0.21.5
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: docker.io/flannel/flannel:v0.21.5
        #image: docker.io/rancher/mirrored-flannelcni-flannel:v0.21.5
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
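The Network value in the net-conf.json part of the manifest needs to match the range passed to kubeadm init through --pod-network-cidr (10.244.0.0/16 in this section). A quick way to compare the two is sketched below; `flannel_network` is a hypothetical helper name, and it assumes the value appears on one line in the form "Network": "...", as it does in the manifest above.

```shell
#!/bin/sh
# Hypothetical helper: print the first "Network" value found in manifest $1.
flannel_network() {
    sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Example: compare against the value given to kubeadm init.
#   pod_cidr="10.244.0.0/16"
#   [ "$(flannel_network kube-flannel.yml)" = "$pod_cidr" ] \
#       || echo "flannel Network does not match --pod-network-cidr"
```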

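Run the deployment command

$ kubectl apply -f kube-flannel.yml

Check that the Pods are running. The control-plane components run in the kube-system namespace; the manifest above places the flannel Pods in the kube-flannel namespace.

$ kubectl get pods -n kube-system

$ kubectl get pods -n kube-flannel

Many of the cluster components are now running.

Check the state of the nodes

$ kubectl get nodes

All nodes now report Ready. With that, the Kubernetes cluster built with kubeadm is up and running.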
