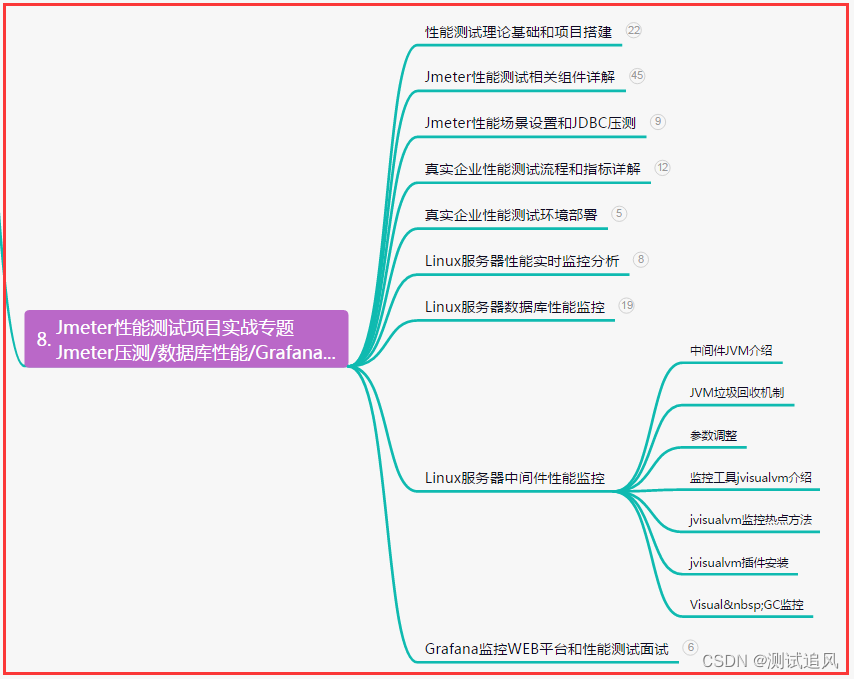

Deep Learning Notes on Recurrent Networks: Groundwork, Backpropagation Through Softmax

- Introduction
- Recap: the feedforward computation of a recurrent neural network
  - Scenario setup
  - Feedforward computation
- Groundwork: backpropagation through Softmax

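Introduction

The previous section introduced two things. The first was the basic logic of the feedforward computation in a recurrent neural network. The second was perplexity, the metric used to judge how good a language model is when the network serves as one. This section covers the backpropagation ($\text{Backward Propagation, BP}$) of the $\text{Softmax}$ function.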

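Recap: the feedforward computation of a recurrent neural network

Scenario setup

Consider the neuron of a recurrent neural network at a particular time step, where: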

- $x_t$ denotes the input at time $t$, with shape $x_t \in \mathbb R^{n_x \times m \times 1}$. Here $n_x$ is the dimension of the input vector at the current time step, $m$ is the number of samples, and $1$ stands for the single current time step $t$.

  The input vector may be a word vector or any other vector that describes one unit of the sequence, and $n_x$ is the size of that vector. $m$ can be read as the number of samples in the current batch.

  The complete sequence data $\mathcal X$ can be written as follows, where $\mathcal T$ is the number of input time steps:

  $$\mathcal X = (x_1,x_2,\cdots,x_t,x_{t+1},\cdots,x_{\mathcal T})^T \in \mathbb R^{n_x \times m \times \mathcal T}$$

- $h_t$ denotes the sequence information at time $t$, which is also the value passed on to time $t+1$. Its shape is:

  $$h_t \in \mathbb R^{n_h \times m \times 1}$$

  Here $n_h$ is the dimension of the hidden state, determined by the parameters $\mathcal W_{\mathcal H \Rightarrow \mathcal H}$ and $\mathcal W_{\mathcal X \Rightarrow \mathcal H}$. The same holds for $h_{t+1} \in \mathbb R^{n_h \times m \times 1}$.

  The corresponding hidden-state matrix is $\mathcal H \in \mathbb R^{n_h \times m \times \mathcal T}$. Each new input extends the sequence information by one step, so $\mathcal X$ and $\mathcal H$ share the same $\mathcal T$.

- $\mathcal O_{t+1}$ denotes the prediction computed after the data is fed in. Its shape is:

  $$\mathcal O_{t+1} \in \mathbb R^{n_{\mathcal O} \times m \times 1}$$

  where $n_{\mathcal O}$ is the length of the predicted output.

  Likewise, the corresponding output matrix is $\mathcal O \in \mathbb R^{n_{\mathcal O} \times m \times \mathcal T_{\mathcal O}}$, where $\mathcal T_{\mathcal O}$ is the number of output time steps. Note that $\mathcal T_{\mathcal O}$ and $\mathcal T$ are two different quantities. The length of the output sequence is independent of the input length and is related to the weight parameter $\mathcal W_{\mathcal H \Rightarrow \mathcal O}$.

Feedforward computation

For convenience, the sequence subscripts used above are written as sequence superscripts instead:

$$x_t,h_t,h_{t+1},\mathcal O_{t+1} \Rightarrow x^{(t)},h^{(t)},h^{(t+1)},\mathcal O^{(t+1)}$$

The feedforward computation of the neuron at time $t$ is as follows.

Note that $h^{(t+1)}$ and $\mathcal O^{(t+1)}$ here are predictions of the next time step's information, and this prediction is carried out at time $t$.

- Computing the sequence information $h^{(t+1)}$:

  $$\begin{cases} \mathcal Z_1^{(t+1)} = \mathcal W_{h^{(t)} \Rightarrow h^{(t+1)}}\cdot h^{(t)} + \mathcal W_{x^{(t)} \Rightarrow h^{(t+1)}} \cdot x^{(t)} + b_{h^{(t+1)}} \\ h^{(t+1)} = \text{Tanh}(\mathcal Z_1^{(t+1)}) \end{cases}$$

- Computing the prediction $\mathcal O^{(t+1)}$:

  The posterior $\mathcal P_{model}[\mathcal O^{(t+1)} \mid x^{(t)},h^{(t+1)}]$ is essentially a classification task: the outcome with the highest probability under this distribution is chosen as the result for $x^{(t+1)}$. The $\text{Softmax}$ function is used to turn the scores of the candidate outcomes into this probability distribution.

  $$\begin{cases} \mathcal Z_2^{(t+1)} = \mathcal W_{h^{(t+1)} \Rightarrow \mathcal O^{(t+1)}} \cdot h^{(t+1)} + b_{\mathcal O^{(t+1)}} \\ \begin{aligned} \mathcal O^{(t+1)} & = \text{Softmax}(\mathcal Z_2^{(t+1)}) \\ & = \frac{\exp \left\{\mathcal Z_2^{(t+1)}\right\}}{\sum_{i=1}^{n_{\mathcal O}}\exp \left\{\mathcal Z_{2;i}^{(t+1)}\right\}} \end{aligned} \end{cases}$$

The shapes of the parameters in these formulas are:

$$\begin{aligned} & \mathcal Z_1:\begin{cases} \mathcal W_{h^{(t)} \Rightarrow h^{(t+1)}} \in \mathbb R^{1 \times n_h} \Rightarrow \mathcal W_{\mathcal H \Rightarrow \mathcal H} \in \mathbb R^{n_h \times n_h} \\ \mathcal W_{x^{(t)} \Rightarrow h^{(t+1)}} \in \mathbb R^{1 \times n_x} \Rightarrow \mathcal W_{\mathcal X \Rightarrow \mathcal H} \in \mathbb R^{n_h \times n_x} \\ b_{h^{(t+1)}} \in \mathbb R^{1 \times 1} \Rightarrow b_{\mathcal H} \in \mathbb R^{n_h \times 1} \end{cases} \\ & \mathcal Z_2:\begin{cases} \mathcal W_{h^{(t+1)} \Rightarrow \mathcal O^{(t+1)}} \in \mathbb R^{1 \times n_h} \Rightarrow \mathcal W_{\mathcal H \Rightarrow \mathcal O} \in \mathbb R^{n_{\mathcal O} \times n_h} \\ b_{\mathcal O^{(t+1)}} \in \mathbb R^{1 \times 1} \Rightarrow b_{\mathcal O} \in \mathbb R^{n_{\mathcal O} \times 1} \end{cases} \end{aligned}$$
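The sketch below makes these shapes concrete. It is a minimal pure-Python version of one time step for a single sample ($m = 1$), and it uses column vectors so that the full matrices $\mathcal W_{\mathcal H \Rightarrow \mathcal H} \in \mathbb R^{n_h \times n_h}$, $\mathcal W_{\mathcal X \Rightarrow \mathcal H} \in \mathbb R^{n_h \times n_x}$ and $\mathcal W_{\mathcal H \Rightarrow \mathcal O} \in \mathbb R^{n_{\mathcal O} \times n_h}$ apply directly. The function names (`matvec`, `softmax`, `rnn_step`) and all numeric values are hypothetical, chosen only for illustration.

```python
import math


def matvec(W, v):
    """Multiply a matrix W (list of rows) by a vector v (list of floats)."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def softmax(z):
    """Softmax over a list of scores; max(z) is subtracted to avoid overflow."""
    z_max = max(z)
    exps = [math.exp(v - z_max) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def rnn_step(h_prev, x, W_hh, W_xh, b_h, W_ho, b_o):
    """One time step for a single sample: returns (h^(t+1), O^(t+1)).

    Shapes: h_prev n_h, x n_x, W_hh n_h x n_h, W_xh n_h x n_x, b_h n_h,
    W_ho n_O x n_h, b_o n_O.
    """
    # Z_1^(t+1) = W_{H=>H} h^(t) + W_{X=>H} x^(t) + b_H
    z1 = [a + b + c for a, b, c in zip(matvec(W_hh, h_prev), matvec(W_xh, x), b_h)]
    h_next = [math.tanh(v) for v in z1]
    # Z_2^(t+1) = W_{H=>O} h^(t+1) + b_O, followed by Softmax
    z2 = [a + b for a, b in zip(matvec(W_ho, h_next), b_o)]
    return h_next, softmax(z2)


# Toy sizes: n_x = 2, n_h = 3, n_O = 4 (arbitrary illustrative values).
h0 = [0.0, 0.0, 0.0]
x0 = [1.0, -1.0]
W_hh = [[0.1, 0.0, 0.2], [0.0, 0.1, 0.0], [0.3, 0.0, 0.1]]
W_xh = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.2]]
b_h = [0.0, 0.1, -0.1]
W_ho = [[0.2, -0.1, 0.0], [0.0, 0.3, 0.1], [-0.2, 0.1, 0.4], [0.1, 0.1, 0.1]]
b_o = [0.0, 0.0, 0.0, 0.0]

h1, o1 = rnn_step(h0, x0, W_hh, W_xh, b_h, W_ho, b_o)
print("h^(1) =", h1)
print("O^(1) =", o1, "sum =", sum(o1))
```

To compute the following step, feed `h1` back in as `h_prev` together with the next input. The same weights are reused at every time step.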

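Groundwork: backpropagation through Softmax

Scenario setup

Suppose an $\mathcal L$-layer fully connected neural network is used for a $\mathcal C$-class classification task, and we are given a training set $\mathcal D$ of $m$ training samples: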

$$\mathcal D = \{(x^{(i)},y^{(i)})\}_{i=1}^m$$

We skip the intermediate computations and look only at the output. Let $\hat y^{(i)}$ be the prediction for each $x^{(i)}\ (i=1,2,\cdots,m)$. Its loss is computed with cross-entropy ($\text{CrossEntropy}$):

$$\mathscr L \left[y^{(i)},\hat y^{(i)}\right] = -\sum_{j=1}^{\mathcal C} y_j^{(i)} \log \hat y_j^{(i)}$$

Correspondingly, the cost function $\mathcal J(\mathcal W)$ over the training set $\mathcal D$ is given below. The bias term $b$ is omitted here.

$$\mathcal J(\mathcal W) = \frac{1}{m} \sum_{i=1}^m \mathscr L \left[y^{(i)},\hat y^{(i)}\right]$$
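The per-sample loss and the averaged cost above can be sketched in pure Python as follows. The sketch assumes one-hot labels stored as lists. The helper names `cross_entropy` and `cost` are illustrative and do not come from any library.

```python
import math


def cross_entropy(y, y_hat):
    """L[y, y_hat] = -sum_j y_j * log(y_hat_j) for a single sample.

    Terms with y_j == 0 contribute nothing and are skipped, which also
    avoids evaluating log(0) when a predicted probability is exactly 0.
    """
    return -sum(y_j * math.log(p_j) for y_j, p_j in zip(y, y_hat) if y_j != 0)


def cost(labels, predictions):
    """J(W) = (1/m) * sum_i L[y^(i), y_hat^(i)] over the m samples."""
    m = len(labels)
    return sum(cross_entropy(y, p) for y, p in zip(labels, predictions)) / m


labels = [[1, 0, 0], [0, 0, 1]]                  # one-hot y^(1), y^(2)
predictions = [[0.7, 0.2, 0.1], [0.1, 0.3, 0.6]]
J = cost(labels, predictions)                    # (-log 0.7 - log 0.6) / 2
print(J)
```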

For the last layer, the feedforward computation from the network output $\mathcal Z^{(\mathcal L)}$ through the $\text{Softmax}$ activation is:

$$\hat y = a^{(\mathcal L)} = \text{Softmax}(\mathcal Z^{(\mathcal L)})$$
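Here is a minimal sketch of $\text{Softmax}$ in pure Python. It subtracts $\max(z)$ before exponentiating, which is a common implementation choice added for this example and not part of the derivation. The shift cancels between numerator and denominator, so the result is unchanged, but `math.exp` no longer overflows for large scores.

```python
import math


def softmax(z):
    """a = Softmax(z), with a_j = exp(z_j) / sum_i exp(z_i)."""
    z_max = max(z)                      # shift for numerical stability
    exps = [math.exp(z_j - z_max) for z_j in z]
    total = sum(exps)
    return [e / total for e in exps]


a = softmax([1.0, 2.0, 3.0])
print(a, sum(a))
# Without the shift, math.exp(1000.0) would raise OverflowError.
print(softmax([1000.0, 1000.0]))
```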

Backpropagation through Softmax

Take a single sample $(x,y) \in \mathcal D$ as an example. First compute the derivative of this sample's loss $\mathscr L(y,\hat y)$ with respect to the predicted output $\hat y = a^{(\mathcal L)}$:

$$\begin{aligned} \frac{\partial \mathscr L}{\partial a^{(\mathcal L)}} & = \frac{\partial}{\partial a^{(\mathcal L)}} \left[-\sum_{j=1}^{\mathcal C} y_j \log \hat y_j \right] \\ & = \frac{\partial}{\partial a^{(\mathcal L)}} \left[- (y_1 \log \hat y_1 + y_2 \log \hat y_2 + \cdots + y_{\mathcal C} \log \hat y_{\mathcal C}) \right] \\ & = \frac{\partial}{\partial a^{(\mathcal L)}} \left[ - (y_1 \log a_1^{(\mathcal L)} + y_2 \log a_2^{(\mathcal L)} + \cdots + y_{\mathcal C} \log a_{\mathcal C}^{(\mathcal L)})\right] \end{aligned}$$

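Clearly $\mathscr L$ is a sum over all components and is therefore a scalar, whereas $a^{(\mathcal L)}$ here is a $1 \times \mathcal C$ vector. The derivative is therefore the vector of partial derivatives with respect to each component:

For derivatives of a scalar with respect to a vector, see the links at the end of the article.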

$$\begin{aligned} \frac{\partial \mathscr L}{\partial a^{(\mathcal L)}} & = \left[\frac{\partial \mathscr L}{\partial a_1^{(\mathcal L)}},\cdots,\frac{\partial \mathscr L}{\partial a_{\mathcal C}^{(\mathcal L)}}\right]\\ & = \left\{\frac{\partial}{\partial a_1^{(\mathcal L)}} \left[-(\underbrace{y_1 \log a_1^{(\mathcal L)}}_{\text{depends on } a_1^{(\mathcal L)}} + \underbrace{\cdots + y_{\mathcal C} \log a_{\mathcal C}^{(\mathcal L)}}_{\text{independent of } a_1^{(\mathcal L)}})\right],\cdots,\frac{\partial}{\partial a_{\mathcal C}^{(\mathcal L)}} \left[-(\underbrace{y_1 \log a_1^{(\mathcal L)} + \cdots}_{\text{independent of } a_{\mathcal C}^{(\mathcal L)}} + \underbrace{y_{\mathcal C} \log a_{\mathcal C}^{(\mathcal L)}}_{\text{depends on } a_{\mathcal C}^{(\mathcal L)}})\right]\right\} \\ & = \left[-\frac{y_1}{a_1^{(\mathcal L)}},\cdots,-\frac{y_{\mathcal C}}{a_{\mathcal C}^{(\mathcal L)}}\right] \\ & = -\frac{(y_1,\cdots,y_{\mathcal C})}{\left(a_1^{(\mathcal L)},\cdots,a_{\mathcal C}^{(\mathcal L)} \right)} \\ & = -\frac{y}{\hat y} \end{aligned}$$

The division in the last two lines is taken element-wise.
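The result $-y/\hat y$ can be checked numerically against central finite differences. This is a pure-Python sketch that assumes a one-hot $y$ and a strictly positive $a^{(\mathcal L)}$. Each component of $a^{(\mathcal L)}$ is perturbed independently, as in the component-wise derivative, so $a^{(\mathcal L)}$ is deliberately not re-normalized. The function names are hypothetical.

```python
import math


def loss_from_a(y, a):
    """Cross-entropy written directly as a function of a = y_hat."""
    return -sum(y_j * math.log(a_j) for y_j, a_j in zip(y, a))


def grad_wrt_a(y, a):
    """Analytic result: dL/da_j = -y_j / a_j (element-wise)."""
    return [-y_j / a_j for y_j, a_j in zip(y, a)]


def numeric_grad_wrt_a(y, a, eps=1e-6):
    """Central finite differences, perturbing one component of a at a time."""
    grad = []
    for j in range(len(a)):
        a_plus = list(a)
        a_plus[j] += eps
        a_minus = list(a)
        a_minus[j] -= eps
        grad.append((loss_from_a(y, a_plus) - loss_from_a(y, a_minus)) / (2.0 * eps))
    return grad


y = [0.0, 1.0, 0.0]
a = [0.2, 0.5, 0.3]
analytic = grad_wrt_a(y, a)
numeric = numeric_grad_wrt_a(y, a)
print(analytic)
print(numeric)
```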

Continuing backward toward earlier layers, compute $\frac{\partial \mathscr L}{\partial \mathcal Z^{(\mathcal L)}}$ with the chain rule:

$$\frac{\partial \mathscr L}{\partial \mathcal Z^{(\mathcal L)}} = \frac{\partial \mathscr L}{\partial a^{(\mathcal L)}} \cdot \frac{\partial a^{(\mathcal L)}}{\partial \mathcal Z^{(\mathcal L)}}$$

Next, consider $\frac{\partial a^{(\mathcal L)}}{\partial \mathcal Z^{(\mathcal L)}}$. Both $a^{(\mathcal L)}$ and $\mathcal Z^{(\mathcal L)}$ are $1 \times \mathcal C$ vectors, so the derivative is a $\mathcal C \times \mathcal C \times 1$ three-dimensional tensor:

$$\begin{aligned} \frac{\partial a^{(\mathcal L)}}{\partial \mathcal Z^{(\mathcal L)}} & = \left[\frac{\partial a^{(\mathcal L)}}{\partial z_1^{(\mathcal L)}},\cdots,\frac{\partial a^{(\mathcal L)}}{\partial z_{\mathcal C}^{(\mathcal L)}}\right]_{\mathcal C \times \mathcal C \times 1}^T \\ & = \left\{\frac{\partial}{\partial z_1^{(\mathcal L)}}\left[\frac{\exp(\mathcal Z^{(\mathcal L)})}{\sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)})}\right],\cdots,\frac{\partial}{\partial z_{\mathcal C}^{(\mathcal L)}} \left[\frac{\exp(\mathcal Z^{(\mathcal L)})}{\sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)})}\right]\right\}_{\mathcal C \times \mathcal C \times 1}^T \end{aligned}$$

Take the first entry as an example. It is a $1 \times \mathcal C$ vector, because the whole output $a^{(\mathcal L)}$ is differentiated with respect to the single scalar $z_1^{(\mathcal L)}$. Its derivative is as follows.

Here $\frac{\exp(z_1^{(\mathcal L)})}{\sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)})}$ is the first component of the output $a^{(\mathcal L)}$, denoted $a_1^{(\mathcal L)}$. Likewise, the $k$-th such fraction is $a_k^{(\mathcal L)}$.

$$\frac{\partial}{\partial z_1^{(\mathcal L)}}\left[\frac{\exp(\mathcal Z^{(\mathcal L)})}{\sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)})}\right] = \left\{\frac{\partial}{\partial z_1^{(\mathcal L)}}\underbrace{\left[\frac{\exp(z_1^{(\mathcal L)})}{\sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)})}\right]}_{a_1^{(\mathcal L)}},\cdots,\frac{\partial}{\partial z_1^{(\mathcal L)}}\underbrace{\left[\frac{\exp(z_{\mathcal C}^{(\mathcal L)})}{\sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)})}\right]}_{a_{\mathcal C}^{(\mathcal L)}}\right\}_{1 \times \mathcal C}$$

Continuing with the first entry, $\frac{\partial a_1^{(\mathcal L)}}{\partial z_1^{(\mathcal L)}}$ follows from the quotient rule. In $\left[\sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)})\right]'$, only the first term depends on $z_1^{(\mathcal L)}$, so this derivative equals $\exp(z_1^{(\mathcal L)})$:

$$\begin{aligned} \frac{\partial a_1^{(\mathcal L)}}{\partial z_1^{(\mathcal L)}} & = \frac{\partial}{\partial z_1^{(\mathcal L)}}\left[\frac{\exp(z_1^{(\mathcal L)})}{\sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)})}\right] \\ & = \frac{\left[\exp(z_1^{(\mathcal L)})\right]' \cdot \sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)}) - \exp(z_1^{(\mathcal L)}) \cdot \left[\sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)})\right]'}{\left[\sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)})\right]^2} \\ & = \frac{\exp(z_1^{(\mathcal L)}) \cdot \sum_{i=1}^{\mathcal C}\exp(z_i^{(\mathcal L)}) - \left[\exp(z_1^{(\mathcal L)})\right]^2}{\left[\sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)})\right]^2} \end{aligned}$$

Factor $\exp(z_1^{(\mathcal L)})$ out of the numerator and split the squared denominator into two factors:

$$\begin{aligned} \frac{\partial a_1^{(\mathcal L)}}{\partial z_1^{(\mathcal L)}} & = \frac{\exp(z_1^{(\mathcal L)}) \cdot \left[\sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)}) - \exp(z_1^{(\mathcal L)})\right]}{\left[\sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)})\right]^2} \\ & = \frac{\exp(z_1^{(\mathcal L)})}{\sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)})} \cdot \frac{\sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)}) - \exp(z_1^{(\mathcal L)})}{\sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)})} \\ & = \frac{\exp(z_1^{(\mathcal L)})}{\sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)})} \cdot \left[1 - \frac{\exp(z_1^{(\mathcal L)})}{\sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)})}\right] \\ & = a_1^{(\mathcal L)} \cdot (1 - a_1^{(\mathcal L)}) \end{aligned}$$

By the same reasoning, for two indices $p,q$, when $p = q$:

$$\frac{\partial a_q^{(\mathcal L)}}{\partial z_p^{(\mathcal L)}} = a_p^{(\mathcal L)} \cdot (1 - a_p^{(\mathcal L)}) \quad p,q \in \{1,2,\cdots,\mathcal C\};\ p = q$$

When $p \neq q$, the derivative of the numerator vanishes, because $\left[\frac{\partial \exp(z_q^{(\mathcal L)})}{\partial z_p^{(\mathcal L)}}\right]_{p \neq q} = 0$ always holds. The result is:

$$\begin{aligned} \frac{\partial a_q^{(\mathcal L)}}{\partial z_p^{(\mathcal L)}} & = \frac{0 \cdot \sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)}) - \exp(z_q^{(\mathcal L)})\cdot \exp(z_p^{(\mathcal L)})}{\left[\sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)})\right]^2} \\ & = - \frac{\exp(z_q^{(\mathcal L)})}{\sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)})} \cdot \frac{\exp(z_p^{(\mathcal L)})}{\sum_{i=1}^{\mathcal C} \exp(z_i^{(\mathcal L)})} \\ & = -a_p^{(\mathcal L)} \cdot a_q^{(\mathcal L)} \end{aligned}$$

At this point every entry of $\left[\frac{\partial a^{(\mathcal L)}}{\partial \mathcal Z^{(\mathcal L)}}\right]_{\mathcal C \times \mathcal C \times 1}$ can be expressed. Squeezing this three-dimensional tensor (dropping its last dimension) yields a Jacobian matrix ($\text{Jacobian Matrix}$):

Each element of the matrix takes one of the two forms derived above.

$$\frac{\partial a^{(\mathcal L)}}{\partial \mathcal Z^{(\mathcal L)}} = \begin{bmatrix} \frac{\partial a_1^{(\mathcal L)}}{\partial z_1^{(\mathcal L)}} & \frac{\partial a_1^{(\mathcal L)}}{\partial z_2^{(\mathcal L)}} & \cdots & \frac{\partial a_1^{(\mathcal L)}}{\partial z_{\mathcal C}^{(\mathcal L)}} \\ \frac{\partial a_2^{(\mathcal L)}}{\partial z_1^{(\mathcal L)}} & \frac{\partial a_2^{(\mathcal L)}}{\partial z_2^{(\mathcal L)}} & \cdots & \frac{\partial a_2^{(\mathcal L)}}{\partial z_{\mathcal C}^{(\mathcal L)}} \\ \vdots & \vdots & \ddots & \vdots \\ \frac{\partial a_{\mathcal C}^{(\mathcal L)}}{\partial z_1^{(\mathcal L)}} & \frac{\partial a_{\mathcal C}^{(\mathcal L)}}{\partial z_2^{(\mathcal L)}} & \cdots & \frac{\partial a_{\mathcal C}^{(\mathcal L)}}{\partial z_{\mathcal C}^{(\mathcal L)}} \end{bmatrix}_{\mathcal C \times \mathcal C}$$
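The two entry formulas can be assembled into this Jacobian and compared with finite differences of $\text{Softmax}$ itself. The sketch below does this in pure Python with hypothetical helper names. It also prints the column sums of the analytic Jacobian. Since $\sum_i a_i^{(\mathcal L)} = 1$, differentiating this sum with respect to any $z_j^{(\mathcal L)}$ gives zero, so every column should sum to zero.

```python
import math


def softmax(z):
    """Softmax with the usual max-shift for numerical stability."""
    z_max = max(z)
    exps = [math.exp(v - z_max) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_jacobian(z):
    """J[i][j] = da_i/dz_j: a_i(1 - a_i) if i == j, else -a_i * a_j."""
    a = softmax(z)
    C = len(a)
    return [[a[i] * (1.0 - a[i]) if i == j else -a[i] * a[j] for j in range(C)]
            for i in range(C)]


def numeric_jacobian(z, eps=1e-6):
    """Column j holds the central difference of Softmax with respect to z_j."""
    C = len(z)
    J = [[0.0] * C for _ in range(C)]
    for j in range(C):
        z_plus = list(z)
        z_plus[j] += eps
        z_minus = list(z)
        z_minus[j] -= eps
        a_plus, a_minus = softmax(z_plus), softmax(z_minus)
        for i in range(C):
            J[i][j] = (a_plus[i] - a_minus[i]) / (2.0 * eps)
    return J


z = [0.5, -1.0, 2.0]
J_analytic = softmax_jacobian(z)
J_numeric = numeric_jacobian(z)
max_err = max(abs(J_analytic[i][j] - J_numeric[i][j]) for i in range(3) for j in range(3))
column_sums = [sum(J_analytic[i][j] for i in range(3)) for j in range(3)]
print("max |analytic - numeric| =", max_err)
print("column sums:", column_sums)
```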

We can now write out $\frac{\partial \mathscr L}{\partial \mathcal Z^{(\mathcal L)}}$. The result is a $1 \times \mathcal C$ vector:

$$\begin{aligned} \frac{\partial \mathscr L}{\partial a^{(\mathcal L)}} \cdot \frac{\partial a^{(\mathcal L)}}{\partial\mathcal Z^{(\mathcal L)}} & = \left[-\frac{y_1}{a_1^{(\mathcal L)}},\cdots,-\frac{y_{\mathcal C}}{a_{\mathcal C}^{(\mathcal L)}}\right] \cdot \begin{bmatrix} \frac{\partial a_1^{(\mathcal L)}}{\partial z_1^{(\mathcal L)}} & \frac{\partial a_1^{(\mathcal L)}}{\partial z_2^{(\mathcal L)}} & \cdots & \frac{\partial a_1^{(\mathcal L)}}{\partial z_{\mathcal C}^{(\mathcal L)}} \\ \frac{\partial a_2^{(\mathcal L)}}{\partial z_1^{(\mathcal L)}} & \frac{\partial a_2^{(\mathcal L)}}{\partial z_2^{(\mathcal L)}} & \cdots & \frac{\partial a_2^{(\mathcal L)}}{\partial z_{\mathcal C}^{(\mathcal L)}} \\ \vdots & \vdots & \ddots & \vdots \\ \frac{\partial a_{\mathcal C}^{(\mathcal L)}}{\partial z_1^{(\mathcal L)}} & \frac{\partial a_{\mathcal C}^{(\mathcal L)}}{\partial z_2^{(\mathcal L)}} & \cdots & \frac{\partial a_{\mathcal C}^{(\mathcal L)}}{\partial z_{\mathcal C}^{(\mathcal L)}} \end{bmatrix}_{\mathcal C \times \mathcal C} \\ & = \left[- \sum_{i=1}^{\mathcal C} \frac{y_i}{a_i^{(\mathcal L)}} \cdot \frac{\partial a_i^{(\mathcal L)}}{\partial z_1^{(\mathcal L)}},\cdots,- \sum_{i=1}^{\mathcal C} \frac{y_i}{a_i^{(\mathcal L)}} \cdot \frac{\partial a_i^{(\mathcal L)}}{\partial z_{\mathcal C}^{(\mathcal L)}}\right]_{1 \times \mathcal C} \\ & = \left[- \sum_{i=1}^{\mathcal C} \frac{y_i}{a_i^{(\mathcal L)}} \cdot \frac{\partial a_i^{(\mathcal L)}}{\partial z_j^{(\mathcal L)}}\right]_{1 \times \mathcal C} \quad j =1,2,\cdots,\mathcal C \end{aligned}$$
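Before any simplification, the product above is a row vector times the Jacobian: component $j$ is $-\sum_i \frac{y_i}{a_i^{(\mathcal L)}} \frac{\partial a_i^{(\mathcal L)}}{\partial z_j^{(\mathcal L)}}$. The pure-Python sketch below computes that product literally. The helper names and example values are hypothetical.

```python
import math


def softmax(z):
    """Softmax with the usual max-shift for numerical stability."""
    z_max = max(z)
    exps = [math.exp(v - z_max) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def jacobian_from_a(a):
    """Jacobian from a = Softmax(z): diagonal a_i(1 - a_i), off-diagonal -a_i * a_j."""
    C = len(a)
    return [[a[i] * (1.0 - a[i]) if i == j else -a[i] * a[j] for j in range(C)]
            for i in range(C)]


def row_times_matrix(g, J):
    """(1 x C) row vector times (C x C) matrix: out_j = sum_i g_i * J[i][j]."""
    C = len(g)
    return [sum(g[i] * J[i][j] for i in range(C)) for j in range(C)]


y = [0.0, 0.0, 1.0, 0.0]                         # one-hot label
z = [1.0, -0.5, 0.3, 2.0]                        # arbitrary logits Z^(L)
a = softmax(z)
dL_da = [-y_i / a_i for y_i, a_i in zip(y, a)]   # -y / y_hat, element-wise
dL_dz = row_times_matrix(dL_da, jacobian_from_a(a))
print(dL_dz)
```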

Substitute both cases of

$$\frac{\partial a_i^{(\mathcal L)}}{\partial z_j^{(\mathcal L)}} = \begin{cases} a_i^{(\mathcal L)}(1 - a_j^{(\mathcal L)}) & i = j \\ -a_i^{(\mathcal L)} \cdot a_j^{(\mathcal L)} & i \neq j \end{cases} \qquad i,j \in \{1,2,\cdots,\mathcal C\}$$

into the expression above. In both cases the factor $a_i^{(\mathcal L)}$ in the denominator cancels.

Note that in each case below, the sum $\sum_{i=1}^{\mathcal C}$ only accumulates the terms that satisfy that case's condition. The $i = j$ case therefore contains exactly one term, and the $i \neq j$ case contains the remaining $\mathcal C - 1$ terms.

$$- \sum_{i=1}^{\mathcal C} \frac{y_i}{a_i^{(\mathcal L)}} \cdot \frac{\partial a_i^{(\mathcal L)}}{\partial z_j^{(\mathcal L)}} = \begin{cases} \sum_{i=1}^{\mathcal C} \left(y_i \cdot a_j^{(\mathcal L)} - y_i\right) & i = j \\ \sum_{i=1}^{\mathcal C} y_i \cdot a_j^{(\mathcal L)} & i \neq j \end{cases}$$

In each component of $\left[\frac{\partial \mathscr L}{\partial a^{(\mathcal L)}} \cdot \frac{\partial a^{(\mathcal L)}}{\partial\mathcal Z^{(\mathcal L)}}\right]_{1 \times \mathcal C}$, exactly one term of the sum has $i = j$. So for every component of the $1 \times \mathcal C$ vector, we split the sum into the single $i = j$ term and the $\mathcal C - 1$ terms with $i \neq j$, and handle them separately.

Here $\sum_{i=1}^{\mathcal C}y_i$ is the sum of the components of the true label vector. The true label is one-hot, with entries in $\{0,1\}$ ($1$ for the correct class, $0$ otherwise) and exactly one entry equal to $1$. Therefore $\sum_{i=1}^{\mathcal C}y_i = 1$.

$$\begin{aligned} -\sum_{i=1}^{\mathcal C} \frac{y_i}{a_i^{(\mathcal L)}} \cdot \frac{\partial a_i^{(\mathcal L)}}{\partial z_j^{(\mathcal L)}} & = \underbrace{-y_j + y_j \cdot a_j^{(\mathcal L)}}_{i = j} + \underbrace{\sum_{i \neq j} y_i \cdot a_j^{(\mathcal L)}}_{i \neq j} \\ & = -y_j + \left(y_j \cdot a_j^{(\mathcal L)} + \sum_{i \neq j} y_i \cdot a_j^{(\mathcal L)}\right) \\ & = -y_j + a_j^{(\mathcal L)} \cdot \sum_{i=1}^{\mathcal C}y_i \\ & = a_j^{(\mathcal L)} - y_j \end{aligned}$$

This is the result for a single component. All components together form a $1 \times \mathcal C$ vector:

$$\left[a_j^{(\mathcal L)} - y_j\right]_{1 \times \mathcal C} \quad j = 1,2,\cdots,\mathcal C \Rightarrow a^{(\mathcal L)} - y$$
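As a sanity check of $a^{(\mathcal L)} - y$, the sketch below differentiates the composed loss $\mathscr L(y, \text{Softmax}(\mathcal Z^{(\mathcal L)}))$ numerically with respect to each logit and compares the result with the closed form. The function names and values are illustrative assumptions.

```python
import math


def softmax(z):
    """Softmax with the usual max-shift for numerical stability."""
    z_max = max(z)
    exps = [math.exp(v - z_max) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def loss_from_logits(y, z):
    """Cross-entropy of the one-hot label y against Softmax(z)."""
    a = softmax(z)
    return -sum(y_j * math.log(a_j) for y_j, a_j in zip(y, a) if y_j != 0)


def grad_closed_form(y, z):
    """Closed-form result: dL/dZ = a - y."""
    return [a_j - y_j for a_j, y_j in zip(softmax(z), y)]


def grad_numeric(y, z, eps=1e-6):
    """Central finite differences of the composed loss, one logit at a time."""
    grad = []
    for j in range(len(z)):
        z_plus = list(z)
        z_plus[j] += eps
        z_minus = list(z)
        z_minus[j] -= eps
        grad.append((loss_from_logits(y, z_plus) - loss_from_logits(y, z_minus)) / (2.0 * eps))
    return grad


y = [0, 1, 0, 0, 0]
z = [0.3, -1.2, 2.5, 0.0, 1.1]
closed = grad_closed_form(y, z)
numeric = grad_numeric(y, z)
print(closed)
print(numeric)
```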

Since $a^{(\mathcal L)} = \hat y$, consider a recurrent neural network at a given time step $t$. The $i$-th component $(i \in \{1,2,\cdots,\mathcal C\})$ of $\frac{\partial \mathscr L}{\partial \mathcal Z^{(\mathcal L)}}$ can be written as follows, where $\mathbb I_{i;y^{(t)}}$ equals $1$ if $i$ is the true class $y^{(t)}$ and $0$ otherwise:

$$\hat y_i^{(t)} - \mathbb I_{i;y^{(t)}}$$

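In effect, this is simply the component-wise difference below. It corresponds to Eq. (10.18) in Section 10.2.2 of *Deep Learning* (the "flower book"), p. 234: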

$$\begin{pmatrix} \hat y_1^{(t)} \\ \hat y_2^{(t)} \\ \vdots \\ \hat y_{\mathcal C}^{(t)} \end{pmatrix} - \begin{pmatrix} y_1^{(t)} \\ y_2^{(t)} \\ \vdots \\ y_{\mathcal C}^{(t)} \end{pmatrix} \quad \sum_{i=1}^{\mathcal C} y_i^{(t)} = 1;\ y_i^{(t)} \in \{0,1\}$$
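In an RNN, the label at each output step is often stored as a class index $y^{(t)}$ rather than as a one-hot vector. The indicator form $\hat y_i^{(t)} - \mathbb I_{i;y^{(t)}}$ then gives each step's gradient directly. Below is a minimal sketch under that assumption; the function name `output_grads` and the data are hypothetical.

```python
import math


def softmax(z):
    """Softmax with the usual max-shift for numerical stability."""
    z_max = max(z)
    exps = [math.exp(v - z_max) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def output_grads(logits_per_step, labels):
    """For each output step t, return y_hat^(t) - one_hot(y^(t)).

    logits_per_step: list of score vectors Z^(t), each of length C.
    labels: list of integer class indices y^(t), one per step.
    """
    grads = []
    for z_t, y_t in zip(logits_per_step, labels):
        y_hat = softmax(z_t)
        # The indicator I_{i; y^(t)} is 1 only at the true class index.
        grads.append([p - (1.0 if i == y_t else 0.0) for i, p in enumerate(y_hat)])
    return grads


logits = [[0.1, 0.2, 0.3], [2.0, -1.0, 0.0], [0.0, 0.0, 0.0]]
labels = [2, 0, 1]
g = output_grads(logits, labels)
for t, row in enumerate(g, start=1):
    print(t, row)
```

Each row sums to zero, because both $\hat y^{(t)}$ and the one-hot target sum to one. Only the entry at the true class is negative.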

The next section covers backpropagation in recurrent neural networks (there is no room left in this post).

Related references:

- Vector-by-vector derivatives
- The derivative computation in backpropagation for Softmax regression