Pitfalls of deploying Flink 1.17 on Ubuntu 20.04 to run Hive on Flink through the Flink SQL Gateway (Part 1)

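Preface

Before I had even gotten comfortable with Flink 1.14, Flink had already reached 1.17. The release cadence really is fast.

I wrote about this earlier: https://lizhiyong.blog.csdn.net/article/details/128195438

That post covered a new feature of Flink 1.16 presented at FFA 2022: the Flink SQL Gateway. It is reportedly GA in the new 1.17 release, so it is worth trying.

The underlying mechanism naturally differs from Hive on Tez; for details, see these two earlier posts: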

https://lizhiyong.blog.csdn.net/article/details/126634843

https://lizhiyong.blog.csdn.net/article/details/126688391

Virtual machine deployment

Planning

I already have the following virtual machines:

Two interconnected USDP clusters: https://lizhiyong.blog.csdn.net/article/details/123389208

zhiyong1 :192.168.88.100

zhiyong2 :192.168.88.101

zhiyong3 :192.168.88.102

zhiyong4 :192.168.88.103

zhiyong5 :192.168.88.104

zhiyong6 :192.168.88.105

zhiyong7 :192.168.88.106

K8S all-in-one: https://lizhiyong.blog.csdn.net/article/details/126236516

zhiyong-ksp1 :192.168.88.20

Development machine: zhiyong-vm-dev :192.168.88.50

Doris:https://lizhiyong.blog.csdn.net/article/details/126338539

zhiyong-doris :192.168.88.21

Clickhouse:https://lizhiyong.blog.csdn.net/article/details/126737711

zhiyong-ck1 :192.168.88.22

Docker host: https://lizhiyong.blog.csdn.net/article/details/126761470

zhiyong-docker :192.168.88.23

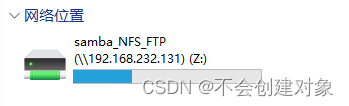

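Win10 jump host: https://lizhiyong.blog.csdn.net/article/details/127641326

Jump host :192.168.88.25

So there is no need to build another cluster. A single node is enough for experimenting, for onboarding newcomers, or for letting the project team's SQL-only "SQL Boys" practice HQL, and it can be suspended whenever it is not in use. For this purpose a single node is quite convenient; after all, people who know nothing but SQL have never learned the principles behind distributed systems [and of course do not need to].

The IP planned for this VM is therefore 192.168.88.24. This has been in the works for a long time: I reserved the slot before the Lunar New Year, but work kept me too busy to get to it.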

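Virtual machine creation

Reference: https://lizhiyong.blog.csdn.net/article/details/126338539

The process is basically the same as before, although Doris has since reached 2.0.0: https://doris.apache.org/zh-CN/download/

It pays to keep moving forward.

Since this is an all-in-one setup, I allocated resources a bit more generously to avoid OOM: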

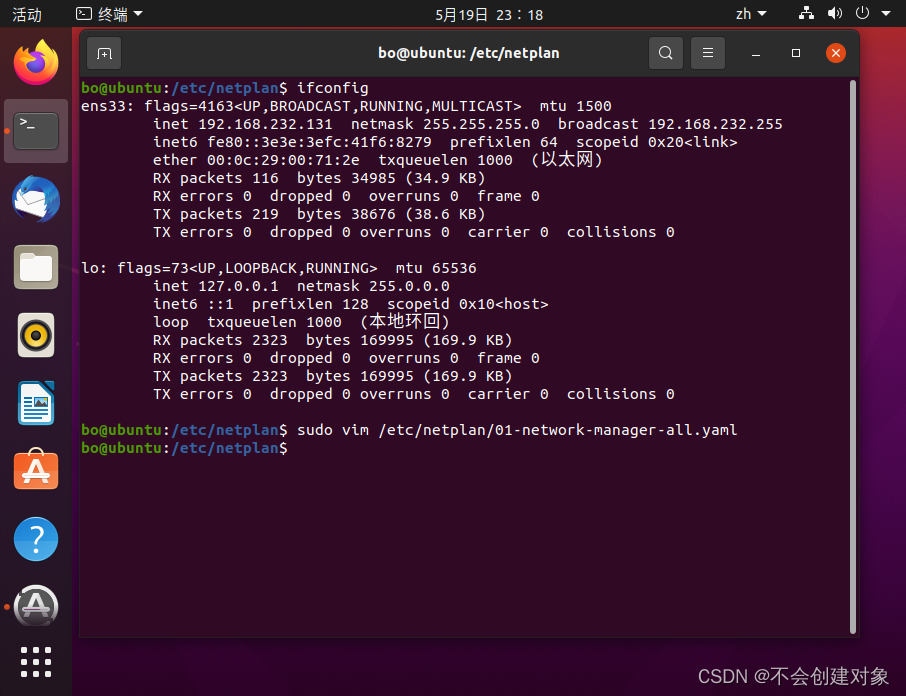

Configure the network.

Install the necessary tools:

sudo apt install net-tools

sudo apt-get install openssh-server

sudo apt-get install openssh-client

sudo apt install vim

At this point you can connect with MobaXterm.

Set up passwordless SSH:

zhiyong@zhiyong-hive-on-flink1:~$ sudo -su root

root@zhiyong-hive-on-flink1:/home/zhiyong# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa

Your public key has been saved in /root/.ssh/id_rsa.pub

The key fingerprint is:

SHA256:sLPlghwaubeie5ddmWAOzWGJvA0+1nfqzunllZ1vXp4 root@zhiyong-hive-on-flink1

The key's randomart image is:

+---[RSA 3072]----+

| . . . |

| + + |

| . O.. |

| .* Bo. . |

| o..=ooS= |

| = o.== o . |

| o +ooo. . o o .|

| o.o..o.+ . o+|

|o+ o. o= . E+|

+----[SHA256]-----+

root@zhiyong-hive-on-flink1:/home/zhiyong# ssh-copy-id zhiyong-hive-on-flink1.17

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@zhiyong-hive-on-flink1.17's password:

Permission denied, please try again.

root@zhiyong-hive-on-flink1.17's password:

root@zhiyong-hive-on-flink1:/home/zhiyong# cat /etc/hosts

127.0.0.1 localhost

127.0.1.1 zhiyong-hive-on-flink1.17 zhiyong-hive-on-flink1

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

root@zhiyong-hive-on-flink1:/home/zhiyong#sudo vim /etc/ssh/sshd_config

:set nu

34 #PermitRootLogin prohibit-password # this default has to be overridden before root can log in over SSH with a password

PermitRootLogin yes # add this as line 35

esc

:wq

zhiyong@zhiyong-hive-on-flink1:~$ sudo su root

[sudo] zhiyong 的密码:

root@zhiyong-hive-on-flink1:/home/zhiyong# sudo passwd root

新的 密码:

重新输入新的 密码:

passwd:已成功更新密码

root@zhiyong-hive-on-flink1:/home/zhiyong# reboot

root@zhiyong-hive-on-flink1:/home/zhiyong# ssh-copy-id zhiyong-hive-on-flink1.17

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@zhiyong-hive-on-flink1.17's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'zhiyong-hive-on-flink1.17'"

and check to make sure that only the key(s) you wanted were added.

root@zhiyong-hive-on-flink1:/home/zhiyong#

Passwordless SSH is now set up.
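The first ssh-copy-id attempt above was rejected because password login for root was still disabled. Before retrying, the setting can be checked with a small helper. This is a minimal sketch: permit_root_login is a made-up name, it ignores Include and Match blocks, and it assumes OpenSSH's default of prohibit-password when the keyword is absent.

```shell
#!/bin/sh
# Print the PermitRootLogin value from an sshd_config-style file.
# sshd uses the first occurrence of a keyword, so stop at the first match.
# Commented-out lines do not match because their first field starts with '#'.
permit_root_login() {
  awk 'tolower($1) == "permitrootlogin" { print $2; found = 1; exit }
       END { if (!found) print "prohibit-password" }' "$1"
}

# Example (not executed here):
# permit_root_login /etc/ssh/sshd_config
```

On a real host, `sshd -T` prints the effective configuration and is the more reliable check.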

Install JDK 17

According to the official documentation: https://nightlies.apache.org/flink/flink-docs-release-1.17/docs/try-flink/local_installation/

Flink runs on all UNIX-like environments, i.e. Linux, Mac OS X, and Cygwin (for Windows). You need to have Java 11 installed

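So JDK 1.8 is on its way out. Flink deprecated Java 8 support back in 1.15 and recommends Java 11, and one practical benefit is access to ZGC as an alternative to G1. A throughput drop of around 15% can be offset by adding roughly 20% more machines, and a problem that a limited amount of money can solve is not a big problem. But if an older collector hits a stop-the-world pause of several seconds, Flink's sub-second latency is out of the question. ZGC is designed to keep pauses down to a few milliseconds (its stated design goal was under 10 ms) even on multi-terabyte heaps (up to 4 TB in JDK 11), which suits Flink well. ZGC became production-ready in JDK 15 [enabled with -XX:+UseZGC]; in JDK 11 it is still experimental and additionally needs -XX:+UnlockExperimentalVMOptions. After 1.8 and 11, the next LTS release that Oracle promotes is 17, so JDK 17 is the future. Since no development or compilation will happen on this VM, a JRE alone would also do.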
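The flags needed to turn on ZGC depend on the JDK version, which can be sketched as a small lookup. zgc_flags is a hypothetical helper; the version boundaries reflect ZGC being experimental from JDK 11 and production-ready from JDK 15.

```shell
#!/bin/sh
# Print the JVM flags needed to enable ZGC for a given Java major version.
zgc_flags() {
  major=$1
  if [ "$major" -ge 15 ]; then
    echo "-XX:+UseZGC"
  elif [ "$major" -ge 11 ]; then
    # ZGC is experimental in JDK 11 to 14 and must be unlocked first.
    echo "-XX:+UnlockExperimentalVMOptions -XX:+UseZGC"
  else
    echo ""   # ZGC is not available before JDK 11
  fi
}

zgc_flags 11
zgc_flags 17
```

The resulting string could then be handed to Flink through its JVM options setting in flink-conf.yaml (env.java.opts.all in 1.17, as far as I know); that key is an assumption worth checking against the configuration reference.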

zhiyong@zhiyong-hive-on-flink1:/usr/lib/jvm/java-11-openjdk-amd64/bin$ cd

zhiyong@zhiyong-hive-on-flink1:~$ sudo apt remove openjdk-11-jre-headless

正在读取软件包列表... 完成

正在分析软件包的依赖关系树

正在读取状态信息... 完成

软件包 openjdk-11-jre-headless 未安装,所以不会被卸载

下列软件包是自动安装的并且现在不需要了:

java-common

使用'sudo apt autoremove'来卸载它(它们)。

升级了 0 个软件包,新安装了 0 个软件包,要卸载 0 个软件包,有 355 个软件包未被升级。

zhiyong@zhiyong-hive-on-flink1:~$ java

Command 'java' not found, but can be installed with:

sudo apt install openjdk-11-jre-headless # version 11.0.18+10-0ubuntu1~20.04.1, or

sudo apt install default-jre # version 2:1.11-72

sudo apt install openjdk-13-jre-headless # version 13.0.7+5-0ubuntu1~20.04

sudo apt install openjdk-16-jre-headless # version 16.0.1+9-1~20.04

sudo apt install openjdk-17-jre-headless # version 17.0.6+10-0ubuntu1~20.04.1

sudo apt install openjdk-8-jre-headless # version 8u362-ga-0ubuntu1~20.04.1

With the distribution's JDK out of the way, the next step is to unpack JDK 17 manually and configure $JAVA_HOME:

zhiyong@zhiyong-hive-on-flink1:~$ sudo su root

root@zhiyong-hive-on-flink1:/home/zhiyong# mkdir -p /export/software

root@zhiyong-hive-on-flink1:/home/zhiyong# mkdir -p /export/server

root@zhiyong-hive-on-flink1:/home/zhiyong# chmod -R 777 /export/

root@zhiyong-hive-on-flink1:/home/zhiyong# cd /export/software/

root@zhiyong-hive-on-flink1:/export/software# ll

总用量 8

drwxrwxrwx 2 root root 4096 5月 14 15:55 ./

drwxrwxrwx 4 root root 4096 5月 14 15:55 ../

root@zhiyong-hive-on-flink1:/export/software# cp /home/zhiyong/jdk-17_linux-x64_bin.tar.gz /export/software/

root@zhiyong-hive-on-flink1:/export/software# ll

总用量 177472

drwxrwxrwx 2 root root 4096 5月 14 15:56 ./

drwxrwxrwx 4 root root 4096 5月 14 15:55 ../

-rw-r--r-- 1 root root 181719178 5月 14 15:56 jdk-17_linux-x64_bin.tar.gz

root@zhiyong-hive-on-flink1:/export/software# cd /export/server/

root@zhiyong-hive-on-flink1:/export/server# tar -zxvf /export/software/jdk-17_linux-x64_bin.tar.gz -C /export/server/

root@zhiyong-hive-on-flink1:/export/server# ll

总用量 12

drwxrwxrwx 3 root root 4096 5月 14 15:57 ./

drwxrwxrwx 4 root root 4096 5月 14 15:55 ../

drwxr-xr-x 9 root root 4096 5月 14 15:57 jdk-17.0.7/

root@zhiyong-hive-on-flink1:/export/server# cat /etc/profile

# /etc/profile: system-wide .profile file for the Bourne shell (sh(1))

# and Bourne compatible shells (bash(1), ksh(1), ash(1), ...).

if [ "${PS1-}" ]; then

if [ "${BASH-}" ] && [ "$BASH" != "/bin/sh" ]; then

# The file bash.bashrc already sets the default PS1.

# PS1='\h:\w\$ '

if [ -f /etc/bash.bashrc ]; then

. /etc/bash.bashrc

fi

else

if [ "`id -u`" -eq 0 ]; then

PS1='# '

else

PS1='$ '

fi

fi

fi

if [ -d /etc/profile.d ]; then

for i in /etc/profile.d/*.sh; do

if [ -r $i ]; then

. $i

fi

done

unset i

fi

export JAVA_HOME=/export/server/jdk-17.0.7

export PATH=$PATH:$JAVA_HOME/bin

root@zhiyong-hive-on-flink1:/export/server# java -version

java version "17.0.7" 2023-04-18 LTS

Java(TM) SE Runtime Environment (build 17.0.7+8-LTS-224)

Java HotSpot(TM) 64-Bit Server VM (build 17.0.7+8-LTS-224, mixed mode, sharing)

root@zhiyong-hive-on-flink1:/export/server#

JDK 17 is now deployed. [However, JDK 17 still causes many problems here, so I later switched to JDK 11.]

Deploy Hadoop

Find the latest release on the official site: https://hadoop.apache.org/releases.html

Refer to the official documentation: https://hadoop.apache.org/docs/current/

A single-node installation is the obvious choice: https://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-common/SingleCluster.html

Apache Hadoop 3.3 and upper supports Java 8 and Java 11 (runtime only).

Let's see whether it runs on JDK 17 anyway.

root@zhiyong-hive-on-flink1:/export/software# cp /home/zhiyong/hadoop-3.3.5.tar.gz /export/software/

root@zhiyong-hive-on-flink1:/export/software# tar -zxvf hadoop-3.3.5.tar.gz -C /export/server

root@zhiyong-hive-on-flink1:/export/software# cd /export/server

root@zhiyong-hive-on-flink1:/export/server# ll

总用量 16

drwxrwxrwx 4 root root 4096 5月 14 17:25 ./

drwxrwxrwx 4 root root 4096 5月 14 15:55 ../

drwxr-xr-x 10 2002 2002 4096 3月 16 00:58 hadoop-3.3.5/

drwxr-xr-x 9 root root 4096 5月 14 15:57 jdk-17.0.7/

root@zhiyong-hive-on-flink1:/export/server# cd hadoop-3.3.5/

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5/etc/hadoop# chmod 666 core-site.xml hdfs-site.xml mapred-site.xml yarn-site.xml

The files can simply be edited in the Ubuntu GUI:

Edit core-site.xml:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.88.24:9000</value>

</property>

</configuration>

Edit hdfs-site.xml:

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.http-address</name>

<value>192.168.88.24:50070</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>192.168.88.24:50090</value>

</property>

</configuration>

Format the NameNode:

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# pwd

/export/server/hadoop-3.3.5

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# ./bin/hdfs namenode -format

2023-05-14 17:54:12,552 INFO common.Storage: Storage directory /tmp/hadoop-root/dfs/name has been successfully formatted.

This log line shows that formatting succeeded.

Start HDFS:

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# ./sbin/start-dfs.sh

Starting namenodes on [192.168.88.24]

ERROR: Attempting to operate on hdfs namenode as root

ERROR: but there is no HDFS_NAMENODE_USER defined. Aborting operation.

Starting datanodes

ERROR: Attempting to operate on hdfs datanode as root

ERROR: but there is no HDFS_DATANODE_USER defined. Aborting operation.

Starting secondary namenodes [zhiyong-hive-on-flink1]

ERROR: Attempting to operate on hdfs secondarynamenode as root

ERROR: but there is no HDFS_SECONDARYNAMENODE_USER defined. Aborting operation.

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5#

As expected, it fails.

The script contains this comment:

## startup matrix:

#

# if $EUID != 0, then exec

# if $EUID =0 then

# if hdfs_subcmd_user is defined, su to that user, exec

# if hdfs_subcmd_user is not defined, error

#

# For secure daemons, this means both the secure and insecure env vars need to be

# defined. e.g., HDFS_DATANODE_USER=root HDFS_DATANODE_SECURE_USER=hdfs

#

So the following settings need to be added to the script:

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# pwd

/export/server/hadoop-3.3.5

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# vim ./sbin/start-dfs.sh

HDFS_DATANODE_USER=root

HADOOP_SECURE_DN_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# ./sbin/start-dfs.sh

WARNING: HADOOP_SECURE_DN_USER has been replaced by HDFS_DATANODE_SECURE_USER. Using value of HADOOP_SECURE_DN_USER.

Starting namenodes on [192.168.88.24]

192.168.88.24: ERROR: JAVA_HOME is not set and could not be found.

Starting datanodes

localhost: Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts.

localhost: ERROR: JAVA_HOME is not set and could not be found.

Starting secondary namenodes [zhiyong-hive-on-flink1]

zhiyong-hive-on-flink1: Warning: Permanently added 'zhiyong-hive-on-flink1' (ECDSA) to the list of known hosts.

zhiyong-hive-on-flink1: ERROR: JAVA_HOME is not set and could not be found.

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# echo $JAVA_HOME

/export/server/jdk-17.0.7

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# vim ./etc/hadoop/hadoop-env.sh

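So JAVA_HOME has to be set in hadoop-env.sh. A first attempt:

# The java implementation to use. By default, this environment

# variable is REQUIRED on ALL platforms except OS X!

# export JAVA_HOME=

export JAVA_HOME=$JAVA_HOME

This does not work: start-dfs.sh launches each daemon over SSH, and such a non-interactive session does not source /etc/profile, so $JAVA_HOME is empty there. The path has to be hard-coded:

export JAVA_HOME=/export/server/jdk-17.0.7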
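Why a variable that echo shows in the login shell can still be missing for the daemons can be imitated locally. The sketch below uses env -i to start a child process with an empty environment, which only approximates what a non-interactive SSH session sees; it is an illustration, not what start-dfs.sh actually runs.

```shell
#!/bin/sh
# Set JAVA_HOME the way /etc/profile would for a login shell.
JAVA_HOME=/export/server/jdk-17.0.7
export JAVA_HOME

# A normal child process inherits the exported variable.
with_env=$(/bin/sh -c 'echo "${JAVA_HOME:-unset}"')

# env -i starts the child with an empty environment, similar in effect
# to a remote shell that never sourced /etc/profile.
without_env=$(env -i /bin/sh -c 'echo "${JAVA_HOME:-unset}"')

echo "inherited: $with_env"
echo "clean env: $without_env"
```

Because hadoop-env.sh is read by the Hadoop scripts on the target side, a literal path written there does not depend on what the calling session exported.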

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# ./sbin/start-dfs.sh

WARNING: HADOOP_SECURE_DN_USER has been replaced by HDFS_DATANODE_SECURE_USER. Using value of HADOOP_SECURE_DN_USER.

Starting namenodes on [192.168.88.24]

Starting datanodes

Starting secondary namenodes [zhiyong-hive-on-flink1]

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# jps

5232 DataNode

5668 Jps

5501 SecondaryNameNode

5069 NameNode

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5#

HDFS now starts successfully, but this command fails:

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# ./sbin/stop-dfs.sh

Stopping namenodes on [192.168.88.24]

ERROR: Attempting to operate on hdfs namenode as root

ERROR: but there is no HDFS_NAMENODE_USER defined. Aborting operation.

Stopping datanodes

ERROR: Attempting to operate on hdfs datanode as root

ERROR: but there is no HDFS_DATANODE_USER defined. Aborting operation.

Stopping secondary namenodes [zhiyong-hive-on-flink1]

ERROR: Attempting to operate on hdfs secondarynamenode as root

ERROR: but there is no HDFS_SECONDARYNAMENODE_USER defined. Aborting operation.

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5#

So stop-dfs.sh needs the same settings:

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# vim ./sbin/stop-dfs.sh

HDFS_DATANODE_USER=root

HADOOP_SECURE_DN_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# ./sbin/stop-dfs.sh

WARNING: HADOOP_SECURE_DN_USER has been replaced by HDFS_DATANODE_SECURE_USER. Using value of HADOOP_SECURE_DN_USER.

Stopping namenodes on [192.168.88.24]

Stopping datanodes

Stopping secondary namenodes [zhiyong-hive-on-flink1]

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# jps

6507 Jps

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5#

Only then does the command work.
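Rather than patching start-dfs.sh and stop-dfs.sh separately, the same variables can be defined once in etc/hadoop/hadoop-env.sh, which the Hadoop scripts source. A minimal sketch, with ensure_line and set_hdfs_users as hypothetical helper names and the file path passed in by the caller:

```shell
#!/bin/sh
# Append a line to a file only if that exact line is not already present,
# so the helper can be run repeatedly without duplicating entries.
ensure_line() {
  file=$1
  line=$2
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Define the user for each HDFS daemon in one place.
set_hdfs_users() {
  env_file=$1
  ensure_line "$env_file" "export HDFS_NAMENODE_USER=root"
  ensure_line "$env_file" "export HDFS_DATANODE_USER=root"
  ensure_line "$env_file" "export HDFS_SECONDARYNAMENODE_USER=root"
}

# Example (not executed here):
# set_hdfs_users /export/server/hadoop-3.3.5/etc/hadoop/hadoop-env.sh
```

The sketch leaves out HADOOP_SECURE_DN_USER, which the warning in the log reports as replaced by HDFS_DATANODE_SECURE_USER and which, as far as I can tell, only matters for secure DataNodes.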

Then restart HDFS:

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# ./sbin/start-dfs.sh

WARNING: HADOOP_SECURE_DN_USER has been replaced by HDFS_DATANODE_SECURE_USER. Using value of HADOOP_SECURE_DN_USER.

Starting namenodes on [192.168.88.24]

Starting datanodes

Starting secondary namenodes [zhiyong-hive-on-flink1]

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# jps

6756 NameNode

7348 Jps

6921 DataNode

7194 SecondaryNameNode

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5#

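The Web UI is now reachable:

http://192.168.88.24:50070/

But it reports: Failed to retrieve data from /webhdfs/v1/?op=LISTSTATUS: Server Error

The cause is that the JDK is too new, which leaves a required package missing: the java.activation module was deprecated in JDK 9 and removed entirely in JDK 11, so JDK 17 certainly does not have it. I will make do for now.

Verification: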

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# vim /home/zhiyong/test1.txt

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# cat /home/zhiyong/test1.txt

用于验证文件是否发送成功 by:CSDN@虎鲸不是鱼

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# ./bin/hadoop fs -put /home/zhiyong/test1.txt hdfs://192.168.88.24:9000/test1

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# ./bin/hadoop fs -ls hdfs://192.168.88.24:9000/test1

Found 1 items

-rw-r--r-- 1 root supergroup 63 2023-05-14 19:11 hdfs://192.168.88.24:9000/test1/test1.txt

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# ./bin/hadoop fs -cat hdfs://192.168.88.24:9000/test1/test1.txt

用于验证文件是否发送成功 by:CSDN@虎鲸不是鱼

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5#

This shows that HDFS is usable, if only just, under JDK 17.

Deploy Hive

root@zhiyong-hive-on-flink1:~# sudo apt-get install mysql-server

root@zhiyong-hive-on-flink1:~# cd /etc/mysql/

root@zhiyong-hive-on-flink1:/etc/mysql# ll

总用量 40

drwxr-xr-x 4 root root 4096 5月 14 19:23 ./

drwxr-xr-x 132 root root 12288 5月 14 19:23 ../

drwxr-xr-x 2 root root 4096 2月 23 2022 conf.d/

-rw------- 1 root root 317 5月 14 19:23 debian.cnf

-rwxr-xr-x 1 root root 120 4月 21 22:17 debian-start*

lrwxrwxrwx 1 root root 24 5月 14 14:59 my.cnf -> /etc/alternatives/my.cnf

-rw-r--r-- 1 root root 839 8月 3 2016 my.cnf.fallback

-rw-r--r-- 1 root root 682 11月 16 04:42 mysql.cnf

drwxr-xr-x 2 root root 4096 5月 14 19:23 mysql.conf.d/

root@zhiyong-hive-on-flink1:/etc/mysql# cat debian.cnf

# Automatically generated for Debian scripts. DO NOT TOUCH!

[client]

host = localhost

user = debian-sys-maint

password = PnqdmcrBnP2vLCE8

socket = /var/run/mysqld/mysqld.sock

[mysql_upgrade]

host = localhost

user = debian-sys-maint

password = PnqdmcrBnP2vLCE8

socket = /var/run/mysqld/mysqld.sock

root@zhiyong-hive-on-flink1:/etc/mysql# mysql

mysql> ALTER USER root@localhost IDENTIFIED BY '123456';

Query OK, 0 rows affected (0.00 sec)

mysql> exit

Bye

root@zhiyong-hive-on-flink1:/etc/mysql# pwd

/etc/mysql

root@zhiyong-hive-on-flink1:/etc/mysql# ll

总用量 40

drwxr-xr-x 4 root root 4096 5月 14 19:23 ./

drwxr-xr-x 132 root root 12288 5月 14 19:23 ../

drwxr-xr-x 2 root root 4096 2月 23 2022 conf.d/

-rw------- 1 root root 317 5月 14 19:23 debian.cnf

-rwxr-xr-x 1 root root 120 4月 21 22:17 debian-start*

lrwxrwxrwx 1 root root 24 5月 14 14:59 my.cnf -> /etc/alternatives/my.cnf

-rw-r--r-- 1 root root 839 8月 3 2016 my.cnf.fallback

-rw-r--r-- 1 root root 682 11月 16 04:42 mysql.cnf

drwxr-xr-x 2 root root 4096 5月 14 19:23 mysql.conf.d/

root@zhiyong-hive-on-flink1:/etc/mysql# cd mysql.conf.d/

root@zhiyong-hive-on-flink1:/etc/mysql/mysql.conf.d# ll

总用量 16

drwxr-xr-x 2 root root 4096 5月 14 19:23 ./

drwxr-xr-x 4 root root 4096 5月 14 19:23 ../

-rw-r--r-- 1 root root 132 11月 16 04:42 mysql.cnf

-rw-r--r-- 1 root root 2220 11月 16 04:42 mysqld.cnf

root@zhiyong-hive-on-flink1:/etc/mysql/mysql.conf.d# vim mysqld.cnf

#bind-address = 127.0.0.1 # comment out this line to allow remote connections

root@zhiyong-hive-on-flink1:/etc/mysql/mysql.conf.d# mysql

create user 'root'@'%' identified by '123456';

grant all privileges on *.* to 'root'@'%' with grant option;

flush privileges;

After the grant, DataGrip can connect.

With MySQL ready as the metastore database, Hive can now be installed.

root@zhiyong-hive-on-flink1:/export/software# cp /home/zhiyong/apache-hive-3.1.3-bin.tar.gz /export/software/

root@zhiyong-hive-on-flink1:/export/software# ll

总用量 1186736

drwxrwxrwx 2 root root 4096 5月 14 19:58 ./

drwxrwxrwx 4 root root 4096 5月 14 15:55 ../

-rw-r--r-- 1 root root 326940667 5月 14 19:57 apache-hive-3.1.3-bin.tar.gz

-rw-r--r-- 1 root root 706533213 5月 14 17:23 hadoop-3.3.5.tar.gz

-rw-r--r-- 1 root root 181719178 5月 14 15:56 jdk-17_linux-x64_bin.tar.gz

root@zhiyong-hive-on-flink1:/export/software# tar -zxvf apache-hive-3.1.3-bin.tar.gz -C /export/server/

root@zhiyong-hive-on-flink1:/export/software# cd /export/server/

root@zhiyong-hive-on-flink1:/export/server# ll

总用量 20

drwxrwxrwx 5 root root 4096 5月 14 20:00 ./

drwxrwxrwx 4 root root 4096 5月 14 15:55 ../

drwxr-xr-x 10 root root 4096 5月 14 20:00 apache-hive-3.1.3-bin/

drwxr-xr-x 11 2002 2002 4096 5月 14 17:54 hadoop-3.3.5/

drwxr-xr-x 9 root root 4096 5月 14 15:57 jdk-17.0.7/

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/lib# cp /home/zhiyong/mysql-connector-java-8.0.28.jar /export/server/apache-hive-3.1.3-bin/lib/

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/lib# ll | grep mysql

-rw-r--r-- 1 root root 2476480 5月 14 20:07 mysql-connector-java-8.0.28.jar

-rw-r--r-- 1 root staff 10476 12月 20 2019 mysql-metadata-storage-0.12.0.jar

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/conf# pwd

/export/server/apache-hive-3.1.3-bin/conf

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/conf# cp ./hive-env.sh.template hive-env.sh

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/conf# vim hive-env.sh

Add:

HADOOP_HOME=/export/server/hadoop-3.3.5

export HIVE_CONF_DIR=/export/server/apache-hive-3.1.3-bin/conf

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/conf# vim /etc/profile

Append at the end:

export HIVE_HOME=/export/server/apache-hive-3.1.3-bin

export PATH=$PATH:$HIVE_HOME/bin

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/conf# source /etc/profile

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/conf# touch hive-site.xml

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/conf# chmod 666 hive-site.xml

Write the configuration in the Ubuntu GUI:

<configuration>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123456</value>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://192.168.88.24:3306/hivemetadata?createDatabaseIfNotExist=true&amp;useSSL=false</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.cj.jdbc.Driver</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

<property>

<name>datanucleus.schema.autoCreateAll</name>

<value>true</value>

</property>

<property>

<name>hive.server2.thrift.bind.host</name>

<value>192.168.88.24</value>

</property>

</configuration>
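A JDBC URL with several query parameters contains an ampersand, and inside an XML value that character has to be written as an entity or the file is not well-formed. A minimal sketch of escaping a value before pasting it into hive-site.xml; xml_escape is a made-up helper name.

```shell
#!/bin/sh
# Escape the characters that are special inside XML text content.
# The ampersand is handled first so that the entities produced by the
# later substitutions are not escaped a second time.
xml_escape() {
  printf '%s' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

xml_escape 'jdbc:mysql://192.168.88.24:3306/hivemetadata?createDatabaseIfNotExist=true&useSSL=false'
echo
```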

Create Hive's directories on HDFS:

/export/server/hadoop-3.3.5/bin/hadoop fs -mkdir -p /user/hive/warehouse

/export/server/hadoop-3.3.5/bin/hadoop fs -mkdir -p /tmp

/export/server/hadoop-3.3.5/bin/hadoop fs -chmod g+w /tmp

/export/server/hadoop-3.3.5/bin/hadoop fs -chmod g+w /user/hive/warehouse

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/conf# /export/server/hadoop-3.3.5/bin/hadoop fs -ls /

Found 3 items

drwxr-xr-x - root supergroup 0 2023-05-14 19:11 /test1

drwxrwxr-x - root supergroup 0 2023-05-14 20:27 /tmp

drwxr-xr-x - root supergroup 0 2023-05-14 20:26 /user

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/conf#

Next, initialize the Hive metastore schema:

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/bin# pwd

/export/server/apache-hive-3.1.3-bin/bin

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/bin# schematool -dbType mysql -initSchema

Initialization script completed

schemaTool completed

Then start Hive:

hive --service metastore > /dev/null 2>&1 &

hiveserver2 > /dev/null 2>&1 &
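Redirecting everything to /dev/null makes a failed start hard to diagnose. A minimal sketch of a helper that keeps a log and a PID file instead; start_bg and LOG_DIR are hypothetical names, not part of Hive.

```shell
#!/bin/sh
# Start a command in the background, keeping its output and its PID.
# LOG_DIR defaults to /tmp here purely for illustration.
start_bg() {
  name=$1
  shift
  log_dir=${LOG_DIR:-/tmp}
  nohup "$@" > "$log_dir/$name.log" 2>&1 &
  echo $! > "$log_dir/$name.pid"
}

# Example (not executed here):
# start_bg metastore   hive --service metastore
# start_bg hiveserver2 hiveserver2
# tail -n 50 /tmp/hiveserver2.log
```

With the PID file in place, a service can later be stopped with kill "$(cat /tmp/metastore.pid)" instead of looking up the process in jps.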

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/bin# jps

12513 RunJar

7698 NameNode

12694 RunJar

7302 SecondaryNameNode

7065 DataNode

12844 Jps

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/bin# beeline -u jdbc:hive2://localhost:10000/ -n root

This failed.

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/bin# hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/export/server/apache-hive-3.1.3-bin/lib/log4j-slf4j-impl-2.17.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/export/server/hadoop-3.3.5/share/hadoop/common/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive Session ID = 957f3413-ca47-43da-a6a4-c5bbf9597de5

Exception in thread "main" java.lang.ClassCastException: class jdk.internal.loader.ClassLoaders$AppClassLoader cannot be cast to class java.net.URLClassLoader (jdk.internal.loader.ClassLoaders$AppClassLoader and java.net.URLClassLoader are in module java.base of loader 'bootstrap')

at org.apache.hadoop.hive.ql.session.SessionState.<init>(SessionState.java:413)

at org.apache.hadoop.hive.ql.session.SessionState.<init>(SessionState.java:389)

at org.apache.hadoop.hive.cli.CliSessionState.<init>(CliSessionState.java:60)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:705)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:683)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:77)

at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base/java.lang.reflect.Method.invoke(Method.java:568)

at org.apache.hadoop.util.RunJar.run(RunJar.java:328)

at org.apache.hadoop.util.RunJar.main(RunJar.java:241)

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/bin# netstat -atunlp | grep 9083

tcp6 0 0 :::9083 :::* LISTEN 12513/java

But the MetaStore did start successfully!

Clearly this is another JDK issue. Hive seems to get along only with JDK 1.8.

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/bin# jps

12513 RunJar

7698 NameNode

12694 RunJar

7302 SecondaryNameNode

13334 Jps

7065 DataNode

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/bin# kill -9 12513

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/bin# kill -9 12694

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/bin# hive --service metastore > /dev/null 2>&1 &

[1] 13397

Deploy Flink

Reference: https://nightlies.apache.org/flink/flink-docs-release-1.17/docs/dev/table/sql-gateway/overview/

root@zhiyong-hive-on-flink1:/export/software# cp /home/zhiyong/flink-1.17.0-bin-scala_2.12.tgz /export/software/

root@zhiyong-hive-on-flink1:/export/software# tar -zxvf flink-1.17.0-bin-scala_2.12.tgz -C /export/server/

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0# ./bin/sql-gateway.sh start -Dsql-gateway.endpoint.type=hiveserver2

The start script produces no visible output after it runs.
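As far as I recall from the Flink 1.17 documentation for the HiveServer2 endpoint, the gateway is started with an additional option that points at the Hive configuration directory. The following sketch only assembles and prints such a command; the two paths are assumptions based on the directories used in this post, and gateway_start_cmd is a made-up helper name.

```shell
#!/bin/sh
# Build the SQL Gateway start command for the HiveServer2 endpoint.
# Both arguments are assumptions about the local directory layout.
gateway_start_cmd() {
  flink_home=$1
  hive_conf_dir=$2
  printf '%s %s %s %s\n' \
    "$flink_home/bin/sql-gateway.sh" start \
    "-Dsql-gateway.endpoint.type=hiveserver2" \
    "-Dsql-gateway.endpoint.hiveserver2.catalog.hive-conf-dir=$hive_conf_dir"
}

gateway_start_cmd /export/server/flink-1.17.0 /export/server/apache-hive-3.1.3-bin/conf
```

When the script seems to do nothing, the gateway's log file under Flink's log directory is the first place to look. The endpoint's Thrift port defaults to 10000 as far as I know, the same as a real HiveServer2, so the two cannot both listen on one host without changing a port.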

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/bin# sql-client.sh

Flink SQL> show databases;

+------------------+

| database name |

+------------------+

| default_database |

+------------------+

1 row in set

Flink SQL> select 1 as col1;

[ERROR] Could not execute SQL statement. Reason:

java.lang.reflect.InaccessibleObjectException: Unable to make field private static final int java.lang.Class.ANNOTATION accessible: module java.base does not "opens java.lang" to unnamed module @74582ff6

Flink SQL>

Clearly Flink 1.17 does not support JDK 17! Better to stick with JDK 11.
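A failure like this only shows up at query time, so a preflight check on the Java version can save a round trip. A minimal sketch, assuming Flink 1.17 is run on Java 8 or 11 only; java_major and flink_java_ok are hypothetical helper names.

```shell
#!/bin/sh
# Extract the major version from a Java version string.
# Handles both the legacy scheme (1.8.0_362) and the current one (17.0.7).
java_major() {
  v=$1
  case $v in
    1.*) v=${v#1.} ;;   # legacy scheme: 1.8.0_362 means Java 8
  esac
  echo "${v%%[!0-9]*}"  # keep only the leading run of digits
}

# Succeed only for the Java versions this setup is assumed to run on.
flink_java_ok() {
  m=$(java_major "$1")
  [ "$m" -eq 8 ] || [ "$m" -eq 11 ]
}

# Example (not executed here):
# ver=$(java -version 2>&1 | awk -F'"' '/version/ { print $2; exit }')
# flink_java_ok "$ver" || echo "unsupported Java version: $ver"
```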

Redeploy JDK 11

Since downloading Oracle's JDK 11 requires registration, I downloaded OpenJDK instead: http://jdk.java.net/archive/

root@zhiyong-hive-on-flink1:/export/software# ll

总用量 1645100

drwxrwxrwx 2 root root 4096 5月 14 20:50 ./

drwxrwxrwx 4 root root 4096 5月 14 15:55 ../

-rw-r--r-- 1 root root 326940667 5月 14 19:57 apache-hive-3.1.3-bin.tar.gz

-rw-r--r-- 1 root root 469363537 5月 14 20:50 flink-1.17.0-bin-scala_2.12.tgz

-rw-r--r-- 1 root root 706533213 5月 14 17:23 hadoop-3.3.5.tar.gz

-rw-r--r-- 1 root root 181719178 5月 14 15:56 jdk-17_linux-x64_bin.tar.gz

root@zhiyong-hive-on-flink1:/export/software# cp /home/zhiyong/openjdk-11_linux-x64_bin.tar.gz /export/software/

root@zhiyong-hive-on-flink1:/export/software# tar -zxvf openjdk-11_linux-x64_bin.tar.gz -C /export/server/

root@zhiyong-hive-on-flink1:/export/server/jdk-11# pwd

/export/server/jdk-11

root@zhiyong-hive-on-flink1:/export/server/jdk-11# vim /etc/profile

# change to: export JAVA_HOME=/export/server/jdk-11

root@zhiyong-hive-on-flink1:/export/server/jdk-11# source /etc/profile

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# pwd

/export/server/hadoop-3.3.5

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# vim ./etc/hadoop/hadoop-env.sh

# change to: export JAVA_HOME=/export/server/jdk-11
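JAVA_HOME is hard-coded in two files here (/etc/profile and hadoop-env.sh), so switching JDKs means editing both. A minimal sketch of doing that with one helper; set_java_home is a made-up name, and the sketch assumes the new path contains no '|' or '&' characters, which would confuse sed.

```shell
#!/bin/sh
# Point every active "export JAVA_HOME=..." line in a file at a new JDK.
# Commented-out lines are left alone because they do not start with "export".
# A temporary file is used instead of "sed -i" for portability.
set_java_home() {
  file=$1
  new_home=$2
  tmp=$(mktemp)
  sed "s|^export JAVA_HOME=.*|export JAVA_HOME=$new_home|" "$file" > "$tmp" &&
    cat "$tmp" > "$file"   # overwrite in place to keep the file's permissions
  rm -f "$tmp"
}

# Example (not executed here):
# set_java_home /etc/profile /export/server/jdk-11
# set_java_home /export/server/hadoop-3.3.5/etc/hadoop/hadoop-env.sh /export/server/jdk-11
```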

root@zhiyong-hive-on-flink1:/home/zhiyong# java -version

openjdk version "11" 2018-09-25

OpenJDK Runtime Environment 18.9 (build 11+28)

OpenJDK 64-Bit Server VM 18.9 (build 11+28, mixed mode)

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# ./sbin/start-dfs.sh

WARNING: HADOOP_SECURE_DN_USER has been replaced by HDFS_DATANODE_SECURE_USER. Using value of HADOOP_SECURE_DN_USER.

Starting namenodes on [192.168.88.24]

Starting datanodes

Starting secondary namenodes [192.168.88.24]

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# ./bin/hdfs namenode -format

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# ./sbin/start-dfs.sh

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# ./bin/hadoop fs -put /home/zhiyong/test1.txt hdfs://192.168.88.24:9000/test1

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# ./bin/hadoop fs -ls hdfs://192.168.88.24:9000/test1

-rw-r--r-- 1 root supergroup 63 2023-05-14 22:51 hdfs://192.168.88.24:9000/test1

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# ./bin/hadoop fs -cat hdfs://192.168.88.24:9000/test1

用于验证文件是否发送成功 by:CSDN@虎鲸不是鱼

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# ./bin/hadoop fs -mkdir -p /user/hive/warehouse

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# ./bin/hadoop fs -mkdir -p /tmp

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# ./bin/hadoop fs -chmod g+w /tmp

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# ./bin/hadoop fs -chmod g+w /user/hive/warehouse

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5# ./bin/hadoop fs -ls /

Found 3 items

-rw-r--r-- 1 root supergroup 63 2023-05-14 22:51 /test1

drwxrwxr-x - root supergroup 0 2023-05-14 22:55 /tmp

drwxr-xr-x - root supergroup 0 2023-05-14 22:55 /user

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/bin# hive --service metastore > /dev/null 2>&1 &

[1] 4797

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/bin# hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/export/server/apache-hive-3.1.3-bin/lib/log4j-slf4j-impl-2.17.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/export/server/hadoop-3.3.5/share/hadoop/common/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Hive Session ID = e5d7b83d-9cf6-4cde-a945-511b919da96a

Exception in thread "main" java.lang.ClassCastException: class jdk.internal.loader.ClassLoaders$AppClassLoader cannot be cast to class java.net.URLClassLoader (jdk.internal.loader.ClassLoaders$AppClassLoader and java.net.URLClassLoader are in module java.base of loader 'bootstrap')

at org.apache.hadoop.hive.ql.session.SessionState.<init>(SessionState.java:413)

at org.apache.hadoop.hive.ql.session.SessionState.<init>(SessionState.java:389)

at org.apache.hadoop.hive.cli.CliSessionState.<init>(CliSessionState.java:60)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:705)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:683)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at java.base/jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base/java.lang.reflect.Method.invoke(Method.java:566)

at org.apache.hadoop.util.RunJar.run(RunJar.java:328)

at org.apache.hadoop.util.RunJar.main(RunJar.java:241)

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/bin#

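Clearly Hive 3.1.3 still only runs on JDK 1.8 and does not get along with JDK 11 either; Hive is, after all, a rather old piece of software.

Continue deploying Flink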

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/lib# cp /home/zhiyong/flink-connector-hive_2.12-1.17.0.jar /export/server/flink-1.17.0/lib

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/lib# cp /export/server/apache-hive-3.1.3-bin/lib/hive-exec-3.1.3.jar /export/server/flink-1.17.0/lib

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/lib# cp /export/server/apache-hive-3.1.3-bin/lib/hive-metastore-3.1.3.jar /export/server/flink-1.17.0/lib

root@zhiyong-hive-on-flink1:/home/zhiyong# cp /home/zhiyong/antlr-runtime-3.5.2.jar /export/server/flink-1.17.0/lib

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/lib# ll

总用量 261608

drwxr-xr-x 2 root root 4096 5月 14 23:41 ./

drwxr-xr-x 10 root root 4096 3月 17 20:22 ../

-rw-r--r-- 1 root root 167761 5月 14 23:41 antlr-runtime-3.5.2.jar

-rw-r--r-- 1 root root 196487 3月 17 20:07 flink-cep-1.17.0.jar

-rw-r--r-- 1 root root 542616 3月 17 20:10 flink-connector-files-1.17.0.jar

-rw-r--r-- 1 root root 8876209 5月 14 23:26 flink-connector-hive_2.12-1.17.0.jar

-rw-r--r-- 1 root root 102468 3月 17 20:14 flink-csv-1.17.0.jar

-rw-r--r-- 1 root root 135969953 3月 17 20:22 flink-dist-1.17.0.jar

-rw-r--r-- 1 root root 180243 3月 17 20:13 flink-json-1.17.0.jar

-rw-r--r-- 1 root root 21043313 3月 17 20:20 flink-scala_2.12-1.17.0.jar

-rw-r--r-- 1 root root 15407474 3月 17 20:21 flink-table-api-java-uber-1.17.0.jar

-rw-r--r-- 1 root root 37975208 3月 17 20:15 flink-table-planner-loader-1.17.0.jar

-rw-r--r-- 1 root root 3146205 3月 17 20:07 flink-table-runtime-1.17.0.jar

-rw-r--r-- 1 root root 41873153 5月 14 23:29 hive-exec-3.1.3.jar

-rw-r--r-- 1 root root 36983 5月 14 23:29 hive-metastore-3.1.3.jar

-rw-r--r-- 1 root root 208006 3月 17 17:31 log4j-1.2-api-2.17.1.jar

-rw-r--r-- 1 root root 301872 3月 17 17:31 log4j-api-2.17.1.jar

-rw-r--r-- 1 root root 1790452 3月 17 17:31 log4j-core-2.17.1.jar

-rw-r--r-- 1 root root 24279 3月 17 17:31 log4j-slf4j-impl-2.17.1.jar

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/bin# pwd

/export/server/flink-1.17.0/bin

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/bin# start-cluster.sh

Starting cluster.

Starting standalonesession daemon on host zhiyong-hive-on-flink1.

Starting taskexecutor daemon on host zhiyong-hive-on-flink1.

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0# ./bin/flink run examples/streaming/WordCount.jar

Executing example with default input data.

Use --input to specify file input.

Printing result to stdout. Use --output to specify output path.

WARNING: An illegal reflective access operation has occurred

WARNING: Illegal reflective access by org.apache.flink.api.java.ClosureCleaner (file:/export/server/flink-1.17.0/lib/flink-dist-1.17.0.jar) to field java.lang.String.value

WARNING: Please consider reporting this to the maintainers of org.apache.flink.api.java.ClosureCleaner

WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access operations

WARNING: All illegal access operations will be denied in a future release

Job has been submitted with JobID 2149aad493c0ab55386d31d1c1663be2

Program execution finished

Job with JobID 2149aad493c0ab55386d31d1c1663be2 has finished.

Job Runtime: 1014 ms

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0# tail log/flink-*-taskexecutor-*.out

(nymph,1)

(in,3)

(thy,1)

(orisons,1)

(be,4)

(all,2)

(my,1)

(sins,1)

(remember,1)

(d,4)

This shows that Flink itself is working at this point.

Starting Flink's SQL Client

Reference: https://nightlies.apache.org/flink/flink-docs-release-1.17/docs/connectors/table/hive/hive_catalog/

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/bin# sql-client.sh

Flink SQL> CREATE CATALOG zhiyonghive WITH (

> 'type' = 'hive',

> 'hive-conf-dir' = '/export/server/apache-hive-3.1.3-bin/conf/'

> );

[ERROR] Could not execute SQL statement. Reason:

java.lang.ClassNotFoundException: org.apache.hadoop.conf.Configuration

root@zhiyong-hive-on-flink1:~# cp /home/zhiyong/hadoop-common-3.1.1.jar /export/server/flink-1.17.0/lib/

Flink SQL> CREATE CATALOG zhiyonghive WITH (

> 'type' = 'hive',

> 'hive-conf-dir' = '/export/server/apache-hive-3.1.3-bin/conf/'

> );

[ERROR] Could not execute SQL statement. Reason:

java.lang.ClassNotFoundException: com.ctc.wstx.io.InputBootstrapper

Now a different exception is thrown...

Use this GAV (Maven coordinates):

<dependency>

<groupId>com.fasterxml.woodstox</groupId>

<artifactId>woodstox-core</artifactId>

<version>5.0.3</version>

</dependency>

Download the JAR and put it into lib...

root@zhiyong-hive-on-flink1:~# cp /home/zhiyong/woodstox-core-5.0.3.jar /export/server/flink-1.17.0/lib/

root@zhiyong-hive-on-flink1:~# cp /home/zhiyong/stax2-api-3.1.4.jar /export/server/flink-1.17.0/lib/

Flink SQL> CREATE CATALOG zhiyonghive WITH (

> 'type' = 'hive',

> 'hive-conf-dir' = '/export/server/apache-hive-3.1.3-bin/conf/'

> );

[ERROR] Could not execute SQL statement. Reason:

java.lang.ClassNotFoundException: org.apache.commons.logging.LogFactory

root@zhiyong-hive-on-flink1:~# cp /home/zhiyong/commons-logging-1.2.jar /export/server/flink-1.17.0/lib/

[ERROR] Could not execute SQL statement. Reason:

java.lang.ClassNotFoundException: org.apache.hadoop.mapred.JobConf

root@zhiyong-hive-on-flink1:~# cp /home/zhiyong/hadoop-mapreduce-client-core-3.1.1.jar /export/server/flink-1.17.0/lib/

[ERROR] Could not execute SQL statement. Reason:

java.lang.ClassNotFoundException: org.apache.commons.configuration2.Configuration

root@zhiyong-hive-on-flink1:~# cp /home/zhiyong/commons-configuration2-2.1.1.jar /export/server/flink-1.17.0/lib/

[ERROR] Could not execute SQL statement. Reason:

java.lang.ClassNotFoundException: org.apache.hadoop.util.PlatformName

root@zhiyong-hive-on-flink1:~# cp /home/zhiyong/hadoop-auth-3.1.0.jar /export/server/flink-1.17.0/lib/

[ERROR] Could not execute SQL statement. Reason:

java.lang.ClassNotFoundException: org.apache.htrace.core.Tracer$Builder

root@zhiyong-hive-on-flink1:~# cp /home/zhiyong/htrace-core4-4.1.0-incubating.jar /export/server/flink-1.17.0/lib/

[ERROR] Could not execute SQL statement. Reason:

java.lang.IllegalArgumentException: Embedded metastore is not allowed. Make sure you have set a valid value for hive.metastore.uris

Hive's hive-site.xml configuration file also needs to be modified:

<property>

<name>hive.metastore.uris</name>

<value>thrift://192.168.88.24:9083</value>

</property>

Then:

root@zhiyong-hive-on-flink1:/export/server/jdk-11/bin# cd /export/server/apache-hive-3.1.3-bin/bin

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/bin# hive --service metastore > /dev/null 2>&1 &

[1] 10835

[ERROR] Could not execute SQL statement. Reason:

java.lang.ClassNotFoundException: com.facebook.fb303.FacebookService$Iface

root@zhiyong-hive-on-flink1:~# cp /home/zhiyong/libfb303-0.9.3.jar /export/server/flink-1.17.0/lib/

[ERROR] Could not execute SQL statement. Reason:

java.net.ConnectException: 拒绝连接 (Connection refused)

Evidently Hive's MetaStore has gone down again:

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/bin# ./hive --service metastore

Caused by: com.mysql.cj.exceptions.UnableToConnectException: Public Key Retrieval is not allowed

at jdk.internal.reflect.GeneratedConstructorAccessor79.newInstance(Unknown Source)

at java.base/jdk.internal.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.base/java.lang.reflect.Constructor.newInstance(Constructor.java:490)

at com.mysql.cj.exceptions.ExceptionFactory.createException(ExceptionFactory.java:61)

at com.mysql.cj.exceptions.ExceptionFactory.createException(ExceptionFactory.java:85)

at com.mysql.cj.protocol.a.authentication.CachingSha2PasswordPlugin.nextAuthenticationStep(CachingSha2PasswordPlugin.java:130)

at com.mysql.cj.protocol.a.authentication.CachingSha2PasswordPlugin.nextAuthenticationStep(CachingSha2PasswordPlugin.java:49)

at com.mysql.cj.protocol.a.NativeAuthenticationProvider.proceedHandshakeWithPluggableAuthentication(NativeAuthenticationProvider.java:445)

at com.mysql.cj.protocol.a.NativeAuthenticationProvider.connect(NativeAuthenticationProvider.java:211)

at com.mysql.cj.protocol.a.NativeProtocol.connect(NativeProtocol.java:1369)

at com.mysql.cj.NativeSession.connect(NativeSession.java:133)

at com.mysql.cj.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:949)

at com.mysql.cj.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:819)

... 74 more

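This is the downside of using a newer MySQL version!!! The stack trace points at the caching_sha2_password authentication plugin, which is the default in MySQL 8; the JDBC driver refuses to fetch the server's public key unless allowPublicKeyRetrieval=true is set.

Modify Hive's configuration file: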

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://192.168.88.24:3306/hivemetadata?createDatabaseIfNotExist=true&allowPublicKeyRetrieval=true&useSSL=false&serviceTimezone=UTC</value>

</property>

It still fails:

Caused by: com.ctc.wstx.exc.WstxUnexpectedCharException: Unexpected character '=' (code 61); expected a semi-colon after the reference for entity 'useSSL'

at [row,col,system-id]: [12,124,"file:/export/server/apache-hive-3.1.3-bin/conf/hive-site.xml"]

at com.ctc.wstx.sr.StreamScanner.throwUnexpectedChar(StreamScanner.java:666)

at com.ctc.wstx.sr.StreamScanner.parseEntityName(StreamScanner.java:2080)

at com.ctc.wstx.sr.StreamScanner.fullyResolveEntity(StreamScanner.java:1538)

at com.ctc.wstx.sr.BasicStreamReader.readTextSecondary(BasicStreamReader.java:4765)

at com.ctc.wstx.sr.BasicStreamReader.finishToken(BasicStreamReader.java:3789)

at com.ctc.wstx.sr.BasicStreamReader.safeFinishToken(BasicStreamReader.java:3743)

... 17 more

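In XML a bare & begins an entity reference, so every & in the URL must be written as &amp;. Change it to:

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://192.168.88.24:3306/hivemetadata?createDatabaseIfNotExist=true&amp;allowPublicKeyRetrieval=true&amp;useSSL=false&amp;serviceTimezone=UTC</value>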

</property>
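The parser error above arises because a bare `&` starts an entity reference in XML, so each `&` in the JDBC URL has to appear as `&amp;` inside hive-site.xml. As a small illustration (the function name `xml_escape_amp` is hypothetical), the escaping can be done with sed before pasting the URL into the file:

```shell
#!/usr/bin/env bash
# Hypothetical helper: escape every '&' as '&amp;' so a URL can be placed
# inside an XML <value> element. Only '&' is handled; '<' and '>' are not
# expected in a JDBC URL. Do not run it on text that is already escaped,
# or '&amp;' would become '&amp;amp;'.
xml_escape_amp() {
  printf '%s\n' "$1" | sed 's/&/\&amp;/g'
}

# Usage (not executed here):
#   xml_escape_amp 'jdbc:mysql://192.168.88.24:3306/hivemetadata?createDatabaseIfNotExist=true&useSSL=false'
```

In the sed replacement, `\&` is needed because an unescaped `&` there means "the matched text".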

Now Flink's SQL Client can create the catalog successfully:

Flink SQL> CREATE CATALOG zhiyonghive WITH (

> 'type' = 'hive',

> 'hive-conf-dir' = '/export/server/apache-hive-3.1.3-bin/conf/'

> );

[INFO] Execute statement succeed.

Keep Hive's MetaStore running in the background:

root@zhiyong-hive-on-flink1:/export/server/apache-hive-3.1.3-bin/bin# hive --service metastore > /dev/null 2>&1 &

[1] 12138
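Because the metastore is started in the background with its output discarded, a failed start (as happened earlier) only surfaces later as a Connection refused error in the SQL Client. A hedged sketch of a readiness check follows. The function name `wait_for_port` is invented here; it relies on bash's `/dev/tcp` pseudo-device and the coreutils `timeout` command, and 9083 is the port from hive.metastore.uris above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll host:port until a TCP connection succeeds,
# giving up after the given number of attempts (roughly one per second).
wait_for_port() {
  local host="$1" port="$2" attempts="${3:-30}" i
  for ((i = 0; i < attempts; i++)); do
    # /dev/tcp/<host>/<port> is a bash feature: the redirect fails when the
    # connection is refused. timeout bounds a connect that would hang.
    if timeout 1 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}

# Usage (not executed here):
#   wait_for_port 192.168.88.24 9083 30 && echo "metastore is reachable"
```

A successful TCP connect only shows that something is listening on the port, not that the metastore is healthy, so it is a coarse check.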

Flink SQL> use catalog zhiyonghive;

[INFO] Execute statement succeed.

Flink SQL> show databases;

+---------------+

| database name |

+---------------+

| default |

+---------------+

1 row in set

Flink SQL> create database if not exists zhiyong_flink_db;

[INFO] Execute statement succeed.

Flink SQL> show databases;

+------------------+

| database name |

+------------------+

| default |

| zhiyong_flink_db |

+------------------+

2 rows in set

Connect to MySQL to inspect the metadata:

select * from hivemetadata.DBS;

Clearly Flink's Hive integration is working at this point!!!

Flink SQL> CREATE TABLE test1 (id int,name string)

> with (

> 'connector'='hive',

> 'is_generic' = 'false'

> )

> ;

[INFO] Execute statement succeed.

The metadata can be queried:

select * from hivemetadata.TBLS;

The metadata now contains one more Hive table [a managed (internal) table].

Flink SQL> insert into test1 values(1,'暴龙兽1');

WARNING: An illegal reflective access operation has occurred

WARNING: Illegal reflective access by org.apache.flink.api.java.ClosureCleaner (file:/export/server/flink-1.17.0/lib/flink-dist-1.17.0.jar) to field java.lang.Class.ANNOTATION

WARNING: Please consider reporting this to the maintainers of org.apache.flink.api.java.ClosureCleaner

WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access operations

WARNING: All illegal access operations will be denied in a future release

[INFO] Submitting SQL update statement to the cluster...

[INFO] SQL update statement has been successfully submitted to the cluster:

Job ID: e16b471d5f1628d552a3393426cbb0bb

Flink SQL> select * from test1;

[ERROR] Could not execute SQL statement. Reason:

org.apache.hadoop.fs.UnsupportedFileSystemException: No FileSystem for scheme "hdfs"

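So more JARs have to be copied. Quite a few ClassNotFoundExceptions came up along the way and were handled the same way: manually put the missing JAR into Flink's lib directory.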

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5/share/hadoop/hdfs# pwd

/export/server/hadoop-3.3.5/share/hadoop/hdfs

root@zhiyong-hive-on-flink1:/export/server/hadoop-3.3.5/share/hadoop/hdfs# cp ./*.jar /export/server/flink-1.17.0/lib/
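Each ClassNotFoundException above was resolved by locating a JAR that contains the missing class. One way that search might be scripted is sketched below. The names `class_to_entry` and `find_jar_for_class` are made up for illustration, and the approach assumes `unzip` is installed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn a fully qualified class name into the entry
# name used inside a JAR, e.g. org.apache.hadoop.mapred.JobConf
# -> org/apache/hadoop/mapred/JobConf.class
class_to_entry() {
  printf '%s.class\n' "${1//.//}"
}

# Hypothetical helper: print every JAR below a directory that contains the class.
find_jar_for_class() {
  local class="$1" dir="$2" entry jar
  entry="$(class_to_entry "$class")"
  while IFS= read -r -d '' jar; do
    # unzip -l lists the archive entries; grep -F matches the entry literally.
    if unzip -l "$jar" 2>/dev/null | grep -qF "$entry"; then
      echo "$jar"
    fi
  done < <(find "$dir" -name '*.jar' -print0)
}

# Usage (not executed here; single quotes keep '$' in inner-class names literal):
#   find_jar_for_class 'org.apache.htrace.core.Tracer$Builder' /export/server/hadoop-3.3.5/share/hadoop
```

Copying only the JAR that the search reports, rather than a whole directory, also makes it less likely that two versions of the same Hadoop library end up side by side in lib.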

Flink SQL> select * from zhiyong_flink_db.test1;

[ERROR] Could not execute SQL statement. Reason:

java.lang.NoSuchMethodError: org.apache.hadoop.fs.FsTracer.get(Lorg/apache/hadoop/conf/Configuration;)Lorg/apache/hadoop/tracing/Tracer;

Flink SQL> set table.sql-dialect=hive;

[INFO] Execute statement succeed.

Flink SQL> select * from zhiyong_flink_db.test1;

WARNING: An illegal reflective access operation has occurred

WARNING: Illegal reflective access by org.apache.flink.util.ExceptionUtils (file:/export/server/flink-1.17.0/lib/flink-dist-1.17.0.jar) to field java.lang.Throwable.detailMessage

WARNING: Please consider reporting this to the maintainers of org.apache.flink.util.ExceptionUtils

WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access operations

WARNING: All illegal access operations will be denied in a future release

[ERROR] Could not execute SQL statement. Reason:

org.apache.flink.table.api.ValidationException: Could not find any factory for identifier 'hive' that implements 'org.apache.flink.table.planner.delegation.DialectFactory' in the classpath.

Available factory identifiers are:

Note: if you want to use Hive dialect, please first move the jar `flink-table-planner_2.12` located in `FLINK_HOME/opt` to `FLINK_HOME/lib` and then move out the jar `flink-table-planner-loader` from `FLINK_HOME/lib`.

Pitfalls everywhere!!!

Starting Flink's SQL Gateway

See the official documentation: https://nightlies.apache.org/flink/flink-docs-release-1.17/zh/docs/dev/table/sql-gateway/overview/

The contents of the startup script:

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/bin# cat sql-gateway.sh

#!/usr/bin/env bash

function usage() {

echo "Usage: sql-gateway.sh [start|start-foreground|stop|stop-all] [args]"

echo " commands:"

echo " start - Run a SQL Gateway as a daemon"

echo " start-foreground - Run a SQL Gateway as a console application"

echo " stop - Stop the SQL Gateway daemon"

echo " stop-all - Stop all the SQL Gateway daemons"

echo " -h | --help - Show this help message"

}

################################################################################

# Adopted from "flink" bash script

################################################################################

target="$0"

# For the case, the executable has been directly symlinked, figure out

# the correct bin path by following its symlink up to an upper bound.

# Note: we can't use the readlink utility here if we want to be POSIX

# compatible.

iteration=0

while [ -L "$target" ]; do

if [ "$iteration" -gt 100 ]; then

echo "Cannot resolve path: You have a cyclic symlink in $target."

break

fi

ls=`ls -ld -- "$target"`

target=`expr "$ls" : '.* -> \(.*\)$'`

iteration=$((iteration + 1))

done

# Convert relative path to absolute path

bin=`dirname "$target"`

# get flink config

. "$bin"/config.sh

if [ "$FLINK_IDENT_STRING" = "" ]; then

FLINK_IDENT_STRING="$USER"

fi

################################################################################

# SQL gateway specific logic

################################################################################

ENTRYPOINT=sql-gateway

if [[ "$1" = *--help ]] || [[ "$1" = *-h ]]; then

usage

exit 0

fi

STARTSTOP=$1

if [ -z "$STARTSTOP" ]; then

STARTSTOP="start"

fi

if [[ $STARTSTOP != "start" ]] && [[ $STARTSTOP != "start-foreground" ]] && [[ $STARTSTOP != "stop" ]] && [[ $STARTSTOP != "stop-all" ]]; then

usage

exit 1

fi

# ./sql-gateway.sh start --help, print the message to the console

if [[ "$STARTSTOP" = start* ]] && ( [[ "$*" = *--help* ]] || [[ "$*" = *-h* ]] ); then

FLINK_TM_CLASSPATH=`constructFlinkClassPath`

SQL_GATEWAY_CLASSPATH=`findSqlGatewayJar`

"$JAVA_RUN" -classpath "`manglePathList "$FLINK_TM_CLASSPATH:$SQL_GATEWAY_CLASSPATH:$INTERNAL_HADOOP_CLASSPATHS"`" org.apache.flink.table.gateway.SqlGateway "${@:2}"

exit 0

fi

if [[ $STARTSTOP == "start-foreground" ]]; then

exec "${FLINK_BIN_DIR}"/flink-console.sh $ENTRYPOINT "${@:2}"

else

"${FLINK_BIN_DIR}"/flink-daemon.sh $STARTSTOP $ENTRYPOINT "${@:2}"

fi

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/bin#

To avoid a long list of parameters, hard-code them in Flink's configuration file:

root@zhiyong-hive-on-flink1:/export/server# vim ./flink-1.17.0/conf/flink-conf.yaml

# Add two key-value pairs

sql-gateway.endpoint.type: hiveserver2

sql-gateway.endpoint.hiveserver2.catalog.hive-conf-dir: /export/server/apache-hive-3.1.3-bin/conf
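The same two entries could also be written from a script instead of editing the file in vim. This is a minimal sketch under the assumption that flink-conf.yaml consists of flat `key: value` lines; the function name `set_flink_conf` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set "key: value" in a flat YAML file. Any existing
# line that starts with "key:" is dropped, then the new line is appended,
# so running it twice does not create duplicates.
set_flink_conf() {
  local file="$1" key="$2" value="$3" tmp
  tmp="$(mktemp)"
  # index($0, k) == 1 means the line starts with "key:"; keep all other lines.
  awk -v k="$key:" 'index($0, k) != 1' "$file" > "$tmp"
  printf '%s: %s\n' "$key" "$value" >> "$tmp"
  cat "$tmp" > "$file"   # overwrite in place, keeping the file's permissions
  rm -f "$tmp"
}

# Usage (not executed here):
#   conf=/export/server/flink-1.17.0/conf/flink-conf.yaml
#   set_flink_conf "$conf" sql-gateway.endpoint.type hiveserver2
#   set_flink_conf "$conf" sql-gateway.endpoint.hiveserver2.catalog.hive-conf-dir /export/server/apache-hive-3.1.3-bin/conf
```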

This cuts down the parameters needed on the command line. Try starting it:

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/bin# pwd

/export/server/flink-1.17.0/bin

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/bin# ./sql-gateway.sh start -Dsql-gateway.endpoint.type=hiveserver2

Starting sql-gateway daemon on host zhiyong-hive-on-flink1.

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/bin# jps

2496 RunJar

3794 TaskManagerRunner

4276 Jps

3499 StandaloneSessionClusterEntrypoint

4237 SqlGateway

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/bin# ./sql-gateway.sh stop

Stopping sql-gateway daemon (pid: 4237) on host zhiyong-hive-on-flink1.

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/bin# ./sql-gateway.sh start-foreground \

> -Dsql-gateway.session.check-interval=10min \

> -Dsql-gateway.endpoint.type=hiveserver2 \

> -Dsql-gateway.endpoint.hiveserver2.catalog.hive-conf-dir=/export/server/apache-hive-3.1.3-bin/conf \

> -Dsql-gateway.endpoint.hiveserver2.catalog.default-database=zhiyong_flink_db \

> -Dsql-gateway.endpoint.hiveserver2.catalog.name=hive

01:39:22.132 [hiveserver2-endpoint-thread-pool-thread-1] ERROR org.apache.thrift.server.TThreadPoolServer - Thrift error occurred during processing of message.

org.apache.thrift.protocol.TProtocolException: Missing version in readMessageBegin, old client?

at org.apache.thrift.protocol.TBinaryProtocol.readMessageBegin(TBinaryProtocol.java:228) ~[hive-exec-3.1.3.jar:3.1.3]

at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:27) ~[hive-exec-3.1.3.jar:3.1.3]

at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:286) [hive-exec-3.1.3.jar:3.1.3]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128) [?:?]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628) [?:?]

at java.lang.Thread.run(Thread.java:834) [?:?]

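The process appears to have started, but the gateway cannot be accessed.

The problems all seem to centre on Hive.

Redeploying JDK 1.8

So JDK 1.8 has to be redeployed. The procedure is not repeated here.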

Restarting Flink's SQL Gateway

sql-gateway.sh start-foreground \

-Dsql-gateway.session.check-interval=10min \

-Dsql-gateway.endpoint.type=hiveserver2 \

-Dsql-gateway.endpoint.hiveserver2.catalog.hive-conf-dir=/export/server/apache-hive-3.1.3-bin/conf \

-Dsql-gateway.endpoint.hiveserver2.catalog.default-database=zhiyong_flink_db \

-Dsql-gateway.endpoint.hiveserver2.catalog.name=hive \

-Dsql-gateway.endpoint.hiveserver2.module.name=hive

However:

02:44:47.970 [hiveserver2-endpoint-thread-pool-thread-1] ERROR org.apache.flink.table.endpoint.hive.HiveServer2Endpoint - Failed to GetInfo.

java.lang.UnsupportedOperationException: Unrecognized TGetInfoType value: CLI_ODBC_KEYWORDS.

at org.apache.flink.table.endpoint.hive.HiveServer2Endpoint.GetInfo(HiveServer2Endpoint.java:379) [flink-connector-hive_2.12-1.17.0.jar:1.17.0]

at org.apache.hive.service.rpc.thrift.TCLIService$Processor$GetInfo.getResult(TCLIService.java:1537) [hive-exec-3.1.3.jar:3.1.3]

at org.apache.hive.service.rpc.thrift.TCLIService$Processor$GetInfo.getResult(TCLIService.java:1522) [hive-exec-3.1.3.jar:3.1.3]

at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39) [hive-exec-3.1.3.jar:3.1.3]

at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:39) [hive-exec-3.1.3.jar:3.1.3]

at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:286) [hive-exec-3.1.3.jar:3.1.3]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_202]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_202]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_202]

02:45:22.027 [sql-gateway-operation-pool-thread-1] ERROR org.apache.flink.table.gateway.service.operation.OperationManager - Failed to execute the operation c6cf01d3-afe5-4da0-8619-1948c8353c1d.

org.apache.flink.table.api.ValidationException: Could not find any factory for identifier 'hive' that implements 'org.apache.flink.table.planner.delegation.DialectFactory' in the classpath.

Available factory identifiers are:

Note: if you want to use Hive dialect, please first move the jar `flink-table-planner_2.12` located in `FLINK_HOME/opt` to `FLINK_HOME/lib` and then move out the jar `flink-table-planner-loader` from `FLINK_HOME/lib`.

at org.apache.flink.table.factories.FactoryUtil.discoverFactory(FactoryUtil.java:546) ~[flink-table-api-java-uber-1.17.0.jar:1.17.0]

at org.apache.flink.table.planner.delegation.PlannerBase.getDialectFactory(PlannerBase.scala:161) ~[?:?]

at org.apache.flink.table.planner.delegation.PlannerBase.getParser(PlannerBase.scala:171) ~[?:?]

at org.apache.flink.table.api.internal.TableEnvironmentImpl.getParser(TableEnvironmentImpl.java:1764) ~[flink-table-api-java-uber-1.17.0.jar:1.17.0]

at org.apache.flink.table.api.internal.TableEnvironmentImpl.<init>(TableEnvironmentImpl.java:240) ~[flink-table-api-java-uber-1.17.0.jar:1.17.0]

at org.apache.flink.table.api.bridge.internal.AbstractStreamTableEnvironmentImpl.<init>(AbstractStreamTableEnvironmentImpl.java:89) ~[flink-table-api-java-uber-1.17.0.jar:1.17.0]

at org.apache.flink.table.api.bridge.java.internal.StreamTableEnvironmentImpl.<init>(StreamTableEnvironmentImpl.java:84) ~[flink-table-api-java-uber-1.17.0.jar:1.17.0]

at org.apache.flink.table.gateway.service.operation.OperationExecutor.createStreamTableEnvironment(OperationExecutor.java:393) ~[flink-sql-gateway-1.17.0.jar:1.17.0]

at org.apache.flink.table.gateway.service.operation.OperationExecutor.getTableEnvironment(OperationExecutor.java:332) ~[flink-sql-gateway-1.17.0.jar:1.17.0]

at org.apache.flink.table.gateway.service.operation.OperationExecutor.executeStatement(OperationExecutor.java:190) ~[flink-sql-gateway-1.17.0.jar:1.17.0]

at org.apache.flink.table.gateway.service.SqlGatewayServiceImpl.lambda$executeStatement$1(SqlGatewayServiceImpl.java:212) ~[flink-sql-gateway-1.17.0.jar:1.17.0]

at org.apache.flink.table.gateway.service.operation.OperationManager.lambda$submitOperation$1(OperationManager.java:119) ~[flink-sql-gateway-1.17.0.jar:1.17.0]

at org.apache.flink.table.gateway.service.operation.OperationManager$Operation.lambda$run$0(OperationManager.java:258) ~[flink-sql-gateway-1.17.0.jar:1.17.0]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [?:1.8.0_202]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) [?:1.8.0_202]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [?:1.8.0_202]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) [?:1.8.0_202]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_202]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_202]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_202]

02:47:25.096 [sql-gateway-operation-pool-thread-2] ERROR org.apache.flink.table.gateway.service.operation.OperationManager - Failed to execute the operation 35155187-741f-4ea2-b6df-ee5f5b0f2dc8.

org.apache.flink.table.api.ValidationException: Could not find any factory for identifier 'hive' that implements 'org.apache.flink.table.planner.delegation.DialectFactory' in the classpath.

beeline is able to connect:

root@zhiyong-hive-on-flink1:/home/zhiyong# beeline

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/export/server/apache-hive-3.1.3-bin/lib/log4j-slf4j-impl-2.17.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/export/server/hadoop-3.3.5/share/hadoop/common/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Beeline version 3.1.3 by Apache Hive

beeline> !connect jdbc:hive2://192.168.88.24:10000/zhiyong_flink_db;auth=noSasl

Connecting to jdbc:hive2://192.168.88.24:10000/zhiyong_flink_db;auth=noSasl

Enter username for jdbc:hive2://192.168.88.24:10000/zhiyong_flink_db: root

Enter password for jdbc:hive2://192.168.88.24:10000/zhiyong_flink_db: ******

Connected to: Apache Flink (version 1.17)

Driver: Hive JDBC (version 3.1.3)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://192.168.88.24:10000/zhiyong_f> show databases;

Error: org.apache.flink.table.gateway.service.utils.SqlExecutionException: Failed to execute the operation c6cf01d3-afe5-4da0-8619-1948c8353c1d.

at org.apache.flink.table.gateway.service.operation.OperationManager$Operation.processThrowable(OperationManager.java:414)

at org.apache.flink.table.gateway.service.operation.OperationManager$Operation.lambda$run$0(OperationManager.java:267)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: org.apache.flink.table.api.ValidationException: Could not find any factory for identifier 'hive' that implements 'org.apache.flink.table.planner.delegation.DialectFactory' in the classpath.

Available factory identifiers are:

Note: if you want to use Hive dialect, please first move the jar `flink-table-planner_2.12` located in `FLINK_HOME/opt` to `FLINK_HOME/lib` and then move out the jar `flink-table-planner-loader` from `FLINK_HOME/lib`.

at org.apache.flink.table.factories.FactoryUtil.discoverFactory(FactoryUtil.java:546)

at org.apache.flink.table.planner.delegation.PlannerBase.getDialectFactory(PlannerBase.scala:161)

at org.apache.flink.table.planner.delegation.PlannerBase.getParser(PlannerBase.scala:171)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.getParser(TableEnvironmentImpl.java:1764)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.<init>(TableEnvironmentImpl.java:240)

at org.apache.flink.table.api.bridge.internal.AbstractStreamTableEnvironmentImpl.<init>(AbstractStreamTableEnvironmentImpl.java:89)

at org.apache.flink.table.api.bridge.java.internal.StreamTableEnvironmentImpl.<init>(StreamTableEnvironmentImpl.java:84)

at org.apache.flink.table.gateway.service.operation.OperationExecutor.createStreamTableEnvironment(OperationExecutor.java:393)

at org.apache.flink.table.gateway.service.operation.OperationExecutor.getTableEnvironment(OperationExecutor.java:332)

at org.apache.flink.table.gateway.service.operation.OperationExecutor.executeStatement(OperationExecutor.java:190)

at org.apache.flink.table.gateway.service.SqlGatewayServiceImpl.lambda$executeStatement$1(SqlGatewayServiceImpl.java:212)

at org.apache.flink.table.gateway.service.operation.OperationManager.lambda$submitOperation$1(OperationManager.java:119)

at org.apache.flink.table.gateway.service.operation.OperationManager$Operation.lambda$run$0(OperationManager.java:258)

... 7 more (state=,code=0)

0: jdbc:hive2://192.168.88.24:10000/zhiyong_f> SET table.sql-dialect = default;

Error: org.apache.flink.table.gateway.service.utils.SqlExecutionException: Failed to execute the operation 35155187-741f-4ea2-b6df-ee5f5b0f2dc8.

at org.apache.flink.table.gateway.service.operation.OperationManager$Operation.processThrowable(OperationManager.java:414)

at org.apache.flink.table.gateway.service.operation.OperationManager$Operation.lambda$run$0(OperationManager.java:267)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: org.apache.flink.table.api.ValidationException: Could not find any factory for identifier 'hive' that implements 'org.apache.flink.table.planner.delegation.DialectFactory' in the classpath.

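But even switching back to the default dialect fails with the same error!

According to the official documentation: https://nightlies.apache.org/flink/flink-docs-release-1.17/zh/docs/dev/table/hive-compatibility/hive-dialect/overview/

So follow those instructions: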
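For repeated checks against the gateway, the interactive login above can be replaced by a one-shot beeline call. This is a sketch only: `gateway_jdbc_url` is a made-up helper, while `-u`, `-n` and `-e` are beeline's options for the JDBC URL, the user name and the query to run.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the JDBC URL for the HiveServer2 endpoint of the
# SQL Gateway. auth=noSasl matches the connection string used above.
gateway_jdbc_url() {
  local host="$1" port="$2" db="$3"
  printf 'jdbc:hive2://%s:%s/%s;auth=noSasl\n' "$host" "$port" "$db"
}

# Usage (not executed here; needs beeline on PATH and a running gateway):
#   beeline -u "$(gateway_jdbc_url 192.168.88.24 10000 zhiyong_flink_db)" -n root -e 'show databases;'
```

Quoting the URL matters because the `;` before `auth=noSasl` would otherwise end the shell command.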

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/opt# pwd

/export/server/flink-1.17.0/opt

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/opt# ll

总用量 277744

drwxr-xr-x 3 root root 4096 3月 17 20:22 ./

drwxr-xr-x 10 root root 4096 3月 17 20:22 ../

-rw-r--r-- 1 root root 28040881 3月 17 20:18 flink-azure-fs-hadoop-1.17.0.jar

-rw-r--r-- 1 root root 48461 3月 17 20:21 flink-cep-scala_2.12-1.17.0.jar

-rw-r--r-- 1 root root 46756459 3月 17 20:18 flink-gs-fs-hadoop-1.17.0.jar

-rw-r--r-- 1 root root 26300214 3月 17 20:17 flink-oss-fs-hadoop-1.17.0.jar

-rw-r--r-- 1 root root 32998666 3月 17 20:16 flink-python-1.17.0.jar

-rw-r--r-- 1 root root 20400 3月 17 20:20 flink-queryable-state-runtime-1.17.0.jar

-rw-r--r-- 1 root root 30938059 3月 17 20:17 flink-s3-fs-hadoop-1.17.0.jar

-rw-r--r-- 1 root root 96609524 3月 17 20:17 flink-s3-fs-presto-1.17.0.jar

-rw-r--r-- 1 root root 233709 3月 17 17:37 flink-shaded-netty-tcnative-dynamic-2.0.54.Final-16.1.jar

-rw-r--r-- 1 root root 952711 3月 17 20:16 flink-sql-client-1.17.0.jar

-rw-r--r-- 1 root root 210103 3月 17 20:14 flink-sql-gateway-1.17.0.jar

-rw-r--r-- 1 root root 191815 3月 17 20:21 flink-state-processor-api-1.17.0.jar

-rw-r--r-- 1 root root 21072371 3月 17 20:13 flink-table-planner_2.12-1.17.0.jar

drwxr-xr-x 2 root root 4096 3月 17 20:16 python/

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/opt# cp flink-table-planner_2.12-1.17.0.jar /export/server/flink-1.17.0/lib/

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/opt# cd /export/server/flink-1.17.0/lib/

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/lib# ll

总用量 321488

drwxr-xr-x 2 root root 4096 5月 19 02:54 ./

drwxr-xr-x 10 root root 4096 3月 17 20:22 ../

-rw-r--r-- 1 root root 167761 5月 14 23:41 antlr-runtime-3.5.2.jar

-rw-r--r-- 1 root root 616888 5月 15 00:33 commons-configuration2-2.1.1.jar

-rw-r--r-- 1 root root 61829 5月 15 00:26 commons-logging-1.2.jar

-rw-r--r-- 1 root root 196487 3月 17 20:07 flink-cep-1.17.0.jar

-rw-r--r-- 1 root root 542616 3月 17 20:10 flink-connector-files-1.17.0.jar

-rw-r--r-- 1 root root 8876209 5月 14 23:26 flink-connector-hive_2.12-1.17.0.jar

-rw-r--r-- 1 root root 102468 3月 17 20:14 flink-csv-1.17.0.jar

-rw-r--r-- 1 root root 135969953 3月 17 20:22 flink-dist-1.17.0.jar

-rw-r--r-- 1 root root 180243 3月 17 20:13 flink-json-1.17.0.jar

-rw-r--r-- 1 root root 21043313 3月 17 20:20 flink-scala_2.12-1.17.0.jar

-rw-r--r-- 1 root root 15407474 3月 17 20:21 flink-table-api-java-uber-1.17.0.jar

-rw-r--r-- 1 root root 21072371 5月 19 02:54 flink-table-planner_2.12-1.17.0.jar

-rw-r--r-- 1 root root 37975208 3月 17 20:15 flink-table-planner-loader-1.17.0.jar

-rw-r--r-- 1 root root 3146205 3月 17 20:07 flink-table-runtime-1.17.0.jar

-rw-r--r-- 1 root root 138291 5月 15 00:35 hadoop-auth-3.1.0.jar

-rw-r--r-- 1 root root 4034318 5月 15 00:15 hadoop-common-3.1.1.jar

-rw-r--r-- 1 root root 4535144 5月 15 01:33 hadoop-common-3.3.5.jar

-rw-r--r-- 1 root root 3474147 5月 15 01:33 hadoop-common-3.3.5-tests.jar

-rw-r--r-- 1 root root 6296402 5月 15 01:29 hadoop-hdfs-3.3.5.jar

-rw-r--r-- 1 root root 6137497 5月 15 01:29 hadoop-hdfs-3.3.5-tests.jar

-rw-r--r-- 1 root root 5532342 5月 15 01:29 hadoop-hdfs-client-3.3.5.jar

-rw-r--r-- 1 root root 129796 5月 15 01:29 hadoop-hdfs-client-3.3.5-tests.jar

-rw-r--r-- 1 root root 251501 5月 15 01:29 hadoop-hdfs-httpfs-3.3.5.jar

-rw-r--r-- 1 root root 9586 5月 15 01:29 hadoop-hdfs-native-client-3.3.5.jar

-rw-r--r-- 1 root root 9586 5月 15 01:29 hadoop-hdfs-native-client-3.3.5-tests.jar

-rw-r--r-- 1 root root 115593 5月 15 01:29 hadoop-hdfs-nfs-3.3.5.jar

-rw-r--r-- 1 root root 1133476 5月 15 01:29 hadoop-hdfs-rbf-3.3.5.jar

-rw-r--r-- 1 root root 450962 5月 15 01:29 hadoop-hdfs-rbf-3.3.5-tests.jar

-rw-r--r-- 1 root root 96472 5月 15 01:33 hadoop-kms-3.3.5.jar

-rw-r--r-- 1 root root 1654887 5月 15 00:30 hadoop-mapreduce-client-core-3.1.1.jar

-rw-r--r-- 1 root root 170289 5月 15 01:33 hadoop-nfs-3.3.5.jar

-rw-r--r-- 1 root root 189835 5月 15 01:33 hadoop-registry-3.3.5.jar

-rw-r--r-- 1 root root 41873153 5月 14 23:29 hive-exec-3.1.3.jar

-rw-r--r-- 1 root root 36983 5月 14 23:29 hive-metastore-3.1.3.jar

-rw-r--r-- 1 root root 4101057 5月 15 00:42 htrace-core4-4.1.0-incubating.jar

-rw-r--r-- 1 root root 56674 5月 15 01:33 javax.activation-api-1.2.0.jar

-rw-r--r-- 1 root root 313702 5月 15 00:52 libfb303-0.9.3.jar

-rw-r--r-- 1 root root 208006 3月 17 17:31 log4j-1.2-api-2.17.1.jar

-rw-r--r-- 1 root root 301872 3月 17 17:31 log4j-api-2.17.1.jar

-rw-r--r-- 1 root root 1790452 3月 17 17:31 log4j-core-2.17.1.jar

-rw-r--r-- 1 root root 24279 3月 17 17:31 log4j-slf4j-impl-2.17.1.jar

-rw-r--r-- 1 root root 161867 5月 15 00:24 stax2-api-3.1.4.jar

-rw-r--r-- 1 root root 512742 5月 15 00:20 woodstox-core-5.0.3.jar

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/lib# mv flink-table-planner-loader-1.17.0.jar flink-table-planner-loader-1.17.0.jar_bak

root@zhiyong-hive-on-flink1:/export/server/flink-1.17.0/lib#
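The listing above has both hadoop-common-3.1.1.jar and hadoop-common-3.3.5.jar in the same lib directory, next to hadoop-auth-3.1.0.jar and a batch of 3.3.5 HDFS jars. Mixing versions of one library on a single classpath is a common source of NoSuchMethodError at runtime, so it can be worth checking for before starting the gateway. Below is a minimal sketch of such a check. The function names (`versions_by_artifact`, `conflicts`, `family_versions`) and the file-name pattern are my own assumptions for illustration, not part of Flink or Hadoop, and the pattern only understands names of the form artifact-version[-classifier].jar.

```python
import re
from collections import defaultdict

# Assumed naming scheme: <artifact>-<version>[-<classifier>].jar
# e.g. hadoop-common-3.3.5-tests.jar -> ("hadoop-common", "3.3.5", "-tests")
JAR_NAME = re.compile(
    r"(?P<artifact>.+?)-(?P<version>\d+(?:\.\d+)+)(?P<classifier>-[A-Za-z0-9]+)?\.jar"
)


def versions_by_artifact(jar_names):
    """Map each artifact (plus classifier, if any) to the set of versions seen."""
    found = defaultdict(set)
    for name in jar_names:
        match = JAR_NAME.fullmatch(name)
        if match is None:
            # Skips anything that is not a plain jar name, e.g. "*.jar_bak".
            continue
        key = match.group("artifact") + (match.group("classifier") or "")
        found[key].add(match.group("version"))
    return found


def conflicts(jar_names):
    """Artifacts that are present in more than one version."""
    return {
        artifact: sorted(versions)
        for artifact, versions in versions_by_artifact(jar_names).items()
        if len(versions) > 1
    }


def family_versions(jar_names, prefix):
    """All versions used by artifacts whose name starts with `prefix`."""
    versions = set()
    for artifact, seen in versions_by_artifact(jar_names).items():
        if artifact.startswith(prefix):
            versions |= seen
    return sorted(versions)


if __name__ == "__main__":
    # A few names copied from the listing above; on the real machine this
    # list could come from os.listdir("/export/server/flink-1.17.0/lib").
    names = [
        "hadoop-auth-3.1.0.jar",
        "hadoop-common-3.1.1.jar",
        "hadoop-common-3.3.5.jar",
        "hadoop-hdfs-client-3.3.5.jar",
        "hadoop-mapreduce-client-core-3.1.1.jar",
        "flink-table-planner-loader-1.17.0.jar_bak",
    ]
    print("same artifact, several versions:", conflicts(names))
    print("hadoop-* versions in use:", family_versions(names, "hadoop-"))
```

Keying on artifact plus classifier keeps hadoop-common-3.3.5-tests.jar from being reported as a conflict with hadoop-common-3.3.5.jar, while the prefix view still shows that the hadoop-* jars as a whole span several releases.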

Quite a few dependency JARs have already been sorted out by hand, but errors are still reported:

beeline> !connect jdbc:hive2://192.168.88.24:10000/zhiyong_flink_db;auth=noSasl

Connecting to jdbc:hive2://192.168.88.24:10000/zhiyong_flink_db;auth=noSasl

Enter username for jdbc:hive2://192.168.88.24:10000/zhiyong_flink_db: root

Enter password for jdbc:hive2://192.168.88.24:10000/zhiyong_flink_db: ******

Connected to: Apache Flink (version 1.17)

Driver: Hive JDBC (version 3.1.3)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://192.168.88.24:10000/zhiyong_f> show databases;

Error: org.apache.flink.table.gateway.service.utils.SqlExecutionException: Failed to execute the operation 7c60e354-b2af-4c1b-a364-4a4d48a8ff8b.

at org.apache.flink.table.gateway.service.operation.OperationManager$Operation.processThrowable(OperationManager.java:414)

at org.apache.flink.table.gateway.service.operation.OperationManager$Operation.lambda$run$0(OperationManager.java:267)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.ExceptionInInitializerError

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.flink.table.catalog.hive.client.HiveShimV120.registerTemporaryFunction(HiveShimV120.java:262)

at org.apache.flink.table.planner.delegation.hive.HiveParser.parse(HiveParser.java:212)

at org.apache.flink.table.gateway.service.operation.OperationExecutor.executeStatement(OperationExecutor.java:191)

at org.apache.flink.table.gateway.service.SqlGatewayServiceImpl.lambda$executeStatement$1(SqlGatewayServiceImpl.java:212)

at org.apache.flink.table.gateway.service.operation.OperationManager.lambda$submitOperation$1(OperationManager.java:119)

at org.apache.flink.table.gateway.service.operation.OperationManager$Operation.lambda$run$0(OperationManager.java:258)

... 7 more

Caused by: java.lang.RuntimeException: java.lang.reflect.InvocationTargetException

at org.apache.hive.common.util.ReflectionUtil.newInstance(ReflectionUtil.java:85)

at org.apache.hadoop.hive.ql.exec.Registry.registerGenericUDF(Registry.java:177)

at org.apache.hadoop.hive.ql.exec.Registry.registerGenericUDF(Registry.java:170)

at org.apache.hadoop.hive.ql.exec.FunctionRegistry.<clinit>(FunctionRegistry.java:203)

... 17 more

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hive.common.util.ReflectionUtil.newInstance(ReflectionUtil.java:83)

... 20 more

Caused by: java.lang.NoClassDefFoundError: org/apache/commons/codec/language/Soundex

at org.apache.hadoop.hive.ql.udf.generic.GenericUDFSoundex.<init>(GenericUDFSoundex.java:49)

... 25 more

Caused by: java.lang.ClassNotFoundException: org.apache.commons.codec.language.Soundex

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 26 more (state=,code=0)
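The innermost cause in the trace above is a ClassNotFoundException for org.apache.commons.codec.language.Soundex, a class that normally ships in the commons-codec jar. A quick way to confirm whether any jar in Flink's lib directory actually provides a given class is to scan the jar contents. The following is a rough sketch under that assumption; `class_entry` and `jars_containing` are hypothetical helper names, and only top-level `*.jar` files are inspected (nested or shaded jars inside other archives are not unpacked).

```python
import zipfile
from pathlib import Path


def class_entry(class_name):
    """Turn a dotted class name into the path it has inside a jar."""
    return class_name.replace(".", "/") + ".class"


def jars_containing(lib_dir, class_name):
    """Return the names of the jars directly under lib_dir that contain class_name."""
    lib = Path(lib_dir)
    if not lib.is_dir():
        return []
    entry = class_entry(class_name)
    hits = []
    for jar in sorted(lib.glob("*.jar")):
        try:
            with zipfile.ZipFile(jar) as archive:
                if entry in archive.namelist():
                    hits.append(jar.name)
        except zipfile.BadZipFile:
            # Not a readable archive (e.g. a truncated download); skip it.
            continue
    return hits


if __name__ == "__main__":
    # Path taken from the shell prompt earlier in this post.
    lib_dir = "/export/server/flink-1.17.0/lib"
    wanted = "org.apache.commons.codec.language.Soundex"
    print(wanted, "is provided by:", jars_containing(lib_dir, wanted))
```

An empty result would be consistent with the ClassNotFoundException. The same scan with org.apache.hadoop.fs.FsTracer as the class name lists every jar that carries that class, which helps when more than one copy may be on the classpath.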

0: jdbc:hive2://192.168.88.24:10000/zhiyong_f> SET table.sql-dialect = default;

+---------+

| result |

+---------+

| OK |

+---------+

1 row selected (0.206 seconds)

0: jdbc:hive2://192.168.88.24:10000/zhiyong_f> show databases;

+-------------------+

| database name |

+-------------------+

| default |

| zhiyong_flink_db |

+-------------------+

2 rows selected (0.383 seconds)

0: jdbc:hive2://192.168.88.24:10000/zhiyong_f> use zhiyong_flink_db;

+---------+

| result |

+---------+

| OK |

+---------+

1 row selected (0.03 seconds)

0: jdbc:hive2://192.168.88.24:10000/zhiyong_f> show tables;

+-------------+

| table name |

+-------------+

| test1 |

+-------------+

1 row selected (0.044 seconds)

0: jdbc:hive2://192.168.88.24:10000/zhiyong_f> select * from test1;

Error: org.apache.flink.table.gateway.service.utils.SqlExecutionException: Failed to execute the operation 627c0f0b-8c1d-4882-a0d4-085abf05ab75.

at org.apache.flink.table.gateway.service.operation.OperationManager$Operation.processThrowable(OperationManager.java:414)

at org.apache.flink.table.gateway.service.operation.OperationManager$Operation.lambda$run$0(OperationManager.java:267)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.NoSuchMethodError: org.apache.hadoop.fs.FsTracer.get(Lorg/apache/hadoop/conf/Configuration;)Lorg/apache/hadoop/tracing/Tracer;

at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:323)

at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:308)

at org.apache.hadoop.hdfs.DistributedFileSystem.initDFSClient(DistributedFileSystem.java:202)

at org.apache.hadoop.hdfs.DistributedFileSystem.initialize(DistributedFileSystem.java:187)

at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:3354)

at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:124)

at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:3403)

at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:3371)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:477)

at org.apache.hadoop.fs.Path.getFileSystem(Path.java:361)

at org.apache.flink.connectors.hive.HiveSourceFileEnumerator.getNumFiles(HiveSourceFileEnumerator.java:195)

at org.apache.flink.connectors.hive.HiveTableSource.lambda$getDataStream$0(HiveTableSource.java:174)

at org.apache.flink.connectors.hive.HiveParallelismInference.logRunningTime(HiveParallelismInference.java:107)

at org.apache.flink.connectors.hive.HiveParallelismInference.infer(HiveParallelismInference.java:89)

at org.apache.flink.connectors.hive.HiveTableSource.getDataStream(HiveTableSource.java:172)

at org.apache.flink.connectors.hive.HiveTableSource$1.produceDataStream(HiveTableSource.java:138)

at org.apache.flink.table.planner.plan.nodes.exec.common.CommonExecTableSourceScan.translateToPlanInternal(CommonExecTableSourceScan.java:140)

at org.apache.flink.table.planner.plan.nodes.exec.batch.BatchExecTableSourceScan.translateToPlanInternal(BatchExecTableSourceScan.java:101)

at org.apache.flink.table.planner.plan.nodes.exec.ExecNodeBase.translateToPlan(ExecNodeBase.java:161)

at org.apache.flink.table.planner.plan.nodes.exec.ExecEdge.translateToPlan(ExecEdge.java:257)

at org.apache.flink.table.planner.plan.nodes.exec.batch.BatchExecSink.translateToPlanInternal(BatchExecSink.java:65)

at org.apache.flink.table.planner.plan.nodes.exec.ExecNodeBase.translateToPlan(ExecNodeBase.java:161)

at org.apache.flink.table.planner.delegation.BatchPlanner.$anonfun$translateToPlan$1(BatchPlanner.scala:93)

at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:233)

at scala.collection.Iterator.foreach(Iterator.scala:937)

at scala.collection.Iterator.foreach$(Iterator.scala:937)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1425)

at scala.collection.IterableLike.foreach(IterableLike.scala:70)

at scala.collection.IterableLike.foreach$(IterableLike.scala:69)

at scala.collection.AbstractIterable.foreach(Iterable.scala:54)

at scala.collection.TraversableLike.map(TraversableLike.scala:233)

at scala.collection.TraversableLike.map$(TraversableLike.scala:226)

at scala.collection.AbstractTraversable.map(Traversable.scala:104)