Zero → Hero : 2

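Continuing from the previous post, Zero → Hero : 1, this post extends the model in three ways:

- Lengthen the input context, so that several characters are used to predict the probability distribution of the next character

- Deepen the model, using a multi-layer MLP to learn and predict the character probabilities

- Add an embedding layer that maps each character to a dense vector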

import torch

import torch.nn.functional as F

import matplotlib.pyplot as plt # for making figures

from matplotlib.font_manager import FontProperties

font = FontProperties(fname='../chinese_pop.ttf', size=10)

Load the dataset

The data is a corpus of Chinese names:

- Minimum name length: 2

- Maximum name length: 3

words = open('../Chinese_Names_Corpus.txt', 'r').read().splitlines()

# The corpus contains over 1 million names; keep only three-character names with the surname 王 for testing

names = [name for name in words if name[0] == '王' and len(name) == 3]

len(names)

52127

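# Build the character-to-index and index-to-character mappings; the vocabulary size is 1651 (including the padding character that marks the start and end of a name)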

chars = sorted(list(set(''.join(names))))

char2i = {s:i+1 for i,s in enumerate(chars)}

char2i['.'] = 0 # padding character

i2char = {i:s for s,i in char2i.items()}

len(chars)

1650
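
As a small illustration of the two mappings, the sketch below builds them for a made-up three-name list and round-trips one name through indices and back. The names `toy_names`, `toy_char2i`, `toy_i2char`, `encode`, and `decode` are hypothetical and exist only for this example.

```python
# Toy stand-in for the filtered corpus (hypothetical list, for illustration only)
toy_names = ['王阿宝', '王胜兵', '王玉树']

# Same construction as above: sorted unique characters, index 0 reserved for '.'
toy_chars = sorted(set(''.join(toy_names)))
toy_char2i = {s: i + 1 for i, s in enumerate(toy_chars)}
toy_char2i['.'] = 0
toy_i2char = {i: s for s, i in toy_char2i.items()}

def encode(name):
    """Map a string to its list of character indices."""
    return [toy_char2i[ch] for ch in name]

def decode(indices):
    """Map a list of indices back to a string."""
    return ''.join(toy_i2char[i] for i in indices)

ids = encode('王阿宝.')
roundtrip = decode(ids)
vocab_size = len(toy_char2i)  # unique characters plus the padding character
print(ids, roundtrip, vocab_size)
```

The vocabulary size is always one larger than the number of distinct characters, which is why `len(chars)` is 1650 while `len(char2i)` is 1651.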

block_size = 2 # use two characters to predict the next one

X, Y = [], []

for w in names[:1]:
    context = [0] * block_size
    for ch in w + '.':
        ix = char2i[ch]
        X.append(context)
        Y.append(ix)
        print(''.join(i2char[i] for i in context), '--->', i2char[ix])
        context = context[1:] + [ix] # crop and append

X = torch.tensor(X)

Y = torch.tensor(Y)

.. ---> 王

.王 ---> 阿

王阿 ---> 宝

阿宝 ---> .

Build the training data

block_size = 2

def build_dataset(names):
    X, Y = [], []
    for w in names:
        context = [0] * block_size
        for ch in w + '.':
            ix = char2i[ch]
            X.append(context)
            Y.append(ix)
            context = context[1:] + [ix] # crop and append

    X = torch.tensor(X)
    Y = torch.tensor(Y)
    print(X.shape, Y.shape)
    return X, Y

Split the dataset (80% training, 20% test)

import random

random.seed(42)

random.shuffle(names)

n1 = int(0.8*len(names))

Xtr, Ytr = build_dataset(names[:n1])

Xte, Yte = build_dataset(names[n1:])

torch.Size([166804, 2]) torch.Size([166804])

torch.Size([41704, 2]) torch.Size([41704])
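
The printed shapes can be checked by hand: every three-character name yields one example per character plus one for the closing '.', so four examples per name. A quick sanity check using only the counts reported above:

```python
num_names = 52127          # len(names) reported above
examples_per_name = 3 + 1  # three characters plus the closing '.'

n1 = int(0.8 * num_names)  # number of names in the training split
train_examples = n1 * examples_per_name
test_examples = (num_names - n1) * examples_per_name

print(n1, train_examples, test_examples)
```

The two totals agree with the first dimension of the training and test tensors.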

Build the MLP

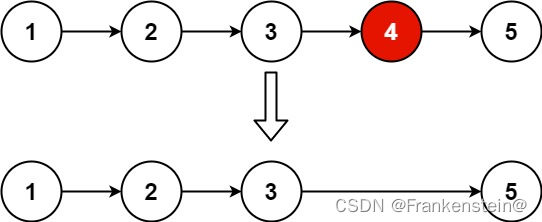

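Model structure: input layer → embedding layer → hidden layer → output layer.

- In Zero2Hero:1, the input was one-hot encoded and the resulting sparse vector was passed directly to the output layer.

- In Zero2Hero:2, an embedding layer is added: each character (token) in the input sequence is looked up in the embedding table and converted to a low-dimensional dense vector, and these character representations change as the model trains.

- In Zero2Hero:2, a hidden layer is also added to extract higher-level feature representations.

Embedding layer:

- Randomly initialize a table of vectors

- Look up each character's vector by its index

- Pass the vectors on through the network; gradient descent then corrects the table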
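
To connect the embedding lookup with the one-hot encoding from the previous post, the sketch below checks on toy data that indexing into the table gives the same result as multiplying a one-hot matrix by it. The sizes (`vocab_size = 5`, `emb_dim = 2`), the seed, and the example indices are assumptions chosen for illustration.

```python
import torch
import torch.nn.functional as F

g = torch.Generator().manual_seed(0)
vocab_size, emb_dim = 5, 2                  # toy sizes, for illustration only
C = torch.randn((vocab_size, emb_dim), generator=g)

X = torch.tensor([[0, 0], [0, 3], [3, 1]])  # three contexts of two indices each

# Direct lookup: integer indexing selects rows of C
emb_lookup = C[X]                           # shape (3, 2, 2)

# Equivalent but wasteful route: one-hot encode, then matrix-multiply
onehot = F.one_hot(X, num_classes=vocab_size).float()  # shape (3, 2, 5)
emb_matmul = onehot @ C                     # shape (3, 2, 2)

same = torch.allclose(emb_lookup, emb_matmul)
print(emb_lookup.shape, same)
```

Seen this way, the embedding table behaves like a linear layer applied to one-hot inputs, with the lookup skipping the multiplications by zero.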

# Randomly initialize an embedding table with one row per vocabulary entry

len(char2i)

1651

C = torch.randn((len(char2i), 2))

# Look up the vector representation of each character by index

emb = C[X]

emb.shape

emb

tensor([[[ 0.7928, -0.2331],
         [ 0.7928, -0.2331]],

        [[ 0.7928, -0.2331],
         [-2.0187, -1.1116]],

        [[-2.0187, -1.1116],
         [ 0.9795,  1.4175]],

        [[ 0.9795,  1.4175],
         [ 0.2740,  1.5466]]])
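
The lookup result has one 2-dimensional vector per context position, so its shape is (N, block_size, 2). The forward pass later flattens it with `emb.view(-1, 4)` so that each example becomes a single row. A minimal sketch on a toy tensor, showing that this flattening matches concatenating the per-position vectors:

```python
import torch

# Toy tensor standing in for emb: (N=3, block_size=2, d=2)
emb = torch.arange(12, dtype=torch.float32).view(3, 2, 2)

flat_view = emb.view(-1, 4)                                # reshape without copying
flat_cat = torch.cat([emb[:, 0, :], emb[:, 1, :]], dim=1)  # explicit concatenation

same = torch.equal(flat_view, flat_cat)
first_row = flat_view[0].tolist()
print(same, first_row)
```

`view` only reinterprets the existing memory layout, whereas `torch.cat` allocates a new tensor, which is one reason to prefer `view` here.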

Initialize the model parameters:

g = torch.Generator().manual_seed(2147483647)

C = torch.randn((len(char2i), 2), generator=g)

W1 = torch.randn((4, 200), generator=g)

b1 = torch.randn(200, generator=g)

W2 = torch.randn((200, len(char2i)), generator=g)

b2 = torch.randn(len(char2i), generator=g)

parameters = [C, W1, b1, W2, b2]

print("Parameter count:", sum(p.nelement() for p in parameters)) # total number of model parameters

Parameter count: 336153
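
The total can be reproduced from the tensor shapes alone. In the breakdown below, the input width of `W1` is 4 because each example concatenates `block_size = 2` embeddings of dimension 2.

```python
vocab_size = 1651   # len(char2i)
emb_dim = 2         # columns of C
block_size = 2      # context length
hidden = 200        # width of the hidden layer

counts = {
    'C':  vocab_size * emb_dim,
    'W1': block_size * emb_dim * hidden,
    'b1': hidden,
    'W2': hidden * vocab_size,
    'b2': vocab_size,
}
total = sum(counts.values())
print(counts, total)
```

Nearly all parameters sit in the output layer `W2` (330,200 of 336,153), a direct consequence of the large vocabulary.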

for p in parameters:
    p.requires_grad = True

Train the model:

lri = []

lossi = []

stepi = []

for i in range(20000):

    # minibatch construct
    ix = torch.randint(0, Xtr.shape[0], (32,))

    # forward pass
    emb = C[Xtr[ix]] # (32, 2, 2)
    h = torch.tanh(emb.view(-1, 4) @ W1 + b1) # (32, 200)
    logits = h @ W2 + b2 # (32, 1651)
    loss = F.cross_entropy(logits, Ytr[ix])
    #print(loss.item())

    # backward pass
    for p in parameters:
        p.grad = None
    loss.backward()

    # update
    lr = 0.1 if i < 10000 else 0.01
    for p in parameters:
        p.data += -lr * p.grad

    # track stats
    #lri.append(lre[i])
    stepi.append(i)
    lossi.append(loss.log10().item())

print(loss.item())

3.3062288761138916
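
To make the loss value easier to interpret, the sketch below recomputes cross-entropy by hand (log-softmax followed by the mean negative log-probability of the targets) and compares it with `F.cross_entropy`. It also computes the loss of a model that spreads probability uniformly over all 1651 characters, as a reference point. The random logits, targets, and seed are assumptions for illustration, not outputs of the trained model.

```python
import math
import torch
import torch.nn.functional as F

g = torch.Generator().manual_seed(0)
vocab_size = 1651
logits = torch.randn((4, vocab_size), generator=g)         # toy batch of 4 examples
targets = torch.randint(0, vocab_size, (4,), generator=g)  # toy target indices

# Manual cross-entropy: log-softmax, then pick the log-probability of each target
log_probs = logits - logits.logsumexp(dim=1, keepdim=True)
manual = -log_probs[torch.arange(4), targets].mean()

builtin = F.cross_entropy(logits, targets)

# Loss of a model that assigns equal probability to every character
uniform_loss = math.log(vocab_size)
print(manual.item(), builtin.item(), uniform_loss)
```

The uniform reference is about 7.41, so a final mini-batch loss near 3.31 indicates the model has learned a good deal of structure, although a single mini-batch value is a noisy estimate.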

plt.plot(stepi, lossi)

Test loss:

emb = C[Xte]

h = torch.tanh(emb.view(-1, 4) @ W1 + b1) # (N, 200), where N = Xte.shape[0]

logits = h @ W2 + b2 # (N, 1651)

loss = F.cross_entropy(logits, Yte)

loss

tensor(3.2770, grad_fn=<NllLossBackward0>)
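
One way to read this number is to convert it to perplexity, the exponential of the cross-entropy (which PyTorch reports in nats). It can be loosely interpreted as the effective number of equally likely choices the model faces at each step. A minimal sketch using the value reported above:

```python
import math

test_loss = 3.2770                # test loss reported above, in nats
perplexity = math.exp(test_loss)  # effective number of equally likely choices
vocab_size = 1651

print(round(perplexity, 1), vocab_size)
```

A perplexity of roughly 26.5 against a vocabulary of 1651 characters suggests the model narrows its predictions considerably, averaged over all positions in the name.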

Visualization

Visualize the character embeddings:

# visualize dimensions 0 and 1 of the embedding matrix C for all characters

plt.figure(figsize=(12,6))

plt.scatter(C[:,0].data, C[:,1].data, s=200)

for i in range(C.shape[0]):
    plt.text(C[i,0].item(), C[i,1].item(), i2char[i], ha="center", va="center", color='white',fontproperties=font)

plt.grid('minor')

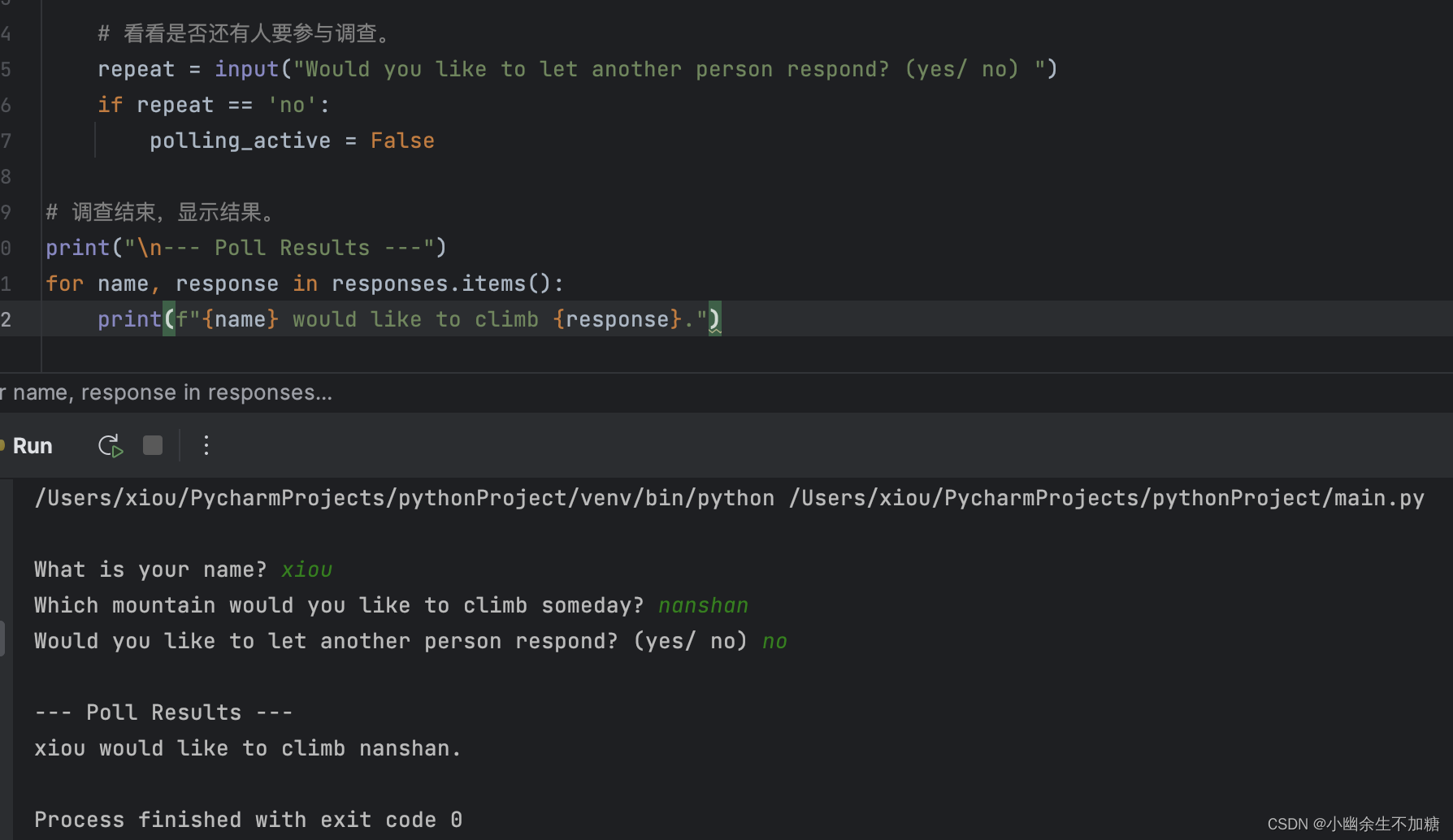

Test generation

g = torch.Generator().manual_seed(20230516 + 10)

for _ in range(10):

    out = []
    context = [0] * block_size # initialize with all ...
    while True:
        emb = C[torch.tensor([context])] # (1,block_size,d)
        h = torch.tanh(emb.view(1, -1) @ W1 + b1)
        logits = h @ W2 + b2
        probs = F.softmax(logits, dim=1)
        ix = torch.multinomial(probs, num_samples=1, generator=g).item()
        context = context[1:] + [ix]
        out.append(ix)
        if ix == 0:
            break

    print(''.join(i2char[i] for i in out))

王胜兵.

王紫琴.

王坡健.

王家菲.

王青金.

王碧财.

王华士.

王海维.

王旭荣.

王玉树.
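
Each generated name ends with '.' because sampling stops as soon as index 0 is drawn. The draws themselves come from `torch.multinomial`, and passing a seeded generator makes them repeatable. The sketch below illustrates this on a toy three-token distribution; `probs` and the helper `draw` are hypothetical and used only for this example.

```python
import torch

probs = torch.tensor([[0.1, 0.6, 0.3]])  # toy distribution over 3 tokens

def draw(seed, n=5):
    """Draw n token indices from probs using a freshly seeded generator."""
    g = torch.Generator().manual_seed(seed)
    return [torch.multinomial(probs, num_samples=1, generator=g).item()
            for _ in range(n)]

a = draw(42)
b = draw(42)  # same seed, so the same sequence of draws
print(a, b)
```

Changing the seed changes the sampled names, while the trained parameters stay the same.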