1. Installation

Follow the installation steps in the official documentation.

Official docs: DolphinScheduler | Documentation Center (apache.org)

Version: 3.1.5

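2. Pitfalls

Q1. Large files cannot be uploaded

Problem:

In the Resource Center, after selecting a large file and clicking Confirm, the Confirm button spins for a while and then nothing happens. On the server, the resource directory of the user logged in to DS does not contain the file either.

Solution:

Troubleshooting showed that the upload request is cut off every time it reaches 15 s.

Using the browser developer tools, find the JS file that sends the request and look at its request configuration: next to `baseURL` there is a `timeout` parameter set to `15e3` (15000 ms, i.e. 15 s). Locate the same JS file under `ui/assets/` in the DS installation directory and increase this value.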
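The edit can be scripted as a small bash function. This is a minimal sketch under stated assumptions: the function name `bump_ui_timeout` is hypothetical, it assumes the minified bundle contains the literal `timeout:15e3` (confirm with `grep` first), and it relies on GNU `sed -i`. It keeps a `.bak` copy of every file it changes and fails loudly if nothing matched.

```bash
# Hypothetical helper: raise the hard-coded 15 s request timeout in the built DS web UI.
# ASSUMPTIONS: the minified JS contains the literal "timeout:15e3", and GNU sed (-i) is available.
bump_ui_timeout() {
  local assets_dir="$1"   # UI asset directory under the DS install dir
  local new_timeout="$2"  # new timeout in ms as a JS number literal, e.g. 600e3 (10 minutes)
  local re='^[0-9]+(e[0-9]+)?$'
  local found=0 f

  # Accept only plain numeric literals so the value cannot break the sed expression.
  if [[ ! $new_timeout =~ $re ]]; then
    echo "invalid timeout literal: $new_timeout" >&2
    return 1
  fi

  # Patch every bundle file that still carries the 15 s timeout, keeping a .bak copy.
  while IFS= read -r f; do
    found=1
    cp -p "$f" "$f.bak"
    sed -i "s/timeout:15e3/timeout:${new_timeout}/g" "$f"
    echo "patched $f"
  done < <(grep -rl --include='*.js' 'timeout:15e3' "$assets_dir")

  if [ "$found" -eq 0 ]; then
    echo "no .js file under $assets_dir contains timeout:15e3" >&2
    return 1
  fi
}
```

Usage, with `DS_HOME` standing in for the installation directory: `bump_ui_timeout "$DS_HOME/ui/assets" 600e3`. Afterwards, do a hard refresh in the browser so it does not keep using the cached old bundle.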

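Q2. Flink jobs cannot be submitted to YARN

Problem:

After creating a Flink-Stream task, submission kept failing. According to the task log, the submit command generated by DS was:

Running it manually on the server produced the error below. The main cause is that the total Flink memory defaults to only about 1.5 GB (1.55 GB in the log), which is smaller than the sum of the configured memory components.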


```
org.apache.flink.client.program.ProgramInvocationException: The main method caused an error: TaskManager memory configuration failed: Sum of configured Framework Heap Memory (128.000mb (134217728 bytes)), Framework Off-Heap Memory (512.000mb (536870912 bytes)), Task Off-Heap Memory (512.000mb (536870912 bytes)), Managed Memory (1024.000mb (1073741824 bytes)) and Network Memory (158.720mb (166429984 bytes)) exceed configured Total Flink Memory (1.550gb (1664299824 bytes))
```
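The numbers in the message can be checked directly. The sketch below only re-adds the byte values copied from the log; whatever is left of Total Flink Memory is what Flink would have for Task Heap Memory, and it comes out negative, which is why the configuration is rejected.

```bash
# Byte values copied from the TaskManager error message above.
framework_heap=134217728
framework_off_heap=536870912
task_off_heap=536870912
managed=1073741824
network=166429984        # about 10% of the total Flink memory
total_flink=1664299824   # "Total Flink Memory (1.550gb)"

fixed_sum=$((framework_heap + framework_off_heap + task_off_heap + managed + network))
task_heap=$((total_flink - fixed_sum))

echo "fixed components:   $fixed_sum bytes"    # 2448131360
echo "total flink memory: $total_flink bytes"
echo "left for task heap: $task_heap bytes"    # -783831536, hence the error
```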

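Solution:

Changing the parameters to the following configuration fixes it (there is probably a better way; I have not found one yet).

Leave the original JobManager memory and TaskManager memory options empty and use these two parameters instead:

```
-Djobmanager.memory.process.size=1024mb -Dtaskmanager.memory.process.size=6144mb
```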
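Why a larger `taskmanager.memory.process.size` helps can be sketched with Flink's memory model. Assumptions, none of which come from the DS log: Flink's defaults of 256 MB JVM Metaspace, JVM Overhead of 10% of the process size within [192 MB, 1 GB], Network Memory of 10% of Total Flink Memory, and the other four components staying at the values shown in the error. Under these assumptions a 2048 MB process reproduces exactly the 1.55 GB Total Flink Memory from the log, while 6144 MB leaves plenty of task heap. The function name `tm_budget` is hypothetical.

```bash
# Rough TaskManager memory budget (in MB) following Flink's memory model.
# ASSUMPTIONS: Flink default metaspace/overhead/network settings, and the four fixed
# components from the error message (framework heap 128, framework off-heap 512,
# task off-heap 512, managed 1024) staying unchanged.
tm_budget() {
  awk -v process="$1" '
    function clamp(x, lo, hi) { return x < lo ? lo : (x > hi ? hi : x) }
    BEGIN {
      metaspace   = 256                              # taskmanager.memory.jvm-metaspace.size
      overhead    = clamp(process * 0.1, 192, 1024)  # jvm-overhead: 10% of process, within [192 MB, 1 GB]
      total_flink = process - metaspace - overhead   # Total Flink Memory
      network     = total_flink * 0.1                # network fraction 0.1 (its min/max are not hit here)
      fixed       = 128 + 512 + 512 + 1024 + network # everything except task heap
      printf "process=%dmb total_flink=%.2fmb network=%.2fmb task_heap=%.2fmb\n",
             process, total_flink, network, total_flink - fixed
    }'
}

tm_budget 2048   # total_flink=1587.20mb (the 1.55 GB from the log), task_heap=-747.52mb
tm_budget 6144   # total_flink=5273.60mb, task_heap=2570.24mb
```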

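Q3. After a Flink-Stream job is submitted, it stays in the CREATED state in the ResourceManager web UI

Q3 Problem:

When the job is submitted as root on the corresponding node, it is submitted successfully and reaches RUNNING.

When it is submitted as the DS tenant instead, submission still succeeds, but the job stays in the CREATED state and, after a while, fails with the following error: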

```
org.apache.flink.runtime.jobmaster.slotpool.PhysicalSlotRequestBulkCheckerImpl.lambda$schedulePendingRequestBulkWithTimestampCheck$0(PhysicalSlotRequestBulkCheckerImpl.java:91)
	at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)
	at java.util.concurrent.FutureTask.run(FutureTask.java:266)
	at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRunAsync(AkkaRpcActor.java:440)
	at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleRpcMessage(AkkaRpcActor.java:208)
	at org.apache.flink.runtime.rpc.akka.FencedAkkaRpcActor.handleRpcMessage(FencedAkkaRpcActor.java:77)
	at org.apache.flink.runtime.rpc.akka.AkkaRpcActor.handleMessage(AkkaRpcActor.java:158)
	at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:26)
	at akka.japi.pf.UnitCaseStatement.apply(CaseStatements.scala:21)
	at scala.PartialFunction$class.applyOrElse(PartialFunction.scala:123)
	at akka.japi.pf.UnitCaseStatement.applyOrElse(CaseStatements.scala:21)
	at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:170)
	at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:171)
	at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:171)
	at akka.actor.Actor$class.aroundReceive(Actor.scala:517)
	at akka.actor.AbstractActor.aroundReceive(AbstractActor.scala:225)
	at akka.actor.ActorCell.receiveMessage(ActorCell.scala:592)
	at akka.actor.ActorCell.invoke(ActorCell.scala:561)
	at akka.dispatch.Mailbox.processMailbox(Mailbox.scala:258)
	at akka.dispatch.Mailbox.run(Mailbox.scala:225)
	at akka.dispatch.Mailbox.exec(Mailbox.scala:235)
	at akka.dispatch.forkjoin.ForkJoinTask.doExec(ForkJoinTask.java:260)
	at akka.dispatch.forkjoin.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1339)
	at akka.dispatch.forkjoin.ForkJoinPool.runWorker(ForkJoinPool.java:1979)
	at akka.dispatch.forkjoin.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:107)
Caused by: java.util.concurrent.CompletionException: org.apache.flink.runtime.jobmanager.scheduler.NoResourceAvailableException: Slot request bulk is not fulfillable! Could not allocate the required slot within slot request timeout
	at java.util.concurrent.CompletableFuture.encodeThrowable(CompletableFuture.java:292)
	at java.util.concurrent.CompletableFuture.completeThrowable(CompletableFuture.java:308)
	at java.util.concurrent.CompletableFuture.uniApply(CompletableFuture.java:593)
	at java.util.concurrent.CompletableFuture$UniApply.tryFire(CompletableFuture.java:577)
	... 31 more
Caused by: org.apache.flink.runtime.jobmanager.scheduler.NoResourceAvailableException: Slot request bulk is not fulfillable! Could not allocate the required slot within slot request timeout
	at org.apache.flink.runtime.jobmaster.slotpool.PhysicalSlotRequestBulkCheckerImpl.lambda$schedulePendingRequestBulkWithTimestampCheck$0(PhysicalSlotRequestBulkCheckerImpl.java:86)
	... 24 more
Caused by: java.util.concurrent.TimeoutException: Timeout has occurred: 300000 ms
	... 25 more
Exception in thread "Thread-5" java.lang.IllegalStateException: Trying to access closed classloader. Please check if you store classloaders directly or indirectly in static fields. If the stacktrace suggests that the leak occurs in a third party library and cannot be fixed immediately, you can disable this check with the configuration 'classloader.check-leaked-classloader'.
	at org.apache.flink.runtime.execution.librarycache.FlinkUserCodeClassLoaders$SafetyNetWrapperClassLoader.ensureInner(FlinkUserCodeClassLoaders.java:164)
	at org.apache.flink.runtime.execution.librarycache.FlinkUserCodeClassLoaders$SafetyNetWrapperClassLoader.getResource(FlinkUserCodeClassLoaders.java:183)
	at org.apache.hadoop.conf.Configuration.getResource(Configuration.java:2647)
	at org.apache.hadoop.conf.Configuration.getStreamReader(Configuration.java:2905)
	at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2864)
	at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:2838)
	at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2715)
	at org.apache.hadoop.conf.Configuration.get(Configuration.java:1186)
	at org.apache.hadoop.conf.Configuration.getTimeDuration(Configuration.java:1774)
	at org.apache.hadoop.util.ShutdownHookManager.getShutdownTimeout(ShutdownHookManager.java:183)
	at org.apache.hadoop.util.ShutdownHookManager.shutdownExecutor(ShutdownHookManager.java:145)
	at org.apache.hadoop.util.ShutdownHookManager.access$300(ShutdownHookManager.java:65)
	at org.apache.hadoop.util.ShutdownHookManager$1.run(ShutdownHookManager.java:102)
```

The line that matters is `Caused by: java.util.concurrent.CompletionException: org.apache.flink.runtime.jobmanager.scheduler.NoResourceAvailableException: Slot request bulk is not fulfillable! Could not allocate the required slot within slot request timeout`: the job never obtained a TaskManager slot within the 300000 ms (5 min) slot request timeout.

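Q3 Solution:

In the Flink installation directory on the corresponding node, add the following line at the top of the Flink launch script (below the shebang line, if the script has one):

```bash
export HADOOP_CLASSPATH=`hadoop classpath`
```

It runs the shell command `hadoop classpath` and assigns its output to the `HADOOP_CLASSPATH` environment variable, so that Flink can find the Hadoop dependencies (Flink's YARN documentation uses the same line).

Then resubmit the Flink job; the job submitted by the DS tenant now shows up in the ResourceManager web UI.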
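When several nodes need the same change, the edit can be scripted. A minimal sketch with a hypothetical function name `add_hadoop_classpath`: it puts the export right after a shebang line (so the script keeps its interpreter line) or at the very top otherwise, and does nothing if the exact line is already present. Which launch script to pass in is up to the caller.

```bash
# Hypothetical helper: add the HADOOP_CLASSPATH export to the top of a Flink launch script.
add_hadoop_classpath() {
  local script="$1"
  # Single quotes keep the backticks literal; the shell expands them when the script runs.
  local line='export HADOOP_CLASSPATH=`hadoop classpath`'
  local tmp

  # Idempotent: leave the file alone if the exact line is already there.
  if grep -qxF "$line" "$script"; then
    return 0
  fi

  tmp=$(mktemp) || return 1
  if head -n 1 "$script" | grep -q '^#!'; then
    # Keep the shebang as line 1 and put the export right below it.
    { head -n 1 "$script"; printf '%s\n' "$line"; tail -n +2 "$script"; } > "$tmp"
  else
    { printf '%s\n' "$line"; cat "$script"; } > "$tmp"
  fi
  # Copy the content back instead of moving, so the script keeps its owner and exec bit.
  cat "$tmp" > "$script"
  rm -f "$tmp"
}
```

For example, `add_hadoop_classpath "$FLINK_HOME/bin/flink"`, where `FLINK_HOME` stands for the Flink installation directory on that node.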

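Q4. Some zombie tasks cannot be deleted from the web UI

Q4 Problem:

1. Task definitions in DS Task Management have no delete action. Task definitions belonging to workflows that have already been deleted still exist and cannot be removed from the web UI.

2. Task Instances have no delete option for real-time (streaming) tasks either, yet zombie task instances remain.

Q4 Solution:

For a DS deployment that uses MySQL as its database: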

1. Task definitions are stored in the `t_ds_task_definition` table; delete the leftover definitions by name (column `name`).

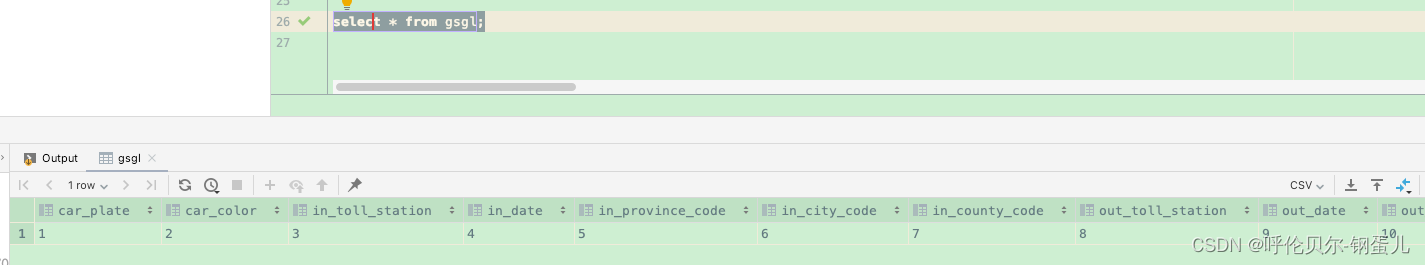

2. Task instances are stored in the `t_ds_task_instance` table; delete them with whatever condition you need.

![image 2](https://img-blog.csdnimg.cn/7610054fcf444eb1bd5362402d95ef9a.png)
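Since these deletes bypass DS, it helps to look at the matching rows before removing them. A minimal sketch with a hypothetical function name `ds_delete_by_name_sql`: it only prints a `SELECT` for review followed by the `DELETE` for a given table and `name`, so nothing touches the database until you run the statements yourself. It assumes a MySQL backend with the default string escaping (backslash escapes enabled) and accepts only `t_ds_...` table names.

```bash
# Hypothetical helper: print the SQL to review and then delete rows of a DS metadata table by name.
# ASSUMPTIONS: MySQL backend with default string escaping (backslash escapes enabled).
ds_delete_by_name_sql() {
  local table="$1" name="$2" esc
  local re='^t_ds_[a-z_]+$'

  # Table names cannot be quoted as values, so only accept DS-style identifiers.
  if [[ ! $table =~ $re ]]; then
    echo "unexpected table name: $table" >&2
    return 1
  fi

  # Escape backslashes first, then double any single quotes, for a MySQL string literal.
  esc=$(printf '%s' "$name" | sed -e 's/\\/\\\\/g' -e "s/'/''/g")

  # Review the matching rows first; the DELETE removes every row with this name.
  printf "SELECT * FROM %s WHERE name = '%s';\n" "$table" "$esc"
  printf "DELETE FROM %s WHERE name = '%s';\n" "$table" "$esc"
}
```

For example, `ds_delete_by_name_sql t_ds_task_instance 'my_flink_stream'` prints both statements (the task name is a placeholder). Run the `SELECT` in the `mysql` client first and execute the `DELETE` only once the rows are confirmed.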