Implementing Cluster Flow Control with Dynamic Configuration in Sentinel

Introduction

06-cluster-embedded-8081

Why use cluster flow control?

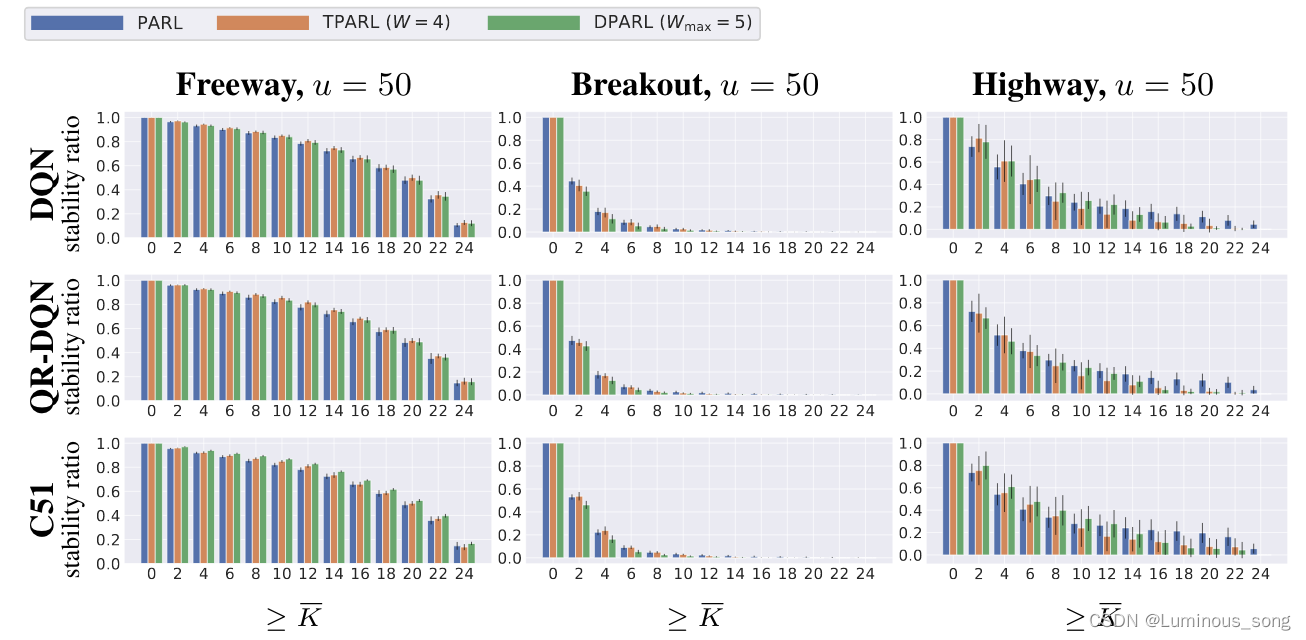

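With single-machine flow control, each machine is given its own threshold, so in the ideal case the cluster-wide limit is the number of machines × the per-machine threshold. In practice, however, traffic is often spread unevenly across machines, so some machines start throttling before the cluster as a whole has reached its total. Limiting only at the single-machine level therefore cannot precisely control overall traffic. Cluster flow control precisely limits the total number of calls across the whole cluster, and with single-machine limits kept as a fallback, flow control becomes more effective.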
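A small worked example makes the uneven-traffic problem concrete. Assume 3 machines with a per-machine limit of 10 QPS, so the intended cluster limit is 30, and incoming traffic of 20, 5 and 5 QPS. The class and method names below are hypothetical and only do the arithmetic; they are not part of Sentinel.

```java
/** Hypothetical arithmetic demo: local limits vs. one cluster-wide limit. */
final class UnevenTrafficDemo {

    /** Requests admitted when every machine enforces its own per-machine limit. */
    static int admittedWithLocalLimits(int[] perMachineQps, int localLimit) {
        int admitted = 0;
        for (int qps : perMachineQps) {
            admitted += Math.min(qps, localLimit); // each machine caps only its own traffic
        }
        return admitted;
    }

    /** Requests admitted when a single limit is enforced on the cluster total. */
    static int admittedWithClusterLimit(int[] perMachineQps, int clusterLimit) {
        int total = 0;
        for (int qps : perMachineQps) {
            total += qps;
        }
        return Math.min(total, clusterLimit);
    }
}
```

With traffic `{20, 5, 5}`, local limits admit only 20 requests (the busy machine rejects 10) even though the total of 30 never exceeds the intended cluster limit, while a cluster-wide limit of 30 admits all 30.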

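Given the uneven per-machine traffic and the difficulty of setting a cluster-wide QPS, we need a cluster flow control mode. The natural idea is to dedicate one server to counting the total number of calls and have every other instance ask that server whether a call may proceed. This is the most basic form of cluster flow control.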

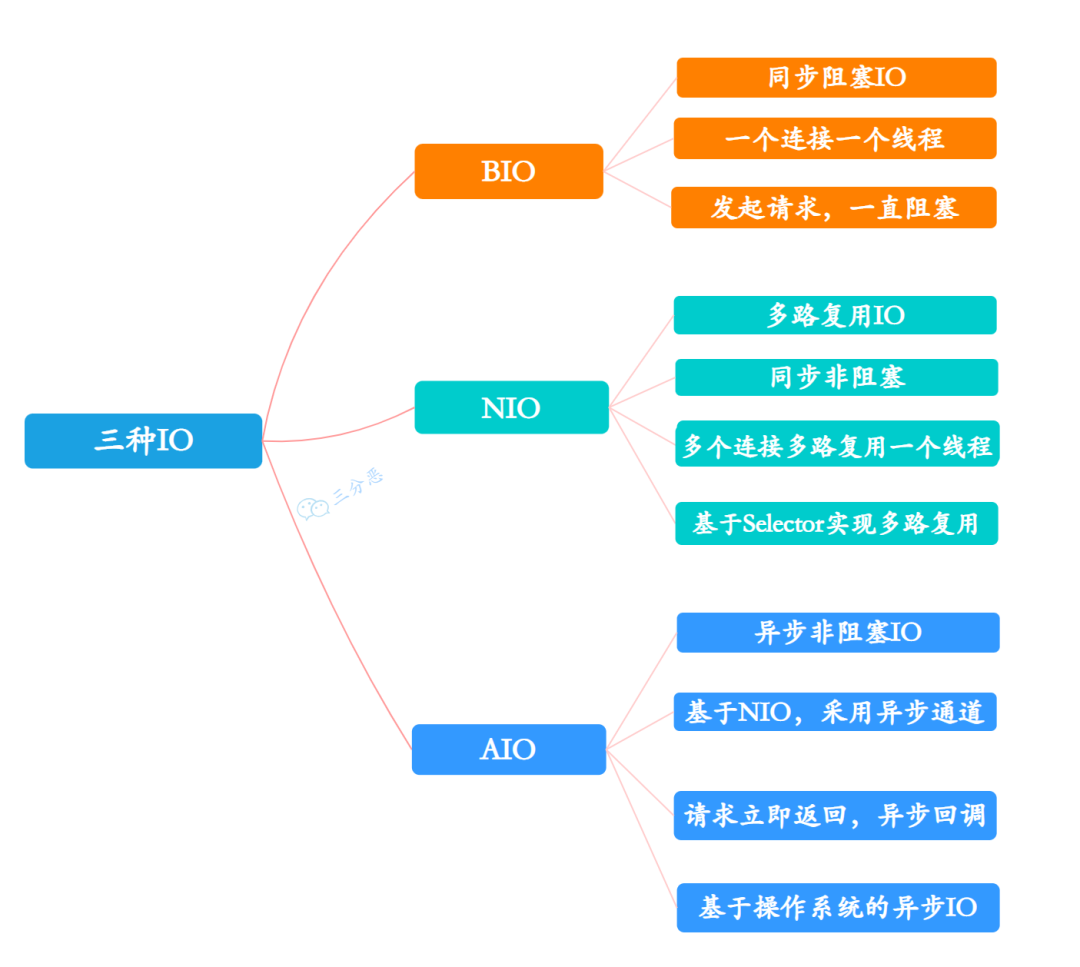

How it works

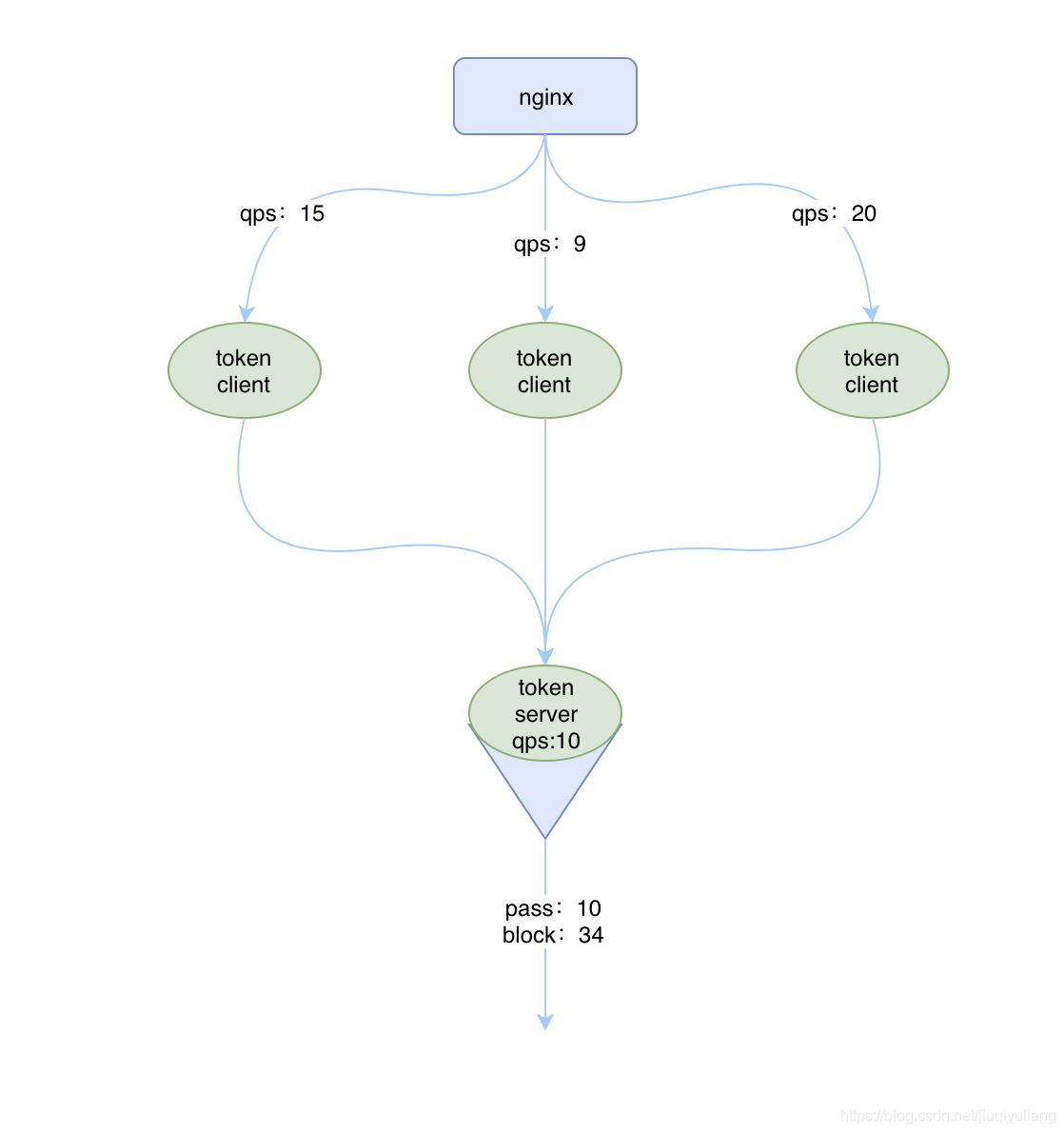

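Cluster flow control works on the same principle as single-machine flow control: both collect statistics such as QPS. The difference is that the single-machine version keeps these statistics inside each instance, while the cluster version keeps them in one dedicated instance.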

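This dedicated statistics instance is called Sentinel's token server. The other instances act as Sentinel token clients and request tokens from the token server. If a client obtains a token, the current QPS has not yet reached the cluster-wide threshold; otherwise the threshold has been reached and the request on the current instance is blocked, as shown in the figure.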

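Compared with single-machine flow control, cluster flow control involves two roles:

- Token Client: the cluster flow control client. It communicates with the Token Server it is assigned to and requests tokens; the server returns a result that decides whether the request is throttled.

- Token Server: the cluster flow control server. It handles requests from Token Clients and decides, according to the configured cluster rules, whether to issue a token (that is, whether to let the request pass).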

Single-machine flow control has only one role: each Sentinel instance effectively acts as its own token server.

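Note that the token server is a single point of failure in cluster flow control: if it goes down, cluster flow control degrades to single-machine flow control (for rules with `fallbackToLocalWhenFail` enabled, which is the default).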
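The token request flow can be modelled in a few lines. This is a toy, in-process sketch: `ToyTokenServer` and `requestToken` are hypothetical names, it uses a simple fixed one-second window, and it has no networking. Sentinel's real token server keeps sliding-window statistics per rule and serves clients over the network.

```java
/**
 * Toy model of a token server: one shared counter for the whole cluster.
 * Every token client calls requestToken(); a false result means "block this request".
 */
final class ToyTokenServer {
    private final int thresholdPerSecond;
    private long currentSecond = Long.MIN_VALUE;
    private int grantedInCurrentSecond = 0;

    ToyTokenServer(int thresholdPerSecond) {
        this.thresholdPerSecond = thresholdPerSecond;
    }

    /** Grants a token while the cluster-wide count for the current second is below the threshold. */
    synchronized boolean requestToken(long nowMillis) {
        long second = nowMillis / 1000;
        if (second != currentSecond) {
            // A new one-second window starts: reset the shared count.
            currentSecond = second;
            grantedInCurrentSecond = 0;
        }
        if (grantedInCurrentSecond < thresholdPerSecond) {
            grantedInCurrentSecond++;
            return true;  // token granted: the request may pass
        }
        return false;     // cluster threshold reached: the client blocks the request
    }
}
```

Because every client asks the same counter, a server with a threshold of 3 grants exactly 3 tokens per second in total, regardless of which instances send the requests.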

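Sentinel cluster flow control supports two kinds of rules, flow rules and hot-spot (parameter) rules, and two ways of computing the threshold:

- Global mode: the total QPS of a resource across the whole cluster must not exceed the threshold.

- Average per-machine mode: the configured threshold is the limit a single machine can handle, and the token server computes the total threshold from the number of connected clients. For example, in standalone mode with 3 clients connected to the token server and a per-machine threshold of 10, the cluster total is 30, and requests are limited against that total. Because the total is recomputed from the current number of connections, this mode suits environments where machines are frequently added or removed.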
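The two threshold types reduce to a small calculation. This is a sketch; the numeric codes 0 (average per-machine) and 1 (global) are assumed to mirror the `thresholdType` values in Sentinel's `ClusterRuleConstant`, so verify them against the version you use. `ClusterThreshold` itself is a hypothetical helper.

```java
/** Sketch: turn a rule's configured count into the cluster-wide limit. */
final class ClusterThreshold {
    static final int FLOW_THRESHOLD_AVG_LOCAL = 0; // count is per machine, scaled by connected clients
    static final int FLOW_THRESHOLD_GLOBAL = 1;    // count applies to the whole cluster as-is

    static double effectiveThreshold(int thresholdType, double count, int connectedClients) {
        switch (thresholdType) {
            case FLOW_THRESHOLD_AVG_LOCAL:
                return count * connectedClients;
            case FLOW_THRESHOLD_GLOBAL:
                return count;
            default:
                throw new IllegalArgumentException("Unknown threshold type: " + thresholdType);
        }
    }
}
```

`effectiveThreshold(FLOW_THRESHOLD_AVG_LOCAL, 10, 3)` gives 30.0, matching the example in the list; if a fourth client connects, the same rule yields 40.0 without any configuration change.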

Deployment modes

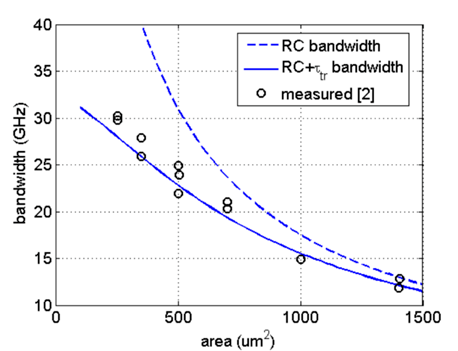

A token server can be deployed in two ways:

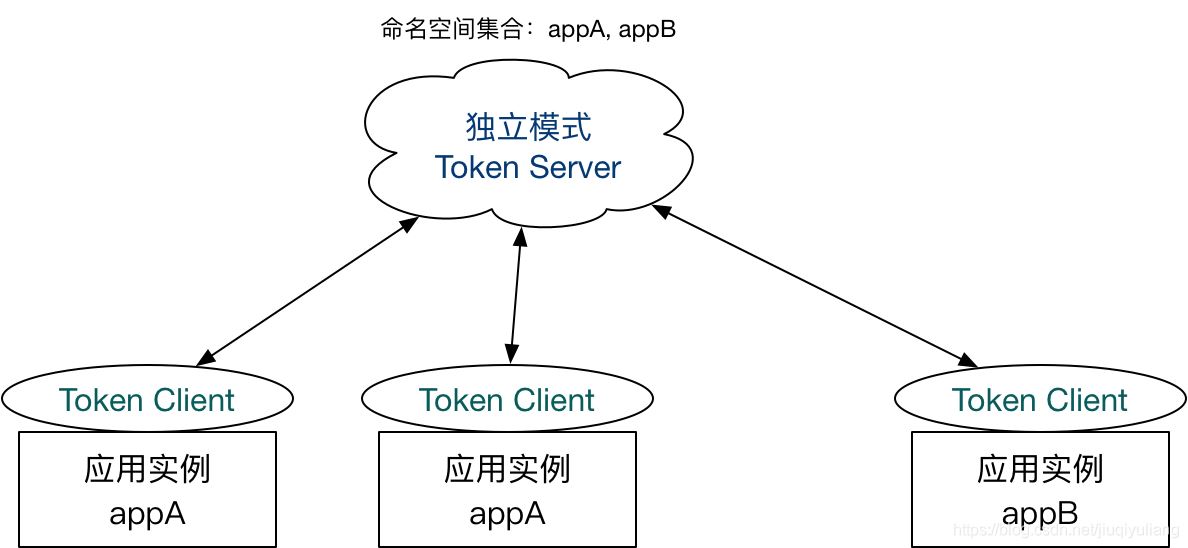

Standalone deployment: a separate token server process is started to handle token client requests, as shown in the figure.

If the standalone token server goes down, the token clients fall back to local flow control, i.e. single-machine flow control, so this deployment requires the token server to be highly available.

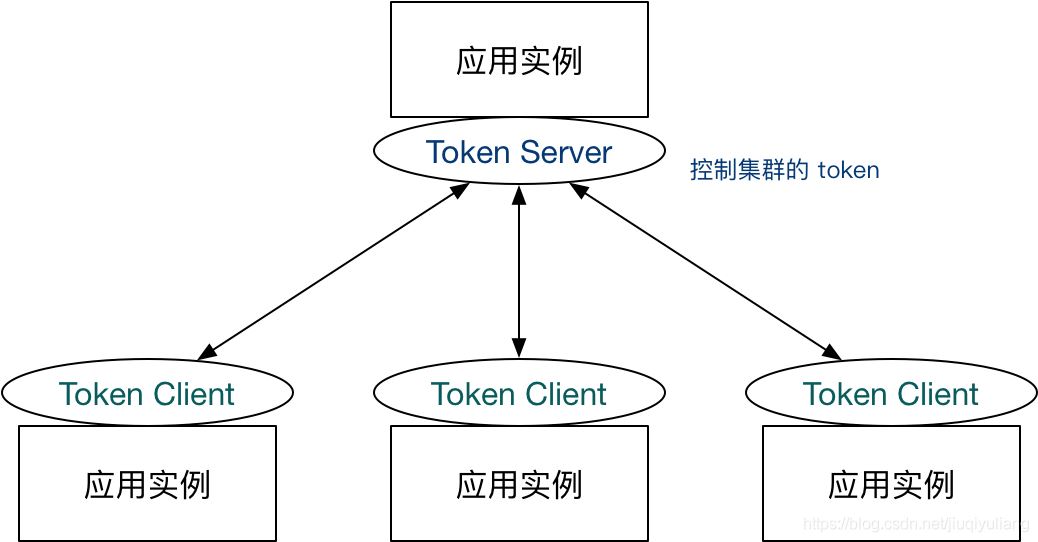

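Embedded deployment: the token server runs inside the same process as the service. In this mode all instances in the cluster are peers, and any instance can switch between token server and token client at any time, as shown in the figure.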

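In embedded mode, if the token server goes down, another token client can be promoted to token server. Likewise, if we no longer want the current token server to serve, we can pick another token client to take over and switch the current token server back to a client. Sentinel provides an HTTP API for switching an instance between token server and token client: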

http://192.168.175.1:8721/setClusterMode?mode=1

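Here `mode` 0 means client, 1 means server, and -1 turns cluster mode off. The port in the URL is the instance's Sentinel transport (command) port, not its HTTP service port.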
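The mode switch can also be scripted. Below is a minimal sketch using the JDK 11 `java.net.http.HttpClient`; `ClusterModeSwitcher` is a hypothetical helper, and the host and port are the example values from the URL above, to be replaced with the target instance's IP and Sentinel command port.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

/** Hypothetical helper that calls Sentinel's setClusterMode command on one instance. */
final class ClusterModeSwitcher {
    static final int MODE_CLIENT = 0;
    static final int MODE_SERVER = 1;
    static final int MODE_OFF = -1;

    /** Builds the command URL, rejecting mode values other than 0, 1 and -1. */
    static URI buildUri(String host, int commandPort, int mode) {
        if (mode != MODE_CLIENT && mode != MODE_SERVER && mode != MODE_OFF) {
            throw new IllegalArgumentException("mode must be 0, 1 or -1, got " + mode);
        }
        return URI.create("http://" + host + ":" + commandPort + "/setClusterMode?mode=" + mode);
    }

    /** Sends the GET request and returns the raw response body. */
    static String switchMode(String host, int commandPort, int mode) throws Exception {
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(buildUri(host, commandPort, mode)).GET().build();
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        return response.body();
    }
}
```

`ClusterModeSwitcher.switchMode("192.168.175.1", 8721, ClusterModeSwitcher.MODE_SERVER)` sends the same request as the URL above. Validating the mode locally avoids sending a value the command does not define.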

Embedded mode

Add the dependencies

```xml
<dependency>
    <groupId>com.alibaba.csp</groupId>
    <artifactId>sentinel-cluster-server-default</artifactId>
    <version>2.0.0-alpha</version>
</dependency>

<dependency>
    <groupId>com.alibaba.csp</groupId>
    <artifactId>sentinel-cluster-client-default</artifactId>
    <version>2.0.0-alpha</version>
</dependency>

<dependency>
    <groupId>com.alibaba.csp</groupId>
    <artifactId>sentinel-datasource-nacos</artifactId>
    <version>2.0.0-alpha</version>
</dependency>

<dependency>
    <groupId>com.google.code.gson</groupId>
    <artifactId>gson</artifactId>
    <version>2.10</version>
</dependency>
```

application.yml

```yaml
server:
  port: 8081

spring:
  datasource:
    driver-class-name: com.mysql.cj.jdbc.Driver
    url: jdbc:mysql://localhost:3306/test?useSSL=false&useUnicode=true&characterEncoding=utf-8&serverTimezone=GMT%2B8
    username: root
    password: gj001212
    type: com.alibaba.druid.pool.DruidDataSource
  cloud:
    nacos:
      discovery:
        server-addr: 192.168.146.1:8839
    sentinel:
      transport:
        dashboard: localhost:7777
        port: 8719
      eager: true
      web-context-unify: false
  application:
    name: cloudalibaba-sentinel-clusterServer

mybatis-plus:
  configuration:
    log-impl: org.apache.ibatis.logging.stdout.StdOutImpl # enable SQL logging
```

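Edit the VM options to start three instances on different ports. `-Dserver.port` overrides `port: 8081` from application.yml, `-Dproject.name` sets the Sentinel application name (the `APP_NAME` that `ClusterInitFunc` uses as the prefix of its Nacos dataIds), and `-Dcsp.sentinel.log.use.pid=true` adds the process ID to Sentinel's log file names so the instances on one host do not share log files.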

```text
-Dserver.port=9091 -Dproject.name=cloudalibaba-sentinel-clusterServer -Dcsp.sentinel.log.use.pid=true
-Dserver.port=9092 -Dproject.name=cloudalibaba-sentinel-clusterServer -Dcsp.sentinel.log.use.pid=true
-Dserver.port=9093 -Dproject.name=cloudalibaba-sentinel-clusterServer -Dcsp.sentinel.log.use.pid=true
```

Dashboard configuration

Log in to the Sentinel dashboard. Once the application has received some traffic, its cluster flow control page appears in the dashboard.

Click "Add Token Server".


Cluster flow control with Sentinel and Nacos

ClusterGroupEntity (entity class for parsing the cluster map)

```java
package com.liang.springcloud.alibaba.entity;

import java.util.Set;

/**
 * One entry of the cluster map stored in Nacos: a token server
 * (machineId, ip, port) and the machineIds of its token clients.
 *
 * @author Eric Zhao
 * @since 1.4.1
 */
public class ClusterGroupEntity {

    private String machineId;
    private String ip;
    private Integer port;
    private Set<String> clientSet;

    public String getMachineId() {
        return machineId;
    }

    public ClusterGroupEntity setMachineId(String machineId) {
        this.machineId = machineId;
        return this;
    }

    public String getIp() {
        return ip;
    }

    public ClusterGroupEntity setIp(String ip) {
        this.ip = ip;
        return this;
    }

    public Integer getPort() {
        return port;
    }

    public ClusterGroupEntity setPort(Integer port) {
        this.port = port;
        return this;
    }

    public Set<String> getClientSet() {
        return clientSet;
    }

    public ClusterGroupEntity setClientSet(Set<String> clientSet) {
        this.clientSet = clientSet;
        return this;
    }

    @Override
    public String toString() {
        return "ClusterGroupEntity{" +
                "machineId='" + machineId + '\'' +
                ", ip='" + ip + '\'' +
                ", port=" + port +
                ", clientSet=" + clientSet +
                '}';
    }
}
```

Constants

```java
/*
 * Copyright 1999-2018 Alibaba Group Holding Ltd.
 *
 * Licensed under the Apache License, Version 2.0 (the "License");
 * you may not use this file except in compliance with the License.
 * You may obtain a copy of the License at
 *
 *      http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */
package com.liang.springcloud.alibaba;

/**
 * @author Eric Zhao
 */
public final class Constants {

    public static final String FLOW_POSTFIX = "-flow-rules";
    public static final String PARAM_FLOW_POSTFIX = "-param-rules";
    public static final String SERVER_NAMESPACE_SET_POSTFIX = "-cs-namespace-set";
    public static final String CLIENT_CONFIG_POSTFIX = "-cluster-client-config";
    public static final String CLUSTER_MAP_POSTFIX = "-cluster-map";

    private Constants() {}
}
```
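Every Nacos dataId read by `ClusterInitFunc` is the application name plus one of these suffixes, so it helps to list the exact entries before creating them in Nacos. The helper below is hypothetical (not part of the original project); it assumes the app name comes from `-Dproject.name=cloudalibaba-sentinel-clusterServer` as in the VM options, and that the entries live in group `SENTINEL_GROUP`, as `ClusterInitFunc` configures. `-cs-namespace-set` is left out because `ClusterInitFunc` does not read it.

```java
import java.util.List;

/** Hypothetical helper: the Nacos dataIds ClusterInitFunc reads for one application. */
final class NacosDataIds {
    static final String GROUP_ID = "SENTINEL_GROUP"; // group used by ClusterInitFunc

    static List<String> forApp(String appName) {
        return List.of(
                appName + "-flow-rules",            // flow rules (FLOW_POSTFIX)
                appName + "-param-rules",           // hot-spot parameter rules (PARAM_FLOW_POSTFIX)
                appName + "-cluster-client-config", // token client config (CLIENT_CONFIG_POSTFIX)
                appName + "-cluster-map");          // server/client assignment (CLUSTER_MAP_POSTFIX)
    }
}
```

For this example, `forApp("cloudalibaba-sentinel-clusterServer")` yields entries such as `cloudalibaba-sentinel-clusterServer-cluster-map`, which is where the cluster map in the format shown in `ClusterInitFunc` goes.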

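Under the resources folder, create the directory META-INF/services (note the plural "services") and, inside it, a file named com.alibaba.csp.sentinel.init.InitFunc. The file holds the fully qualified name of the class that implements the InitFunc interface. Sentinel loads this class through SPI when it initializes; with `eager: true` in application.yml, initialization happens at application startup rather than on the first request. The file content is: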

```text
com.liang.springcloud.alibaba.init.ClusterInitFunc
```

ClusterInitFunc

```java
package com.liang.springcloud.alibaba.init;

import java.util.List;
import java.util.Objects;
import java.util.Optional;

import com.alibaba.csp.sentinel.cluster.ClusterStateManager;
import com.alibaba.csp.sentinel.cluster.client.config.ClusterClientAssignConfig;
import com.alibaba.csp.sentinel.cluster.client.config.ClusterClientConfig;
import com.alibaba.csp.sentinel.cluster.client.config.ClusterClientConfigManager;
import com.alibaba.csp.sentinel.cluster.flow.rule.ClusterFlowRuleManager;
import com.alibaba.csp.sentinel.cluster.flow.rule.ClusterParamFlowRuleManager;
import com.alibaba.csp.sentinel.cluster.server.config.ClusterServerConfigManager;
import com.alibaba.csp.sentinel.cluster.server.config.ServerTransportConfig;
import com.alibaba.csp.sentinel.datasource.ReadableDataSource;
import com.alibaba.csp.sentinel.datasource.nacos.NacosDataSource;
import com.alibaba.csp.sentinel.init.InitFunc;
import com.alibaba.csp.sentinel.slots.block.flow.FlowRule;
import com.alibaba.csp.sentinel.slots.block.flow.FlowRuleManager;
import com.alibaba.csp.sentinel.slots.block.flow.param.ParamFlowRule;
import com.alibaba.csp.sentinel.slots.block.flow.param.ParamFlowRuleManager;
import com.alibaba.csp.sentinel.transport.config.TransportConfig;
import com.alibaba.csp.sentinel.util.AppNameUtil;
import com.alibaba.csp.sentinel.util.HostNameUtil;
// fastjson (com.alibaba:fastjson) must be on the classpath; the pom above declares gson, not fastjson.
import com.alibaba.fastjson.JSON;
import com.alibaba.fastjson.TypeReference;
import com.liang.springcloud.alibaba.Constants;
import com.liang.springcloud.alibaba.entity.ClusterGroupEntity;

/**
 * Registers the Nacos data sources used by embedded cluster flow control.
 * Loaded by Sentinel through the InitFunc SPI file in META-INF/services.
 *
 * @author Eric Zhao
 */
public class ClusterInitFunc implements InitFunc {

    private static final String SEPARATOR = "@";

    // Sentinel application name (set via -Dproject.name); prefix of every dataId below
    private static final String APP_NAME = AppNameUtil.getAppName();

    // Nacos server that stores the rules and cluster configs
    private final String remoteAddress = "localhost:8839";
    private final String groupId = "SENTINEL_GROUP";

    private final String flowDataId = APP_NAME + Constants.FLOW_POSTFIX;
    private final String paramDataId = APP_NAME + Constants.PARAM_FLOW_POSTFIX;
    private final String configDataId = APP_NAME + Constants.CLIENT_CONFIG_POSTFIX;
    private final String clusterMapDataId = APP_NAME + Constants.CLUSTER_MAP_POSTFIX;

    @Override
    public void init() throws Exception {
        // Register client dynamic rule data sources.
        initDynamicRuleProperty();

        // Token client: common config.
        initClientConfigProperty();
        // Token client: assigned token server, extracted from the cluster map.
        initClientServerAssignProperty();

        // Token server: rule data source supplier, one data source per namespace.
        registerClusterRuleSupplier();
        // Token server: transport config (port), extracted from the cluster map.
        initServerTransportConfigProperty();

        // Cluster mode (server / client / not started), extracted from the cluster map.
        initStateProperty();
    }

    private void initDynamicRuleProperty() {
        ReadableDataSource<String, List<FlowRule>> ruleSource = new NacosDataSource<>(remoteAddress, groupId,
                flowDataId, source -> JSON.parseObject(source, new TypeReference<List<FlowRule>>() {}));
        FlowRuleManager.register2Property(ruleSource.getProperty());

        ReadableDataSource<String, List<ParamFlowRule>> paramRuleSource = new NacosDataSource<>(remoteAddress, groupId,
                paramDataId, source -> JSON.parseObject(source, new TypeReference<List<ParamFlowRule>>() {}));
        ParamFlowRuleManager.register2Property(paramRuleSource.getProperty());
    }

    private void initClientConfigProperty() {
        ReadableDataSource<String, ClusterClientConfig> clientConfigDs = new NacosDataSource<>(remoteAddress, groupId,
                configDataId, source -> JSON.parseObject(source, new TypeReference<ClusterClientConfig>() {}));
        ClusterClientConfigManager.registerClientConfigProperty(clientConfigDs.getProperty());
    }

    private void initServerTransportConfigProperty() {
        ReadableDataSource<String, ServerTransportConfig> serverTransportDs = new NacosDataSource<>(remoteAddress, groupId,
                clusterMapDataId, source -> {
                    List<ClusterGroupEntity> groupList = JSON.parseObject(source, new TypeReference<List<ClusterGroupEntity>>() {});
                    return Optional.ofNullable(groupList)
                            .flatMap(this::extractServerTransportConfig)
                            .orElse(null);
                });
        ClusterServerConfigManager.registerServerTransportProperty(serverTransportDs.getProperty());
    }

    private void registerClusterRuleSupplier() {
        // Cluster flow rules; dataId format: ${namespace}-flow-rules
        ClusterFlowRuleManager.setPropertySupplier(namespace -> {
            ReadableDataSource<String, List<FlowRule>> ds = new NacosDataSource<>(remoteAddress, groupId,
                    namespace + Constants.FLOW_POSTFIX, source -> JSON.parseObject(source, new TypeReference<List<FlowRule>>() {}));
            return ds.getProperty();
        });
        // Cluster parameter flow rules; dataId format: ${namespace}-param-rules
        ClusterParamFlowRuleManager.setPropertySupplier(namespace -> {
            ReadableDataSource<String, List<ParamFlowRule>> ds = new NacosDataSource<>(remoteAddress, groupId,
                    namespace + Constants.PARAM_FLOW_POSTFIX, source -> JSON.parseObject(source, new TypeReference<List<ParamFlowRule>>() {}));
            return ds.getProperty();
        });
    }

    private void initClientServerAssignProperty() {
        // Cluster map format:
        // [{"clientSet":["112.12.88.66@8729","112.12.88.67@8727"],"ip":"112.12.88.68","machineId":"112.12.88.68@8728","port":11111}]
        // machineId: <ip@commandPort>, where commandPort is the port exposed to the Sentinel dashboard (transport module)
        ReadableDataSource<String, ClusterClientAssignConfig> clientAssignDs = new NacosDataSource<>(remoteAddress, groupId,
                clusterMapDataId, source -> {
                    List<ClusterGroupEntity> groupList = JSON.parseObject(source, new TypeReference<List<ClusterGroupEntity>>() {});
                    return Optional.ofNullable(groupList)
                            .flatMap(this::extractClientAssignment)
                            .orElse(null);
                });
        ClusterClientConfigManager.registerServerAssignProperty(clientAssignDs.getProperty());
    }

    private void initStateProperty() {
        // Same cluster map as above; the mode depends on where the current machineId appears in it.
        ReadableDataSource<String, Integer> clusterModeDs = new NacosDataSource<>(remoteAddress, groupId,
                clusterMapDataId, source -> {
                    List<ClusterGroupEntity> groupList = JSON.parseObject(source, new TypeReference<List<ClusterGroupEntity>>() {});
                    return Optional.ofNullable(groupList)
                            .map(this::extractMode)
                            .orElse(ClusterStateManager.CLUSTER_NOT_STARTED);
                });
        ClusterStateManager.registerProperty(clusterModeDs.getProperty());
    }

    private int extractMode(List<ClusterGroupEntity> groupList) {
        // If any server group's machineId matches the current machine, it is a token server.
        if (groupList.stream().anyMatch(this::machineEqual)) {
            return ClusterStateManager.CLUSTER_SERVER;
        }
        // If the current machine belongs to any token server group, it is a token client.
        // Otherwise it is unassigned and should be set to NOT_STARTED.
        String currentMachineId = getCurrentMachineId();
        boolean canBeClient = groupList.stream()
                .map(ClusterGroupEntity::getClientSet)
                .filter(Objects::nonNull)                  // skip groups without a clientSet
                .flatMap(clientSet -> clientSet.stream())
                .anyMatch(currentMachineId::equals);       // null entries never match
        return canBeClient ? ClusterStateManager.CLUSTER_CLIENT : ClusterStateManager.CLUSTER_NOT_STARTED;
    }

    private Optional<ServerTransportConfig> extractServerTransportConfig(List<ClusterGroupEntity> groupList) {
        return groupList.stream()
                .filter(this::machineEqual)
                .findAny()
                .map(e -> new ServerTransportConfig().setPort(e.getPort()).setIdleSeconds(600));
    }

    private Optional<ClusterClientAssignConfig> extractClientAssignment(List<ClusterGroupEntity> groupList) {
        // A token server does not need a client assignment.
        if (groupList.stream().anyMatch(this::machineEqual)) {
            return Optional.empty();
        }
        // Build the client assign config from the group whose clientSet contains this machine.
        String currentMachineId = getCurrentMachineId();
        for (ClusterGroupEntity group : groupList) {
            if (group.getClientSet() != null && group.getClientSet().contains(currentMachineId)) {
                return Optional.of(new ClusterClientAssignConfig(group.getIp(), group.getPort()));
            }
        }
        return Optional.empty();
    }

    private boolean machineEqual(/*@Valid*/ ClusterGroupEntity group) {
        return getCurrentMachineId().equals(group.getMachineId());
    }

    private String getCurrentMachineId() {
        // Format: <ip>@<transport command port>. Note: this may not work well in container-based environments.
        return HostNameUtil.getIp() + SEPARATOR + TransportConfig.getRuntimePort();
    }
}
```
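To see how one shared cluster map turns each instance into a server, a client, or neither, here is a stand-alone sketch of the decision `extractMode` makes, runnable without Sentinel on the classpath. `ClusterRoleDemo` and its `Group` type are hypothetical stand-ins for `ClusterGroupEntity`, and the mode constants copy the values given for `setClusterMode` (1 server, 0 client, -1 off). As in the code comment, every machineId uses the instance's transport command port, not its HTTP port.

```java
import java.util.List;
import java.util.Objects;
import java.util.Set;

/** Stand-alone sketch of ClusterInitFunc.extractMode(...) using a minimal Group type. */
final class ClusterRoleDemo {
    static final int CLUSTER_CLIENT = 0;
    static final int CLUSTER_SERVER = 1;
    static final int CLUSTER_NOT_STARTED = -1;

    /** One cluster-map entry: the token server's machineId and its clients' machineIds. */
    static final class Group {
        final String machineId;
        final Set<String> clientSet;

        Group(String machineId, Set<String> clientSet) {
            this.machineId = machineId;
            this.clientSet = clientSet;
        }
    }

    static int resolveMode(List<Group> groups, String currentMachineId) {
        // Listed as a group's machineId: this instance is the token server.
        if (groups.stream().anyMatch(g -> currentMachineId.equals(g.machineId))) {
            return CLUSTER_SERVER;
        }
        // Listed in some group's clientSet: this instance is a token client.
        boolean isClient = groups.stream()
                .map(g -> g.clientSet)
                .filter(Objects::nonNull)
                .flatMap(Set::stream)
                .anyMatch(currentMachineId::equals);
        return isClient ? CLUSTER_CLIENT : CLUSTER_NOT_STARTED;
    }
}
```

With the sample map from the code comment, `112.12.88.68@8728` resolves to 1 (server), `112.12.88.66@8729` to 0 (client), and any machine not listed to -1, which is why changing only the cluster map in Nacos is enough to reassign roles.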