Deploying the Prerequisite Middleware for the Microservices

1. MySQL Master-Slave Replication

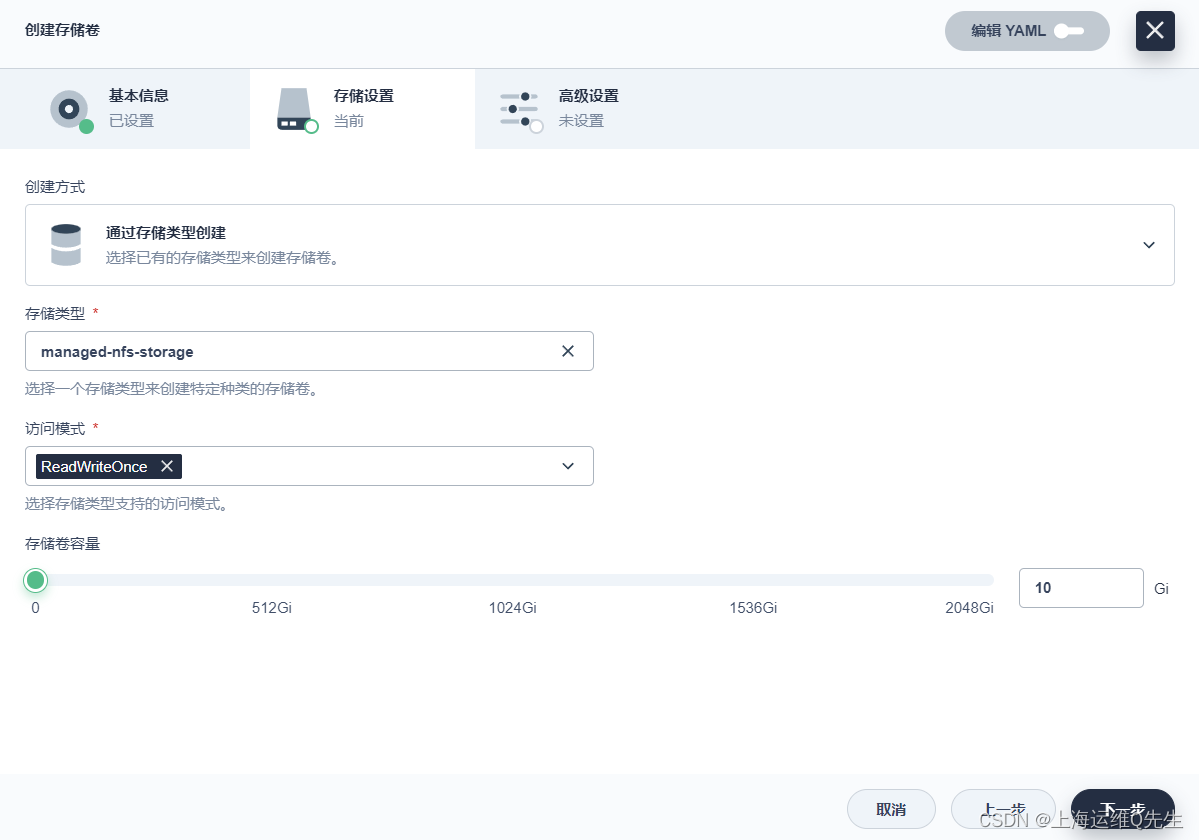

1.1 Create Persistent Storage

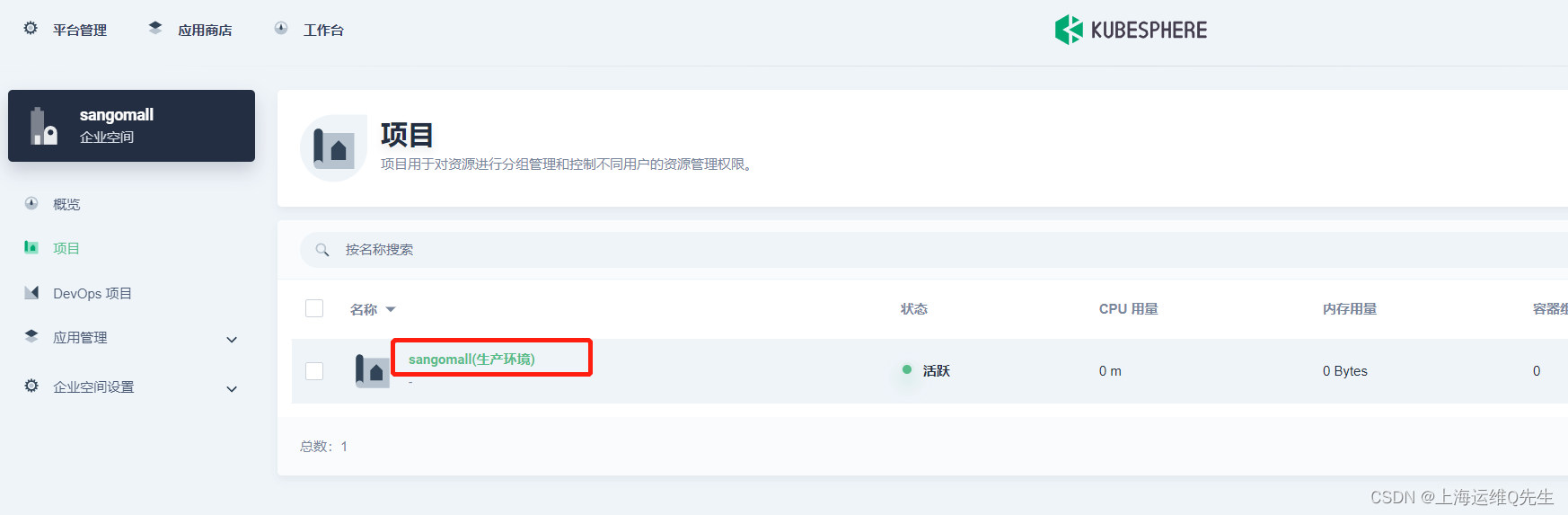

Log in with the project-admin account and enter the sangomall project.

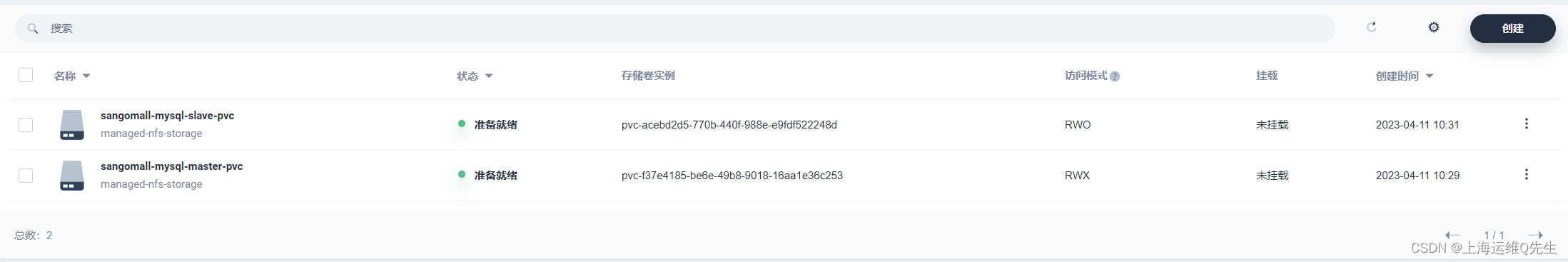

Under [Storage],[Volumes], create a PVC named sangomall-mysql-master-pvc.

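For how the StorageClass was created, see section 2.1 of the chapter on deploying KubeSphere in a K8s cluster.

The StorageClass is visible from the K8s cluster: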

root@ks-master:~/yaml# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

local (default) openebs.io/local Delete WaitForFirstConsumer false 19d

managed-nfs-storage (default) fuseim.pri/ifs Delete Immediate false 10m

Choose ReadWriteMany if the volume must be mounted from multiple nodes.

[Next],[Create], and the PVC is created.

Repeat the same steps to create sangomall-mysql-slave-pvc.

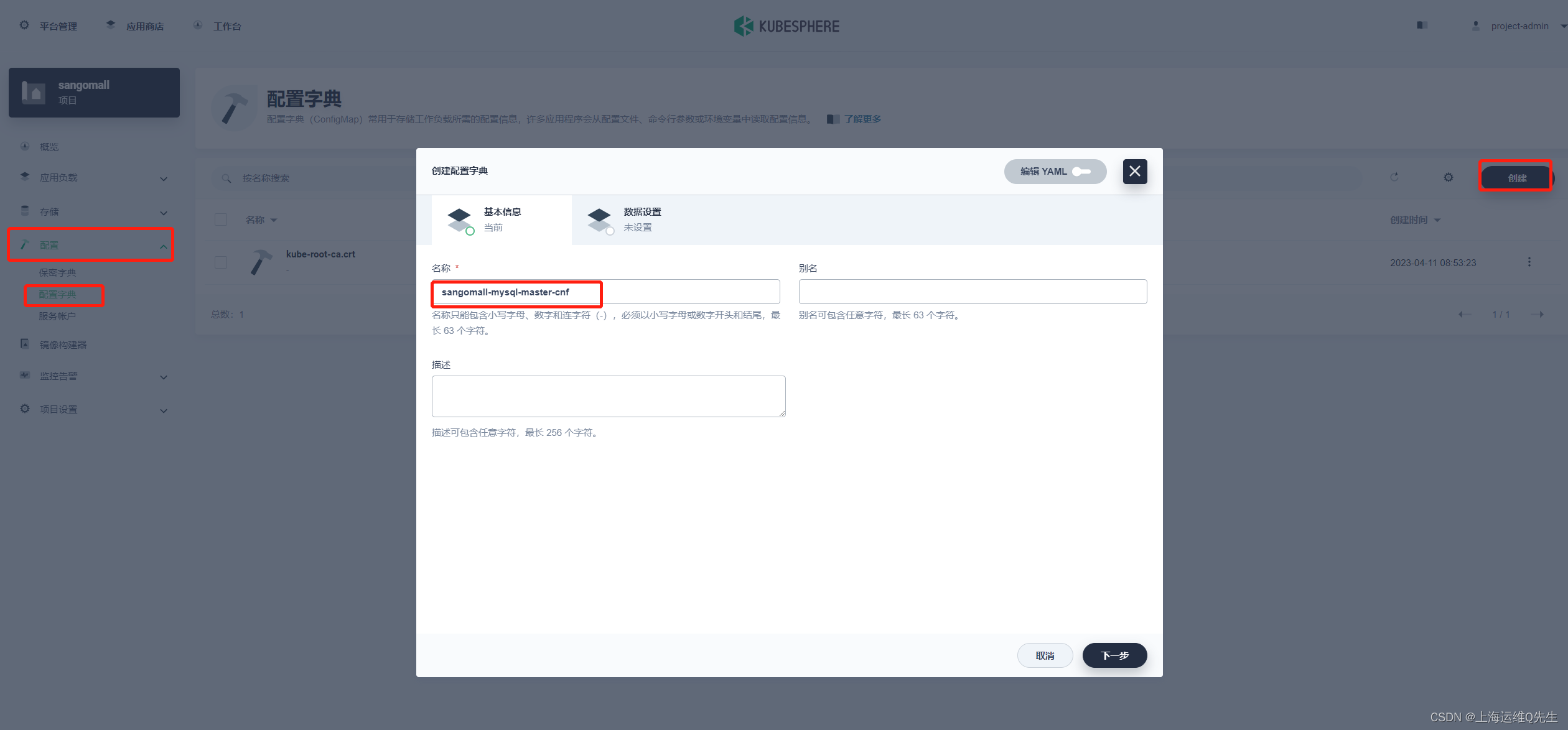

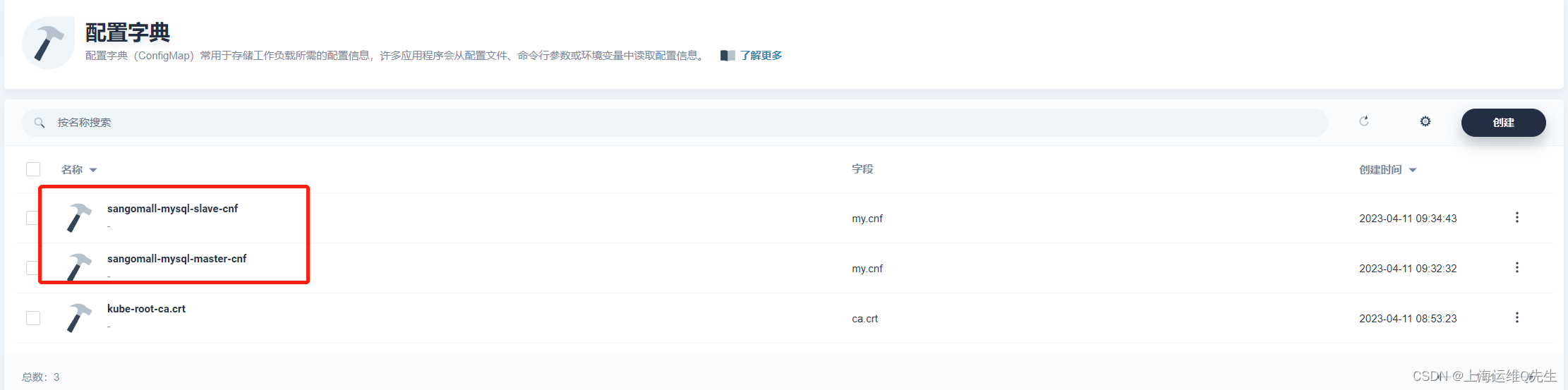

1.2 Configuration Files

1.2.1 Master Node

Create a Secret named sangomall-mysql-master-cnf.

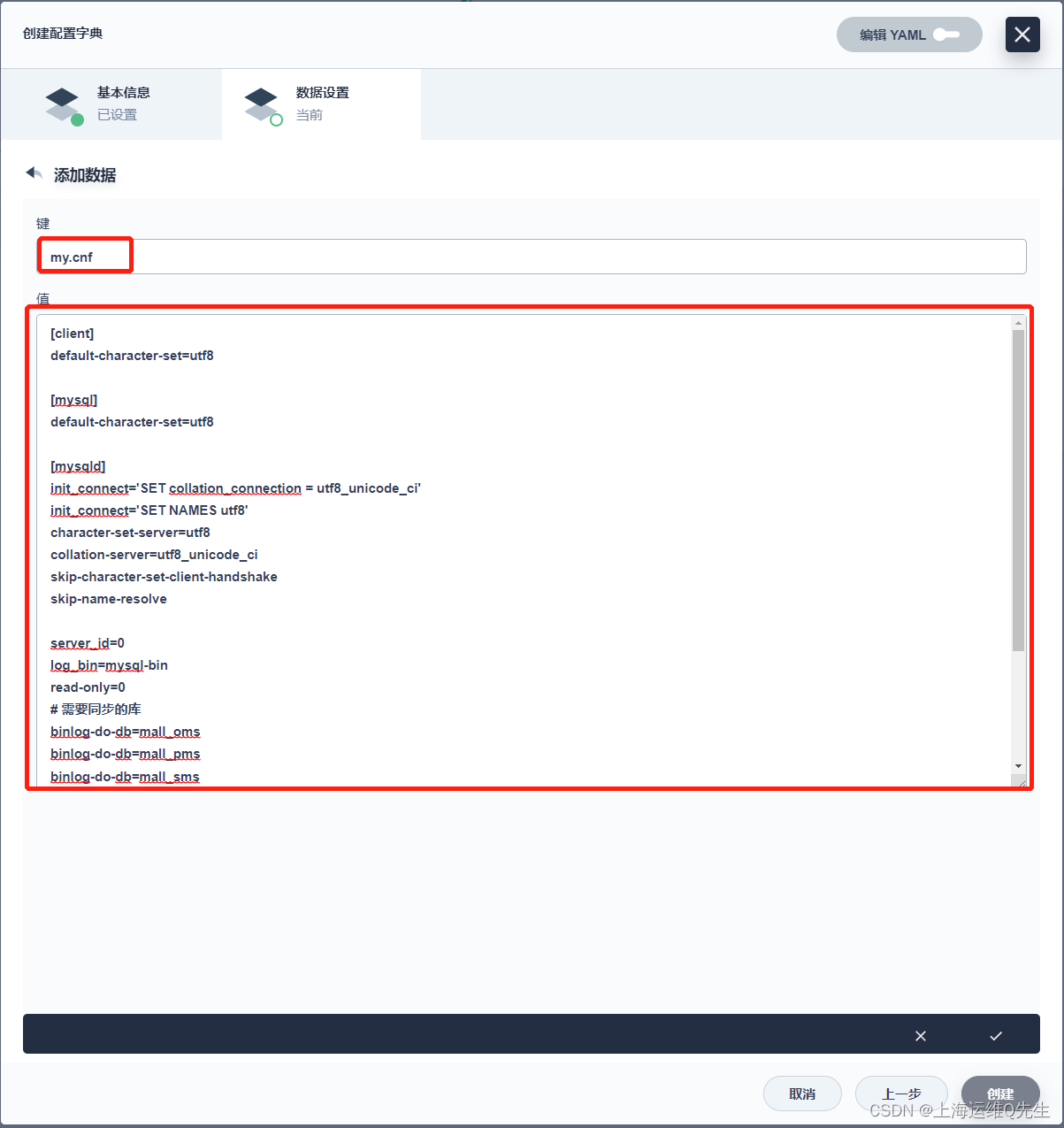

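[Add Data]

skip-name-resolve must be included. Without it, MySQL performs a reverse DNS lookup on every client's address, and connecting to MySQL becomes slow.

Use my.cnf as the key and the configuration below as the value, then [Next],[Create].

In a master-slave topology, every MySQL instance must be given its own unique server-id. The default is 0. With server-id=0, a master still writes the binary log but refuses all connections from slaves, and a slave refuses to connect to other instances.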

[client]

default-character-set=utf8

[mysql]

default-character-set=utf8

[mysqld]

init_connect='SET collation_connection = utf8_unicode_ci'

init_connect='SET NAMES utf8'

character-set-server=utf8

collation-server=utf8_unicode_ci

skip-character-set-client-handshake

skip-name-resolve

skip-ssl

server_id=1

log_bin=mysql-bin

read-only=0

binlog-do-db=mall_oms

binlog-do-db=mall_pms

binlog-do-db=mall_sms

binlog-do-db=mall_ums

binlog-do-db=mall_wms

replicate-ignore-db=mysql

replicate-ignore-db=sys

replicate-ignore-db=information_schema

replicate-ignore-db=performance_schema

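1.2.2 Slave Node

Create [sangomall-mysql-slave-cnf] the same way as for the master, with the key [my.cnf], then [Next],[Create]. The content differs from the master's only in server_id=2 and read-only=1.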

[client]

default-character-set=utf8

[mysql]

default-character-set=utf8

[mysqld]

init_connect='SET collation_connection = utf8_unicode_ci'

init_connect='SET NAMES utf8'

character-set-server=utf8

collation-server=utf8_unicode_ci

skip-character-set-client-handshake

skip-name-resolve

skip-ssl

server_id=2

log_bin=mysql-bin

read-only=1

binlog-do-db=mall_oms

binlog-do-db=mall_pms

binlog-do-db=mall_sms

binlog-do-db=mall_ums

binlog-do-db=mall_wms

replicate-ignore-db=mysql

replicate-ignore-db=sys

replicate-ignore-db=information_schema

replicate-ignore-db=performance_schema
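The two files differ only in server_id and read-only, and the server-id rule above is the one that silently breaks replication when it is missed. The following is an illustrative sketch in Python that uses only the standard library. The names mysqld_options and check_server_ids are hypothetical helpers, not part of MySQL or KubeSphere. The check reads the [mysqld] section of each my.cnf and reports a server_id that is missing, 0, or shared with another instance. It assumes the plain key=value layout used above. It also follows the MySQL option-file behavior where a later occurrence of an option overrides an earlier one.

```python
def mysqld_options(cnf_text: str) -> dict[str, list[str]]:
    """Collect key=value pairs from the [mysqld] section; repeated keys keep every value."""
    options: dict[str, list[str]] = {}
    section = None
    for raw in cnf_text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue
        if line.startswith("[") and line.endswith("]"):
            section = line[1:-1].strip()
            continue
        if section != "mysqld":
            continue
        key, _, value = line.partition("=")
        # MySQL treats '-' and '_' in option names as the same character.
        options.setdefault(key.strip().replace("-", "_"), []).append(value.strip())
    return options


def check_server_ids(configs: dict[str, str]) -> list[str]:
    """Report instances whose server_id is 0/missing or shared with another instance."""
    problems = []
    seen: dict[int, str] = {}
    for name, text in configs.items():
        # The last occurrence wins in an option file; a missing server_id means 0 on 5.7.
        server_id = int(mysqld_options(text).get("server_id", ["0"])[-1])
        if server_id == 0:
            problems.append(f"{name}: server_id is 0, replication connections are refused")
        elif server_id in seen:
            problems.append(f"{name}: server_id {server_id} is already used by {seen[server_id]}")
        else:
            seen[server_id] = name
    return problems


# Trimmed copies of the two my.cnf files above.
MASTER_CNF = "[mysqld]\nskip-name-resolve\nserver_id=1\nlog_bin=mysql-bin\nread-only=0\n"
SLAVE_CNF = "[mysqld]\nskip-name-resolve\nserver_id=2\nlog_bin=mysql-bin\nread-only=1\n"

print(check_server_ids({"master": MASTER_CNF, "slave": SLAVE_CNF}))  # []
```

An empty list means the pair satisfies the rule. Copying the master file to the slave without changing server_id would produce one "already used" entry.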

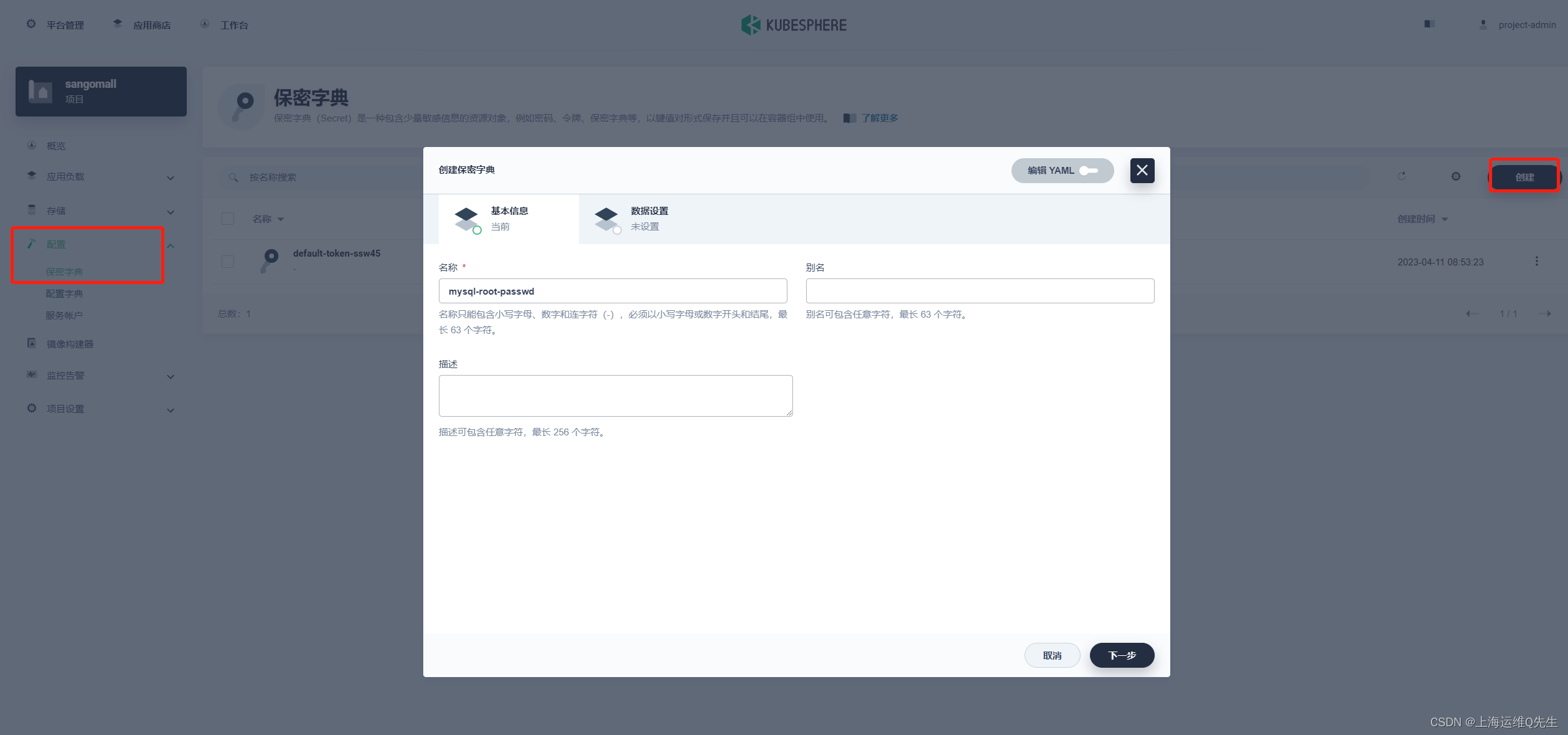

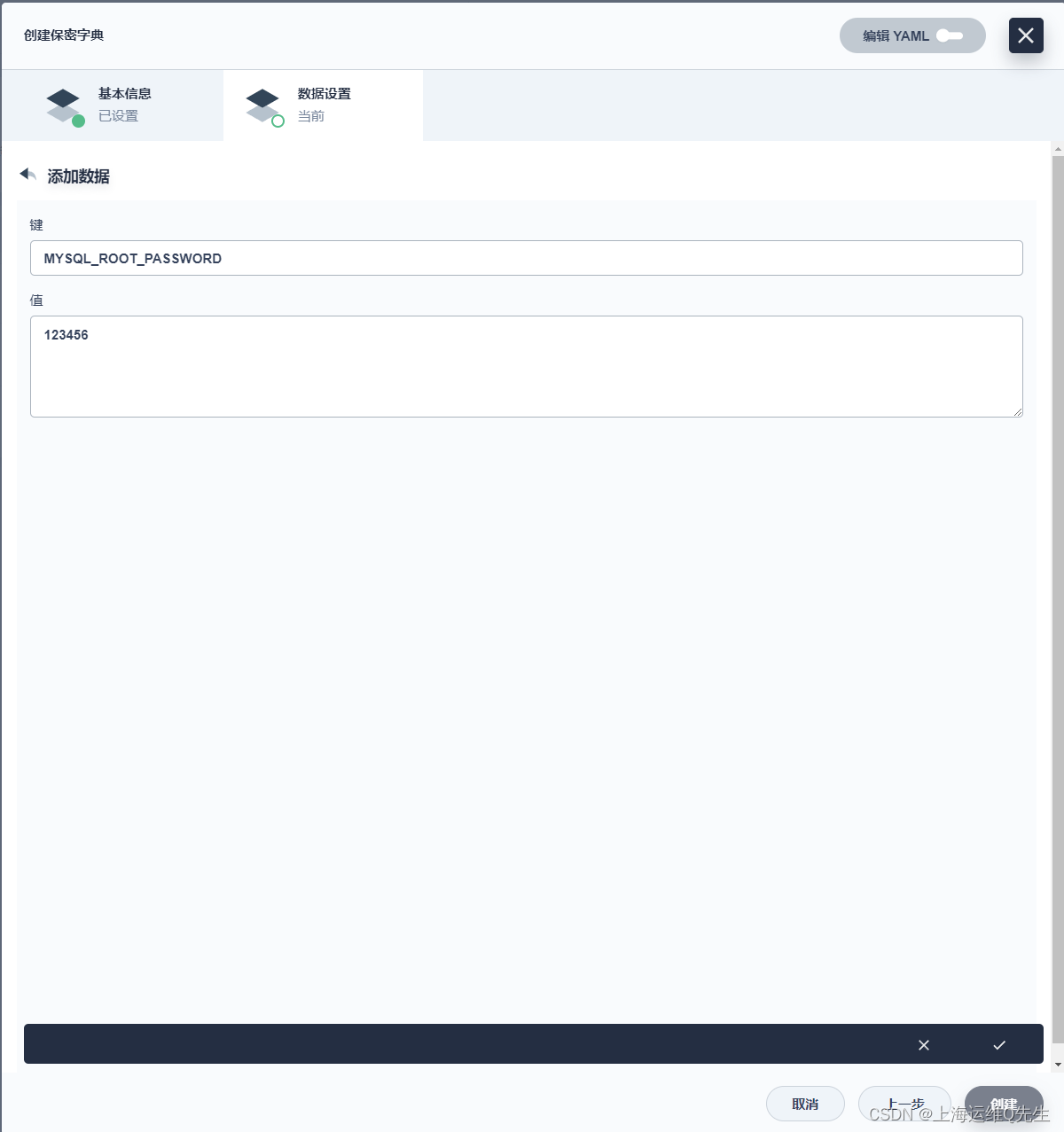

1.3 Add the root Password

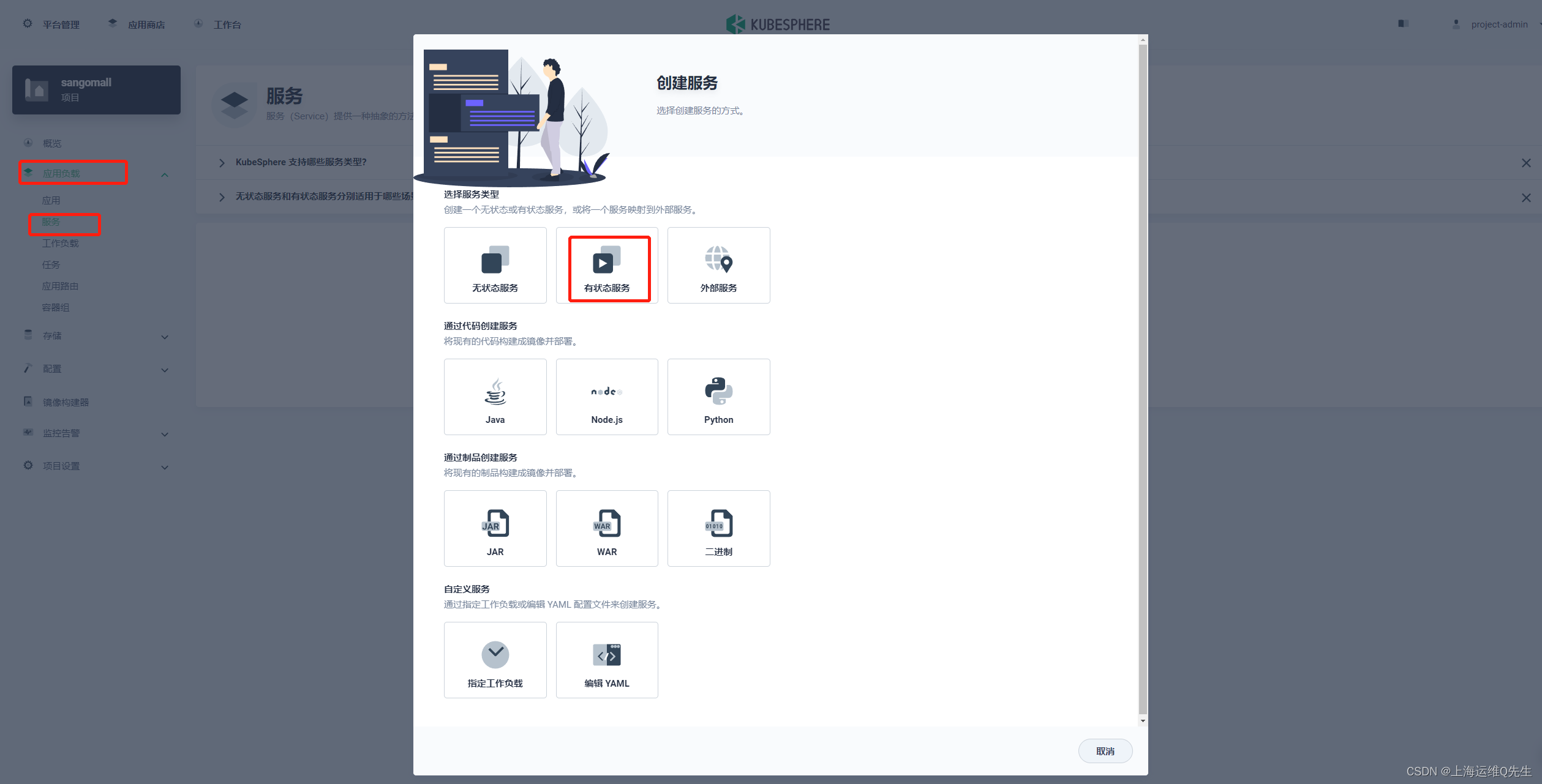

1.4 Deploy MySQL

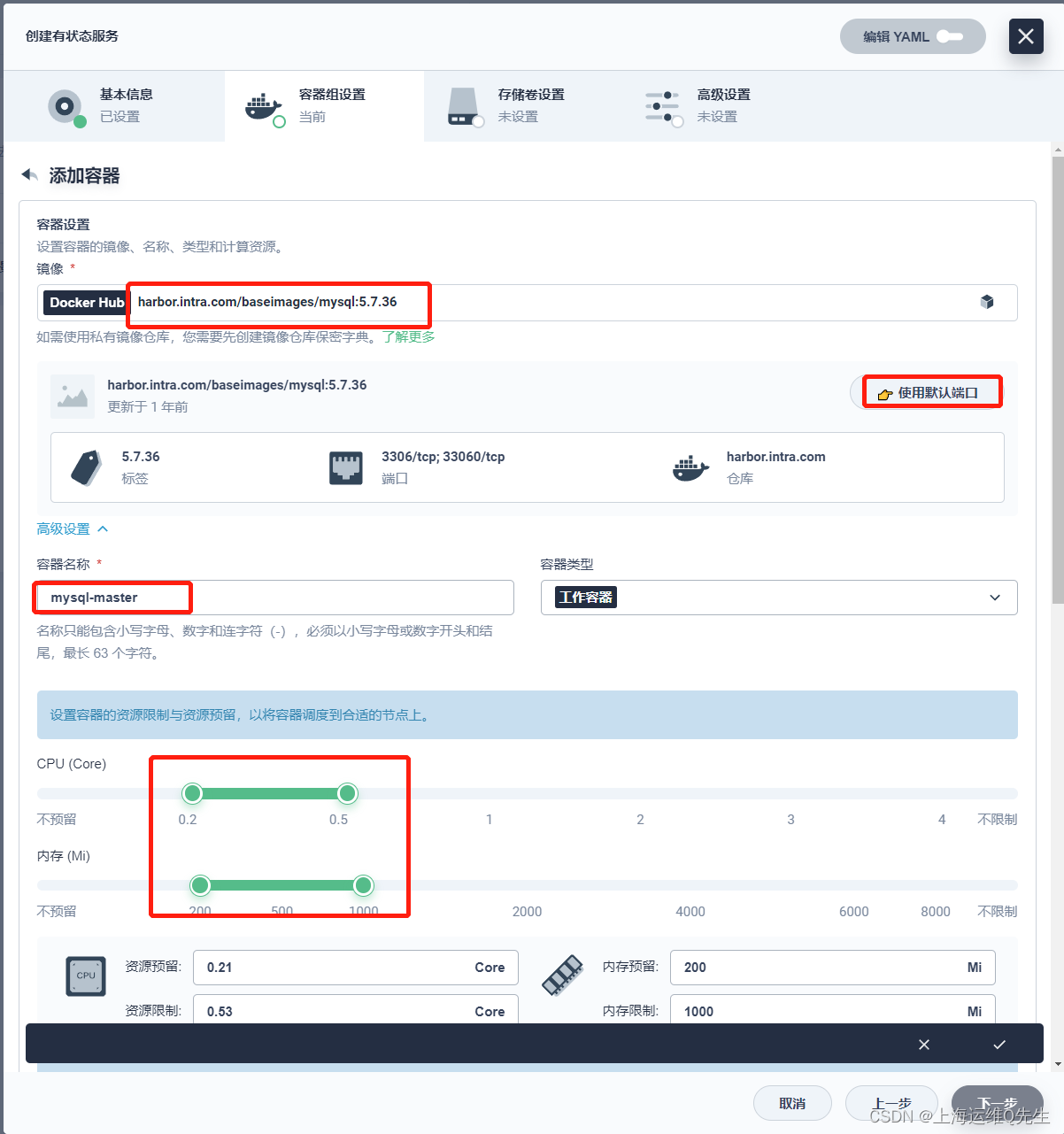

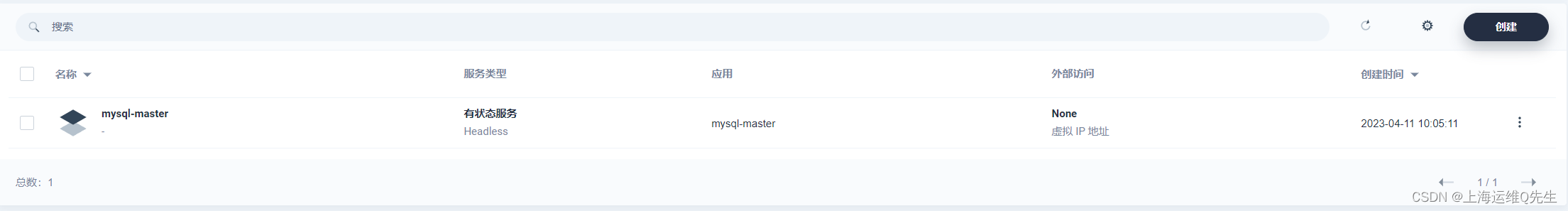

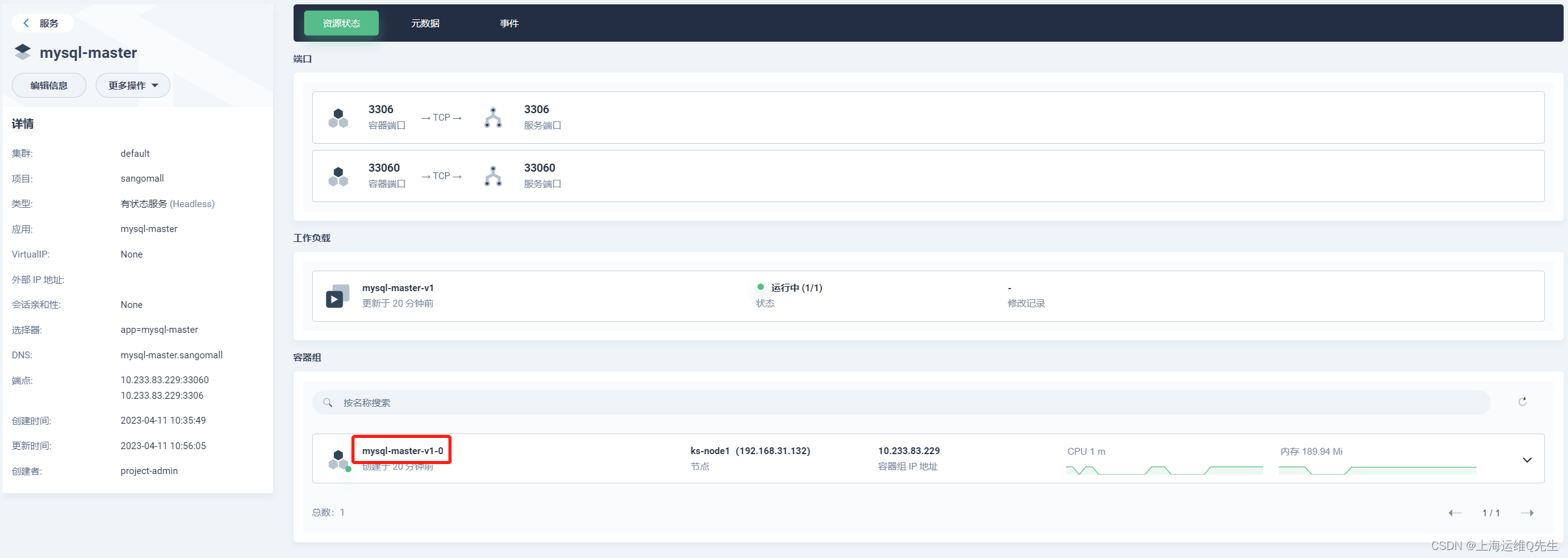

1.4.1 Deploy the Master

Create a [Stateful Service] named mysql-master.

Select the image from Harbor:

harbor.intra.com/baseimages/mysql:5.7.36

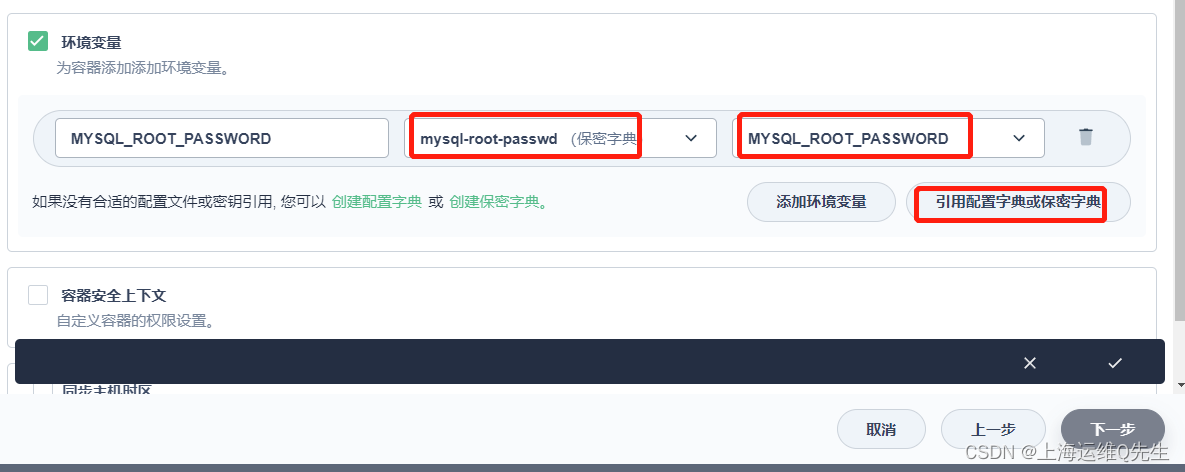

Reference the Secret holding the root password (see 1.3).

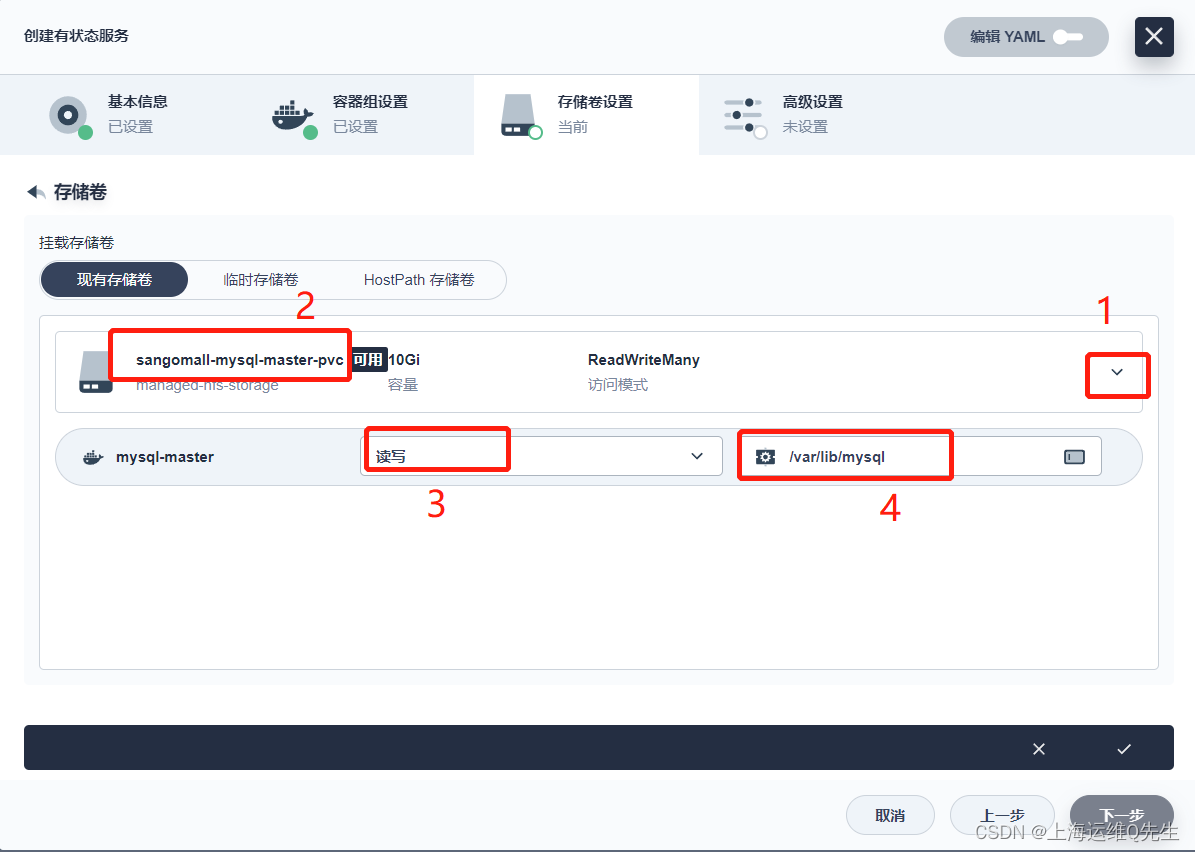

[Next], then [Mount Volume]: mount sangomall-mysql-master-pvc at /var/lib/mysql

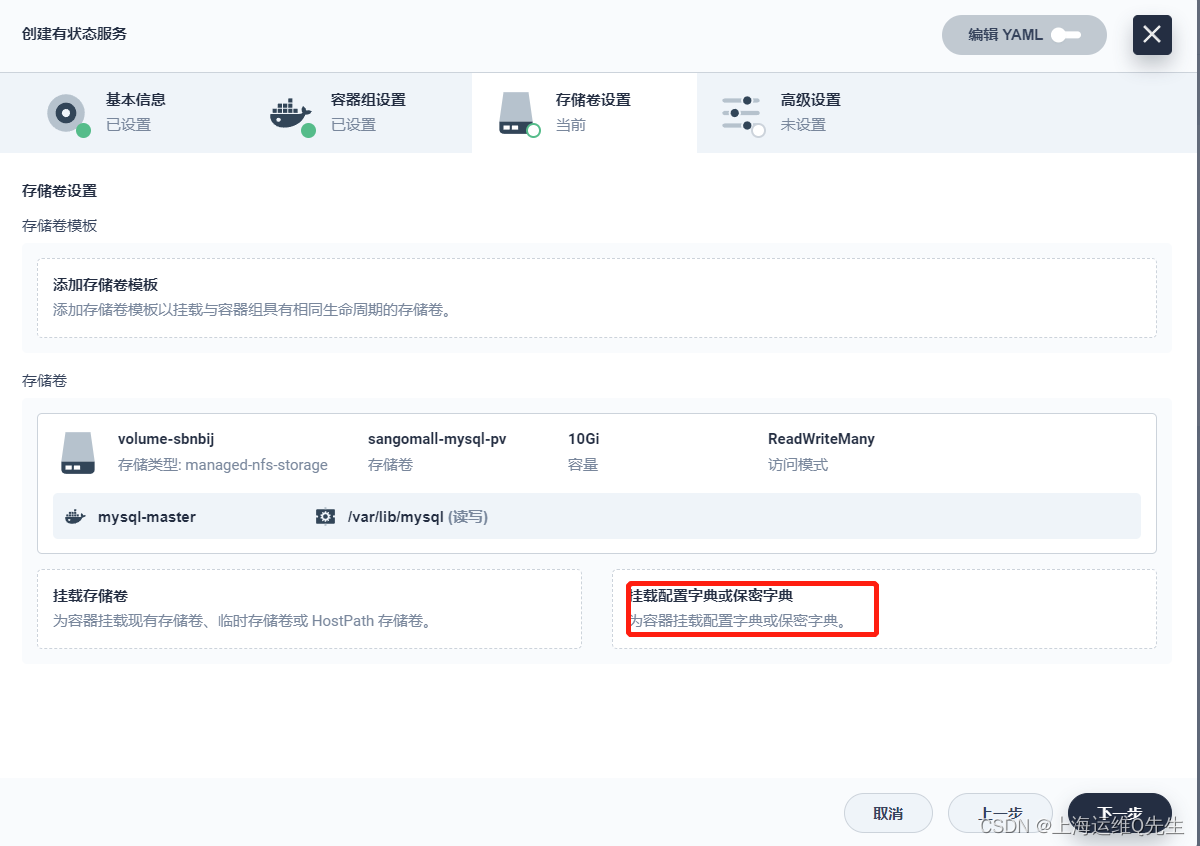

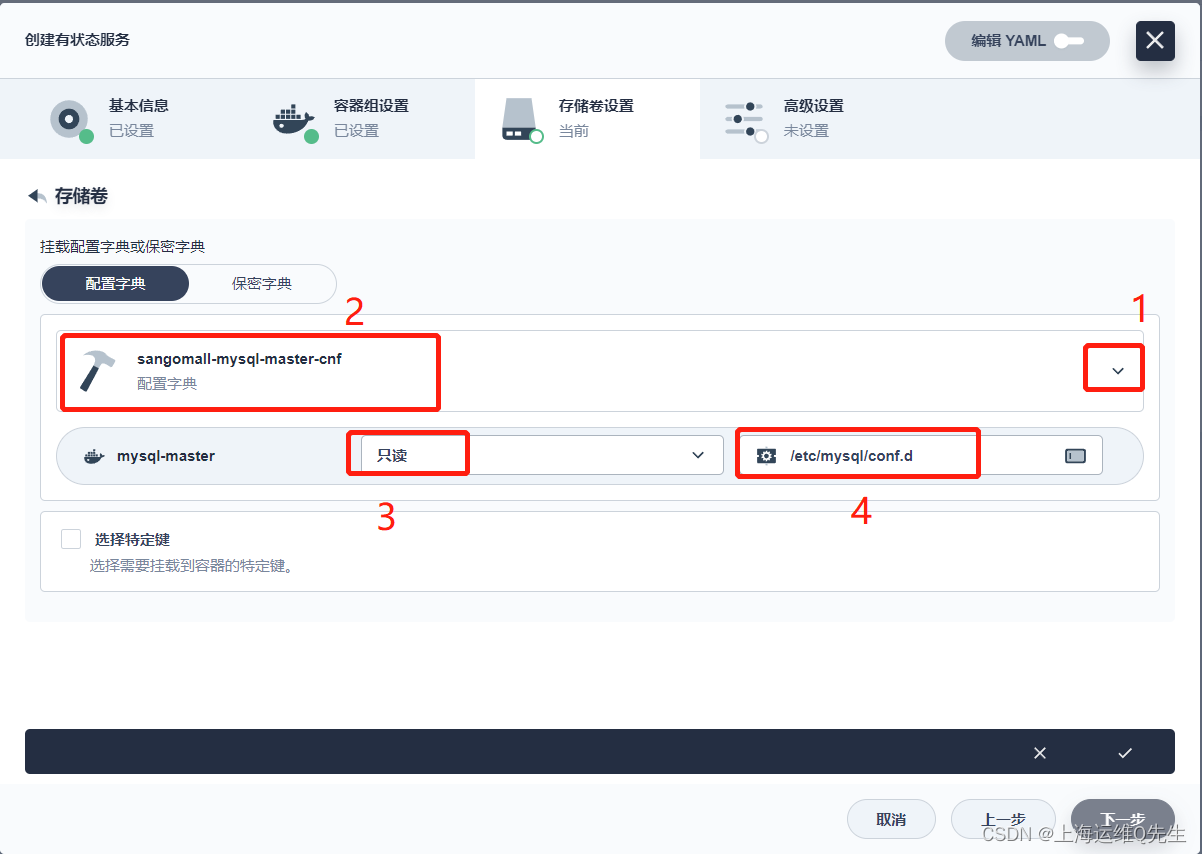

[Mount ConfigMap or Secret]

Select the Secret sangomall-mysql-master-cnf created earlier and mount it at /etc/mysql/conf.d.

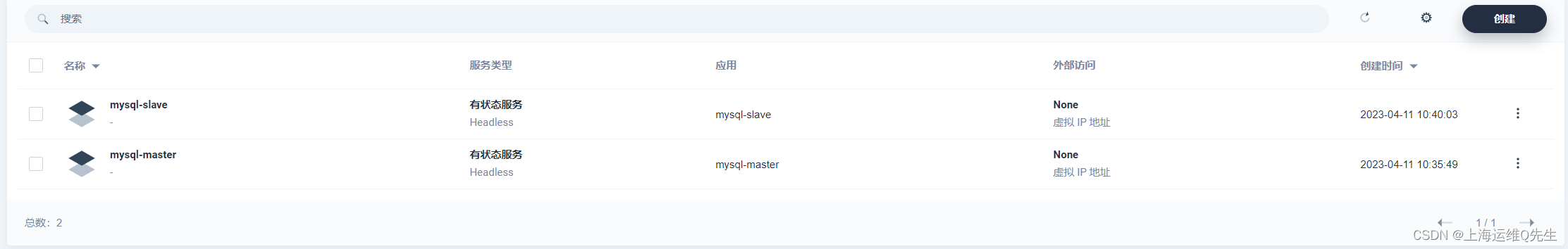

[Next],[Create], and Mysql-Master is created.

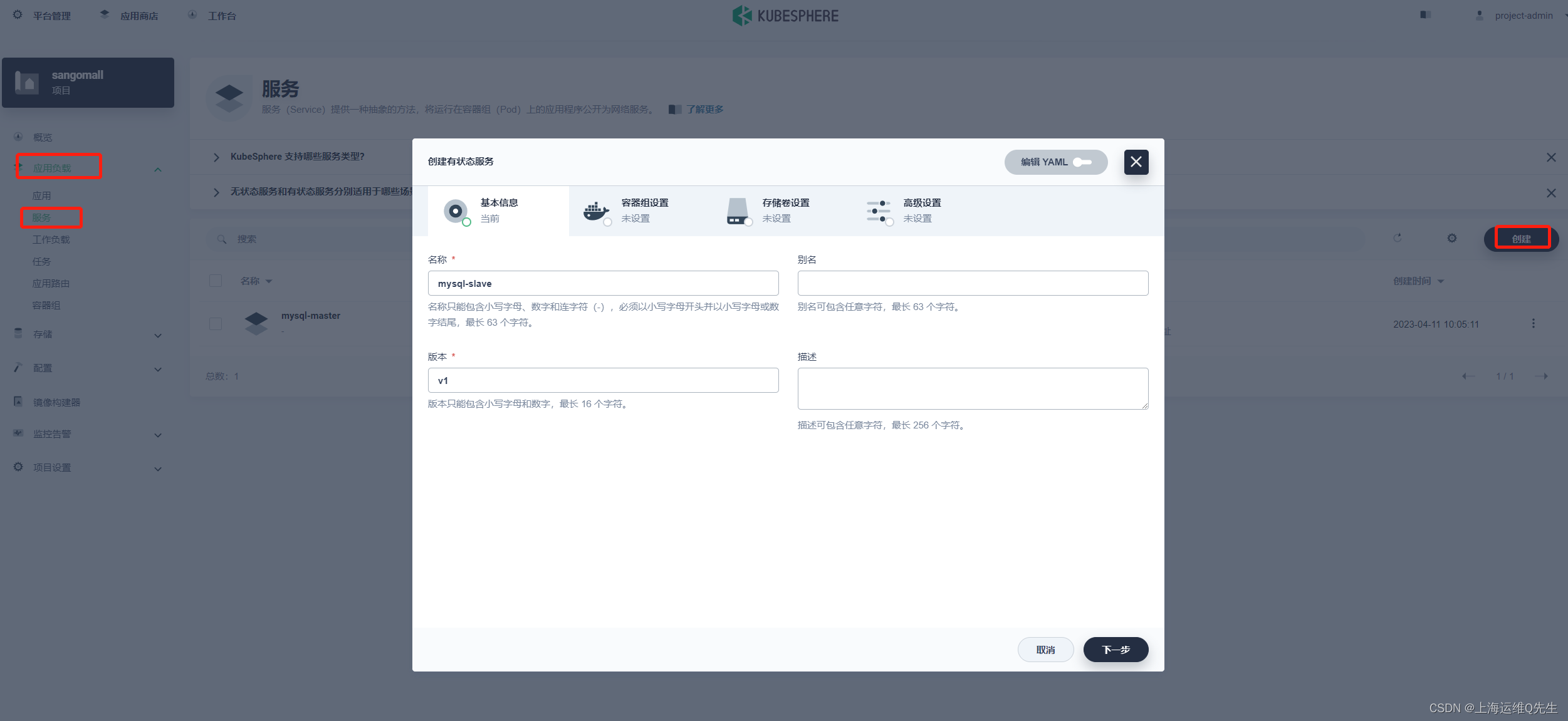

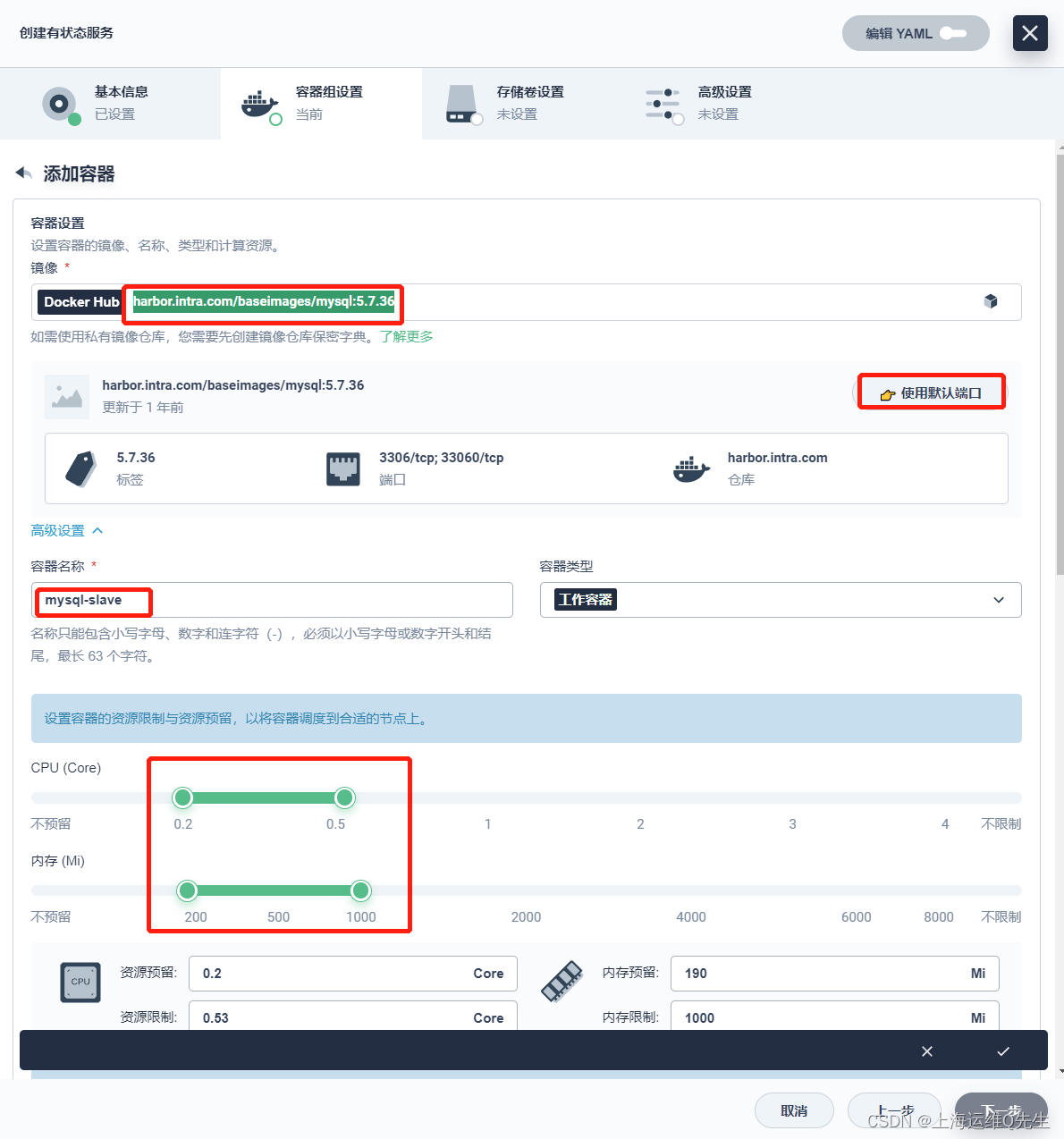

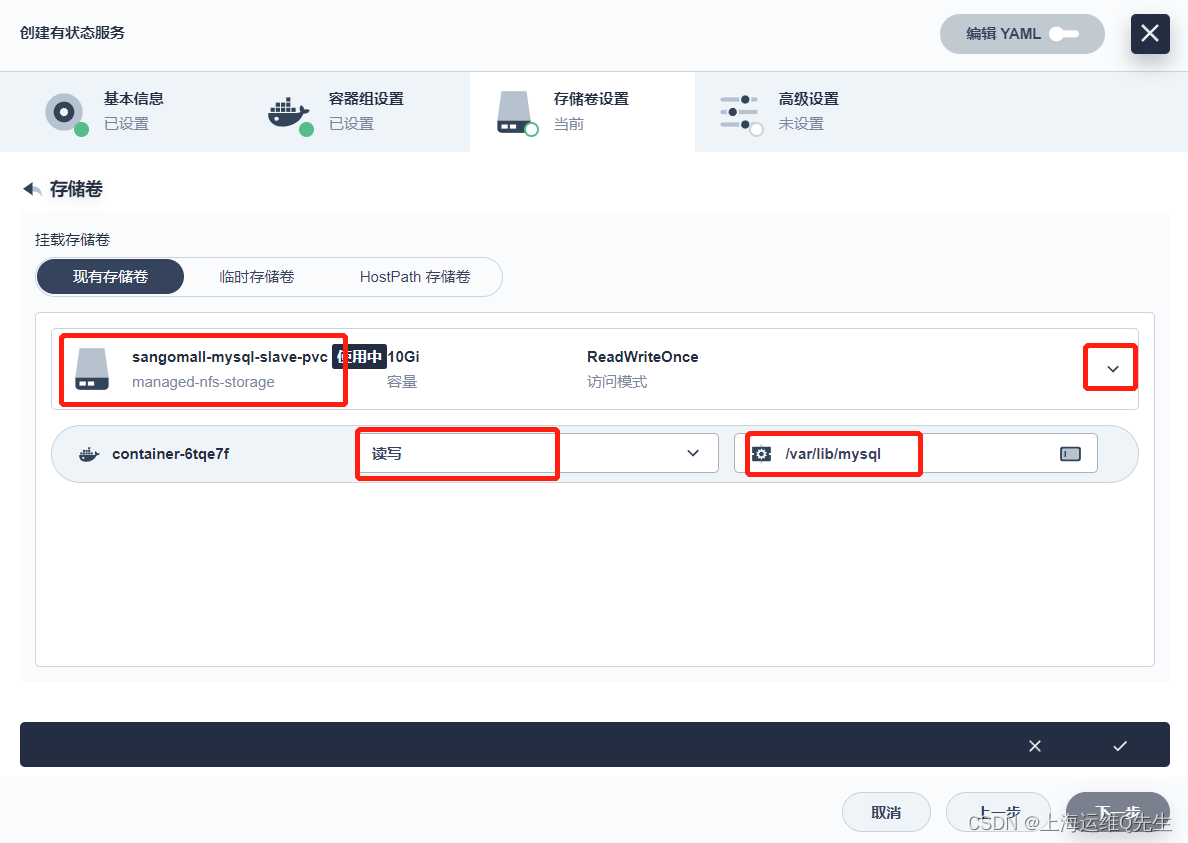

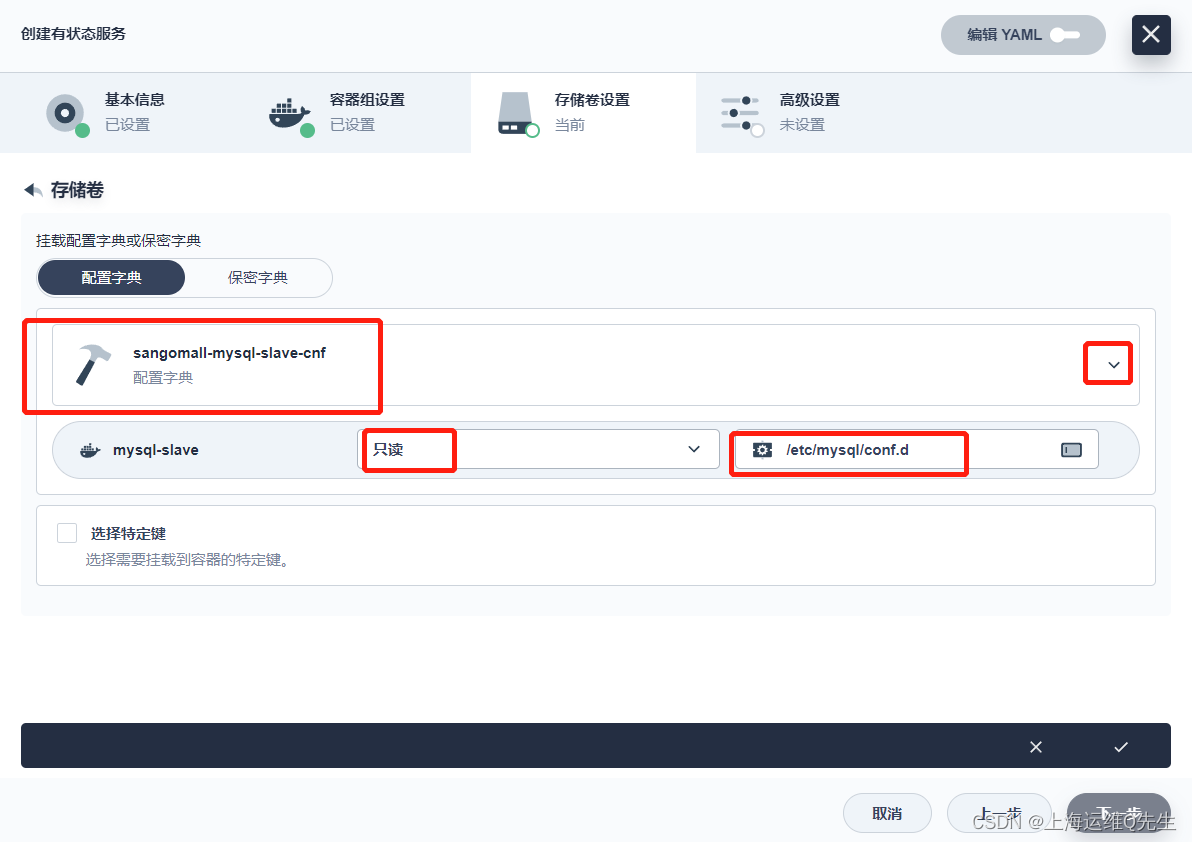

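1.4.2 Deploy the Slave

Create a [Stateful Service] named mysql-slave in the same way as the master:

Image: harbor.intra.com/baseimages/mysql:5.7.36

Mount sangomall-mysql-slave-pvc at /var/lib/mysql

Mount the Secret sangomall-mysql-slave-cnf at /etc/mysql/conf.d

[Next],[Create], and Mysql-Slave is created.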

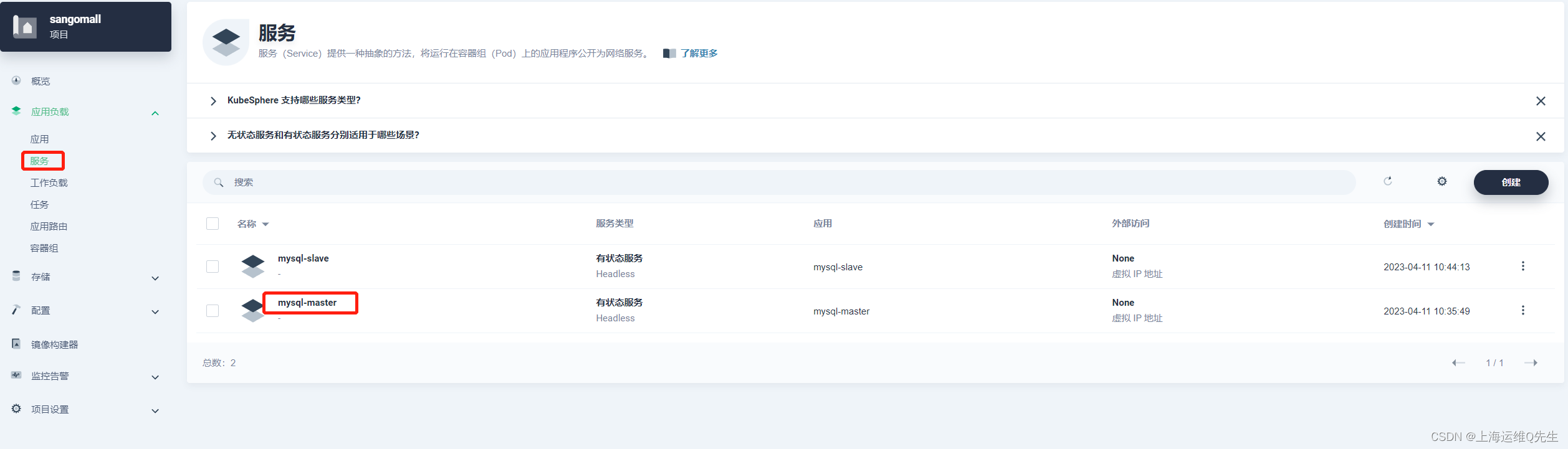

Confirm that both Pods were created correctly:

root@ks-master:~# kubectl get pods -A |grep mysql

sangomall mysql-master-v1-0 1/1 Running 0 12m

sangomall mysql-slave-v1-0 1/1 Running 0 4m11s

1.5 Configure Master-Slave Replication

1.5.1 Grant Replication Privileges on the Master

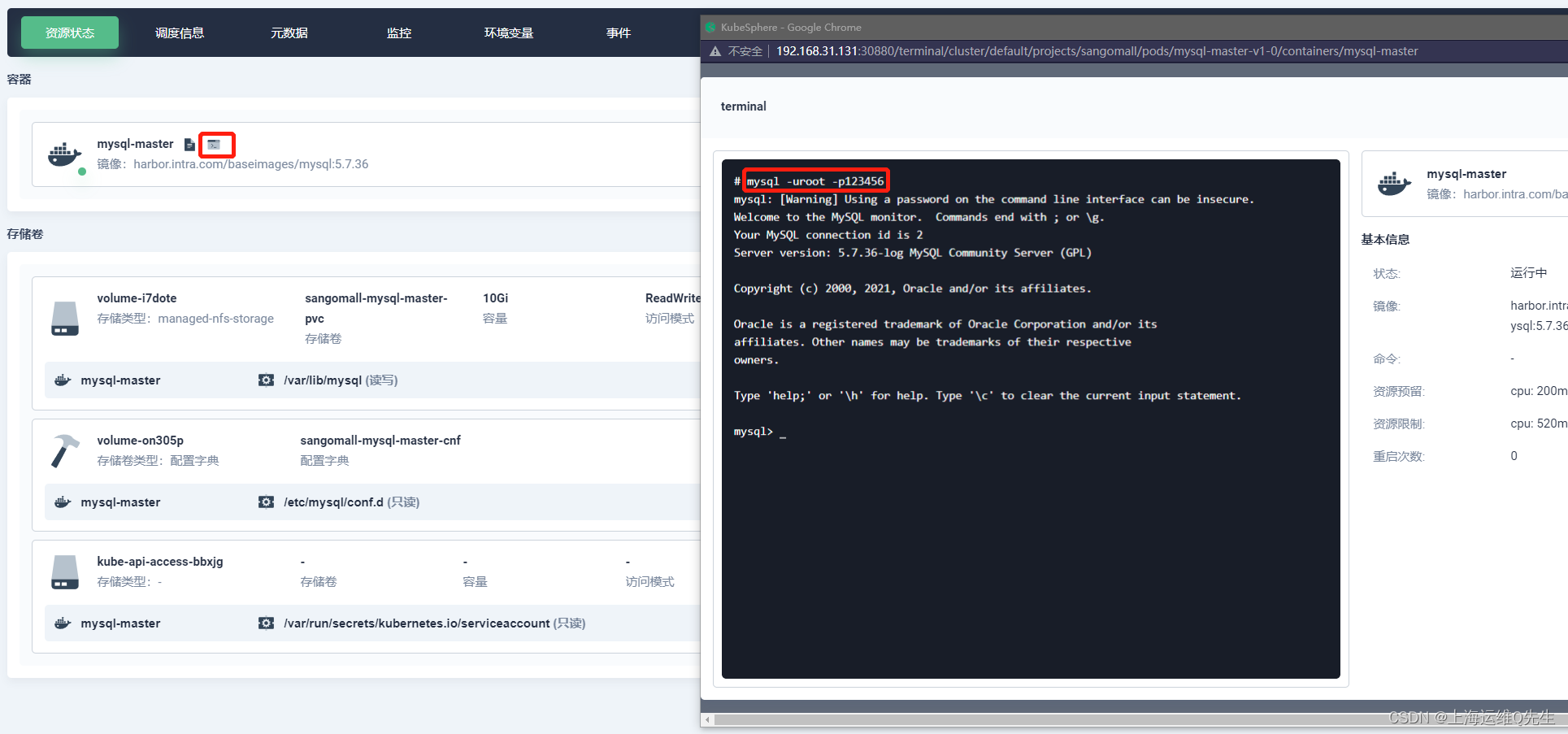

Open the master Pod and click [Terminal].

mysql -uroot -p123456

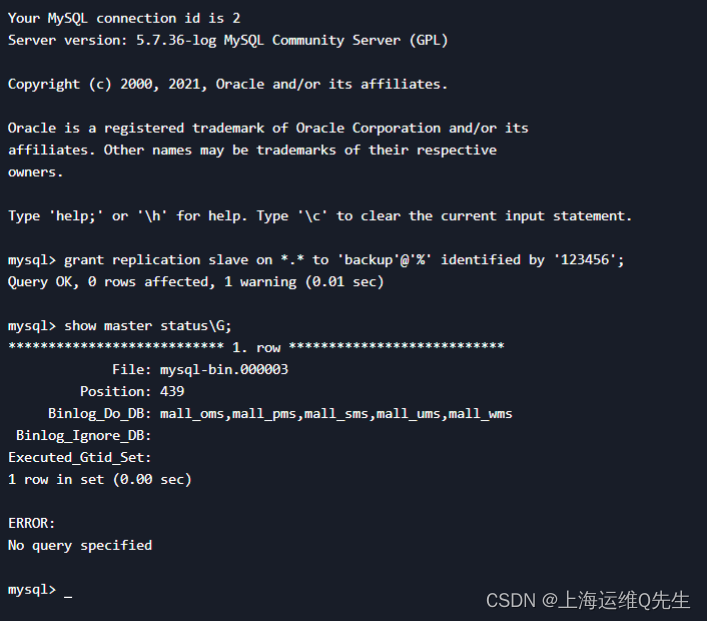

Create a user for replication:

grant replication slave on *.* to 'backup'@'%' identified by '123456';

Check the master status:

show master status\G

Note down the File and Position values, because the slave needs them:

File: mysql-bin.000003

Position: 439

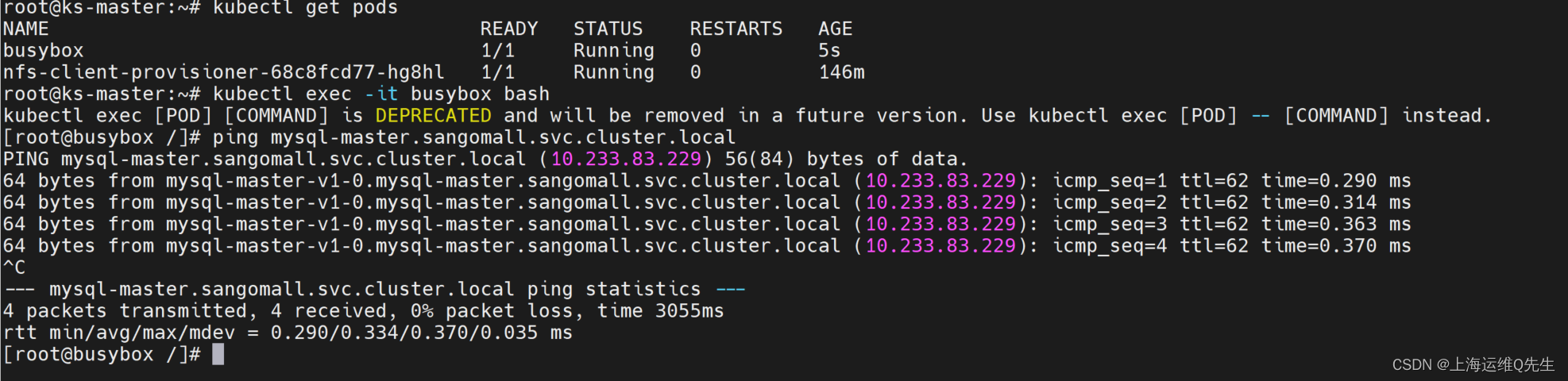

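Make sure mysql-master.sangomall.svc.cluster.local resolves from inside the containers.

1.5.2 Point the Slave at the Master

Open the slave Pod's terminal:

mysql -uroot -p123456

Configure it as a slave of the master, using the File and Position noted above: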

change master to

master_host='mysql-master.sangomall.svc.cluster.local.',

master_user='backup',

master_password='123456',

master_log_file='mysql-bin.000003',

master_log_pos=439,

master_port=3306;
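In the change master to statement above, File and Position were copied by hand from show master status. The minimal sketch below extracts them from the vertical (\G) output and renders the same statement. The helper names are hypothetical, and the sample output is illustrative, using the File/Position values recorded above. Values are not escaped, so treat this as a convenience for this lab setup only.

```python
import re

# Illustrative `show master status\G` output with the File/Position recorded above.
MASTER_STATUS = """\
*************************** 1. row ***************************
             File: mysql-bin.000003
         Position: 439
"""


def parse_vertical(output: str) -> dict[str, str]:
    """Turn MySQL vertical (\\G) output into a field -> value dict."""
    fields = {}
    for line in output.splitlines():
        match = re.match(r"^\s*(\w+):\s?(.*)$", line)
        if match:
            fields[match.group(1)] = match.group(2).strip()
    return fields


def change_master_sql(status: dict[str, str], host: str, user: str,
                      password: str, port: int = 3306) -> str:
    """Render CHANGE MASTER TO from File/Position (values are not escaped: sketch only)."""
    return (
        "change master to "
        f"master_host='{host}', master_user='{user}', master_password='{password}', "
        f"master_log_file='{status['File']}', master_log_pos={int(status['Position'])}, "
        f"master_port={port};"
    )


print(change_master_sql(parse_vertical(MASTER_STATUS),
                        "mysql-master.sangomall.svc.cluster.local.", "backup", "123456"))
```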

Start replication on the slave:

start slave;

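Check the replication status. Slave_IO_Running and Slave_SQL_Running should both be Yes, and Last_IO_Error and Last_SQL_Error should be empty: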

mysql> show slave status \G

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: mysql-master.sangomall.svc.cluster.local.

Master_User: backup

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-bin.000004

Read_Master_Log_Pos: 439

Relay_Log_File: mysql-slave-v1-0-relay-bin.000002

Relay_Log_Pos: 320

Relay_Master_Log_File: mysql-bin.000004

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB: mysql,sys,information_schema,performance_schema

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 439

Relay_Log_Space: 538

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 1

Master_UUID: d1e6fcff-d844-11ed-9270-0acd35891bf8

Master_Info_File: /var/lib/mysql/master.info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State: Slave has read all relay log; waiting for more updates

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp:

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set:

Executed_Gtid_Set:

Auto_Position: 0

Replicate_Rewrite_DB:

Channel_Name:

Master_TLS_Version:

1 row in set (0.00 sec)
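When reading this output by eye, the fields that matter are Slave_IO_Running, Slave_SQL_Running, the Last_*_Error fields and Seconds_Behind_Master. The sketch below performs the same check in Python, for example for a periodic job. The 30-second lag threshold and the helper names are assumptions, not values taken from this setup.

```python
import re


def parse_vertical(output: str) -> dict[str, str]:
    """Turn MySQL vertical (\\G) output into a field -> value dict."""
    fields = {}
    for line in output.splitlines():
        match = re.match(r"^\s*(\w+):\s?(.*)$", line)
        if match:
            fields[match.group(1)] = match.group(2).strip()
    return fields


def replication_healthy(output: str, max_lag: int = 30) -> tuple[bool, str]:
    """Check the fields that matter in `show slave status\\G` output."""
    status = parse_vertical(output)
    if status.get("Slave_IO_Running") != "Yes":
        return False, "IO thread: " + (status.get("Last_IO_Error") or "not running")
    if status.get("Slave_SQL_Running") != "Yes":
        return False, "SQL thread: " + (status.get("Last_SQL_Error") or "not running")
    lag = status.get("Seconds_Behind_Master", "")
    # NULL (or empty) means the slave cannot compute a lag, e.g. a thread is stopped.
    if lag in ("", "NULL") or int(lag) > max_lag:
        return False, f"lag: {lag or 'unknown'}"
    return True, "ok"
```

Fed the output above (both threads Yes, Seconds_Behind_Master 0), it returns (True, "ok").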

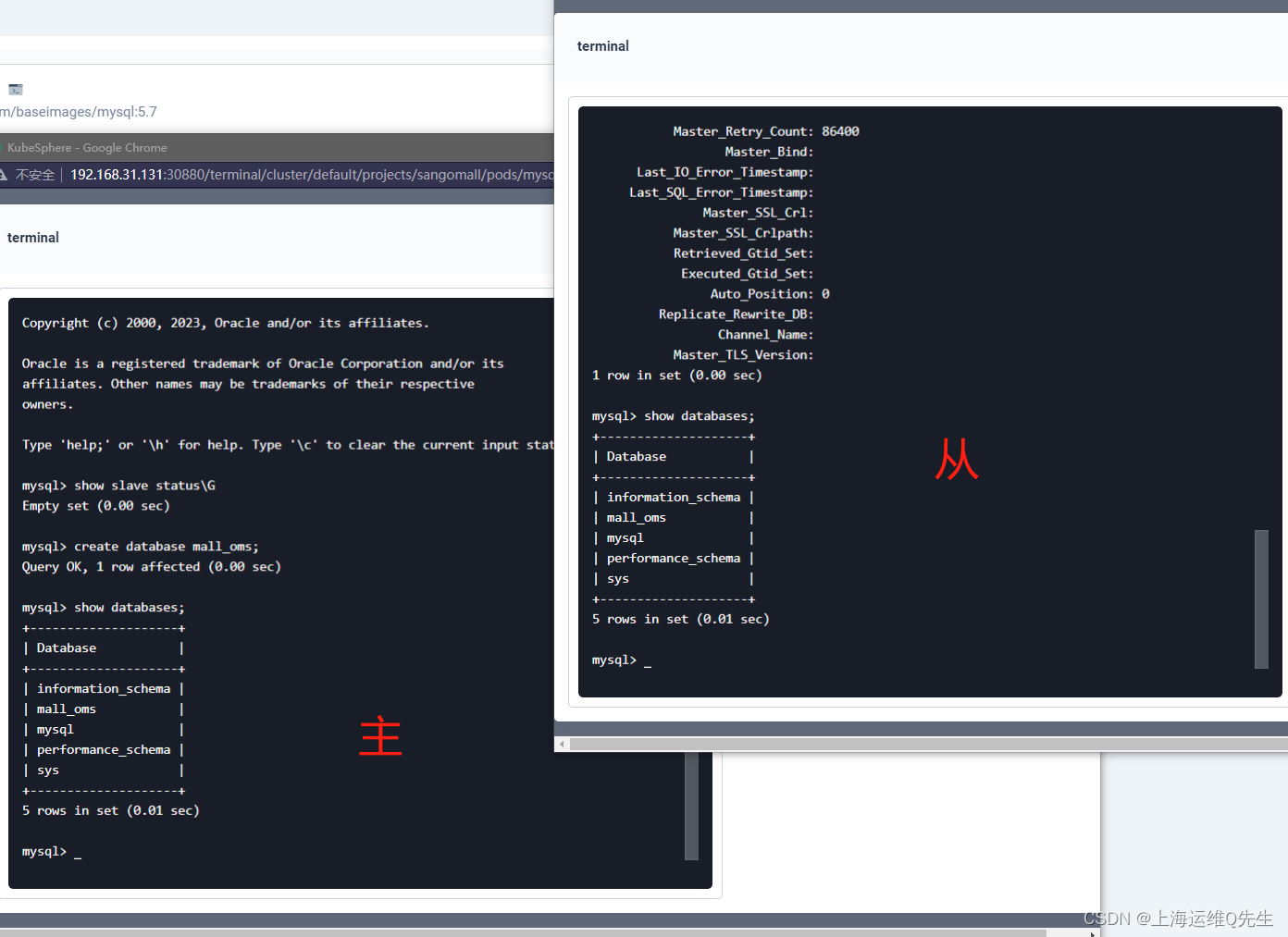

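1.5.3 Verify Replication

When the master receives new data, the slave replicates it automatically. For example, create a database on the master and check that it shows up on the slave: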

create database mall_oms;

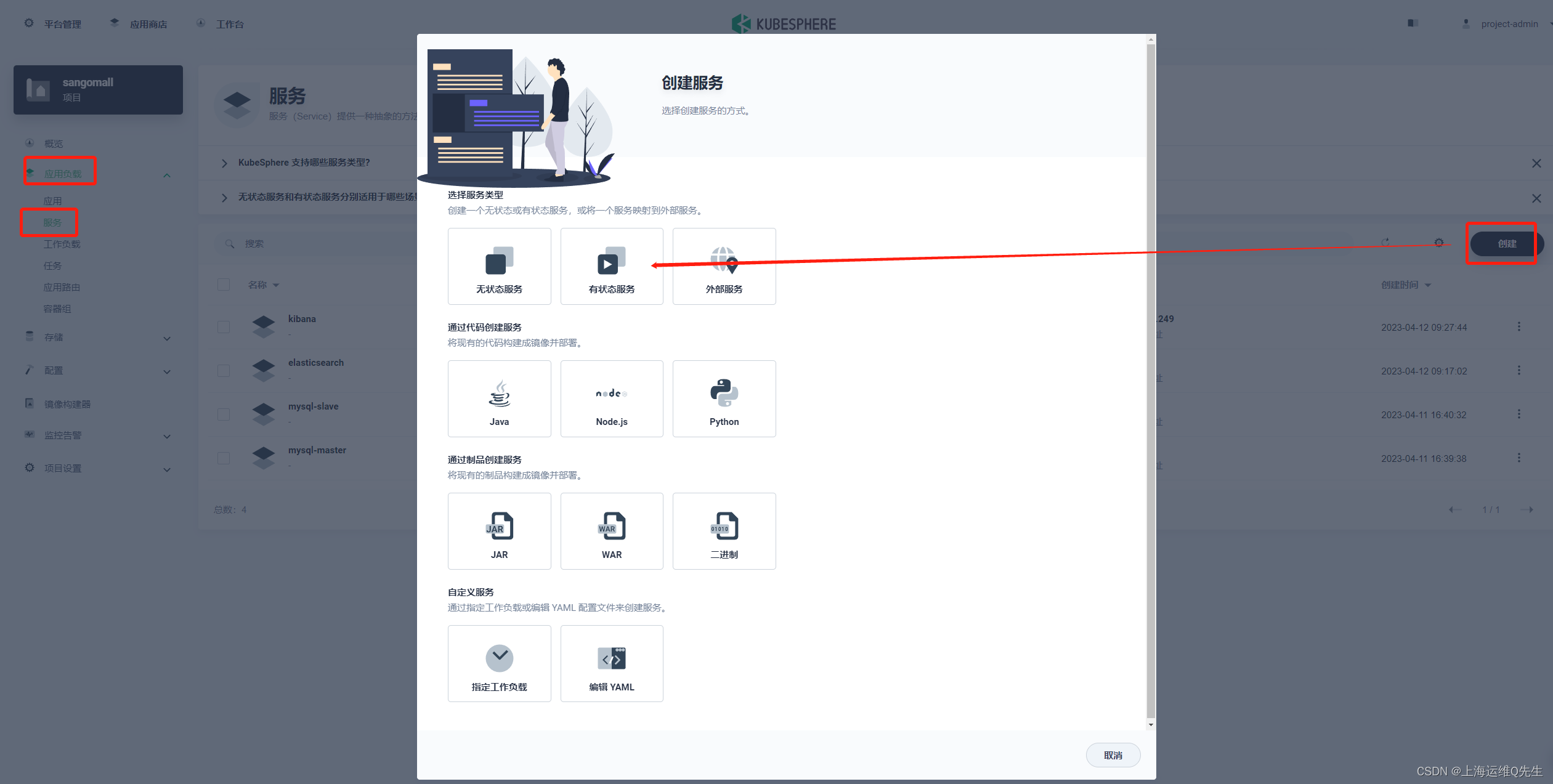

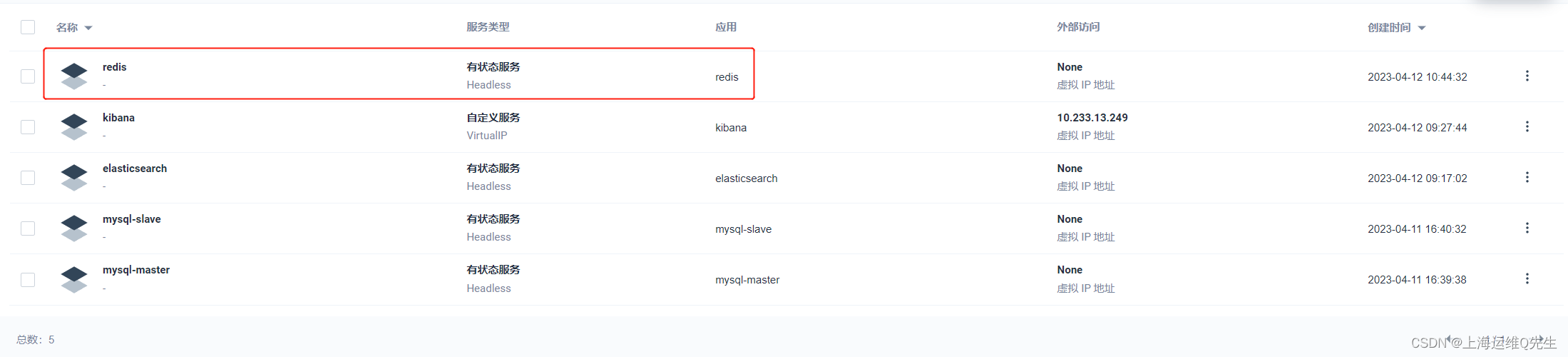

2. Redis

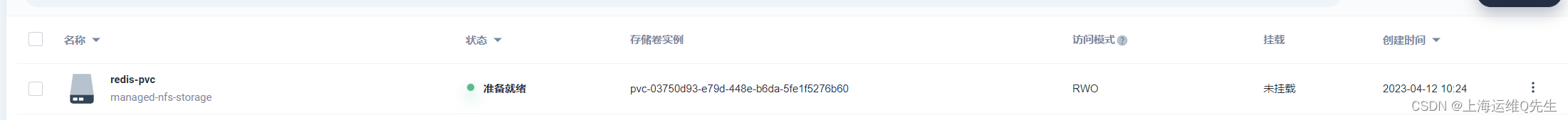

2.1 Create the PVC

redis-pvc

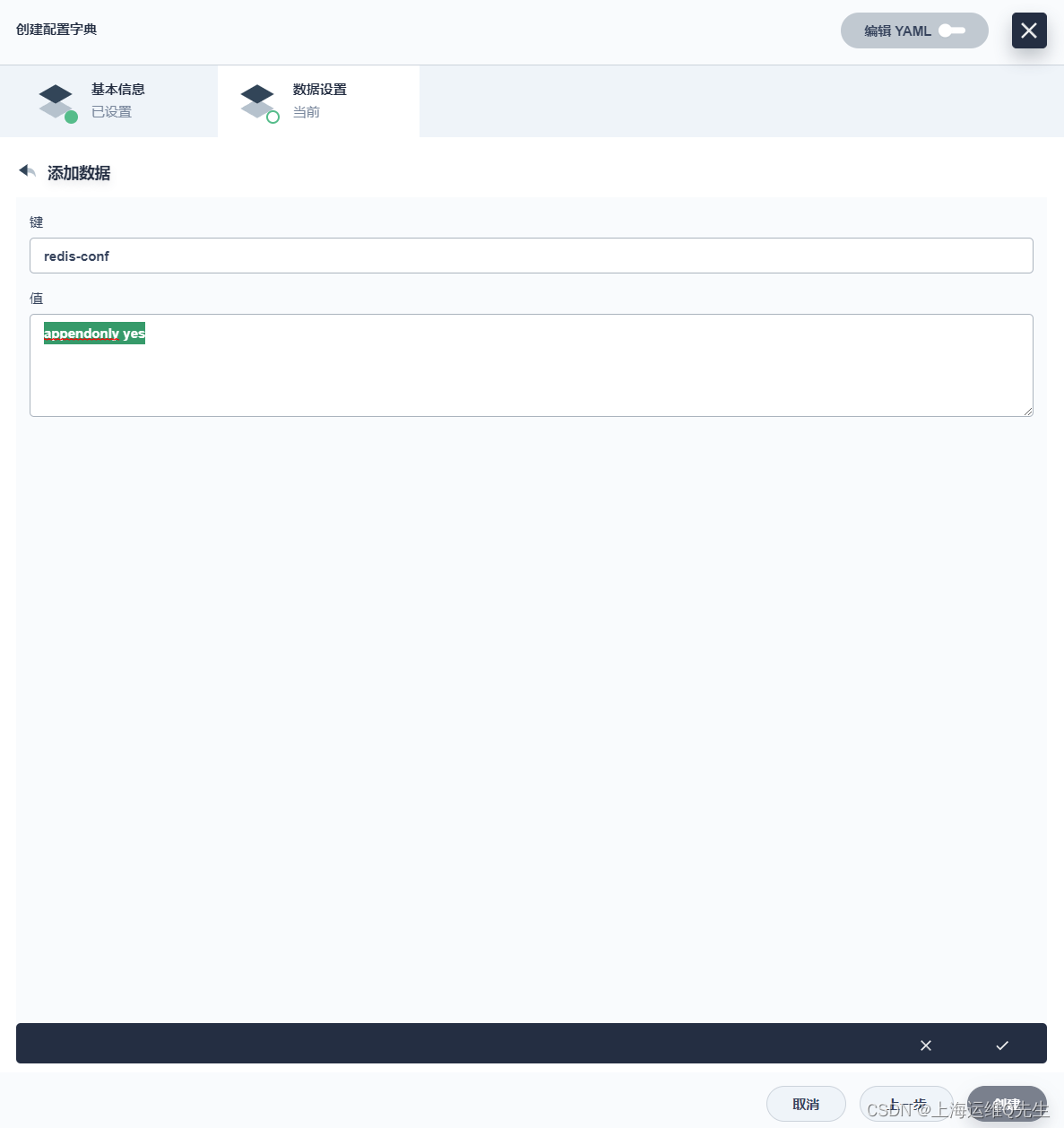

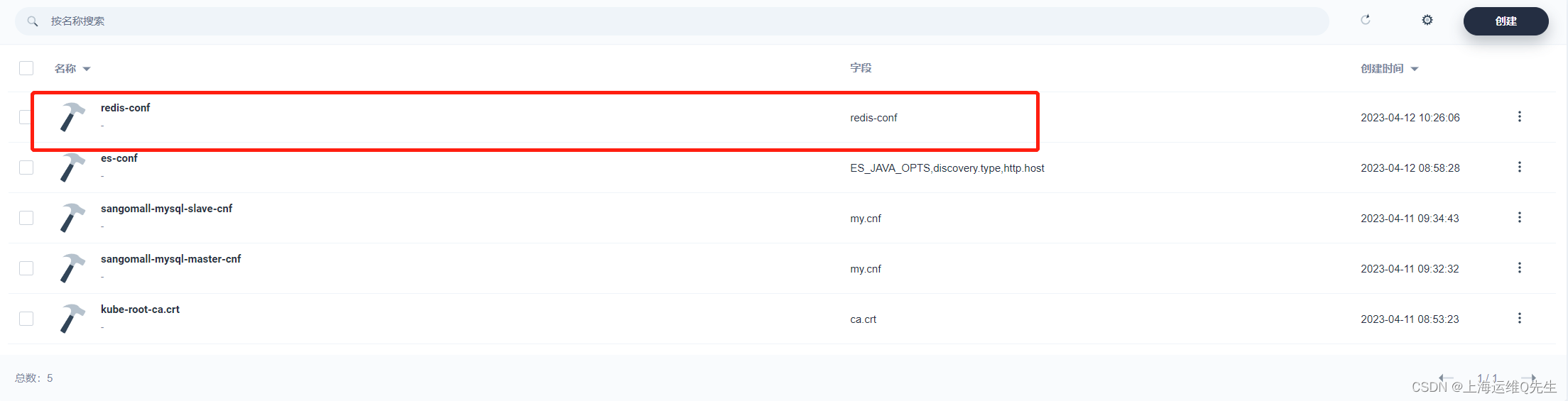

2.2 Configuration File

Create a Secret named redis-conf with the following content:

appendonly yes

[Next],[Create]

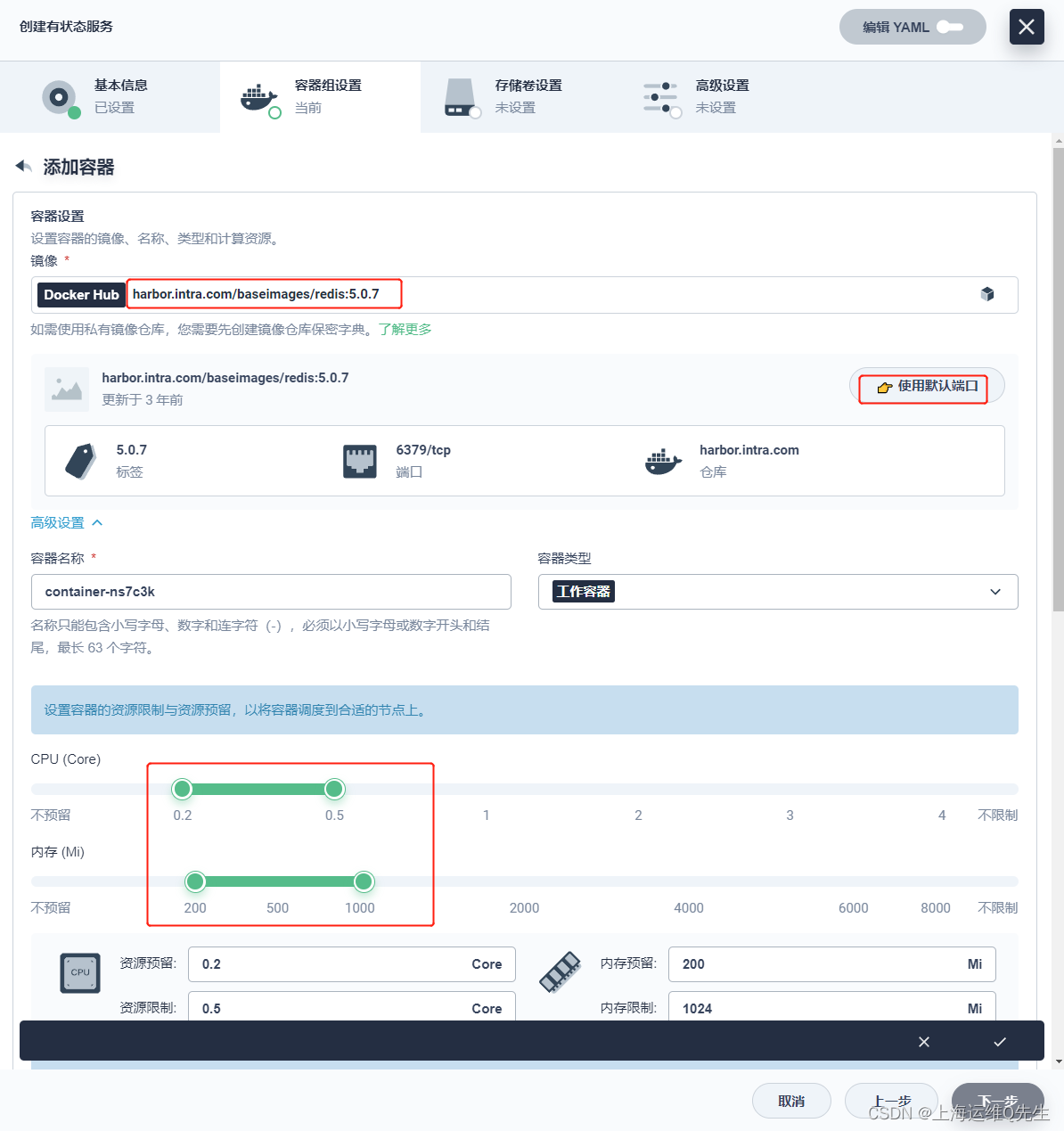

2.3 Deploy Redis

Create a [Stateful Service] named redis, then [Next].

Image:

harbor.intra.com/baseimages/redis:5.0.7

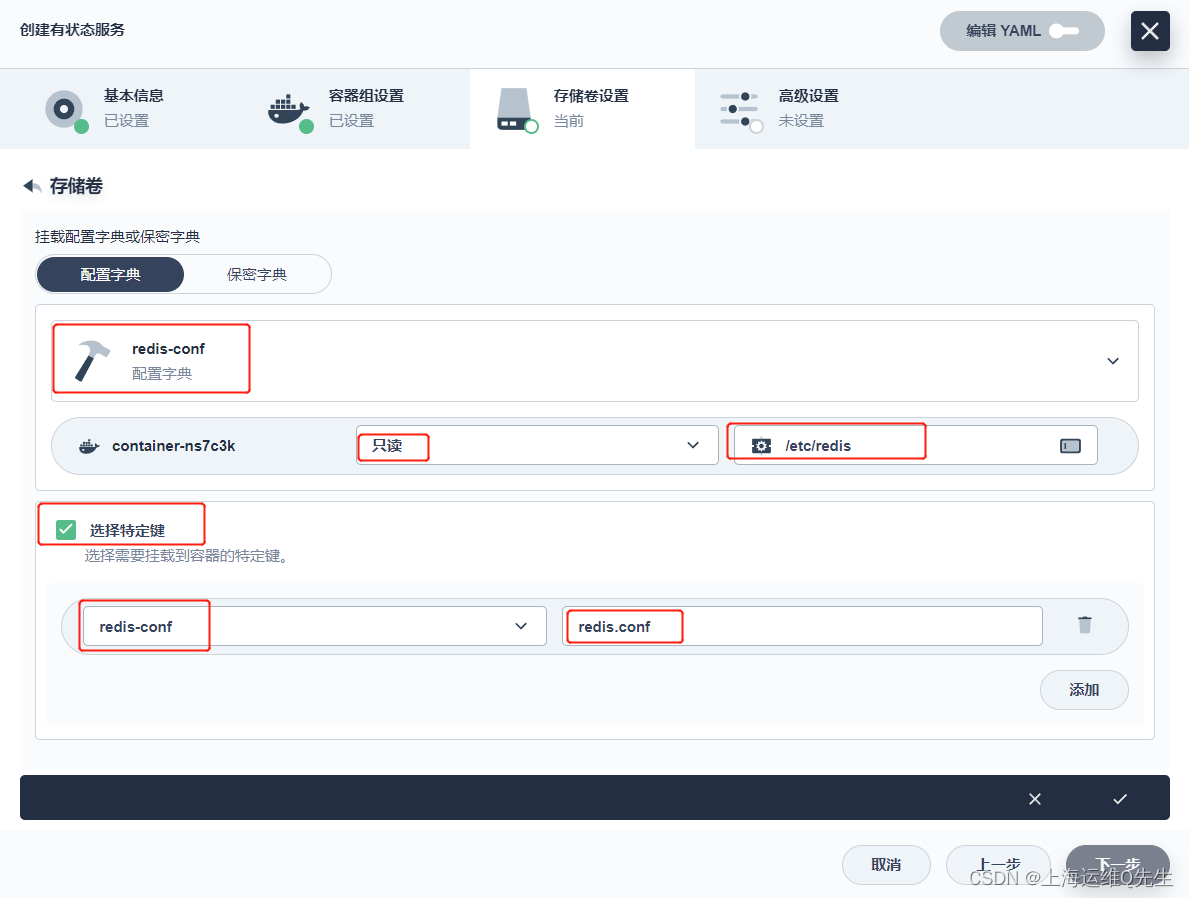

Start command: redis-server

Argument: /etc/redis/redis.conf

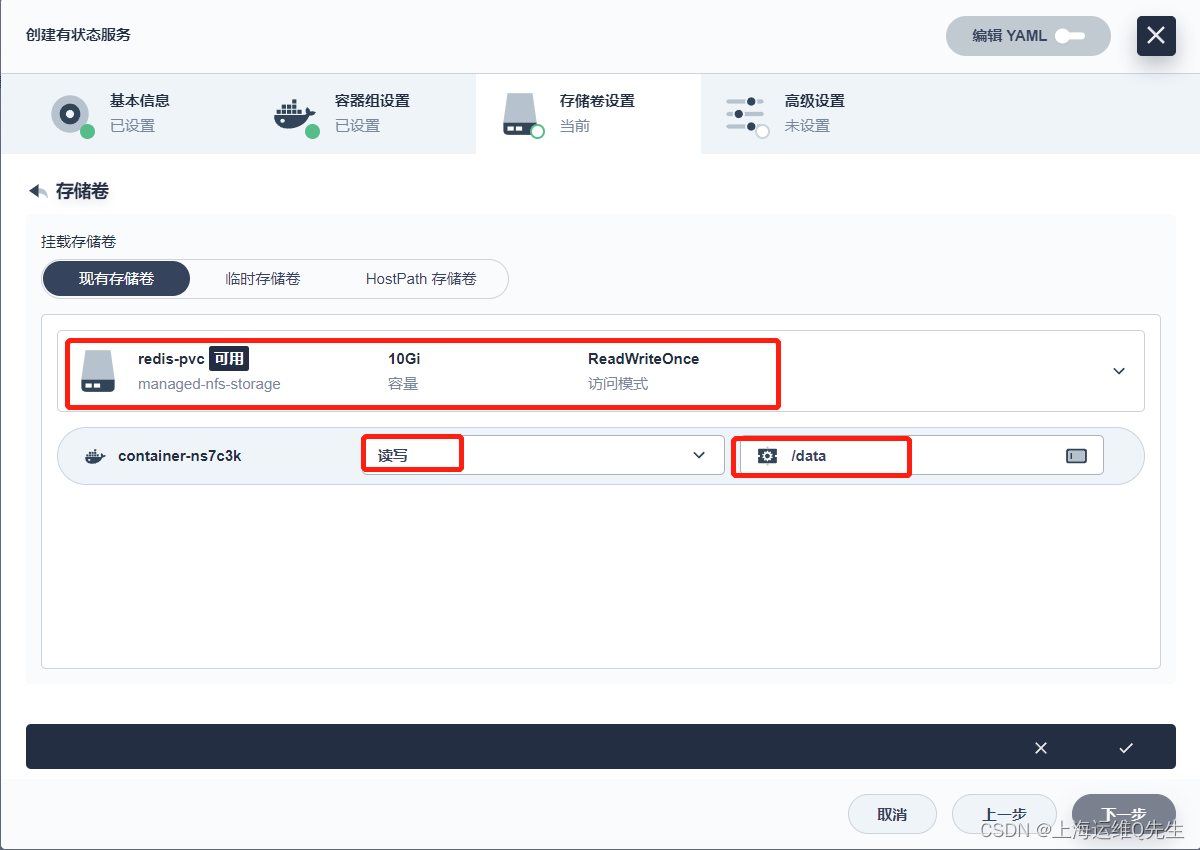

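Mount redis-pvc as the storage volume, then click [Mount Secret] and mount redis-conf at /etc/redis, using the file name redis.conf.

[Next],[Create]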

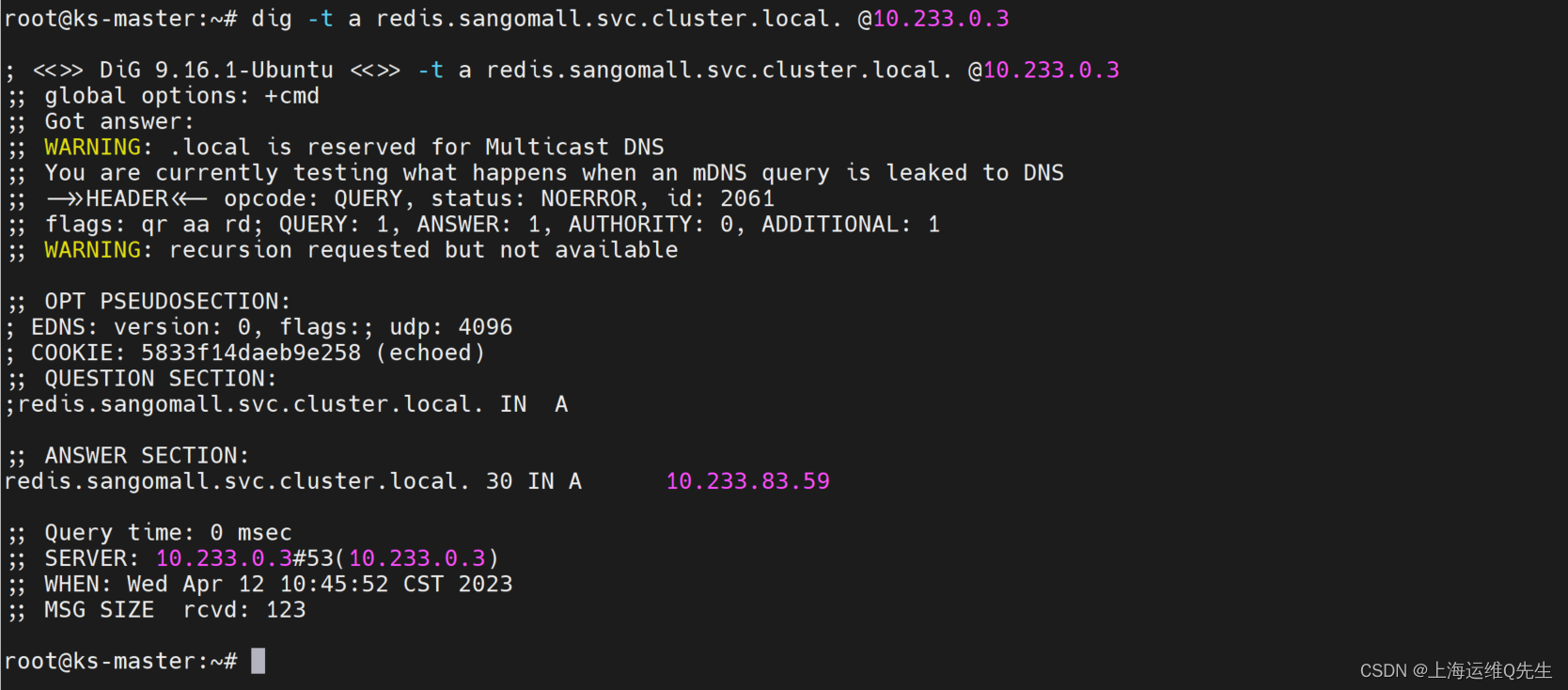

Test DNS resolution:

dig -t a redis.sangomall.svc.cluster.local. @10.233.0.3

Verify the service:

root@ks-master:~# kubectl exec -it -n sangomall redis-v1-0 -- bash

root@redis-v1-0:/data# redis-cli

127.0.0.1:6379> set key1 v1

OK

127.0.0.1:6379> get key1

"v1"

127.0.0.1:6379> exit

root@redis-v1-0:/data# exit

exit
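redis-cli talks to the server over the RESP protocol, so the same set/get check can be scripted without it. The minimal sketch below uses only the standard library. encode_command and send_command are hypothetical helper names. The sketch assumes no password is configured, since the redis-conf above only sets appendonly yes. It reads a single reply chunk, which is enough for short replies such as +OK.

```python
import socket


def encode_command(*args: str) -> bytes:
    """Encode a command as a RESP array of bulk strings, the format redis-cli sends."""
    parts = [b"*%d\r\n" % len(args)]
    for arg in args:
        data = arg.encode("utf-8")
        parts.append(b"$%d\r\n%s\r\n" % (len(data), data))
    return b"".join(parts)


def send_command(host: str, port: int, *args: str) -> bytes:
    """Send one command and return the raw reply (a single recv, fine for short replies)."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(encode_command(*args))
        return sock.recv(4096)
```

Run this from a Pod inside the cluster after the set above. Calling send_command("redis.sangomall.svc.cluster.local", 6379, "GET", "key1") should return b"$2\r\nv1\r\n", the RESP bulk-string encoding of v1.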

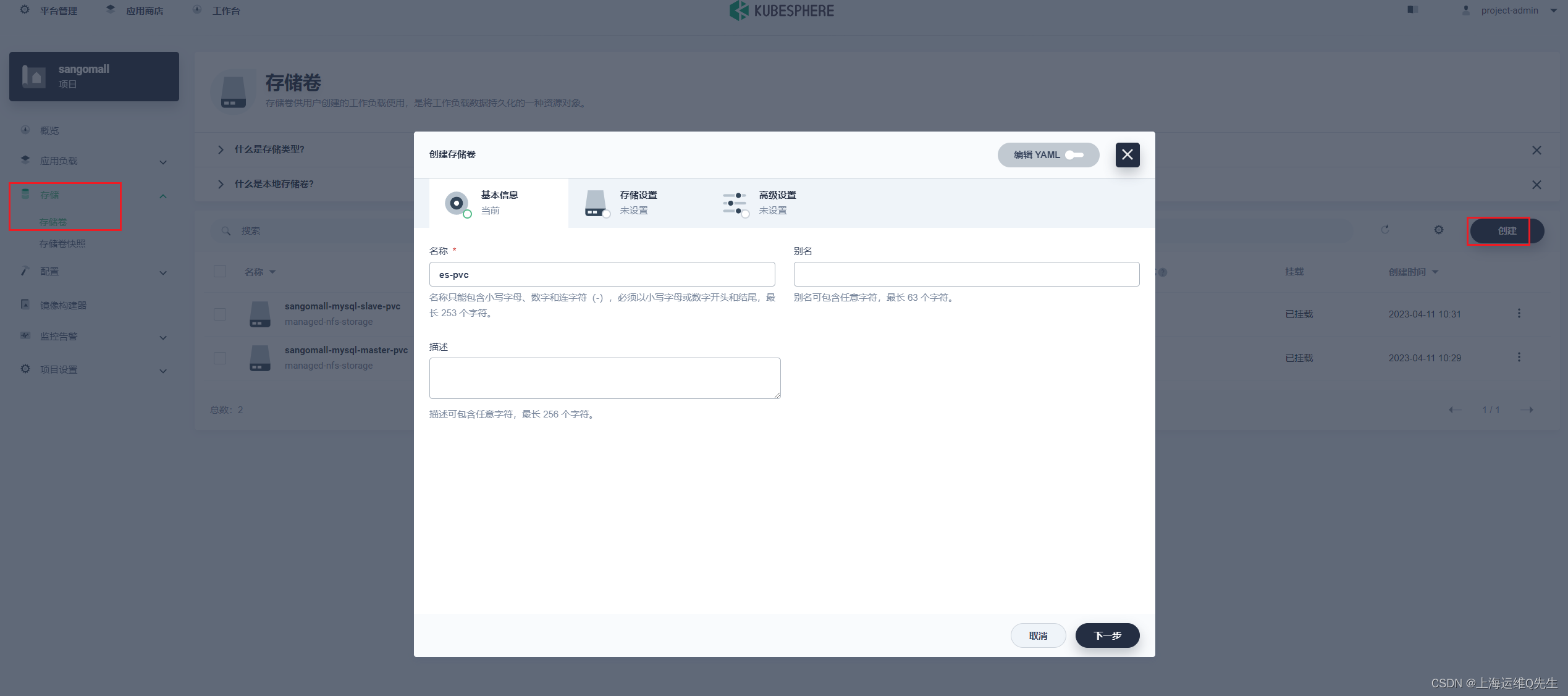

3. ELK

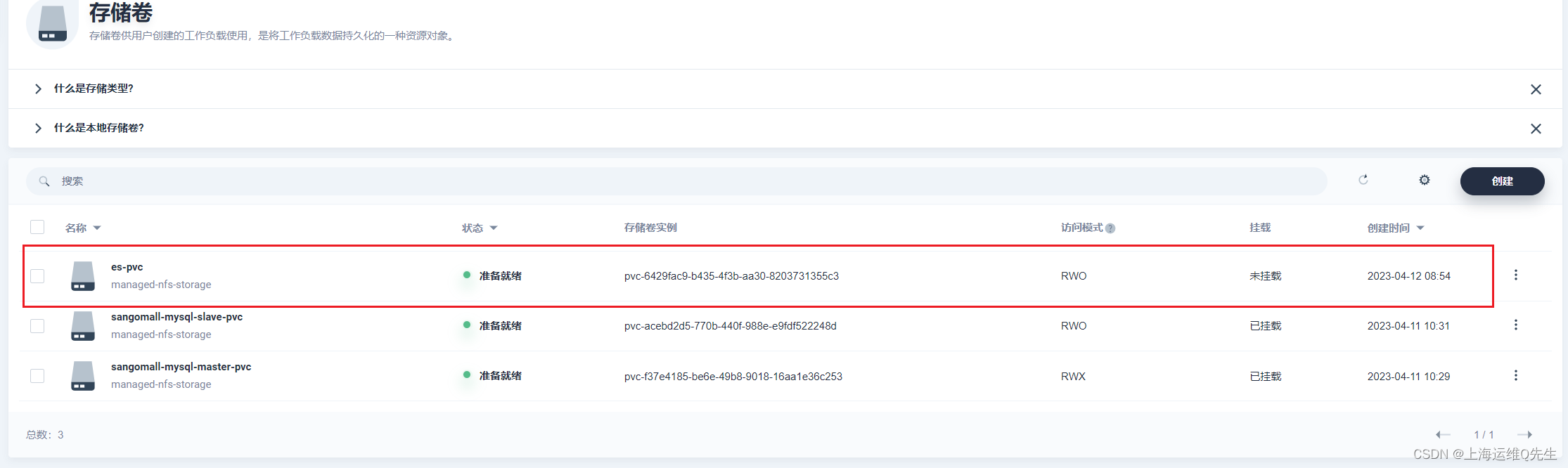

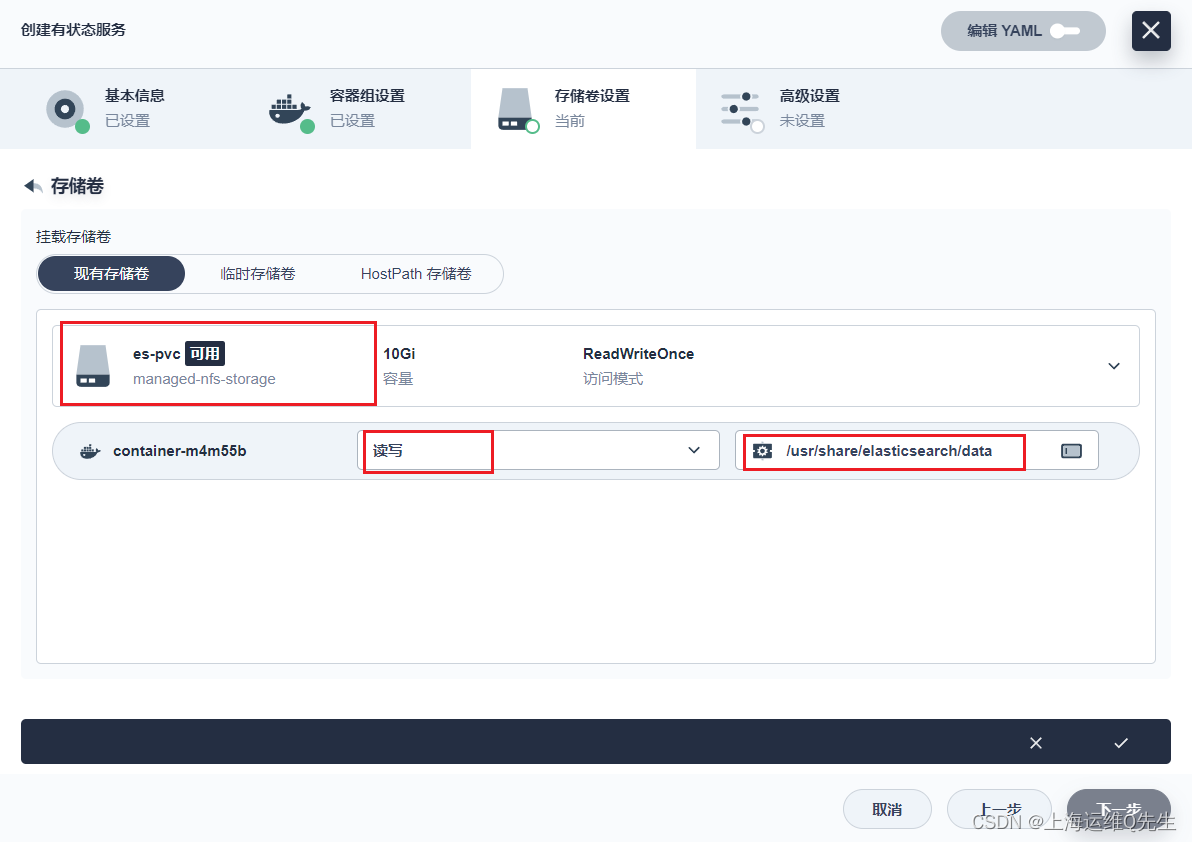

3.1 Create the Elasticsearch PVC

es-pvc

ReadWriteOnce is sufficient here.

[Next],[Create], and the PVC is created.

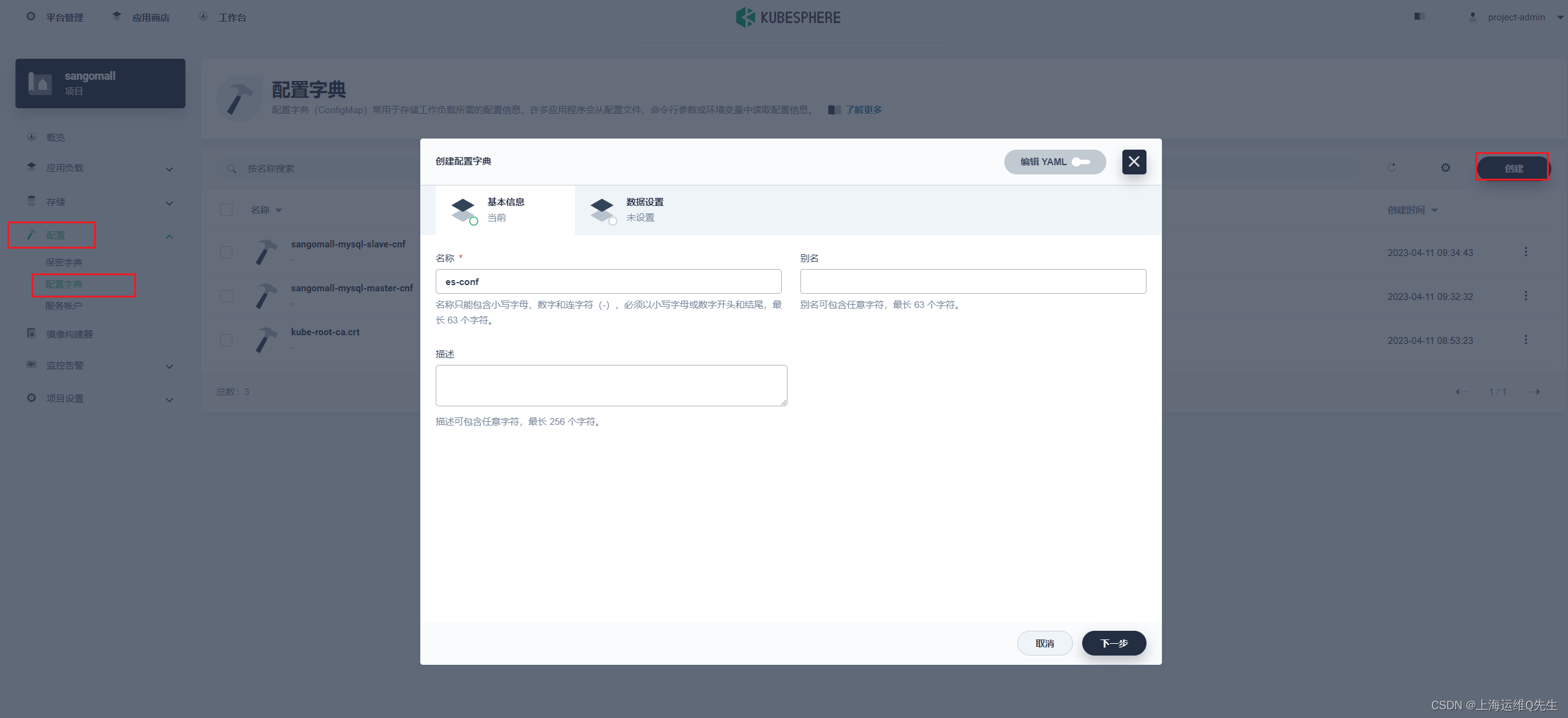

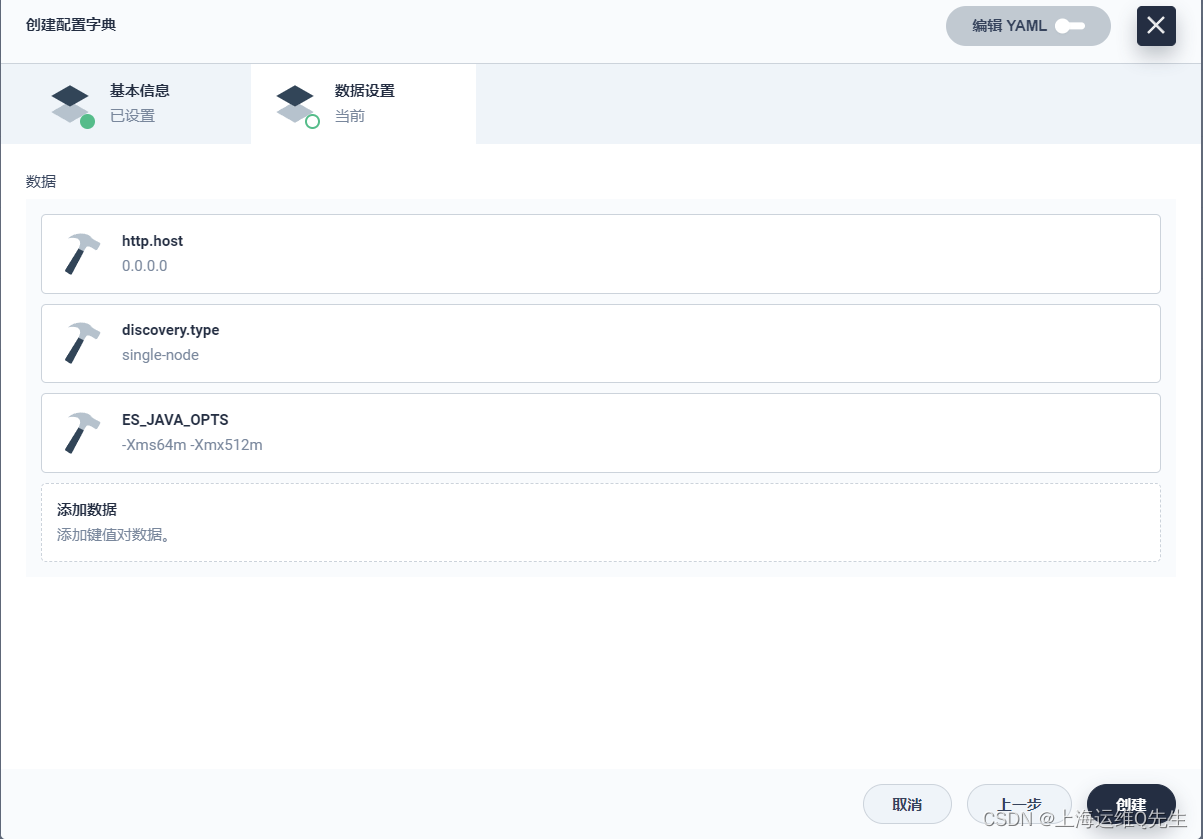

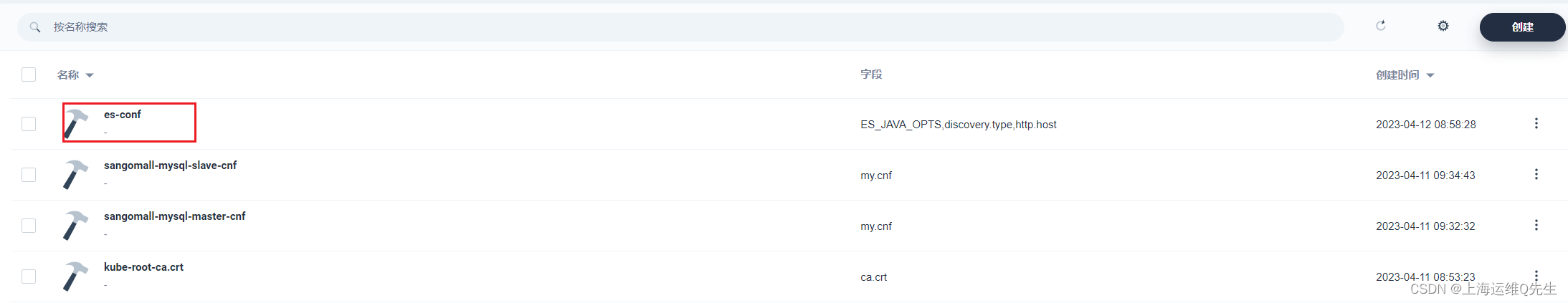

3.2 Create the Elasticsearch ConfigMap

es-conf

[Add Data]

http.host: 0.0.0.0

discovery.type: single-node

ES_JAVA_OPTS: -Xms64m -Xmx512m

[Create]

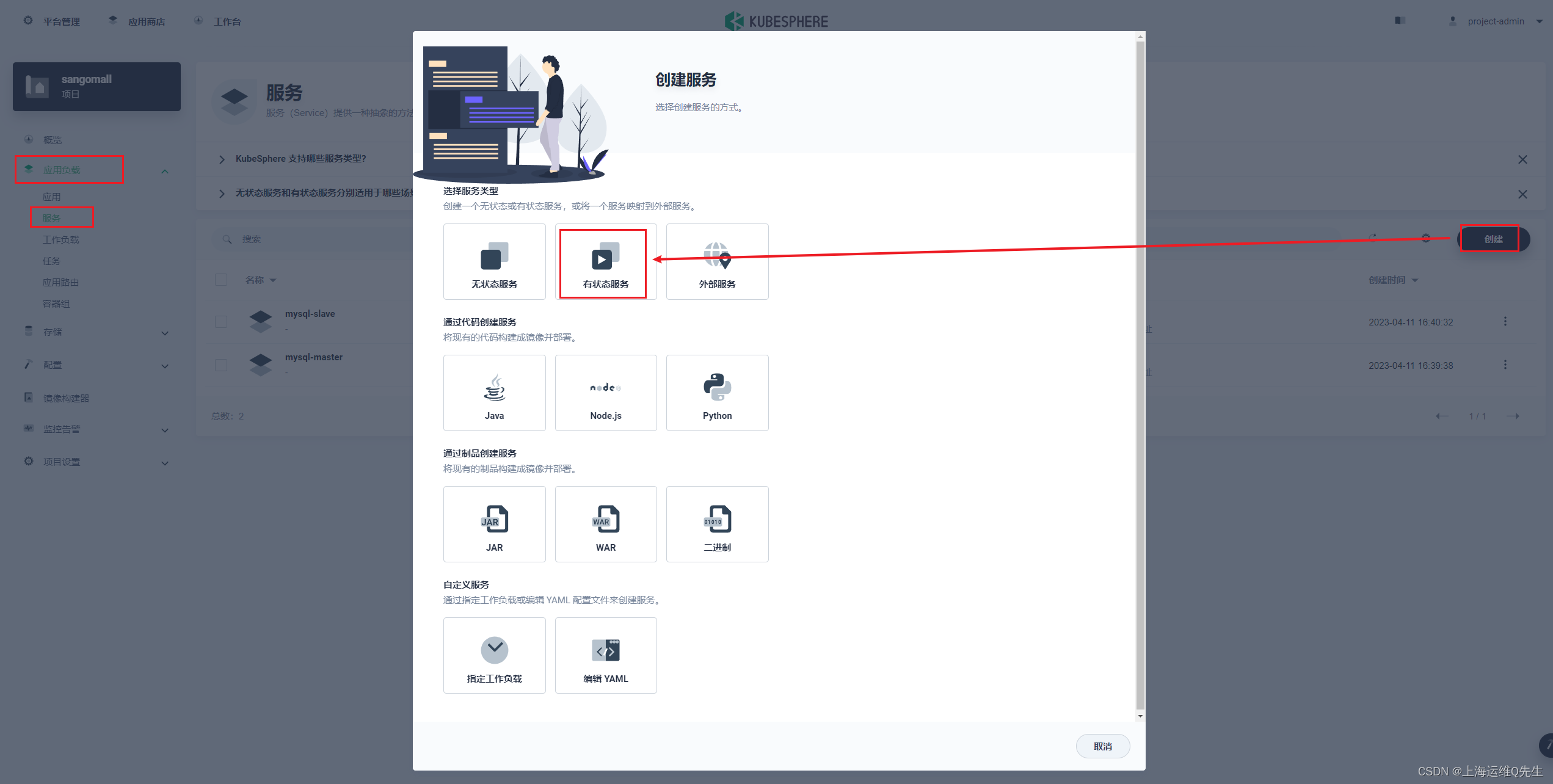

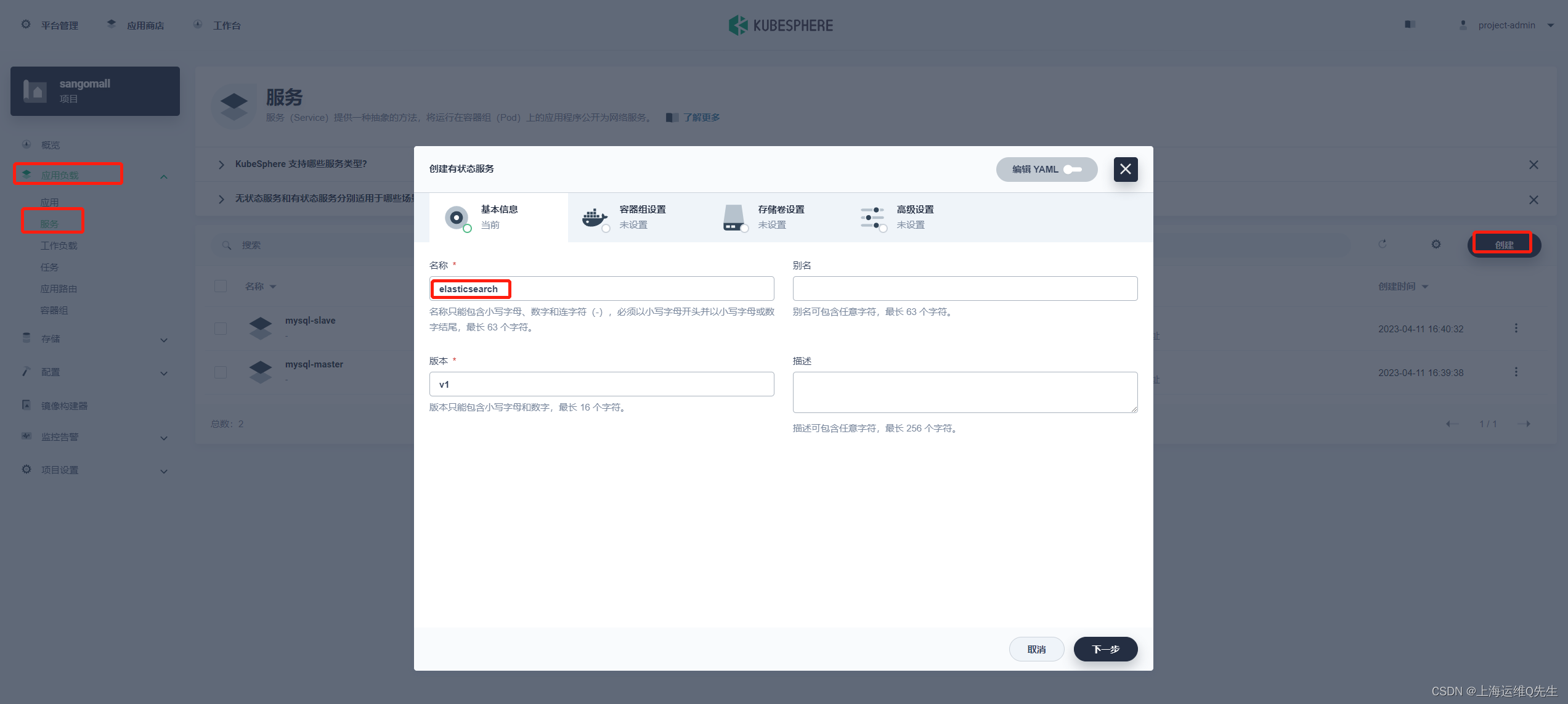

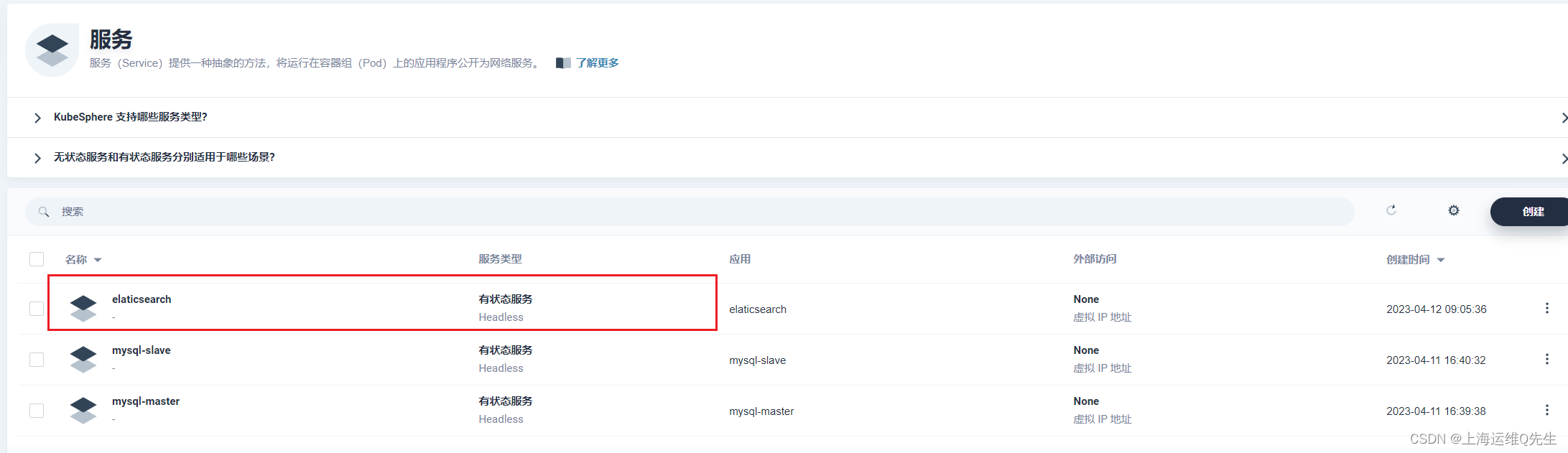

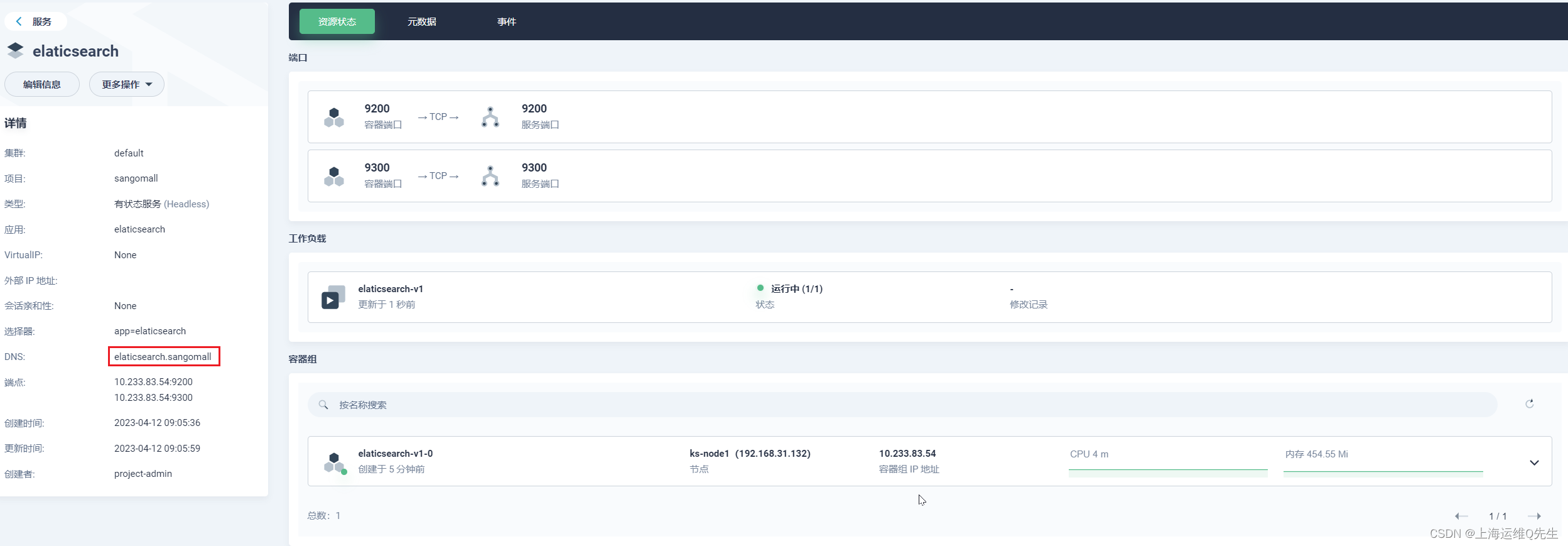

3.3 Create the Elasticsearch Service

Choose [Stateful Service] here.

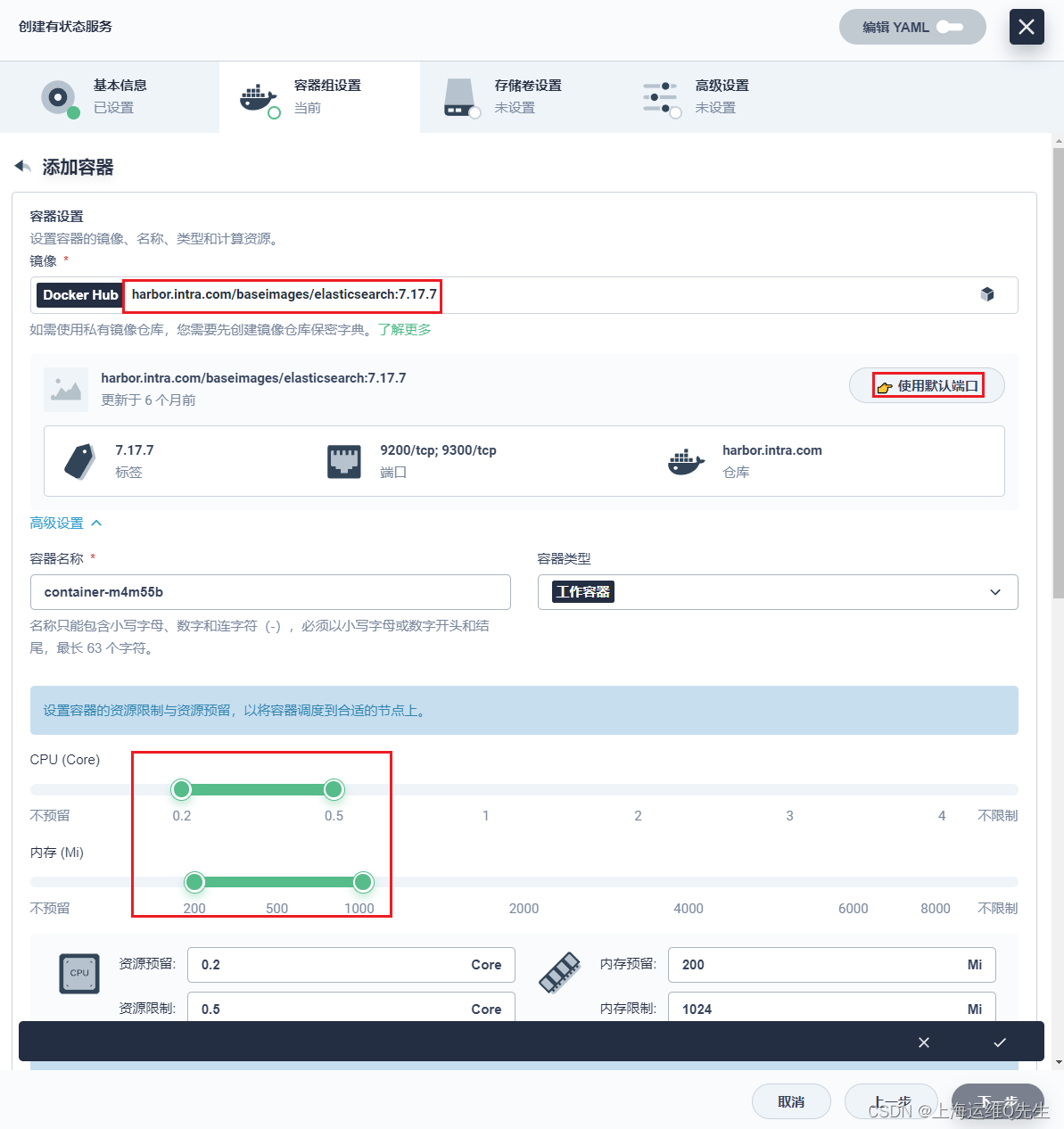

Name: elasticsearch

Image: harbor.intra.com/baseimages/elasticsearch:7.17.7

Add the three entries of es-conf as environment variables.

Mount es-pvc at /usr/share/elasticsearch/data.

[Next],[Create]

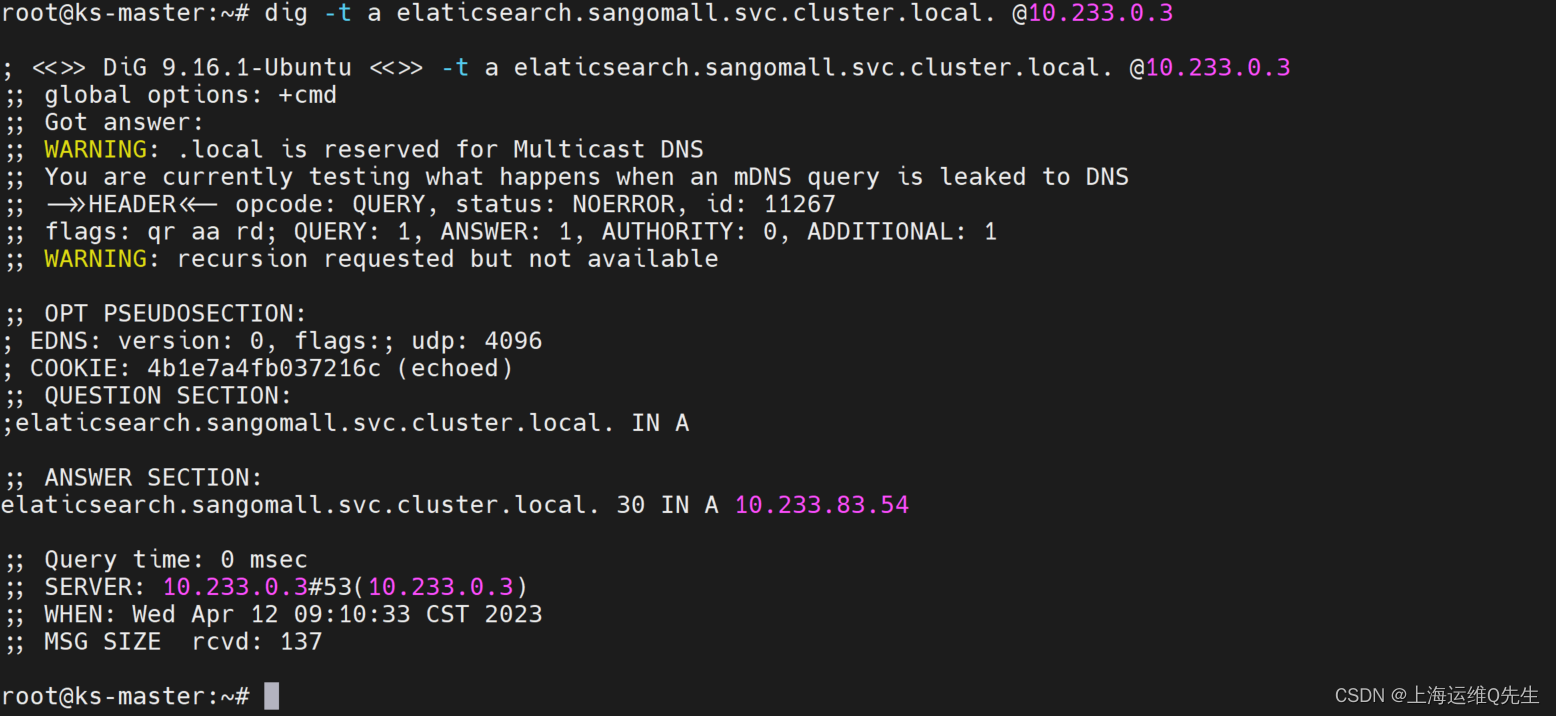

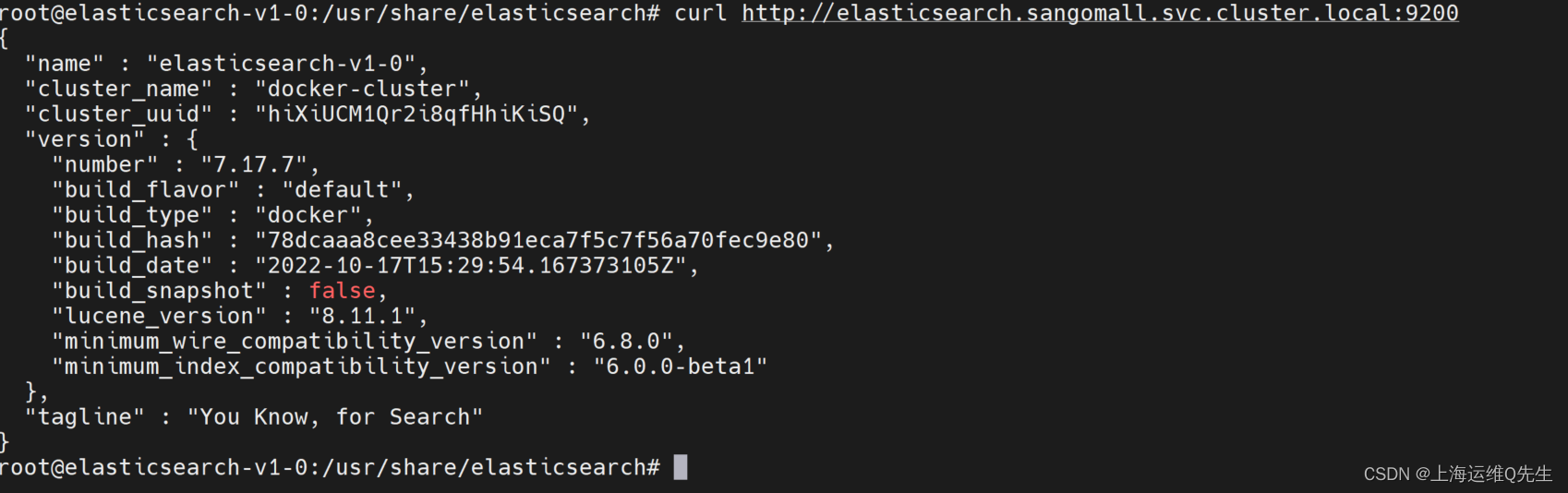

3.4 Access Test

dig -t a elasticsearch.sangomall.svc.cluster.local. @10.233.0.3

curl http://elasticsearch.sangomall.svc.cluster.local:9200
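The curl check can also be scripted. The sketch below uses only the standard library. It fetches GET / on port 9200 and keeps cluster_name and version.number; the version should match the 7.17.7 image tag. The helper names are hypothetical. The service name only resolves inside the cluster, so fetch_root has to run from a Pod.

```python
import json
import urllib.request

ES_URL = "http://elasticsearch.sangomall.svc.cluster.local:9200"


def parse_root_response(body: str) -> dict[str, str]:
    """Keep the fields worth checking from the JSON returned by GET /."""
    info = json.loads(body)
    return {"cluster_name": info["cluster_name"], "version": info["version"]["number"]}


def fetch_root(url: str = ES_URL, timeout: float = 5.0) -> dict[str, str]:
    """Same request as the curl above; the name only resolves inside the cluster."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_root_response(resp.read().decode("utf-8"))
```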

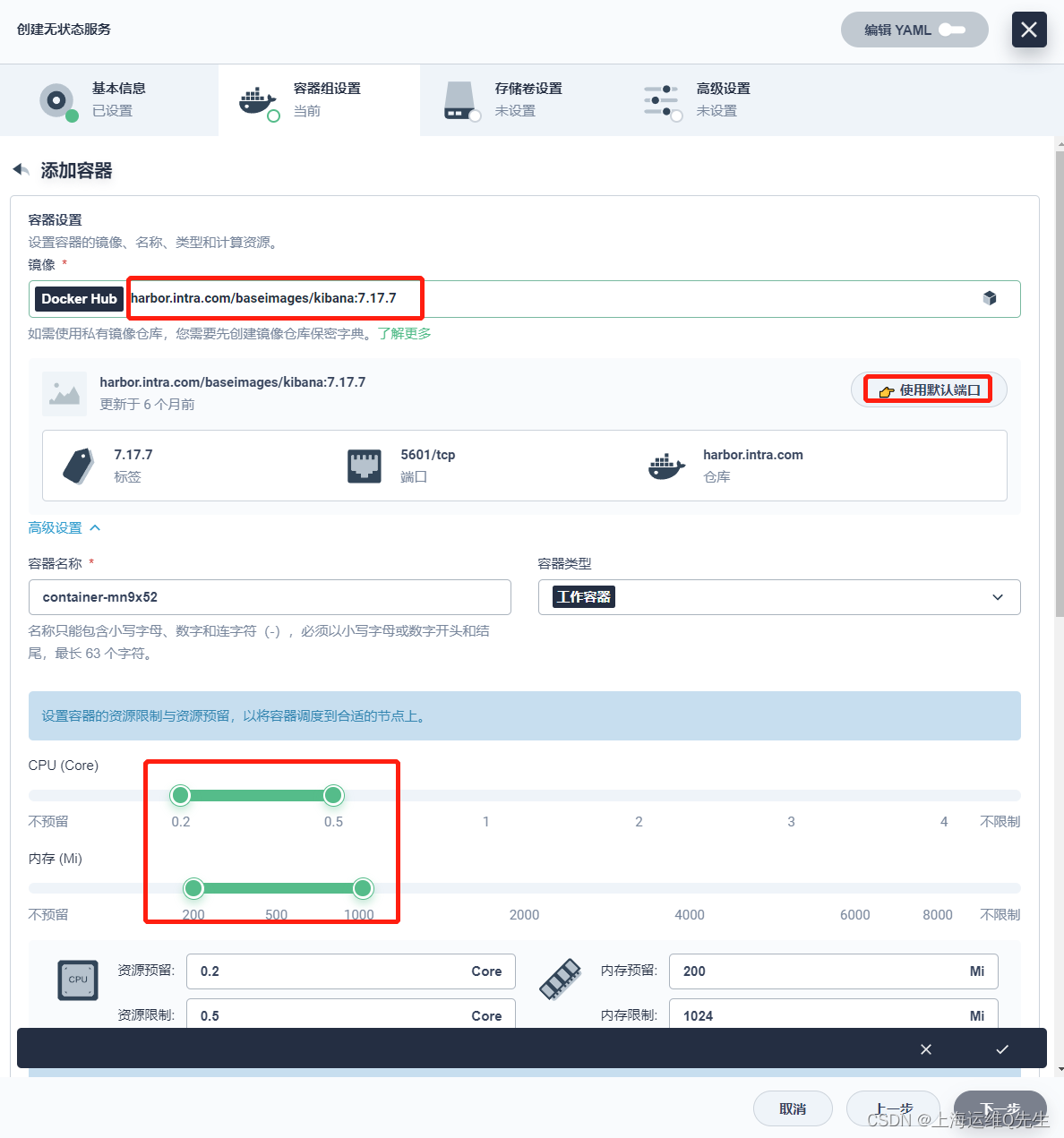

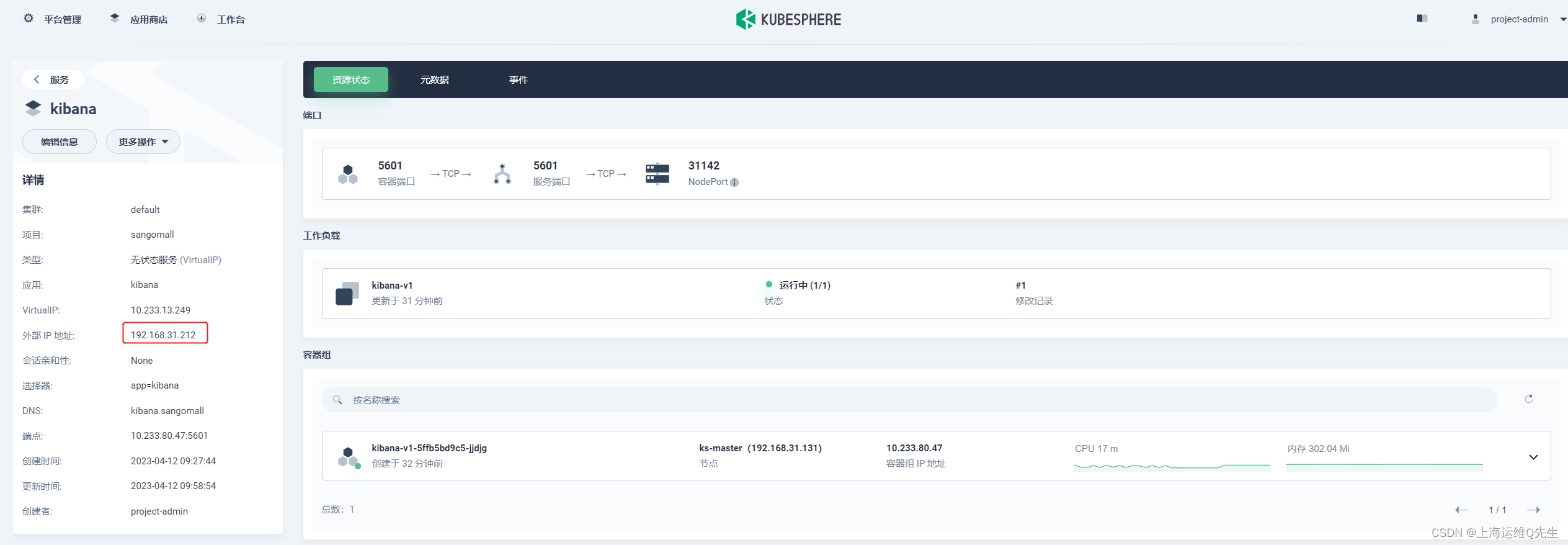

4. Kibana

4.1 Create the Kibana Service

Create a [Stateless Service] named kibana.

Select the kibana image from the Harbor registry:

harbor.intra.com/baseimages/kibana:7.17.7

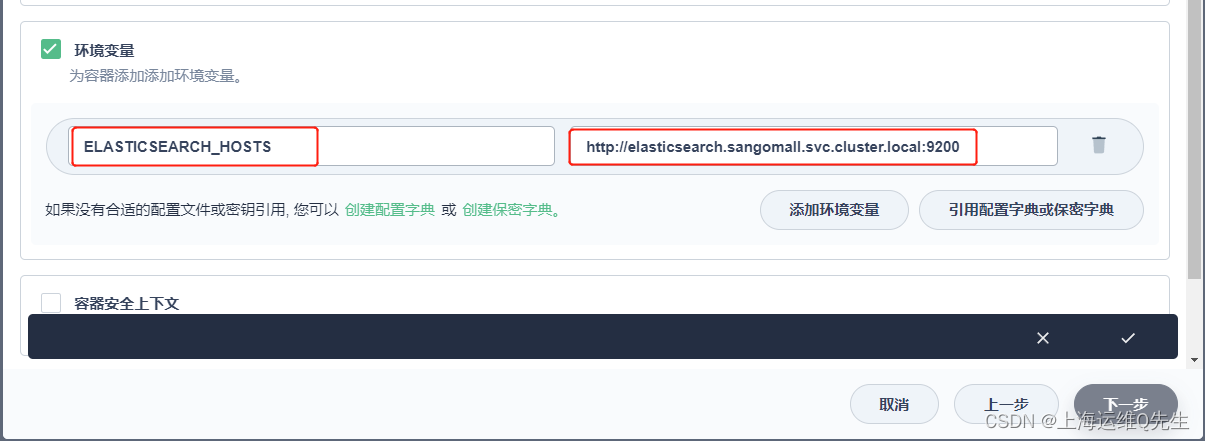

Add the environment variable:

ELASTICSEARCH_HOSTS http://elasticsearch.sangomall.svc.cluster.local:9200

[Next],[Next],[Create]

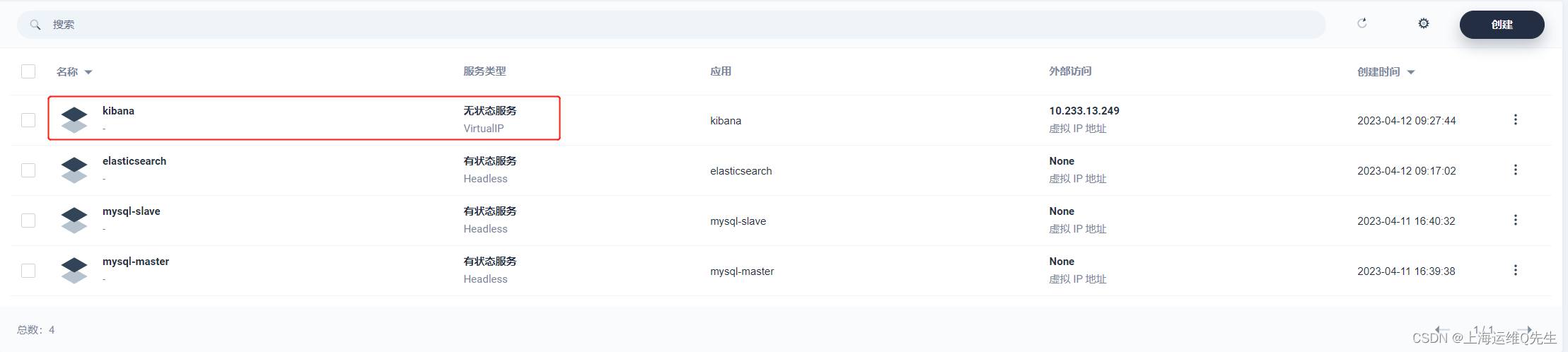

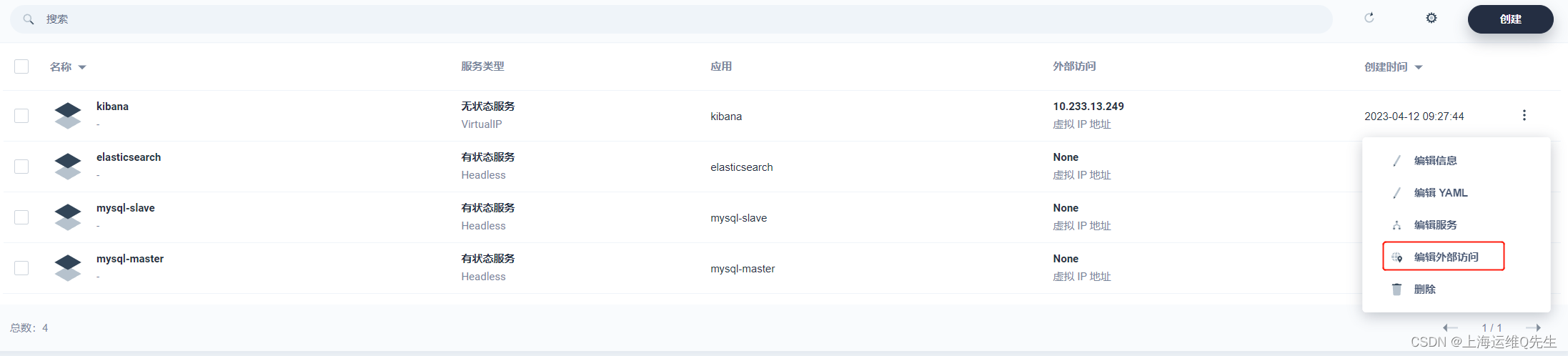

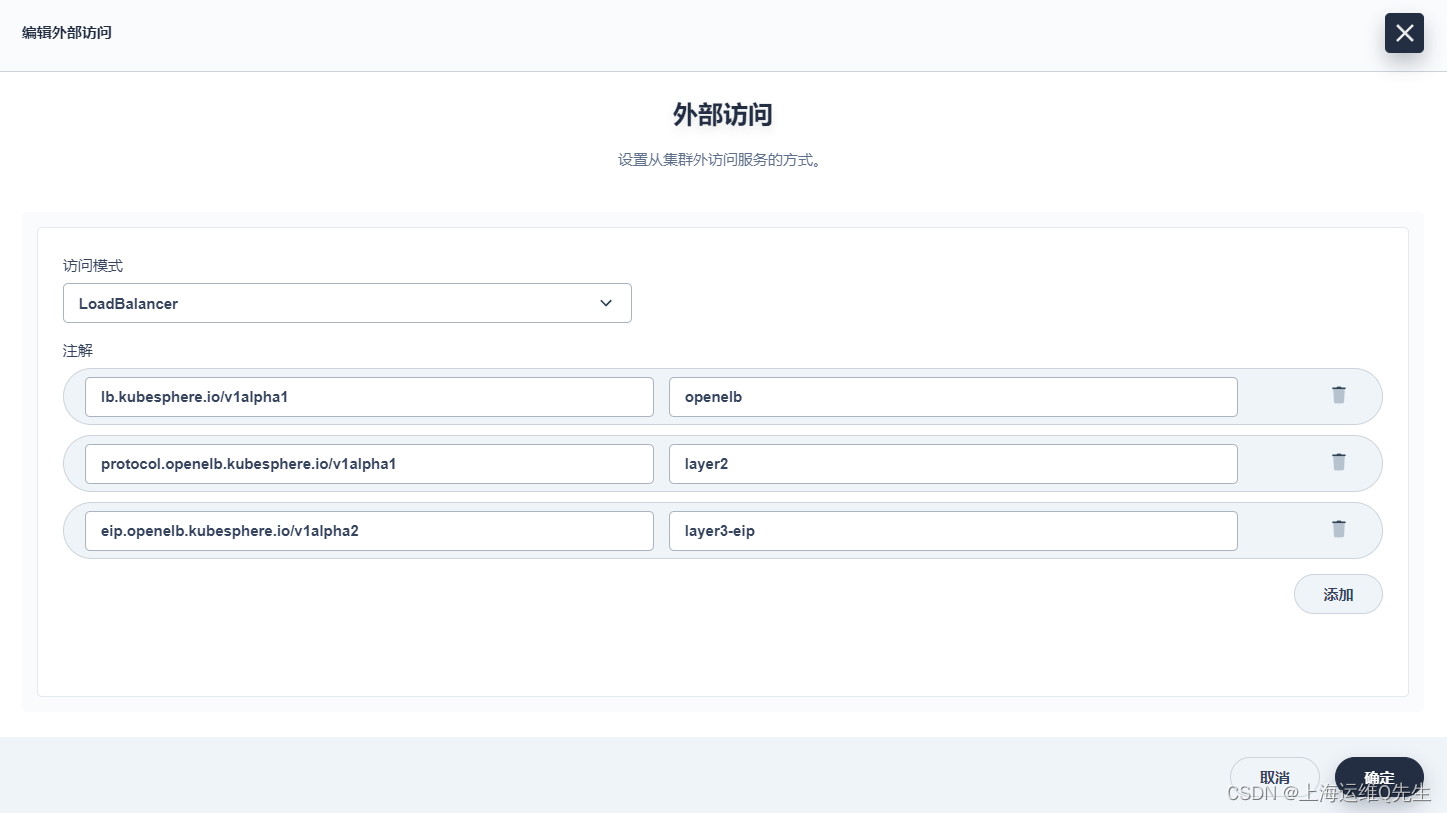

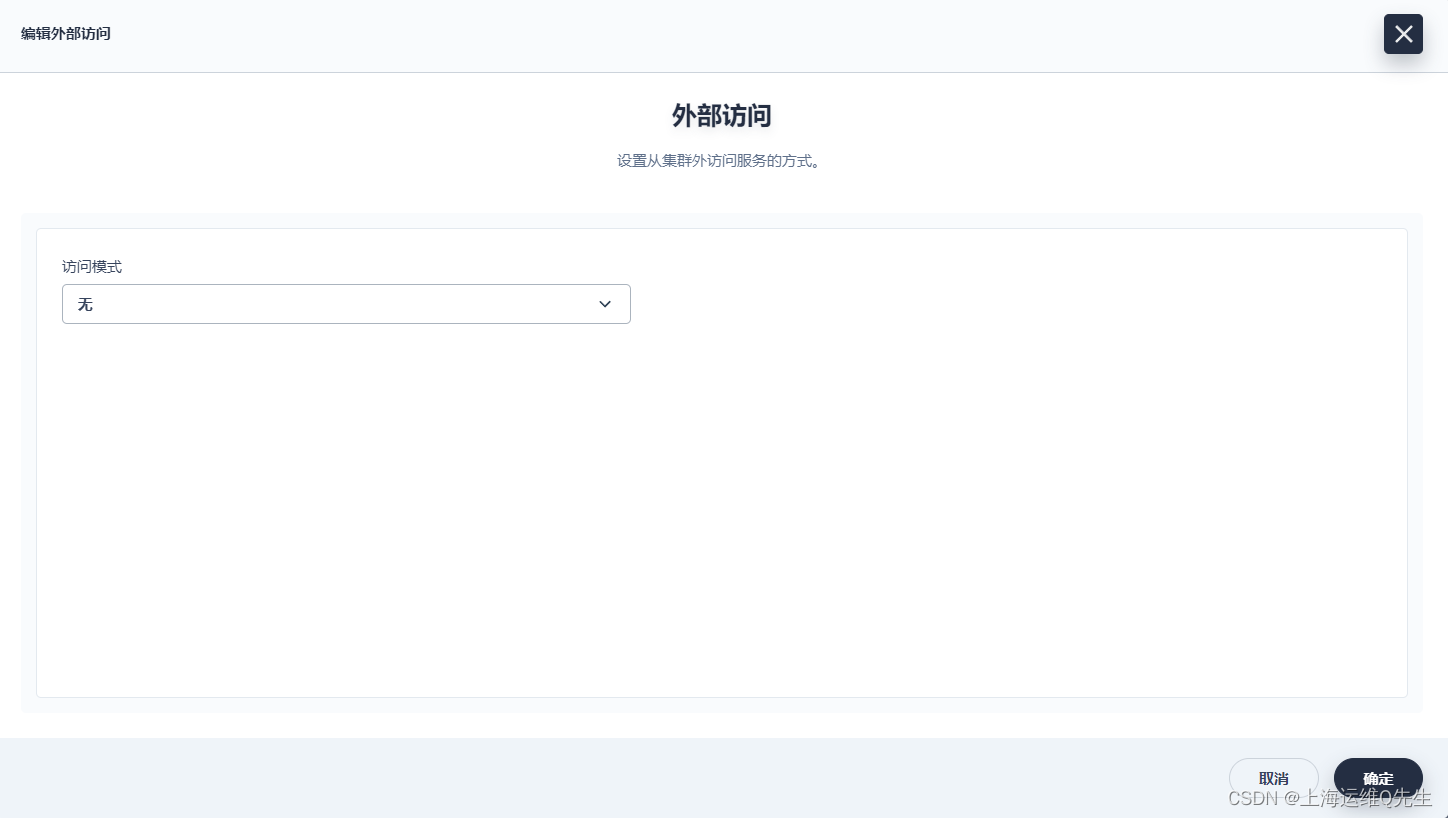

4.2 Expose Kibana through an EIP

Click [Edit External Access] and choose [LoadBalancer].

The value for eip.openelb.kubesphere.io/v1alpha2 is obtained with the following command:

# kubectl get eip

NAME CIDR USAGE TOTAL

layer3-eip 192.168.31.210-192.168.31.220 2 11

The NAME column is the value to use for eip.openelb.kubesphere.io/v1alpha2. Add these annotations:

lb.kubesphere.io/v1alpha1: openelb

protocol.openelb.kubesphere.io/v1alpha1: layer2

eip.openelb.kubesphere.io/v1alpha2: layer3-eip
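These three annotations are what [Edit External Access] writes onto the kibana Service. The declarative sketch below builds an equivalent Service object as JSON, which kubectl apply -f - also accepts. The selector labels are a hypothetical placeholder, and port 5601 is taken from the access URL in this section. Compare the output with kubectl get svc kibana -n sangomall -o yaml before applying anything.

```python
import json

# Annotation values from this section; the EIP name comes from `kubectl get eip`.
OPENELB_ANNOTATIONS = {
    "lb.kubesphere.io/v1alpha1": "openelb",
    "protocol.openelb.kubesphere.io/v1alpha1": "layer2",
    "eip.openelb.kubesphere.io/v1alpha2": "layer3-eip",
}


def loadbalancer_service(name: str, namespace: str, port: int,
                         selector: dict[str, str], eip: str) -> dict:
    """Build a LoadBalancer Service that asks OpenELB for an address from `eip`."""
    annotations = {**OPENELB_ANNOTATIONS, "eip.openelb.kubesphere.io/v1alpha2": eip}
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace, "annotations": annotations},
        "spec": {
            "type": "LoadBalancer",
            "selector": selector,
            "ports": [{"name": "http", "port": port, "targetPort": port, "protocol": "TCP"}],
        },
    }


if __name__ == "__main__":
    # Hypothetical selector: check the labels actually set on the kibana Pods first.
    svc = loadbalancer_service("kibana", "sangomall", 5601, {"app": "kibana"}, "layer3-eip")
    print(json.dumps(svc, indent=2))
```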

After creation:

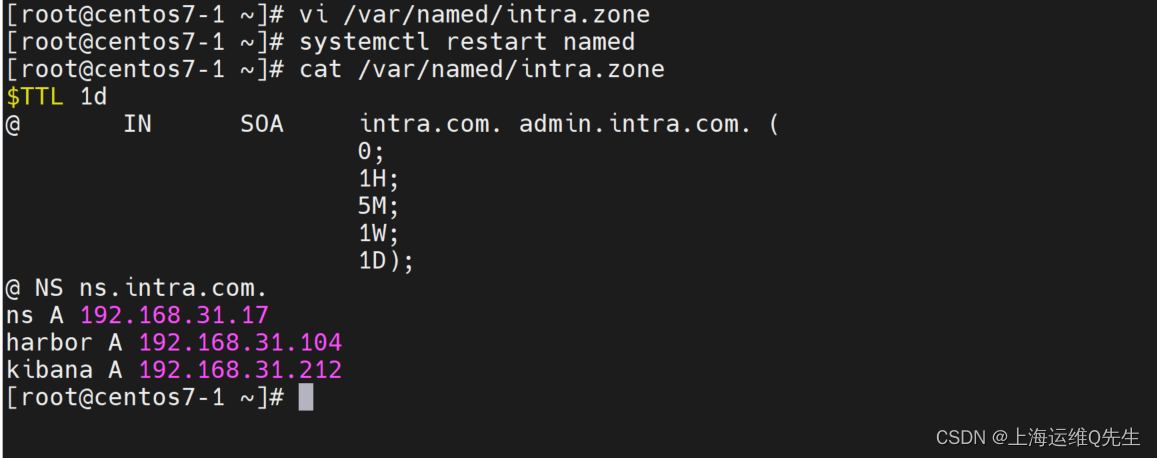

Add the assigned address to DNS or to the hosts file:

root@ks-master:~# ping kibana.intra.com

PING kibana.intra.com (192.168.31.212) 56(84) bytes of data.

64 bytes from ks-master (192.168.31.212): icmp_seq=1 ttl=64 time=0.051 ms

64 bytes from ks-master (192.168.31.212): icmp_seq=2 ttl=64 time=0.059 ms

64 bytes from ks-master (192.168.31.212): icmp_seq=3 ttl=64 time=0.064 ms

^C

--- kibana.intra.com ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2007ms

rtt min/avg/max/mdev = 0.051/0.058/0.064/0.005 ms

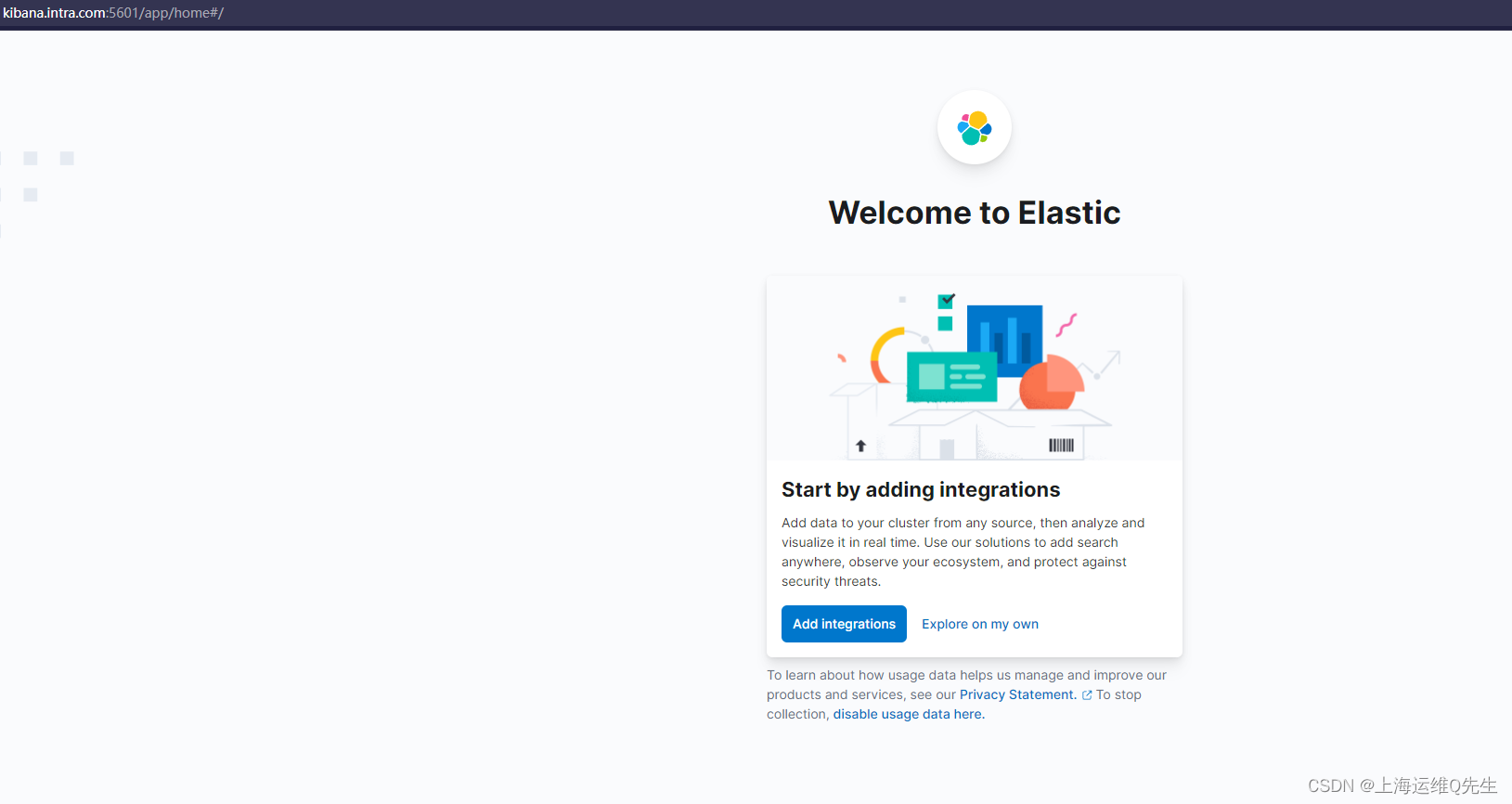

Open the page:

http://kibana.intra.com:5601/

Once the test passes, remove Kibana's external access.

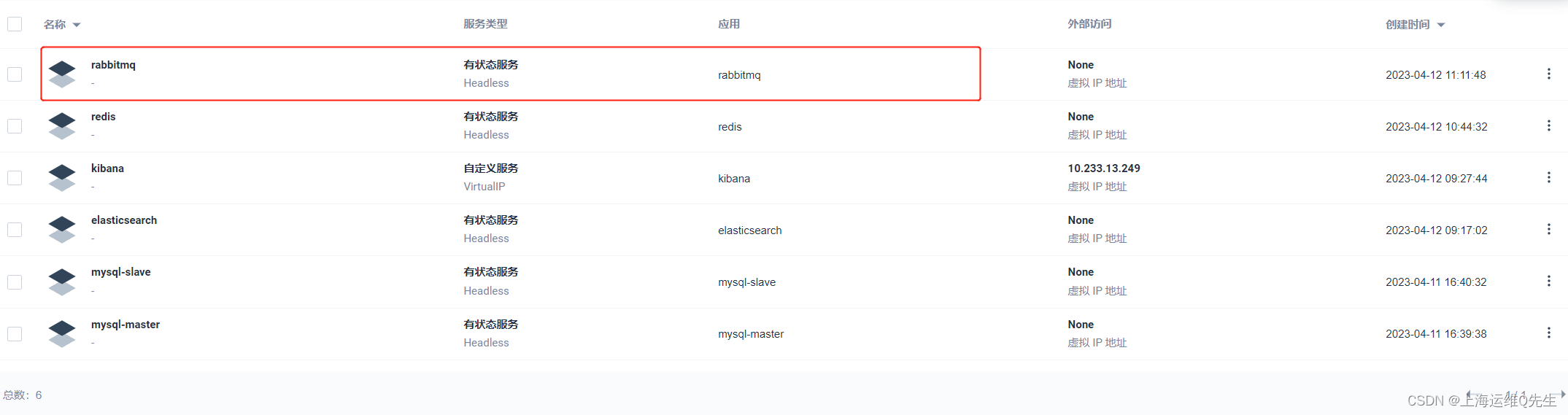

5. RabbitMQ

5.1 Create the PVC

rabbitmq-pvc

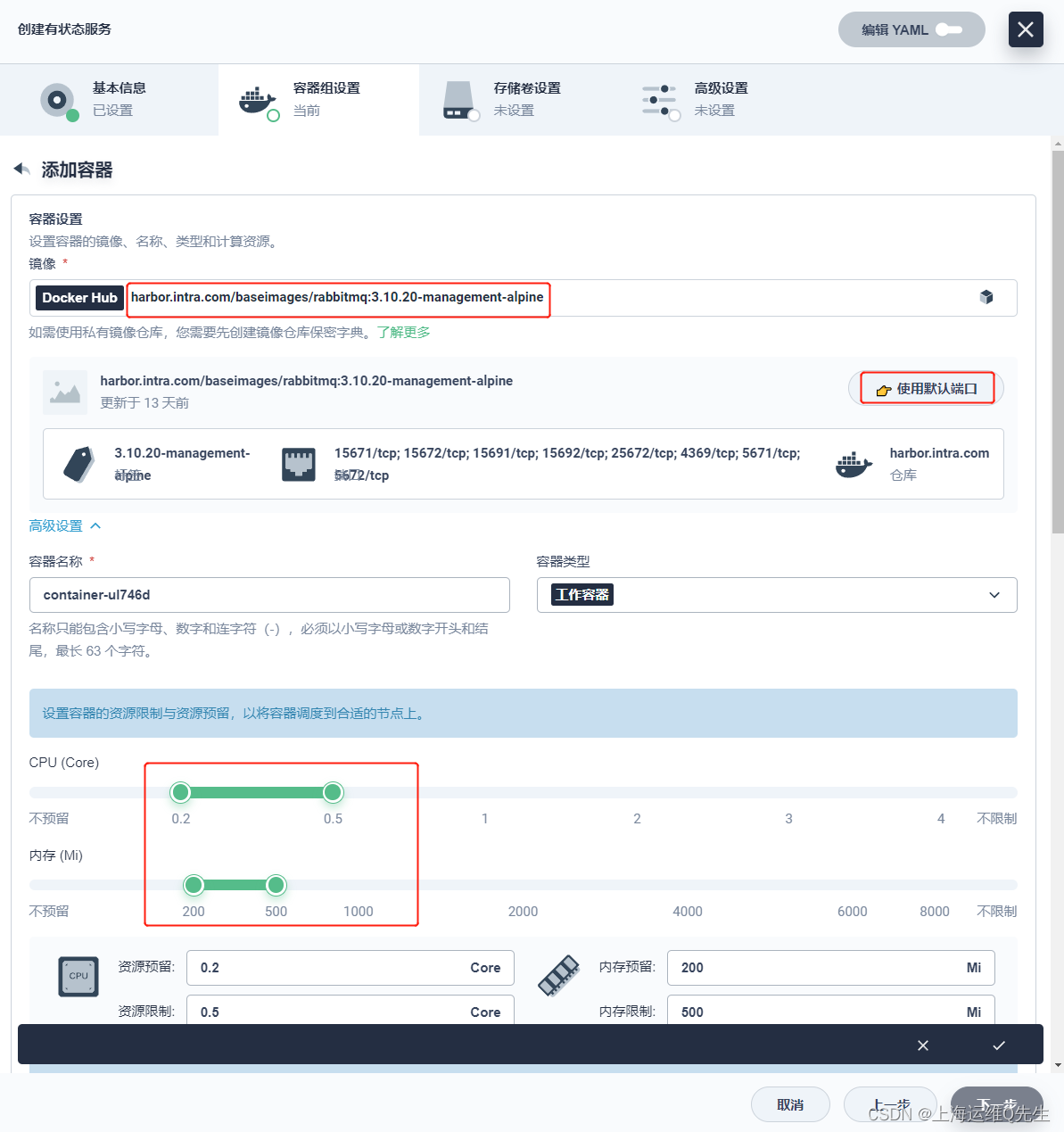

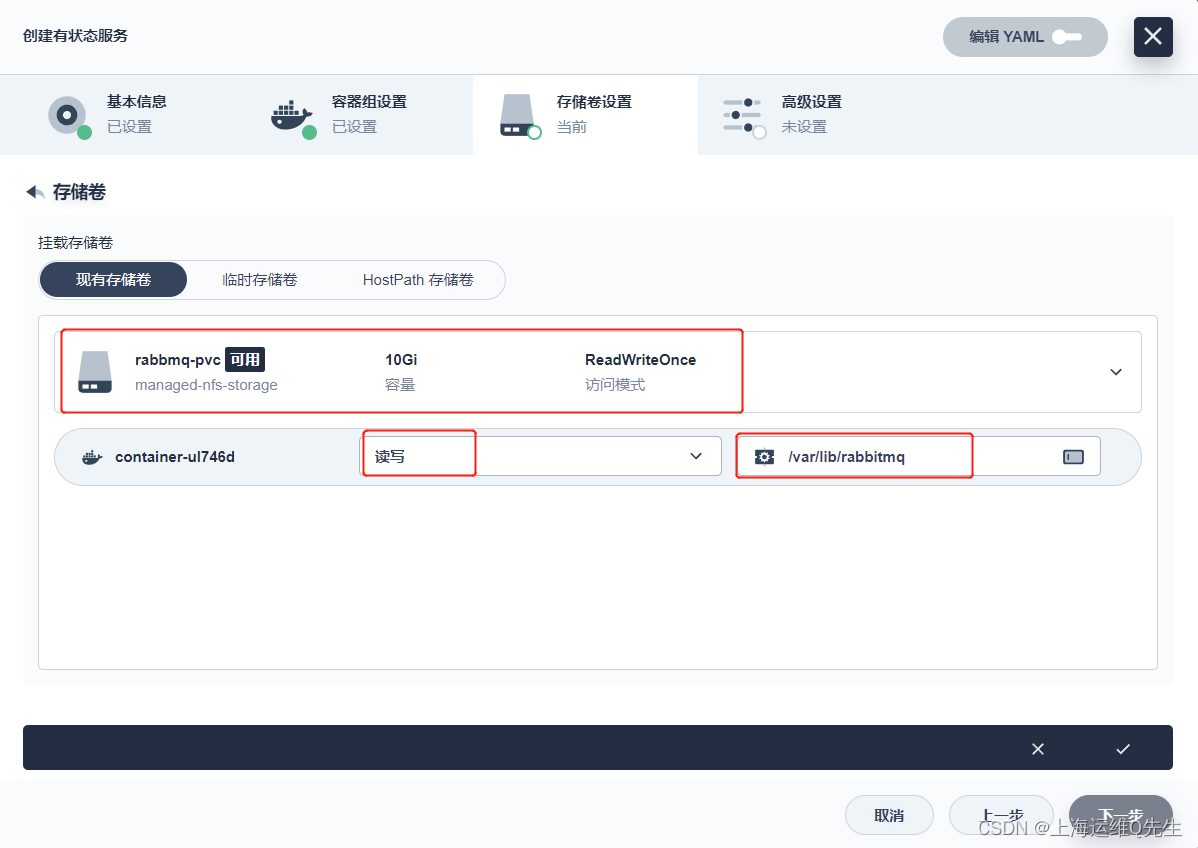

5.2 Deploy RabbitMQ

Create a [Stateful Service] named rabbitmq.

Image:

harbor.intra.com/baseimages/rabbitmq:3.10.20-management-alpine

Mount the PVC at /var/lib/rabbitmq.

[Next],[Create]

Verify that the service name resolves:

dig -t a rabbitmq.sangomall.svc.cluster.local. @10.233.0.3

root@ks-master:~# dig -t a rabbitmq.sangomall.svc.cluster.local. @10.233.0.3

; <<>> DiG 9.16.1-Ubuntu <<>> -t a rabbitmq.sangomall.svc.cluster.local. @10.233.0.3

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 32872

;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

; COOKIE: 1bcc9a0e98f56984 (echoed)

;; QUESTION SECTION:

;rabbitmq.sangomall.svc.cluster.local. IN A

;; ANSWER SECTION:

rabbitmq.sangomall.svc.cluster.local. 30 IN A 10.233.83.47

;; Query time: 0 msec

;; SERVER: 10.233.0.3#53(10.233.0.3)

;; WHEN: Wed Apr 12 11:29:58 CST 2023

;; MSG SIZE rcvd: 129

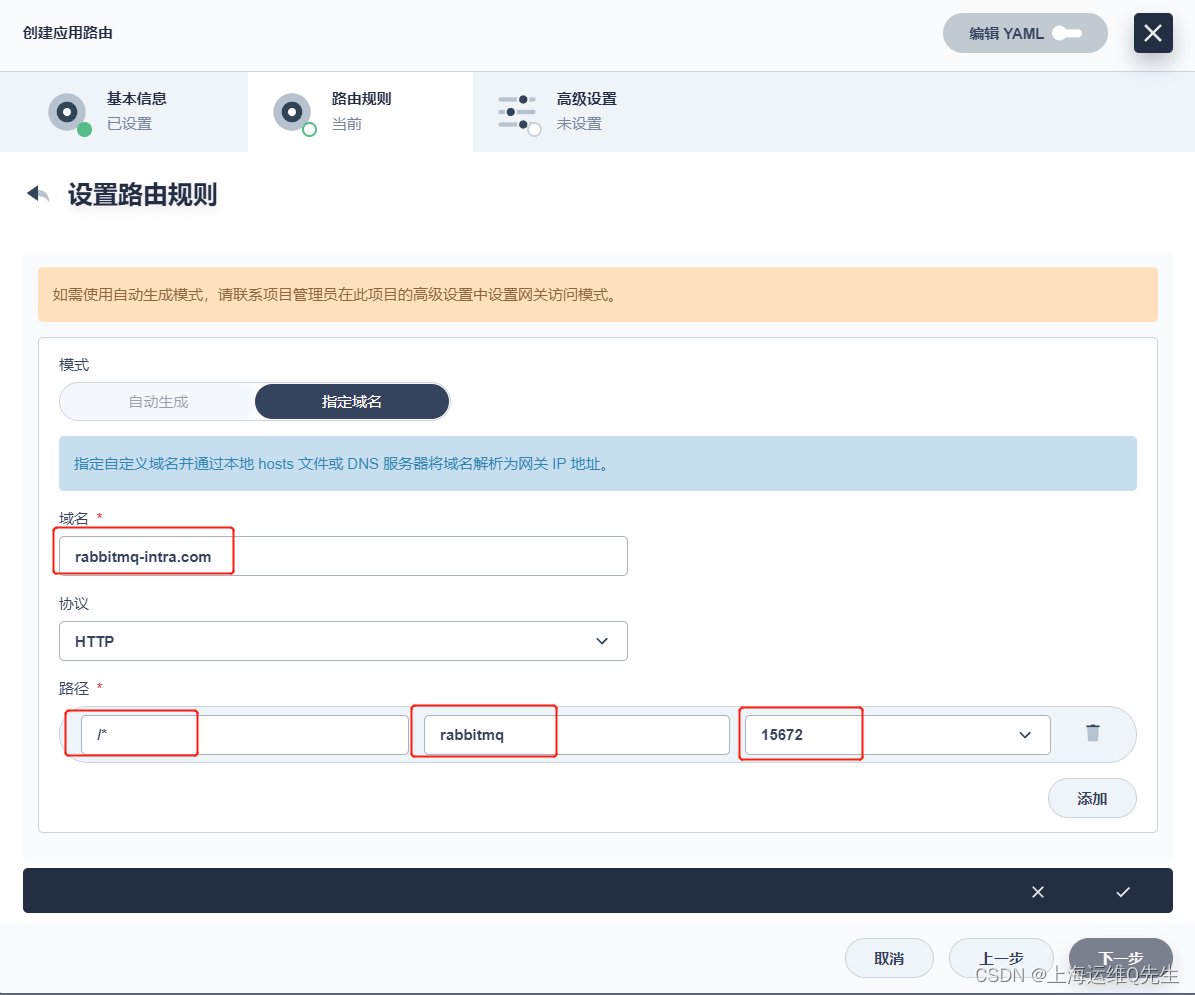

5.3 Access Test

Create a route named rabbitmq-route with host rabbitmq.intra.com, forwarding to service port 15672.

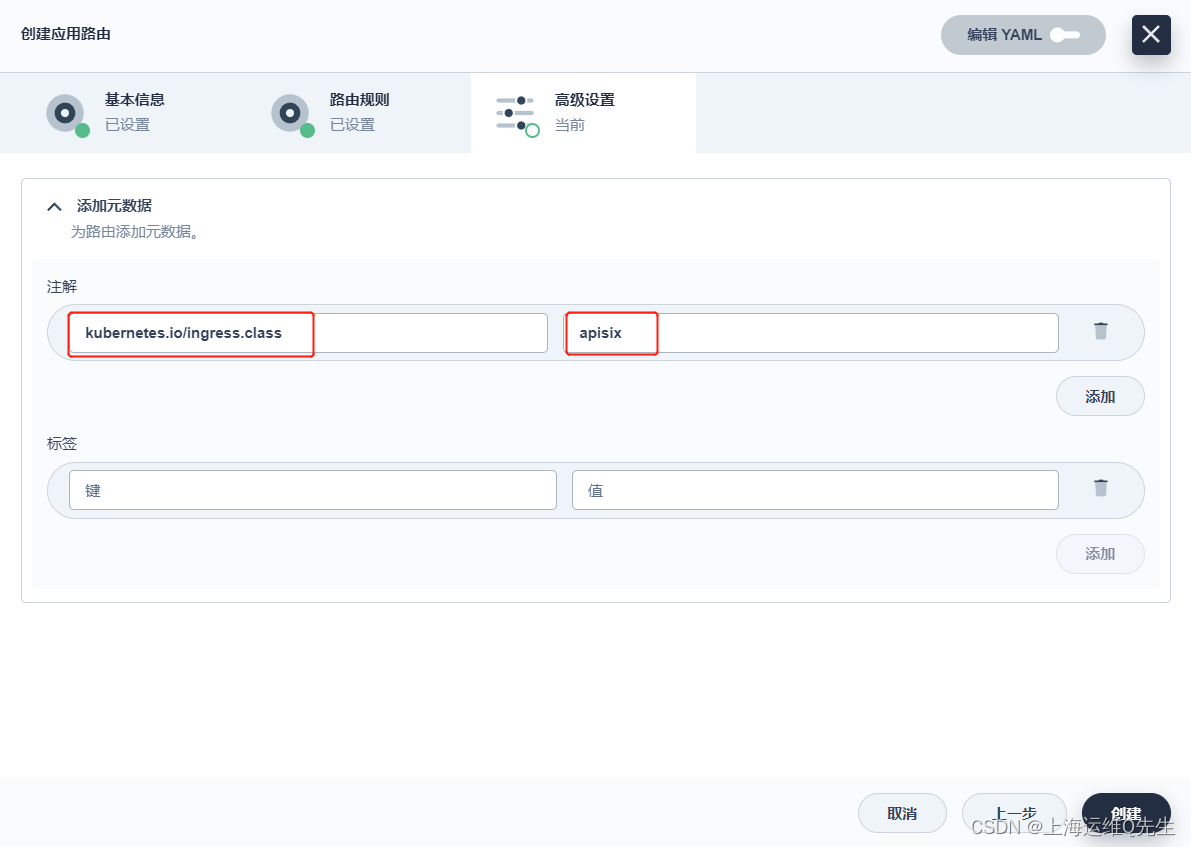

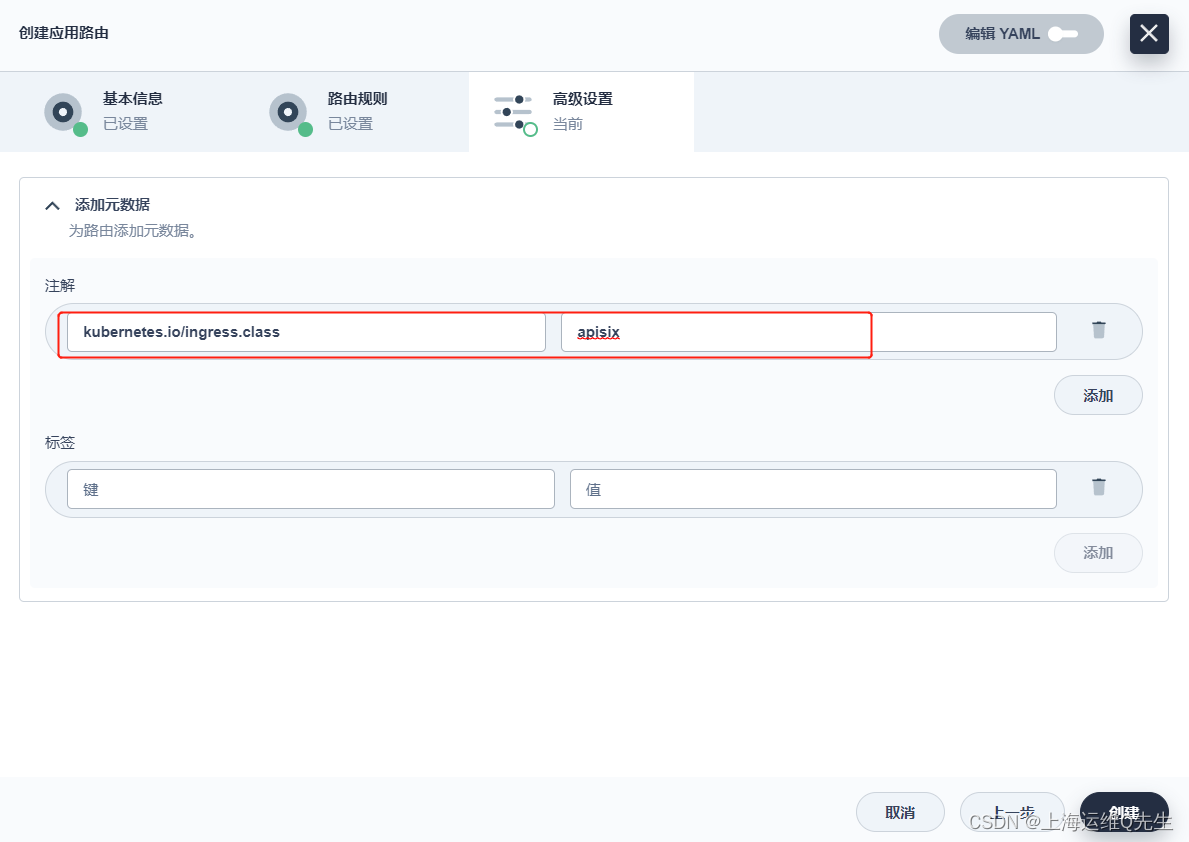

[Next],[Add Metadata], then add the annotation:

kubernetes.io/ingress.class: apisix
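The same annotation is added for every route in this document: nacos-server, zipkin-server, sentinel, skywalking-ui and rocketmq-dashboard. The sketch below generates, in Python, roughly the Ingress object that such a KubeSphere route corresponds to. apisix_ingress is a hypothetical helper. The path "/" with pathType Prefix is an assumption, not something read from the screenshots.

```python
import json


def apisix_ingress(name: str, namespace: str, host: str, service: str,
                   port: int, path: str = "/") -> dict:
    """Ingress routing `host` to `service:port`, handed to APISIX via the class annotation."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "annotations": {"kubernetes.io/ingress.class": "apisix"},
        },
        "spec": {
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": path,
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service, "port": {"number": port}}},
                }]},
            }],
        },
    }


if __name__ == "__main__":
    route = apisix_ingress("rabbitmq-route", "sangomall", "rabbitmq.intra.com", "rabbitmq", 15672)
    print(json.dumps(route, indent=2))
```

For the later routes, only the name, host, service and port change, for example ("zipkin-server-route", ..., "zipkin-server.intra.com", "zipkin-server", 9411).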

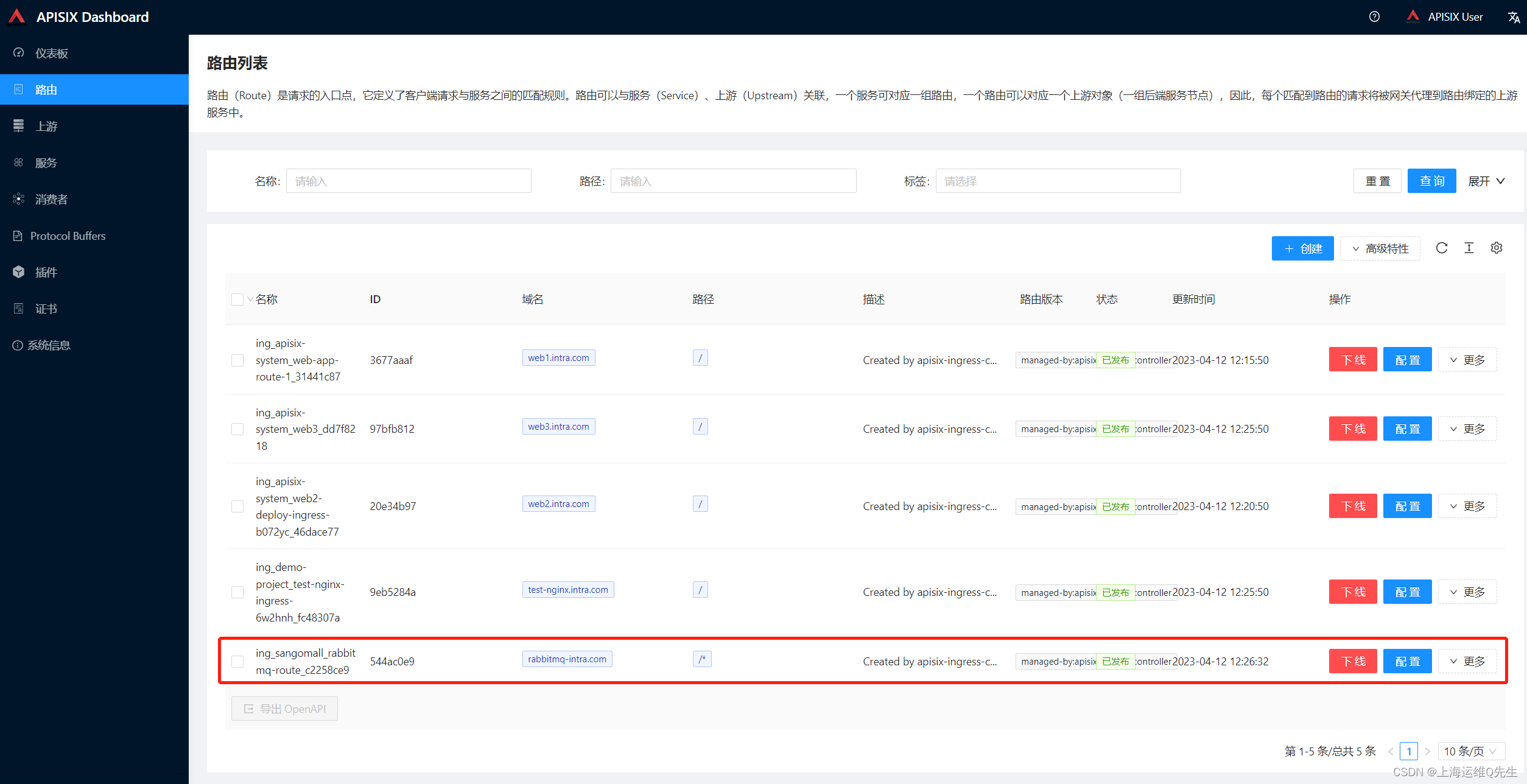

Log in to APISIX. The rabbitmq route has now been created:

http://192.168.31.131:31869/

In DNS, add an A record for the domain pointing at the APISIX gateway address:

[root@centos7-1 ~]# vi /var/named/intra.zone

[root@centos7-1 ~]# systemctl restart named

[root@centos7-1 ~]# cat /var/named/intra.zone

$TTL 1d

@ IN SOA intra.com. admin.intra.com. (

0;

1H;

5M;

1W;

1D);

@ NS ns.intra.com.

ns A 192.168.31.17

harbor A 192.168.31.104

kibana A 192.168.31.212

rabbitmq A 192.168.31.211

web1 A 192.168.31.211
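Each service in this document adds one A record by hand, and the SOA serial stays at 0. That works here because named is restarted after every edit, but a secondary server, if one existed, would not notice the changes. The sketch below generates the zone from a single host-to-IP mapping with a YYYYMMDDnn serial. That serial format is a common convention, not something this setup requires. The record names mirror intra.zone above, and the helper functions are hypothetical.

```python
from datetime import date


def date_serial(day: date, revision: int = 0) -> int:
    """YYYYMMDDnn serial: increases with every edit, so secondaries notice changes."""
    return int(day.strftime("%Y%m%d")) * 100 + revision


def render_zone(records: dict[str, str], serial: int, ttl: str = "1d") -> str:
    """Render intra.zone with the same SOA timers as above and one A record per host."""
    lines = [
        f"$TTL {ttl}",
        "@ IN SOA intra.com. admin.intra.com. (",
        f"    {serial} ; serial",
        "    1H ; refresh",
        "    5M ; retry",
        "    1W ; expire",
        "    1D ) ; negative-caching TTL",
        "@ NS ns.intra.com.",
    ]
    lines += [f"{host} A {ip}" for host, ip in records.items()]
    return "\n".join(lines) + "\n"


RECORDS = {
    "ns": "192.168.31.17",
    "harbor": "192.168.31.104",
    "kibana": "192.168.31.212",
    "rabbitmq": "192.168.31.211",  # APISIX gateway address
    "web1": "192.168.31.211",
}

if __name__ == "__main__":
    print(render_zone(RECORDS, date_serial(date.today(), 1)))
```

Before restarting named, running named-checkzone intra.com /var/named/intra.zone catches syntax errors in the edited file.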

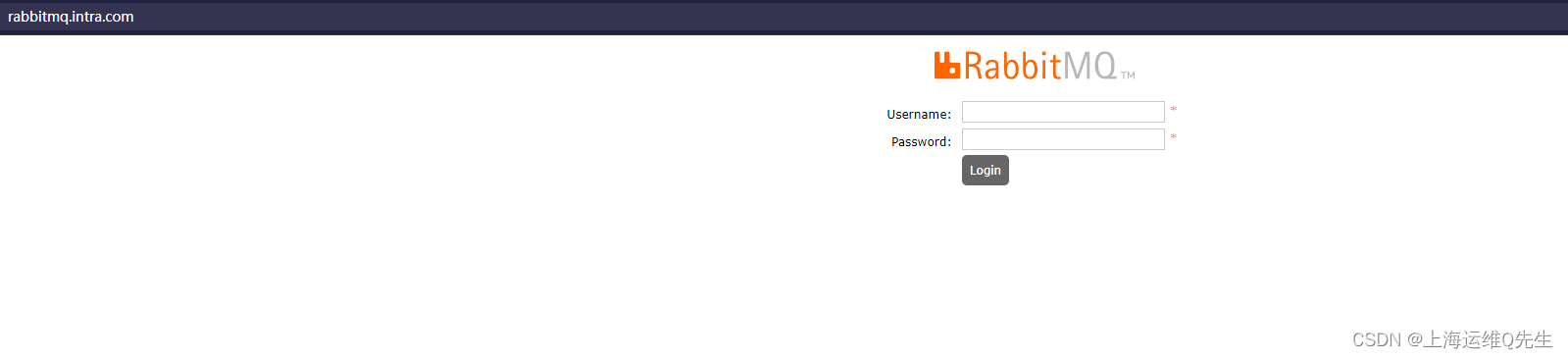

Open in a browser:

http://rabbitmq.intra.com/

# Username/password

guest/guest

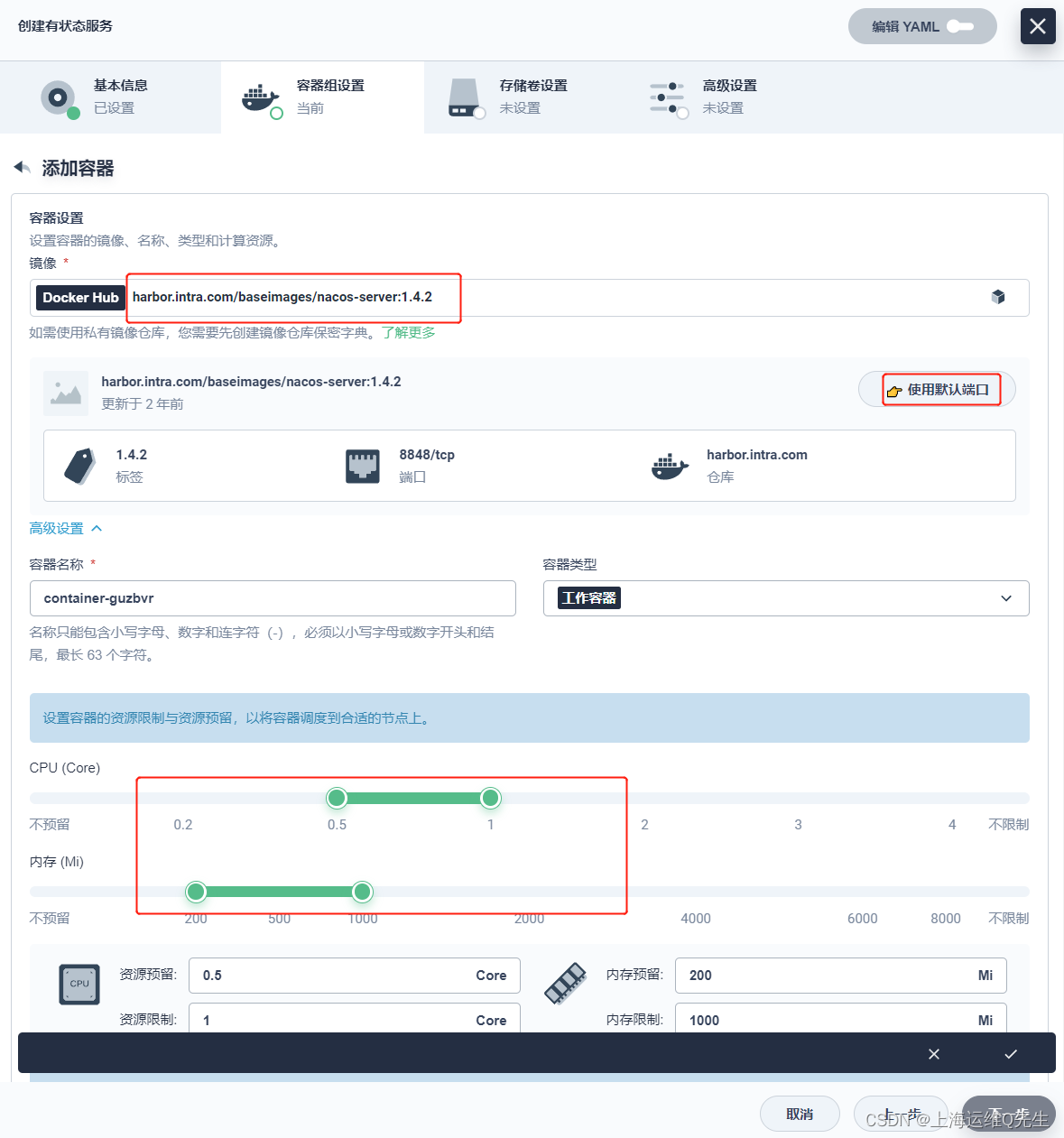

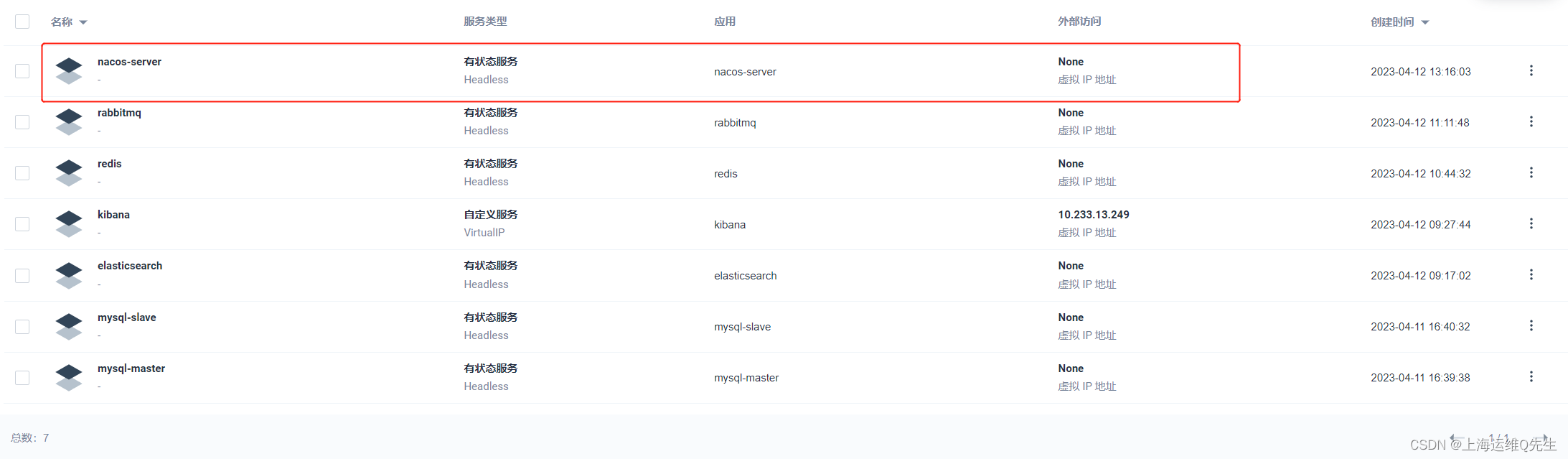

6. Nacos

6.1 Nacos PVC

nacos-pvc

6.2 Deploy the Nacos Service

Create a [Stateful Service] named nacos-server.

Image:

harbor.intra.com/baseimages/nacos-server:1.4.2

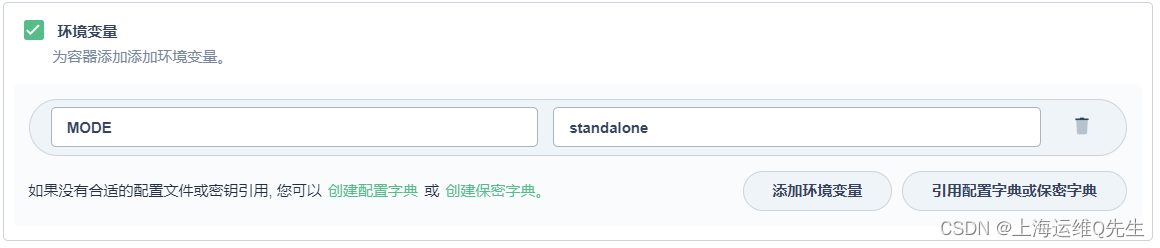

Environment variable (single-node mode): MODE standalone

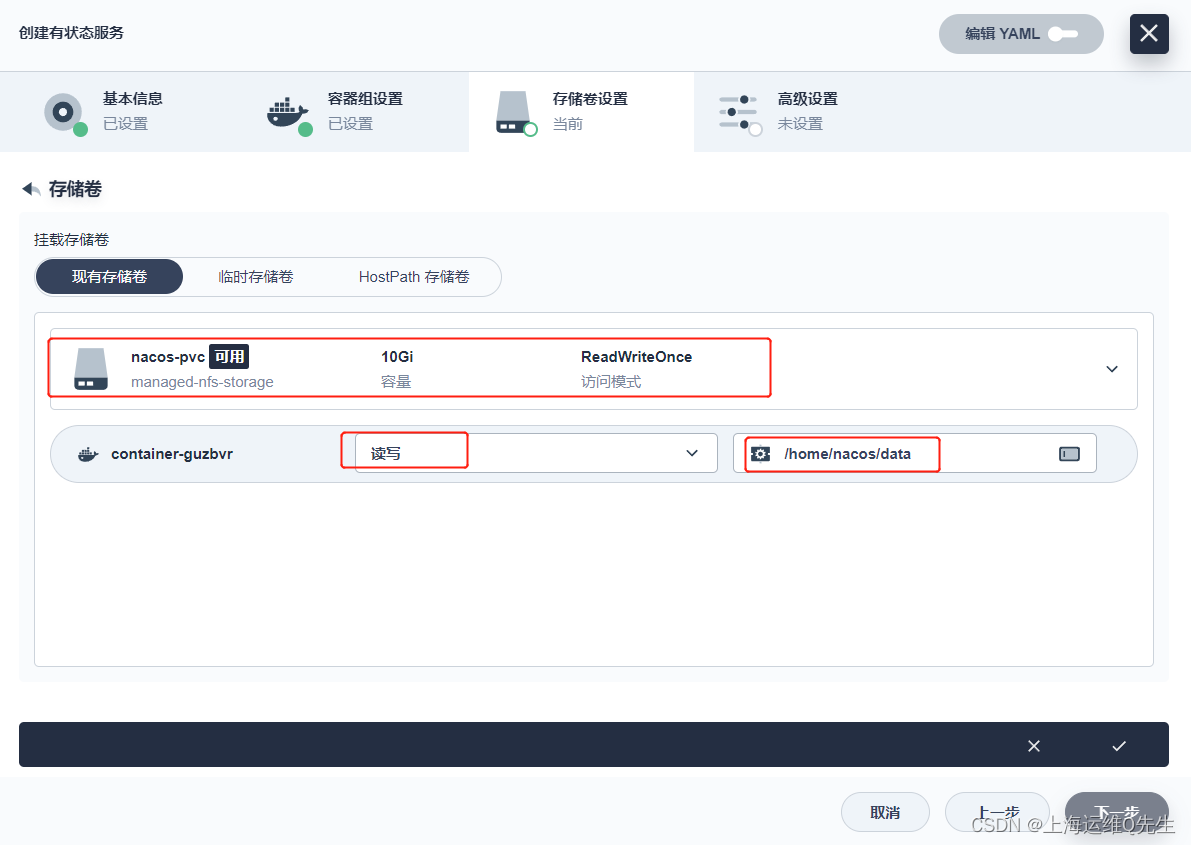

[Mount Volume]: mount nacos-pvc at /home/nacos/data

[Next],[Next],[Create]

6.3 Access Test

Test DNS resolution:

root@ks-master:~# dig -t a nacos-server.sangomall.svc.cluster.local. @10.233.0.3

; <<>> DiG 9.16.1-Ubuntu <<>> -t a nacos-server.sangomall.svc.cluster.local. @10.233.0.3

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 4119

;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

; COOKIE: 327890f817c8b95d (echoed)

;; QUESTION SECTION:

;nacos-server.sangomall.svc.cluster.local. IN A

;; ANSWER SECTION:

nacos-server.sangomall.svc.cluster.local. 30 IN A 10.233.80.48

;; Query time: 8 msec

;; SERVER: 10.233.0.3#53(10.233.0.3)

;; WHEN: Wed Apr 12 13:17:39 CST 2023

;; MSG SIZE rcvd: 137

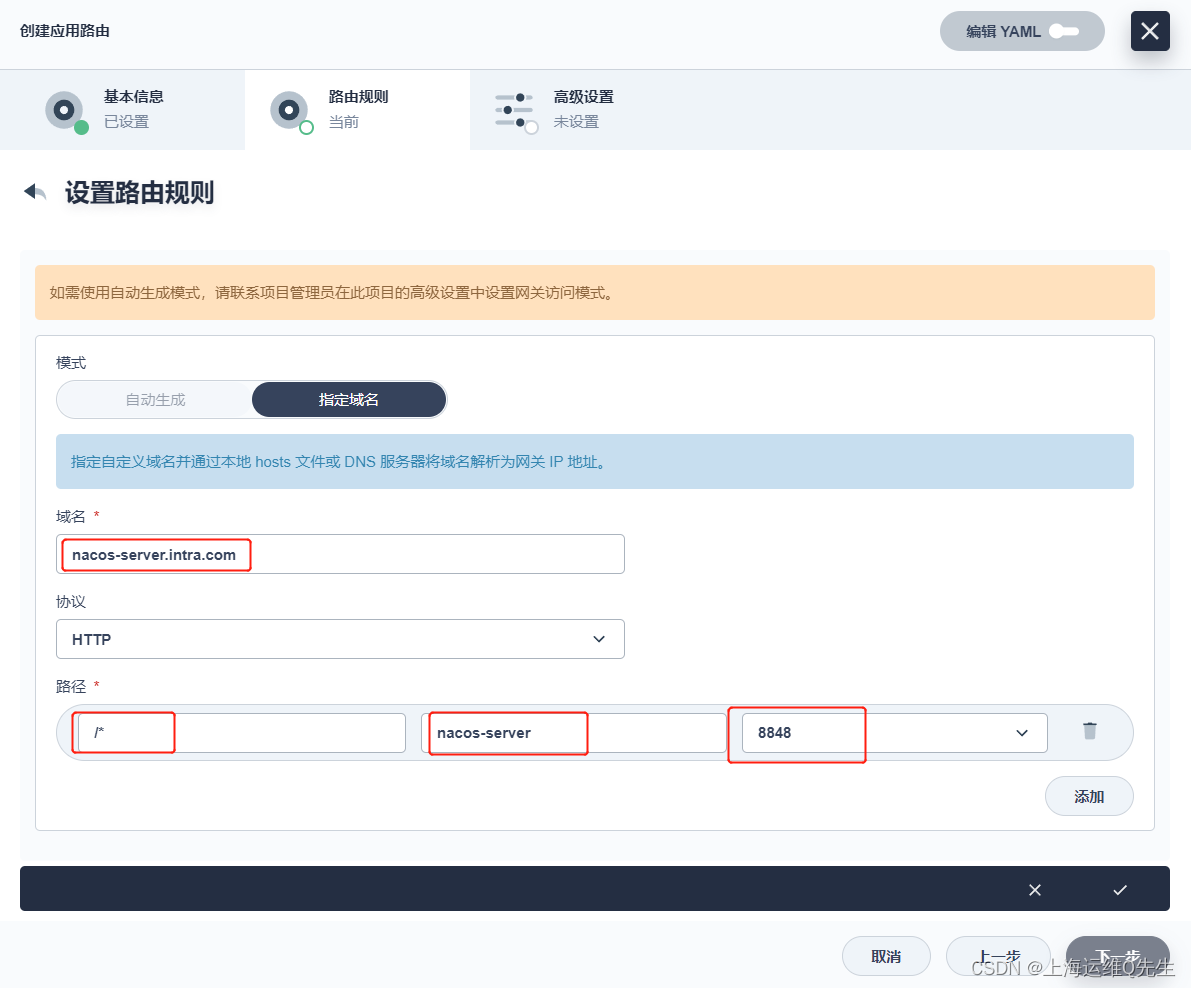

Add a route named nacos-server-route:

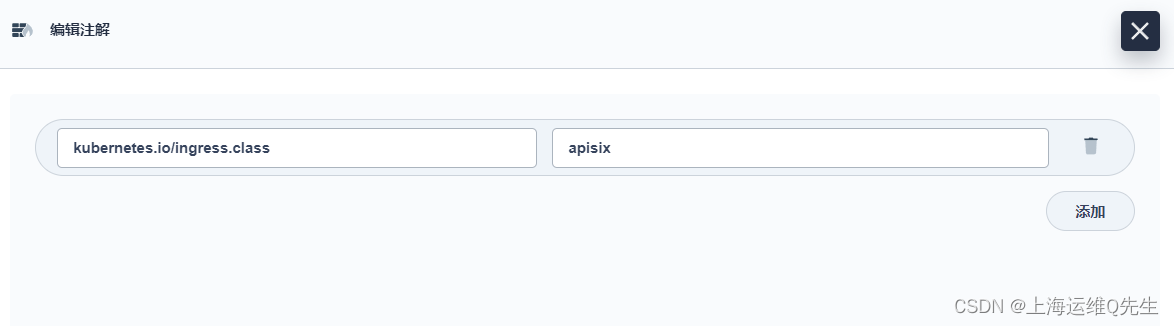

[Next],[Add Metadata]:

kubernetes.io/ingress.class: apisix

Add the DNS record:

[root@centos7-1 ~]# vi /var/named/intra.zone

[root@centos7-1 ~]# systemctl restart named

[root@centos7-1 ~]# cat /var/named/intra.zone

$TTL 1d

@ IN SOA intra.com. admin.intra.com. (

0;

1H;

5M;

1W;

1D);

@ NS ns.intra.com.

ns A 192.168.31.17

harbor A 192.168.31.104

kibana A 192.168.31.212

rabbitmq A 192.168.31.211

web1 A 192.168.31.211

nacos-server A 192.168.31.211

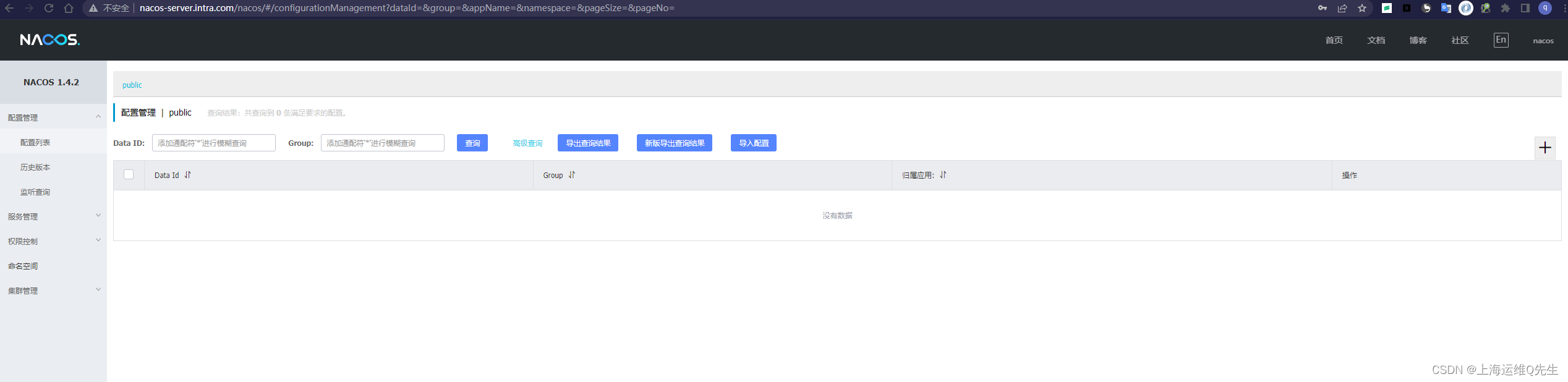

Access from a client:

http://nacos-server.intra.com/nacos

# Username/password

nacos/nacos

7. Zipkin

7.1 Service Dependencies

Zipkin depends on Elasticsearch, so first make sure elasticsearch resolves:

root@ks-master:~# dig -t a elasticsearch.sangomall.svc.cluster.local. @10.233.0.3

; <<>> DiG 9.16.1-Ubuntu <<>> -t a elasticsearch.sangomall.svc.cluster.local. @10.233.0.3

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 50774

;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

; COOKIE: facb147d338b5dec (echoed)

;; QUESTION SECTION:

;elasticsearch.sangomall.svc.cluster.local. IN A

;; ANSWER SECTION:

elasticsearch.sangomall.svc.cluster.local. 30 IN A 10.233.83.48

;; Query time: 0 msec

;; SERVER: 10.233.0.3#53(10.233.0.3)

;; WHEN: Wed Apr 12 13:35:20 CST 2023

;; MSG SIZE rcvd: 139

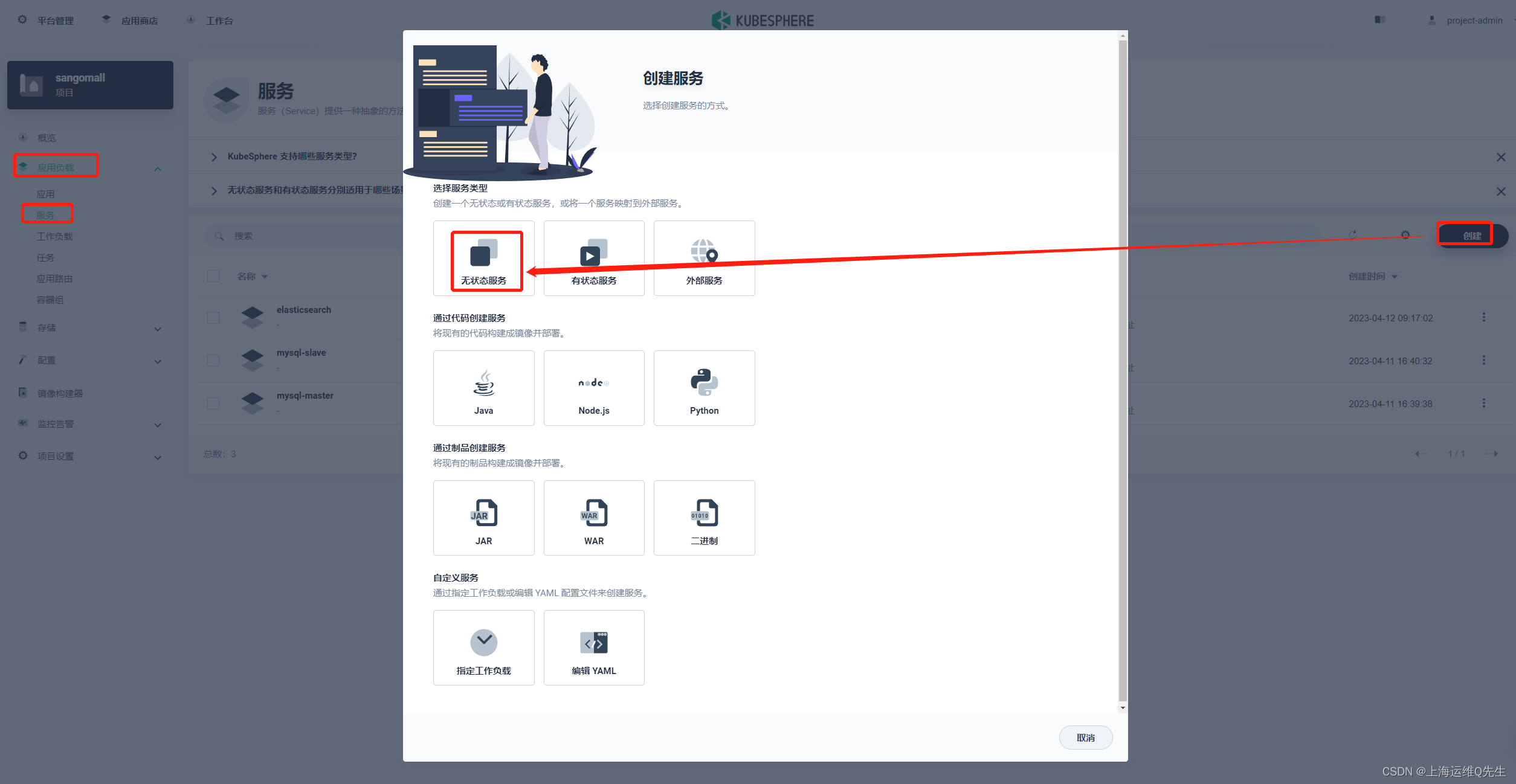

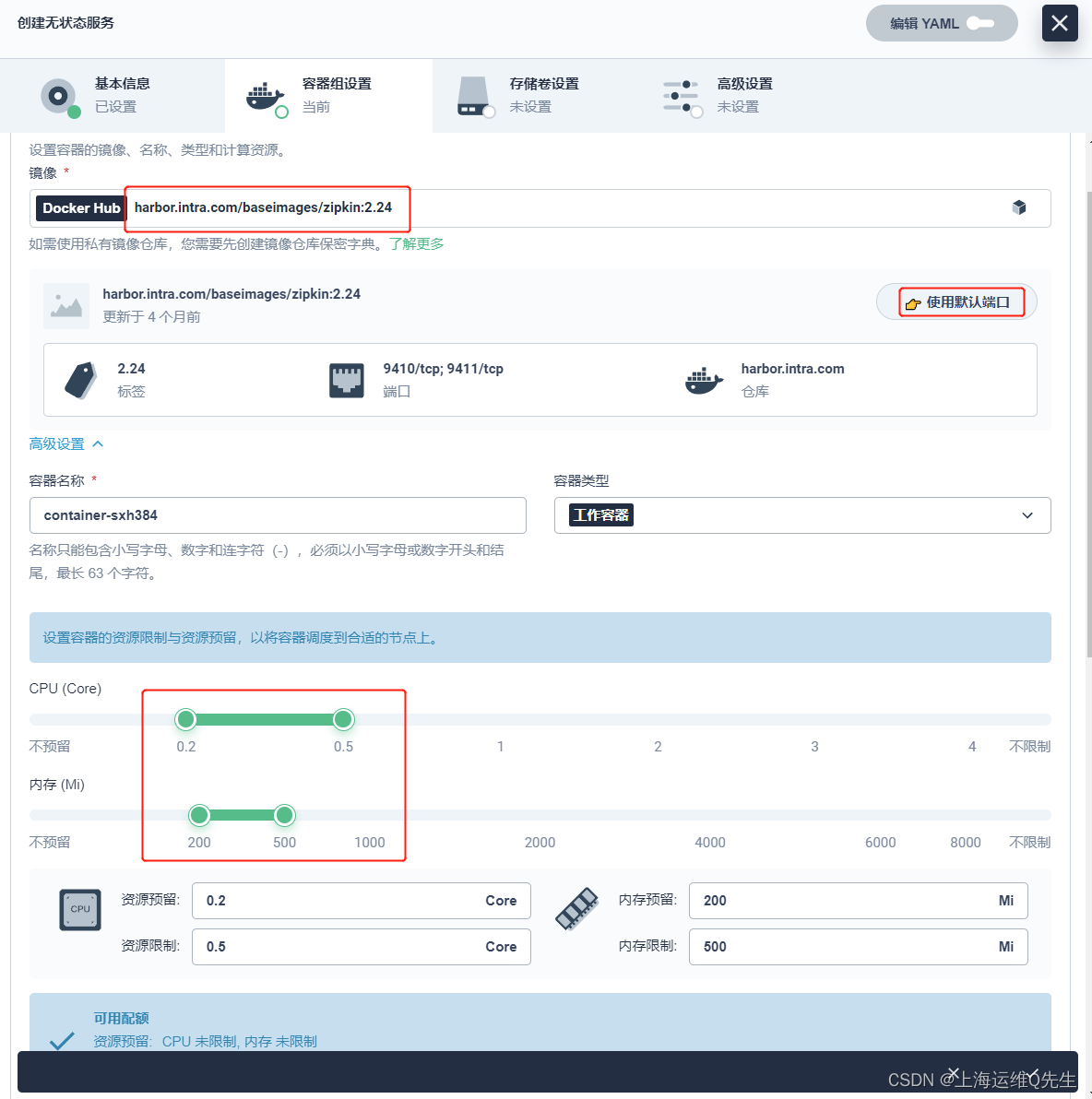

7.2 Deploy Zipkin

Create a [Stateless Service] named zipkin-server.

Image:

harbor.intra.com/baseimages/zipkin:2.24

Configure the environment variables:

STORAGE_TYPE: elasticsearch

ES_HOSTS: elasticsearch.sangomall.svc.cluster.local.:9200

[Next],[Next],[Create]

Test whether the name resolves:

dig -t a zipkin-server.sangomall.svc.cluster.local. @10.233.0.3

The result is fine as well:

root@ks-master:~# dig -t a zipkin-server.sangomall.svc.cluster.local. @10.233.0.3

; <<>> DiG 9.16.1-Ubuntu <<>> -t a zipkin-server.sangomall.svc.cluster.local. @10.233.0.3

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 1210

;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

; COOKIE: ee7d7b15b95e0fcc (echoed)

;; QUESTION SECTION:

;zipkin-server.sangomall.svc.cluster.local. IN A

;; ANSWER SECTION:

zipkin-server.sangomall.svc.cluster.local. 30 IN A 10.233.27.128

;; Query time: 3 msec

;; SERVER: 10.233.0.3#53(10.233.0.3)

;; WHEN: Wed Apr 12 13:50:13 CST 2023

;; MSG SIZE rcvd: 139

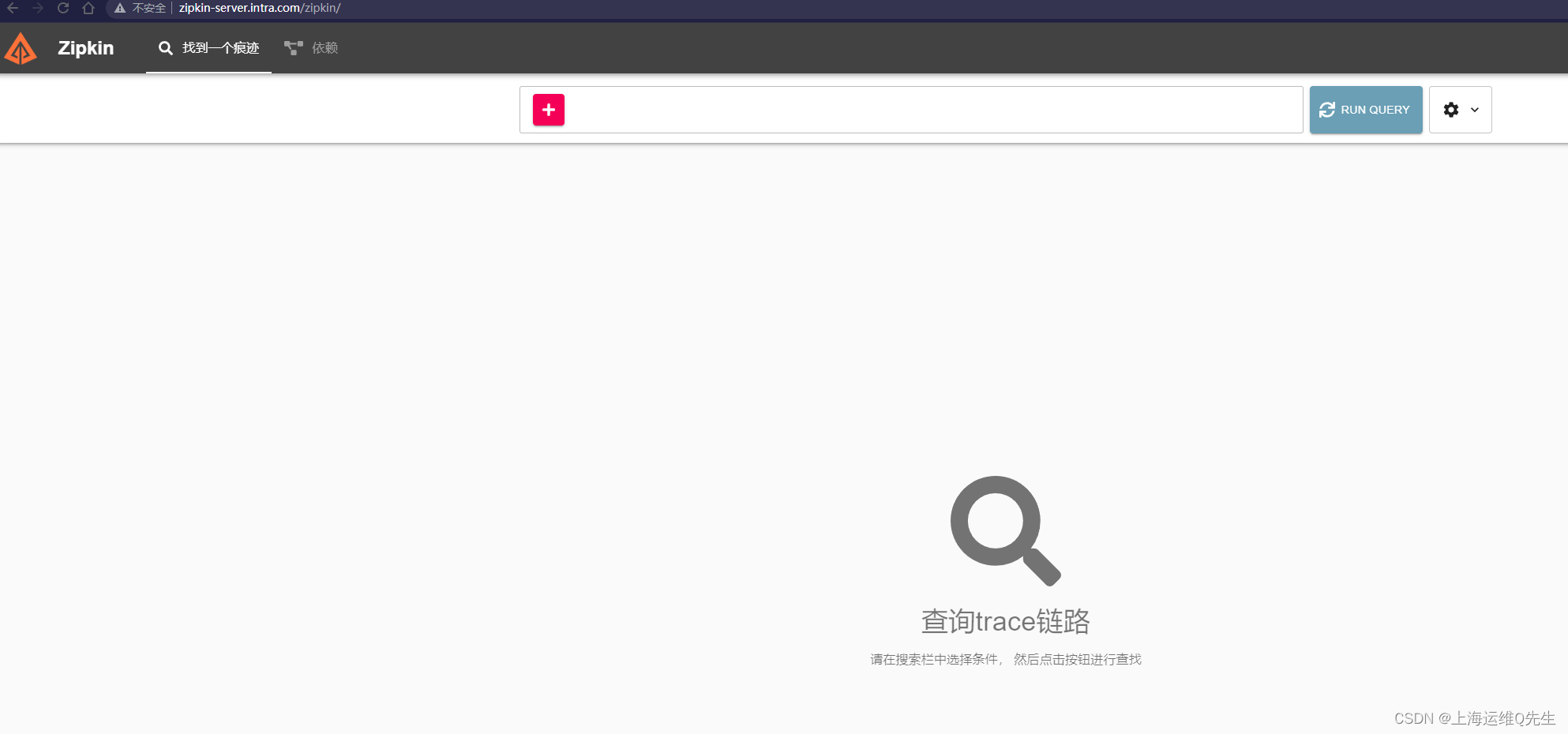

7.3 Access Test

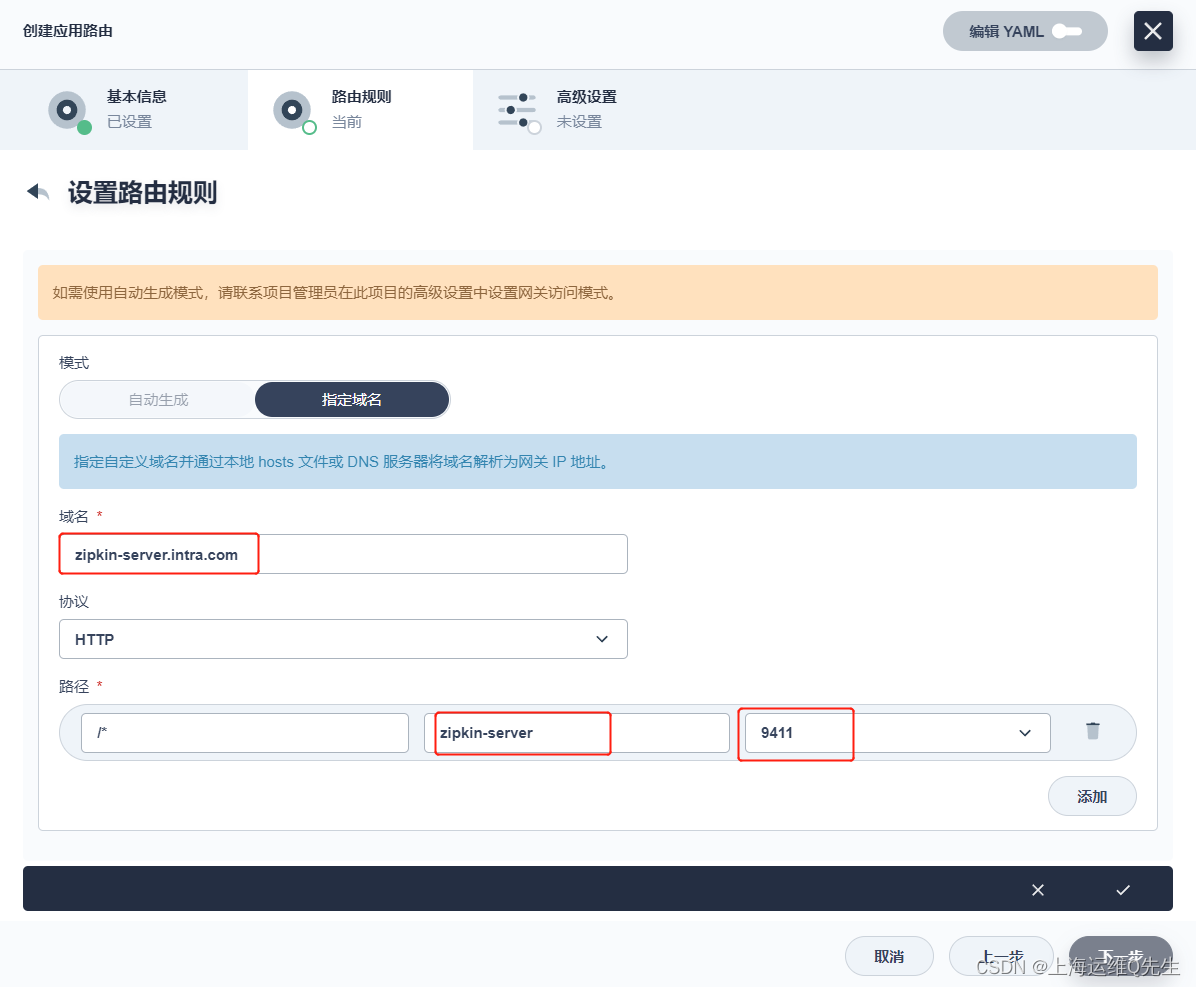

Create a route named zipkin-server-route with host zipkin-server.intra.com, forwarding to service port 9411.

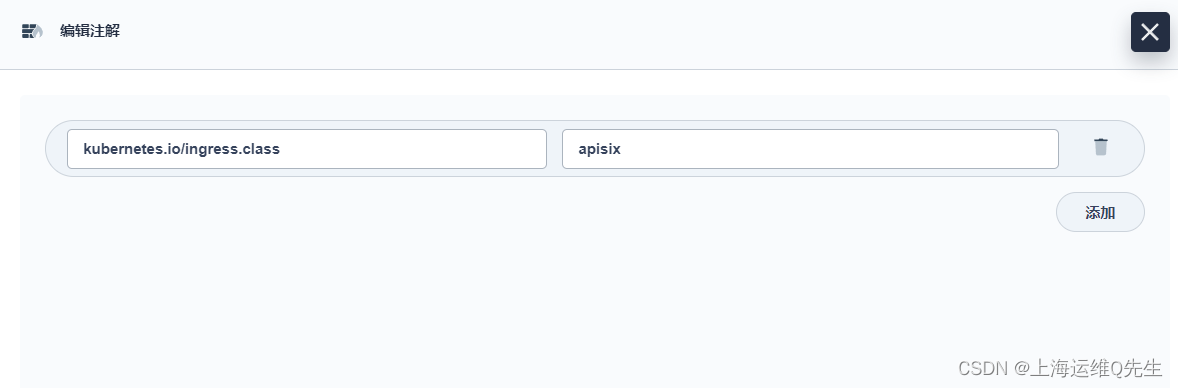

[Add Metadata]:

kubernetes.io/ingress.class: apisix

Add the DNS record:

[root@centos7-1 ~]# cat /var/named/intra.zone

$TTL 1d

@ IN SOA intra.com. admin.intra.com. (

0;

1H;

5M;

1W;

1D);

@ NS ns.intra.com.

ns A 192.168.31.17

harbor A 192.168.31.104

kibana A 192.168.31.212

rabbitmq A 192.168.31.211

web1 A 192.168.31.211

nacos-server A 192.168.31.211

zipkin-server A 192.168.31.211

Access test:

http://zipkin-server.intra.com/zipkin/
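Opening the UI only shows that Zipkin is up. To exercise the path down to Elasticsearch, you can post one span to Zipkin's v2 HTTP API (POST /api/v2/spans), then look for the service name under /api/v2/services or in the UI. The sketch below is minimal. The base URL assumes the zipkin-server route above forwards / to port 9411. The service and span names are arbitrary test values, and the helper names are hypothetical.

```python
import json
import secrets
import time
import urllib.request

# Assumption: the zipkin-server route from 7.3 forwards "/" to port 9411.
ZIPKIN_URL = "http://zipkin-server.intra.com"


def make_span(service: str, name: str, duration_us: int = 1000) -> dict:
    """One finished root span in Zipkin's v2 JSON format (times in microseconds)."""
    return {
        "traceId": secrets.token_hex(8),  # 16 lowercase hex characters
        "id": secrets.token_hex(8),
        "name": name,
        "kind": "SERVER",
        "timestamp": int(time.time() * 1_000_000),
        "duration": duration_us,
        "localEndpoint": {"serviceName": service},
    }


def post_spans(spans: list[dict], base: str = ZIPKIN_URL) -> int:
    """POST spans to /api/v2/spans and return the HTTP status (Zipkin replies 202)."""
    request = urllib.request.Request(
        f"{base}/api/v2/spans",
        data=json.dumps(spans).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=5) as resp:
        return resp.status
```

After post_spans([make_span("smoke-test", "ping")]), smoke-test should appear in the service list after a short delay if the Elasticsearch storage works.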

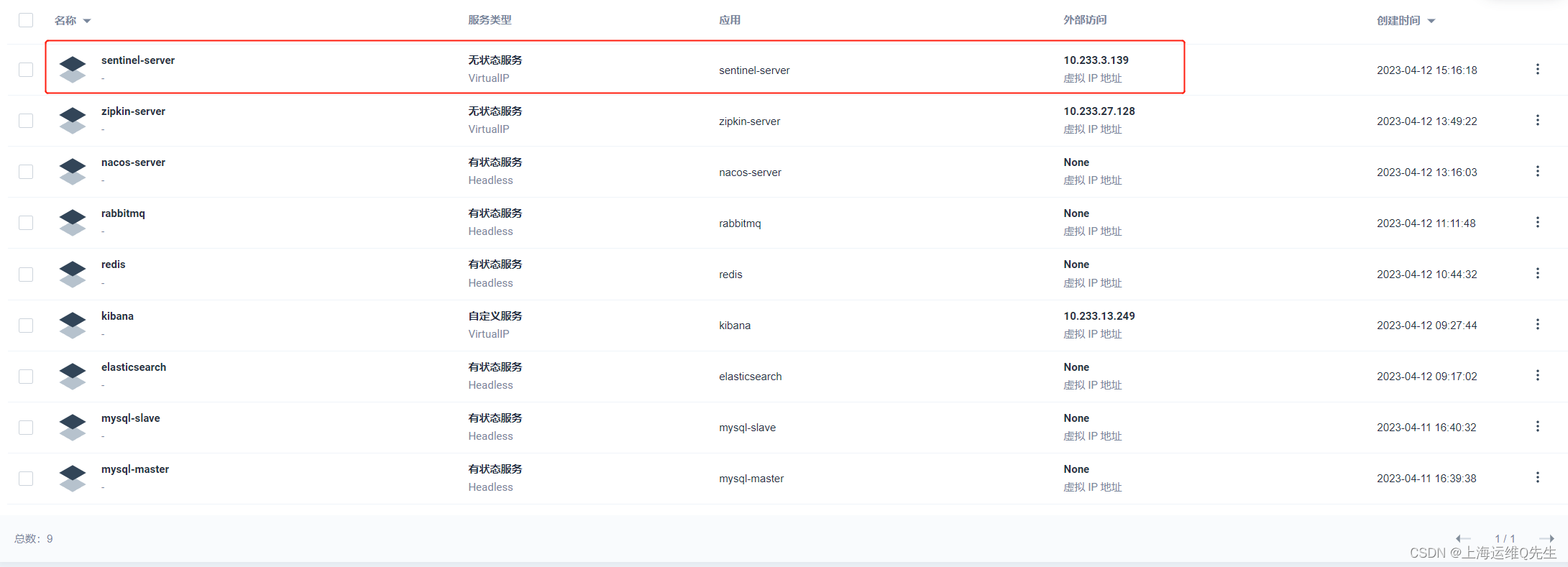

8. Sentinel

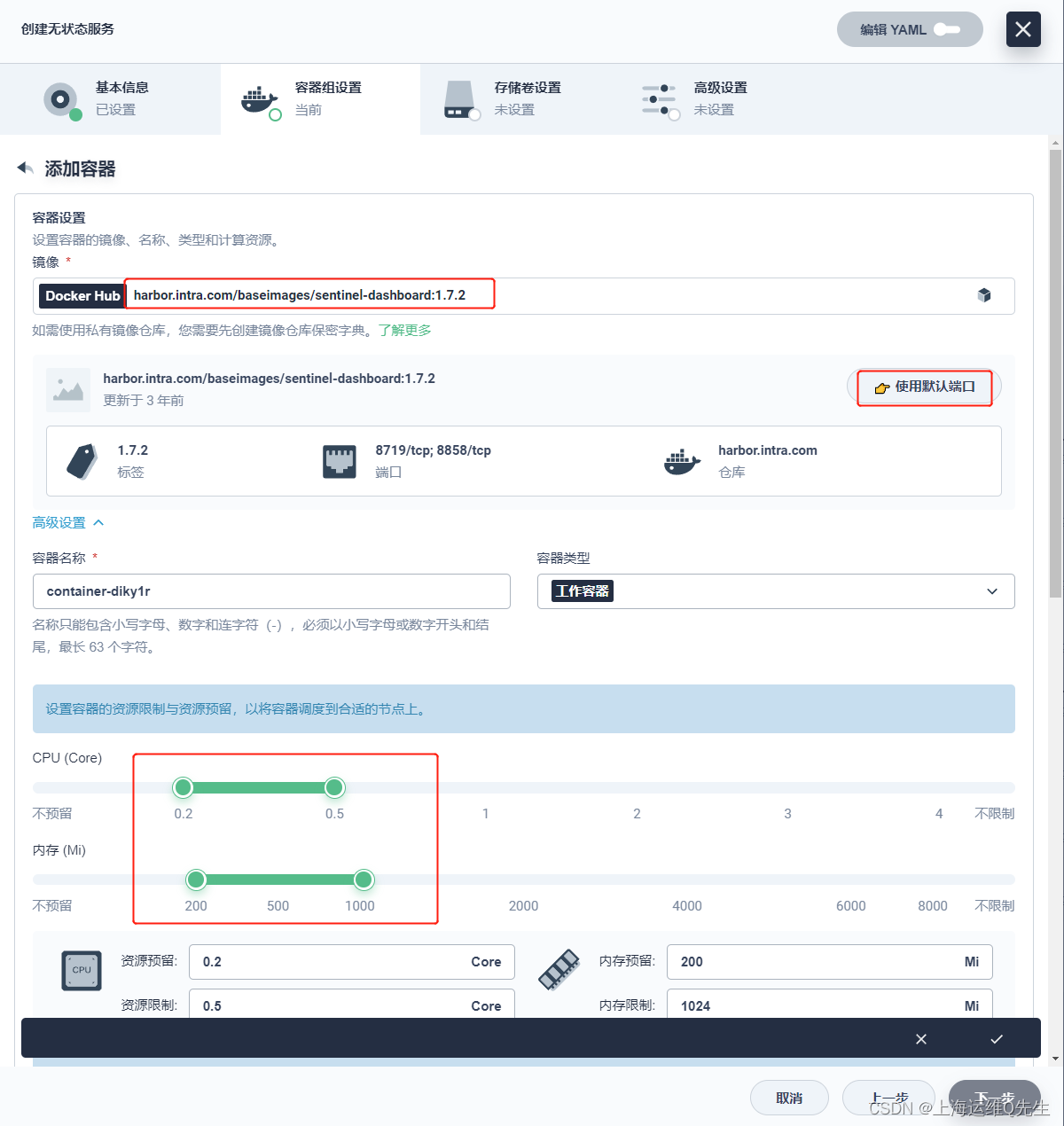

8.1 Deploy Sentinel

Create a [Stateless Service] named sentinel-server.

Use the image from Harbor:

harbor.intra.com/baseimages/sentinel-dashboard:1.7.2

[Next],[Next],[Create]

Resolve the name inside the cluster:

root@ks-master:~# dig -t a sentinel-server.sangomall.svc.cluster.local. @10.233.0.3

; <<>> DiG 9.16.1-Ubuntu <<>> -t a sentinel-server.sangomall.svc.cluster.local. @10.233.0.3

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 23170

;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

; COOKIE: c93cc5fa76af7e0f (echoed)

;; QUESTION SECTION:

;sentinel-server.sangomall.svc.cluster.local. IN A

;; ANSWER SECTION:

sentinel-server.sangomall.svc.cluster.local. 30 IN A 10.233.3.139

;; Query time: 4 msec

;; SERVER: 10.233.0.3#53(10.233.0.3)

;; WHEN: Wed Apr 12 15:17:52 CST 2023

;; MSG SIZE rcvd: 143

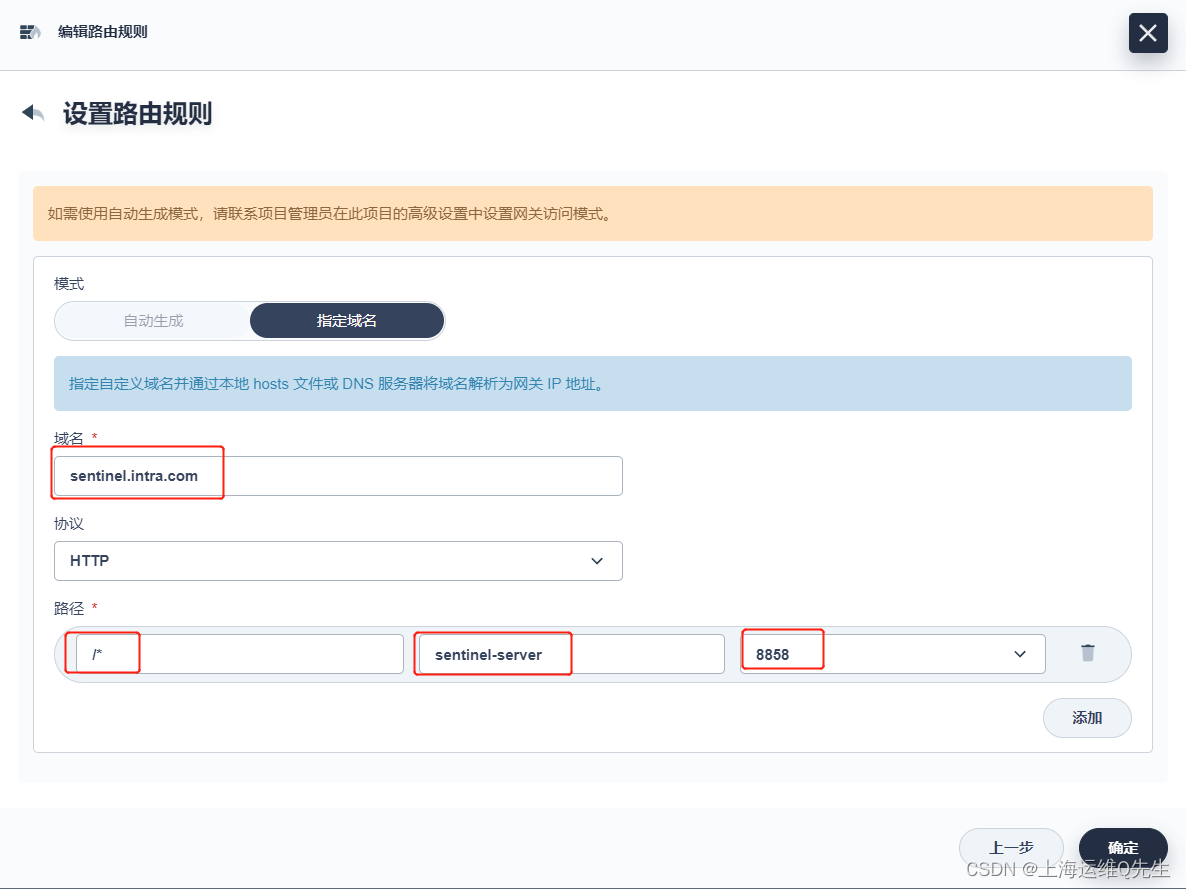

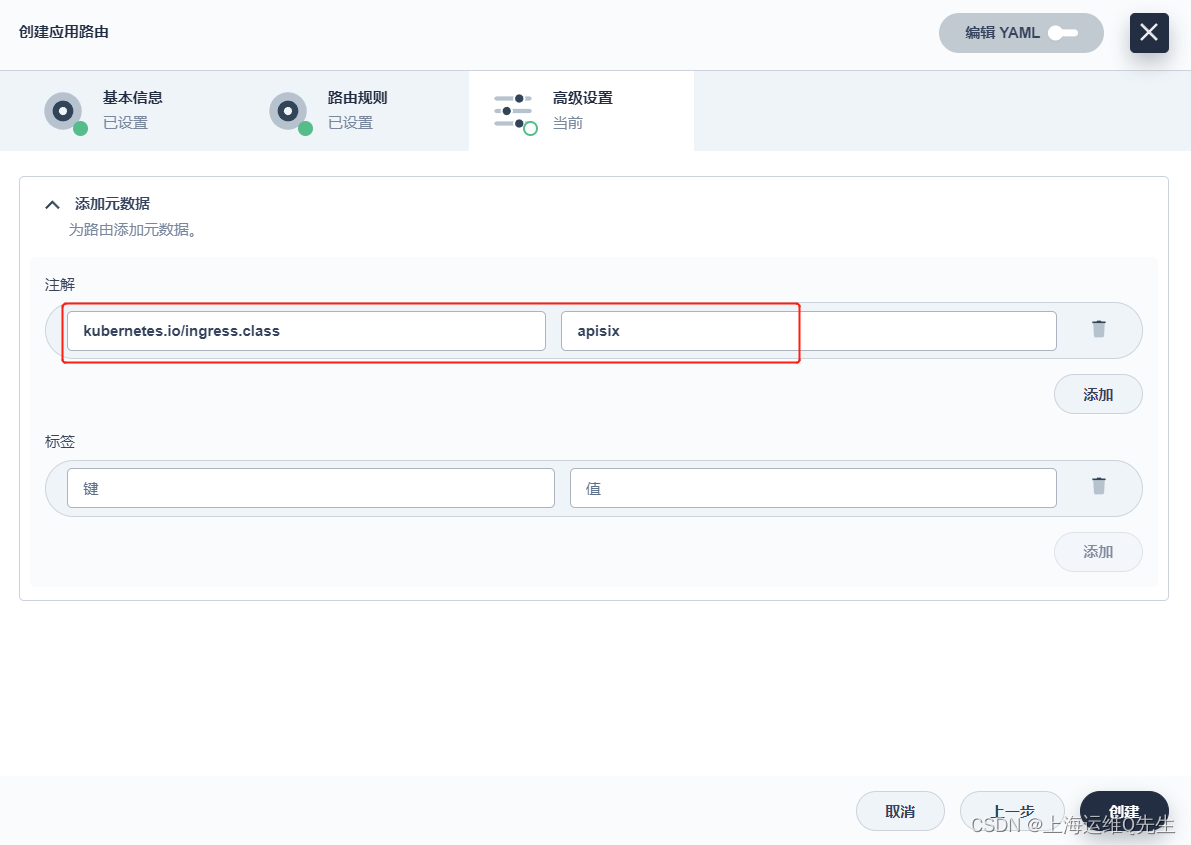

8.2 Route

Create a route named sentinel-route with host sentinel.intra.com, forwarding to service port 8858.

[Add Metadata]:

kubernetes.io/ingress.class: apisix

Configure DNS:

[root@centos7-1 ~]# cat /var/named/intra.zone

$TTL 1d

@ IN SOA intra.com. admin.intra.com. (

0;

1H;

5M;

1W;

1D);

@ NS ns.intra.com.

ns A 192.168.31.17

harbor A 192.168.31.104

kibana A 192.168.31.212

rabbitmq A 192.168.31.211

web1 A 192.168.31.211

nacos-server A 192.168.31.211

zipkin-server A 192.168.31.211

sentinel A 192.168.31.211

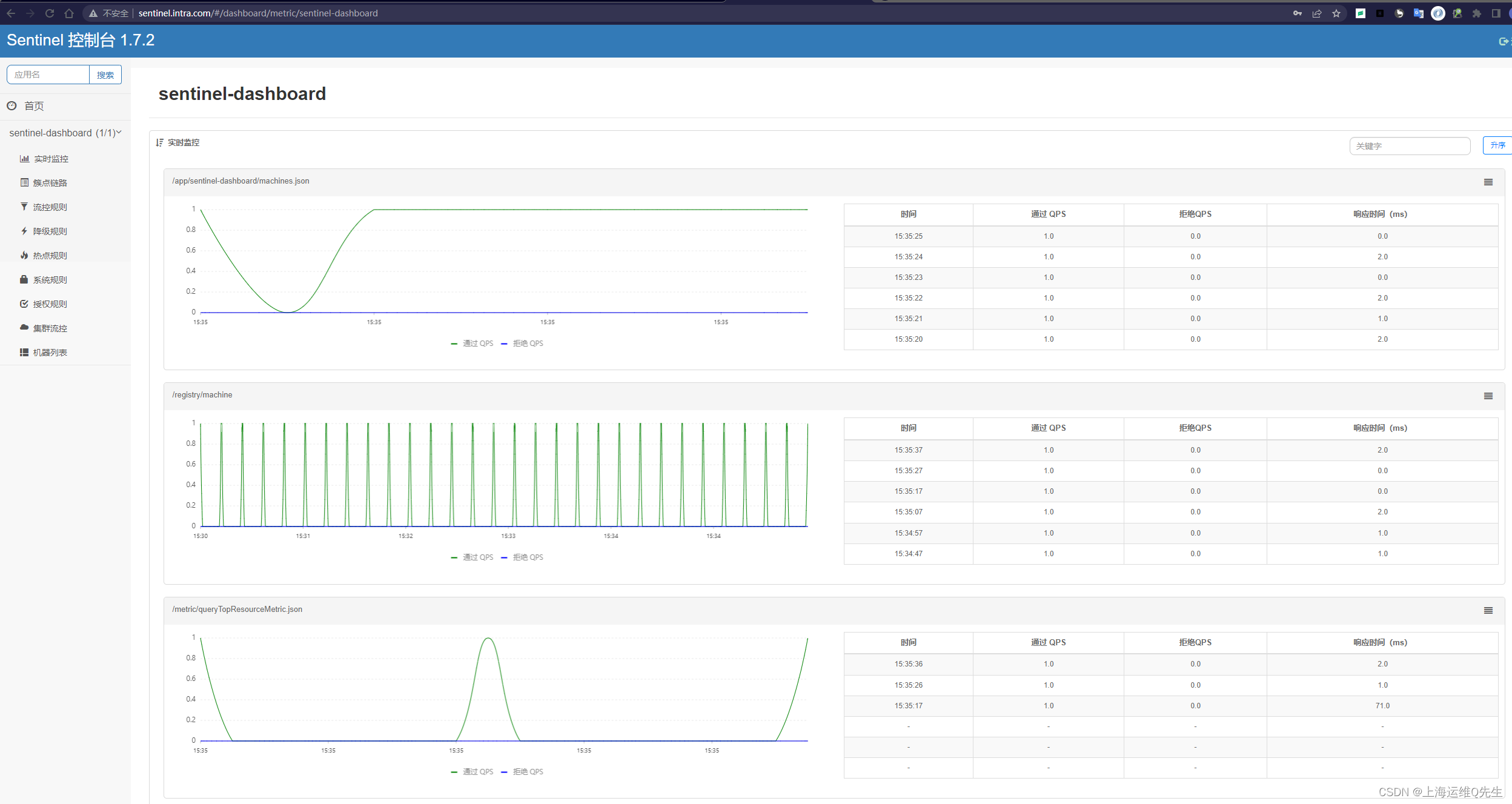

Access URL:

http://sentinel.intra.com/

# Username/password

sentinel/sentinel

9. Skywalking

9.1 Service Dependencies

SkyWalking depends on Elasticsearch, so first test that elasticsearch resolves correctly:

root@ks-master:~# dig -t a elasticsearch.sangomall.svc.cluster.local. @10.233.0.3

; <<>> DiG 9.16.1-Ubuntu <<>> -t a elasticsearch.sangomall.svc.cluster.local. @10.233.0.3

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 56731

;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

; COOKIE: bb18e9ef60ef3f2d (echoed)

;; QUESTION SECTION:

;elasticsearch.sangomall.svc.cluster.local. IN A

;; ANSWER SECTION:

elasticsearch.sangomall.svc.cluster.local. 30 IN A 10.233.83.48

;; Query time: 0 msec

;; SERVER: 10.233.0.3#53(10.233.0.3)

;; WHEN: Wed Apr 12 15:40:14 CST 2023

;; MSG SIZE rcvd: 139

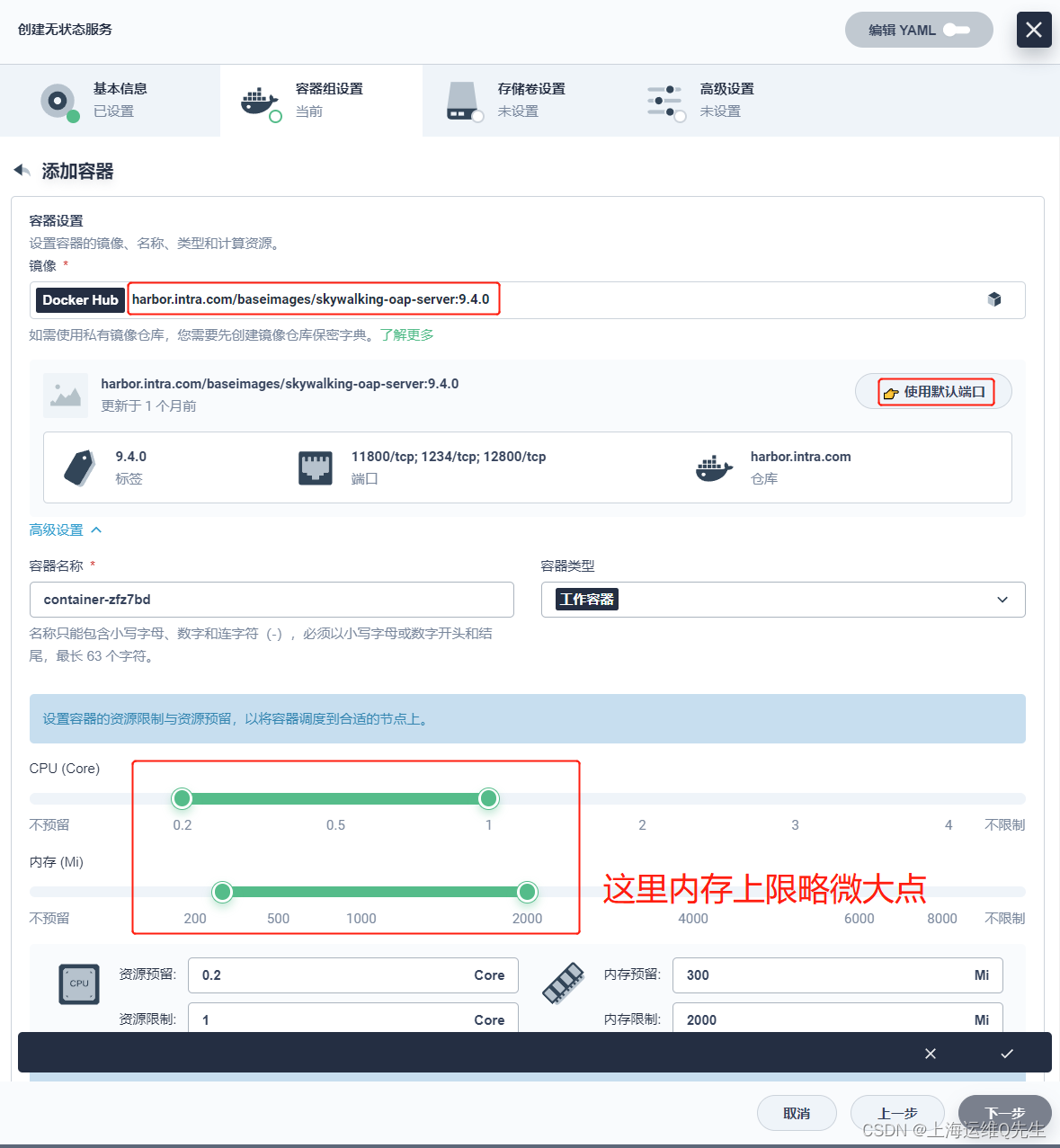

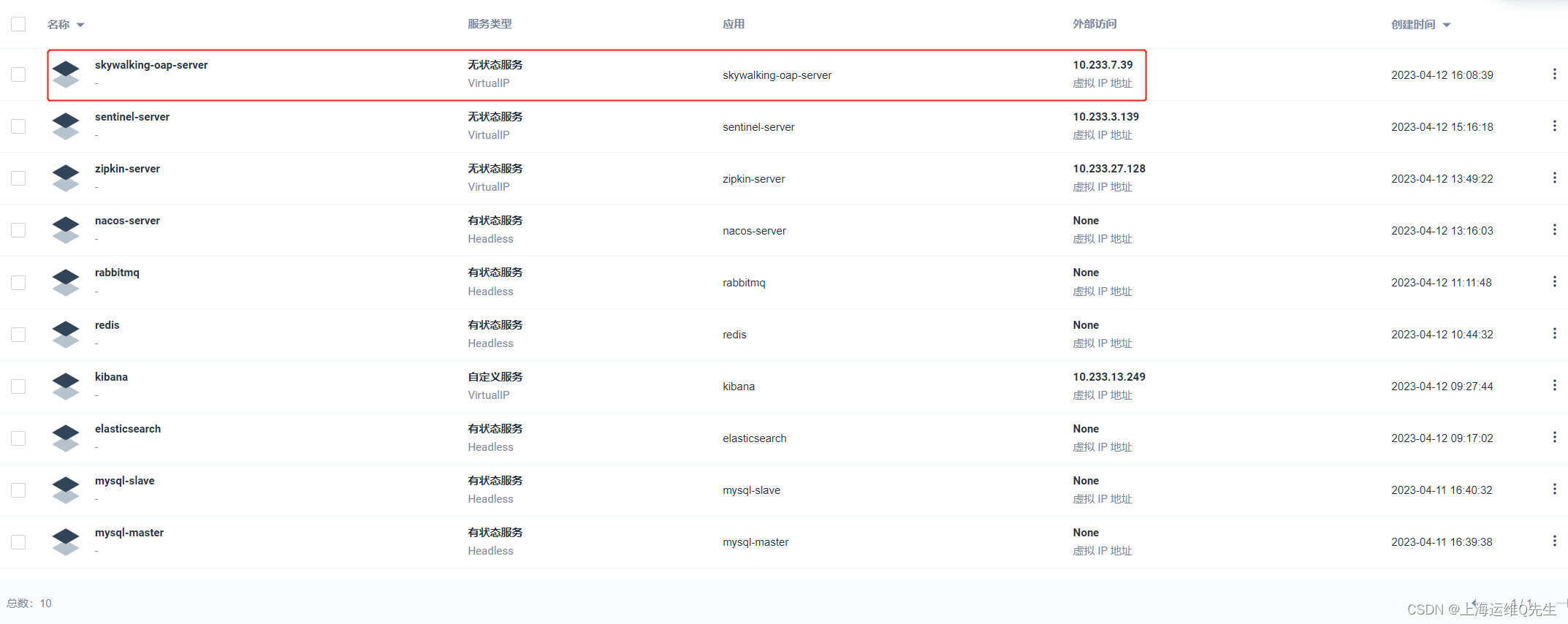

9.2 Deploy the SkyWalking OAP Server

Create a [Stateless Service] named skywalking-oap-server.

Image:

harbor.intra.com/baseimages/skywalking-oap-server:9.4.0

Environment variables:

SW_STORAGE elasticsearch

SW_STORAGE_ES_CLUSTER_NODES elasticsearch.sangomall.svc.cluster.local.:9200

[Next],[Next],[Create]

Verify that the service resolves:

root@ks-master:~# dig -t a skywalking-oap-server.sangomall.svc.cluster.local. @10.233.0.3

; <<>> DiG 9.16.1-Ubuntu <<>> -t a skywalking-oap-server.sangomall.svc.cluster.local. @10.233.0.3

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 39288

;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

; COOKIE: 9ef1e9fe750766cd (echoed)

;; QUESTION SECTION:

;skywalking-oap-server.sangomall.svc.cluster.local. IN A

;; ANSWER SECTION:

skywalking-oap-server.sangomall.svc.cluster.local. 30 IN A 10.233.7.39

;; Query time: 0 msec

;; SERVER: 10.233.0.3#53(10.233.0.3)

;; WHEN: Wed Apr 12 16:10:12 CST 2023

;; MSG SIZE rcvd: 155

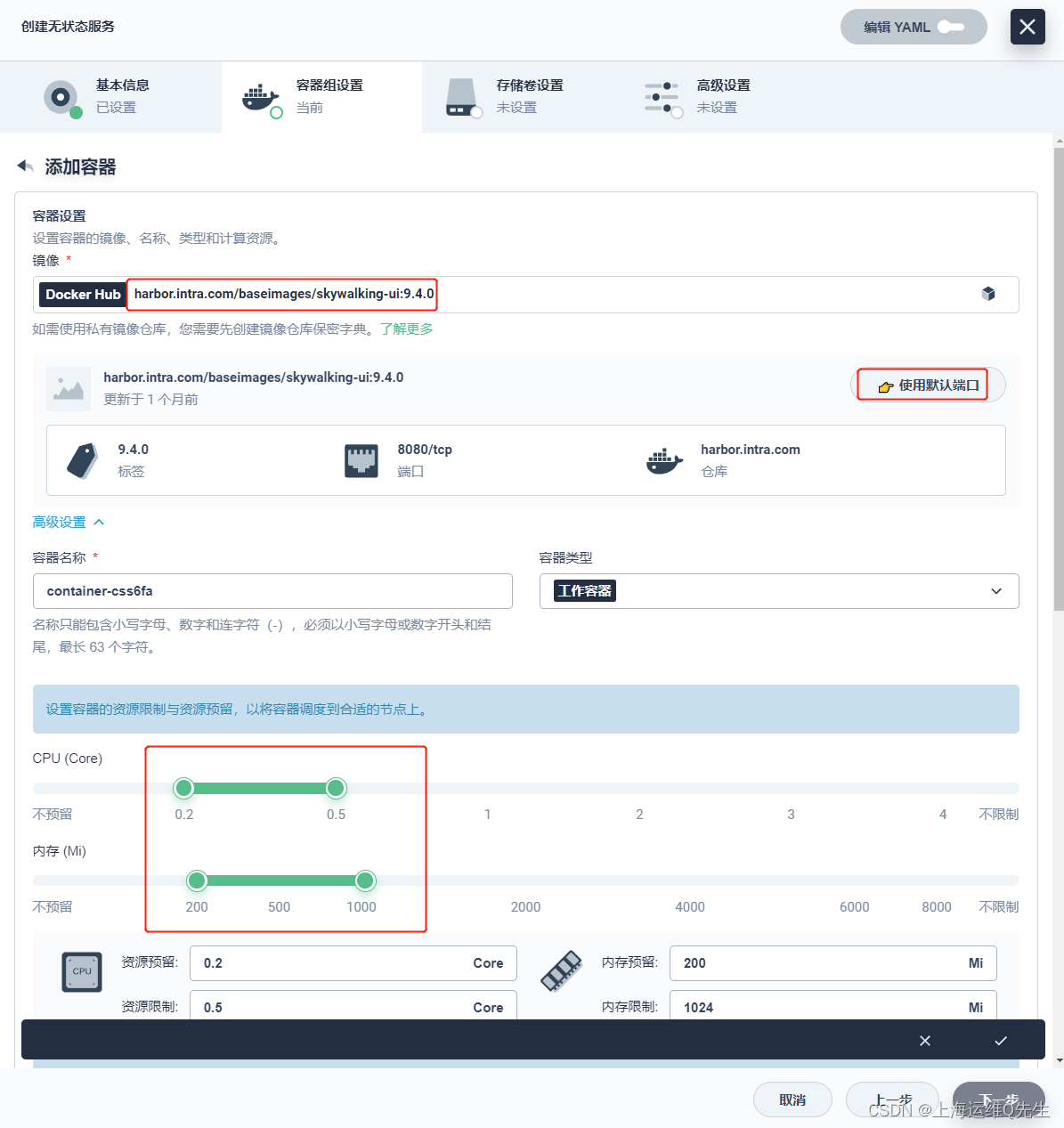

9.3 Deploy the SkyWalking UI

Create a [Stateless Service] named skywalking-ui.

Image:

harbor.intra.com/baseimages/skywalking-ui:9.4.0

[Environment Variables]

SW_OAP_ADDRESS http://skywalking-oap-server.sangomall.svc.cluster.local.:12800

[Next],[Next],[Create]

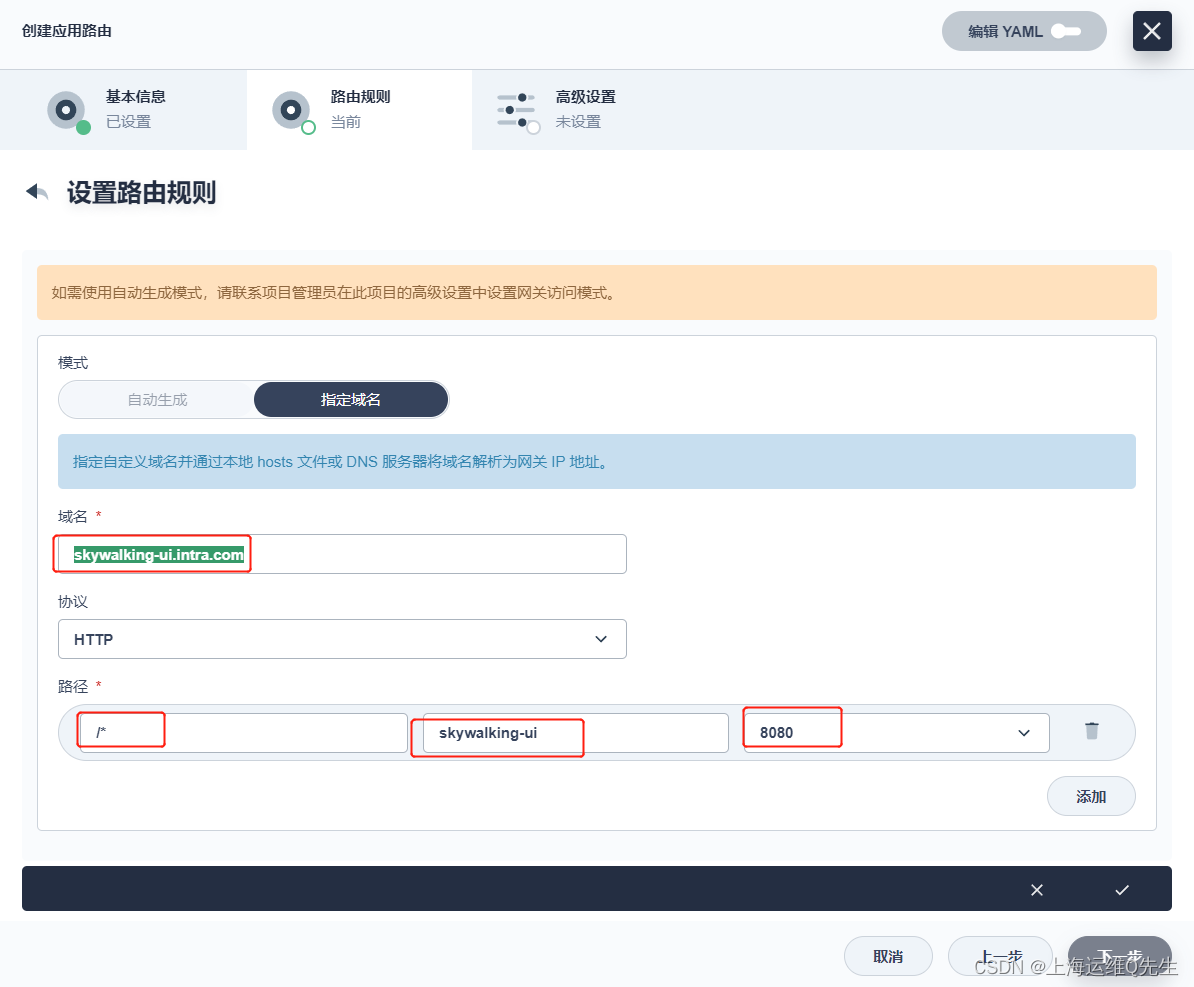

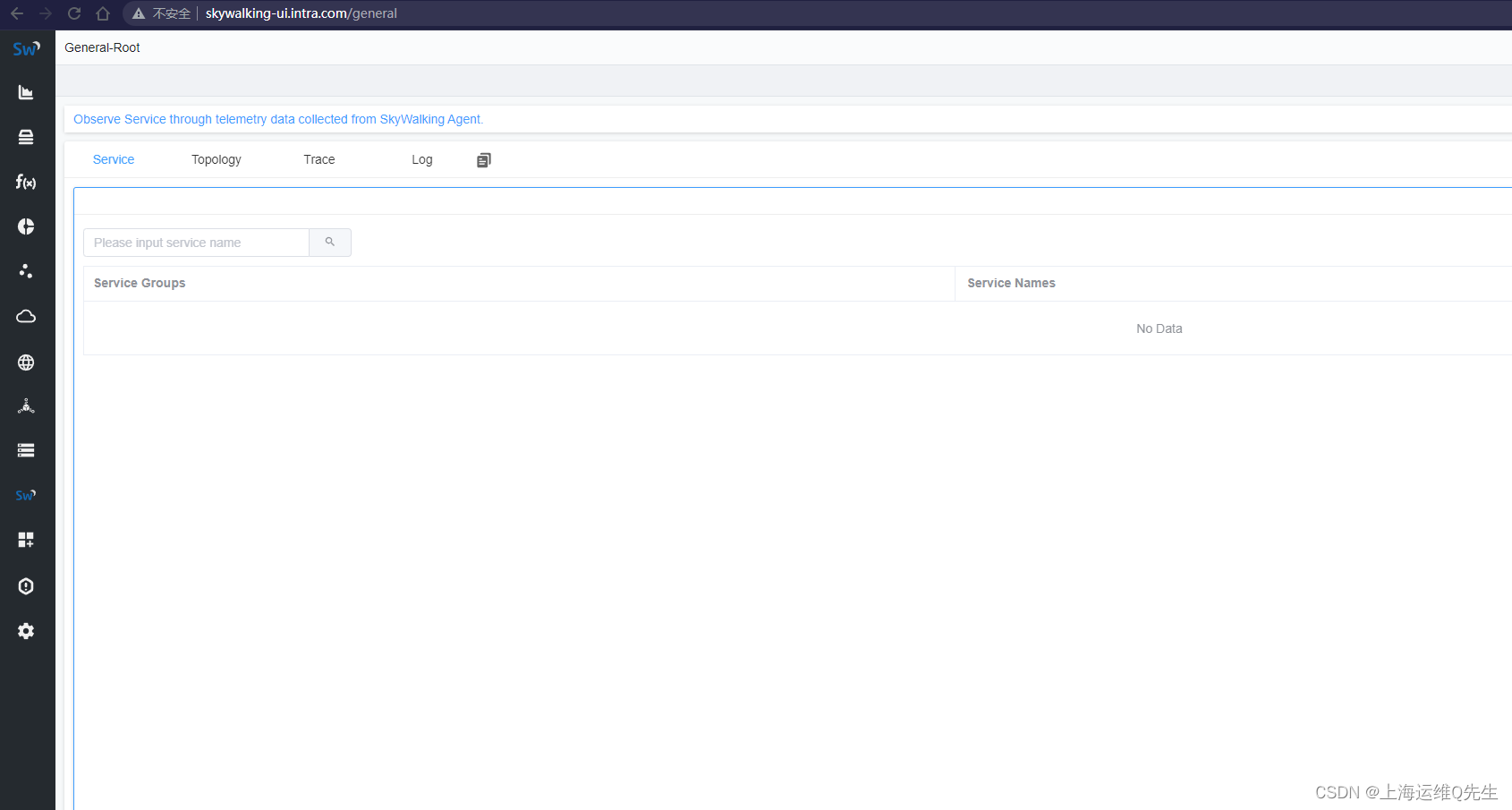

9.4 Access the SkyWalking UI

Create a [Route] named skywalking-ui with host skywalking-ui.intra.com, forwarding to service port 8080.

[Add Metadata]:

kubernetes.io/ingress.class: apisix

Create the DNS record:

[root@centos7-1 ~]# vi /var/named/intra.zone

[root@centos7-1 ~]# systemctl restart named

[root@centos7-1 ~]# cat /var/named/intra.zone

$TTL 1d

@ IN SOA intra.com. admin.intra.com. (

0;

1H;

5M;

1W;

1D);

@ NS ns.intra.com.

ns A 192.168.31.17

harbor A 192.168.31.104

kibana A 192.168.31.212

rabbitmq A 192.168.31.211

web1 A 192.168.31.211

nacos-server A 192.168.31.211

zipkin-server A 192.168.31.211

sentinel A 192.168.31.211

skywalking-ui A 192.168.31.211

Open in a browser:

http://skywalking-ui.intra.com/general

10. RocketMQ

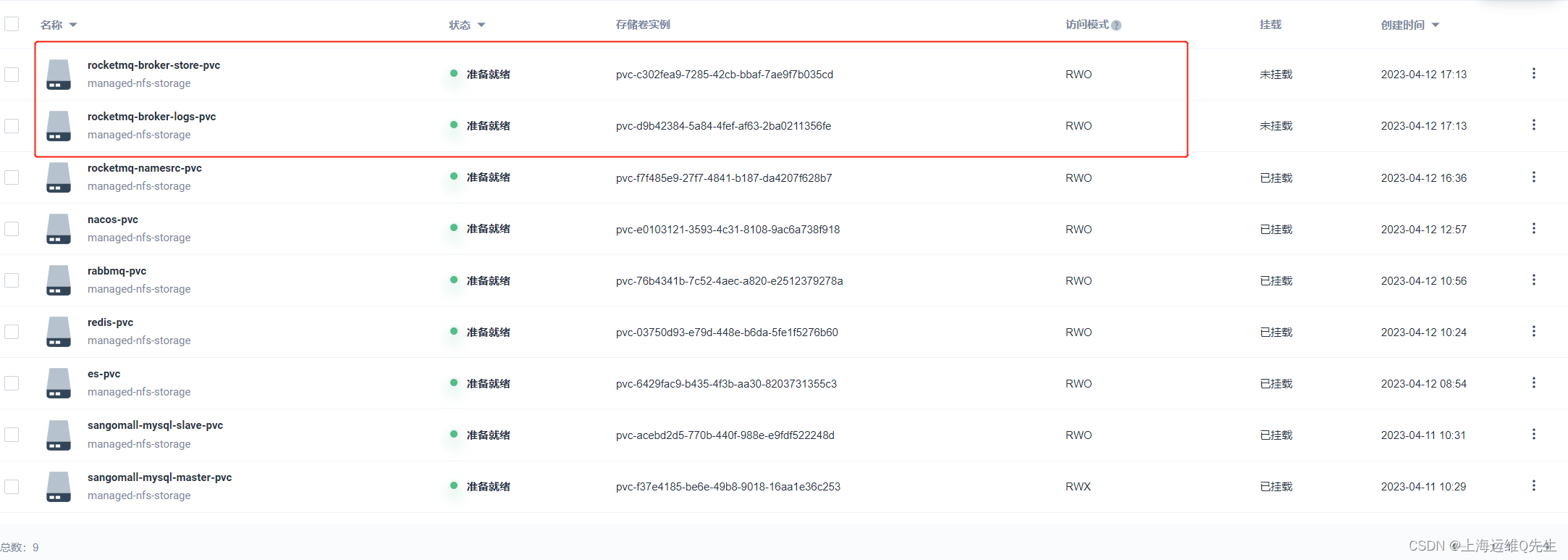

10.1 Create the RocketMQ PVCs

10.1.1 RocketMQ namesrv PVC

rocketmq-namesrv-pvc

Select the NFS StorageClass (managed-nfs-storage).

10.1.2 RocketMQ broker PVC

Create two PVCs:

rocketmq-broker-logs-pvc

rocketmq-broker-store-pvc

10.2 Deploy RocketMQ

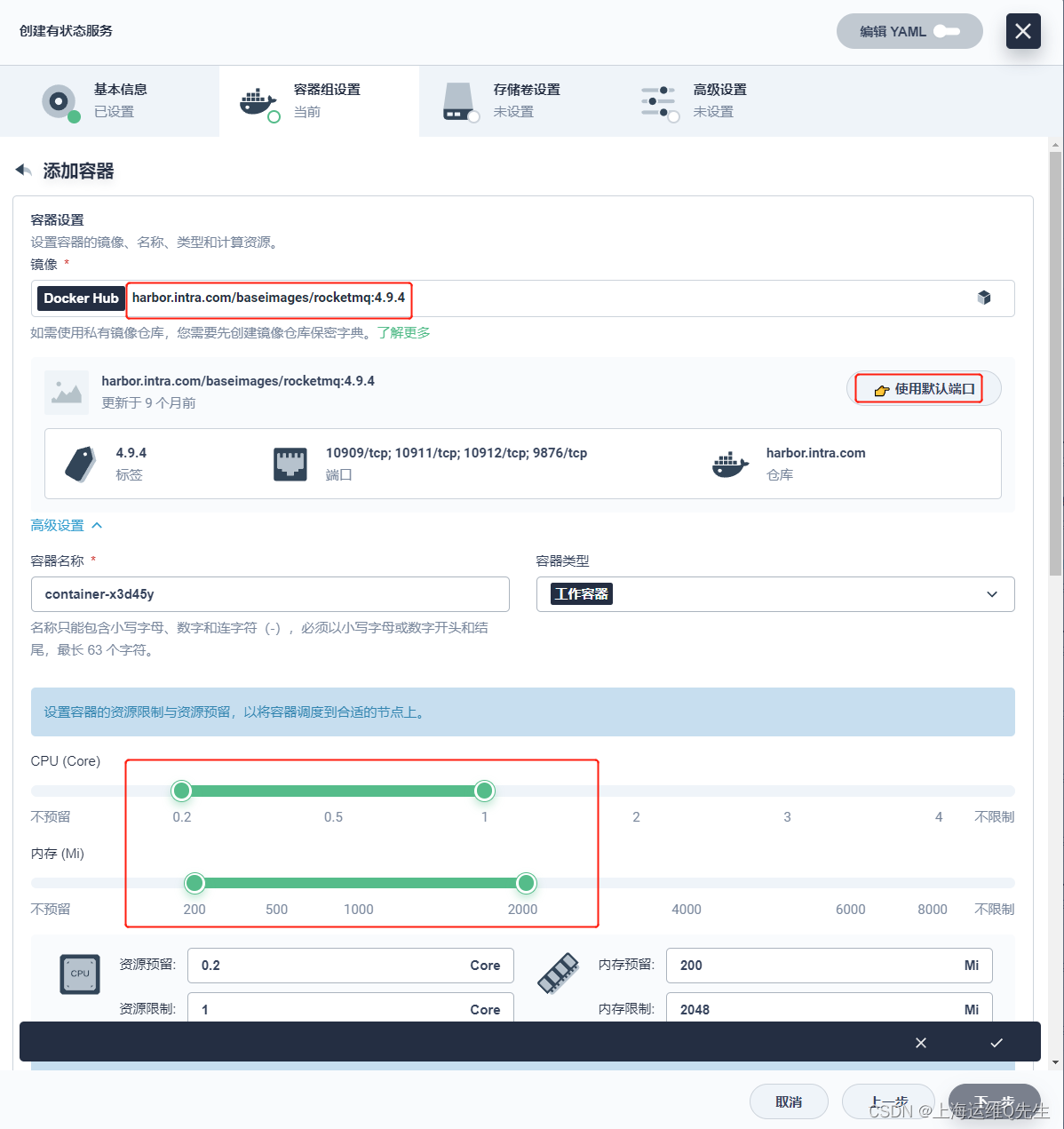

10.2.1 Deploy the RocketMQ NameServer

Create a [Stateful Service] named rocketmq-namesrv.

Image:

harbor.intra.com/baseimages/rocketmq:4.9.4

Start command:

/bin/bash

Arguments:

mqnamesrv

Environment variables:

JAVA_OPT_EXT: -Xms512M -Xmx512M -Xmn128m

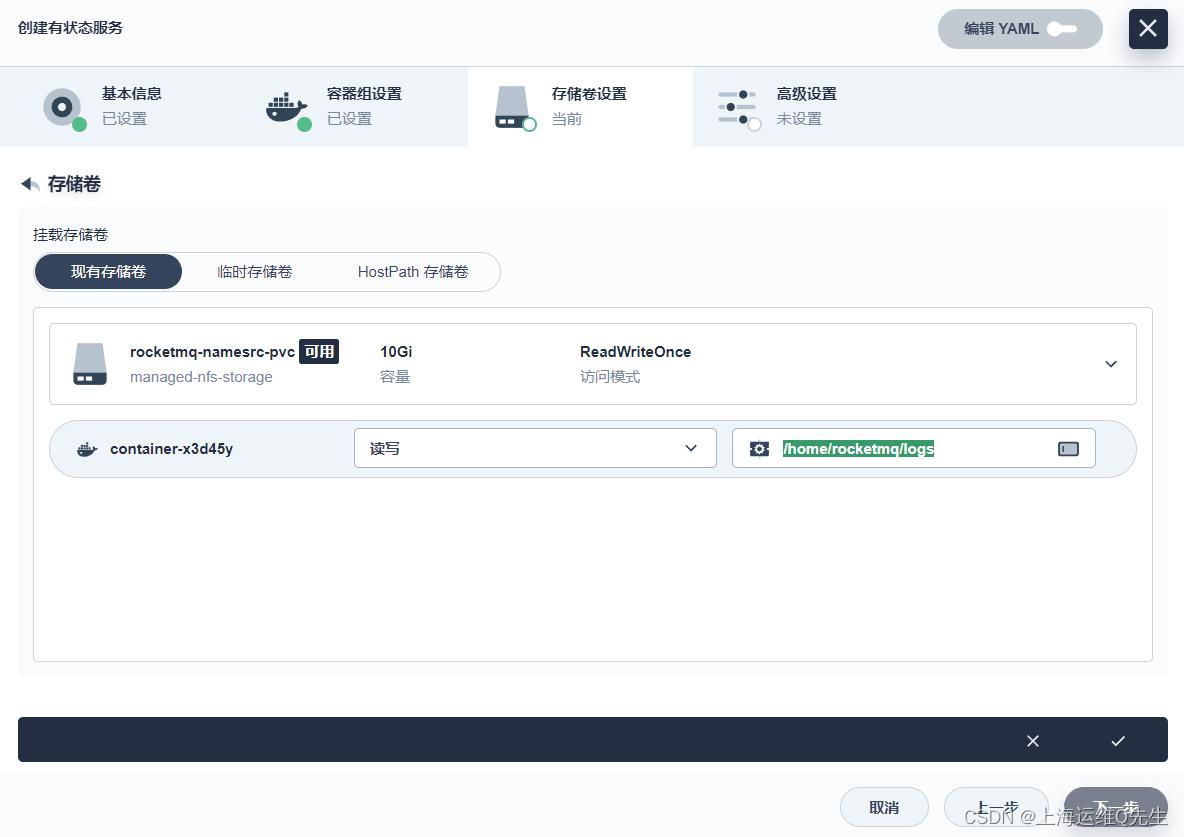

Mount the namesrv PVC at:

/home/rocketmq/logs

[Next],[Next],[Create]

Try resolving the name:

root@ks-master:~# dig -t a rocketmq-namesrv.sangomall.svc.cluster.local. @10.233.0.3

; <<>> DiG 9.16.1-Ubuntu <<>> -t a rocketmq-namesrv.sangomall.svc.cluster.local. @10.233.0.3

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 40683

;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

; COOKIE: 3baa8b38fbdb4ad7 (echoed)

;; QUESTION SECTION:

;rocketmq-namesrv.sangomall.svc.cluster.local. IN A

;; ANSWER SECTION:

rocketmq-namesrv.sangomall.svc.cluster.local. 30 IN A 10.233.83.55

;; Query time: 3 msec

;; SERVER: 10.233.0.3#53(10.233.0.3)

;; WHEN: Wed Apr 12 16:50:13 CST 2023

;; MSG SIZE rcvd: 145

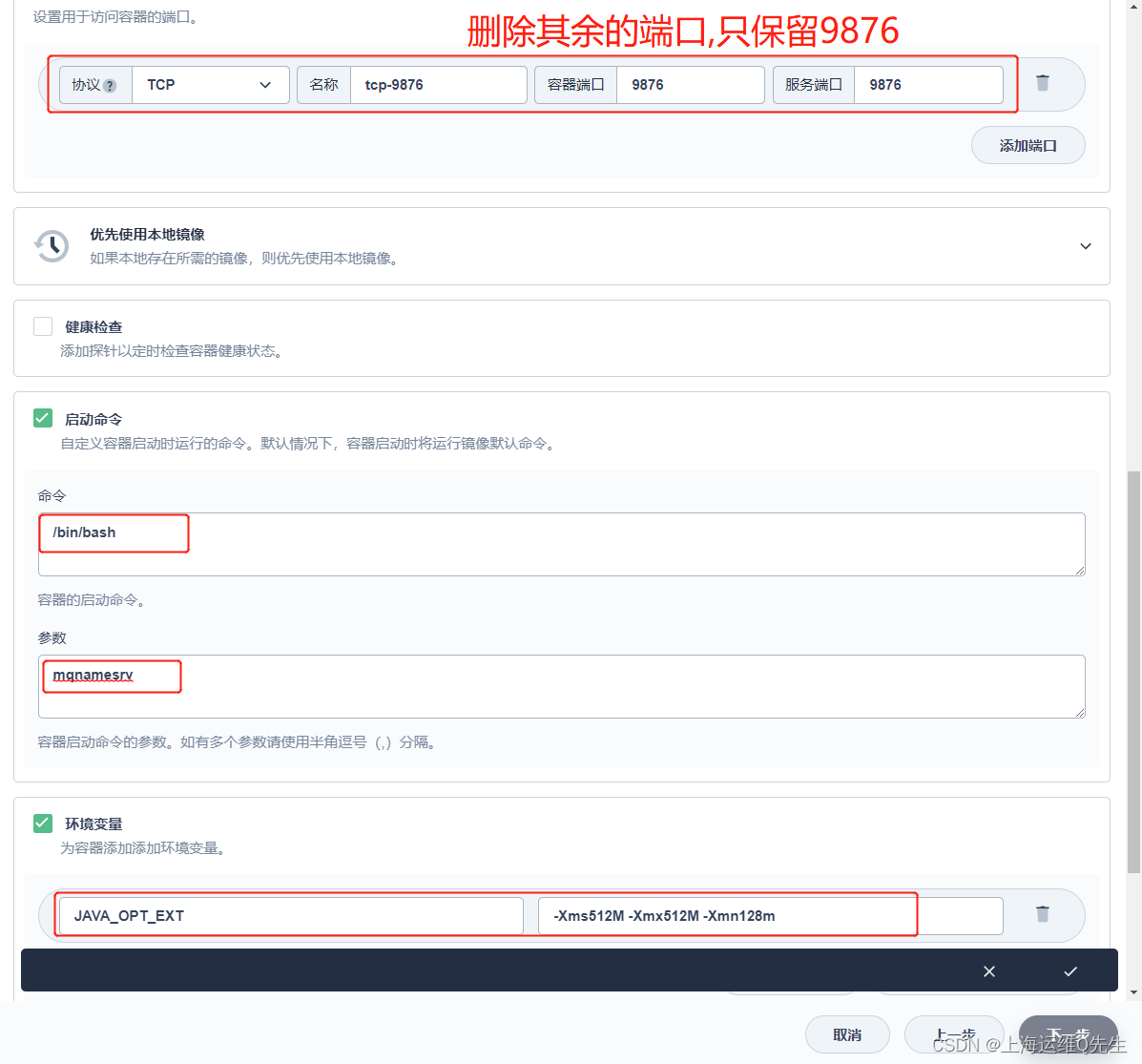

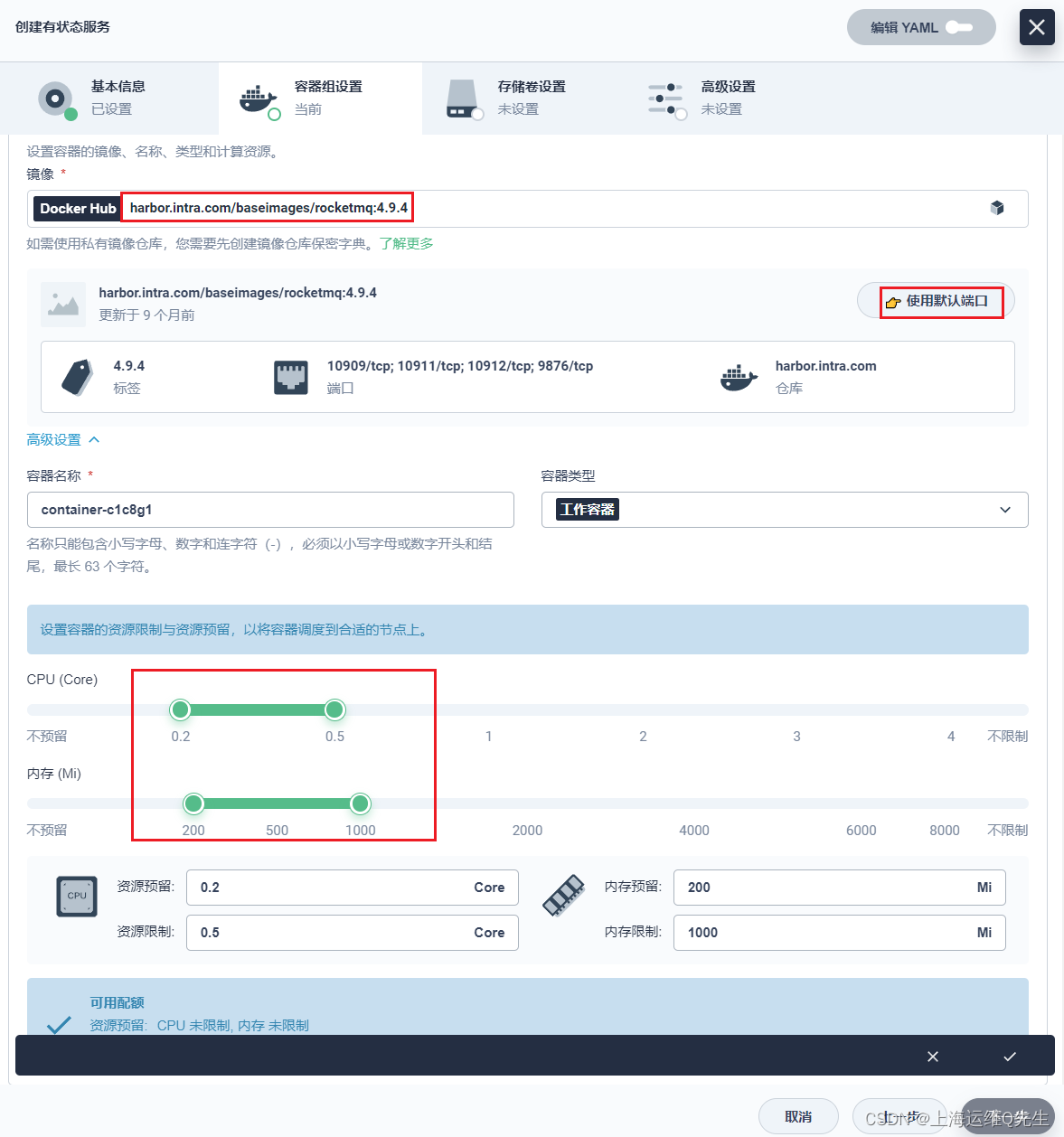

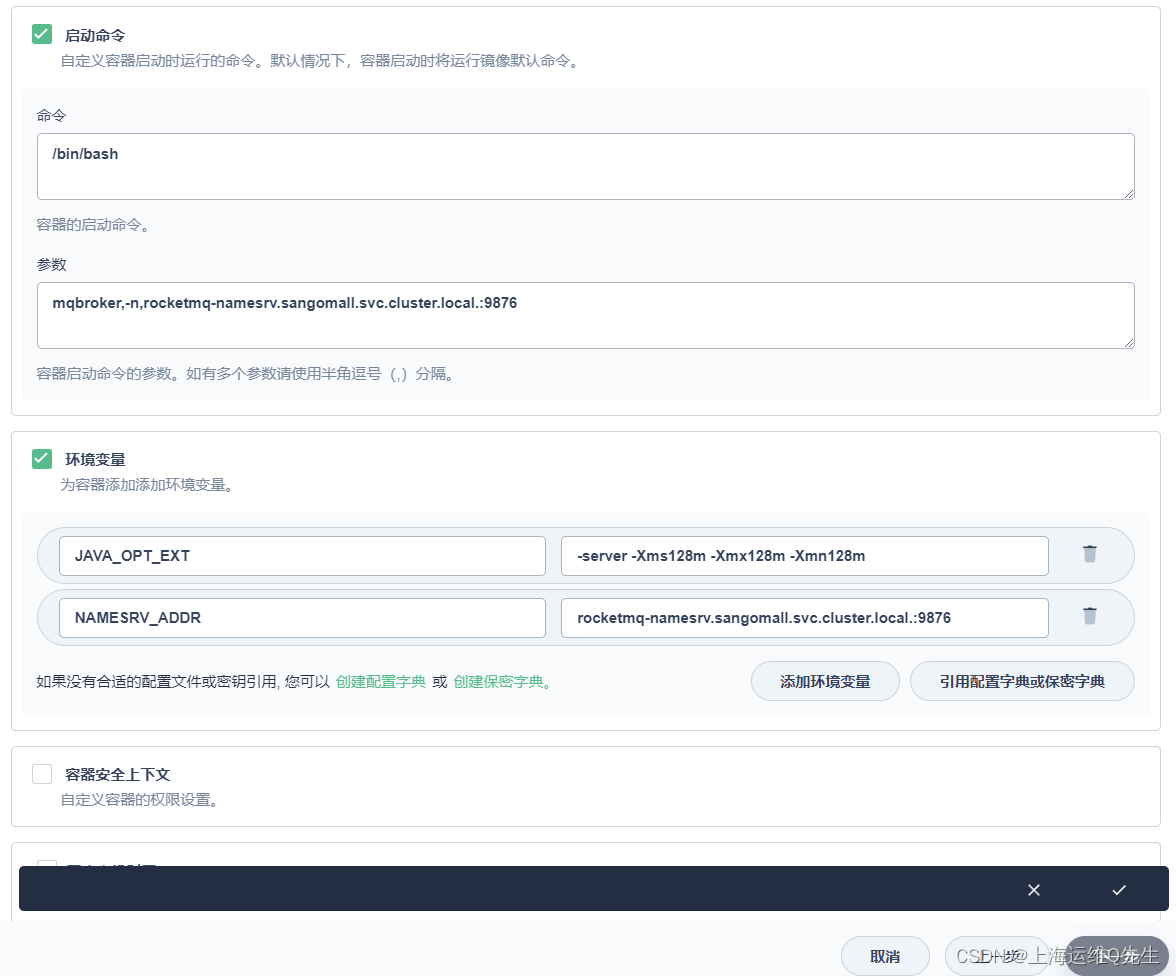

10.2.2 Deploy the RocketMQ Broker

Create a [Stateful Service] named rocketmq-broker-1.

Image:

harbor.intra.com/baseimages/rocketmq:4.9.4

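Remove port 9876. It is the NameServer's port, and the broker does not need it.

Configure the start command and environment variables:

Start command: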

/bin/bash

Arguments:

mqbroker,-n,rocketmq-namesrv.sangomall.svc.cluster.local.:9876

Environment variables:

JAVA_OPT_EXT: -server -Xms128m -Xmx128m -Xmn128m

NAMESRV_ADDR: rocketmq-namesrv.sangomall.svc.cluster.local.:9876
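The broker's JAVA_OPT_EXT sets -Xmn (young generation) equal to -Xmx (both 128m), which leaves no room for the old generation. HotSpot usually shrinks the young generation and prints a warning at startup instead of failing. The small sketch below parses the heap flags and flags such combinations before they reach a Pod. The helper names are hypothetical, and only -Xms, -Xmx and -Xmn are checked.

```python
import re

_UNIT = {"": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def heap_flags(opts: str) -> dict[str, int]:
    """Return -Xms/-Xmx/-Xmn sizes in bytes found in a JVM option string."""
    sizes = {}
    for flag, number, unit in re.findall(r"-(Xms|Xmx|Xmn)(\d+)([kKmMgG]?)", opts):
        sizes[flag] = int(number) * _UNIT[unit.lower()]
    return sizes


def heap_warnings(opts: str) -> list[str]:
    """Flag heap settings that the JVM rejects or silently adjusts."""
    sizes = heap_flags(opts)
    xmx = sizes.get("Xmx")
    warnings = []
    if xmx is None:
        return warnings
    if sizes.get("Xms", 0) > xmx:
        warnings.append("-Xms exceeds -Xmx: the JVM will refuse to start")
    if sizes.get("Xmn", 0) >= xmx:
        warnings.append("-Xmn >= -Xmx: no room left for the old generation")
    return warnings


if __name__ == "__main__":
    for opts in ("-Xms512M -Xmx512M -Xmn128m", "-server -Xms128m -Xmx128m -Xmn128m"):
        print(opts, "->", heap_warnings(opts) or "ok")
```

The namesrv settings (-Xms512M -Xmx512M -Xmn128m) pass. The broker settings produce one warning, so a smaller -Xmn (or a larger -Xmx) is worth considering.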

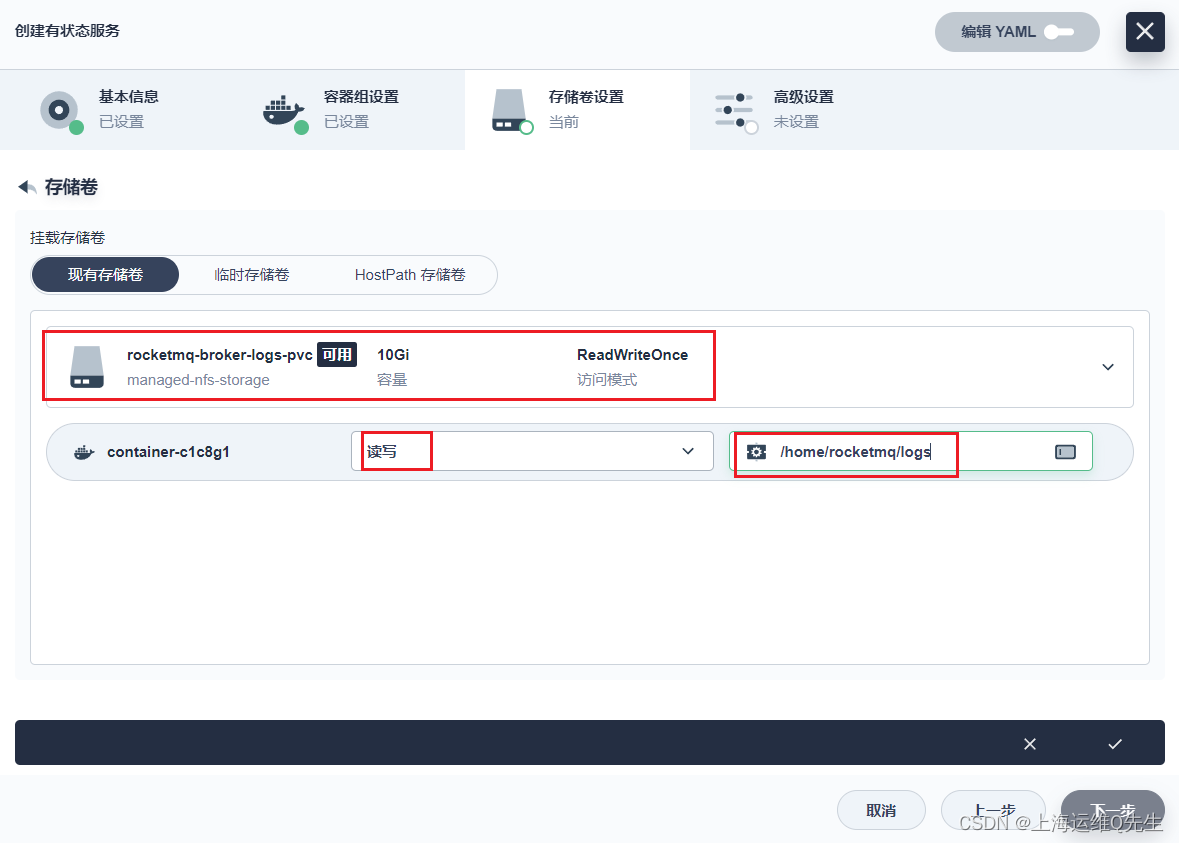

[Mount Volume]: mount rocketmq-broker-logs-pvc at

/home/rocketmq/logs

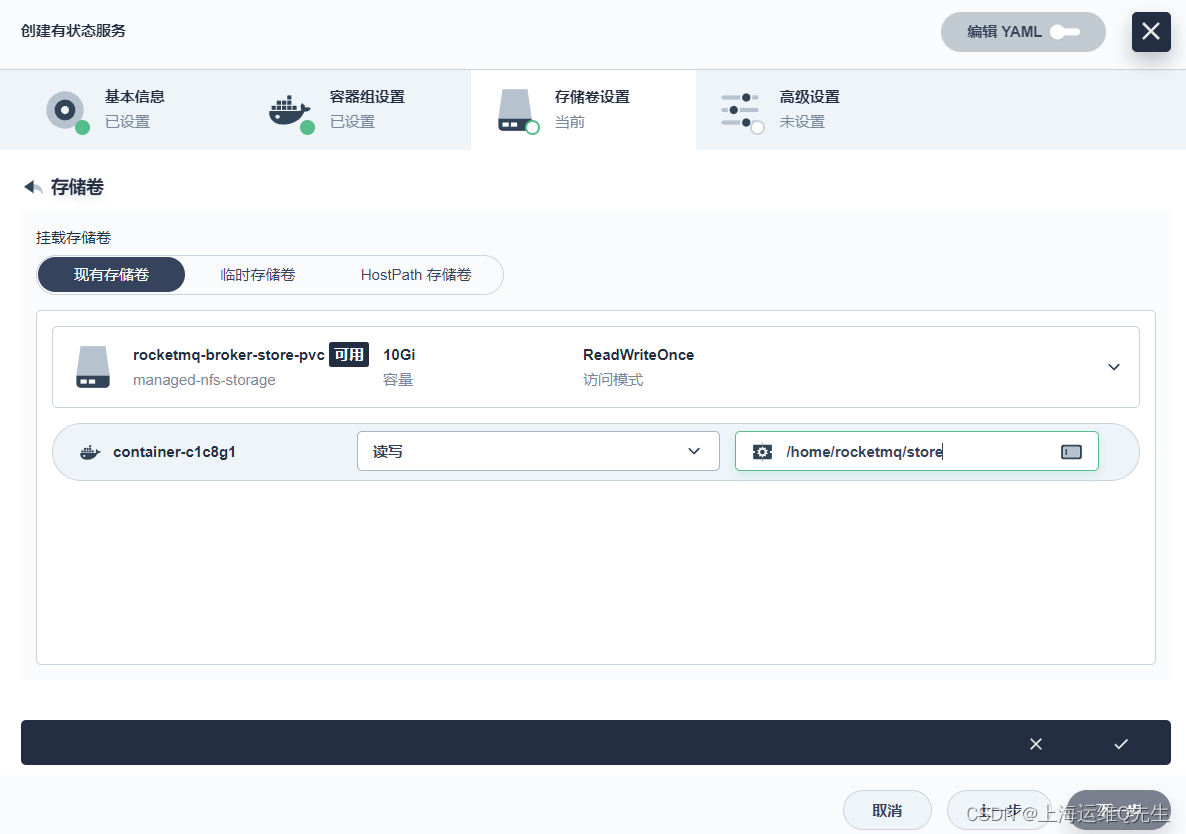

[Mount Volume]: mount rocketmq-broker-store-pvc at

/home/rocketmq/store

[Next],[Next],[Create]

Test DNS resolution:

root@ks-master:~# dig -t a rocketmq-broker-1.sangomall.svc.cluster.local @10.233.0.3

; <<>> DiG 9.16.1-Ubuntu <<>> -t a rocketmq-broker-1.sangomall.svc.cluster.local @10.233.0.3

;; global options: +cmd

;; Got answer:

;; WARNING: .local is reserved for Multicast DNS

;; You are currently testing what happens when an mDNS query is leaked to DNS

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 46511

;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

; COOKIE: aebce3b8398ed98f (echoed)

;; QUESTION SECTION:

;rocketmq-broker-1.sangomall.svc.cluster.local. IN A

;; ANSWER SECTION:

rocketmq-broker-1.sangomall.svc.cluster.local. 30 IN A 10.233.83.65

;; Query time: 4 msec

;; SERVER: 10.233.0.3#53(10.233.0.3)

;; WHEN: Thu Apr 13 09:02:50 CST 2023

;; MSG SIZE rcvd: 147

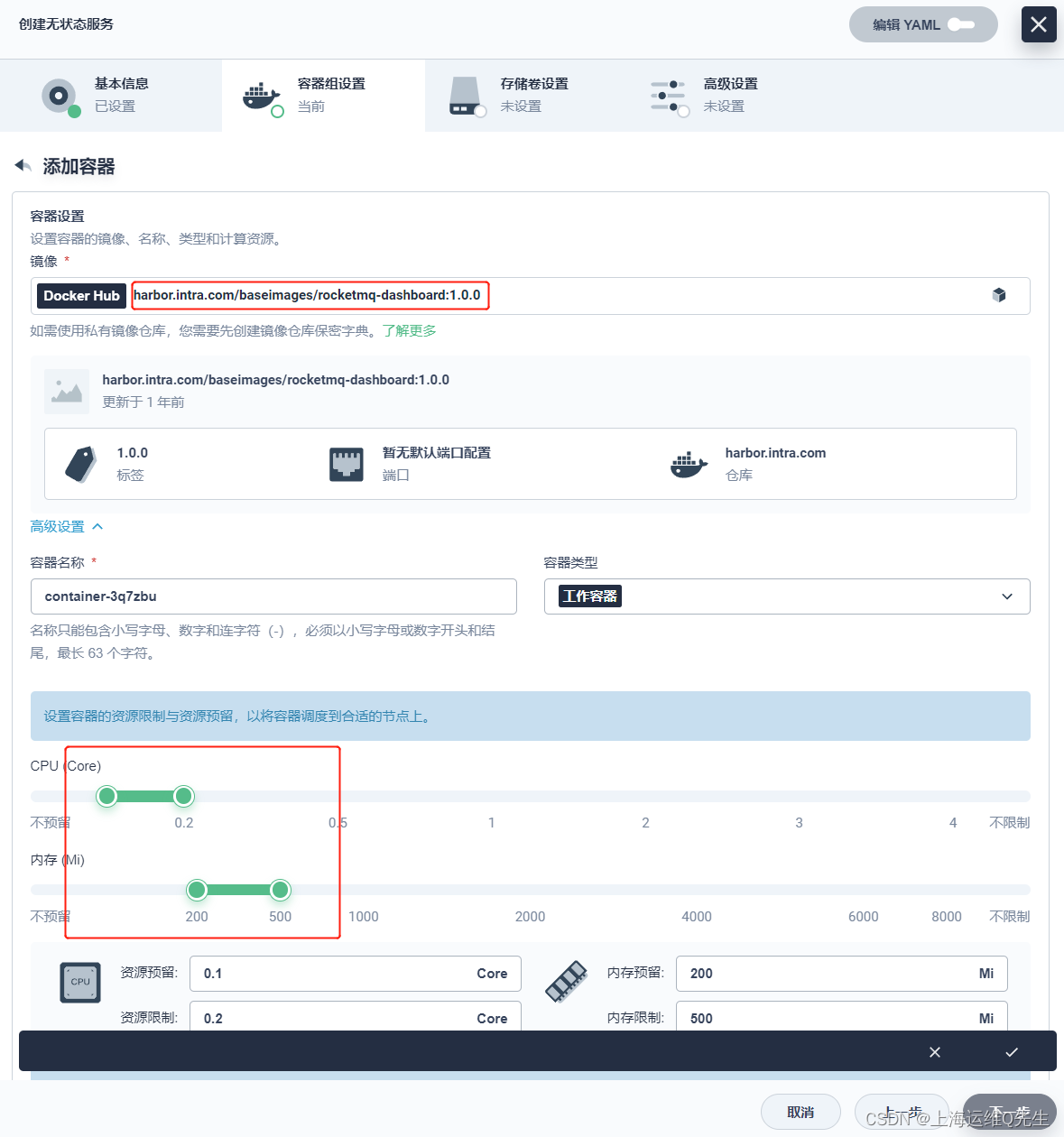

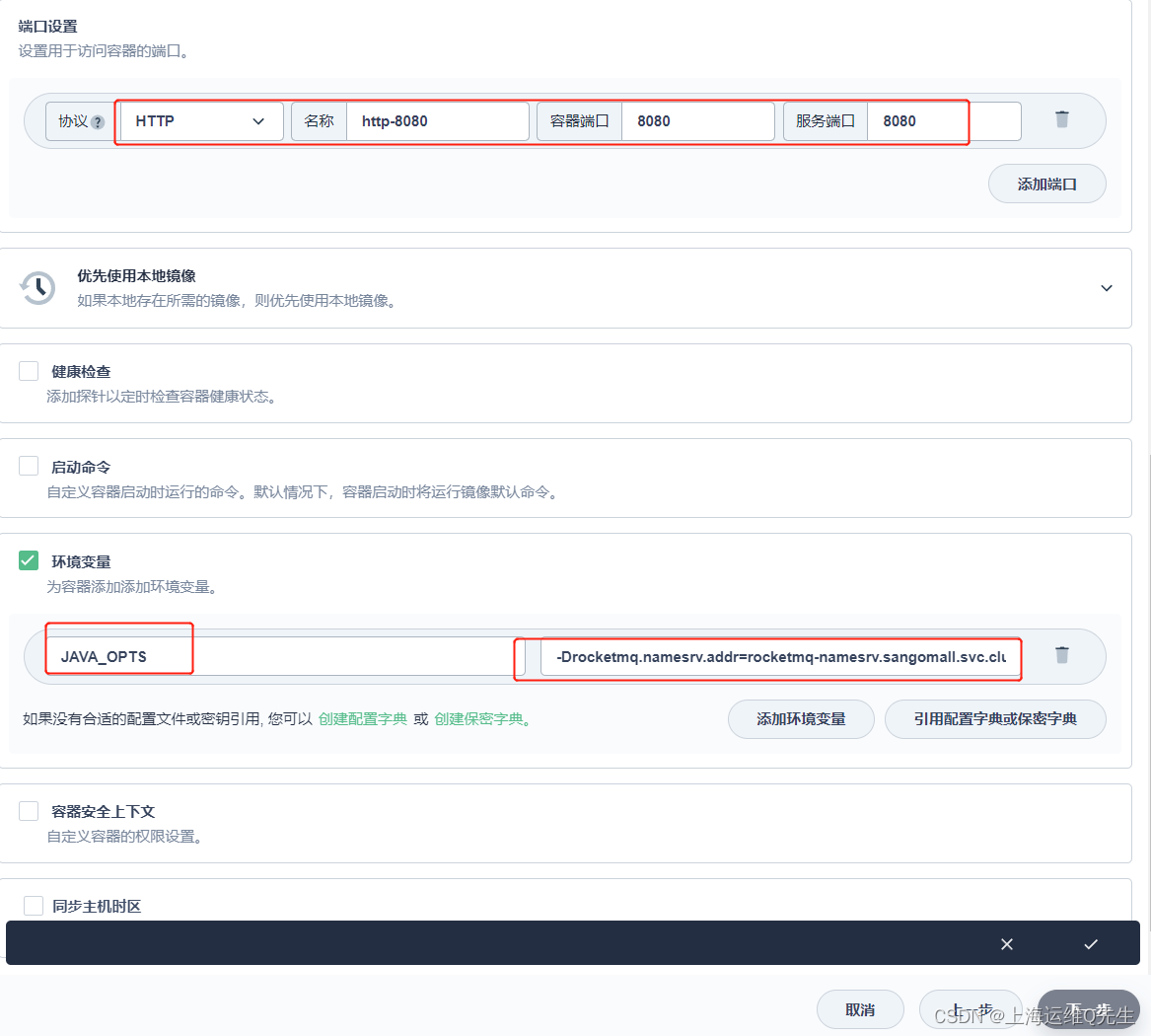

10.3 Deploy the RocketMQ Dashboard

10.3.1 Create the RocketMQ Dashboard Service

Create a [Stateless Service] named rocketmq-dashboard.

Image:

harbor.intra.com/baseimages/rocketmq-dashboard:1.0.0

Port: 8080

Environment variables:

JAVA_OPTS: -Drocketmq.namesrv.addr=rocketmq-namesrv.sangomall.svc.cluster.local.:9876

[Next],[Next],[Create]

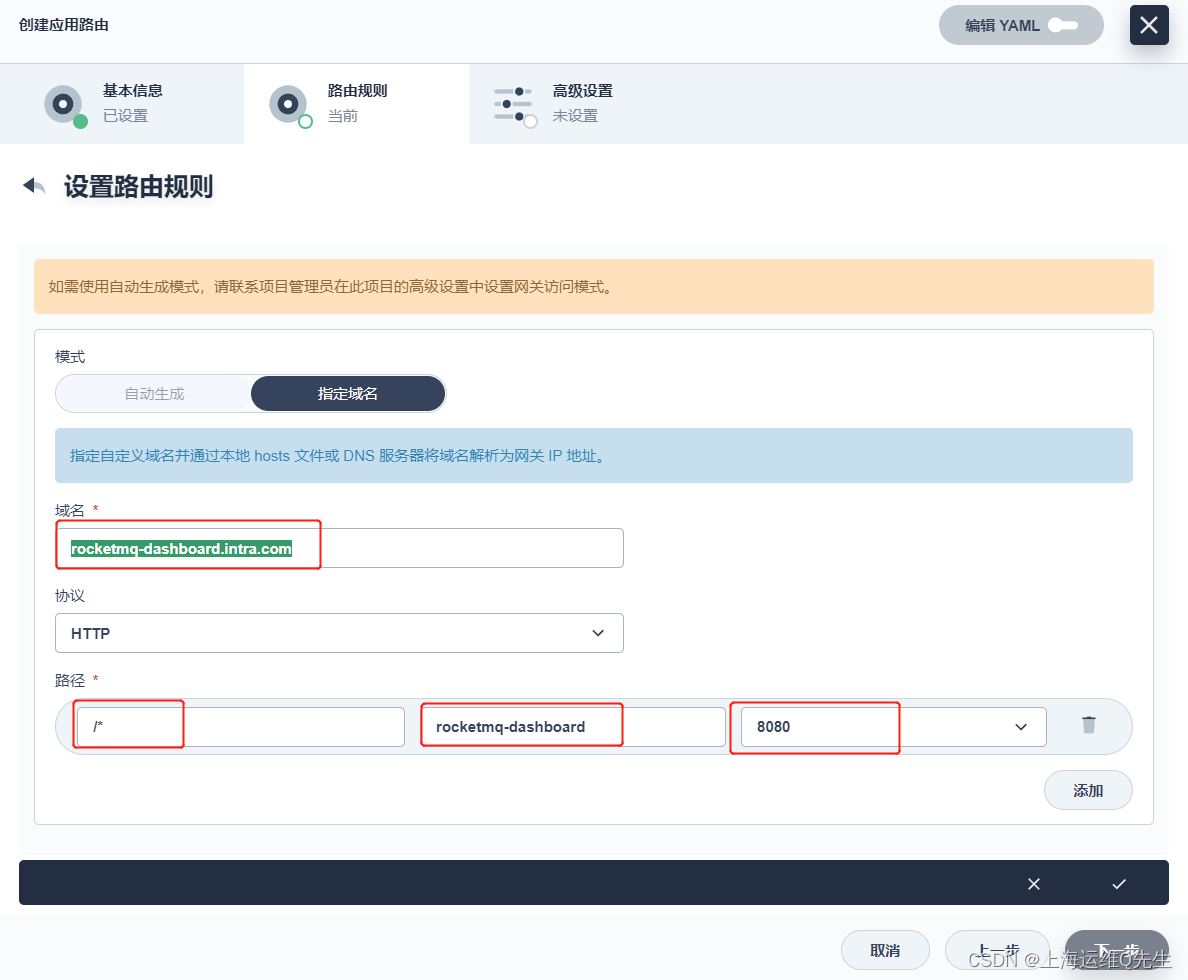

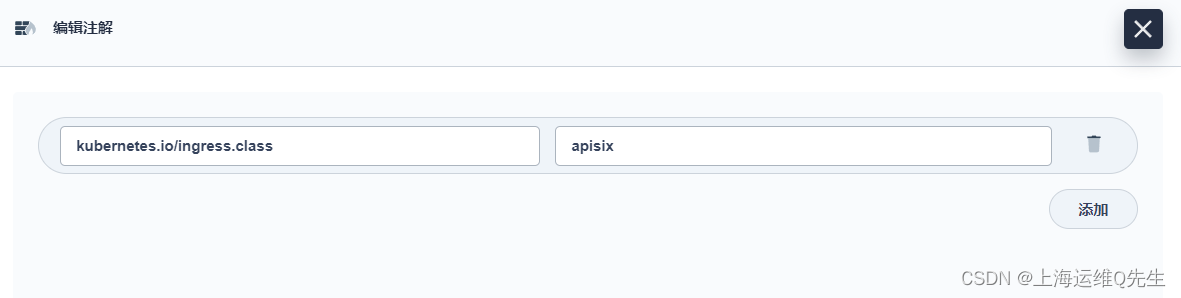

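10.3.2 Create the rocketmq-dashboard Route

[Routes],[Create]: create a route named rocketmq-dashboard with host rocketmq-dashboard.intra.com, forwarding to service port 8080.

[Add Metadata]:

kubernetes.io/ingress.class: apisix

Add the DNS record: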

[root@centos7-1 ~]# vi /var/named/intra.zone

[root@centos7-1 ~]# systemctl restart named

[root@centos7-1 ~]# cat /var/named/intra.zone

$TTL 1d

@ IN SOA intra.com. admin.intra.com. (

0;

1H;

5M;

1W;

1D);

@ NS ns.intra.com.

ns A 192.168.31.17

harbor A 192.168.31.104

kibana A 192.168.31.212

rabbitmq A 192.168.31.211

web1 A 192.168.31.211

nacos-server A 192.168.31.211

zipkin-server A 192.168.31.211

sentinel A 192.168.31.211

skywalking-ui A 192.168.31.211

rocketmq-dashboard A 192.168.31.211

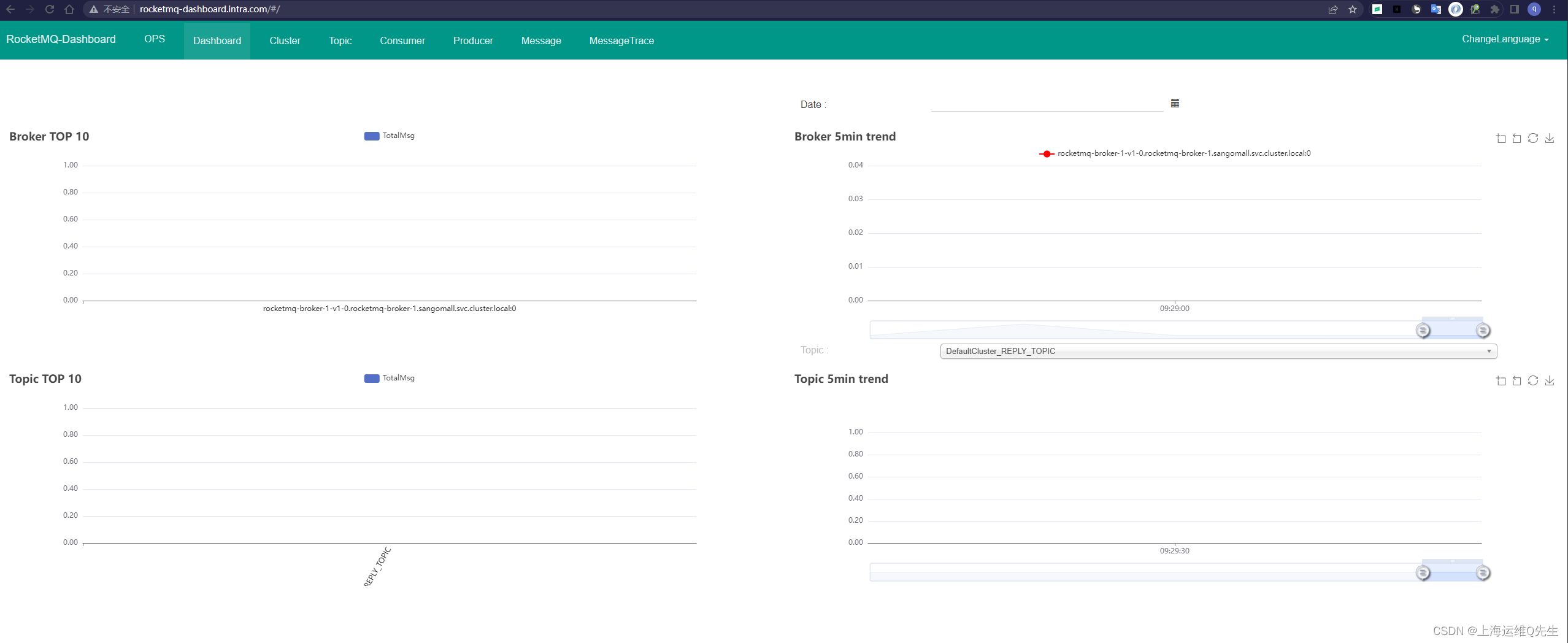

Open in a browser:

http://rocketmq-dashboard.intra.com/
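With every route in place, a small stdlib script can probe all the browser URLs from this document in one pass. The URL list below is copied from the sections above. Kibana is left out because its external access was removed. probe is a hypothetical helper. Its opener parameter exists only so the function can be exercised without a network. urllib follows redirects, so login pages usually report their final status.

```python
import urllib.error
import urllib.request

# Browser URLs from this document; Kibana is omitted because its external access was removed.
UI_URLS = [
    "http://rabbitmq.intra.com/",
    "http://nacos-server.intra.com/nacos",
    "http://zipkin-server.intra.com/zipkin/",
    "http://sentinel.intra.com/",
    "http://skywalking-ui.intra.com/general",
    "http://rocketmq-dashboard.intra.com/",
]


def probe(url: str, timeout: float = 5.0, opener=urllib.request.urlopen) -> str:
    """Return the HTTP status as text, or a short reason if the URL is unreachable."""
    try:
        with opener(url, timeout=timeout) as resp:
            return str(resp.status)
    except urllib.error.HTTPError as err:  # the route answered with an error status
        return str(err.code)
    except (urllib.error.URLError, OSError) as err:  # DNS, connection or timeout failure
        return f"unreachable: {err}"
```

Run it from a machine that resolves *.intra.com through the DNS server above: for url in UI_URLS: print(url, probe(url)).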
