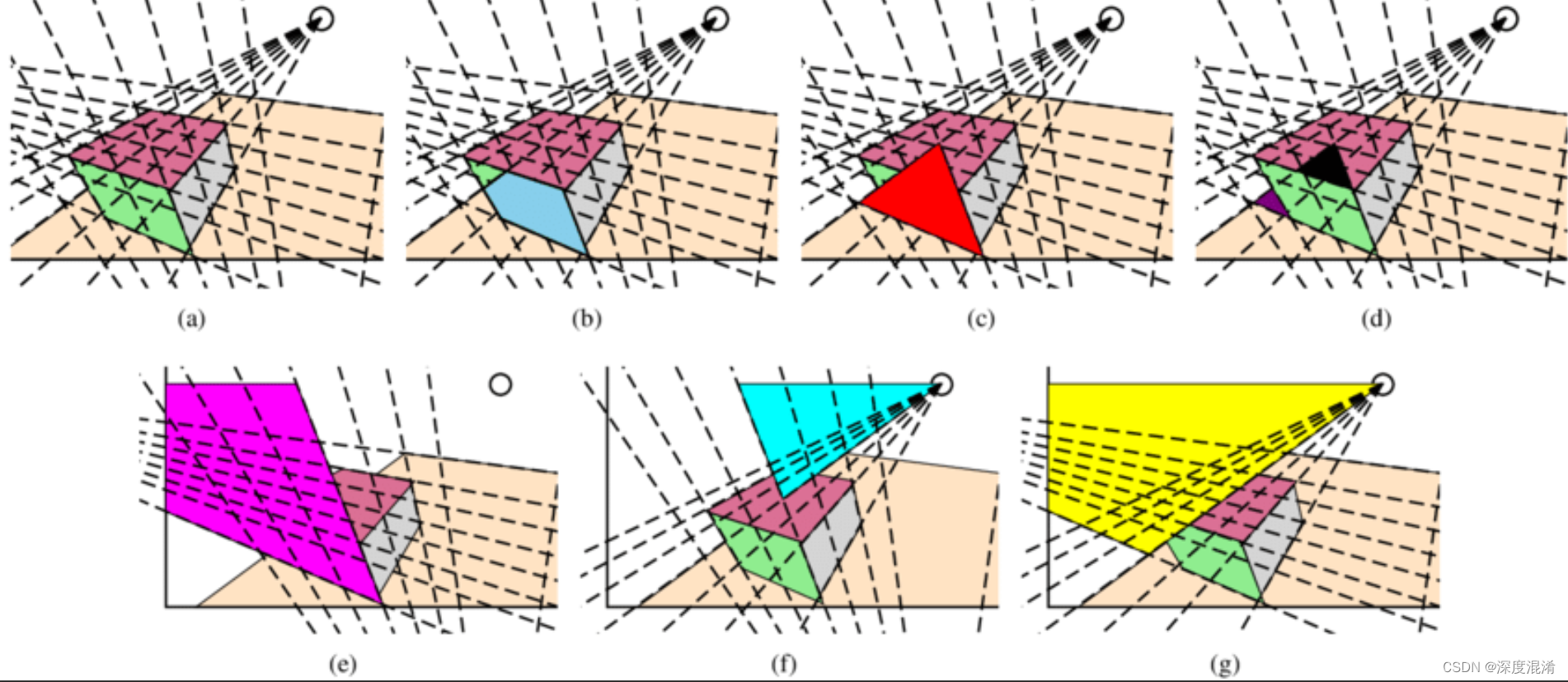

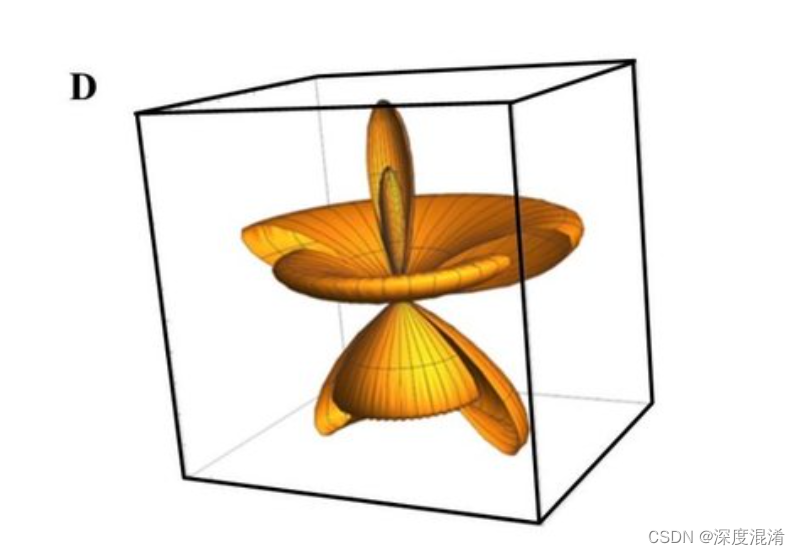

1 Triangular Decomposition

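Triangular decomposition, also called factorization, is a family of methods for solving systems of linear equations that evolved from the elimination method. Write the system in matrix form as Ax = b. The method factors the coefficient matrix A into the product of a lower triangular matrix L and an upper triangular matrix U, A = LU, and then solves the two triangular systems Ly = b and Ux = y in turn to obtain the solution of the original system. The Doolittle decomposition and the Cholesky decomposition are examples of triangular decomposition.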

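Suppose the coefficient matrix A can be factored by elementary row operations (Gaussian elimination without row exchanges) as A = LU, where L is a unit lower triangular matrix (a lower triangular matrix whose main diagonal entries are all 1) and U is an upper triangular matrix. The linear system Ax = b then becomes LUx = b. Setting Ux = y splits the solution of Ax = b into the solution of two triangular systems:

(1) Solve Ly = b to obtain y;

(2) Then solve Ux = y to obtain x, the solution of the system.

The key problem in triangular decomposition is therefore the LU decomposition of the coefficient matrix A.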
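The two triangular solves in steps (1) and (2) can be sketched as follows. This is a minimal illustration, not part of the original listing: the class name `TriangularSolve`, its method names, and the use of plain `double[,]` arrays are assumptions made for the example, and L is assumed to have a unit diagonal while U is assumed to have a nonzero diagonal.

```csharp
using System;

// Hypothetical helper for illustration; not part of the Matrix class in this post.
public static class TriangularSolve
{
    // Forward substitution: solves L y = b for unit lower triangular L.
    public static double[] ForwardUnit(double[,] L, double[] b)
    {
        int n = b.Length;
        var y = new double[n];
        for (int i = 0; i < n; i++)
        {
            double sum = b[i];
            for (int j = 0; j < i; j++)
                sum -= L[i, j] * y[j];
            y[i] = sum; // the diagonal of L is 1, so no division is needed
        }
        return y;
    }

    // Back substitution: solves U x = y for upper triangular U (nonzero diagonal assumed).
    public static double[] Backward(double[,] U, double[] y)
    {
        int n = y.Length;
        var x = new double[n];
        for (int i = n - 1; i >= 0; i--)
        {
            double sum = y[i];
            for (int j = i + 1; j < n; j++)
                sum -= U[i, j] * x[j];
            x[i] = sum / U[i, i];
        }
        return x;
    }

    // Solves A x = b given A = L U: first L y = b, then U x = y.
    public static double[] Solve(double[,] L, double[,] U, double[] b)
        => Backward(U, ForwardUnit(L, b));
}
```

For example, with L = [[1, 0], [2, 1]], U = [[2, 1], [0, 3]] and b = (4, 14), forward substitution gives y = (4, 6) and back substitution gives x = (1, 2). Each solve costs O(n^2) operations, which is why the factorization itself is the expensive part.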

Sufficient Conditions for the Existence of an LU Decomposition

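In general, an arbitrary matrix does not necessarily have an LU decomposition. A sufficient condition for a matrix to have one is given below.

Theorem 1. For any n×n matrix A, if all leading principal minors of A are nonzero, then A has a unique Doolittle decomposition (or Crout decomposition).

Theorem 2. If the matrix A is nonsingular and its LU decomposition (with L unit lower triangular) exists, then the LU decomposition of A is unique.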
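A small worked example, added here for illustration, shows why the condition on the leading principal minors matters. The matrix below is nonsingular (its determinant is -1), but its first leading principal minor is zero, and it has no LU decomposition:

```latex
A = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix},
\qquad
\begin{pmatrix} 1 & 0 \\ l_{21} & 1 \end{pmatrix}
\begin{pmatrix} u_{11} & u_{12} \\ 0 & u_{22} \end{pmatrix}
=
\begin{pmatrix} u_{11} & u_{12} \\ l_{21} u_{11} & l_{21} u_{12} + u_{22} \end{pmatrix}.
% Matching the (1,1) entries forces u_{11} = 0.
% Matching the (2,1) entries then requires l_{21} \cdot 0 = 1, which is impossible.
```

Exchanging the two rows of A gives the identity matrix, which factors trivially. This is the reason practical routines combine the decomposition with row exchanges.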

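Triangular decomposition factors a square matrix into an upper triangular matrix (or a permuted upper triangular matrix) and a lower triangular matrix; this is also called LU decomposition. Its main uses are simplifying the computation of the determinant of a large matrix, computing the inverse matrix, and solving systems of simultaneous equations. Note, however, that unless a normalization is fixed (such as the unit diagonal of L in the Doolittle form), the pair of triangular matrices is not unique: several different pairs of lower and upper triangular matrices can multiply to give the original matrix.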
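As an illustration of both points, the 2×2 matrix below (chosen only for this example) has two different triangular factorizations, one with a unit diagonal in L (Doolittle) and one with a unit diagonal in U (Crout), and its determinant can be read off the diagonals of the factors:

```latex
A = \begin{pmatrix} 2 & 1 \\ 4 & 5 \end{pmatrix}
  = \underbrace{\begin{pmatrix} 1 & 0 \\ 2 & 1 \end{pmatrix}
    \begin{pmatrix} 2 & 1 \\ 0 & 3 \end{pmatrix}}_{\text{Doolittle}}
  = \underbrace{\begin{pmatrix} 2 & 0 \\ 4 & 3 \end{pmatrix}
    \begin{pmatrix} 1 & \tfrac{1}{2} \\ 0 & 1 \end{pmatrix}}_{\text{Crout}},
\qquad
\det A = \det L \cdot \det U = 1 \cdot (2 \cdot 3) = 6 .
```

More generally, if A = LU and D is any invertible diagonal matrix, then A = (LD)(D^{-1}U) is another factorization into a lower and an upper triangular matrix.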

2 C# Source Code for Triangular Decomposition

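```csharp
using System;

namespace Zhou.CSharp.Algorithm
{
    /// <summary>
    /// Matrix class
    /// Author: 周长发
    /// Improved by: 深度混淆
    /// https://blog.csdn.net/beijinghorn
    /// </summary>
    public partial class Matrix
    {
        /// <summary>
        /// Triangular (LU) decomposition of a matrix. On success the source
        /// matrix is overwritten in place and becomes the Q matrix: the
        /// multipliers of L below the diagonal, U on and above the diagonal.
        /// </summary>
        /// <param name="src">Source matrix</param>
        /// <param name="mtxL">The L matrix after decomposition</param>
        /// <param name="mtxU">The U matrix after decomposition</param>
        /// <returns>Whether the decomposition succeeded</returns>
        public static bool SplitLU(Matrix src, Matrix mtxL, Matrix mtxU)
        {
            int i, j, k, w, v, z;

            // Initialize the result matrices (src is treated as square, of order src.Columns)
            if (mtxL.Init(src.Columns, src.Columns) == false || mtxU.Init(src.Columns, src.Columns) == false)
            {
                return false;
            }

            // Gaussian elimination without pivoting, carried out in place on src
            for (k = 0; k <= src.Columns - 2; k++)
            {
                // Row-major index of the pivot element (k, k)
                z = k * src.Columns + k;

                // float.Epsilon is about 1.4e-45, so this is in effect a test for a zero pivot
                if (Math.Abs(src[z]) < float.Epsilon)
                {
                    return false;
                }

                // Store the multipliers (the entries of L) below the pivot
                for (i = k + 1; i <= src.Columns - 1; i++)
                {
                    w = i * src.Columns + k;
                    src[w] = src[w] / src[z];
                }

                // Update the trailing submatrix
                for (i = k + 1; i <= src.Columns - 1; i++)
                {
                    w = i * src.Columns + k;
                    for (j = k + 1; j <= src.Columns - 1; j++)
                    {
                        v = i * src.Columns + j;
                        src[v] = src[v] - src[w] * src[k * src.Columns + j];
                    }
                }
            }

            // Split the combined matrix into L (unit diagonal) and U
            for (i = 0; i <= src.Columns - 1; i++)
            {
                // Strict lower triangle goes to L
                for (j = 0; j < i; j++)
                {
                    w = i * src.Columns + j;
                    mtxL[w] = src[w];
                    mtxU[w] = 0.0;
                }

                // Diagonal: 1 in L, pivot in U
                w = i * src.Columns + i;
                mtxL[w] = 1.0;
                mtxU[w] = src[w];

                // Strict upper triangle goes to U
                for (j = i + 1; j <= src.Columns - 1; j++)
                {
                    w = i * src.Columns + j;
                    mtxL[w] = 0.0;
                    mtxU[w] = src[w];
                }
            }

            return true;
        }
    }
}
```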
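The routine above performs no row exchanges, so it returns false whenever it meets a zero pivot, even for a nonsingular matrix. A common remedy is partial pivoting, which produces a factorization of the form PA = LU. The following is a hedged, self-contained sketch of that variant rather than a change to the listing above: the class name `PivotedLU`, the use of `double[,]` arrays instead of the `Matrix` class, and the tolerance `1e-14` are all assumptions made for this example.

```csharp
using System;

// Hypothetical standalone sketch; independent of the Matrix class above.
public static class PivotedLU
{
    // Factors a copy of the square matrix a so that P*A = L*U.
    // lu holds the multipliers of L below the diagonal (unit diagonal implied)
    // and U on and above it. perm[i] is the original row now in position i.
    // Returns false if no usable pivot is found in some column.
    public static bool Decompose(double[,] a, out double[,] lu, out int[] perm)
    {
        int n = a.GetLength(0);
        lu = (double[,])a.Clone();
        perm = new int[n];
        for (int i = 0; i < n; i++) perm[i] = i;

        for (int k = 0; k < n; k++)
        {
            // Pick the entry of largest magnitude in column k, at or below the diagonal
            int p = k;
            for (int i = k + 1; i < n; i++)
                if (Math.Abs(lu[i, k]) > Math.Abs(lu[p, k])) p = i;

            // The tolerance is an assumption for this sketch
            if (Math.Abs(lu[p, k]) < 1e-14) return false;

            // Swap rows k and p, and record the exchange
            if (p != k)
            {
                for (int j = 0; j < n; j++)
                    (lu[k, j], lu[p, j]) = (lu[p, j], lu[k, j]);
                (perm[k], perm[p]) = (perm[p], perm[k]);
            }

            // Eliminate below the pivot, storing the multipliers in place
            for (int i = k + 1; i < n; i++)
            {
                lu[i, k] /= lu[k, k];
                for (int j = k + 1; j < n; j++)
                    lu[i, j] -= lu[i, k] * lu[k, j];
            }
        }
        return true;
    }
}
```

For the matrix [[0, 1], [1, 0]], whose first pivot is zero, this sketch swaps the two rows and succeeds, with `perm` equal to {1, 0}. When solving Ax = b with the result, the right-hand side has to be permuted the same way before the forward substitution.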


Appendix:

There are essentially two ways to solve the matrix equation, Eq. (6), that results from applying MOM to the integral equation: (i) a direct solver, which seeks the inverse of the matrix, and (ii) an iterative solver. A direct solver can be Gaussian elimination or lower-upper triangular decomposition (LUD). Its number of operations is O(N^3), while its matrix storage requirement is O(N^2). An iterative method can be Gauss–Seidel, Jacobi relaxation, conjugate gradient, or one of the derivatives of the conjugate gradient algorithm [9]. All iterative methods require a matrix–vector product, which is usually the bottleneck of the computation. For dense matrices, such a matrix–vector product requires O(N^2) operations. If the matrix equation is solved in N_iter iterations, the computational cost is proportional to N_iter N^2. Moreover, traditional iterative solvers require the generation and storage of the matrix itself.
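To make the cost argument concrete, here is a minimal sketch of Jacobi relaxation, one of the iterative methods named above. It is an illustration only: the class name `JacobiSketch`, the fixed iteration count, and the zero starting vector are assumptions, and convergence is only guaranteed for suitable matrices (for example, strictly diagonally dominant ones).

```csharp
using System;

// Hypothetical sketch for illustration.
public static class JacobiSketch
{
    // Runs a fixed number of Jacobi sweeps for A x = b, starting from x = 0.
    // Each sweep reads every entry of the dense matrix once, which is O(N^2) work,
    // so the total cost is proportional to iterations * N^2.
    public static double[] Solve(double[,] a, double[] b, int iterations)
    {
        int n = b.Length;
        var x = new double[n];
        for (int it = 0; it < iterations; it++)
        {
            var next = new double[n];
            for (int i = 0; i < n; i++)
            {
                double sum = b[i];
                for (int j = 0; j < n; j++)
                    if (j != i) sum -= a[i, j] * x[j];
                next[i] = sum / a[i, i]; // nonzero diagonal assumed
            }
            x = next;
        }
        return x;
    }
}
```

For the diagonally dominant matrix [[4, 1], [2, 5]] and right-hand side (6, 12), the iterates approach the exact solution (1, 2).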

Cholesky decomposition, or Cholesky factorization, is a form of triangular decomposition that can only be applied to a positive definite symmetric matrix or a positive definite Hermitian matrix. A symmetric matrix A is said to be positive definite if x^T A x > 0 for every nonzero vector x. Similarly, a Hermitian matrix A is positive definite if x^H A x > 0 for every nonzero x, where x^H denotes the conjugate transpose of x. A more useful characterization is that a symmetric or Hermitian matrix is positive definite exactly when all of its eigenvalues are greater than zero.
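For the real symmetric case, the factorization A = L L^T can be sketched as below. This is an illustrative example, not code from the original post: the class name `CholeskySketch` and the `TryDecompose` signature are assumptions. The sketch reads only the lower triangle of the input and does not check symmetry. A non-positive value under the square root signals that the matrix is not positive definite.

```csharp
using System;

// Hypothetical sketch for illustration.
public static class CholeskySketch
{
    // Computes lower triangular l with a = l * l^T for a real symmetric matrix a.
    // Returns false if a is found not to be positive definite.
    public static bool TryDecompose(double[,] a, out double[,] l)
    {
        int n = a.GetLength(0);
        l = new double[n, n];
        for (int j = 0; j < n; j++)
        {
            // Diagonal entry: l[j,j] = sqrt(a[j,j] - sum_k l[j,k]^2)
            double d = a[j, j];
            for (int k = 0; k < j; k++) d -= l[j, k] * l[j, k];
            if (d <= 0.0) return false;
            l[j, j] = Math.Sqrt(d);

            // Entries below the diagonal in column j
            for (int i = j + 1; i < n; i++)
            {
                double s = a[i, j];
                for (int k = 0; k < j; k++) s -= l[i, k] * l[j, k];
                l[i, j] = s / l[j, j];
            }
        }
        return true;
    }
}
```

For A = [[4, 2], [2, 3]] the sketch yields L = [[2, 0], [1, √2]]. For [[1, 2], [2, 1]], which has eigenvalues 3 and -1, it returns false.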