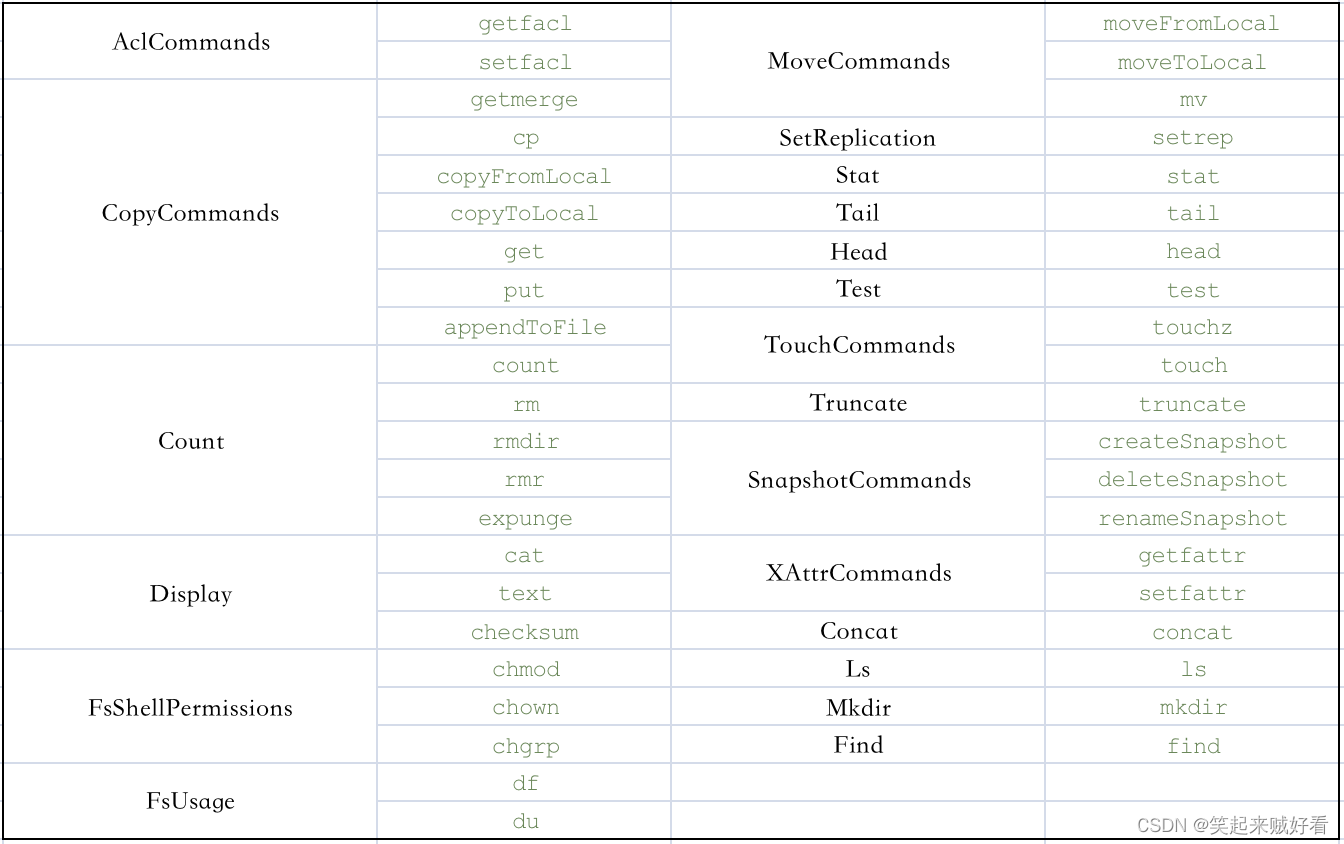

DFS Command Usage

- Overview

- Usage

- ls

- df

- du

- count

- appendToFile

- cat

- checksum

- chgrp

- chmod

- chown

- concat

- copyFromLocal

- copyToLocal

- cp

- createSnapshot

- deleteSnapshot

- expunge

- find

- get

- getfacl

- getfattr

- getmerge

- head

- mkdir

- moveFromLocal

- moveToLocal

- mv

- put

- renameSnapshot

- rm

- rmdir

- setfacl

- setfattr

- setrep

- stat

- tail

- test

- text

- touch

- touchz

- truncate

- usage

Overview

Command-line client operations for the Hadoop Distributed File System (HDFS).

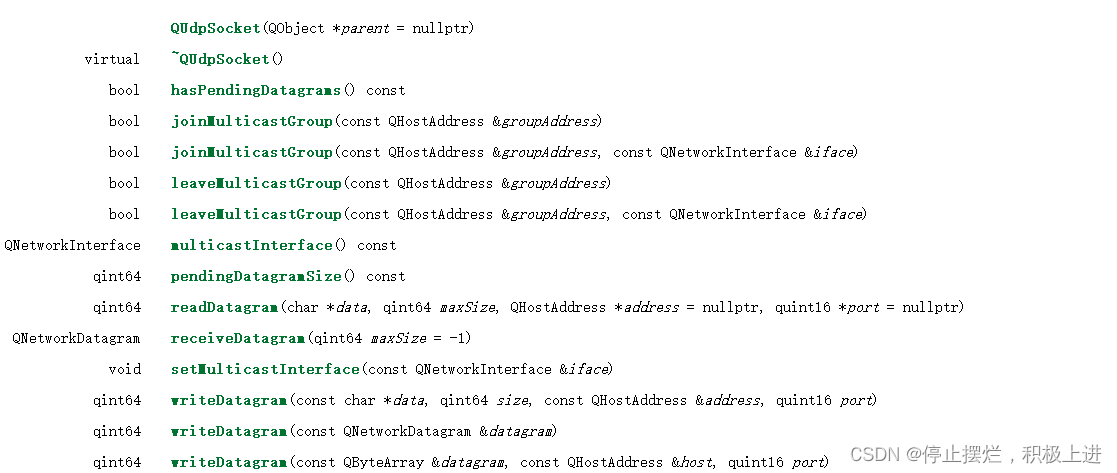

Usage

Usage: hadoop fs [generic options]

[-appendToFile <localsrc> ... <dst>]

[-cat [-ignoreCrc] <src> ...]

[-checksum [-v] <src> ...]

[-chgrp [-R] GROUP PATH...]

[-chmod [-R] <MODE[,MODE]... | OCTALMODE> PATH...]

[-chown [-R] [OWNER][:[GROUP]] PATH...]

[-concat <target path> <src path> <src path> ...]

[-copyFromLocal [-f] [-p] [-l] [-d] [-t <thread count>] <localsrc> ... <dst>]

[-copyToLocal [-f] [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-count [-q] [-h] [-v] [-t [<storage type>]] [-u] [-x] [-e] [-s] <path> ...]

[-cp [-f] [-p | -p[topax]] [-d] <src> ... <dst>]

[-createSnapshot <snapshotDir> [<snapshotName>]]

[-deleteSnapshot <snapshotDir> <snapshotName>]

[-df [-h] [<path> ...]]

[-du [-s] [-h] [-v] [-x] <path> ...]

[-expunge [-immediate] [-fs <path>]]

[-find <path> ... <expression> ...]

[-get [-f] [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-getfacl [-R] <path>]

[-getfattr [-R] {-n name | -d} [-e en] <path>]

[-getmerge [-nl] [-skip-empty-file] <src> <localdst>]

[-head <file>]

[-help [cmd ...]]

[-ls [-C] [-d] [-h] [-q] [-R] [-t] [-S] [-r] [-u] [-e] [<path> ...]]

[-mkdir [-p] <path> ...]

[-moveFromLocal [-f] [-p] [-l] [-d] <localsrc> ... <dst>]

[-moveToLocal <src> <localdst>]

[-mv <src> ... <dst>]

[-put [-f] [-p] [-l] [-d] [-t <thread count>] <localsrc> ... <dst>]

[-renameSnapshot <snapshotDir> <oldName> <newName>]

[-rm [-f] [-r|-R] [-skipTrash] [-safely] <src> ...]

[-rmdir [--ignore-fail-on-non-empty] <dir> ...]

[-setfacl [-R] [{-b|-k} {-m|-x <acl_spec>} <path>]|[--set <acl_spec> <path>]]

[-setfattr {-n name [-v value] | -x name} <path>]

[-setrep [-R] [-w] <rep> <path> ...]

[-stat [format] <path> ...]

[-tail [-f] [-s <sleep interval>] <file>]

[-test -[defswrz] <path>]

[-text [-ignoreCrc] <src> ...]

[-touch [-a] [-m] [-t TIMESTAMP (yyyyMMdd:HHmmss) ] [-c] <path> ...]

[-touchz <path> ...]

[-truncate [-w] <length> <path> ...]

[-usage [cmd ...]]

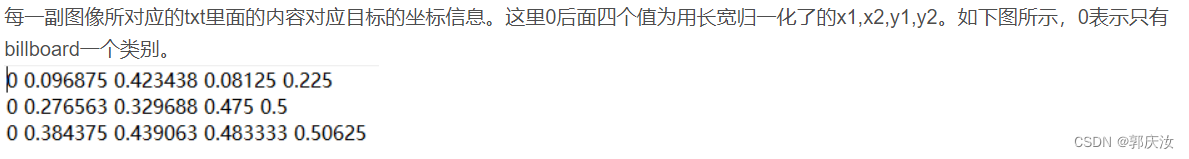

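The path argument supports glob patterns (wildcards). These are shell-style patterns, not full regular expressions: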

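| Wildcard | Name | Matches |
|---|---|---|
| * | Asterisk | Zero or more characters |
| ? | Question mark | A single character |
| [ab] | Character class | A single character in the set {a, b} |
| [^ab] | Negated character class | A single character not in the set {a, b} |
| [a-b] | Character range | A single character in the closed range from a to b (both included), where a is lexicographically less than or equal to b |
| [^a-b] | Negated character range | A single character not in the closed range from a to b (both included), where a is lexicographically less than or equal to b |
| {a,b} | Alternation | Either expression a or expression b |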

| Glob | Expansion |
|---|---|
| /* | /2007 /2008 |
| /*/* | /2007/12 /2008/01 |
| /*/12/* | /2007/12/30 /2007/12/31 |
| /200? | /2007 /2008 |
| /200[78] | /2007 /2008 |
| /200[7-8] | /2007 /2008 |
| /200[^01234569] | /2007 /2008 |
| /*/*/{31,01} | /2007/12/31 /2008/01/01 |
| /*/*/3{0,1} | /2007/12/30 /2007/12/31 |
| /*/{12/31,01/01} | /2007/12/31 /2008/01/01 |

[root@spark-31 hadoop-3.3.1]# bin/hdfs dfs -ls -e /yarn/logs

Found 5 items

-rw-r--r-- 2 root root Replicated 31717292 2023-03-11 11:09 /yarn/logs/hadoop-client-runtime-3.3.1.jar

-rw-r--r-- 2 root root Replicated 154525 2023-03-11 10:51 /yarn/logs/hadoop.log

-rw-r--r-- 2 root root Replicated 2452 2023-03-11 10:51 /yarn/logs/httpfs.log

drwxrwx--- - root root 0 2023-02-14 16:01 /yarn/logs/root

-rw-r--r-- 2 root root Replicated 2221 2023-03-11 11:10 /yarn/logs/start-all.sh

[root@spark-31 hadoop-3.3.1]# bin/hdfs dfs -ls -e /yarn/logs/had*.log

-rw-r--r-- 2 root root Replicated 154525 2023-03-11 10:51 /yarn/logs/hadoop.log
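To make the wildcard rules above concrete, here is a minimal Python sketch that translates a glob into an equivalent regular expression and checks it against the example paths from the expansion table. The names `glob_to_regex`, `expand`, and `PATHS` are hypothetical and not part of Hadoop. The sketch assumes that `*` and `?` do not match across `/`; Hadoop's own glob implementation may differ in edge cases such as escaping.

```python
import re

def glob_to_regex(pattern: str) -> str:
    """Translate a glob from the wildcard table into a regex string.

    Illustration only: escape sequences and malformed patterns are not handled.
    """
    out = []
    depth = 0  # current nesting level of {...} groups
    i = 0
    while i < len(pattern):
        c = pattern[i]
        if c == "*":
            out.append("[^/]*")   # zero or more characters inside one path component (assumption)
        elif c == "?":
            out.append("[^/]")    # exactly one character
        elif c == "[":
            end = pattern.index("]", i + 1)
            out.append(pattern[i:end + 1])  # [ab], [^ab], [a-b] mean the same in a regex
            i = end
        elif c == "{":
            out.append("(?:")
            depth += 1
        elif c == "}" and depth > 0:
            out.append(")")
            depth -= 1
        elif c == "," and depth > 0:
            out.append("|")       # alternatives inside {a,b}
        else:
            out.append(re.escape(c))
        i += 1
    return "".join(out)

def expand(pattern, paths):
    """Return the paths that the glob matches in full."""
    regex = re.compile(glob_to_regex(pattern))
    return [p for p in paths if regex.fullmatch(p)]

# Paths taken from the expansion table above
PATHS = ["/2007", "/2008", "/2007/12", "/2008/01",
         "/2007/12/30", "/2007/12/31", "/2008/01/01"]

print(expand("/200[^01234569]", PATHS))  # ['/2007', '/2008']
print(expand("/*/*/3{0,1}", PATHS))      # ['/2007/12/30', '/2007/12/31']
```

On the command line, quoting the pattern (for example `'/yarn/logs/had*.log'`) keeps the local shell from expanding it before it reaches HDFS.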

ls

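Lists files and directories.

This command is implemented by the org.apache.hadoop.fs.shell.Ls class.

Usage: [-ls [-C] [-d] [-h] [-q] [-R] [-t] [-S] [-r] [-u] [-e] [<path> ...]]

- -C displays only the paths of files and directories

- -d lists a directory as a plain entry instead of listing its contents

- -h formats file sizes in human-readable form

- -q prints ? in place of non-printable characters

- -R lists subdirectories recursively

- -t sorts by modification time (most recent first)

- -S sorts by file size

- -r reverses the sort order; used together with -t or -S

- -u uses the last access time instead of the modification time for display and sorting

- -e displays the erasure coding (EC) policy of each path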

[root@spark-31 hadoop-3.3.1]# bin/hdfs dfs -ls /yarn/logs

Found 5 items

-rw-r--r-- 2 root root 31717292 2023-03-11 11:09 /yarn/logs/hadoop-client-runtime-3.3.1.jar

-rw-r--r-- 2 root root 154525 2023-03-11 10:51 /yarn/logs/hadoop.log

-rw-r--r-- 2 root root 2452 2023-03-11 10:51 /yarn/logs/httpfs.log

drwxrwx--- - root root 0 2023-02-14 16:01 /yarn/logs/root

-rw-r--r-- 2 root root 2221 2023-03-11 11:10 /yarn/logs/start-all.sh
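The default listing above has eight whitespace-separated columns: permissions, replication factor (`-` for directories), owner, group, size in bytes, modification date, modification time, and path. As a rough sketch, a line can be split into those fields as follows. `LsEntry` and `parse_ls_line` are hypothetical helper names, and the code assumes the default output without `-e` or `-h`, which add or reformat columns.

```python
from typing import NamedTuple, Optional

class LsEntry(NamedTuple):
    permissions: str
    replication: Optional[int]  # None for directories, which print "-"
    owner: str
    group: str
    size: int                   # bytes
    modified: str               # "yyyy-MM-dd HH:mm" exactly as printed
    path: str

    @property
    def is_dir(self) -> bool:
        return self.permissions.startswith("d")

def parse_ls_line(line: str) -> LsEntry:
    """Split one line of default `-ls` output into its eight columns."""
    # maxsplit=7 keeps any spaces inside the path intact
    perms, repl, owner, group, size, date, time, path = line.split(None, 7)
    replication = None if repl == "-" else int(repl)
    return LsEntry(perms, replication, owner, group, int(size), f"{date} {time}", path)

entry = parse_ls_line(
    "-rw-r--r-- 2 root root 154525 2023-03-11 10:51 /yarn/logs/hadoop.log"
)
print(entry.replication, entry.size, entry.path)  # 2 154525 /yarn/logs/hadoop.log
```

The `Found 5 items` header line is not an entry and would need to be skipped before parsing.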

-C displays only the path column of files and directories

[root@spark-31 hadoop-3.3.1]# bin/hdfs dfs -ls -C /yarn/logs

/yarn/logs/hadoop-client-runtime-3.3.1.jar

/yarn/logs/hadoop.log

/yarn/logs/httpfs.log

/yarn/logs/root

/yarn/logs/start-all.sh

-d lists the directory itself as a plain entry instead of its contents

[root@spark-31 hadoop-3.3.1]# bin/hdfs dfs -ls -d /yarn/logs

drwxrwxrwt - root root 0 2023-03-11 10:51 /yarn/logs

[root@spark-31 hadoop-3.3.1]# bin/hdfs dfs -ls -C -d /yarn/logs

/yarn/logs

-h formats file sizes in human-readable form; directory sizes are shown as 0

[root@spark-31 hadoop-3.3.1]# bin/hdfs dfs -ls -h /yarn/logs

Found 5 items

-rw-r--r-- 2 root root 30.2 M 2023-03-11 11:09 /yarn/logs/hadoop-client-runtime-3.3.1.jar

-rw-r--r-- 2 root root 150.9 K 2023-03-11 10:51 /yarn/logs/hadoop.log

-rw-r--r-- 2 root root 2.4 K 2023-03-11 10:51 /yarn/logs/httpfs.log

drwxrwx--- - root root 0 2023-02-14 16:01 /yarn/logs/root

-rw-r--r-- 2 root root 2.2 K 2023-03-11 11:10 /yarn/logs/start-all.sh
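The human-readable sizes above are the byte counts from the plain listing expressed in binary (1024-based) units with one decimal place. The following sketch reproduces the four values shown; `human_readable` is a hypothetical helper that approximates the format and is not Hadoop's own formatting code.

```python
def human_readable(size: int) -> str:
    """Approximate the -h size format using 1024-based units and one decimal."""
    if size < 1024:
        return str(size)           # small values are printed as plain byte counts
    units = ["K", "M", "G", "T", "P", "E"]
    value = float(size)
    for unit in units:
        value /= 1024
        if value < 1024 or unit == units[-1]:
            return f"{value:.1f} {unit}"

for size in (31717292, 154525, 2452, 2221, 0):
    print(size, "->", human_readable(size))
# 31717292 -> 30.2 M
# 154525 -> 150.9 K
# 2452 -> 2.4 K
# 2221 -> 2.2 K
# 0 -> 0
```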

-R lists directory contents recursively

[root@spark-31 hadoop-3.3.1]# bin/hdfs dfs -ls -h -R /yarn/logs

-rw-r--r-- 2 root root 30.2 M 2023-03-11 11:09 /yarn/logs/hadoop-client-runtime-3.3.1.jar

-rw-r--r-- 2 root root 150.9 K 2023-03-11 10:51 /yarn/logs/hadoop.log

-rw-r--r-- 2 root root 2.4 K 2023-03-11 10:51 /yarn/logs/httpfs.log

drwxrwx--- - root root 0 2023-02-14 16:01 /yarn/logs/root

drwxrwx--- - root root 0 2023-03-06 08:03 /yarn/logs/root/bucket-logs-tfile

drwxrwx--- - root root 0 2023-02-15 17:01 /yarn/logs/root/bucket-logs-tfile/0001

drwxrwx--- - root root 0 2023-02-14 16:02 /yarn/logs/root/bucket-logs-tfile/0001/application_1676356354068_0001

-rw-r----- 2 root root 299.1 K 2023-02-14 16:02 /yarn/logs/root/bucket-logs-tfile/0001/application_1676356354068_0001/spark-31_45454

-t sorts by modification time (most recent first)

[root@spark-31 hadoop-3.3.1]# bin/hdfs dfs -ls /yarn/logs

Found 5 items

-rw-r--r-- 2 root root 31717292 2023-03-11 11:09 /yarn/logs/hadoop-client-runtime-3.3.1.jar

-rw-r--r-- 2 root root 154525 2023-03-11 10:51 /yarn/logs/hadoop.log

-rw-r--r-- 2 root root 2452 2023-03-11 10:51 /yarn/logs/httpfs.log

drwxrwx--- - root root 0 2023-02-14 16:01 /yarn/logs/root

-rw-r--r-- 2 root root 2221 2023-03-11 11:10 /yarn/logs/start-all.sh

# For comparison, sorted by modification time

[root@spark-31 hadoop-3.3.1]# bin/hdfs dfs -ls -t /yarn/logs

Found 5 items

-rw-r--r-- 2 root root 2221 2023-03-11 11:10 /yarn/logs/start-all.sh

-rw-r--r-- 2 root root 31717292 2023-03-11 11:09 /yarn/logs/hadoop-client-runtime-3.3.1.jar

-rw-r--r-- 2 root root 2452 2023-03-11 10:51 /yarn/logs/httpfs.log

-rw-r--r-- 2 root root 154525 2023-03-11 10:51 /yarn/logs/hadoop.log

drwxrwx--- - root root 0 2023-02-14 16:01 /yarn/logs/root

-S sorts by file size (largest first)

[root@spark-31 hadoop-3.3.1]# bin/hdfs dfs -ls -S /yarn/logs

Found 5 items

-rw-r--r-- 2 root root 31717292 2023-03-11 11:09 /yarn/logs/hadoop-client-runtime-3.3.1.jar

-rw-r--r-- 2 root root 154525 2023-03-11 10:51 /yarn/logs/hadoop.log

-rw-r--r-- 2 root root 2452 2023-03-11 10:51 /yarn/logs/httpfs.log

-rw-r--r-- 2 root root 2221 2023-03-11 11:10 /yarn/logs/start-all.sh

drwxrwx--- - root root 0 2023-02-14 16:01 /yarn/logs/root

-r reverses the sort order

[root@spark-31 hadoop-3.3.1]# bin/hdfs dfs -ls -S -r /yarn/logs

Found 5 items

drwxrwx--- - root root 0 2023-02-14 16:01 /yarn/logs/root

-rw-r--r-- 2 root root 2221 2023-03-11 11:10 /yarn/logs/start-all.sh

-rw-r--r-- 2 root root 2452 2023-03-11 10:51 /yarn/logs/httpfs.log

-rw-r--r-- 2 root root 154525 2023-03-11 10:51 /yarn/logs/hadoop.log

-rw-r--r-- 2 root root 31717292 2023-03-11 11:09 /yarn/logs/hadoop-client-runtime-3.3.1.jar
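The orderings produced by -t, -S, and -r can be sketched in a few lines of Python using the entries from the listings above. `ENTRIES` and `sort_entries` are hypothetical names. The printed timestamps only have minute precision, so the two files modified at 10:51 tie in this sketch, while the real command compares finer-grained times and may order them differently.

```python
from datetime import datetime

# (size in bytes, modification time, path), copied from the listings above
ENTRIES = [
    (31717292, "2023-03-11 11:09", "/yarn/logs/hadoop-client-runtime-3.3.1.jar"),
    (154525, "2023-03-11 10:51", "/yarn/logs/hadoop.log"),
    (2452, "2023-03-11 10:51", "/yarn/logs/httpfs.log"),
    (0, "2023-02-14 16:01", "/yarn/logs/root"),
    (2221, "2023-03-11 11:10", "/yarn/logs/start-all.sh"),
]

def sort_entries(entries, by="name", reverse=False):
    """by='name' (default order), 'time' (like -t) or 'size' (like -S).

    reverse=True plays the role of -r.
    """
    if by == "time":
        key = lambda e: datetime.strptime(e[1], "%Y-%m-%d %H:%M")
        descending = True   # newest first
    elif by == "size":
        key = lambda e: e[0]
        descending = True   # largest first
    else:
        key = lambda e: e[2]
        descending = False  # alphabetical by path
    # -r flips whichever direction the chosen ordering normally uses
    return sorted(entries, key=key, reverse=(descending != reverse))

for size, mtime, path in sort_entries(ENTRIES, by="size", reverse=True):
    print(size, mtime, path)
# 0 2023-02-14 16:01 /yarn/logs/root
# 2221 2023-03-11 11:10 /yarn/logs/start-all.sh
# 2452 2023-03-11 10:51 /yarn/logs/httpfs.log
# 154525 2023-03-11 10:51 /yarn/logs/hadoop.log
# 31717292 2023-03-11 11:09 /yarn/logs/hadoop-client-runtime-3.3.1.jar
```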

-e displays the erasure coding (EC) policy of each path

[root@spark-31 hadoop-3.3.1]# bin/hdfs dfs -ls -e /yarn/logs

Found 5 items

-rw-r--r-- 2 root root Replicated 31717292 2023-03-11 11:09 /yarn/logs/hadoop-client-runtime-3.3.1.jar

-rw-r--r-- 2 root root Replicated 154525 2023-03-11 10:51 /yarn/logs/hadoop.log

-rw-r--r-- 2 root root Replicated 2452 2023-03-11 10:51 /yarn/logs/httpfs.log

drwxrwx--- - root root 0 2023-02-14 16:01 /yarn/logs/root

-rw-r--r-- 2 root root Replicated 2221 2023-03-11 11:10 /yarn/logs/start-all.sh

df

Shows the total capacity, free space, and used space of the file system.

This command is implemented by the org.apache.hadoop.fs.shell.Df class.

Usage: [-df [-h] [<path> ...]]

[root@spark-31 hadoop-3.3.1]# bin/hdfs dfs -df /

Filesystem Size Used Available Use%

hdfs://cdp-cluster 4936800665600 1544134656 4853417132032 0%

[root@spark-31 hadoop-3.3.1]# bin/hdfs dfs -df /yarn/logs

Filesystem Size Used Available Use%

hdfs://cdp-cluster 4936800665600 1544134656 4853425569792 0%
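The Use% column is easy to misread on a nearly empty cluster. As a rough check, the sketch below recomputes it from the first output above, assuming that Use% is the Used value divided by Size and rounded to a whole percent. `use_percent` is a hypothetical helper, and the interpretation of the remaining gap is an assumption.

```python
def use_percent(size: int, used: int) -> str:
    """Used space as a whole-number percentage of total capacity."""
    if size == 0:
        return "0%"
    return f"{used / size:.0%}"

# Values from `-df /` above, in bytes
SIZE, USED, AVAILABLE = 4936800665600, 1544134656, 4853417132032

print(use_percent(SIZE, USED))   # 0%
print(USED / SIZE)               # about 0.0003, i.e. roughly 0.03% before rounding

# Capacity that is neither counted as used nor available, presumably
# space taken by non-HDFS data on the same disks (assumption)
print(SIZE - USED - AVAILABLE)   # 81839398912
```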

du

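Shows the size of the specified files or directories, in bytes.

This command is implemented by the org.apache.hadoop.fs.shell.Du class.

Usage: [-du [-s] [-h] [-v] [-x] <path> ...]

-s shows one aggregate total per path instead of the size of each entry

-h formats file sizes in human-readable form

-v displays a header line with the column names

-x excludes snapshots from the calculation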
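As a sketch of how the du flags combine, the following Python helper assembles the command line from keyword arguments. `build_du_command` and `run_du` are hypothetical names, the `bin/hdfs` location follows the prompts used elsewhere in this document, and running the command requires a reachable cluster.

```python
import subprocess

def build_du_command(paths, summary=False, human=False, header=False,
                     exclude_snapshots=False, hdfs_bin="bin/hdfs"):
    """Assemble the argument list for `hdfs dfs -du` from the documented flags."""
    cmd = [hdfs_bin, "dfs", "-du"]
    if summary:
        cmd.append("-s")   # one aggregate total per path
    if human:
        cmd.append("-h")   # human-readable sizes
    if header:
        cmd.append("-v")   # header line with column names
    if exclude_snapshots:
        cmd.append("-x")   # leave snapshots out of the calculation
    cmd.extend(paths)
    return cmd

def run_du(paths, **flags):
    """Run the command and return its standard output as text."""
    result = subprocess.run(build_du_command(paths, **flags),
                            check=True, capture_output=True, text=True)
    return result.stdout

print(" ".join(build_du_command(["/yarn/logs"], summary=True, human=True)))
# bin/hdfs dfs -du -s -h /yarn/logs
```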

count

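Shows the number of directories and files and the total content size under the given paths.

This command is implemented by the org.apache.hadoop.fs.shell.Count class.

Usage: [-count [-q] [-h] [-v] [-t [<storage type>]] [-u] [-x] [-e] [-s] <path> ...]

-q shows the quota information of the directory

-h formats file sizes in human-readable form

-v displays a header line with the column names

-t shows the quota by storage type; used together with -q or -u

-u shows quota and usage only, without the file count and content size

-x excludes snapshots from the calculation

-e displays the erasure coding (EC) policy of each path

-s shows snapshot information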

[root@spark-31 hadoop-3.3.1]# bin/hdfs dfs -count -v /yarn/logs

DIR_COUNT FILE_COUNT CONTENT_SIZE PATHNAME

69 65 154821533 /yarn/logs

Show quota information

[root@spark-31 hadoop-3.3.1]# bin/hdfs dfs -count -v -q /yarn/logs

QUOTA REM_QUOTA SPACE_QUOTA REM_SPACE_QUOTA DIR_COUNT FILE_COUNT CONTENT_SIZE PATHNAME

1000000 999866 107374182400 107064539334 69 65 154821533 /yarn/logs
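The quota columns above can be cross-checked with simple arithmetic. In this output the consumed name quota equals the number of directories plus files, and the consumed space quota is twice the content size, which is consistent with the replication factor of 2 shown in the ls listings. The variable names below are only labels for the printed columns.

```python
# Values from `-count -v -q /yarn/logs` above
QUOTA, REM_QUOTA = 1_000_000, 999_866
SPACE_QUOTA, REM_SPACE_QUOTA = 107_374_182_400, 107_064_539_334
DIR_COUNT, FILE_COUNT, CONTENT_SIZE = 69, 65, 154_821_533

names_used = QUOTA - REM_QUOTA              # names charged against the name quota
space_used = SPACE_QUOTA - REM_SPACE_QUOTA  # bytes charged against the space quota

print(names_used, DIR_COUNT + FILE_COUNT)     # 134 134
print(space_used, space_used / CONTENT_SIZE)  # 309643066 2.0
print(SPACE_QUOTA / 1024**3)                  # 100.0 (the space quota is 100 GiB)
```

Because the space quota is charged per replica, a directory can run out of space quota while CONTENT_SIZE is still well below SPACE_QUOTA.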