系列文章目录

【大数据实时数据同步】超级详细的生产环境OGG(GoldenGate)12.2实时异构同步Oracle数据部署方案(上)

【大数据实时数据同步】超级详细的生产环境OGG(GoldenGate)12.2实时异构同步Oracle数据部署方案(中)

【大数据实时数据同步】超级详细的生产环境OGG(GoldenGate)12.2实时异构同步Oracle数据部署方案(下)

文章目录

- 系列文章目录

- 前言

- 八、配置支持DDL同步操作

- 1、@marker_setup.sql

- 2、@ddl_setup.sql

- 3、@role_setup.sql

- 4、@ddl_enable.sql

- 5、@marker_status.sql

- 九、配置主库到备库同步(支持DDL)

- 1、源端配置

- 2、源端新增抽取进程配置

- 3、源端配置投递进程配置

- 4、源端增加抽取进程

- 5、源端增加投递进程

- 6、源端添加表级别附加日志

- 7、启动Extract和PUMP进程

- 8、目的端编辑REPLICAT进程配置

- 9、添加应用进程

- 10、源端导出emp.dept表并传到目的端应用

- 10.1、源端通过数据泵导出表

- 10.2、目的端配置导入目录

- 10.3、将源端导出的dump文件传到目的端

- 10.4、目标端导入数据到原表

- 10.5、OGG库审计表数据初始化

- 10.6、OGG库添加ETLTIME字段

- 10.7、OGG库ETLTIME字段数据初始化

- 10.8、OGG库添加索引,防止进程lag过高

- 11、启动应用进程

- 12、验证

- 12.1、源端操作

- 12.2、目的端查看

- 总结

前言

博主所在单位目前使用Oracle GoldenGate将各个业务生产库汇聚到一起做数仓实时ODS平台,源端库可能涉及Oracle、Mysql、达梦、Guassdb库。

之前写过一系列关于GoldenGate异构同步Mysql、Kafka、Kylin、Flink做实时计算的场景文章。但是突然发现,卧槽最最最应该第一个做的Oracle->Oracle的实时异构同步文档竟然没写!

来弥补一下这个空白!!!

好了,扯远了,回归技术!

下面是我给大家的生产环境下,如何部署GoldenGate12C及异构实时同步数据的解决方案。本文主要介绍如何实现实时异构的进行Oracle->Oracle的数据同步,这种数据同步适用于灾备、升级、实时ODS等场景使用。我这里给大家了三个GoldenGate部署方式,看大家喜欢用哪种吧!!

注意:本部署方案分为三章节,三章节的重点分别是:

- GoldenGate12C安装前,数据库层面的准备工作!!!

- 给你GoldenGate12C的三种部署方式,看你喜欢哪种部署方式!!!

- 配置支持DDL操作的实时数据同步!!!

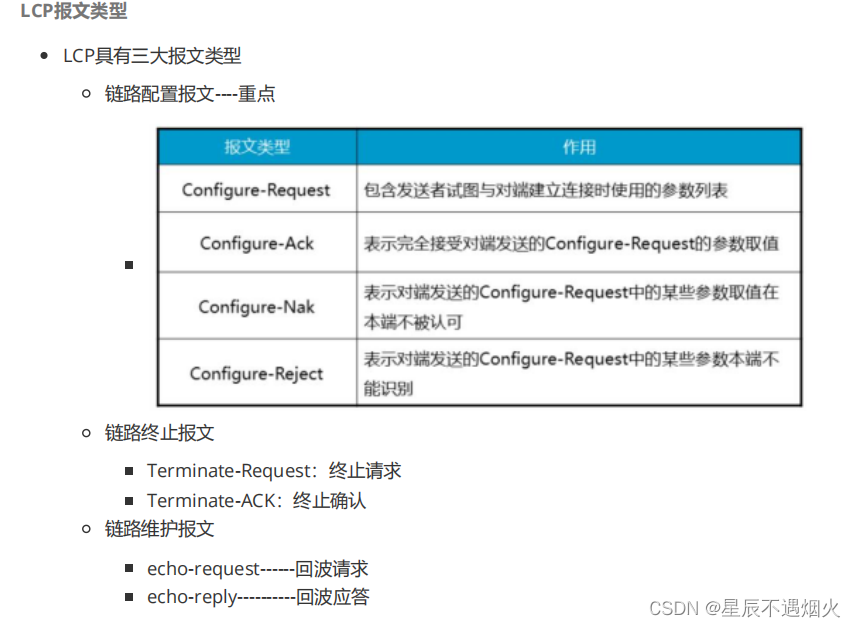

八、配置支持DDL同步操作

在源库执行,根据提示输入ogg管理账户:ogg

[oracle@source ogg12]$ cd $GGHOME

[oracle@source ogg12]$ sqlplus / as sysdba

执行如下脚本:

1、@marker_setup.sql

SQL> @marker_setup.sql

Marker setup script

You will be prompted for the name of a schema for the Oracle GoldenGate database objects.

NOTE: The schema must be created prior to running this script.

NOTE: Stop all DDL replication before starting this installation.

Enter Oracle GoldenGate schema name:OGG

Marker setup table script complete, running verification script...

Please enter the name of a schema for the GoldenGate database objects:

Setting schema name to OGG

MARKER TABLE

-------------------------------

OK

MARKER SEQUENCE

-------------------------------

OK

Script complete.

2、@ddl_setup.sql

该脚本创建了进行DDL抽取所需要的触发器和包。

主要提示:执行此脚本时,所有发出DDL的会话都必须关闭并重新连接,否则可能会发生错误6508(找不到被调用的程序单元)并且DDL操作可能会失败。这是因为一个已知的Oracle bug#2747350。

未关闭的DDL会话会以列表的形式显示出来。

3、@role_setup.sql

该脚本删除并且重建DDL同步所需要的角色,它授权管理账户对DDL对象上的DML权限

根据提示执行如下授权操作:

Grant GGS_GGSUSER_ROLE to ogg;

4、@ddl_enable.sql

改脚本创建DDL触发器,以捕获DDL操作,用于向marker和history表插入DDL信息

5、@marker_status.sql

验证ddl安装

如下即正常安装ddl支持

SQL> @marker_status.sql

Please enter the name of a schema for the GoldenGate database objects:

OGG

Setting schema name to OGG

MARKER TABLE

-------------------------------

OK

MARKER SEQUENCE

-------------------------------

OK

九、配置主库到备库同步(支持DDL)

需求:将源端scott用户下的emp,dept 表通过ogg同步到目的端 ,逻辑同生产ODS

1、源端配置

MGR进程配置(源端和目的端都做下面操作,添加的参数也一样):

GGSCI (source) 6> info all

Program Status Group Lag at Chkpt Time Since Chkpt

MANAGER RUNNING

GGSCI (source) 7> edit params mgr

--加入下面内容

PORT 7809

DYNAMICPORTLIST 7810-7860

AUTORESTART ER *, RETRIES 3, WAITMINUTES 5

PURGEOLDEXTRACTS ./dirdat/*, USECHECKPOINTS, MINKEEPDAYS 30

lagreporthours 1

laginfominutes 30

lagcriticalminutes 60

--保存退出后,重启管理进程,让配置生效:

GGSCI (source) 8> stop mgr

Manager process is required by other GGS processes.

Are you sure you want to stop it (y/n)?y

Sending STOP request to MANAGER ...

Request processed.

Manager stopped.

GGSCI (source) 9> start mgr

Manager started.

GGSCI (source) 10> info all

Program Status Group Lag at Chkpt Time Since Chkpt

MANAGER RUNNING

2、源端新增抽取进程配置

GGSCI (source) 12> edit params e_sc

--加入如下内容:

extract e_sc

userid ogg,password ogg

setenv(NLS_LANG=AMERICAN_AMERICA.AL32UTF8)

setenv(ORACLE_SID="orcl")

reportcount every 30 minutes,rate

numfiles 5000

discardfile ./dirrpt/e_sc.dsc,append,megabytes 1000

warnlongtrans 2h,checkinterval 30m

exttrail ./dirdat/sc

dboptions allowunusedcolumn

tranlogoptions archivedlogonly

tranlogoptions altarchivelogdest primary /u01/arch

ddl include mapped

ddloptions addtrandata,report

notcpsourcetimer

nocompressupdates

fetchoptions USEROWID

NOCOMPRESSDELETES

----------scott.EMP

table SCOTT.EMP,tokens(

TKN-CSN = @GETENV('TRANSACTION', 'CSN'),

TKN-COMMIT-TS = @GETENV ('GGHEADER', 'COMMITTIMESTAMP'),

TKN-OP-TYPE = @GETENV ('GGHEADER', 'OPTYPE')

);

----------SCOTT.DEPT

table SCOTT.DEPT,tokens(

TKN-CSN = @GETENV('TRANSACTION', 'CSN'),

TKN-COMMIT-TS = @GETENV ('GGHEADER', 'COMMITTIMESTAMP'),

TKN-OP-TYPE = @GETENV ('GGHEADER', 'OPTYPE')

);

3、源端配置投递进程配置

GGSCI (source) 13>edit params d_sc

--加入如下内容:

extract d_sc

rmthost 192.168.1.10,mgrport 7809,compress

userid ogg,password ogg

PASSTHRU

numfiles 5000

rmttrail ./dirdat/sc

dynamicresolution

table scott.*;

4、源端增加抽取进程

GGSCI (source) 16> add extract e_sc,tranlog,begin now

EXTRACT added.

GGSCI (source) 17> add exttrail ./dirdat/sc,extract e_sc,megabytes 500

EXTTRAIL added.

5、源端增加投递进程

add extract d_sc,exttrailsource ./dirdat/sc

add rmttrail ./dirdat/sc,extract d_sc,megabytes 500

6、源端添加表级别附加日志

GGSCI (source) 40> dblogin userid ogg,password ogg

Successfully logged into database.

GGSCI (source as ogg@orcl) 41> add trandata SCOTT.EMP

Logging of supplemental redo data enabled for table SCOTT.EMP.

TRANDATA for scheduling columns has been added on table 'SCOTT.EMP'.

TRANDATA for instantiation CSN has been added on table 'SCOTT.EMP'.

GGSCI (source as ogg@orcl) 42>

GGSCI (source as ogg@orcl) 42> add trandata SCOTT.DEPT

Logging of supplemental redo data enabled for table SCOTT.DEPT.

TRANDATA for scheduling columns has been added on table 'SCOTT.DEPT'.

TRANDATA for instantiation CSN has been added on table 'SCOTT.DEPT'.

GGSCI (source as ogg@orcl) 43> info trandata SCOTT.EMP

Logging of supplemental redo log data is enabled for table SCOTT.EMP.

Columns supplementally logged for table SCOTT.EMP: EMPNO.

Prepared CSN for table SCOTT.EMP: 1108355

7、启动Extract和PUMP进程

GGSCI (source) 38> start er *

Sending START request to MANAGER ...

EXTRACT D_SC starting

Sending START request to MANAGER ...

EXTRACT E_SC starting

GGSCI (source) 39> info all

Program Status Group Lag at Chkpt Time Since Chkpt

MANAGER RUNNING

EXTRACT RUNNING D_SC 00:00:00 00:00:04

EXTRACT RUNNING E_SC 00:00:00 00:00:04

8、目的端编辑REPLICAT进程配置

GGSCI (ogg) 1> edit params mgr

---加入下面配置

replicat r_sc

setenv(NLS_LANG=AMERICAN_AMERICA.UTF8)

userid ogg,password ogg

reportcount every 30 minutes,rate

reperror default,abend

numfiles 20000

checkpointsecs 40

assumetargetdefs

discardfile ./dirrpt/r_sc.dsc,append,megabytes 1000

allownoopupdates

ddl &

include mapped &

exclude objname scott.*_audit &

exclude optype create &

objtype 'table' &

exclude optype drop &

objtype 'table' &

exclude objtype 'index' &

objname scott.*_his &

exclude instr 'constraint' &

--exclude instr 'null' &

exclude instr 'trigger' &

exclude instr 'rename to' &

exclude instr 'grant' &

exclude instr 'revoke' &

exclude instr 'analyze'

ddloptions report

allowduptargetmap

----------EMP

getinserts

getupdates

getdeletes

noupdatedeletes

map SCOTT.EMP,target SCOTT.EMP,keycols(EMPNO),colmap(

usedefaults,

etltime=@DATENOW()

);

ignoreinserts

ignoreupdates

getdeletes

INSERTDELETES

map SCOTT.EMP,target SCOTT.EMP_HIS,keycols(EMPNO),colmap(

usedefaults,

etltime=@DATENOW()

);

NOINSERTDELETES

updatedeletes

getinserts

getupdates

getdeletes

map SCOTT.EMP,target SCOTT.EMP_AUDIT,keycols(EMPNO),colmap(

EMPNO=@if(@strfind(@token('tkn-op-type'),'PK UPDATE')>0,before.EMPNO,EMPNO),

csn=@token('tkn-csn'),

optime=@token('tkn-commit-ts'),

optype=@if(@strfind(@token('tkn-op-type'),'PK UPDATE')>0,'DELETE',@token('tkn-op-type')),

inserttime=@eval(@strfind(@token('tkn-op-type'),'INSERT')>0,@token('tkn-commit-ts')),

curdate=@DATENOW()

);

ignoreinserts

getupdates

ignoredeletes

map SCOTT.EMP,target SCOTT.EMP_AUDIT,keycols(EMPNO),colmap(

usedefaults,

csn=@token('tkn-csn'),

optime=@token('tkn-commit-ts'),

optype=@case(@token('tkn-op-type'),'PK UPDATE','INSERT'),

inserttime=@token('tkn-commit-ts'),

curdate=@DATENOW()

),filter(@strfind(@token('tkn-op-type'),'PK UPDATE') >0),insertallrecords;

----------DEPT

getinserts

getupdates

getdeletes

noupdatedeletes

map SCOTT.DEPT,target SCOTT.DEPT,keycols(DEPTNO),colmap(

usedefaults,

etltime=@DATENOW()

);

ignoreinserts

ignoreupdates

getdeletes

INSERTDELETES

map SCOTT.DEPT,target SCOTT.DEPT_HIS,keycols(DEPTNO),colmap(

usedefaults,

etltime=@DATENOW()

);

NOINSERTDELETES

updatedeletes

getinserts

getupdates

getdeletes

map SCOTT.DEPT,target SCOTT.DEPT_AUDIT,keycols(DEPTNO),colmap(

DEPTNO=@if(@strfind(@token('tkn-op-type'),'PK UPDATE')>0,before.DEPTNO,DEPTNO),

csn=@token('tkn-csn'),

optime=@token('tkn-commit-ts'),

optype=@if(@strfind(@token('tkn-op-type'),'PK UPDATE')>0,'DELETE',@token('tkn-op-type')),

inserttime=@eval(@strfind(@token('tkn-op-type'),'INSERT')>0,@token('tkn-commit-ts')),

curdate=@DATENOW()

);

ignoreinserts

getupdates

ignoredeletes

map SCOTT.DEPT,target SCOTT.DEPT_AUDIT,keycols(DEPTNO),colmap(

usedefaults,

csn=@token('tkn-csn'),

optime=@token('tkn-commit-ts'),

optype=@case(@token('tkn-op-type'),'PK UPDATE','INSERT'),

inserttime=@token('tkn-commit-ts'),

curdate=@DATENOW()

),filter(@strfind(@token('tkn-op-type'),'PK UPDATE') >0),insertallrecords;

9、添加应用进程

GGSCI (ogg) 8> dblogin userid ogg,password ogg

Successfully logged into database.

GGSCI (ogg as ogg@ogg) 9> ADD CHECKPOINTTABLE ogg.ckpt

Successfully created checkpoint table ogg.ckpt.

GGSCI (ogg as ogg@ogg) 10> add replicat r_sc,exttrail ./dirdat/sc checkpointtable ogg.ckpt

10、源端导出emp.dept表并传到目的端应用

10.1、源端通过数据泵导出表

[oracle@source ogg12]$ mkdir /u01/exp--创建目录

[oracle@source ogg12]$ sqlplus / as sysdba

SQL*Plus: Release 11.2.0.4.0 Production on Thu Sep 20 18:34:35 2018

Copyright (c) 1982, 2013, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production

With the Partitioning, OLAP, Data Mining and Real Application Testing options

SQL> create directory expdir as '/u01/exp';

Directory created.

SQL> grant read,write on directory expdir to scott;

Grant succeeded.

SQL> grant dba to scott;--这个权限是为了测试方便所以给的dba权限。生产慎用

Grant succeeded.

SQL> set num 50

SQL> select current_scn from v$database;---查询当前scn

CURRENT_SCN

--------------------------------------------------

1108836

SQL> exit

Disconnected from Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production

With the Partitioning, OLAP, Data Mining and Real Application Testing options

[oracle@source ~]$ expdp \' / as sysdba\' directory=EXPDIR dumpfile=expdp_20180920.dmp logfile=expdp_20180920.log tables=SCOTT.EMP,SCOTT.DEPT exclude=ref_constraint flashback_scn=1108836

Export: Release 11.2.0.4.0 - Production on Thu Sep 20 18:45:47 2018

Copyright (c) 1982, 2011, Oracle and/or its affiliates. All rights reserved.

Connected to: Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production

With the Partitioning, OLAP, Data Mining and Real Application Testing options

FLASHBACK automatically enabled to preserve database integrity.

Starting "SYS"."SYS_EXPORT_TABLE_01": "/******** AS SYSDBA" directory=EXPDIR dumpfile=expdp_20180920.dmp logfile=expdp_20180920.log tables=SCOTT.EMP,SCOTT.DEPT exclude=ref_constraint flash

back_scn=1108836 Estimate in progress using BLOCKS method...

Processing object type TABLE_EXPORT/TABLE/TABLE_DATA

Total estimation using BLOCKS method: 128 KB

Processing object type TABLE_EXPORT/TABLE/PROCACT_INSTANCE

Processing object type TABLE_EXPORT/TABLE/TABLE

Processing object type TABLE_EXPORT/TABLE/INDEX/INDEX

Processing object type TABLE_EXPORT/TABLE/CONSTRAINT/CONSTRAINT

Processing object type TABLE_EXPORT/TABLE/INDEX/STATISTICS/INDEX_STATISTICS

Processing object type TABLE_EXPORT/TABLE/STATISTICS/TABLE_STATISTICS

. . exported "SCOTT"."DEPT" 5.929 KB 4 rows

. . exported "SCOTT"."EMP" 8.562 KB 14 rows

Master table "SYS"."SYS_EXPORT_TABLE_01" successfully loaded/unloaded

******************************************************************************

Dump file set for SYS.SYS_EXPORT_TABLE_01 is:

/u01/exp/expdp_20180920.dmp

Job "SYS"."SYS_EXPORT_TABLE_01" successfully completed at Thu Sep 20 18:46:21 2018 elapsed 0 00:00:24

10.2、目的端配置导入目录

[oracle@ogg ~]$ mkdir /u01/imp

[oracle@ogg ~]$ sqlplus / as sysdba

SQL*Plus: Release 11.2.0.4.0 Production on Thu Sep 20 18:34:24 2018

Copyright (c) 1982, 2013, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production

With the Partitioning, OLAP, Data Mining and Real Application Testing options

SQL> create directory expdir as '/u01/exp';

Directory created.

SQL> create directory impdir as '/u01/imp';

Directory created.

SQL> grant read,write on directory impdir to scott;

Grant succeeded.

10.3、将源端导出的dump文件传到目的端

[oracle@source ~]$ scp /u01/exp/expdp_20180920.* 192.168.1.10:/u01/imp/

oracle@192.168.1.10's password:

expdp_20180920.dmp 100% 168KB 168.0KB/s 00:00

expdp_20180920.log

10.4、目标端导入数据到原表

impdp \' / as sysdba\' directory=IMPDIR dumpfile=expdp_20180920.dmp logfile=expdp_20180920.log

10.5、OGG库审计表数据初始化

create table SCOTT.EMP_AUDIT

(

EMPNO NUMBER(4),

CSN NUMBER,

OPTIME TIMESTAMP(6),

OPTYPE VARCHAR2(20),

INSERTTIME TIMESTAMP(6),

CURDATE TIMESTAMP(6)

);

create table SCOTT.DEPT_AUDIT

(

DEPTNO NUMBER(2),

CSN NUMBER,

OPTIME TIMESTAMP(6),

OPTYPE VARCHAR2(20),

INSERTTIME TIMESTAMP(6),

CURDATE TIMESTAMP(6)

);

insert into SCOTT.EMP_AUDIT select EMPNO,1108836,sysdate,'INITIAL',sysdate,'' from SCOTT.EMP;

insert into SCOTT.DEPT_AUDIT select DEPTNO,1108836,sysdate,'INITIAL',sysdate,'' from SCOTT.DEPT;

commit;

10.6、OGG库添加ETLTIME字段

---目标表

alter table scott.emp add etltime timestamp;

alter table scott.dept add etltime timestamp;

---HIS表

alter table scott.emp_HIS add etltime timestamp;

alter table scott.dept_HIS add etltime timestamp;

10.7、OGG库ETLTIME字段数据初始化

----目标表

update scott.emp set etltime=sysdate;

update scott.dept set etltime=sysdate;

commit;

10.8、OGG库添加索引,防止进程lag过高

CREATE INDEX scott.emp_idx ON scott.emp_audit(empno);

CREATE INDEX scott.dept_idx ON scott.dept_audit(deptno);

11、启动应用进程

start replicat r_sc aftercsn 1108836

12、验证

12.1、源端操作

[oracle@source ~]$ sqlplus scott/tiger

SQL*Plus: Release 11.2.0.4.0 Production on Thu Sep 20 19:11:57 2018

Copyright (c) 1982, 2013, Oracle. All rights reserved.

Connected to:

Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production

With the Partitioning, OLAP, Data Mining and Real Application Testing options

SQL> alter table dept add test number;

Table altered.

SQL> insert into dept values(50,'a','a','1');

1 row created.

SQL> alter table dept drop column test;

Table altered.

SQL> alter system switch logfile;

System altered.

SQL> alter system switch logfile;

alter system switch logfile;

System altered.

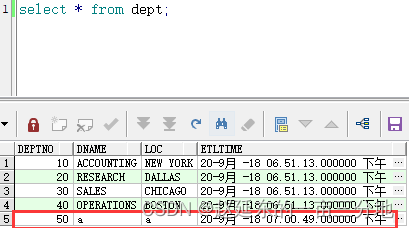

12.2、目的端查看

看一下日志,DDL也正常应用了:

2018-09-20 19:00:49 INFO OGG-06510 Using the following key columns for target table SCOTT.DEPT_AUDIT: DEPTNO.

2018-09-20 19:00:49 INFO OGG-03010 Performing implicit conversion of column data from character set UTF-8 to zhs16gbk.

2018-09-20 19:00:49 INFO OGG-00482 DDL found, operation [alter table dept drop column test (size 34)].

2018-09-20 19:00:49 INFO OGG-00489 DDL is of mapped scope, after mapping new operation [alter table "SCOTT"."DEPT" drop column test (size 44)].

2018-09-20 19:00:49 INFO OGG-00487 DDL operation included [include mapped], optype [ALTER], objtype [TABLE], objowner [SCOTT], objname [DEPT].

2018-09-20 19:00:49 INFO OGG-01407 Setting current schema for DDL operation to [SCOTT].

2018-09-20 19:00:49 INFO OGG-00484 Executing DDL operation.

2018-09-20 19:00:50 INFO OGG-00483 DDL operation successful.

2018-09-20 19:00:50 INFO OGG-01408 Restoring current schema for DDL operation to [OGG].

2018-09-20 19:00:50 INFO OGG-00489 DDL is of mapped scope, after mapping new operation [alter table "SCOTT"."DEPT_HIS" drop column test /* GOLDENGATE_DDL_REPLICATION */ (size 81)].

2018-09-20 19:00:50 INFO OGG-00487 DDL operation included [include mapped], optype [ALTER], objtype [TABLE], objowner [SCOTT], objname [DEPT_HIS].

2018-09-20 19:00:50 INFO OGG-01407 Setting current schema for DDL operation to [SCOTT].

2018-09-20 19:00:50 INFO OGG-00484 Executing DDL operation.

2018-09-20 19:00:50 INFO OGG-00483 DDL operation successful.

2018-09-20 19:00:50 INFO OGG-01408 Restoring current schema for DDL operation to [OGG].

2018-09-20 19:00:50 INFO OGG-00489 DDL is of mapped scope, after mapping new operation [alter table "SCOTT"."DEPT_AUDIT" drop column test /* GOLDENGATE_DDL_REPLICATION */ (size 83)].

2018-09-20 19:00:50 INFO OGG-00488 DDL operation excluded [exclude objname scott.*_audit], optype [ALTER], objtype [TABLE], objowner [SCOTT], objname [DEPT_AUDIT].

总结

我用了三个章节介绍完了生产环境下如何实时异构同步Oracle->Oracle,且其中包括了如何初始化以及增量应该从哪个时间点开始才能够保证数据不重复、不丢失,如果有用到的各位可以作为参考!!!

![shell文件通配符:任意一个:?、任意数量:*、任意包含[]、[^]:任意不包含](https://img-blog.csdnimg.cn/1444031bf1ad4b23858cecb12fc3d275.png)