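This article implements vehicle counting for one-way traffic using traditional OpenCV image processing. The video used for vehicle detection was downloaded from https://www.bilibili.com/video/BV1uS4y1v7qN/?spm_id_from=333.337.search-card.all.click.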

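Approach 1: taken from a Bilibili tutorial. The idea is to pick a long, narrow strip in the video and, for every frame, count how many detection-box centers fall inside the strip, summing these counts over all frames. In practice the method is sensitive to where the strip is placed and how tall it is, and the counting accuracy is poor.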

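The implementation steps are as follows:

Step 1: read each video frame and preprocess it with Gaussian filtering, thresholding, and so on;

Step 2: apply background subtraction, using an algorithm such as MOG or KNN;

Step 3: apply morphological operations;

Step 4: extract the contours of the vehicle connected components;

Step 5: compute the center of each connected component's bounding box, and increment the count if it falls inside the chosen strip.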

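The approach is easy to follow and relies heavily on traditional image processing methods, which makes it good practice for beginners.

One possible implementation is shown below: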

    #include <iostream>
    #include <string>
    #include <vector>
    #include <opencv2/opencv.hpp>

    const int IMAGE_WIDTH = 1280;
    const int IMAGE_HEIGHT = 720;
    const int LINE_HEIGHT = IMAGE_HEIGHT / 2;  // y coordinate of the counting line
    const int MIN_CAR_WIDTH = 80;              // smaller boxes are ignored
    const int MIN_CAR_HEIGHT = 40;
    const int OFFSET = 6;                      // half-height of the counting strip
    const int KSIZE = 9;                       // kernel size for blur and morphology

    // Compute the center of a detection box
    cv::Point get_center(cv::Rect detection)
    {
        return cv::Point(detection.x + detection.width / 2, detection.y + detection.height / 2);
    }

    int main(int argc, char** argv)
    {
        cv::VideoCapture capture("test.mp4");
        if (!capture.isOpened())
        {
            std::cerr << "failed to open test.mp4" << std::endl;
            return -1;
        }

        cv::Mat frame, image;
        cv::Mat kernel = cv::getStructuringElement(cv::MORPH_RECT, cv::Size(KSIZE, KSIZE));
        int frame_num = 0;
        int count = 0;
        // KNN background subtractor: history = 5 frames, dist2Threshold = 50
        cv::Ptr<cv::BackgroundSubtractor> mogSub = cv::createBackgroundSubtractorKNN(5, 50);

        // waitKey returns -1 while no key is pressed, so any key press stops the loop
        while (cv::waitKey(20) < 0)
        {
            capture >> frame;
            if (frame.empty())
                break;
            frame_num++;

            // Step 1: smoothing and thresholding
            cv::GaussianBlur(frame, image, cv::Size(KSIZE, KSIZE), KSIZE);
            cv::threshold(image, image, 100, 255, cv::THRESH_BINARY);

            // Step 2: background subtraction; image becomes a single-channel foreground mask
            mogSub->apply(image, image);
            if (frame_num < 5)
                continue;  // skip the first frames while the background model settles

            // Step 3: morphology to remove noise and fill holes
            cv::morphologyEx(image, image, cv::MORPH_ERODE, kernel);
            cv::morphologyEx(image, image, cv::MORPH_DILATE, kernel, cv::Point(-1, -1), 3);
            cv::morphologyEx(image, image, cv::MORPH_CLOSE, kernel);
            cv::morphologyEx(image, image, cv::MORPH_CLOSE, kernel);

            // Step 4: outer contours of the foreground blobs
            std::vector<cv::Vec4i> hierarchy;
            std::vector<std::vector<cv::Point>> contours;
            cv::findContours(image, contours, hierarchy, cv::RETR_EXTERNAL, cv::CHAIN_APPROX_NONE);

            // Step 5: count box centers that fall inside the strip around the line
            for (size_t i = 0; i < contours.size(); ++i)
            {
                cv::Rect rect = cv::boundingRect(cv::Mat(contours[i]));
                if (rect.width < MIN_CAR_WIDTH || rect.height < MIN_CAR_HEIGHT)
                    continue;

                // Test each center exactly once per frame; looping over all centers
                // collected so far would count earlier boxes of this frame again
                cv::Point center_point = get_center(rect);
                if (center_point.y > LINE_HEIGHT - OFFSET && center_point.y < LINE_HEIGHT + OFFSET)
                    count++;

                cv::rectangle(image, rect, cv::Scalar(255, 0, 0), 1);
            }

            // Convert the mask to BGR so the overlay can be drawn in color
            cv::cvtColor(image, image, cv::COLOR_GRAY2BGR);
            cv::putText(image, "car num: " + std::to_string(count), cv::Point(20, 50), cv::FONT_HERSHEY_SIMPLEX, 0.7, cv::Scalar(0, 0, 255), 1);
            cv::line(image, cv::Point(0, LINE_HEIGHT), cv::Point(IMAGE_WIDTH, LINE_HEIGHT), cv::Scalar(0, 0, 255));
            cv::imshow("output", image);
        }

        capture.release();
        return 0;
    }

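A total of 52 vehicles travel upward in the video, but this algorithm reported 60, which is a sizable error. Since the result is mediocre, no animated demo is shown.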
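One plausible contributor to this kind of error is that a slow vehicle's center can stay inside the strip for several consecutive frames, while a fast one can jump over the strip between two frames. The sketch below illustrates the effect with made-up center trajectories. `in_strip` and `strip_hits` are hypothetical helpers written for this illustration (they are not part of the program above), and the values 360 and 6 mirror `LINE_HEIGHT` and `OFFSET`.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper: true if a center's y coordinate lies strictly inside
// the strip (line_y - offset, line_y + offset), the same test as in Step 5.
bool in_strip(int center_y, int line_y, int offset)
{
    assert(offset >= 0);
    return center_y > line_y - offset && center_y < line_y + offset;
}

// Hypothetical helper: number of frames in which ONE vehicle's center lies
// inside the strip. center_y_per_frame holds that vehicle's center y in
// consecutive frames. Region counting adds this many to the total.
int strip_hits(const std::vector<int>& center_y_per_frame, int line_y, int offset)
{
    int hits = 0;
    for (int y : center_y_per_frame)
    {
        if (in_strip(y, line_y, offset))
            ++hits;
    }
    return hits;
}
```

For example, `strip_hits({372, 368, 364, 360, 356, 352}, 360, 6)` gives 3 for a vehicle moving 4 pixels per frame, whereas `strip_hits({370, 350}, 360, 6)` gives 0 for one moving 20 pixels per frame. Only a trajectory with exactly one sample inside the strip is counted once.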

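Approach 2: identical to Approach 1 except for Step 5, where counting centers that fall inside a strip is replaced by line-crossing counting.

The line-crossing method works as follows:

(1) Compute the centers of the detection boxes in the initial frame;

(2) Read the following frames and compute the distance matrix between the centers of the new detections and the centers of the detection boxes in the previous frame;

(3) Use the distance matrix to establish the correspondence between new and old detection boxes;

(4) For each matched pair, take the segment joining the old and new centers and test whether it intersects the preset virtual line; if it does, increment the count.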
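Steps (2) to (4) can be sketched without OpenCV as follows. This is a minimal illustration under stated assumptions rather than a definitive implementation: `Pt`, `center_distance`, `nearest_old`, `crosses_line`, and `count_crossings` are hypothetical names, `Pt` stands in for `cv::Point`, and `max_dist` is an assumed matching threshold.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt { int x; int y; };  // stand-in for cv::Point (assumption)

// Step (2): Euclidean distance between two centers.
double center_distance(Pt a, Pt b)
{
    return std::hypot(static_cast<double>(a.x - b.x), static_cast<double>(a.y - b.y));
}

// Step (3): index of the nearest previous center, or -1 if none is closer
// than max_dist (also covers an empty previous frame).
int nearest_old(Pt p, const std::vector<Pt>& old_centers, double max_dist)
{
    int best = -1;
    double best_dist = max_dist;
    for (std::size_t j = 0; j < old_centers.size(); ++j)
    {
        double d = center_distance(p, old_centers[j]);
        if (d < best_dist)
        {
            best_dist = d;
            best = static_cast<int>(j);
        }
    }
    return best;
}

// Step (4): the segment old -> new crosses the horizontal line y = line_y
// when the two end points lie on different sides of it.
bool crosses_line(Pt old_p, Pt new_p, int line_y)
{
    return (old_p.y <= line_y) != (new_p.y <= line_y);
}

// Count matched pairs whose connecting segment crosses the line.
int count_crossings(const std::vector<Pt>& old_centers,
                    const std::vector<Pt>& new_centers,
                    int line_y, double max_dist)
{
    assert(max_dist > 0.0);
    int count = 0;
    for (Pt p : new_centers)
    {
        int j = nearest_old(p, old_centers, max_dist);
        if (j >= 0 && crosses_line(old_centers[j], p, line_y))
            ++count;
    }
    return count;
}
```

With previous centers `{100, 370}` and `{400, 300}`, new centers `{102, 355}`, `{401, 290}`, and `{900, 100}`, a line at `y = 360`, and `max_dist = 72`, only the first pair straddles the line, so `count_crossings` returns 1. The third new center has no previous center within range and is left unmatched.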

One possible implementation is shown below:

    #include <algorithm>
    #include <cmath>
    #include <iostream>
    #include <string>
    #include <vector>
    #include <opencv2/opencv.hpp>

    const int IMAGE_WIDTH = 1280;
    const int IMAGE_HEIGHT = 720;
    const int LINE_HEIGHT = IMAGE_HEIGHT / 2;  // y coordinate of the virtual line
    const int MIN_CAR_WIDTH = 80;
    const int MIN_CAR_HEIGHT = 40;
    const int KSIZE = 9;

    // Compute the centers of the detection boxes
    std::vector<cv::Point> get_centers(const std::vector<cv::Rect>& detections)
    {
        std::vector<cv::Point> detections_centers(detections.size());
        for (size_t i = 0; i < detections.size(); i++)
        {
            detections_centers[i] = cv::Point(detections[i].x + detections[i].width / 2, detections[i].y + detections[i].height / 2);
        }
        return detections_centers;
    }

    // Euclidean distance between two points
    float get_distance(cv::Point p1, cv::Point p2)
    {
        return static_cast<float>(std::sqrt(std::pow(p1.x - p2.x, 2) + std::pow(p1.y - p2.y, 2)));
    }

    // Check whether the segment joining matched box centers from two frames crosses the red line
    bool is_cross(cv::Point p1, cv::Point p2)
    {
        return (p1.y <= LINE_HEIGHT && p2.y > LINE_HEIGHT) || (p1.y > LINE_HEIGHT && p2.y <= LINE_HEIGHT);
    }

    int main(int argc, char** argv)
    {
        cv::VideoCapture capture("test.mp4");
        if (!capture.isOpened())
        {
            std::cerr << "failed to open test.mp4" << std::endl;
            return -1;
        }

        cv::Mat frame, image;
        cv::Mat kernel = cv::getStructuringElement(cv::MORPH_RECT, cv::Size(KSIZE, KSIZE));
        int frame_num = 0;
        int count = 0;
        std::vector<cv::Point> detections_center_old;
        std::vector<cv::Point> detections_center_new;
        // KNN background subtractor: history = 5 frames, dist2Threshold = 50
        cv::Ptr<cv::BackgroundSubtractor> mogSub = cv::createBackgroundSubtractorKNN(5, 50);

        // waitKey returns -1 while no key is pressed, so any key press stops the loop
        while (cv::waitKey(20) < 0)
        {
            capture >> frame;
            if (frame.empty())
                break;
            frame_num++;

            // Same preprocessing, background subtraction, and morphology as in Approach 1
            cv::GaussianBlur(frame, image, cv::Size(KSIZE, KSIZE), KSIZE);
            cv::threshold(image, image, 100, 255, cv::THRESH_BINARY);
            mogSub->apply(image, image);
            if (frame_num < 6)
                continue;  // skip the first frames while the background model settles

            cv::morphologyEx(image, image, cv::MORPH_ERODE, kernel);
            cv::morphologyEx(image, image, cv::MORPH_DILATE, kernel, cv::Point(-1, -1), 3);
            cv::morphologyEx(image, image, cv::MORPH_CLOSE, kernel);
            cv::morphologyEx(image, image, cv::MORPH_CLOSE, kernel);

            std::vector<cv::Vec4i> hierarchy;
            std::vector<std::vector<cv::Point>> contours;
            cv::findContours(image, contours, hierarchy, cv::RETR_EXTERNAL, cv::CHAIN_APPROX_NONE);

            std::vector<cv::Rect> detections;
            for (size_t i = 0; i < contours.size(); ++i)
            {
                cv::Rect detection = cv::boundingRect(cv::Mat(contours[i]));
                detections.push_back(detection);
                // Draw only boxes whose bottom edge is below the line
                if (detection.y + detection.height > LINE_HEIGHT)
                {
                    cv::rectangle(frame, detection, cv::Scalar(255, 0, 0), 1);
                }
            }

            if (frame_num == 6)
            {
                // (1) centers of the initial detection boxes
                detections_center_old = get_centers(detections);
    #ifdef DEBUG
                std::cout << "detections_center:" << std::endl;
                for (size_t i = 0; i < detections_center_old.size(); i++)
                {
                    std::cout << detections_center_old[i] << std::endl;
                }
    #endif // DEBUG
            }
            else if (frame_num % 2 == 0)
            {
                detections_center_new = get_centers(detections);
    #ifdef DEBUG
                std::cout << "detections_center:" << std::endl;
                for (size_t i = 0; i < detections_center_new.size(); i++)
                {
                    std::cout << detections_center_new[i] << std::endl;
                }
    #endif // DEBUG

                // (2) distance matrix: rows are new centers, columns are old centers
                std::vector<std::vector<float>> distance_matrix(detections_center_new.size(), std::vector<float>(detections_center_old.size()));
                for (size_t i = 0; i < detections_center_new.size(); i++)
                {
                    for (size_t j = 0; j < detections_center_old.size(); j++)
                    {
                        distance_matrix[i][j] = get_distance(detections_center_new[i], detections_center_old[j]);
                    }
                }

    #ifdef DEBUG
                std::cout << "min_index:" << std::endl;
    #endif // DEBUG
                // (3) for each new center, index of the nearest old center; -1 means no match
                std::vector<int> min_indices(detections_center_new.size(), -1);
                for (size_t i = 0; i < detections_center_new.size(); i++)
                {
                    const std::vector<float>& distance_vector = distance_matrix[i];
                    // The row is empty when the previous frame had no boxes;
                    // min_element would then return end(), which must not be dereferenced
                    if (!distance_vector.empty())
                    {
                        auto min_it = std::min_element(distance_vector.begin(), distance_vector.end());
                        // Accept the nearest old box only if it is close enough
                        if (*min_it < LINE_HEIGHT / 5)
                            min_indices[i] = static_cast<int>(min_it - distance_vector.begin());
                    }
    #ifdef DEBUG
                    std::cout << min_indices[i] << " ";
    #endif // DEBUG
                }
    #ifdef DEBUG
                std::cout << std::endl;
    #endif // DEBUG

                // (4) count matched pairs whose connecting segment crosses the line
                for (size_t i = 0; i < detections_center_new.size(); i++)
                {
                    if (min_indices[i] < 0)
                        continue;
                    cv::Point p1 = detections_center_new[i];
                    cv::Point p2 = detections_center_old[min_indices[i]];
    #ifdef DEBUG
                    std::cout << p1 << " " << p2 << std::endl;
    #endif // DEBUG
                    if (is_cross(p1, p2))
                    {
    #ifdef DEBUG
                        std::cout << "is_cross" << p1 << " " << p2 << std::endl;
    #endif // DEBUG
                        count++;
                    }
                }
                detections_center_old = detections_center_new;
            }

            cv::putText(frame, "car num: " + std::to_string(count), cv::Point(20, 50), cv::FONT_HERSHEY_SIMPLEX, 2, cv::Scalar(0, 0, 255), 2);
            cv::line(frame, cv::Point(0, LINE_HEIGHT), cv::Point(IMAGE_WIDTH, LINE_HEIGHT), cv::Scalar(0, 0, 255));
            cv::imshow("output", frame);
        }

        capture.release();
        return 0;
    }

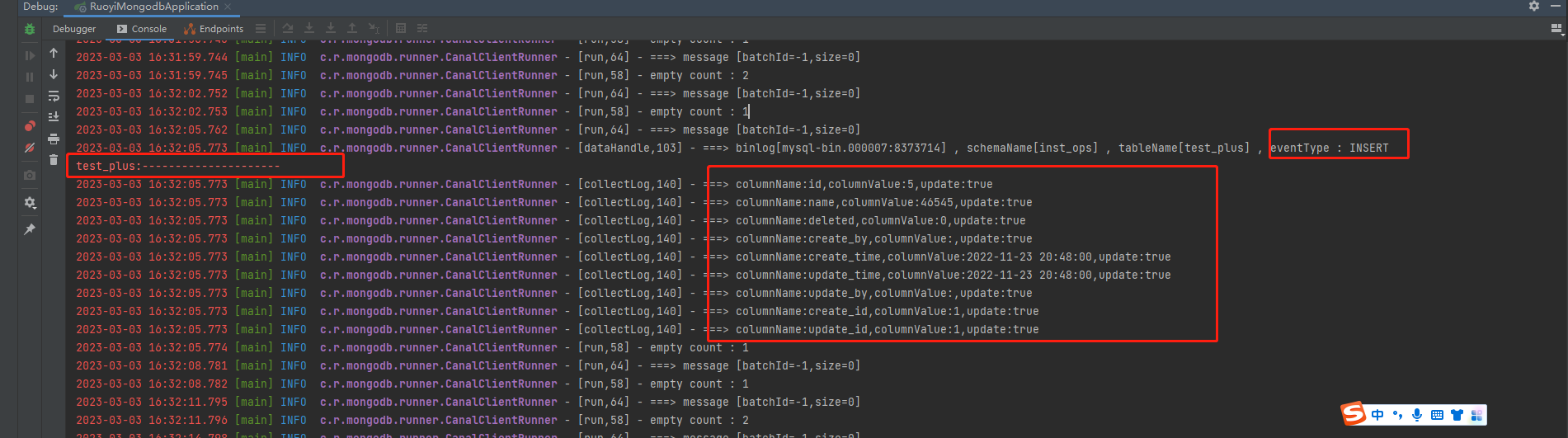

效果展示如下:

可以看到检测框并不准确,会偏大偏小,还会和主体一分为二,这和传统算法预处理的局限性有关,即不具备普适性和鲁棒性。改进后的思路二检出55辆车,比思路一有一定提升。
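Because the ground truth of 52 refers to vehicles moving upward, one option worth trying is a crossing test that ignores downward motion. Whether this would remove any of the extra counts is an assumption that has not been verified here, and `crosses_upward` is a hypothetical helper, not part of the program above.

```cpp
// Hypothetical variant of the crossing test that counts upward motion only.
// Image y grows downward, so a vehicle moving up the frame has a decreasing y:
// it crosses the line when it was below it (old_y > line_y) and is now on or
// above it (new_y <= line_y).
constexpr bool crosses_upward(int old_y, int new_y, int line_y)
{
    return old_y > line_y && new_y <= line_y;
}
```

With the line at `y = 360`, `crosses_upward(370, 355, 360)` is true, while the reverse motion `crosses_upward(355, 370, 360)` is false, so a box drifting back down across the line is not counted.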

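In the article "两百行C++代码实现yolov5车辆计数部署(通俗易懂版)" (YOLOv5 vehicle counting deployed in two hundred lines of C++, plain-language edition), we can see how much a powerful deep learning tool improves the accuracy of vehicle counting.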