欢迎来到课程5的第一个作业!在此作业中,你将使用numpy实现你的第一个循环神经网络。

循环神经网络(RNN)在解决自然语言处理和其他序列任务上非常有效,因为它们具有“记忆”,可以一次读取一个输入 x ⟨ t ⟩ x^{\langle t \rangle} x⟨t⟩(例如单词),并通过从一个时间步传递到下一个时间步的隐藏层激活来记住一些信息/上下文。这使得单向RNN可以提取过去的信息以处理之后的输入。双向RNN则可以借鉴过去和未来的上下文信息。

符号:

- 上标

[

l

]

[l]

[l]表示与

l

t

h

l^{th}

lth层关联的对象。

- 例如: a [ 4 ] a^{[4]} a[4]是 4 t h 4^{th} 4th层激活。 W [ 5 ] W^{[5]} W[5]和 b [ 5 ] b^{[5]} b[5]是 5 t h 5^{th} 5th层参数。 - 上标

(

i

)

(i)

(i)表示与

i

t

h

i^{th}

ith示例关联的对象。

- 示例: x ( i ) x^{(i)} x(i)是 i t h i^{th} ith训练示例输入。 - 上标

⟨

t

⟩

\langle t \rangle

⟨t⟩表示在

t

t

h

t^{th}

tth时间步的对象。

- 示例: x ⟨ t ⟩ x^{\langle t \rangle} x⟨t⟩是在 t t h t^{th} tth时间步的输入x。 x ( i ) ⟨ t ⟩ x^{(i)\langle t \rangle} x(i)⟨t⟩是示例 i i i的 t t h t^{th} tth时间步的输入。 - 下标

I

I

I表示向量的

i

t

h

i^{th}

ith条目。

- 示例: a i [ l ] a^{[l]}_i ai[l]表示层 l l l中激活的 i t h i^{th} ith条目。

我们假设你已经熟悉numpy或者已经完成了之前的专业课程。让我们开始吧!

评论

In [3]:

cd /home/kesci/input/deeplearning131883

/home/kesci/input/deeplearning131883

首先导入在作业过程中需要用到的所有软件包。

In [4]:

import numpy as np

from rnn_utils import *

1 循环神经网络的正向传播

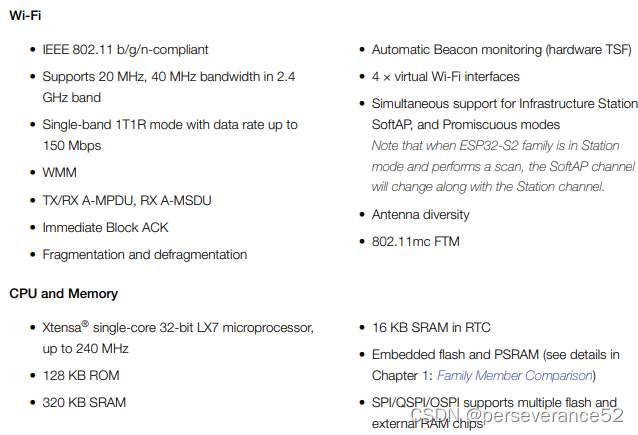

在本周之后的作业,你将使用RNN生成音乐。你将实现的基本RNN具有以下结构。在此示例中, T x = T y T_x = T_y Tx=Ty。

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-wQGA5Vvx-1667651367211)(L5W1%E4%BD%9C%E4%B8%9A1%20%E6%89%8B%E6%8A%8A%E6%89%8B%E5%AE%9E%E7%8E%B0%E5%BE%AA%E7%8E%AF%E7%A5%9E%E7%BB%8F%E7%BD%91%E7%BB%9C.assets/960.png)]](https://img-blog.csdnimg.cn/b41dfea41ef140839f3afef75eed1672.png)

图1 :基础RNN模型

这是实现RNN的方法:

步骤:

- 实现RNN的一个时间步所需的计算。

- 在 T x T_x Tx个时间步上实现循环,以便一次处理所有输入。

让我们开始吧!

1.1 RNN单元

循环神经网络可以看作是单个cell的重复。你首先要在单个时间步上实现计算。下图描述了RNN单元的单个时间步的操作。

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-cXBdvPwH-1667651367213)(L5W1%E4%BD%9C%E4%B8%9A1%20%E6%89%8B%E6%8A%8A%E6%89%8B%E5%AE%9E%E7%8E%B0%E5%BE%AA%E7%8E%AF%E7%A5%9E%E7%BB%8F%E7%BD%91%E7%BB%9C.assets/960-1667291444257-1.png)]](https://img-blog.csdnimg.cn/33f80668723a442681f1fec7bc406e33.png)

图2:基础RNN单元,将 x ⟨ t ⟩ x^{\langle t \rangle} x⟨t⟩(当前输入)和 a ⟨ t − 1 ⟩ a^{\langle t - 1\rangle} a⟨t−1⟩(包含过去信息的前隐藏状态)作为输入,并输出 a ⟨ t ⟩ a^{\langle t \rangle} a⟨t⟩给下一个RNN单元,用于预测 y ⟨ t ⟩ y^{\langle t \rangle} y⟨t⟩

练习:实现图(2)中描述的RNN单元。

说明:

- 使用tanh激活计算隐藏状态: a ⟨ t ⟩ = tanh ( W a a a ⟨ t − 1 ⟩ + W a x x ⟨ t ⟩ + b a ) a^{\langle t \rangle} = \tanh(W_{aa} a^{\langle t-1 \rangle} + W_{ax} x^{\langle t \rangle} + b_a) a⟨t⟩=tanh(Waaa⟨t−1⟩+Waxx⟨t⟩+ba)。

- 使用新的隐藏状态

a

⟨

t

⟩

a^{\langle t \rangle}

a⟨t⟩,计算预测

y

^

⟨

t

⟩

=

s

o

f

t

m

a

x

(

W

y

a

a

⟨

t

⟩

+

b

y

)

\hat{y}^{\langle t \rangle} = softmax(W_{ya} a^{\langle t \rangle} + b_y)

y^⟨t⟩=softmax(Wyaa⟨t⟩+by)。我们为你提供了一个函数:

softmax。 - 将 ( a ⟨ t ⟩ , a ⟨ t − 1 ⟩ , x ⟨ t ⟩ , p a r a m e t e r s ) (a^{\langle t \rangle}, a^{\langle t-1 \rangle}, x^{\langle t \rangle}, parameters) (a⟨t⟩,a⟨t−1⟩,x⟨t⟩,parameters)存储在缓存中

- 返回 a ⟨ t ⟩ a^{\langle t \rangle} a⟨t⟩并缓存

我们将对m个示例进行向量化处理。因此, x ⟨ t ⟩ x^{\langle t \rangle} x⟨t⟩维度将是 ( n x , m ) (n_x,m) (nx,m),而 a ⟨ t ⟩ a^{\langle t \rangle} a⟨t⟩维度将是 ( n a , m ) (n_a,m) (na,m)。

In [5]:

# GRADED FUNCTION: rnn_cell_forward

def rnn_cell_forward(xt, a_prev, parameters):

"""

Implements a single forward step of the RNN-cell as described in Figure (2)

Arguments:

xt -- your input data at timestep "t", numpy array of shape (n_x, m).

a_prev -- Hidden state at timestep "t-1", numpy array of shape (n_a, m)

parameters -- python dictionary containing:

Wax -- Weight matrix multiplying the input, numpy array of shape (n_a, n_x)

Waa -- Weight matrix multiplying the hidden state, numpy array of shape (n_a, n_a)

Wya -- Weight matrix relating the hidden-state to the output, numpy array of shape (n_y, n_a)

ba -- Bias, numpy array of shape (n_a, 1)

by -- Bias relating the hidden-state to the output, numpy array of shape (n_y, 1)

Returns:

a_next -- next hidden state, of shape (n_a, m)

yt_pred -- prediction at timestep "t", numpy array of shape (n_y, m)

cache -- tuple of values needed for the backward pass, contains (a_next, a_prev, xt, parameters)

"""

# Retrieve parameters from "parameters"

Wax = parameters["Wax"]

Waa = parameters["Waa"]

Wya = parameters["Wya"]

ba = parameters["ba"]

by = parameters["by"]

### START CODE HERE ### (≈2 lines)

# compute next activation state using the formula given above

a_next = np.tanh(np.dot(Wax,xt)+np.dot(Waa,a_prev)+ba)

# compute output of the current cell using the formula given above

yt_pred = softmax(np.dot(Wya,a_next)+by)

### END CODE HERE ###

# store values you need for backward propagation in cache

cache = (a_next, a_prev, xt, parameters)

return a_next, yt_pred, cache

In [6]:

np.random.seed(1)

xt = np.random.randn(3,10)

a_prev = np.random.randn(5,10)

Waa = np.random.randn(5,5)

Wax = np.random.randn(5,3)

Wya = np.random.randn(2,5)

ba = np.random.randn(5,1)

by = np.random.randn(2,1)

parameters = {"Waa": Waa, "Wax": Wax, "Wya": Wya, "ba": ba, "by": by}

a_next, yt_pred, cache = rnn_cell_forward(xt, a_prev, parameters)

print("a_next[4] = ", a_next[4])

print("a_next.shape = ", a_next.shape)

print("yt_pred[1] =", yt_pred[1])

print("yt_pred.shape = ", yt_pred.shape)

a_next[4] = [ 0.59584544 0.18141802 0.61311866 0.99808218 0.85016201 0.99980978

-0.18887155 0.99815551 0.6531151 0.82872037]

a_next.shape = (5, 10)

yt_pred[1] = [0.9888161 0.01682021 0.21140899 0.36817467 0.98988387 0.88945212

0.36920224 0.9966312 0.9982559 0.17746526]

yt_pred.shape = (2, 10)

预期输出:

a_next[4] = [ 0.59584544 0.18141802 0.61311866 0.99808218 0.85016201 0.99980978

-0.18887155 0.99815551 0.6531151 0.82872037]

a_next.shape = (5, 10)

yt_pred[1] = [0.9888161 0.01682021 0.21140899 0.36817467 0.98988387 0.88945212

0.36920224 0.9966312 0.9982559 0.17746526]

yt_pred.shape = (2, 10)

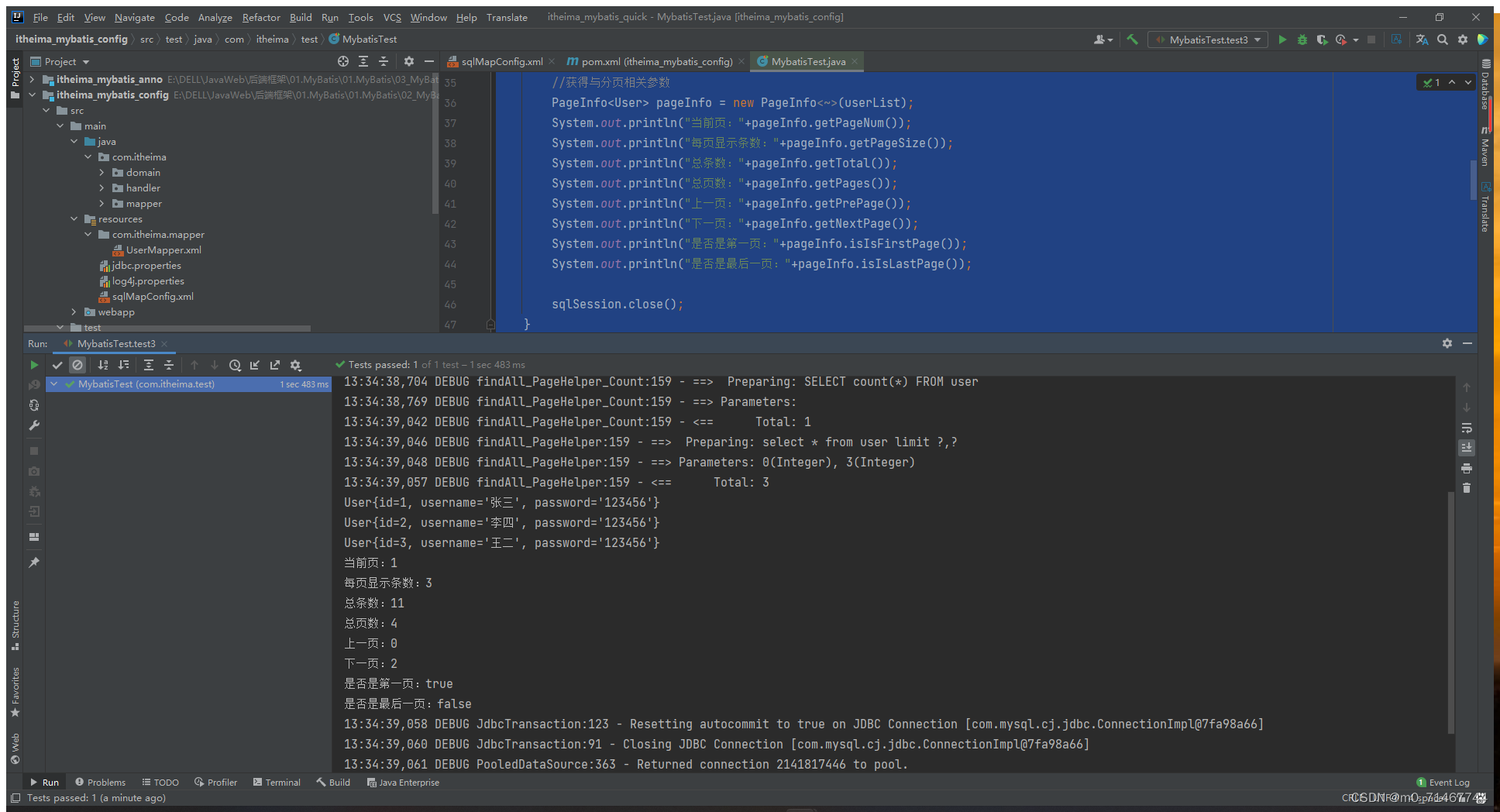

1.2 RNN正向传播

你可以将RNN视为刚刚构建的单元的重复。如果输入的数据序列经过10个时间步长,则将复制RNN单元10次。每个单元格都将前一个单元格( a ⟨ t − 1 ⟩ a^{\langle t-1 \rangle} a⟨t−1⟩)的隐藏状态和当前时间步的输入数据( x ⟨ t ⟩ x^{\langle t \rangle} x⟨t⟩)作为输入,并为此时间步输出隐藏状态( a ⟨ t ⟩ a^{\langle t \rangle} a⟨t⟩) 和预测( y ⟨ t ⟩ y^{\langle t \rangle} y⟨t⟩)。

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-6BYdh19X-1667651367214)(L5W1%E4%BD%9C%E4%B8%9A1%20%E6%89%8B%E6%8A%8A%E6%89%8B%E5%AE%9E%E7%8E%B0%E5%BE%AA%E7%8E%AF%E7%A5%9E%E7%BB%8F%E7%BD%91%E7%BB%9C.assets/960-1667291444258-2.png)]](https://img-blog.csdnimg.cn/5810c150af2b4dc4a20a4884e3cc8c65.png)

图3:基本RNN。输入序列 x = ( x ⟨ 1 ⟩ , x ⟨ 2 ⟩ , . . . , x ⟨ T x ⟩ ) x = (x^{\langle 1 \rangle}, x^{\langle 2 \rangle}, ..., x^{\langle T_x \rangle}) x=(x⟨1⟩,x⟨2⟩,...,x⟨Tx⟩)执行 T x T_x Tx个时间步。网络输出 y = ( y ⟨ 1 ⟩ , y ⟨ 2 ⟩ , . . . , y ⟨ T x ⟩ ) y = (y^{\langle 1 \rangle}, y^{\langle 2 \rangle}, ..., y^{\langle T_x \rangle}) y=(y⟨1⟩,y⟨2⟩,...,y⟨Tx⟩)。

练习:编码实现图(3)中描述的RNN的正向传播。

说明:

- 创建一个零向量( a a a),该向量将存储RNN计算的所有隐藏状态。

- 将“下一个”隐藏状态初始化为 a 0 a_0 a0(初始隐藏状态)。

- 开始遍历每个时间步,增量索引为t:

- 通过运行rnn_step_forward更新“下一个”隐藏状态和缓存。

- 将“下一个”隐藏状态存储在a中( t t h t^{th} tth位置)

- 将预测存储在y中

- 将缓存添加到缓存列表中

- 返回a,y和缓存

In [7]:

# GRADED FUNCTION: rnn_forward

def rnn_forward(x, a0, parameters):

"""

Implement the forward propagation of the recurrent neural network described in Figure (3).

Arguments:

x -- Input data for every time-step, of shape (n_x, m, T_x).

a0 -- Initial hidden state, of shape (n_a, m)

parameters -- python dictionary containing:

Waa -- Weight matrix multiplying the hidden state, numpy array of shape (n_a, n_a)

Wax -- Weight matrix multiplying the input, numpy array of shape (n_a, n_x)

Wya -- Weight matrix relating the hidden-state to the output, numpy array of shape (n_y, n_a)

ba -- Bias numpy array of shape (n_a, 1)

by -- Bias relating the hidden-state to the output, numpy array of shape (n_y, 1)

Returns:

a -- Hidden states for every time-step, numpy array of shape (n_a, m, T_x)

y_pred -- Predictions for every time-step, numpy array of shape (n_y, m, T_x)

caches -- tuple of values needed for the backward pass, contains (list of caches, x)

"""

# Initialize "caches" which will contain the list of all caches

caches = []

# Retrieve dimensions from shapes of x and Wy

n_x, m, T_x = x.shape

n_y, n_a = parameters["Wya"].shape

### START CODE HERE ###

# initialize "a" and "y" with zeros (≈2 lines)

a = np.zeros((n_a,m,T_x))

y_pred = np.zeros((n_y,m,T_x))

# Initialize a_next (≈1 line)

a_next = a0

# loop over all time-steps

for t in range(T_x):

# Update next hidden state, compute the prediction, get the cache (≈1 line)

a_next, yt_pred, cache = rnn_cell_forward(x[:,:,t],a_next,parameters)

# Save the value of the new "next" hidden state in a (≈1 line)

a[:,:,t] = a_next

# Save the value of the prediction in y (≈1 line)

y_pred[:,:,t] = yt_pred

# Append "cache" to "caches" (≈1 line)

caches.append(cache)

### END CODE HERE ###

# store values needed for backward propagation in cache

caches = (caches, x)

return a, y_pred, caches

In [8]:

np.random.seed(1)

x = np.random.randn(3,10,4)

a0 = np.random.randn(5,10)

Waa = np.random.randn(5,5)

Wax = np.random.randn(5,3)

Wya = np.random.randn(2,5)

ba = np.random.randn(5,1)

by = np.random.randn(2,1)

parameters = {"Waa": Waa, "Wax": Wax, "Wya": Wya, "ba": ba, "by": by}

a, y_pred, caches = rnn_forward(x, a0, parameters)

print("a[4][1] = ", a[4][1])

print("a.shape = ", a.shape)

print("y_pred[1][3] =", y_pred[1][3])

print("y_pred.shape = ", y_pred.shape)

print("caches[1][1][3] =", caches[1][1][3])

print("len(caches) = ", len(caches))

a[4][1] = [-0.99999375 0.77911235 -0.99861469 -0.99833267]

a.shape = (5, 10, 4)

y_pred[1][3] = [0.79560373 0.86224861 0.11118257 0.81515947]

y_pred.shape = (2, 10, 4)

caches[1][1][3] = [-1.1425182 -0.34934272 -0.20889423 0.58662319]

len(caches) = 2

预期输出:

a[4][1] = [-0.99999375 0.77911235 -0.99861469 -0.99833267]

a.shape = (5, 10, 4)

y_pred[1][3] = [0.79560373 0.86224861 0.11118257 0.81515947]

y_pred.shape = (2, 10, 4)

caches[1][1][3] = [-1.1425182 -0.34934272 -0.20889423 0.58662319]

len(caches) = 2

Nice!你已经从头实现了循环神经网络的正向传播。对于某些应用来说,这已经足够好,但是会遇到梯度消失的问题。因此,当每个输出 y ⟨ t ⟩ y^{\langle t \rangle} y⟨t⟩主要使用"local"上下文进行估算时它表现最好(即来自输入 x ⟨ t ′ ⟩ x^{\langle t' \rangle} x⟨t′⟩的信息,其中 t ′ t' t′是距离 t t t较近)。

在下一部分中,你将构建一个更复杂的LSTM模型,该模型更适合解决逐渐消失的梯度。LSTM将能够更好地记住一条信息并将其保存许多个时间步。

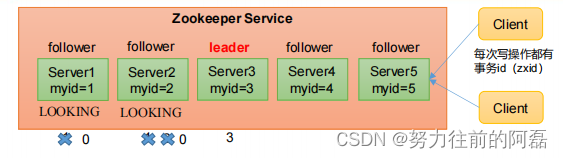

2 长短期记忆网络(LSTM)

下图显示了LSTM单元的运作。

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-DQrpr81O-1667651367216)(L5W1%E4%BD%9C%E4%B8%9A1%20%E6%89%8B%E6%8A%8A%E6%89%8B%E5%AE%9E%E7%8E%B0%E5%BE%AA%E7%8E%AF%E7%A5%9E%E7%BB%8F%E7%BD%91%E7%BB%9C.assets/960-1667291444258-3.png)]](https://img-blog.csdnimg.cn/3df426ef23324bb38c4213eb1e3734e1.png)

图4:LSTM单元,这会在每个时间步上跟踪并更新“单元状态”或存储的变量 c ⟨ t ⟩ c^{\langle t \rangle} c⟨t⟩,与 a ⟨ t ⟩ a^{\langle t \rangle} a⟨t⟩不同。

与上面的RNN示例类似,你将以单个时间步开始实现LSTM单元。然后,你可以从for循环内部迭代调用它,以使其具有 T x T_x Tx时间步长的输入。

2.0 关于“门”

遗忘门

为了便于说明,假设我们正在阅读一段文本中的单词,并希望使用LSTM跟踪语法结构,例如主体是单数还是复数。如果主体从单数变为复数,我们需要找到一种方法来摆脱以前存储的单/复数状态的内存值。在LSTM中,遗忘门可以实现次操作:

Γ

f

⟨

t

⟩

=

σ

(

W

f

[

a

⟨

t

−

1

⟩

,

x

⟨

t

⟩

]

+

b

f

)

(1)

\Gamma_f^{\langle t \rangle} = \sigma(W_f[a^{\langle t-1 \rangle}, x^{\langle t \rangle}] + b_f)\tag{1}

Γf⟨t⟩=σ(Wf[a⟨t−1⟩,x⟨t⟩]+bf)(1)

在这里, W f W_f Wf是控制遗忘门行为的权重。我们将 [ a ⟨ t − 1 ⟩ , x ⟨ t ⟩ ] [a^{\langle t-1 \rangle}, x^{\langle t \rangle}] [a⟨t−1⟩,x⟨t⟩]连接起来,然后乘以 W f W_f Wf。上面的等式使得向量 Γ f ⟨ t ⟩ \Gamma_f^{\langle t \rangle} Γf⟨t⟩的值介于0到1之间。该遗忘门向量将逐元素乘以先前的单元状态 c ⟨ t − 1 ⟩ c^{\langle t-1 \rangle} c⟨t−1⟩。因此,如果 Γ f ⟨ t ⟩ \Gamma_f^{\langle t \rangle} Γf⟨t⟩的其中一个值为0(或接近于0),则表示LSTM应该移除 c ⟨ t − 1 ⟩ c^{\langle t-1 \rangle} c⟨t−1⟩组件中的一部分信息(例如,单数主题),如果其中一个值为1,则它将保留信息。

更新门

一旦我们忘记了所讨论的主体是单数,就需要找到一种更新它的方式,以反映新主体现在是复数。这是更新门的公式:

Γ

u

⟨

t

⟩

=

σ

(

W

u

[

a

⟨

t

−

1

⟩

,

x

{

t

}

]

+

b

u

)

(2)

\Gamma_u^{\langle t \rangle} = \sigma(W_u[a^{\langle t-1 \rangle}, x^{\{t\}}] + b_u)\tag{2}

Γu⟨t⟩=σ(Wu[a⟨t−1⟩,x{t}]+bu)(2)

类似于遗忘门,在这里 Γ u ⟨ t ⟩ \Gamma_u^{\langle t \rangle} Γu⟨t⟩也是值为0到1之间的向量。这将与 c ~ ⟨ t ⟩ \tilde{c}^{\langle t \rangle} c~⟨t⟩逐元素相乘以计算 c ⟨ t ⟩ c^{\langle t \rangle} c⟨t⟩。

更新单元格

要更新新主体,我们需要创建一个新的数字向量,可以将其添加到先前的单元格状态中。我们使用的等式是:

c

~

⟨

t

⟩

=

tanh

(

W

c

[

a

⟨

t

−

1

⟩

,

x

⟨

t

⟩

]

+

b

c

)

(3)

\tilde{c}^{\langle t \rangle} = \tanh(W_c[a^{\langle t-1 \rangle}, x^{\langle t \rangle}] + b_c)\tag{3}

c~⟨t⟩=tanh(Wc[a⟨t−1⟩,x⟨t⟩]+bc)(3)

最后,新的单元状态为:

c

⟨

t

⟩

=

Γ

f

⟨

t

⟩

∗

c

⟨

t

−

1

⟩

+

Γ

u

⟨

t

⟩

∗

c

~

⟨

t

⟩

(4)

c^{\langle t \rangle} = \Gamma_f^{\langle t \rangle}* c^{\langle t-1 \rangle} + \Gamma_u^{\langle t \rangle} *\tilde{c}^{\langle t \rangle} \tag{4}

c⟨t⟩=Γf⟨t⟩∗c⟨t−1⟩+Γu⟨t⟩∗c~⟨t⟩(4)

输出门

为了确定我们将使用哪些输出,我们将使用以下两个公式:

Γ

o

⟨

t

⟩

=

σ

(

W

o

[

a

⟨

t

−

1

⟩

,

x

⟨

t

⟩

]

+

b

o

)

(5)

\Gamma_o^{\langle t \rangle}= \sigma(W_o[a^{\langle t-1 \rangle}, x^{\langle t \rangle}] + b_o)\tag{5}

Γo⟨t⟩=σ(Wo[a⟨t−1⟩,x⟨t⟩]+bo)(5)

a ⟨ t ⟩ = Γ o ⟨ t ⟩ ∗ tanh ( c ⟨ t ⟩ ) (6) a^{\langle t \rangle} = \Gamma_o^{\langle t \rangle}* \tanh(c^{\langle t \rangle})\tag{6} a⟨t⟩=Γo⟨t⟩∗tanh(c⟨t⟩)(6)

在等式5中,你决定使用sigmoid函数输出;在等式6中,将其乘以先前状态的 tanh \tanh tanh。

2.1 LSTM单元

练习:实现图(3)中描述的LSTM单元。

说明:

- 将 a ⟨ t − 1 ⟩ a^{\langle t-1 \rangle} a⟨t−1⟩和 x ⟨ t ⟩ x^{\langle t \rangle} x⟨t⟩连接在一个矩阵中: c o n c a t = [ a ⟨ t − 1 ⟩ x ⟨ t ⟩ ] concat = \begin{bmatrix} a^{\langle t-1 \rangle} \\ x^{\langle t \rangle} \end{bmatrix} concat=[a⟨t−1⟩x⟨t⟩]

- 计算公式2-6,你可以使用

sigmoid()和np.tanh()。 - 计算预测

y

⟨

t

⟩

y^{\langle t \rangle}

y⟨t⟩,你可以使用

softmax()。

In [9]:

# GRADED FUNCTION: lstm_cell_forward

def lstm_cell_forward(xt, a_prev, c_prev, parameters):

"""

Implement a single forward step of the LSTM-cell as described in Figure (4)

Arguments:

xt -- your input data at timestep "t", numpy array of shape (n_x, m).

a_prev -- Hidden state at timestep "t-1", numpy array of shape (n_a, m)

c_prev -- Memory state at timestep "t-1", numpy array of shape (n_a, m)

parameters -- python dictionary containing:

Wf -- Weight matrix of the forget gate, numpy array of shape (n_a, n_a + n_x)

bf -- Bias of the forget gate, numpy array of shape (n_a, 1)

Wi -- Weight matrix of the save gate, numpy array of shape (n_a, n_a + n_x)

bi -- Bias of the save gate, numpy array of shape (n_a, 1)

Wc -- Weight matrix of the first "tanh", numpy array of shape (n_a, n_a + n_x)

bc -- Bias of the first "tanh", numpy array of shape (n_a, 1)

Wo -- Weight matrix of the focus gate, numpy array of shape (n_a, n_a + n_x)

bo -- Bias of the focus gate, numpy array of shape (n_a, 1)

Wy -- Weight matrix relating the hidden-state to the output, numpy array of shape (n_y, n_a)

by -- Bias relating the hidden-state to the output, numpy array of shape (n_y, 1)

Returns:

a_next -- next hidden state, of shape (n_a, m)

c_next -- next memory state, of shape (n_a, m)

yt_pred -- prediction at timestep "t", numpy array of shape (n_y, m)

cache -- tuple of values needed for the backward pass, contains (a_next, c_next, a_prev, c_prev, xt, parameters)

Note: ft/it/ot stand for the forget/update/output gates, cct stands for the candidate value (c tilda),

c stands for the memory value

"""

# Retrieve parameters from "parameters"

Wf = parameters["Wf"]

bf = parameters["bf"]

Wi = parameters["Wi"]

bi = parameters["bi"]

Wc = parameters["Wc"]

bc = parameters["bc"]

Wo = parameters["Wo"]

bo = parameters["bo"]

Wy = parameters["Wy"]

by = parameters["by"]

# Retrieve dimensions from shapes of xt and Wy

n_x, m = xt.shape

n_y, n_a = Wy.shape

### START CODE HERE ###

# Concatenate a_prev and xt (≈3 lines)

concat = np.zeros((n_x+n_a,m))

concat[: n_a, :] = a_prev

concat[n_a :, :] = xt

# Compute values for ft, it, cct, c_next, ot, a_next using the formulas given figure (4) (≈6 lines)

ft = sigmoid(np.dot(Wf,concat)+bf)

it = sigmoid(np.dot(Wi,concat)+bi)

cct = np.tanh(np.dot(Wc,concat)+bc)

c_next = ft*c_prev + it*cct

ot = sigmoid(np.dot(Wo,concat)+bo)

a_next = ot*np.tanh(c_next)

# Compute prediction of the LSTM cell (≈1 line)

yt_pred = softmax(np.dot(Wy, a_next) + by)

### END CODE HERE ###

# store values needed for backward propagation in cache

cache = (a_next, c_next, a_prev, c_prev, ft, it, cct, ot, xt, parameters)

return a_next, c_next, yt_pred, cache

In [10]:

np.random.seed(1)

xt = np.random.randn(3,10)

a_prev = np.random.randn(5,10)

c_prev = np.random.randn(5,10)

Wf = np.random.randn(5, 5+3)

bf = np.random.randn(5,1)

Wi = np.random.randn(5, 5+3)

bi = np.random.randn(5,1)

Wo = np.random.randn(5, 5+3)

bo = np.random.randn(5,1)

Wc = np.random.randn(5, 5+3)

bc = np.random.randn(5,1)

Wy = np.random.randn(2,5)

by = np.random.randn(2,1)

parameters = {"Wf": Wf, "Wi": Wi, "Wo": Wo, "Wc": Wc, "Wy": Wy, "bf": bf, "bi": bi, "bo": bo, "bc": bc, "by": by}

a_next, c_next, yt, cache = lstm_cell_forward(xt, a_prev, c_prev, parameters)

print("a_next[4] = ", a_next[4])

print("a_next.shape = ", c_next.shape)

print("c_next[2] = ", c_next[2])

print("c_next.shape = ", c_next.shape)

print("yt[1] =", yt[1])

print("yt.shape = ", yt.shape)

print("cache[1][3] =", cache[1][3])

print("len(cache) = ", len(cache))

a_next[4] = [-0.66408471 0.0036921 0.02088357 0.22834167 -0.85575339 0.00138482

0.76566531 0.34631421 -0.00215674 0.43827275]

a_next.shape = (5, 10)

c_next[2] = [ 0.63267805 1.00570849 0.35504474 0.20690913 -1.64566718 0.11832942

0.76449811 -0.0981561 -0.74348425 -0.26810932]

c_next.shape = (5, 10)

yt[1] = [0.79913913 0.15986619 0.22412122 0.15606108 0.97057211 0.31146381

0.00943007 0.12666353 0.39380172 0.07828381]

yt.shape = (2, 10)

cache[1][3] = [-0.16263996 1.03729328 0.72938082 -0.54101719 0.02752074 -0.30821874

0.07651101 -1.03752894 1.41219977 -0.37647422]

len(cache) = 10

预期输出:

a_next[4] = [-0.66408471 0.0036921 0.02088357 0.22834167 -0.85575339 0.00138482

0.76566531 0.34631421 -0.00215674 0.43827275]

a_next.shape = (5, 10)

c_next[2] = [ 0.63267805 1.00570849 0.35504474 0.20690913 -1.64566718 0.11832942

0.76449811 -0.0981561 -0.74348425 -0.26810932]

c_next.shape = (5, 10)

yt[1] = [0.79913913 0.15986619 0.22412122 0.15606108 0.97057211 0.31146381

0.00943007 0.12666353 0.39380172 0.07828381]

yt.shape = (2, 10)

cache[1][3] = [-0.16263996 1.03729328 0.72938082 -0.54101719 0.02752074 -0.30821874

0.07651101 -1.03752894 1.41219977 -0.37647422]

len(cache) = 10

2.2 LSTM的正向传播

既然你已经实现了LSTM的一个步骤,现在就可以使用for循环在Tx输入序列上对此进行迭代。

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-i9wuM89R-1667651367217)(L5W1%E4%BD%9C%E4%B8%9A1%20%E6%89%8B%E6%8A%8A%E6%89%8B%E5%AE%9E%E7%8E%B0%E5%BE%AA%E7%8E%AF%E7%A5%9E%E7%BB%8F%E7%BD%91%E7%BB%9C.assets/960-1667291444258-4.png)]](https://img-blog.csdnimg.cn/80664b5a83db4d35af81a9452cb35323.png)

图4:多个时间步的LSTM。

练习:实现lstm_forward()以在

T

x

T_x

Tx个时间步上运行LSTM。

注意: c ⟨ 0 ⟩ c^{\langle 0 \rangle} c⟨0⟩用零初始化。

In [11]:

# GRADED FUNCTION: lstm_forward

def lstm_forward(x, a0, parameters):

"""

Implement the forward propagation of the recurrent neural network using an LSTM-cell described in Figure (3).

Arguments:

x -- Input data for every time-step, of shape (n_x, m, T_x).

a0 -- Initial hidden state, of shape (n_a, m)

parameters -- python dictionary containing:

Wf -- Weight matrix of the forget gate, numpy array of shape (n_a, n_a + n_x)

bf -- Bias of the forget gate, numpy array of shape (n_a, 1)

Wi -- Weight matrix of the save gate, numpy array of shape (n_a, n_a + n_x)

bi -- Bias of the save gate, numpy array of shape (n_a, 1)

Wc -- Weight matrix of the first "tanh", numpy array of shape (n_a, n_a + n_x)

bc -- Bias of the first "tanh", numpy array of shape (n_a, 1)

Wo -- Weight matrix of the focus gate, numpy array of shape (n_a, n_a + n_x)

bo -- Bias of the focus gate, numpy array of shape (n_a, 1)

Wy -- Weight matrix relating the hidden-state to the output, numpy array of shape (n_y, n_a)

by -- Bias relating the hidden-state to the output, numpy array of shape (n_y, 1)

Returns:

a -- Hidden states for every time-step, numpy array of shape (n_a, m, T_x)

y -- Predictions for every time-step, numpy array of shape (n_y, m, T_x)

caches -- tuple of values needed for the backward pass, contains (list of all the caches, x)

"""

# Initialize "caches", which will track the list of all the caches

caches = []

### START CODE HERE ###

# Retrieve dimensions from shapes of xt and Wy (≈2 lines)

n_x, m, T_x = x.shape

n_y, n_a = parameters['Wy'].shape

# initialize "a", "c" and "y" with zeros (≈3 lines)

a = np.zeros((n_a, m, T_x))

c = np.zeros((n_a, m, T_x))

y = np.zeros((n_y, m, T_x))

# Initialize a_next and c_next (≈2 lines)

a_next = a0

c_next = np.zeros((n_a, m))

# loop over all time-steps

for t in range(T_x):

# Update next hidden state, next memory state, compute the prediction, get the cache (≈1 line)

a_next, c_next, yt, cache = lstm_cell_forward(x[:, :, t], a_next, c_next, parameters)

# Save the value of the new "next" hidden state in a (≈1 line)

a[:,:,t] = a_next

# Save the value of the prediction in y (≈1 line)

y[:,:,t] = yt

# Save the value of the next cell state (≈1 line)

c[:,:,t] = c_next

# Append the cache into caches (≈1 line)

caches.append(cache)

### END CODE HERE ###

# store values needed for backward propagation in cache

caches = (caches, x)

return a, y, c, caches

In [12]:

np.random.seed(1)

x = np.random.randn(3,10,7)

a0 = np.random.randn(5,10)

Wf = np.random.randn(5, 5+3)

bf = np.random.randn(5,1)

Wi = np.random.randn(5, 5+3)

bi = np.random.randn(5,1)

Wo = np.random.randn(5, 5+3)

bo = np.random.randn(5,1)

Wc = np.random.randn(5, 5+3)

bc = np.random.randn(5,1)

Wy = np.random.randn(2,5)

by = np.random.randn(2,1)

parameters = {"Wf": Wf, "Wi": Wi, "Wo": Wo, "Wc": Wc, "Wy": Wy, "bf": bf, "bi": bi, "bo": bo, "bc": bc, "by": by}

a, y, c, caches = lstm_forward(x, a0, parameters)

print("a[4][3][6] = ", a[4][3][6])

print("a.shape = ", a.shape)

print("y[1][4][3] =", y[1][4][3])

print("y.shape = ", y.shape)

print("caches[1][1[1]] =", caches[1][1][1])

print("c[1][2][1]", c[1][2][1])

print("len(caches) = ", len(caches))

a[4][3][6] = 0.17211776753291672

a.shape = (5, 10, 7)

y[1][4][3] = 0.9508734618501101

y.shape = (2, 10, 7)

caches[1][1[1]] = [ 0.82797464 0.23009474 0.76201118 -0.22232814 -0.20075807 0.18656139

0.41005165]

c[1][2][1] -0.8555449167181981

len(caches) = 2

预期输出:

a[4][3][6] = 0.17211776753291672

a.shape = (5, 10, 7)

y[1][4][3] = 0.9508734618501101

y.shape = (2, 10, 7)

caches[1][1[1]] = [ 0.82797464 0.23009474 0.76201118 -0.22232814 -0.20075807 0.18656139

0.41005165]

c[1][2][1] -0.8555449167181981

len(caches) = 2

Nice!现在,你已经为基础RNN和LSTM实现了正向传播。使用深度学习框架时,实现正向传播以足以构建性能出色的系统。

此笔记本电脑的其余部分是可选的,不会用于评分。

3 循环神经网络中的反向传播(可选练习)

在现代深度学习框架中,你仅需实现正向传播,而框架将处理反向传播,因此大多数深度学习工程师无需理会反向传播的细节。但是,如果你是微积分专家并且想查看RNN中反向传播的详细信息,则可以学习此笔记本的剩余部分。

在较早的课程中,当你实现了一个简单的(全连接的)神经网络时,你就使用了反向传播来计算用于更新参数的损失的导数。同样,在循环神经网络中,你可以计算损失的导数以更新参数。反向传播方程非常复杂,我们在讲座中没有导出它们。但是,我们将在下面简要介绍它们。

3.1 基础RNN的反向传播

我们将从计算基本RNN单元的反向传播开始。

![[外链图片转存失败,源站可能有防盗链机制,建议将图片保存下来直接上传(img-4hUm4GaQ-1667651367218)(L5W1%E4%BD%9C%E4%B8%9A1%20%E6%89%8B%E6%8A%8A%E6%89%8B%E5%AE%9E%E7%8E%B0%E5%BE%AA%E7%8E%AF%E7%A5%9E%E7%BB%8F%E7%BD%91%E7%BB%9C.assets/960-1667291444258-5.png)]](https://img-blog.csdnimg.cn/6fdbc6be97e443dba9f64d0168ac1632.png)

图5:RNN单元的反向传播。就像在全连接的神经网络中一样,损失函数J的导数遵循链规则在RNN中计算反向传播。链规则还用于计算 ( ∂ J ∂ W a x , ∂ J ∂ W a a , ∂ J ∂ b ) (\frac{\partial J}{\partial W_{ax}},\frac{\partial J}{\partial W_{aa}},\frac{\partial J}{\partial b}) (∂Wax∂J,∂Waa∂J,∂b∂J)更新参数 ( W a x , W a a , b a ) (W_{ax}, W_{aa}, b_a) (Wax,Waa,ba)。

3.1.1 反向求导函数:

要计算rnn_cell_backward,你需要计算以下方程式。手工导出它们是一个很好的练习。

tanh \tanh tanh的导数为 1 − tanh ( x ) 2 1-\tanh(x)^2 1−tanh(x)2。你可以在here中找到完整的证明。请注意: sec ( x ) 2 = 1 − tanh ( x ) 2 \sec(x)^2 = 1 - \tanh(x)^2 sec(x)2=1−tanh(x)2

同样,对于 ∂ a ⟨ t ⟩ ∂ W a x , ∂ a ⟨ t ⟩ ∂ W a a , ∂ a ⟨ t ⟩ ∂ b \frac{ \partial a^{\langle t \rangle} } {\partial W_{ax}}, \frac{ \partial a^{\langle t \rangle} } {\partial W_{aa}}, \frac{ \partial a^{\langle t \rangle} } {\partial b} ∂Wax∂a⟨t⟩,∂Waa∂a⟨t⟩,∂b∂a⟨t⟩, tanh ( u ) \tanh(u) tanh(u)导数为 ( 1 − tanh ( u ) 2 ) d u (1-\tanh(u)^2)du (1−tanh(u)2)du。

最后两个方程式也遵循相同的规则,并使用$ tanh$ 导数导出。请注意,这种安排是为了获得相同的维度以方便匹配的。

In [13]:

def rnn_cell_backward(da_next, cache):

"""

Implements the backward pass for the RNN-cell (single time-step).

Arguments:

da_next -- Gradient of loss with respect to next hidden state

cache -- python dictionary containing useful values (output of rnn_step_forward())

Returns:

gradients -- python dictionary containing:

dx -- Gradients of input data, of shape (n_x, m)

da_prev -- Gradients of previous hidden state, of shape (n_a, m)

dWax -- Gradients of input-to-hidden weights, of shape (n_a, n_x)

dWaa -- Gradients of hidden-to-hidden weights, of shape (n_a, n_a)

dba -- Gradients of bias vector, of shape (n_a, 1)

"""

# Retrieve values from cache

(a_next, a_prev, xt, parameters) = cache

# Retrieve values from parameters

Wax = parameters["Wax"]

Waa = parameters["Waa"]

Wya = parameters["Wya"]

ba = parameters["ba"]

by = parameters["by"]

### START CODE HERE ###

# compute the gradient of tanh with respect to a_next (≈1 line)

dtanh = (1-a_next*a_next)*da_next #注意这里是 element_wise ,即 * da_next,dtanh 可以只看做一个中间结果的表示方式

# compute the gradient of the loss with respect to Wax (≈2 lines)

dxt = np.dot(Wax.T, dtanh)

dWax = np.dot(dtanh,xt.T)

# 根据公式1、2, dxt = da_next .( Wax.T . (1- tanh(a_next)**2) ) = da_next .( Wax.T . dtanh * (1/d_a_next) )= Wax.T . dtanh

# 根据公式1、3, dWax = da_next .( (1- tanh(a_next)**2) . xt.T) = da_next .( dtanh * (1/d_a_next) . xt.T )= dtanh . xt.T

# 上面的 . 表示 np.dot

# compute the gradient with respect to Waa (≈2 lines)

da_prev = np.dot(Waa.T, dtanh)

dWaa = np.dot( dtanh,a_prev.T)

# compute the gradient with respect to b (≈1 line)

dba = np.sum( dtanh,keepdims=True,axis=-1) # axis=0 列方向上操作 axis=1 行方向上操作 keepdims=True 矩阵的二维特性

### END CODE HERE ###

# Store the gradients in a python dictionary

gradients = {"dxt": dxt, "da_prev": da_prev, "dWax": dWax, "dWaa": dWaa, "dba": dba}

return gradients

In [14]:

np.random.seed(1)

xt = np.random.randn(3,10)

a_prev = np.random.randn(5,10)

Wax = np.random.randn(5,3)

Waa = np.random.randn(5,5)

Wya = np.random.randn(2,5)

b = np.random.randn(5,1)

by = np.random.randn(2,1)

parameters = {"Wax": Wax, "Waa": Waa, "Wya": Wya, "ba": ba, "by": by}

a_next, yt, cache = rnn_cell_forward(xt, a_prev, parameters)

da_next = np.random.randn(5,10)

gradients = rnn_cell_backward(da_next, cache)

print("gradients[\"dxt\"][1][2] =", gradients["dxt"][1][2])

print("gradients[\"dxt\"].shape =", gradients["dxt"].shape)

print("gradients[\"da_prev\"][2][3] =", gradients["da_prev"][2][3])

print("gradients[\"da_prev\"].shape =", gradients["da_prev"].shape)

print("gradients[\"dWax\"][3][1] =", gradients["dWax"][3][1])

print("gradients[\"dWax\"].shape =", gradients["dWax"].shape)

print("gradients[\"dWaa\"][1][2] =", gradients["dWaa"][1][2])

print("gradients[\"dWaa\"].shape =", gradients["dWaa"].shape)

print("gradients[\"dba\"][4] =", gradients["dba"][4])

print("gradients[\"dba\"].shape =", gradients["dba"].shape)

gradients["dxt"][1][2] = -0.4605641030588796

gradients["dxt"].shape = (3, 10)

gradients["da_prev"][2][3] = 0.08429686538067724

gradients["da_prev"].shape = (5, 10)

gradients["dWax"][3][1] = 0.39308187392193034

gradients["dWax"].shape = (5, 3)

gradients["dWaa"][1][2] = -0.28483955786960663

gradients["dWaa"].shape = (5, 5)

gradients["dba"][4] = [0.80517166]

gradients["dba"].shape = (5, 1)

预期输出:

gradients[“dxt”][1][2] = -0.4605641030588796

gradients[“dxt”].shape = (3, 10)

gradients[“da_prev”][2][3] = 0.08429686538067724

gradients[“da_prev”].shape = (5, 10)

gradients[“dWax”][3][1] = 0.39308187392193034

gradients[“dWax”].shape = (5, 3)

gradients[“dWaa”][1][2] = -0.28483955786960663

gradients[“dWaa”].shape = (5, 5)

gradients[“dba”][4] = [0.80517166]

gradients[“dba”].shape = (5, 1)

3.1.2 反向传播

在每个时间步长t上计算相对于 a ⟨ t ⟩ a^{\langle t \rangle} a⟨t⟩的损失梯度非常有用,因为它有助于将梯度反向传播到先前的RNN单元。为此,你需要从头开始遍历所有时间步,并且在每一步中,增加总的 d b a db_a dba, d W a a dW_{aa} dWaa, d W a x dW_{ax} dWax并存储 d x dx dx 。

说明:

实现rnn_backward函数。首先用零初始化返回变量,然后循环遍历所有时间步,同时在每个时间步调用rnn_cell_backward,相应地更新其他变量。

In [15]:

def rnn_backward(da, caches):

"""

Implement the backward pass for a RNN over an entire sequence of input data.

Arguments:

da -- Upstream gradients of all hidden states, of shape (n_a, m, T_x)

caches -- tuple containing information from the forward pass (rnn_forward)

Returns:

gradients -- python dictionary containing:

dx -- Gradient w.r.t. the input data, numpy-array of shape (n_x, m, T_x)

da0 -- Gradient w.r.t the initial hidden state, numpy-array of shape (n_a, m)

dWax -- Gradient w.r.t the input's weight matrix, numpy-array of shape (n_a, n_x)

dWaa -- Gradient w.r.t the hidden state's weight matrix, numpy-arrayof shape (n_a, n_a)

dba -- Gradient w.r.t the bias, of shape (n_a, 1)

"""

### START CODE HERE ###

# Retrieve values from the first cache (t=1) of caches (≈2 lines)

(caches, x) = caches

(a1, a0, x1, parameters) = caches[0] # t=1 时的值

# Retrieve dimensions from da's and x1's shapes (≈2 lines)

n_a, m, T_x = da.shape

n_x, m = x1.shape

# initialize the gradients with the right sizes (≈6 lines)

dx = np.zeros((n_x, m, T_x))

dWax = np.zeros((n_a, n_x))

dWaa = np.zeros((n_a, n_a))

dba = np.zeros((n_a, 1))

da0 = np.zeros((n_a, m))

da_prevt = np.zeros((n_a, m))

# Loop through all the time steps

for t in reversed(range(T_x)):

# Compute gradients at time step t. Choose wisely the "da_next" and the "cache" to use in the backward propagation step. (≈1 line)

gradients = rnn_cell_backward(da[:, :, t] + da_prevt, caches[t]) # da[:,:,t] + da_prevt ,每一个时间步后更新梯度

# Retrieve derivatives from gradients (≈ 1 line)

dxt, da_prevt, dWaxt, dWaat, dbat = gradients["dxt"], gradients["da_prev"], gradients["dWax"], gradients["dWaa"], gradients["dba"]

# Increment global derivatives w.r.t parameters by adding their derivative at time-step t (≈4 lines)

dx[:, :, t] = dxt

dWax += dWaxt

dWaa += dWaat

dba += dbat

# Set da0 to the gradient of a which has been backpropagated through all time-steps (≈1 line)

da0 = da_prevt

### END CODE HERE ###

# Store the gradients in a python dictionary

gradients = {"dx": dx, "da0": da0, "dWax": dWax, "dWaa": dWaa,"dba": dba}

return gradients

In [16]:

np.random.seed(1)

x = np.random.randn(3,10,4)

a0 = np.random.randn(5,10)

Wax = np.random.randn(5,3)

Waa = np.random.randn(5,5)

Wya = np.random.randn(2,5)

ba = np.random.randn(5,1)

by = np.random.randn(2,1)

parameters = {"Wax": Wax, "Waa": Waa, "Wya": Wya, "ba": ba, "by": by}

a, y, caches = rnn_forward(x, a0, parameters)

da = np.random.randn(5, 10, 4)

gradients = rnn_backward(da, caches)

print("gradients[\"dx\"][1][2] =", gradients["dx"][1][2])

print("gradients[\"dx\"].shape =", gradients["dx"].shape)

print("gradients[\"da0\"][2][3] =", gradients["da0"][2][3])

print("gradients[\"da0\"].shape =", gradients["da0"].shape)

print("gradients[\"dWax\"][3][1] =", gradients["dWax"][3][1])

print("gradients[\"dWax\"].shape =", gradients["dWax"].shape)

print("gradients[\"dWaa\"][1][2] =", gradients["dWaa"][1][2])

print("gradients[\"dWaa\"].shape =", gradients["dWaa"].shape)

print("gradients[\"dba\"][4] =", gradients["dba"][4])

print("gradients[\"dba\"].shape =", gradients["dba"].shape)

gradients["dx"][1][2] = [-2.07101689 -0.59255627 0.02466855 0.01483317]

gradients["dx"].shape = (3, 10, 4)

gradients["da0"][2][3] = -0.31494237512664996

gradients["da0"].shape = (5, 10)

gradients["dWax"][3][1] = 11.264104496527777

gradients["dWax"].shape = (5, 3)

gradients["dWaa"][1][2] = 2.303333126579893

gradients["dWaa"].shape = (5, 5)

gradients["dba"][4] = [-0.74747722]

gradients["dba"].shape = (5, 1)

预期输出:

gradients[“dx”][1][2] = [-2.07101689 -0.59255627 0.02466855 0.01483317]

gradients[“dx”].shape = (3, 10, 4)

gradients[“da0”][2][3] = -0.31494237512664996

gradients[“da0”].shape = (5, 10)

gradients[“dWax”][3][1] = 11.264104496527777

gradients[“dWax”].shape = (5, 3)

gradients[“dWaa”][1][2] = 2.303333126579893

gradients[“dWaa”].shape = (5, 5)

gradients[“dba”][4] = [-0.74747722]

gradients[“dba”].shape = (5, 1)

3.2 LSTM反向传播

3.2.1 反向传播一步

LSTM反向传播比正向传播要复杂得多。我们在下面为你提供了LSTM反向传播的所有方程式。(如果你喜欢微积分练习,可以尝试从头开始自己演算)

3.2.2 门求导

$$

d \Gamma_o^{\langle t \rangle} = da_{next}\tanh(c_{next}) * \Gamma_o^{\langle t \rangle}(1-\Gamma_o^{\langle t \rangle})\tag{7}

$$

d c ~ ⟨ t ⟩ = d c n e x t ∗ Γ i ⟨ t ⟩ + Γ o ⟨ t ⟩ ( 1 − tanh ( c n e x t ) 2 ) ∗ i t ∗ d a n e x t ∗ c ~ ⟨ t ⟩ ∗ ( 1 − tanh ( c ~ ) 2 ) (8) d\tilde c^{\langle t \rangle} = dc_{next}*\Gamma_i^{\langle t \rangle}+ \Gamma_o^{\langle t \rangle} (1-\tanh(c_{next})^2) * i_t * da_{next} * \tilde c^{\langle t \rangle} * (1-\tanh(\tilde c)^2) \tag{8} dc~⟨t⟩=dcnext∗Γi⟨t⟩+Γo⟨t⟩(1−tanh(cnext)2)∗it∗danext∗c~⟨t⟩∗(1−tanh(c~)2)(8)

d Γ u ⟨ t ⟩ = d c n e x t ∗ c ~ ⟨ t ⟩ + Γ o ⟨ t ⟩ ( 1 − tanh ( c n e x t ) 2 ) ∗ c ~ ⟨ t ⟩ ∗ d a n e x t ∗ Γ u ⟨ t ⟩ ∗ ( 1 − Γ u ⟨ t ⟩ ) (9) d\Gamma_u^{\langle t \rangle} = dc_{next}*\tilde c^{\langle t \rangle} + \Gamma_o^{\langle t \rangle} (1-\tanh(c_{next})^2) * \tilde c^{\langle t \rangle} * da_{next}*\Gamma_u^{\langle t \rangle}*(1-\Gamma_u^{\langle t \rangle})\tag{9} dΓu⟨t⟩=dcnext∗c~⟨t⟩+Γo⟨t⟩(1−tanh(cnext)2)∗c~⟨t⟩∗danext∗Γu⟨t⟩∗(1−Γu⟨t⟩)(9)

d Γ f ⟨ t ⟩ = d c n e x t ∗ c ~ p r e v + Γ o ⟨ t ⟩ ( 1 − tanh ( c n e x t ) 2 ) ∗ c p r e v ∗ d a n e x t ∗ Γ f ⟨ t ⟩ ∗ ( 1 − Γ f ⟨ t ⟩ ) (10) d\Gamma_f^{\langle t \rangle} = dc_{next}*\tilde c_{prev} + \Gamma_o^{\langle t \rangle} (1-\tanh(c_{next})^2) * c_{prev} * da_{next}*\Gamma_f^{\langle t \rangle}*(1-\Gamma_f^{\langle t \rangle})\tag{10} dΓf⟨t⟩=dcnext∗c~prev+Γo⟨t⟩(1−tanh(cnext)2)∗cprev∗danext∗Γf⟨t⟩∗(1−Γf⟨t⟩)(10)

3.2.3 参数求导

d W f = d Γ f ⟨ t ⟩ ∗ ( a p r e v x t ) T (11) dW_f = d\Gamma_f^{\langle t \rangle} * \begin{pmatrix} a_{prev} \\ x_t\end{pmatrix}^T \tag{11} dWf=dΓf⟨t⟩∗(aprevxt)T(11)

d W u = d Γ u ⟨ t ⟩ ∗ ( a p r e v x t ) T (12) dW_u = d\Gamma_u^{\langle t \rangle} * \begin{pmatrix} a_{prev} \\ x_t\end{pmatrix}^T \tag{12} dWu=dΓu⟨t⟩∗(aprevxt)T(12)

d W c = d c ~ ⟨ t ⟩ ∗ ( a p r e v x t ) T (13) dW_c = d\tilde c^{\langle t \rangle} * \begin{pmatrix} a_{prev} \\ x_t\end{pmatrix}^T \tag{13} dWc=dc~⟨t⟩∗(aprevxt)T(13)

d W o = d Γ o ⟨ t ⟩ ∗ ( a p r e v x t ) T (14) dW_o = d\Gamma_o^{\langle t \rangle} * \begin{pmatrix} a_{prev} \\ x_t\end{pmatrix}^T \tag{14} dWo=dΓo⟨t⟩∗(aprevxt)T(14)

要计算

d

b

f

,

d

b

u

,

d

b

c

,

d

b

o

db_f, db_u, db_c, db_o

dbf,dbu,dbc,dbo,你只需要在

d

Γ

f

⟨

t

⟩

,

d

Γ

u

⟨

t

⟩

,

d

c

~

⟨

t

⟩

,

d

Γ

o

⟨

t

⟩

d\Gamma_f^{\langle t \rangle}, d\Gamma_u^{\langle t \rangle}, d\tilde c^{\langle t \rangle}, d\Gamma_o^{\langle t \rangle}

dΓf⟨t⟩,dΓu⟨t⟩,dc~⟨t⟩,dΓo⟨t⟩的水平(axis=1)轴上分别求和。注意,你应该有keep_dims = True选项。

最后,你将针对先前的隐藏状态,先前的记忆状态和输入计算导数。

d

a

p

r

e

v

=

W

f

T

∗

d

Γ

f

⟨

t

⟩

+

W

u

T

∗

d

Γ

u

⟨

t

⟩

+

W

c

T

∗

d

c

~

⟨

t

⟩

+

W

o

T

∗

d

Γ

o

⟨

t

⟩

(15)

da_{prev} = W_f^T*d\Gamma_f^{\langle t \rangle} + W_u^T * d\Gamma_u^{\langle t \rangle}+ W_c^T * d\tilde c^{\langle t \rangle} + W_o^T * d\Gamma_o^{\langle t \rangle} \tag{15}

daprev=WfT∗dΓf⟨t⟩+WuT∗dΓu⟨t⟩+WcT∗dc~⟨t⟩+WoT∗dΓo⟨t⟩(15)

在这里,等式13的权重是第n_a个(即

W

f

=

W

f

[

:

n

a

,

:

]

W_f = W_f[:n_a,:]

Wf=Wf[:na,:]等…)

d

c

p

r

e

v

=

d

c

n

e

x

t

Γ

f

⟨

t

⟩

+

Γ

o

⟨

t

⟩

∗

(

1

−

tanh

(

c

n

e

x

t

)

2

)

∗

Γ

f

⟨

t

⟩

∗

d

a

n

e

x

t

(16)

dc_{prev} = dc_{next}\Gamma_f^{\langle t \rangle} + \Gamma_o^{\langle t \rangle} * (1- \tanh(c_{next})^2)*\Gamma_f^{\langle t \rangle}*da_{next} \tag{16}

dcprev=dcnextΓf⟨t⟩+Γo⟨t⟩∗(1−tanh(cnext)2)∗Γf⟨t⟩∗danext(16)

d x ⟨ t ⟩ = W f T ∗ d Γ f ⟨ t ⟩ + W u T ∗ d Γ u ⟨ t ⟩ + W c T ∗ d c ~ t + W o T ∗ d Γ o ⟨ t ⟩ (17) dx^{\langle t \rangle} = W_f^T*d\Gamma_f^{\langle t \rangle} + W_u^T * d\Gamma_u^{\langle t \rangle}+ W_c^T * d\tilde c_t + W_o^T * d\Gamma_o^{\langle t \rangle}\tag{17} dx⟨t⟩=WfT∗dΓf⟨t⟩+WuT∗dΓu⟨t⟩+WcT∗dc~t+WoT∗dΓo⟨t⟩(17)

其中等式15的权重是从n_a到末尾(即 W f = W f [ n a : , : ] W_f = W_f[n_a:,:] Wf=Wf[na:,:]etc…)

练习:通过实现下面的等式7−17来实现lstm_cell_backward。祝好运!

In [17]:

def lstm_cell_backward(da_next, dc_next, cache):

"""

Implement the backward pass for the LSTM-cell (single time-step).

Arguments:

da_next -- Gradients of next hidden state, of shape (n_a, m)

dc_next -- Gradients of next cell state, of shape (n_a, m)

cache -- cache storing information from the forward pass

Returns:

gradients -- python dictionary containing:

dxt -- Gradient of input data at time-step t, of shape (n_x, m)

da_prev -- Gradient w.r.t. the previous hidden state, numpy array of shape (n_a, m)

dc_prev -- Gradient w.r.t. the previous memory state, of shape (n_a, m, T_x)

dWf -- Gradient w.r.t. the weight matrix of the forget gate, numpy array of shape (n_a, n_a + n_x)

dWi -- Gradient w.r.t. the weight matrix of the input gate, numpy array of shape (n_a, n_a + n_x)

dWc -- Gradient w.r.t. the weight matrix of the memory gate, numpy array of shape (n_a, n_a + n_x)

dWo -- Gradient w.r.t. the weight matrix of the save gate, numpy array of shape (n_a, n_a + n_x)

dbf -- Gradient w.r.t. biases of the forget gate, of shape (n_a, 1)

dbi -- Gradient w.r.t. biases of the update gate, of shape (n_a, 1)

dbc -- Gradient w.r.t. biases of the memory gate, of shape (n_a, 1)

dbo -- Gradient w.r.t. biases of the save gate, of shape (n_a, 1)

"""

# Retrieve information from "cache"

(a_next, c_next, a_prev, c_prev, ft, it, cct, ot, xt, parameters) = cache

### START CODE HERE ###

# Retrieve dimensions from xt's and a_next's shape (≈2 lines)

n_x, m = xt.shape

n_a, m = a_next.shape

# Compute gates related derivatives, you can find their values can be found by looking carefully at equations (7) to (10) (≈4 lines)

dot = da_next * np.tanh(c_next) * ot * (1 - ot)

dcct = (dc_next * it + ot * (1 - np.square(np.tanh(c_next))) * it * da_next) * (1 - np.square(cct))

dit = (dc_next * cct + ot * (1 - np.square(np.tanh(c_next))) * cct * da_next) * it * (1 - it)

dft = (dc_next * c_prev + ot *(1 - np.square(np.tanh(c_next))) * c_prev * da_next) * ft * (1 - ft)

# Code equations (7) to (10) (≈4 lines)

# dit = None

# dft = None

# dot = None

# dcct = None

# Compute parameters related derivatives. Use equations (11)-(14) (≈8 lines)

dWf = np.dot(dft,np.concatenate((a_prev, xt), axis=0).T)

dWi = np.dot(dit,np.concatenate((a_prev, xt), axis=0).T)

dWc = np.dot(dcct,np.concatenate((a_prev, xt), axis=0).T)

dWo = np.dot(dot,np.concatenate((a_prev, xt), axis=0).T)

dbf = np.sum(dft, axis=1 ,keepdims = True)

dbi = np.sum(dit, axis=1, keepdims = True)

dbc = np.sum(dcct, axis=1, keepdims = True)

dbo = np.sum(dot, axis=1, keepdims = True)

# Compute derivatives w.r.t previous hidden state, previous memory state and input. Use equations (15)-(17). (≈3 lines)

da_prev = np.dot(parameters['Wf'][:,:n_a].T,dft)+np.dot(parameters['Wi'][:,:n_a].T,dit)+np.dot(parameters['Wc'][:,:n_a].T,dcct)+np.dot(parameters['Wo'][:,:n_a].T,dot)

dc_prev = dc_next*ft+ot*(1-np.square(np.tanh(c_next)))*ft*da_next

dxt = np.dot(parameters['Wf'][:,n_a:].T,dft)+np.dot(parameters['Wi'][:,n_a:].T,dit)+np.dot(parameters['Wc'][:,n_a:].T,dcct)+np.dot(parameters['Wo'][:,n_a:].T,dot)

# parameters['Wf'][:, :n_a].T 每一行的 第 0 到 n_a-1 列的数据取出来

# parameters['Wf'][:, n_a:].T 每一行的 第 n_a 到最后列的数据取出来

### END CODE HERE ###

# Save gradients in dictionary

gradients = {"dxt": dxt, "da_prev": da_prev, "dc_prev": dc_prev, "dWf": dWf,"dbf": dbf, "dWi": dWi,"dbi": dbi,

"dWc": dWc,"dbc": dbc, "dWo": dWo,"dbo": dbo}

return gradients

In [18]:

np.random.seed(1)

xt = np.random.randn(3,10)

a_prev = np.random.randn(5,10)

c_prev = np.random.randn(5,10)

Wf = np.random.randn(5, 5+3)

bf = np.random.randn(5,1)

Wi = np.random.randn(5, 5+3)

bi = np.random.randn(5,1)

Wo = np.random.randn(5, 5+3)

bo = np.random.randn(5,1)

Wc = np.random.randn(5, 5+3)

bc = np.random.randn(5,1)

Wy = np.random.randn(2,5)

by = np.random.randn(2,1)

parameters = {"Wf": Wf, "Wi": Wi, "Wo": Wo, "Wc": Wc, "Wy": Wy, "bf": bf, "bi": bi, "bo": bo, "bc": bc, "by": by}

a_next, c_next, yt, cache = lstm_cell_forward(xt, a_prev, c_prev, parameters)

da_next = np.random.randn(5,10)

dc_next = np.random.randn(5,10)

gradients = lstm_cell_backward(da_next, dc_next, cache)

print("gradients[\"dxt\"][1][2] =", gradients["dxt"][1][2])

print("gradients[\"dxt\"].shape =", gradients["dxt"].shape)

print("gradients[\"da_prev\"][2][3] =", gradients["da_prev"][2][3])

print("gradients[\"da_prev\"].shape =", gradients["da_prev"].shape)

print("gradients[\"dc_prev\"][2][3] =", gradients["dc_prev"][2][3])

print("gradients[\"dc_prev\"].shape =", gradients["dc_prev"].shape)

print("gradients[\"dWf\"][3][1] =", gradients["dWf"][3][1])

print("gradients[\"dWf\"].shape =", gradients["dWf"].shape)

print("gradients[\"dWi\"][1][2] =", gradients["dWi"][1][2])

print("gradients[\"dWi\"].shape =", gradients["dWi"].shape)

print("gradients[\"dWc\"][3][1] =", gradients["dWc"][3][1])

print("gradients[\"dWc\"].shape =", gradients["dWc"].shape)

print("gradients[\"dWo\"][1][2] =", gradients["dWo"][1][2])

print("gradients[\"dWo\"].shape =", gradients["dWo"].shape)

print("gradients[\"dbf\"][4] =", gradients["dbf"][4])

print("gradients[\"dbf\"].shape =", gradients["dbf"].shape)

print("gradients[\"dbi\"][4] =", gradients["dbi"][4])

print("gradients[\"dbi\"].shape =", gradients["dbi"].shape)

print("gradients[\"dbc\"][4] =", gradients["dbc"][4])

print("gradients[\"dbc\"].shape =", gradients["dbc"].shape)

print("gradients[\"dbo\"][4] =", gradients["dbo"][4])

print("gradients[\"dbo\"].shape =", gradients["dbo"].shape)

gradients["dxt"][1][2] = 3.2305591151091875

gradients["dxt"].shape = (3, 10)

gradients["da_prev"][2][3] = -0.06396214197109236

gradients["da_prev"].shape = (5, 10)

gradients["dc_prev"][2][3] = 0.7975220387970015

gradients["dc_prev"].shape = (5, 10)

gradients["dWf"][3][1] = -0.1479548381644968

gradients["dWf"].shape = (5, 8)

gradients["dWi"][1][2] = 1.0574980552259903

gradients["dWi"].shape = (5, 8)

gradients["dWc"][3][1] = 2.3045621636876668

gradients["dWc"].shape = (5, 8)

gradients["dWo"][1][2] = 0.3313115952892109

gradients["dWo"].shape = (5, 8)

gradients["dbf"][4] = [0.18864637]

gradients["dbf"].shape = (5, 1)

gradients["dbi"][4] = [-0.40142491]

gradients["dbi"].shape = (5, 1)

gradients["dbc"][4] = [0.25587763]

gradients["dbc"].shape = (5, 1)

gradients["dbo"][4] = [0.13893342]

gradients["dbo"].shape = (5, 1)

预期输出:

gradients[“dxt”][1][2] = 3.2305591151091875

gradients[“dxt”].shape = (3, 10)

gradients[“da_prev”][2][3] = -0.06396214197109236

gradients[“da_prev”].shape = (5, 10)

gradients[“dc_prev”][2][3] = 0.7975220387970015

gradients[“dc_prev”].shape = (5, 10)

gradients[“dWf”][3][1] = -0.1479548381644968

gradients[“dWf”].shape = (5, 8)

gradients[“dWi”][1][2] = 1.0574980552259903

gradients[“dWi”].shape = (5, 8)

gradients[“dWc”][3][1] = 2.3045621636876668

gradients[“dWc”].shape = (5, 8)

gradients[“dWo”][1][2] = 0.3313115952892109

gradients[“dWo”].shape = (5, 8)

gradients[“dbf”][4] = [0.18864637]

gradients[“dbf”].shape = (5, 1)

gradients[“dbi”][4] = [-0.40142491]

gradients[“dbi”].shape = (5, 1)

gradients[“dbc”][4] = [0.25587763]

gradients[“dbc”].shape = (5, 1)

gradients[“dbo”][4] = [0.13893342]

gradients[“dbo”].shape = (5, 1)

3.3 反向传播LSTM RNN

这部分与你在上面实现的rnn_backward函数非常相似。首先将创建与返回变量相同维度的变量。然后,你将从头开始遍历所有时间步,并在每次迭代中调用为LSTM实现的一步函数。然后,你将通过分别汇总参数来更新参数。最后返回带有新梯度的字典。

说明:实现lstm_backward函数。创建一个从

T

x

T_x

Tx开始并向后的for循环。对于每个步骤,请调用lstm_cell_backward并通过向其添加新梯度来更新旧梯度。请注意,dxt不会更新而是存储。

In [19]:

def lstm_backward(da, caches):

"""

Implement the backward pass for the RNN with LSTM-cell (over a whole sequence).

Arguments:

da -- Gradients w.r.t the hidden states, numpy-array of shape (n_a, m, T_x)

dc -- Gradients w.r.t the memory states, numpy-array of shape (n_a, m, T_x)

caches -- cache storing information from the forward pass (lstm_forward)

Returns:

gradients -- python dictionary containing:

dx -- Gradient of inputs, of shape (n_x, m, T_x)

da0 -- Gradient w.r.t. the previous hidden state, numpy array of shape (n_a, m)

dWf -- Gradient w.r.t. the weight matrix of the forget gate, numpy array of shape (n_a, n_a + n_x)

dWi -- Gradient w.r.t. the weight matrix of the update gate, numpy array of shape (n_a, n_a + n_x)

dWc -- Gradient w.r.t. the weight matrix of the memory gate, numpy array of shape (n_a, n_a + n_x)

dWo -- Gradient w.r.t. the weight matrix of the save gate, numpy array of shape (n_a, n_a + n_x)

dbf -- Gradient w.r.t. biases of the forget gate, of shape (n_a, 1)

dbi -- Gradient w.r.t. biases of the update gate, of shape (n_a, 1)

dbc -- Gradient w.r.t. biases of the memory gate, of shape (n_a, 1)

dbo -- Gradient w.r.t. biases of the save gate, of shape (n_a, 1)

"""

# Retrieve values from the first cache (t=1) of caches.

(caches, x) = caches

(a1, c1, a0, c0, f1, i1, cc1, o1, x1, parameters) = caches[0]

### START CODE HERE ###

# Retrieve dimensions from da's and x1's shapes (≈2 lines)

n_a, m, T_x = da.shape

n_x, m = x1.shape

# initialize the gradients with the right sizes (≈12 lines)

dx = np.zeros((n_x, m, T_x))

da0 = np.zeros((n_a, m))

da_prevt = np.zeros((n_a, m))

dc_prevt = np.zeros((n_a, m))

dWf = np.zeros((n_a, n_a + n_x))

dWi = np.zeros((n_a, n_a + n_x))

dWc = np.zeros((n_a, n_a + n_x))

dWo = np.zeros((n_a, n_a + n_x))

dbf = np.zeros((n_a, 1))

dbi = np.zeros((n_a, 1))

dbc = np.zeros((n_a, 1))

dbo = np.zeros((n_a, 1))

# loop back over the whole sequence

for t in reversed(range(T_x)):

# Compute all gradients using lstm_cell_backward

gradients = lstm_cell_backward(da[:,:,t]+da_prevt,dc_prevt,caches[t])

# Store or add the gradient to the parameters' previous step's gradient

dx[:, :, t] = gradients['dxt']

dWf = dWf+gradients['dWf']

dWi = dWi+gradients['dWi']

dWc = dWc+gradients['dWc']

dWo = dWo+gradients['dWo']

dbf = dbf+gradients['dbf']

dbi = dbi+gradients['dbi']

dbc = dbc+gradients['dbc']

dbo = dbo+gradients['dbo']

# Set the first activation's gradient to the backpropagated gradient da_prev.

da0 = gradients['da_prev']

### END CODE HERE ###

# Store the gradients in a python dictionary

gradients = {"dx": dx, "da0": da0, "dWf": dWf,"dbf": dbf, "dWi": dWi,"dbi": dbi,

"dWc": dWc,"dbc": dbc, "dWo": dWo,"dbo": dbo}

return gradients

In [20]:

np.random.seed(1)

x = np.random.randn(3,10,7)

a0 = np.random.randn(5,10)

Wf = np.random.randn(5, 5+3)

bf = np.random.randn(5,1)

Wi = np.random.randn(5, 5+3)

bi = np.random.randn(5,1)

Wo = np.random.randn(5, 5+3)

bo = np.random.randn(5,1)

Wc = np.random.randn(5, 5+3)

bc = np.random.randn(5,1)

parameters = {"Wf": Wf, "Wi": Wi, "Wo": Wo, "Wc": Wc, "Wy": Wy, "bf": bf, "bi": bi, "bo": bo, "bc": bc, "by": by}

a, y, c, caches = lstm_forward(x, a0, parameters)

da = np.random.randn(5, 10, 4)

gradients = lstm_backward(da, caches)

print("gradients[\"dx\"][1][2] =", gradients["dx"][1][2])

print("gradients[\"dx\"].shape =", gradients["dx"].shape)

print("gradients[\"da0\"][2][3] =", gradients["da0"][2][3])

print("gradients[\"da0\"].shape =", gradients["da0"].shape)

print("gradients[\"dWf\"][3][1] =", gradients["dWf"][3][1])

print("gradients[\"dWf\"].shape =", gradients["dWf"].shape)

print("gradients[\"dWi\"][1][2] =", gradients["dWi"][1][2])

print("gradients[\"dWi\"].shape =", gradients["dWi"].shape)

print("gradients[\"dWc\"][3][1] =", gradients["dWc"][3][1])

print("gradients[\"dWc\"].shape =", gradients["dWc"].shape)

print("gradients[\"dWo\"][1][2] =", gradients["dWo"][1][2])

print("gradients[\"dWo\"].shape =", gradients["dWo"].shape)

print("gradients[\"dbf\"][4] =", gradients["dbf"][4])

print("gradients[\"dbf\"].shape =", gradients["dbf"].shape)

print("gradients[\"dbi\"][4] =", gradients["dbi"][4])

print("gradients[\"dbi\"].shape =", gradients["dbi"].shape)

print("gradients[\"dbc\"][4] =", gradients["dbc"][4])

print("gradients[\"dbc\"].shape =", gradients["dbc"].shape)

print("gradients[\"dbo\"][4] =", gradients["dbo"][4])

print("gradients[\"dbo\"].shape =", gradients["dbo"].shape)

gradients["dx"][1][2] = [-0.00173313 0.08287442 -0.30545663 -0.43281115]

gradients["dx"].shape = (3, 10, 4)

gradients["da0"][2][3] = -0.09591150195400468

gradients["da0"].shape = (5, 10)

gradients["dWf"][3][1] = -0.06981985612744011

gradients["dWf"].shape = (5, 8)

gradients["dWi"][1][2] = 0.10237182024854777

gradients["dWi"].shape = (5, 8)

gradients["dWc"][3][1] = -0.06249837949274524

gradients["dWc"].shape = (5, 8)

gradients["dWo"][1][2] = 0.04843891314443014

gradients["dWo"].shape = (5, 8)

gradients["dbf"][4] = [-0.0565788]

gradients["dbf"].shape = (5, 1)

gradients["dbi"][4] = [-0.15399065]

gradients["dbi"].shape = (5, 1)

gradients["dbc"][4] = [-0.29691142]

gradients["dbc"].shape = (5, 1)

gradients["dbo"][4] = [-0.29798344]

gradients["dbo"].shape = (5, 1)

预期输出:

gradients[“dx”][1][2] = [-0.00173313 0.08287442 -0.30545663 -0.43281115]

gradients[“dx”].shape = (3, 10, 4)

gradients[“da0”][2][3] = -0.09591150195400468

gradients[“da0”].shape = (5, 10)

gradients[“dWf”][3][1] = -0.06981985612744011

gradients[“dWf”].shape = (5, 8)

gradients[“dWi”][1][2] = 0.10237182024854777

gradients[“dWi”].shape = (5, 8)

gradients[“dWc”][3][1] = -0.06249837949274524

gradients[“dWc”].shape = (5, 8)

gradients[“dWo”][1][2] = 0.04843891314443014

gradients[“dWo”].shape = (5, 8)

gradients[“dbf”][4] = [-0.0565788]

gradients[“dbf”].shape = (5, 1)

gradients[“dbi”][4] = [-0.15399065]

gradients[“dbi”].shape = (5, 1)

gradients[“dbc”][4] = [-0.29691142]

gradients[“dbc”].shape = (5, 1)

gradients[“dbo”][4] = [-0.29798344]

gradients[“dbo”].shape = (5, 1)

祝贺你完成此作业。你现在了解了循环神经网络的工作原理!

让我们继续下一个练习,在下一个练习中,你将学习使用RNN来构建字符级的语言模型。