| Component | Version |
|---|---|
| CentOS | 7.6.1810 |
| ZooKeeper | 3.4.6 |
| Hadoop | 2.9.1 |
| HBase | 1.2.0 |
| MySQL | 5.6.51 |
| Hive | 2.3.7 |
| Sqoop | 1.4.6 |
| Flume | 1.9.0 |
| Kafka | 2.8.1 |
| Scala | 2.12 |
| Davinci | 3.0.1 |
| Spark | 2.4.8 |
| Flink | 1.13.5 |

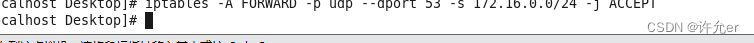

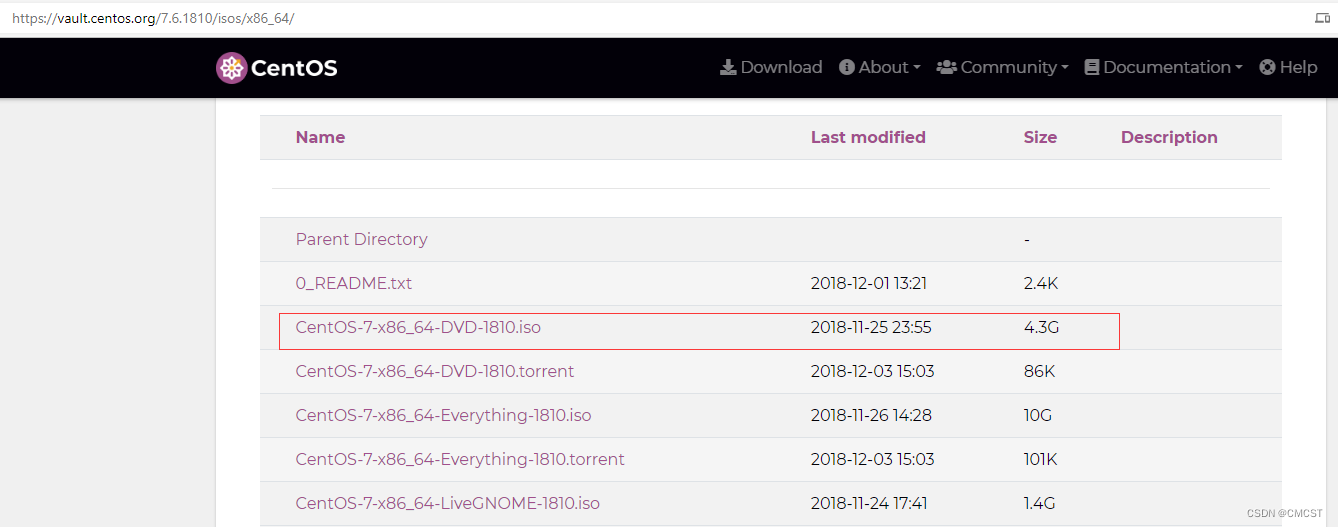

1. Download the CentOS 7 image

CentOS official website

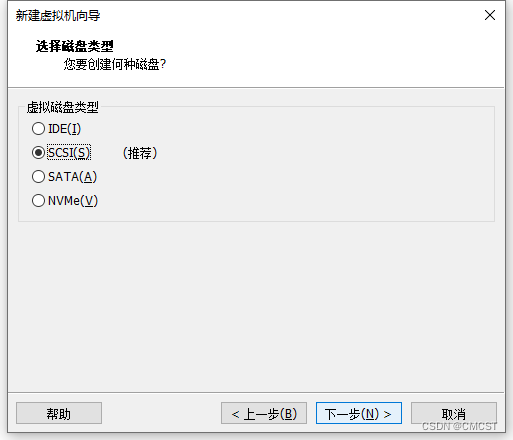

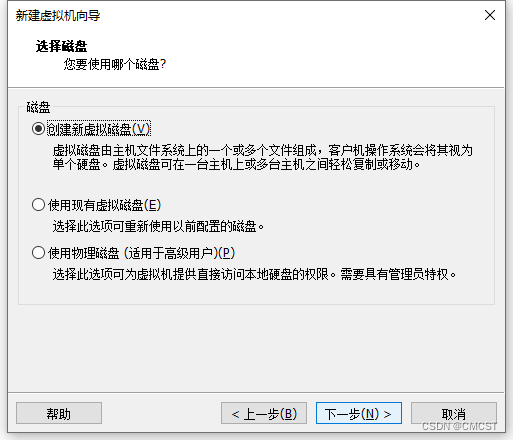

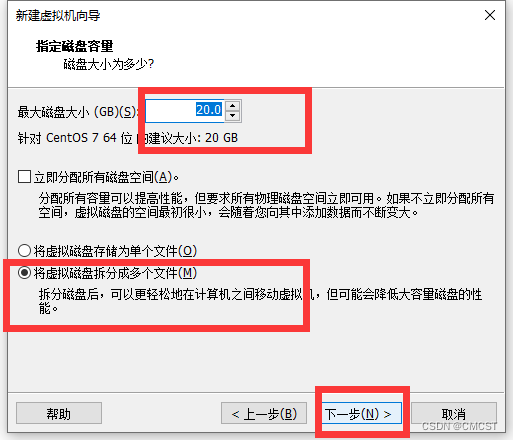

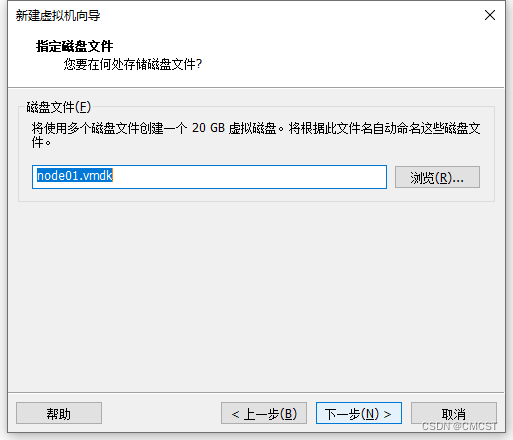

2. Install CentOS 7 in a virtual machine

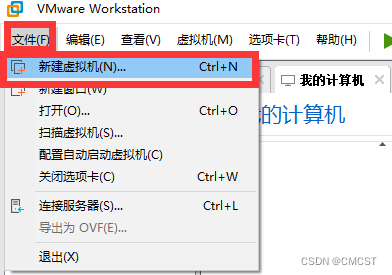

2.1 新建虚拟机

2.2.1 [依次选择]->自定义->下一步

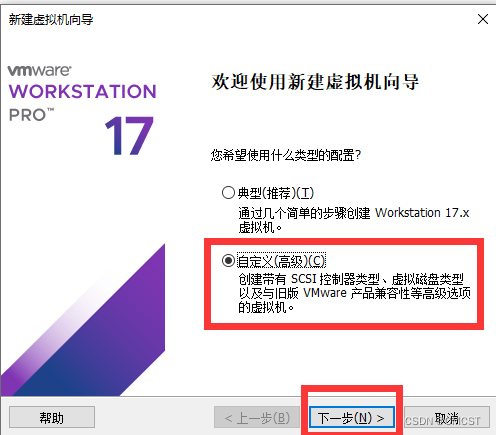

2.2.2 [选择]下一步

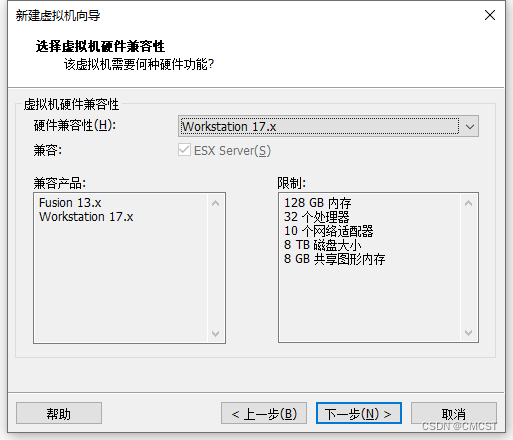

2.2.3 [依次选择]->稍后安装OS->下一步

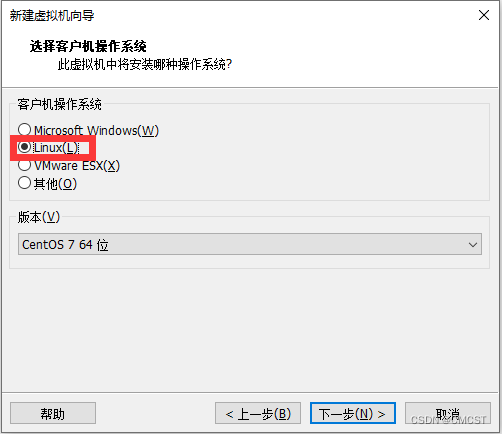

2.2.4 选择Linux系统

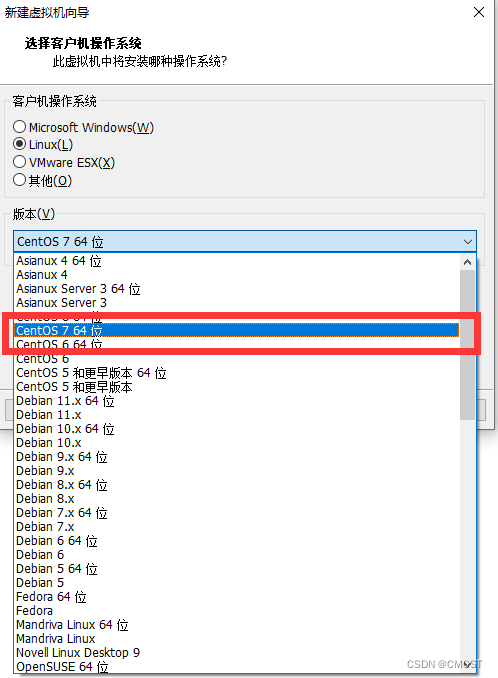

2.2.5 选择CentOS 7 64位

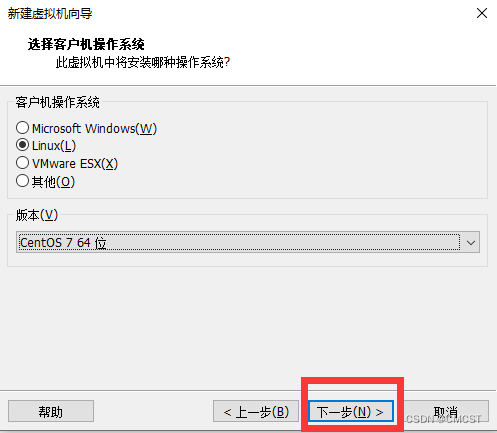

2.2.5 [选择]下一步

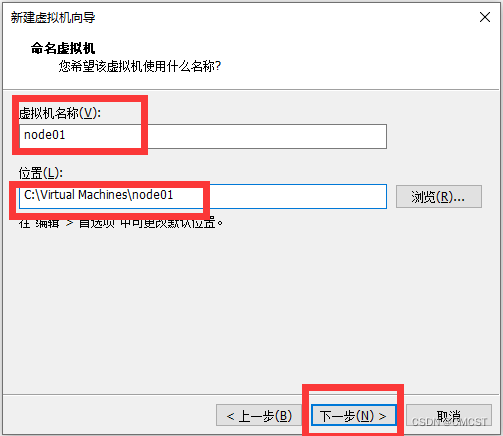

2.2.6 [自行修改] 虚拟机名称 及其 安装位置

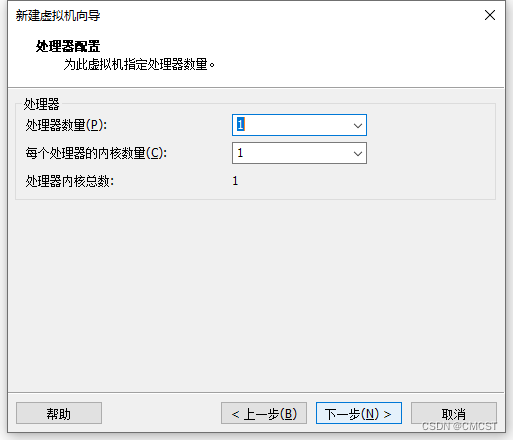

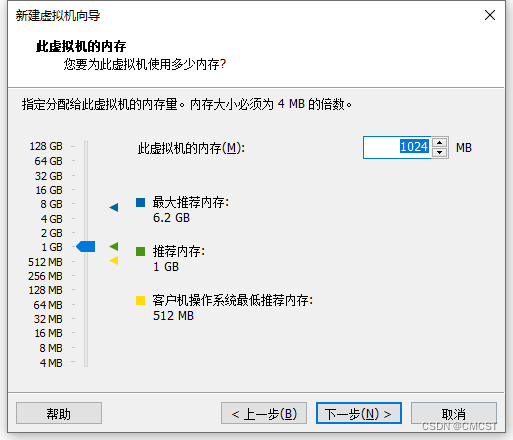

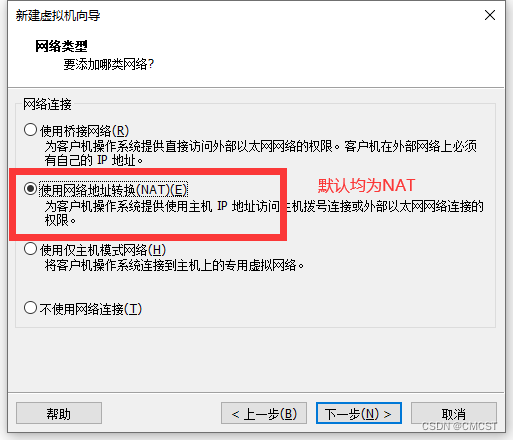

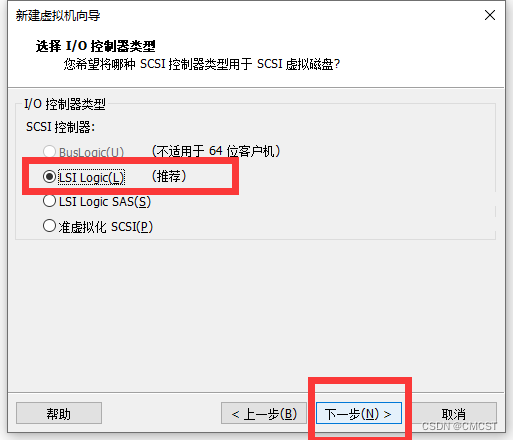

2.2.7 [均选择]下一步

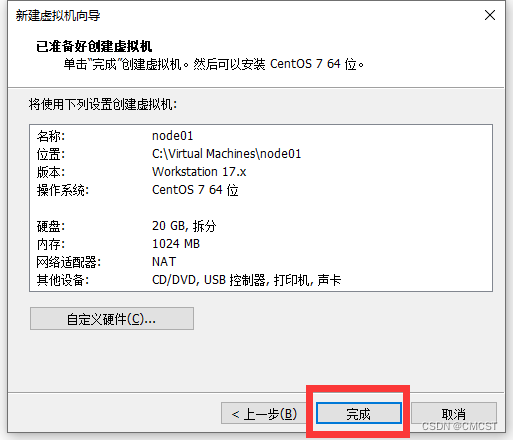

2.2.6 [选择]完成

2.2.7 [为虚拟机设置] 镜像文件

3. 设置静态IP

3.1 查看虚拟机网关地址

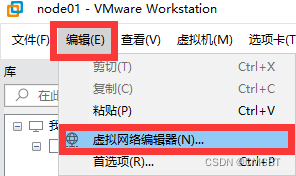

3.1.1 [依次选择] 编辑-> 虚拟网络编辑器

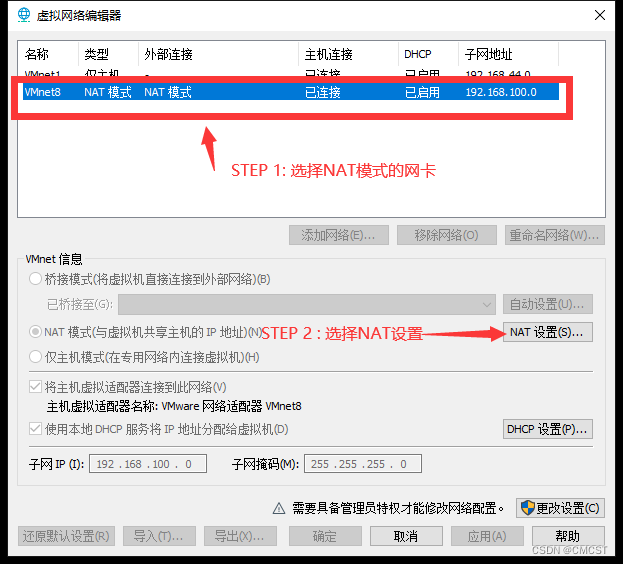

3.1.2

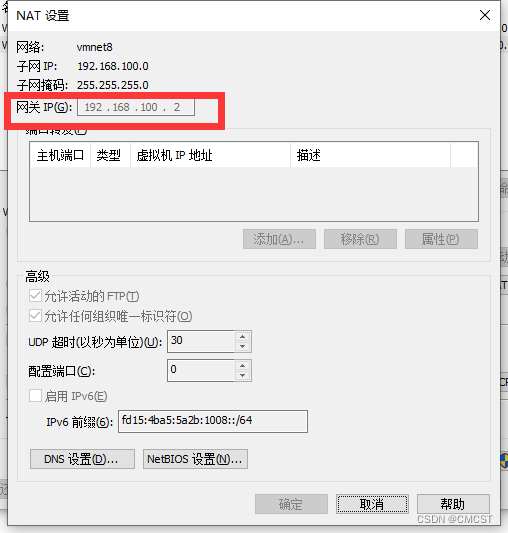

得到网关IP : 192.168.100.2

3.2 修改网卡文件

设置静态IP地址

vi /etc/sysconfig/network-scripts/ifcfg-ens33

初次打开时,文件内容如下:

YPE="Ethernet"

PROXY_METHOD="none"

BROWSER_ONLY="no"

BOOTPROTO="dhcp"

DEFROUTE="yes"

IPV4_FAILURE_FATAL="no"

IPV6INIT="yes"

IPV6_AUTOCONF="yes"

IPV6_DEFROUTE="yes"

IPV6_FAILURE_FATAL="no"

IPV6_ADDR_GEN_MODE="stable-privacy"

NAME="ens33"

UUID="21a7872b-2226-49cd-93be-7b8cfc439b67"

DEVICE="ens33"

ONBOOT="yes"

IPADDR=192.168.100.100

NETMASK=255.255.255.0

GATEWAY=192.168.100.2

DNS1=8.8.8.8

Make the following changes:

# Change BOOTPROTO from dhcp to static

BOOTPROTO="static"

# Add the following lines

IPADDR=192.168.100.100 # static IP address

NETMASK=255.255.255.0 # subnet mask

GATEWAY=192.168.100.2 # gateway IP

DNS1=8.8.8.8 # DNS server
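When several nodes need the same change, the edit can be scripted. The following is a minimal sketch and not part of the original procedure: `set_static_ip` is a hypothetical helper that rewrites only the keys listed above, and it assumes `DNS1` is the resolver key read by the CentOS 7 network scripts. Try it on a copy of the file first.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original guide): switch an ifcfg-style
# file from DHCP to a static address by rewriting the keys it manages.
set_static_ip() {
  local file="$1" ip="$2" netmask="$3" gateway="$4" dns="$5"
  local tmp
  tmp="$(mktemp)" || return 1
  # Keep every line except the keys that are about to be rewritten.
  grep -v -E '^(BOOTPROTO|IPADDR|NETMASK|GATEWAY|DNS1)=' "$file" > "$tmp"
  {
    echo 'BOOTPROTO="static"'
    echo "IPADDR=$ip"
    echo "NETMASK=$netmask"
    echo "GATEWAY=$gateway"
    echo "DNS1=$dns"
  } >> "$tmp"
  # Write back in place so the original file keeps its owner and mode.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

For example, `set_static_ip ./ifcfg-ens33.copy 192.168.100.101 255.255.255.0 192.168.100.2 8.8.8.8` would prepare the values used later for node02. Running it twice leaves a single copy of each key, because existing values are removed before the new ones are appended.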

3.3 Edit the DNS configuration file

vi /etc/resolv.conf

Add the following lines:

nameserver 114.114.114.114

nameserver 223.5.5.5

nameserver 8.8.8.8

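3.4 Restart the network service

service network restart

Run the following commands to check that the change worked

ping www.baidu.com

ifconfig

3.4.1 If you see "-bash: ifconfig: command not found"

Install the missing package with the following command

yum install -y net-tools.x86_64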

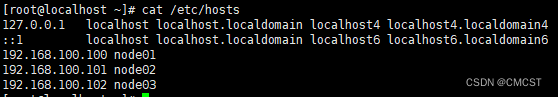

3.5 Edit /etc/hosts and append the following lines at the end

vi /etc/hosts

192.168.100.100 node01

192.168.100.101 node02

192.168.100.102 node03

The result looks like this:

4. Install the Java environment

4.1 Extract the archive

- The -C option sets the directory to extract into; here the archive is extracted to /usr/local/app/

[root@node01 ~]# tar -zxvf jdk-8u51-linux-x64.tar.gz -C /usr/local/app/

[root@node01 ~]# mv /usr/local/app/jdk1.8.0_51 /usr/local/app/jdk

4.2 Configure environment variables

[root@node01 ~]# vi ~/.bashrc

- Contents of ~/.bashrc:

# .bashrc

# User specific aliases and functions

alias rm='rm -i'

alias cp='cp -i'

alias mv='mv -i'

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

JAVA_HOME=/usr/local/app/jdk

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:/root/tools:$PATH

export JAVA_HOME CLASSPATH PATH

[root@node01 ~]# source ~/.bashrc
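After reloading the shell configuration it is worth confirming that the variable points at a real JDK. The following is a minimal sketch; `check_java_home` is a hypothetical helper, not something the JDK provides, and it only checks that `bin/java` exists and is executable.

```shell
#!/usr/bin/env bash
# Hypothetical check: succeed only if the given directory looks like a JDK,
# i.e. it contains an executable bin/java.
check_java_home() {
  local home="$1"
  if [ -z "$home" ]; then
    echo "JAVA_HOME is empty" >&2
    return 1
  fi
  if [ ! -x "$home/bin/java" ]; then
    echo "no executable found at $home/bin/java" >&2
    return 1
  fi
  echo "JAVA_HOME looks valid: $home"
}
```

Calling `check_java_home "$JAVA_HOME"` followed by `java -version` on node01 should confirm both the path and the installed version.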

5. Distributed Hadoop deployment

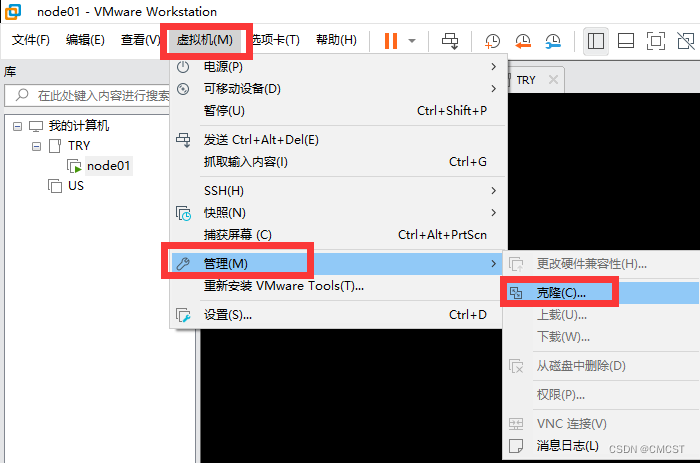

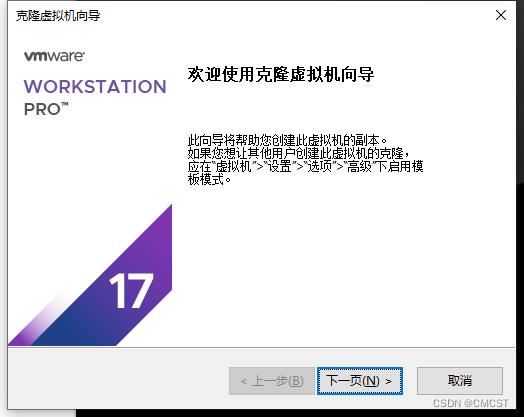

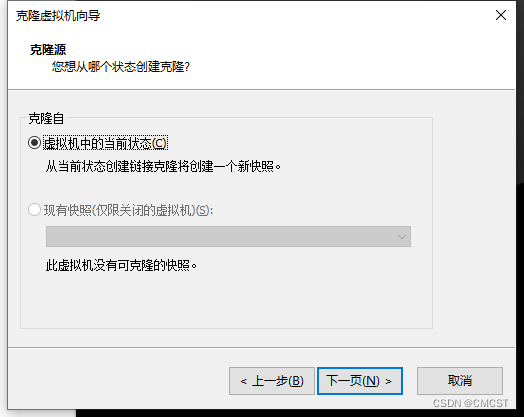

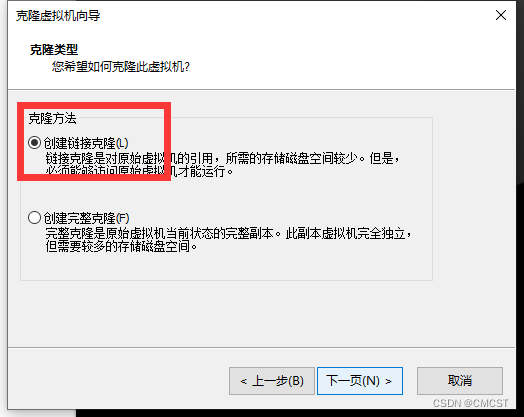

5.1 克隆虚拟机 [以node02为例,node03同样][均是克隆node01]

5.1.1

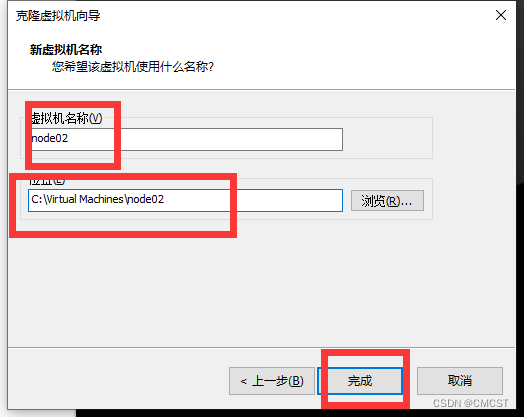

5.1.2 [自行修改] 虚拟机名称 及其 安装位置

5.2 修改node02的IP

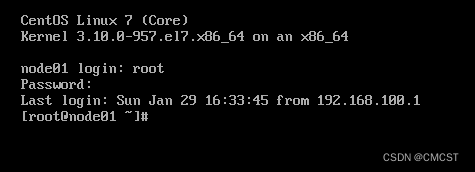

node02开机后如下图所示

5.2.1 修改node02网卡文件

vi /etc/sysconfig/network-scripts/ifcfg-ens33

将node02的IP设置为 : 192.168.100.101

5.2.2 重启node02

reboot

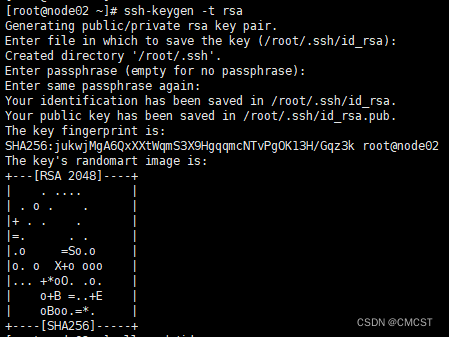

5.3 配置免密登录[以node02为例]

5.3.1 创建生成密钥,这里连续三个回车默认即可

ssh-keygen -t rsa

5.3.2 公钥文件id_rsa.pub中的内容复制到相同目录下的authorized_keys文件

cp .ssh/id_rsa.pub .ssh/authorized_keys

5.3.3 使用ssh命令登录node02

- 第一次登录需要输入yes进行确认,

- 第二次以后登录则不需要,此时表明设置成功

5.3.4 三个节点互相免密登录

node01、node02、node03都进行5.3.3配置免密登录后

- 在node02中,将node02的公钥复制到node01的公钥文件中

[root@node02 ~]cat .ssh/authorized_keys | ssh root@node01 'cat >> ~/.ssh/authorized_keys'

- 在node03中,将node03的公钥复制到node01的公钥文件中

[root@node03 ~]cat .ssh/authorized_keys | ssh root@node01 'cat >> ~/.ssh/authorized_keys'

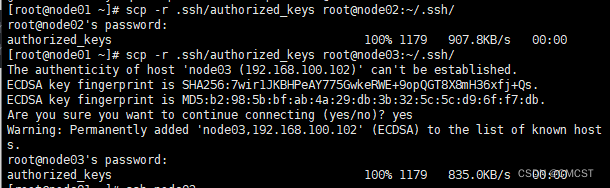

- 在node01中,将node01的公钥发送至node02和node03

scp -r .ssh/authorized_keys root@node02:~/.ssh/

scp -r .ssh/authorized_keys root@node03:~/.ssh/
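The key exchange above appends with `cat >>`, so repeating a step adds duplicate lines. The following is a minimal sketch of a merge that can be run repeatedly; `merge_authorized_keys` is a hypothetical helper, and the file names in the usage note are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append each public key file to an authorized_keys file,
# skipping keys that are already present, so repeated runs stay idempotent.
merge_authorized_keys() {
  local dest="$1"
  shift
  touch "$dest"
  local src key
  for src in "$@"; do
    # The "|| [ -n ... ]" part also handles a last line without a newline.
    while IFS= read -r key || [ -n "$key" ]; do
      [ -z "$key" ] && continue
      # -x: match the whole line, -F: treat the key as a fixed string.
      grep -qxF -- "$key" "$dest" || printf '%s\n' "$key" >> "$dest"
    done < "$src"
  done
  # sshd may ignore an authorized_keys file with loose permissions.
  chmod 600 "$dest"
}
```

For example, after collecting the three public keys on node01, `merge_authorized_keys ~/.ssh/authorized_keys node01.pub node02.pub node03.pub` builds the merged file that is then copied to the other nodes. OpenSSH's `ssh-copy-id` performs a similar append against a remote host.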

5.4 Cluster plan

| Service | node01 | node02 | node03 |
|---|---|---|---|
| NameNode | √ | √ | |
| DataNode | √ | √ | √ |
| ResourceManager | √ | √ | |
| JournalNode | √ | √ | √ |
| NodeManager | √ | √ | √ |
| ZooKeeper | √ | √ | √ |
| HMaster | √ | √ | |
| HRegionServer | √ | √ | √ |
| Hive | √ | | |
| Sqoop | √ | | |
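The plan can also be kept in a small lookup so that later checks refer to one source of truth. The following is a minimal sketch; `planned_services` is a hypothetical function, and the names it prints are labels taken from the plan, not necessarily the process names that `jps` reports.

```shell
#!/usr/bin/env bash
# Hypothetical lookup that encodes the planning table: print the services
# planned for a node, one per line.
planned_services() {
  case "$1" in
    node01)
      printf '%s\n' NameNode DataNode ResourceManager JournalNode \
        NodeManager ZooKeeper HMaster HRegionServer Hive Sqoop ;;
    node02)
      printf '%s\n' NameNode DataNode ResourceManager JournalNode \
        NodeManager ZooKeeper HMaster HRegionServer ;;
    node03)
      printf '%s\n' DataNode JournalNode NodeManager ZooKeeper HRegionServer ;;
    *)
      echo "unknown node: $1" >&2
      return 1 ;;
  esac
}
```

For example, `planned_services node03` lists the five services that node03 is expected to run.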

5.4.1 Software plan

5.4.2 User plan

5.4.3 Directory plan

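5.5 Cluster configuration

| File | Purpose |
|---|---|
| $HADOOP_HOME/etc/hadoop/hadoop-env.sh | Environment variables for the Hadoop scripts (JAVA_HOME, HADOOP_HOME) |
| $HADOOP_HOME/etc/hadoop/core-site.xml | Common HDFS properties |
| $HADOOP_HOME/etc/hadoop/hdfs-site.xml | HDFS-specific properties |
| $HADOOP_HOME/etc/hadoop/slaves | Host names of the DataNode nodes (per the cluster plan) |

5.5.1 Extract the archive

- The -C option sets the directory to extract into; here the archive is extracted to /usr/local/app/

[root@node01 ~]# tar -zxvf hadoop-2.9.1.tar.gz -C /usr/local/app/

[root@node01 ~]# mv /usr/local/app/hadoop-2.9.1 /usr/local/app/hadoop

5.5.2 Configure environment variables

[root@node01 ~]# vi ~/.bashrc

- Contents of ~/.bashrc: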

# .bashrc

# User specific aliases and functions

alias rm='rm -i'

alias cp='cp -i'

alias mv='mv -i'

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

JAVA_HOME=/usr/local/app/jdk

HADOOP_HOME=/usr/local/app/hadoop

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:/root/tools:$PATH

export JAVA_HOME CLASSPATH PATH HADOOP_HOME

[root@node01 ~]# source ~/.bashrc

5.5.3 $HADOOP_HOME/etc/hadoop/hadoop-env.sh

#

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# Set Hadoop-specific environment variables here.

##

## THIS FILE ACTS AS THE MASTER FILE FOR ALL HADOOP PROJECTS.

## SETTINGS HERE WILL BE READ BY ALL HADOOP COMMANDS. THEREFORE,

## ONE CAN USE THIS FILE TO SET YARN, HDFS, AND MAPREDUCE

## CONFIGURATION OPTIONS INSTEAD OF xxx-env.sh.

##

## Precedence rules:

##

## {yarn-env.sh|hdfs-env.sh} > hadoop-env.sh > hard-coded defaults

##

## {YARN_xyz|HDFS_xyz} > HADOOP_xyz > hard-coded defaults

##

# Many of the options here are built from the perspective that users

# may want to provide OVERWRITING values on the command line.

# For example:

#

# JAVA_HOME=/usr/java/testing hdfs dfs -ls

#

# Therefore, the vast majority (BUT NOT ALL!) of these defaults

# are configured for substitution and not append. If append

# is preferable, modify this file accordingly.

###

# Generic settings for HADOOP

###

# Technically, the only required environment variable is JAVA_HOME.

# All others are optional. However, the defaults are probably not

# preferred. Many sites configure these options outside of Hadoop,

# such as in /etc/profile.d

# The java implementation to use. By default, this environment

# variable is REQUIRED on ALL platforms except OS X!

export JAVA_HOME=/usr/local/app/jdk

# Location of Hadoop. By default, Hadoop will attempt to determine

# this location based upon its execution path.

export HADOOP_HOME=/usr/local/app/hadoop

# Location of Hadoop's configuration information. i.e., where this

# file is living. If this is not defined, Hadoop will attempt to

# locate it based upon its execution path.

#

# NOTE: It is recommend that this variable not be set here but in

# /etc/profile.d or equivalent. Some options (such as

# --config) may react strangely otherwise.

#

# export HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop

# The maximum amount of heap to use (Java -Xmx). If no unit

# is provided, it will be converted to MB. Daemons will

# prefer any Xmx setting in their respective _OPT variable.

# There is no default; the JVM will autoscale based upon machine

# memory size.

# export HADOOP_HEAPSIZE_MAX=

# The minimum amount of heap to use (Java -Xms). If no unit

# is provided, it will be converted to MB. Daemons will

# prefer any Xms setting in their respective _OPT variable.

# There is no default; the JVM will autoscale based upon machine

# memory size.

# export HADOOP_HEAPSIZE_MIN=

# Enable extra debugging of Hadoop's JAAS binding, used to set up

# Kerberos security.

# export HADOOP_JAAS_DEBUG=true

# Extra Java runtime options for all Hadoop commands. We don't support

# IPv6 yet/still, so by default the preference is set to IPv4.

# export HADOOP_OPTS="-Djava.net.preferIPv4Stack=true"

# For Kerberos debugging, an extended option set logs more information

# export HADOOP_OPTS="-Djava.net.preferIPv4Stack=true -Dsun.security.krb5.debug=true -Dsun.security.spnego.debug"

# Some parts of the shell code may do special things dependent upon

# the operating system. We have to set this here. See the next

# section as to why....

export HADOOP_OS_TYPE=${HADOOP_OS_TYPE:-$(uname -s)}

# Extra Java runtime options for some Hadoop commands

# and clients (i.e., hdfs dfs -blah). These get appended to HADOOP_OPTS for

# such commands. In most cases, # this should be left empty and

# let users supply it on the command line.

# export HADOOP_CLIENT_OPTS=""

#

# A note about classpaths.

#

# By default, Apache Hadoop overrides Java's CLASSPATH

# environment variable. It is configured such

# that it starts out blank with new entries added after passing

# a series of checks (file/dir exists, not already listed aka

# de-deduplication). During de-deduplication, wildcards and/or

# directories are *NOT* expanded to keep it simple. Therefore,

# if the computed classpath has two specific mentions of

# awesome-methods-1.0.jar, only the first one added will be seen.

# If two directories are in the classpath that both contain

# awesome-methods-1.0.jar, then Java will pick up both versions.

# An additional, custom CLASSPATH. Site-wide configs should be

# handled via the shellprofile functionality, utilizing the

# hadoop_add_classpath function for greater control and much

# harder for apps/end-users to accidentally override.

# Similarly, end users should utilize ${HOME}/.hadooprc .

# This variable should ideally only be used as a short-cut,

# interactive way for temporary additions on the command line.

# export HADOOP_CLASSPATH="/some/cool/path/on/your/machine"

# Should HADOOP_CLASSPATH be first in the official CLASSPATH?

# export HADOOP_USER_CLASSPATH_FIRST="yes"

# If HADOOP_USE_CLIENT_CLASSLOADER is set, the classpath along

# with the main jar are handled by a separate isolated

# client classloader when 'hadoop jar', 'yarn jar', or 'mapred job'

# is utilized. If it is set, HADOOP_CLASSPATH and

# HADOOP_USER_CLASSPATH_FIRST are ignored.

# export HADOOP_USE_CLIENT_CLASSLOADER=true

# HADOOP_CLIENT_CLASSLOADER_SYSTEM_CLASSES overrides the default definition of

# system classes for the client classloader when HADOOP_USE_CLIENT_CLASSLOADER

# is enabled. Names ending in '.' (period) are treated as package names, and

# names starting with a '-' are treated as negative matches. For example,

# export HADOOP_CLIENT_CLASSLOADER_SYSTEM_CLASSES="-org.apache.hadoop.UserClass,java.,javax.,org.apache.hadoop."

# Enable optional, bundled Hadoop features

# This is a comma delimited list. It may NOT be overridden via .hadooprc

# Entries may be added/removed as needed.

# export HADOOP_OPTIONAL_TOOLS="hadoop-aliyun,hadoop-openstack,hadoop-azure,hadoop-azure-datalake,hadoop-aws,hadoop-kafka"

###

# Options for remote shell connectivity

###

# There are some optional components of hadoop that allow for

# command and control of remote hosts. For example,

# start-dfs.sh will attempt to bring up all NNs, DNS, etc.

# Options to pass to SSH when one of the "log into a host and

# start/stop daemons" scripts is executed

# export HADOOP_SSH_OPTS="-o BatchMode=yes -o StrictHostKeyChecking=no -o ConnectTimeout=10s"

# The built-in ssh handler will limit itself to 10 simultaneous connections.

# For pdsh users, this sets the fanout size ( -f )

# Change this to increase/decrease as necessary.

# export HADOOP_SSH_PARALLEL=10

# Filename which contains all of the hosts for any remote execution

# helper scripts # such as workers.sh, start-dfs.sh, etc.

# export HADOOP_WORKERS="${HADOOP_CONF_DIR}/workers"

###

# Options for all daemons

###

#

#

# Many options may also be specified as Java properties. It is

# very common, and in many cases, desirable, to hard-set these

# in daemon _OPTS variables. Where applicable, the appropriate

# Java property is also identified. Note that many are re-used

# or set differently in certain contexts (e.g., secure vs

# non-secure)

#

# Where (primarily) daemon log files are stored.

# ${HADOOP_HOME}/logs by default.

# Java property: hadoop.log.dir

# export HADOOP_LOG_DIR=${HADOOP_HOME}/logs

# A string representing this instance of hadoop. $USER by default.

# This is used in writing log and pid files, so keep that in mind!

# Java property: hadoop.id.str

# export HADOOP_IDENT_STRING=$USER

# How many seconds to pause after stopping a daemon

# export HADOOP_STOP_TIMEOUT=5

# Where pid files are stored. /tmp by default.

# export HADOOP_PID_DIR=/tmp

# Default log4j setting for interactive commands

# Java property: hadoop.root.logger

# export HADOOP_ROOT_LOGGER=INFO,console

# Default log4j setting for daemons spawned explicitly by

# --daemon option of hadoop, hdfs, mapred and yarn command.

# Java property: hadoop.root.logger

# export HADOOP_DAEMON_ROOT_LOGGER=INFO,RFA

# Default log level and output location for security-related messages.

# You will almost certainly want to change this on a per-daemon basis via

# the Java property (i.e., -Dhadoop.security.logger=foo). (Note that the

# defaults for the NN and 2NN override this by default.)

# Java property: hadoop.security.logger

# export HADOOP_SECURITY_LOGGER=INFO,NullAppender

# Default process priority level

# Note that sub-processes will also run at this level!

# export HADOOP_NICENESS=0

# Default name for the service level authorization file

# Java property: hadoop.policy.file

# export HADOOP_POLICYFILE="hadoop-policy.xml"

#

# NOTE: this is not used by default! <-----

# You can define variables right here and then re-use them later on.

# For example, it is common to use the same garbage collection settings

# for all the daemons. So one could define:

#

# export HADOOP_GC_SETTINGS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+PrintGCDateStamps"

#

# .. and then use it as per the b option under the namenode.

###

# Secure/privileged execution

###

#

# Out of the box, Hadoop uses jsvc from Apache Commons to launch daemons

# on privileged ports. This functionality can be replaced by providing

# custom functions. See hadoop-functions.sh for more information.

#

# The jsvc implementation to use. Jsvc is required to run secure datanodes

# that bind to privileged ports to provide authentication of data transfer

# protocol. Jsvc is not required if SASL is configured for authentication of

# data transfer protocol using non-privileged ports.

# export JSVC_HOME=/usr/bin

#

# This directory contains pids for secure and privileged processes.

#export HADOOP_SECURE_PID_DIR=${HADOOP_PID_DIR}

#

# This directory contains the logs for secure and privileged processes.

# Java property: hadoop.log.dir

# export HADOOP_SECURE_LOG=${HADOOP_LOG_DIR}

#

# When running a secure daemon, the default value of HADOOP_IDENT_STRING

# ends up being a bit bogus. Therefore, by default, the code will

# replace HADOOP_IDENT_STRING with HADOOP_xx_SECURE_USER. If one wants

# to keep HADOOP_IDENT_STRING untouched, then uncomment this line.

# export HADOOP_SECURE_IDENT_PRESERVE="true"

###

# NameNode specific parameters

###

# Default log level and output location for file system related change

# messages. For non-namenode daemons, the Java property must be set in

# the appropriate _OPTS if one wants something other than INFO,NullAppender

# Java property: hdfs.audit.logger

# export HDFS_AUDIT_LOGGER=INFO,NullAppender

# Specify the JVM options to be used when starting the NameNode.

# These options will be appended to the options specified as HADOOP_OPTS

# and therefore may override any similar flags set in HADOOP_OPTS

#

# a) Set JMX options

# export HDFS_NAMENODE_OPTS="-Dcom.sun.management.jmxremote=true -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.port=1026"

#

# b) Set garbage collection logs

# export HDFS_NAMENODE_OPTS="${HADOOP_GC_SETTINGS} -Xloggc:${HADOOP_LOG_DIR}/gc-rm.log-$(date +'%Y%m%d%H%M')"

#

# c) ... or set them directly

# export HDFS_NAMENODE_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+PrintGCDateStamps -Xloggc:${HADOOP_LOG_DIR}/gc-rm.log-$(date +'%Y%m%d%H%M')"

# this is the default:

# export HDFS_NAMENODE_OPTS="-Dhadoop.security.logger=INFO,RFAS"

###

# SecondaryNameNode specific parameters

###

# Specify the JVM options to be used when starting the SecondaryNameNode.

# These options will be appended to the options specified as HADOOP_OPTS

# and therefore may override any similar flags set in HADOOP_OPTS

#

# This is the default:

# export HDFS_SECONDARYNAMENODE_OPTS="-Dhadoop.security.logger=INFO,RFAS"

###

# DataNode specific parameters

###

# Specify the JVM options to be used when starting the DataNode.

# These options will be appended to the options specified as HADOOP_OPTS

# and therefore may override any similar flags set in HADOOP_OPTS

#

# This is the default:

# export HDFS_DATANODE_OPTS="-Dhadoop.security.logger=ERROR,RFAS"

# On secure datanodes, user to run the datanode as after dropping privileges.

# This **MUST** be uncommented to enable secure HDFS if using privileged ports

# to provide authentication of data transfer protocol. This **MUST NOT** be

# defined if SASL is configured for authentication of data transfer protocol

# using non-privileged ports.

# This will replace the hadoop.id.str Java property in secure mode.

# export HDFS_DATANODE_SECURE_USER=hdfs

# Supplemental options for secure datanodes

# By default, Hadoop uses jsvc which needs to know to launch a

# server jvm.

# export HDFS_DATANODE_SECURE_EXTRA_OPTS="-jvm server"

###

# NFS3 Gateway specific parameters

###

# Specify the JVM options to be used when starting the NFS3 Gateway.

# These options will be appended to the options specified as HADOOP_OPTS

# and therefore may override any similar flags set in HADOOP_OPTS

#

# export HDFS_NFS3_OPTS=""

# Specify the JVM options to be used when starting the Hadoop portmapper.

# These options will be appended to the options specified as HADOOP_OPTS

# and therefore may override any similar flags set in HADOOP_OPTS

#

# export HDFS_PORTMAP_OPTS="-Xmx512m"

# Supplemental options for priviliged gateways

# By default, Hadoop uses jsvc which needs to know to launch a

# server jvm.

# export HDFS_NFS3_SECURE_EXTRA_OPTS="-jvm server"

# On privileged gateways, user to run the gateway as after dropping privileges

# This will replace the hadoop.id.str Java property in secure mode.

# export HDFS_NFS3_SECURE_USER=nfsserver

###

# ZKFailoverController specific parameters

###

# Specify the JVM options to be used when starting the ZKFailoverController.

# These options will be appended to the options specified as HADOOP_OPTS

# and therefore may override any similar flags set in HADOOP_OPTS

#

# export HDFS_ZKFC_OPTS=""

###

# QuorumJournalNode specific parameters

###

# Specify the JVM options to be used when starting the QuorumJournalNode.

# These options will be appended to the options specified as HADOOP_OPTS

# and therefore may override any similar flags set in HADOOP_OPTS

#

# export HDFS_JOURNALNODE_OPTS=""

###

# HDFS Balancer specific parameters

###

# Specify the JVM options to be used when starting the HDFS Balancer.

# These options will be appended to the options specified as HADOOP_OPTS

# and therefore may override any similar flags set in HADOOP_OPTS

#

# export HDFS_BALANCER_OPTS=""

###

# HDFS Mover specific parameters

###

# Specify the JVM options to be used when starting the HDFS Mover.

# These options will be appended to the options specified as HADOOP_OPTS

# and therefore may override any similar flags set in HADOOP_OPTS

#

# export HDFS_MOVER_OPTS=""

###

# Router-based HDFS Federation specific parameters

# Specify the JVM options to be used when starting the RBF Routers.

# These options will be appended to the options specified as HADOOP_OPTS

# and therefore may override any similar flags set in HADOOP_OPTS

#

# export HDFS_DFSROUTER_OPTS=""

###

# HDFS StorageContainerManager specific parameters

###

# Specify the JVM options to be used when starting the HDFS Storage Container Manager.

# These options will be appended to the options specified as HADOOP_OPTS

# and therefore may override any similar flags set in HADOOP_OPTS

#

# export HDFS_STORAGECONTAINERMANAGER_OPTS=""

###

# Advanced Users Only!

###

#

# When building Hadoop, one can add the class paths to the commands

# via this special env var:

# export HADOOP_ENABLE_BUILD_PATHS="true"

#

# To prevent accidents, shell commands be (superficially) locked

# to only allow certain users to execute certain subcommands.

# It uses the format of (command)_(subcommand)_USER.

#

# For example, to limit who can execute the namenode command,

# export HDFS_NAMENODE_USER=hdfs

5.5.4 $HADOOP_HOME/etc/hadoop/core-site.xml

[root@node01 ~]# vi $HADOOP_HOME/etc/hadoop/core-site.xml

- Contents of $HADOOP_HOME/etc/hadoop/core-site.xml:

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<!-- Default HDFS path -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://cluster</value>

</property>

<!-- Hadoop temporary directory; separate multiple directories with commas -->

<property>

<name>hadoop.tmp.dir</name>

<value>/root/data/hadoop/tmp</value>

</property>

<property>

<!-- ZooKeeper quorum that manages HDFS -->

<name>ha.zookeeper.quorum</name>

<value>node01:2181,node02:2181,node03:2181</value>

</property>

</configuration>

5.5.5 $HADOOP_HOME/etc/hadoop/hdfs-site.xml

[root@node01 ~]# vi $HADOOP_HOME/etc/hadoop/hdfs-site.xml

- Contents of $HADOOP_HOME/etc/hadoop/hdfs-site.xml:

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<!-- Number of block replicas: 3 -->

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<!-- Permission checking is set to false (disabled) -->

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

<!-- Nameservice ID; it must match the value of fs.defaultFS. With NameNode HA there are two NameNodes, and "cluster" is the single entry point exposed to clients -->

<property>

<name>dfs.nameservices</name>

<value>cluster</value>

</property>

<!-- NameNodes that belong to the nameservice "cluster". These are logical names; any names work as long as they are unique -->

<property>

<name>dfs.ha.namenodes.cluster</name>

<value>nn1,nn2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.cluster.nn1</name>

<value>node01:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.cluster.nn1</name>

<value>node01:50070</value>

</property>

<property>

<name>dfs.namenode.rpc-address.cluster.nn2</name>

<value>node02:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.cluster.nn2</name>

<value>node02:50070</value>

</property>

<!-- Enable automatic failover -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- Where the NameNode metadata (edit log) is stored on the JournalNodes -->

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://node01:8485;node02:8485;node03:8485/cluster</value>

</property>

<!-- Implementation class that handles failover for the nameservice "cluster" -->

<property>

<name>dfs.client.failover.proxy.provider.cluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!-- Local disk directory where each JournalNode stores its data -->

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/root/data/journaldata/jn</value>

</property>

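<!-- Fencing method. shell(/bin/true) always reports success and does not kill the old active NameNode; the sshfence method is the one that connects over SSH and kills the process -->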

<property>

<name>dfs.ha.fencing.methods</name>

<value>shell(/bin/true)</value>

</property>

<!-- Private key for passwordless SSH, needed when fencing logs in over SSH to kill the process -->

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_rsa</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.connect-timeout</name>

<value>10000</value>

</property>

<property>

<name>dfs.namenode.handler.count</name>

<value>100</value>

</property>

</configuration>
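Because `fs.defaultFS` in core-site.xml has to agree with `dfs.nameservices` in hdfs-site.xml, a quick consistency check can catch a mismatch before the cluster is started. The following is a minimal sketch; `get_prop` and `check_nameservice` are hypothetical helpers, and the reader is deliberately naive: it assumes each `<name>` and `<value>` element sits on its own line, as in the listings here, and it is not a real XML parser.

```shell
#!/usr/bin/env bash
# Naive reader for Hadoop *-site.xml files. It assumes <name> and <value>
# each sit on their own line; it is not an XML parser.
get_prop() {
  local file="$1" name="$2"
  awk -v n="$name" '
    index($0, "<name>" n "</name>") { found = 1; next }
    found && /<value>/ {
      sub(/^.*<value>/, ""); sub(/<\/value>.*$/, "")
      print; exit
    }
  ' "$file"
}

# Succeed when fs.defaultFS equals hdfs://<dfs.nameservices>.
check_nameservice() {
  local core="$1" hdfs="$2" fs ns
  fs="$(get_prop "$core" fs.defaultFS)"
  ns="$(get_prop "$hdfs" dfs.nameservices)"
  [ -n "$ns" ] && [ "$fs" = "hdfs://$ns" ]
}
```

For example, `check_nameservice $HADOOP_HOME/etc/hadoop/core-site.xml $HADOOP_HOME/etc/hadoop/hdfs-site.xml && echo consistent`. On a node where Hadoop is installed, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop itself resolves.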

5.5.6 $HADOOP_HOME/etc/hadoop/slaves

[root@node01 ~]# vi $HADOOP_HOME/etc/hadoop/slaves

- Contents of $HADOOP_HOME/etc/hadoop/slaves:

node01

node02

node03

5.5.7 $HADOOP_HOME/etc/hadoop/yarn-site.xml

[root@node01 ~]# vi $HADOOP_HOME/etc/hadoop/yarn-site.xml

- Contents of $HADOOP_HOME/etc/hadoop/yarn-site.xml:

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<property>

<name>yarn.resourcemanager.connect.retry-interval.ms</name>

<value>2000</value>

</property>

<!-- Enable high availability -->

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<!-- Enable automatic failover -->

<property>

<name>yarn.resourcemanager.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- Use the ResourceManager's embedded elector to choose the active RM -->

<property>

<name>yarn.resourcemanager.ha.automatic-failover.embedded</name>

<value>true</value>

</property>

<!-- Name the YARN cluster yarn-rm-cluster -->

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>yarn-rm-cluster</value>

</property>

<!-- ResourceManager HA IDs: rm1, rm2 -->

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>node01</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>node02</value>

</property>

<!-- Enable ResourceManager recovery -->

<property>

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

<!-- ZooKeeper addresses used for state storage and leader election -->

<property>

<name>hadoop.zk.address</name>

<value>node01:2181,node02:2181,node03:2181</value>

</property>

<!-- rm1 port -->

<property>

<name>yarn.resourcemanager.address.rm1</name>

<value>node01:8032</value>

</property>

<!-- rm1 scheduler port -->

<property>

<name>yarn.resourcemanager.scheduler.address.rm1</name>

<value>node01:8034</value>

</property>

<!-- rm1 webapp port -->

<property>

<name>yarn.resourcemanager.webapp.address.rm1</name>

<value>node01:8088</value>

</property>

<!-- rm2 port -->

<property>

<name>yarn.resourcemanager.address.rm2</name>

<value>node02:8032</value>

</property>

<!-- rm2 scheduler port -->

<property>

<name>yarn.resourcemanager.scheduler.address.rm2</name>

<value>node02:8034</value>

</property>

<!-- rm2 webapp port -->

<property>

<name>yarn.resourcemanager.webapp.address.rm2</name>

<value>node02:8088</value>

</property>

<!-- Shuffle service that must be configured to run MapReduce -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>4</value>

</property>

</configuration>

5.5.8 $HADOOP_HOME/etc/hadoop/mapred-site.xml

[root@node01 ~]# vi $HADOOP_HOME/etc/hadoop/mapred-site.xml

- Contents of $HADOOP_HOME/etc/hadoop/mapred-site.xml:

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<!-- Run MapReduce on YARN -->

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

5.6 Start HDFS

5.6.1 Copy the Hadoop installation directory to all nodes

Contents of the deploy.sh script

[root@node01 ~]# deploy.sh /usr/local/app/hadoop /usr/local/app/ slave
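The body of deploy.sh is not reproduced in this guide. As a stand-in, the following is a minimal sketch of what such a copy step could look like; it is an assumption, not the author's script. `deploy_dir` is a hypothetical function that takes explicit host names instead of a group tag such as `slave`, copies as root as the rest of this guide does, and prints the commands instead of running them when `DRY_RUN=1`.

```shell
#!/usr/bin/env bash
# Hypothetical stand-in for a deploy script (the original deploy.sh is not
# shown): copy a directory to the given parent path on each listed host.
# With DRY_RUN=1 the scp commands are printed instead of executed.
deploy_dir() {
  local src="$1" dest="$2"
  shift 2
  local host
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "scp -r $src root@$host:$dest"
    else
      scp -r "$src" "root@$host:$dest" || return 1
    fi
  done
}
```

For example, `DRY_RUN=1 deploy_dir /usr/local/app/hadoop /usr/local/app/ node02 node03` shows the two copies that would be made; dropping `DRY_RUN=1` performs them over the passwordless SSH set up in 5.3.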

5.6.2 Start the ZooKeeper cluster

[root@node01 ~]# runRemote.sh "/usr/local/app/zookeeper/bin/zkServer.sh start" all

# [Optional] Check the status of each node

[root@node01 ~]# runRemote.sh "/usr/local/app/zookeeper/bin/zkServer.sh status" all

5.6.3 Start the JournalNode cluster

[root@node01 ~]# runRemote.sh "/usr/local/app/hadoop/sbin/hadoop-daemon.sh start journalnode" all

5.6.4 Format the primary NameNode

- Format on node01. The last command runs the NameNode in the foreground so that node02 can copy its metadata; stop it with Ctrl+C once the bootstrap on node02 has finished

[root@node01 ~]# /usr/local/app/hadoop/bin/hdfs namenode -format

[root@node01 ~]# /usr/local/app/hadoop/bin/hdfs zkfc -formatZK

[root@node01 ~]# /usr/local/app/hadoop/bin/hdfs namenode

- On node02, synchronize the metadata from the primary node

[root@node02 ~]# /usr/local/app/hadoop/bin/hdfs namenode -bootstrapStandby

5.6.5 Stop the JournalNode cluster

[root@node01 ~]# runRemote.sh "/usr/local/app/hadoop/sbin/hadoop-daemon.sh stop journalnode" all

5.6.6 Start the HDFS cluster with one command

[root@node01 ~]# /usr/local/app/hadoop/sbin/start-dfs.sh

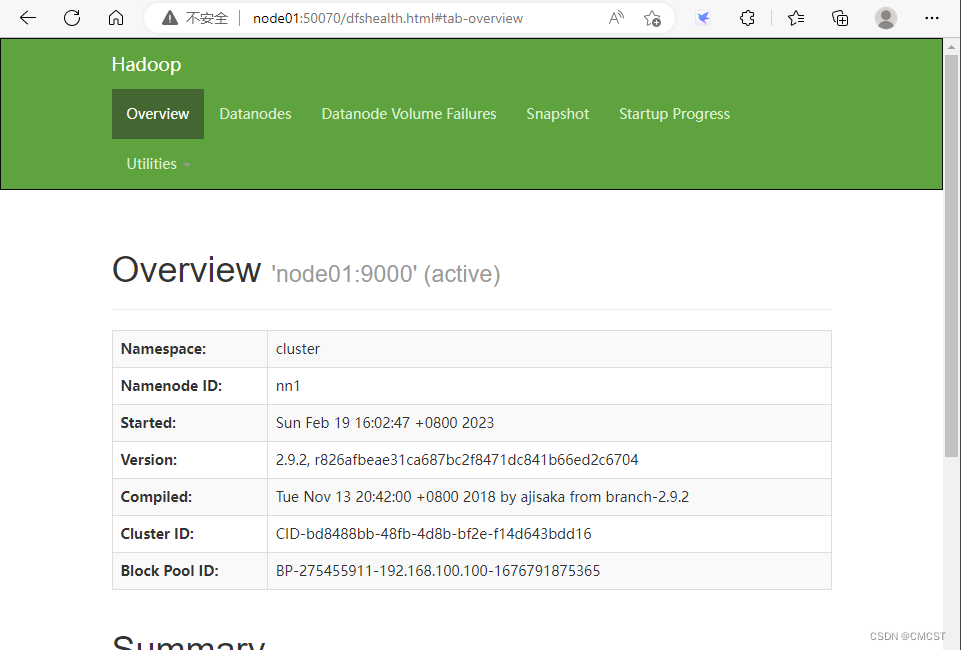

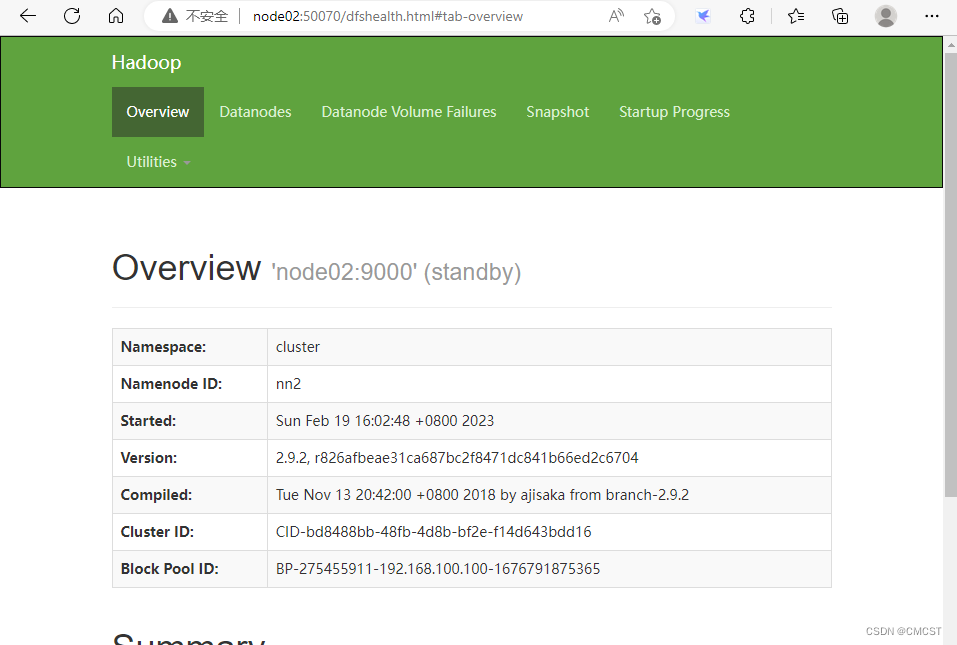

5.7 Test the HDFS cluster

- One NameNode should be active and the other standby

URLs: http://192.168.100.100:50070

http://192.168.100.101:50070
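The same condition can be checked from the command line. The following is a minimal sketch; `check_ha_pair` is a hypothetical helper that only compares two state strings, and the usage note assumes the NameNode IDs nn1 and nn2 from hdfs-site.xml.

```shell
#!/usr/bin/env bash
# Hypothetical check: given the reported states of the two NameNodes,
# succeed only if exactly one is active and the other is standby.
check_ha_pair() {
  local a="$1" b="$2"
  case "$a/$b" in
    active/standby|standby/active)
      echo "HA pair OK ($a, $b)" ;;
    *)
      echo "unexpected HA states: $a, $b" >&2
      return 1 ;;
  esac
}
```

On a running cluster the states can be fetched with `hdfs haadmin -getServiceState`, for example `check_ha_pair "$(hdfs haadmin -getServiceState nn1)" "$(hdfs haadmin -getServiceState nn2)"`.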

5.8 Start the YARN cluster

5.8.1 Start with one command on node01

[root@node01 ~]# /usr/local/app/hadoop/sbin/start-yarn.sh

5.8.2 Start the standby ResourceManager on node02

[root@node02 ~]# /usr/local/app/hadoop/sbin/yarn-daemon.sh start resourcemanager

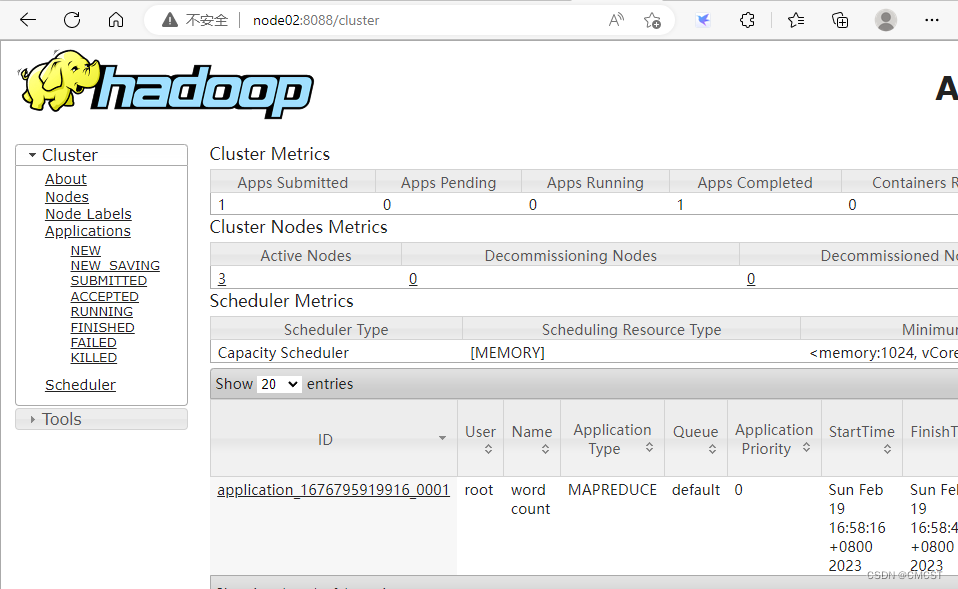

5.9 Test the YARN cluster

5.9.1 View the YARN cluster in the web UI

URL: http://192.168.100.100:8088

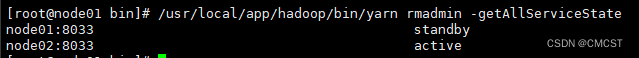

5.9.2 Check the ResourceManager state

[root@node01 ~]# /usr/local/app/hadoop/bin/yarn rmadmin -getAllServiceState

6. ZooKeeper deployment

6.1 Configure the NTP server

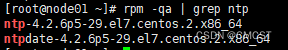

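6.1.1 Check whether the NTP service is installed

Run the following command to check:

rpm -qa | grep ntp

ntp-4.2.6p5-29.el7.centos.2.x86_64 : provides the time synchronization service (ntpd)

ntpdate-4.2.6p5-29.el7.centos.2.x86_64 : synchronizes the local clock against a given server

If NTP is not installed, install it with:

yum install -y ntp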

6.1.2 Edit the configuration file /etc/ntp.conf

cat /etc/ntp.conf

Initial content:

# For more information about this file, see the man pages

# ntp.conf(5), ntp_acc(5), ntp_auth(5), ntp_clock(5), ntp_misc(5), ntp_mon(5).

driftfile /var/lib/ntp/drift

# Permit time synchronization with our time source, but do not

# permit the source to query or modify the service on this system.

restrict default nomodify notrap nopeer noquery

# Permit all access over the loopback interface. This could

# be tightened as well, but to do so would effect some of

# the administrative functions.

restrict 127.0.0.1

restrict ::1

# Hosts on local network are less restricted.

#restrict 192.168.1.0 mask 255.255.255.0 nomodify notrap

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

server 0.centos.pool.ntp.org iburst

server 1.centos.pool.ntp.org iburst

server 2.centos.pool.ntp.org iburst

server 3.centos.pool.ntp.org iburst

#broadcast 192.168.1.255 autokey # broadcast server

#broadcastclient # broadcast client

#broadcast 224.0.1.1 autokey # multicast server

#multicastclient 224.0.1.1 # multicast client

#manycastserver 239.255.254.254 # manycast server

#manycastclient 239.255.254.254 autokey # manycast client

# Enable public key cryptography.

#crypto

includefile /etc/ntp/crypto/pw

# Key file containing the keys and key identifiers used when operating

# with symmetric key cryptography.

keys /etc/ntp/keys

# Specify the key identifiers which are trusted.

#trustedkey 4 8 42

# Specify the key identifier to use with the ntpdc utility.

#requestkey 8

# Specify the key identifier to use with the ntpq utility.

#controlkey 8

# Enable writing of statistics records.

#statistics clockstats cryptostats loopstats peerstats

# Disable the monitoring facility to prevent amplification attacks using ntpdc

# monlist command when default restrict does not include the noquery flag. See

# CVE-2013-5211 for more details.

# Note: Monitoring will not be disabled with the limited restriction flag.

disable monitor

The file content after modification:

# For more information about this file, see the man pages

# ntp.conf(5), ntp_acc(5), ntp_auth(5), ntp_clock(5), ntp_misc(5), ntp_mon(5).

driftfile /var/lib/ntp/drift

# Permit time synchronization with our time source, but do not

# permit the source to query or modify the service on this system.

restrict default nomodify notrap nopeer noquery

# Permit all access over the loopback interface. This could

# be tightened as well, but to do so would effect some of

# the administrative functions.

restrict 127.0.0.1

restrict ::1

# Hosts on local network are less restricted.

restrict 192.168.100.100 mask 255.255.255.0 nomodify notrap

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

# server 0.centos.pool.ntp.org iburst

# server 1.centos.pool.ntp.org iburst

# server 2.centos.pool.ntp.org iburst

# server 3.centos.pool.ntp.org iburst

#broadcast 192.168.1.255 autokey # broadcast server

#broadcastclient # broadcast client

#broadcast 224.0.1.1 autokey # multicast server

#multicastclient 224.0.1.1 # multicast client

#manycastserver 239.255.254.254 # manycast server

#manycastclient 239.255.254.254 autokey # manycast client

# Enable public key cryptography.

#crypto

includefile /etc/ntp/crypto/pw

# Key file containing the keys and key identifiers used when operating

# with symmetric key cryptography.

keys /etc/ntp/keys

# Specify the key identifiers which are trusted.

#trustedkey 4 8 42

# Specify the key identifier to use with the ntpdc utility.

#requestkey 8

# Specify the key identifier to use with the ntpq utility.

#controlkey 8

# Enable writing of statistics records.

#statistics clockstats cryptostats loopstats peerstats

# Disable the monitoring facility to prevent amplification attacks using ntpdc

# monlist command when default restrict does not include the noquery flag. See

# CVE-2013-5211 for more details.

# Note: Monitoring will not be disabled with the limited restriction flag.

disable monitor

server 127.127.1.0

fudge 127.127.1.0 stratum 10

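Changes made:

- Enable the restrict line that limits the allowed network segment, using node01's IP address

restrict 192.168.100.100 mask 255.255.255.0 nomodify notrap

- Comment out the server pool entries

- Append the following at the end of the file [sync this host with its local hardware clock]

server 127.127.1.0

fudge 127.127.1.0 stratum 10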
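The three edits can also be applied with sed rather than by hand. This is a minimal sketch that works on a scratch copy, not on /etc/ntp.conf; the function name patch_ntp_conf and the three-line sample file are made up for illustration.

```shell
#!/bin/sh
set -eu

# Apply the three ntp.conf changes to the file given as $1.
patch_ntp_conf() {
  conf="$1"
  # 1) enable the restrict line with the cluster values
  # 2) comment out the public pool servers
  sed \
    -e 's|^#restrict 192\.168\.1\.0 mask 255\.255\.255\.0 nomodify notrap$|restrict 192.168.100.100 mask 255.255.255.0 nomodify notrap|' \
    -e 's|^server \([0-9]\.centos\.pool\.ntp\.org.*\)$|# server \1|' \
    "$conf" > "$conf.new"
  mv "$conf.new" "$conf"
  # 3) append the local clock source once
  if ! grep -q '^server 127\.127\.1\.0' "$conf"; then
    printf 'server 127.127.1.0\nfudge 127.127.1.0 stratum 10\n' >> "$conf"
  fi
}

# Scratch file holding only the lines that matter for the demo.
demo="$(mktemp)"
cat > "$demo" <<'EOF'
#restrict 192.168.1.0 mask 255.255.255.0 nomodify notrap
server 0.centos.pool.ntp.org iburst
server 1.centos.pool.ntp.org iburst
EOF

patch_ntp_conf "$demo"
cat "$demo"
```

Running the function a second time leaves the file unchanged, so it is safe to re-run after a partial edit.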

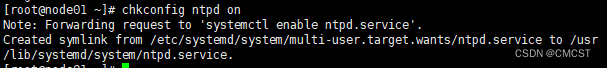

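6.1.3 Start the NTP service

Run the following command to enable the NTP service at boot:

chkconfig ntpd on

Then start it for the current session:

service ntpd start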

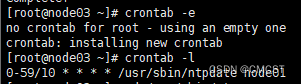

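6.1.4 Configure node02 and node03 to sync time periodically [node02 as the example]

crontab -e

File content (sync against node01 every 10 minutes):

0-59/10 * * * * /usr/sbin/ntpdate node01

Run the following command to view the installed entry:

crontab -l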

6.2 Configure ZooKeeper

ZooKeeper is extracted to /usr/local/app/zookeeper here.

6.2.1 Create zoo.cfg

cp /usr/local/app/zookeeper/conf/zoo_sample.cfg /usr/local/app/zookeeper/conf/zoo.cfg

vi /usr/local/app/zookeeper/conf/zoo.cfg

# The number of milliseconds of each tick

# Heartbeat interval between ZooKeeper servers, or between clients and servers

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

# Maximum number of ticks a follower may take to connect and sync to the leader initially

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

# Maximum number of ticks between a request and its acknowledgement between leader and follower

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

# The data directory must be created in advance

dataDir=/root/data/zookeeper/zkdata

# The log directory must be created in advance

dataLogDir=/root/data/zookeeper/zkdatalog

# the port at which the clients will connect

# Client port

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

# server.<node id>=<host or IP>:<quorum communication port>:<leader election port>

server.1=node01:2888:3888

server.2=node02:2888:3888

server.3=node03:2888:3888

Changes made:

- Changed the data directory

- Added the log directory

- Added the server node addresses
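For larger clusters the server.N lines are easier to generate than to type. A small sketch, assuming the same ports 2888 and 3888 on every host; zk_server_lines is a hypothetical helper name.

```shell
#!/bin/sh
set -eu

# Print one "server.<id>=<host>:2888:3888" line per host, numbering from 1.
zk_server_lines() {
  id=1
  for host in "$@"; do
    printf 'server.%d=%s:2888:3888\n' "$id" "$host"
    id=$((id + 1))
  done
}

zk_server_lines node01 node02 node03
```

The output can be appended to zoo.cfg with `>>` once it has been checked.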

6.2.2 Distribute to node02 and node03

scp -r /usr/local/app/zookeeper root@node02:/usr/local/app/

scp -r /usr/local/app/zookeeper root@node03:/usr/local/app/

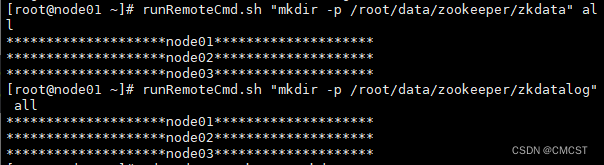

6.2.3 Create the data and log directories [required on all three nodes]

mkdir -p /root/data/zookeeper/zkdata

mkdir -p /root/data/zookeeper/zkdatalog

With the script, each directory needs just one command for all nodes:

runRemoteCmd.sh "mkdir -p /root/data/zookeeper/zkdata" all

runRemoteCmd.sh "mkdir -p /root/data/zookeeper/zkdatalog" all

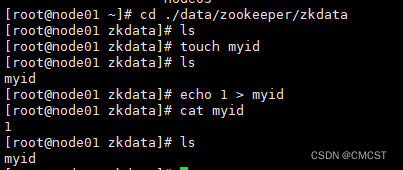

6.2.4 Create each node's server ID

6.2.4.1 node01

cd ~/data/zookeeper/zkdata

touch myid

echo 1 > myid

6.2.4.2 node02

cd ~/data/zookeeper/zkdata

touch myid

echo 2 > myid

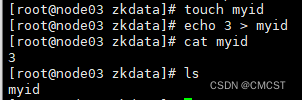

6.2.4.3 node03

cd ~/data/zookeeper/zkdata

touch myid

echo 3 > myid
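Because the hostnames already carry the ID (node01, node02, node03), the myid file can be derived from the hostname. The sketch below assumes that naming scheme; myid_for_host and write_myid are hypothetical helpers, and the demo writes into a temporary directory rather than /root/data/zookeeper/zkdata.

```shell
#!/bin/sh
set -eu

# Map a hostname such as node02 to the id 2
# (drop the "node" prefix, then any leading zeros).
myid_for_host() {
  printf '%s\n' "${1#node}" | sed 's/^0*//'
}

# Write the id for host $2 into $1/myid.
write_myid() {
  mkdir -p "$1"
  myid_for_host "$2" > "$1/myid"
}

demo_dir="$(mktemp -d)"
write_myid "$demo_dir" node02
cat "$demo_dir/myid"
```

On a real node the call would be `write_myid /root/data/zookeeper/zkdata "$(hostname)"`, provided `hostname` returns the short name.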

6.2.5 Start the ZooKeeper cluster

6.2.5.1 Start

runRemoteCmd.sh "/usr/local/app/zookeeper/bin/zkServer.sh start" all

6.2.5.2 Check status

runRemoteCmd.sh "/usr/local/app/zookeeper/bin/zkServer.sh status" all

6.2.5.3 Stop

runRemoteCmd.sh "/usr/local/app/zookeeper/bin/zkServer.sh stop" all

7. Hive installation [node01 only]

7.1 Install MySQL

7.1.1 On node01, install MySQL online with yum

yum install -y mysql-server

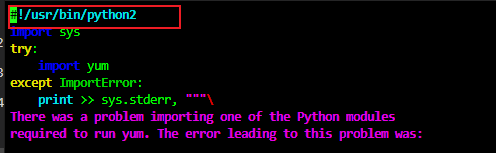

- If the following problem occurs

[root@node01 ~]# yum install mysql-server

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: mirrors.bupt.edu.cn

* extras: mirrors.bupt.edu.cn

* updates: mirrors.bupt.edu.cn

base | 3.6 kB 00:00

extras | 2.9 kB 00:00

updates | 2.9 kB 00:00

No package mysql-server available.

Error: Nothing to do

- Solution

# step1: install wget

yum install -y wget

# step2 (optional): change to the directory where wget will save the download

cd /usr/local/app/

# step3: download the MySQL repository package with wget

wget http://repo.mysql.com/mysql-community-release-el7-5.noarch.rpm

# step4: install the MySQL repository package

rpm -ivh mysql-community-release-el7-5.noarch.rpm

# step5: install MySQL

yum install -y mysql-server

Installed:

mysql-community-libs.x86_64 0:5.6.51-2.el7 mysql-community-server.x86_64 0:5.6.51-2.el7

Dependency Installed:

mysql-community-client.x86_64 0:5.6.51-2.el7 mysql-community-common.x86_64 0:5.6.51-2.el7

perl.x86_64 4:5.16.3-299.el7_9 perl-Carp.noarch 0:1.26-244.el7

perl-Compress-Raw-Bzip2.x86_64 0:2.061-3.el7 perl-Compress-Raw-Zlib.x86_64 1:2.061-4.el7

perl-DBI.x86_64 0:1.627-4.el7 perl-Data-Dumper.x86_64 0:2.145-3.el7

perl-Encode.x86_64 0:2.51-7.el7 perl-Exporter.noarch 0:5.68-3.el7

perl-File-Path.noarch 0:2.09-2.el7 perl-File-Temp.noarch 0:0.23.01-3.el7

perl-Filter.x86_64 0:1.49-3.el7 perl-Getopt-Long.noarch 0:2.40-3.el7

perl-HTTP-Tiny.noarch 0:0.033-3.el7 perl-IO-Compress.noarch 0:2.061-2.el7

perl-Net-Daemon.noarch 0:0.48-5.el7 perl-PathTools.x86_64 0:3.40-5.el7

perl-PlRPC.noarch 0:0.2020-14.el7 perl-Pod-Escapes.noarch 1:1.04-299.el7_9

perl-Pod-Perldoc.noarch 0:3.20-4.el7 perl-Pod-Simple.noarch 1:3.28-4.el7

perl-Pod-Usage.noarch 0:1.63-3.el7 perl-Scalar-List-Utils.x86_64 0:1.27-248.el7

perl-Socket.x86_64 0:2.010-5.el7 perl-Storable.x86_64 0:2.45-3.el7

perl-Text-ParseWords.noarch 0:3.29-4.el7 perl-Time-HiRes.x86_64 4:1.9725-3.el7

perl-Time-Local.noarch 0:1.2300-2.el7 perl-constant.noarch 0:1.27-2.el7

perl-libs.x86_64 4:5.16.3-299.el7_9 perl-macros.x86_64 4:5.16.3-299.el7_9

perl-parent.noarch 1:0.225-244.el7 perl-podlators.noarch 0:2.5.1-3.el7

perl-threads.x86_64 0:1.87-4.el7 perl-threads-shared.x86_64 0:1.43-6.el7

Replaced:

mariadb-libs.x86_64 1:5.5.60-1.el7_5

Complete!

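7.1.2 Start the MySQL service

After MySQL is installed, start the MySQL service from the command line:

[root@node01 ~]# service mysqld start

7.1.3 Set the MySQL root password

- Right after installation the root user has no password, so log in to MySQL and set one as follows.

(1) Log in to MySQL without a password

- Because there is no password by default, root can log in directly; just press Enter at the password prompt.

[root@node01 ~]# mysql -u root -p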

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 2

Server version: 5.6.51 MySQL Community Server (GPL)

Copyright (c) 2000, 2021, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql>

(2) Set the root password

- Set the root password in the MySQL client:

mysql>set password for root@localhost=password('root');

(3) Log in to MySQL with the password

- After setting the root password, exit and log in again with user name root and password root.

[root@node01 ~]# mysql -u root -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 3

Server version: 5.6.51 MySQL Community Server (GPL)

Copyright (c) 2000, 2021, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql>

- If the login succeeds, the root password has been set correctly.

7.1.4 Create the Hive account

First create the Hive account:

mysql>create user 'hive' identified by 'hive';

Grant all MySQL privileges to the Hive account:

# mysql>grant all on *.* to 'hive'@'<hostname>' identified by 'hive';

mysql>grant all on *.* to 'hive'@'node01' identified by 'hive';

mysql>grant all on *.* to 'hive'@'%' identified by 'hive';

Make the grants take effect:

mysql> flush privileges;

If the steps above succeeded, you can log in to MySQL with the Hive account:

# -h specifies the host, -u specifies the user

[root@node01 ~]# mysql -h node01 -u hive -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 8

Server version: 5.6.51 MySQL Community Server (GPL)

Copyright (c) 2000, 2021, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql>

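7.2 Install Hive

7.2.1 Download Hive

Download the Hive package apache-hive-2.3.7-bin.tar.gz from the official site.

7.2.2 Extract Hive

# On node01, extract the Hive package:

[root@node01 ~]# tar -zxvf apache-hive-2.3.7-bin.tar.gz -C /usr/local/app/

# Rename the extracted directory

[root@node01 ~]# mv /usr/local/app/apache-hive-2.3.7-bin /usr/local/app/hive

7.2.3 Configure environment variables

vi ~/.bashrc

~/.bashrc after modification: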

# .bashrc

# User specific aliases and functions

alias rm='rm -i'

alias cp='cp -i'

alias mv='mv -i'

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

JAVA_HOME=/usr/local/app/jdk

HADOOP_HOME=/usr/local/app/hadoop

HIVE_HOME=/usr/local/app/hive

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:/root/tools:$HIVE_HOME/bin:$PATH

export JAVA_HOME CLASSPATH PATH HADOOP_HOME HIVE_HOME

Reload the file to apply the changes:

source ~/.bashrc
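When the same variables have to be added on several machines, appending them idempotently avoids duplicate entries. This is a sketch only: add_export is a hypothetical helper, it writes one `export NAME=VALUE` line per variable (a different style from the grouped export above), and the demo targets a temporary file instead of ~/.bashrc.

```shell
#!/bin/sh
set -eu

# Append "export NAME=VALUE" to file $1 unless NAME is already exported there.
add_export() {
  touch "$1"
  if ! grep -q "^export $2=" "$1"; then
    printf 'export %s=%s\n' "$2" "$3" >> "$1"
  fi
}

rc="$(mktemp)"
add_export "$rc" HIVE_HOME /usr/local/app/hive
add_export "$rc" HIVE_HOME /usr/local/app/hive   # second call changes nothing
cat "$rc"
```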

7.2.4 Edit the hive-site.xml configuration file

cd $HIVE_HOME/conf

cp hive-default.xml.template hive-site.xml

vi hive-site.xml

Change 1

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<!--

<value>org.apache.derby.jdbc.EmbeddedDriver</value>

-->

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

Change 2

<property>

<name>javax.jdo.option.ConnectionURL</name>

<!--

<value>jdbc:derby:;databaseName=metastore_db;create=true</value>

-->

<value>jdbc:mysql://node01:3306/hive?createDatabaseIfNotExist=true&amp;characterEncoding=UTF-8</value>

<description>

JDBC connect string for a JDBC metastore.

To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL.

For example, jdbc:postgresql://myhost/db?ssl=true for postgres database.

</description>

</property>
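A JDBC URL with several parameters contains a literal `&`, which is not valid inside an XML value and must be written as `&amp;`. The helper below is a minimal sketch of that escaping; xml_escape is a hypothetical name, and it covers only `&`, `<` and `>`.

```shell
#!/bin/sh
set -eu

# Escape the characters that are special in XML text content.
# The ampersand is handled first so later entities are not escaped twice.
xml_escape() {
  printf '%s' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

url='jdbc:mysql://node01:3306/hive?createDatabaseIfNotExist=true&characterEncoding=UTF-8'
printf '<value>%s</value>\n' "$(xml_escape "$url")"
```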

Change 3

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<!--

<value>APP</value>

-->

<value>hive</value>

<description>Username to use against metastore database</description>

</property>

Change 4

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<!--<value>mine</value>-->

<value>hive</value>

<description>password to use against metastore database</description>

</property>

Change 5

<property>

<name>hive.querylog.location</name>

<!--

<value>${system:java.io.tmpdir}/${system:user.name}</value>

-->

<value>/root/data/hive/iotmp</value>

<description>Location of Hive run time structured log file</description>

</property>

Change 6

<property>

<name>hive.exec.local.scratchdir</name>

<!--

<value>${system:java.io.tmpdir}/${system:user.name}</value>

-->

<value>/root/data/hive/iotmp</value>

<description>Local scratch space for Hive jobs</description>

</property>

7.2.5 Add the JDBC driver for Hive

- Download the mysql-connector-java-5.1.38.jar driver package and move it into Hive's lib directory

mv mysql-connector-java-5.1.38.jar $HIVE_HOME/lib/

7.3 Start the Hive service

7.3.1 Before starting Hive

Start the HDFS cluster before starting Hive.

# step1: start ZooKeeper

[root@node01 hive]# runRemoteCmd.sh "/usr/local/app/zookeeper/bin/zkServer.sh start" all

********************node01********************

JMX enabled by default

Using config: /usr/local/app/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

********************node02********************

JMX enabled by default

Using config: /usr/local/app/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

********************node03********************

JMX enabled by default

Using config: /usr/local/app/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

# step2: start HDFS (start-all.sh also starts YARN)

[root@node01 hive]# runRemoteCmd.sh "/usr/local/app/hadoop/sbin/start-all.sh" all

[root@node01 hive]# jps

7713 QuorumPeerMain

7937 NameNode

8245 JournalNode

8524 DFSZKFailoverController

8045 DataNode

8638 ResourceManager

8750 NodeManager

9358 Jps

[root@node01 hive]# ssh node01

Last login: Fri Feb 10 11:17:21 2023 from 192.168.100.1

[root@node01 ~]# ssh node02

Last login: Thu Feb 9 09:55:00 2023 from 192.168.100.1

[root@node02 ~]# jps

7764 DFSZKFailoverController

7844 NodeManager

8342 ResourceManager

7448 DataNode

8650 Jps

7276 QuorumPeerMain

7548 JournalNode

7374 NameNode

[root@node02 ~]# ssh node03

Last login: Thu Feb 9 09:55:06 2023 from 192.168.100.1

[root@node03 ~]# jps

7456 JournalNode

7265 QuorumPeerMain

7554 NodeManager

8227 Jps

7356 DataNode

[root@node03 ~]# ssh node01

Last login: Fri Feb 10 12:33:55 2023 from node01

[root@node01 ~]#

7.3.2 Start Hive

# The first time Hive is started, initialize the metastore schema:

[root@node01 hive]# bin/schematool -dbType mysql -initSchema

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/app/hive/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/app/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:mysql://node01:3306/hive?createDatabaseIfNotExist=true&characterEncoding=UTF-8

Metastore Connection Driver : com.mysql.jdbc.Driver

Metastore connection User: hive

Starting metastore schema initialization to 2.3.0

Initialization script hive-schema-2.3.0.mysql.sql

Initialization script completed

schemaTool completed

# Then run the bin/hive script to start Hive:

[root@node01 hive]# bin/hive

which: no hbase in (/usr/local/app/jdk/bin:/usr/local/app/hadoop/bin:/root/tools:/usr/local/app/hive/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/app/hive/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/app/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in jar:file:/usr/local/app/hive/lib/hive-common-2.3.7.jar!/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive>

hive>show databases;

If the steps above run without errors, the Hive client is installed successfully.

8. HBase installation

8.1 Cluster plan

| | node01 | node02 | node03 |
|---|---|---|---|
| DataNode | √ | √ | √ |
| NameNode | √ | √ | |
| ZooKeeper | √ | √ | √ |
| HMaster | √ | √ | |
| HRegionServer | √ | √ | √ |

8.2 Software plan

| Software | Version | Notes |
|---|---|---|
| JDK | 1.8 | |
| Hadoop | 2.9.1 | |
| ZooKeeper | 3.4.6 | |
| HBase | 1.2.0 | |

8.3 Directory plan

| Directory | Path |
|---|---|
| HBase installation directory | /usr/local/app |
| RegionServer shared directory | hdfs://mycluster/hbase |
| ZooKeeper data directory | /root/data/zookeeper |

8.4 Configuration

8.4.1 Download and extract

Download version 1.2.0 from the official site

tar -zxvf hbase-1.2.0-bin.tar.gz -C /usr/local/app/

mv /usr/local/app/hbase-1.2.0 /usr/local/app/hbase

8.4.2 Edit the configuration files

vi /usr/local/app/hbase/conf/hbase-site.xml

vi /usr/local/app/hbase/conf/hbase-env.sh

vi /usr/local/app/hbase/conf/regionservers

vi /usr/local/app/hbase/conf/backup-masters

vi ~/.bashrc

8.4.2.1 hbase-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

/**

*

* Licensed to the Apache Software Foundation (ASF) under one

* or more contributor license agreements. See the NOTICE file

* distributed with this work for additional information

* regarding copyright ownership. The ASF licenses this file

* to you under the Apache License, Version 2.0 (the

* "License"); you may not use this file except in compliance

* with the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

-->

<configuration>

<property>

<!-- ZooKeeper quorum nodes -->

<name>hbase.zookeeper.quorum</name>

<value>node01,node02,node03</value>

</property>

<property>

<!-- ZooKeeper data directory -->

<name>hbase.zookeeper.property.dataDir</name>

<value>/root/data/zookeeper/zkdata</value>

</property>

<property>

<!-- ZooKeeper client port -->

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

<property>

<!-- HBase root directory on HDFS -->

<name>hbase.rootdir</name>

<value>hdfs://mycluster/hbase</value>

</property>

<property>

<!-- true enables fully distributed cluster mode -->

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

</configuration>

8.4.2.2 hbase-env.sh

#

#/**

# * Licensed to the Apache Software Foundation (ASF) under one

# * or more contributor license agreements. See the NOTICE file

# * distributed with this work for additional information

# * regarding copyright ownership. The ASF licenses this file

# * to you under the Apache License, Version 2.0 (the

# * "License"); you may not use this file except in compliance

# * with the License. You may obtain a copy of the License at

# *

# * http://www.apache.org/licenses/LICENSE-2.0

# *

# * Unless required by applicable law or agreed to in writing, software

# * distributed under the License is distributed on an "AS IS" BASIS,

# * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# * See the License for the specific language governing permissions and

# * limitations under the License.

# */

# Set environment variables here.

# This script sets variables multiple times over the course of starting an hbase process,

# so try to keep things idempotent unless you want to take an even deeper look

# into the startup scripts (bin/hbase, etc.)

# The java implementation to use. Java 1.7+ required.

# export JAVA_HOME=/usr/java/jdk1.6.0/

export JAVA_HOME=/usr/local/app/jdk

# Extra Java CLASSPATH elements. Optional.

# export HBASE_CLASSPATH=

# The maximum amount of heap to use. Default is left to JVM default.

# export HBASE_HEAPSIZE=1G

# Uncomment below if you intend to use off heap cache. For example, to allocate 8G of

# offheap, set the value to "8G".

# export HBASE_OFFHEAPSIZE=1G

# Extra Java runtime options.

# Below are what we set by default. May only work with SUN JVM.

# For more on why as well as other possible settings,

# see http://wiki.apache.org/hadoop/PerformanceTuning

export HBASE_OPTS="-XX:+UseConcMarkSweepGC"

# Configure PermSize. Only needed in JDK7. You can safely remove it for JDK8+

export HBASE_MASTER_OPTS="$HBASE_MASTER_OPTS -XX:PermSize=128m -XX:MaxPermSize=128m"

export HBASE_REGIONSERVER_OPTS="$HBASE_REGIONSERVER_OPTS -XX:PermSize=128m -XX:MaxPermSize=128m"

# Uncomment one of the below three options to enable java garbage collection logging for the server-side processes.

# This enables basic gc logging to the .out file.

# export SERVER_GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps"

# This enables basic gc logging to its own file.

# If FILE-PATH is not replaced, the log file(.gc) would still be generated in the HBASE_LOG_DIR .

# export SERVER_GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -Xloggc:<FILE-PATH>"

# This enables basic GC logging to its own file with automatic log rolling. Only applies to jdk 1.6.0_34+ and 1.7.0_2+.

# If FILE-PATH is not replaced, the log file(.gc) would still be generated in the HBASE_LOG_DIR .

# export SERVER_GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -Xloggc:<FILE-PATH> -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=1 -XX:GCLogFileSize=512M"

# Uncomment one of the below three options to enable java garbage collection logging for the client processes.

# This enables basic gc logging to the .out file.

# export CLIENT_GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps"

# This enables basic gc logging to its own file.

# If FILE-PATH is not replaced, the log file(.gc) would still be generated in the HBASE_LOG_DIR .

# export CLIENT_GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -Xloggc:<FILE-PATH>"

# This enables basic GC logging to its own file with automatic log rolling. Only applies to jdk 1.6.0_34+ and 1.7.0_2+.

# If FILE-PATH is not replaced, the log file(.gc) would still be generated in the HBASE_LOG_DIR .

# export CLIENT_GC_OPTS="-verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -Xloggc:<FILE-PATH> -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=1 -XX:GCLogFileSize=512M"

# See the package documentation for org.apache.hadoop.hbase.io.hfile for other configurations

# needed setting up off-heap block caching.

# Uncomment and adjust to enable JMX exporting

# See jmxremote.password and jmxremote.access in $JRE_HOME/lib/management to configure remote password access.

# More details at: http://java.sun.com/javase/6/docs/technotes/guides/management/agent.html

# NOTE: HBase provides an alternative JMX implementation to fix the random ports issue, please see JMX

# section in HBase Reference Guide for instructions.

# export HBASE_JMX_BASE="-Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.authenticate=false"

# export HBASE_MASTER_OPTS="$HBASE_MASTER_OPTS $HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10101"

# export HBASE_REGIONSERVER_OPTS="$HBASE_REGIONSERVER_OPTS $HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10102"

# export HBASE_THRIFT_OPTS="$HBASE_THRIFT_OPTS $HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10103"

# export HBASE_ZOOKEEPER_OPTS="$HBASE_ZOOKEEPER_OPTS $HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10104"

# export HBASE_REST_OPTS="$HBASE_REST_OPTS $HBASE_JMX_BASE -Dcom.sun.management.jmxremote.port=10105"

# File naming hosts on which HRegionServers will run. $HBASE_HOME/conf/regionservers by default.

# export HBASE_REGIONSERVERS=${HBASE_HOME}/conf/regionservers

# Uncomment and adjust to keep all the Region Server pages mapped to be memory resident

#HBASE_REGIONSERVER_MLOCK=true

#HBASE_REGIONSERVER_UID="hbase"

# File naming hosts on which backup HMaster will run. $HBASE_HOME/conf/backup-masters by default.

# export HBASE_BACKUP_MASTERS=${HBASE_HOME}/conf/backup-masters

# Extra ssh options. Empty by default.

# export HBASE_SSH_OPTS="-o ConnectTimeout=1 -o SendEnv=HBASE_CONF_DIR"

# Where log files are stored. $HBASE_HOME/logs by default.

# export HBASE_LOG_DIR=${HBASE_HOME}/logs

# Enable remote JDWP debugging of major HBase processes. Meant for Core Developers

# export HBASE_MASTER_OPTS="$HBASE_MASTER_OPTS -Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=8070"

# export HBASE_REGIONSERVER_OPTS="$HBASE_REGIONSERVER_OPTS -Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=8071"

# export HBASE_THRIFT_OPTS="$HBASE_THRIFT_OPTS -Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=8072"

# export HBASE_ZOOKEEPER_OPTS="$HBASE_ZOOKEEPER_OPTS -Xdebug -Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=8073"

# A string representing this instance of hbase. $USER by default.

# export HBASE_IDENT_STRING=$USER

# The scheduling priority for daemon processes. See 'man nice'.

# export HBASE_NICENESS=10

# The directory where pid files are stored. /tmp by default.

# export HBASE_PID_DIR=/var/hadoop/pids

# Seconds to sleep between slave commands. Unset by default. This

# can be useful in large clusters, where, e.g., slave rsyncs can

# otherwise arrive faster than the master can service them.

# export HBASE_SLAVE_SLEEP=0.1

# Tell HBase whether it should manage it's own instance of Zookeeper or not.

# export HBASE_MANAGES_ZK=true

export HBASE_MANAGES_ZK=false

# The default log rolling policy is RFA, where the log file is rolled as per the size defined for the

# RFA appender. Please refer to the log4j.properties file to see more details on this appender.

# In case one needs to do log rolling on a date change, one should set the environment property

# HBASE_ROOT_LOGGER to "<DESIRED_LOG LEVEL>,DRFA".

# For example:

# HBASE_ROOT_LOGGER=INFO,DRFA

# The reason for changing default to RFA is to avoid the boundary case of filling out disk space as

# DRFA doesn't put any cap on the log size. Please refer to HBase-5655 for more context.

- Set the JDK installation path

export JAVA_HOME=/usr/local/app/jdk

- Use the standalone ZooKeeper cluster instead of the one bundled with HBase

export HBASE_MANAGES_ZK=false

8.4.2.3 regionservers

node01

node02

node03

8.4.2.4 backup-masters

node02

8.4.2.5 ~/.bashrc

# .bashrc

# User specific aliases and functions

alias rm='rm -i'

alias cp='cp -i'

alias mv='mv -i'

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

JAVA_HOME=/usr/local/app/jdk

HADOOP_HOME=/usr/local/app/hadoop

HIVE_HOME=/usr/local/app/hive

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:/root/tools:$HIVE_HOME/bin:$PATH

export JAVA_HOME CLASSPATH PATH HADOOP_HOME HIVE_HOME

export HBASE_HOME=/usr/local/app/hbase

- Add the HBase environment variable

export HBASE_HOME=/usr/local/app/hbase

8.4.3 Start HBase

8.4.3.1 Sync the configuration to the other cluster nodes

deploy.sh /usr/local/app/hbase /usr/local/app/ slave

8.4.3.2 Start ZooKeeper

[root@node01 conf]# runRemoteCmd.sh "/usr/local/app/zookeeper/bin/zkServer.sh start" all

********************node01********************

JMX enabled by default

Using config: /usr/local/app/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

********************node02********************

JMX enabled by default

Using config: /usr/local/app/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

********************node03********************

JMX enabled by default

Using config: /usr/local/app/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[root@node01 conf]# runRemoteCmd.sh "/usr/local/app/zookeeper/bin/zkServer.sh status" all

********************node01********************

JMX enabled by default

Using config: /usr/local/app/zookeeper/bin/../conf/zoo.cfg

Mode: follower

********************node02********************

JMX enabled by default

Using config: /usr/local/app/zookeeper/bin/../conf/zoo.cfg

Mode: leader

********************node03********************

JMX enabled by default

Using config: /usr/local/app/zookeeper/bin/../conf/zoo.cfg

Mode: follower
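A healthy three-node ensemble reports exactly one leader and two followers, as in the output above. The sketch below checks that rule on the `Mode:` lines; zk_quorum_ok is a hypothetical helper and the sample text stands in for the real status output.

```shell
#!/bin/sh
set -eu

# Read status output on stdin; succeed only for exactly one leader
# and $1 followers.
zk_quorum_ok() {
  awk -v want="$1" '
    /^Mode: leader/   { leaders++ }
    /^Mode: follower/ { followers++ }
    END { exit !(leaders == 1 && followers == want) }
  '
}

sample='Mode: follower
Mode: leader
Mode: follower'

if printf '%s\n' "$sample" | zk_quorum_ok 2; then
  echo "quorum OK"
else
  echo "quorum NOT OK"
fi
```

On the cluster, the status command's output could be piped into `zk_quorum_ok 2` in place of the sample.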

8.4.3.3 Start HDFS

[root@node01 conf]# $HADOOP_HOME/sbin/start-dfs.sh

Starting namenodes on [node01 node02]

node01: starting namenode, logging to /usr/local/app/hadoop/logs/hadoop-root-namenode-node01.out

node02: starting namenode, logging to /usr/local/app/hadoop/logs/hadoop-root-namenode-node02.out

node03: starting datanode, logging to /usr/local/app/hadoop/logs/hadoop-root-datanode-node03.out

node01: starting datanode, logging to /usr/local/app/hadoop/logs/hadoop-root-datanode-node01.out

node02: starting datanode, logging to /usr/local/app/hadoop/logs/hadoop-root-datanode-node02.out

Starting journal nodes [node01 node02 node03]

node01: starting journalnode, logging to /usr/local/app/hadoop/logs/hadoop-root-journalnode-node01.out

node02: starting journalnode, logging to /usr/local/app/hadoop/logs/hadoop-root-journalnode-node02.out

node03: starting journalnode, logging to /usr/local/app/hadoop/logs/hadoop-root-journalnode-node03.out

Starting ZK Failover Controllers on NN hosts [node01 node02]

node01: starting zkfc, logging to /usr/local/app/hadoop/logs/hadoop-root-zkfc-node01.out

node02: starting zkfc, logging to /usr/local/app/hadoop/logs/hadoop-root-zkfc-node02.out

[root@node01 conf]# jps

7984 JournalNode

8337 Jps

7783 DataNode

7673 NameNode

8266 DFSZKFailoverController

7487 QuorumPeerMain
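Rather than reading the jps listing by eye, the expected daemons can be compared against it. This is a sketch only: missing_daemons is a hypothetical helper, and the daemon list in the usage line is simply the set shown in the jps output above for node01.

```shell
# Print every expected daemon that does not appear in `jps` output.
# Reads the jps output on stdin; daemon names are passed as arguments.
missing_daemons() {
    jps_out=$(cat)
    for daemon in "$@"; do
        # jps prints "<pid> <main class>", so compare the second field exactly
        if ! printf '%s\n' "$jps_out" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            printf '%s\n' "$daemon"
        fi
    done
}

# Usage on node01 (commented out because it needs the running cluster):
# jps | missing_daemons NameNode DataNode JournalNode DFSZKFailoverController QuorumPeerMain
```

An empty result means every listed daemon is running; any name printed is a process that failed to start and whose log file under /usr/local/app/hadoop/logs is the next place to look.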

8.4.3.4 Start HBase

[root@node01 conf]# $HBASE_HOME/bin/start-hbase.sh

master running as process 8820. Stop it first.

node03: starting regionserver, logging to /usr/local/app/hbase/bin/../logs/hbase-root-regionserver-node03.out

node02: starting regionserver, logging to /usr/local/app/hbase/bin/../logs/hbase-root-regionserver-node02.out

node01: regionserver running as process 8959. Stop it first.

node03: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

node03: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

node02: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

node02: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

node02: starting master, logging to /usr/local/app/hbase/bin/../logs/hbase-root-master-node02.out

[root@node01 conf]# jps

7984 JournalNode

9536 Jps

8820 HMaster

7783 DataNode

7673 NameNode

8266 DFSZKFailoverController

7487 QuorumPeerMain

8959 HRegionServer

8.5 Open the HBase web UI

http://192.168.100.100:16010/

http://192.168.100.101:16010/

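9. Install Sqoop (node01 only)

- Sqoop commands are ultimately translated into map-only MapReduce jobs.

9.1 Download and extract

Download version 1.4.6 from the official website.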

tar -zxvf sqoop-1.4.6.bin__hadoop-2.0.4-alpha.tar.gz -C /usr/local/app/

mv /usr/local/app/sqoop-1.4.6.bin__hadoop-2.0.4-alpha /usr/local/app/sqoop

9.2 Edit the configuration files

9.2.1 sqoop-env.sh

cp /usr/local/app/sqoop/conf/sqoop-env-template.sh /usr/local/app/sqoop/conf/sqoop-env.sh

vi /usr/local/app/sqoop/conf/sqoop-env.sh

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# included in all the hadoop scripts with source command

# should not be executable directly

# also should not be passed any arguments, since we need original $*

# Set Hadoop-specific environment variables here.

#Set path to where bin/hadoop is available

#export HADOOP_COMMON_HOME=

export HADOOP_COMMON_HOME=/usr/local/app/hadoop

#Set path to where hadoop-*-core.jar is available

#export HADOOP_MAPRED_HOME=

export HADOOP_MAPRED_HOME=/usr/local/app/hadoop

#set the path to where bin/hbase is available

#export HBASE_HOME=

# export HBASE_HOME=/usr/local/app/hbase

#Set the path to where bin/hive is available

#export HIVE_HOME=

export HIVE_HOME=/usr/local/app/hive

#Set the path for where zookeper config dir is

#export ZOOCFGDIR=

export ZOOCFGDIR=/usr/local/app/zookeeper/conf
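A mistyped path in sqoop-env.sh tends to surface only later as a confusing runtime warning, so it can help to verify the exported directories right after editing. The sketch below assumes the file uses plain, unquoted export NAME=/path lines as in the listing above; check_env_dirs is a hypothetical helper name.

```shell
# List every exported variable in an env file whose directory is missing.
# Commented-out lines (starting with #) are ignored.
check_env_dirs() {
    # $1: path to a file containing lines such as `export NAME=/some/dir`
    sed -n 's/^export \([A-Za-z_][A-Za-z0-9_]*\)=\(.*\)$/\1 \2/p' "$1" |
    while read -r name dir; do
        [ -d "$dir" ] || printf '%s -> %s (missing)\n' "$name" "$dir"
    done
}

# Usage (commented out; the path is the one used in this guide):
# check_env_dirs /usr/local/app/sqoop/conf/sqoop-env.sh
```

No output means every configured directory exists on this node.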

9.2.2 ~/.bashrc

[root@node01 app]# vi ~/.bashrc

# .bashrc

# User specific aliases and functions

alias rm='rm -i'

alias cp='cp -i'

alias mv='mv -i'

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

JAVA_HOME=/usr/local/app/jdk

HADOOP_HOME=/usr/local/app/hadoop

HIVE_HOME=/usr/local/app/hive

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:/root/tools:$HIVE_HOME/bin:$PATH

export JAVA_HOME CLASSPATH PATH HADOOP_HOME HIVE_HOME

export HBASE_HOME=/usr/local/app/hbase

export SQOOP_HOME=/usr/local/app/sqoop

[root@node01 app]# source ~/.bashrc

- The Sqoop-specific addition is the SQOOP_HOME environment variable:

export SQOOP_HOME=/usr/local/app/sqoop
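The ~/.bashrc above exports SQOOP_HOME but does not put the Sqoop bin directory on PATH, which is why later commands are run as bin/sqoop from inside the install directory. If calling sqoop from any directory is preferred, something like the following could be appended to ~/.bashrc. This is an optional sketch, not a step from the original procedure; path_prepend is a hypothetical helper that avoids duplicate PATH entries when the file is sourced repeatedly.

```shell
# Prepend a directory to PATH only if it is not already present.
path_prepend() {
    case ":$PATH:" in
        *":$1:"*) ;;              # already on PATH, nothing to do
        *) PATH="$1:$PATH" ;;
    esac
}

# Fall back to the install location used in this guide if SQOOP_HOME is unset.
SQOOP_HOME=${SQOOP_HOME:-/usr/local/app/sqoop}
path_prepend "$SQOOP_HOME/bin"
export SQOOP_HOME PATH
```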

9.2.3 Copy the MySQL JDBC driver JAR

cp /usr/local/app/hive/lib/mysql-connector-java-5.1.38.jar /usr/local/app/sqoop/lib/

9.3 Test run

[root@node01 sqoop]# bin/sqoop help

Warning: /usr/local/app/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /usr/local/app/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/app/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/app/hbase/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

23/02/13 17:35:07 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

usage: sqoop COMMAND [ARGS]

Available commands:

codegen Generate code to interact with database records

create-hive-table Import a table definition into Hive

eval Evaluate a SQL statement and display the results

export Export an HDFS directory to a database table

help List available commands

import Import a table from a database to HDFS

import-all-tables Import tables from a database to HDFS

import-mainframe Import datasets from a mainframe server to HDFS

job Work with saved jobs

list-databases List available databases on a server

list-tables List available tables in a database

merge Merge results of incremental imports

metastore Run a standalone Sqoop metastore

version Display version information

See 'sqoop help COMMAND' for information on a specific command.

[root@node01 sqoop]# bin/sqoop list-databases --connect jdbc:mysql://node01 --username hive --password hive

Warning: /usr/local/app/sqoop/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /usr/local/app/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/app/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/app/hbase/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

23/02/13 17:39:10 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

23/02/13 17:39:10 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

23/02/13 17:39:10 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

information_schema

hive

mysql

performance_schema
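The WARN line in the output above notes that passing the password on the command line is insecure. The simplest alternative is the -P flag that the warning itself suggests, which prompts for the password interactively. For unattended runs, Sqoop also accepts a --password-file option; the sketch below prepares such a file. The helper name and the file location /root/.sqoop-mysql.pwd are assumptions for illustration, and the file:// prefix is used on the assumption that the path would otherwise be resolved against HDFS.

```shell
# Write a password to a file that only its owner can read.
# printf '%s' is used so that no trailing newline ends up in the file,
# since a trailing newline would be treated as part of the password.
write_password_file() {
    # $1: target file, $2: password
    ( umask 077 && printf '%s' "$2" > "$1" )
    chmod 400 "$1"
}

# Usage (commented out; needs the MySQL server and Sqoop install from this guide):
# write_password_file /root/.sqoop-mysql.pwd 'hive'
# bin/sqoop list-databases --connect jdbc:mysql://node01 --username hive \
#     --password-file file:///root/.sqoop-mysql.pwd
```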

10. Install Flume

10.1 Download and extract

Download version 1.9.0 from the official website.

tar -zxvf apache-flume-1.9.0-bin.tar.gz -C /usr/local/app/

mv /usr/local/app/apache-flume-1.9.0-bin /usr/local/app/flume

10.2 Test Flume

- Start the Flume agent

[root@node01 flume]# cp /usr/local/app/flume/conf/flume-conf.properties.template /usr/local/app/flume/conf/flume-conf.properties

[root@node01 flume]# bin/flume-ng agent -n agent -c conf -f conf/flume-conf.properties -Dflume.root.logger=INFO,console

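- The Flume command-line arguments are as follows:

- The agent argument after the flume-ng script starts a Flume agent process.

- -n specifies the name of the agent as defined in the configuration file.

- -c specifies the directory that contains the configuration files.

- -f specifies the configuration file to use.

- -Dflume.root.logger=INFO,console sets the root logger to the INFO level and sends log output to the console.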
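Because the name given to -n must match the agent prefix used inside the file given to -f, a quick check before starting the agent can catch a mismatch early. The following is a small sketch; agent_defined is a hypothetical helper, and it only looks for a sources line with the given prefix.

```shell
# Succeed if the properties file defines sources for the given agent name.
agent_defined() {
    # $1: agent name (the value passed to -n)
    # $2: properties file (the value passed to -f)
    grep -Eq "^[[:space:]]*$1\.sources[[:space:]]*=" "$2"
}

# Usage from /usr/local/app/flume (commented out; needs the Flume install):
# agent_defined agent conf/flume-conf.properties \
#     || echo "no agent named 'agent' in conf/flume-conf.properties" >&2
```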

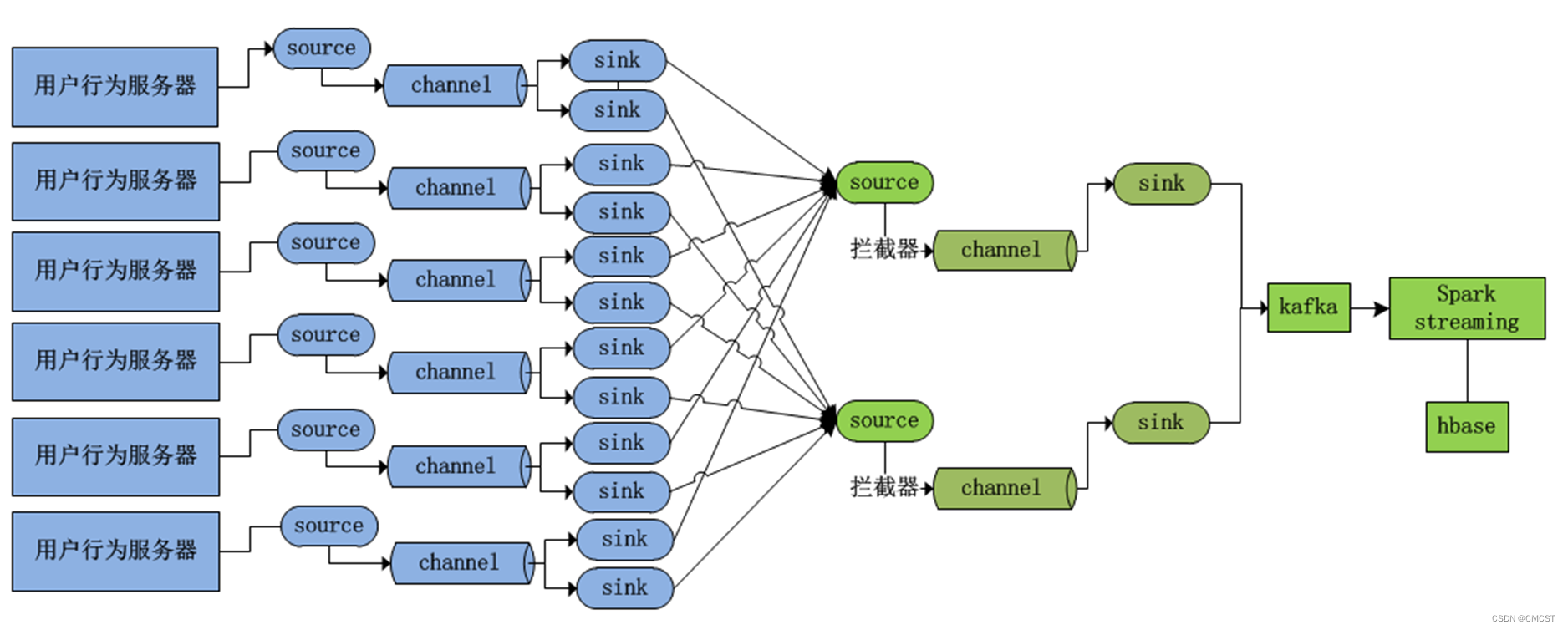

10.3 Set up a distributed Flume cluster

- Cluster architecture diagram

10.3.1 Configure the Flume collection job

vi taildir-file-selector-avro.properties

# Define the names of the source, channel, and sink

agent1.sources = taildirSource