VINS中没有直接使用opencv的去畸变函数,而是自己编写了迭代函数完成去畸变操作,主要是为了加快去畸变计算速度

本文对二者的结果精度和耗时进行了对比

VINS-Mono/Fusion与OpenCV去畸变对比

- 1 去畸变原理

- 2 代码实现

- 2.1 OpenCV去畸变

- 2.2 VINS去畸变

- 3 二者对比

1 去畸变原理

opencv去畸变操作由cv::undistortPoints实现

VINS去畸变由PinholeCamera::liftProjective实现(以针孔相机为例)

二者均采用了迭代求解,通过多次迭代逼近真值。其中cv::undistortPoints方法中默认迭代5次,并计算每次重投影误差是否小于阈值,VINS去畸变方法只设置了迭代8次。

二者均输入像素坐标,输出归一化坐标。

2 代码实现

2.1 OpenCV去畸变

opencv去畸变操作由cv::undistortPoints实现,代码在opencv-3.4.13/modules/imgproc/src

undistortPoints首先处理了输入参数,主要实现部分调用cvUndistortPointsInternal

void undistortPoints( InputArray _src, OutputArray _dst,InputArray _cameraMatrix, InputArray _distCoeffs,InputArray _Rmat, InputArray _Pmat, TermCriteria criteria)

void undistortPoints( InputArray _src, OutputArray _dst,

InputArray _cameraMatrix,

InputArray _distCoeffs,

InputArray _Rmat,

InputArray _Pmat,

TermCriteria criteria)

{

Mat src = _src.getMat(), cameraMatrix = _cameraMatrix.getMat();

Mat distCoeffs = _distCoeffs.getMat(), R = _Rmat.getMat(), P = _Pmat.getMat();

int npoints = src.checkVector(2), depth = src.depth();

if (npoints < 0)

src = src.t();

npoints = src.checkVector(2);

CV_Assert(npoints >= 0 && src.isContinuous() && (depth == CV_32F || depth == CV_64F));

if (src.cols == 2)

src = src.reshape(2);

_dst.create(npoints, 1, CV_MAKETYPE(depth, 2), -1, true);

Mat dst = _dst.getMat();

CvMat _csrc = cvMat(src), _cdst = cvMat(dst), _ccameraMatrix = cvMat(cameraMatrix);

CvMat matR, matP, _cdistCoeffs, *pR=0, *pP=0, *pD=0;

if( !R.empty() )

pR = &(matR = cvMat(R));

if( !P.empty() )

pP = &(matP = cvMat(P));

if( !distCoeffs.empty() )

pD = &(_cdistCoeffs = cvMat(distCoeffs));

cvUndistortPointsInternal(&_csrc, &_cdst, &_ccameraMatrix, pD, pR, pP, criteria);

}

static void cvUndistortPointsInternal( const CvMat* _src, CvMat* _dst, const CvMat* _cameraMatrix, const CvMat* _distCoeffs, const CvMat* matR, const CvMat* matP, cv::TermCriteria criteria)

static void cvUndistortPointsInternal( const CvMat* _src, CvMat* _dst, const CvMat* _cameraMatrix,

const CvMat* _distCoeffs,

const CvMat* matR, const CvMat* matP, cv::TermCriteria criteria)

{

CV_Assert(criteria.isValid());

double A[3][3], RR[3][3], k[14]={0,0,0,0,0,0,0,0,0,0,0,0,0,0};

CvMat matA=cvMat(3, 3, CV_64F, A), _Dk;

CvMat _RR=cvMat(3, 3, CV_64F, RR);

cv::Matx33d invMatTilt = cv::Matx33d::eye();

cv::Matx33d matTilt = cv::Matx33d::eye();

CV_Assert( CV_IS_MAT(_src) && CV_IS_MAT(_dst) &&

(_src->rows == 1 || _src->cols == 1) &&

(_dst->rows == 1 || _dst->cols == 1) &&

_src->cols + _src->rows - 1 == _dst->rows + _dst->cols - 1 &&

(CV_MAT_TYPE(_src->type) == CV_32FC2 || CV_MAT_TYPE(_src->type) == CV_64FC2) &&

(CV_MAT_TYPE(_dst->type) == CV_32FC2 || CV_MAT_TYPE(_dst->type) == CV_64FC2));

CV_Assert( CV_IS_MAT(_cameraMatrix) &&

_cameraMatrix->rows == 3 && _cameraMatrix->cols == 3 );

cvConvert( _cameraMatrix, &matA );

if( _distCoeffs )

{

CV_Assert( CV_IS_MAT(_distCoeffs) &&

(_distCoeffs->rows == 1 || _distCoeffs->cols == 1) &&

(_distCoeffs->rows*_distCoeffs->cols == 4 ||

_distCoeffs->rows*_distCoeffs->cols == 5 ||

_distCoeffs->rows*_distCoeffs->cols == 8 ||

_distCoeffs->rows*_distCoeffs->cols == 12 ||

_distCoeffs->rows*_distCoeffs->cols == 14));

_Dk = cvMat( _distCoeffs->rows, _distCoeffs->cols,

CV_MAKETYPE(CV_64F,CV_MAT_CN(_distCoeffs->type)), k);

cvConvert( _distCoeffs, &_Dk );

if (k[12] != 0 || k[13] != 0)

{

cv::detail::computeTiltProjectionMatrix<double>(k[12], k[13], NULL, NULL, NULL, &invMatTilt);

cv::detail::computeTiltProjectionMatrix<double>(k[12], k[13], &matTilt, NULL, NULL);

}

}

if( matR )

{

CV_Assert( CV_IS_MAT(matR) && matR->rows == 3 && matR->cols == 3 );

cvConvert( matR, &_RR );

}

else

cvSetIdentity(&_RR);

if( matP )

{

double PP[3][3];

CvMat _P3x3, _PP=cvMat(3, 3, CV_64F, PP);

CV_Assert( CV_IS_MAT(matP) && matP->rows == 3 && (matP->cols == 3 || matP->cols == 4));

cvConvert( cvGetCols(matP, &_P3x3, 0, 3), &_PP );

cvMatMul( &_PP, &_RR, &_RR );

}

const CvPoint2D32f* srcf = (const CvPoint2D32f*)_src->data.ptr;

const CvPoint2D64f* srcd = (const CvPoint2D64f*)_src->data.ptr;

CvPoint2D32f* dstf = (CvPoint2D32f*)_dst->data.ptr;

CvPoint2D64f* dstd = (CvPoint2D64f*)_dst->data.ptr;

int stype = CV_MAT_TYPE(_src->type);

int dtype = CV_MAT_TYPE(_dst->type);

int sstep = _src->rows == 1 ? 1 : _src->step/CV_ELEM_SIZE(stype);

int dstep = _dst->rows == 1 ? 1 : _dst->step/CV_ELEM_SIZE(dtype);

double fx = A[0][0];

double fy = A[1][1];

double ifx = 1./fx;

double ify = 1./fy;

double cx = A[0][2];

double cy = A[1][2];

int n = _src->rows + _src->cols - 1;

for( int i = 0; i < n; i++ )

{

double x, y, x0 = 0, y0 = 0, u, v;

if( stype == CV_32FC2 )

{

x = srcf[i*sstep].x;

y = srcf[i*sstep].y;

}

else

{

x = srcd[i*sstep].x;

y = srcd[i*sstep].y;

}

u = x; v = y;

x = (x - cx)*ifx;

y = (y - cy)*ify;

if( _distCoeffs ) {

// compensate tilt distortion

cv::Vec3d vecUntilt = invMatTilt * cv::Vec3d(x, y, 1);

double invProj = vecUntilt(2) ? 1./vecUntilt(2) : 1;

x0 = x = invProj * vecUntilt(0);

y0 = y = invProj * vecUntilt(1);

double error = std::numeric_limits<double>::max();

// compensate distortion iteratively

for( int j = 0; ; j++ )

{

//在这里判断

if ((criteria.type & cv::TermCriteria::COUNT) && j >= criteria.maxCount)

break;

if ((criteria.type & cv::TermCriteria::EPS) && error < criteria.epsilon)

break;

double r2 = x*x + y*y;

double icdist = (1 + ((k[7]*r2 + k[6])*r2 + k[5])*r2)/(1 + ((k[4]*r2 + k[1])*r2 + k[0])*r2);

if (icdist < 0) // test: undistortPoints.regression_14583

{

x = (u - cx)*ifx;

y = (v - cy)*ify;

break;

}

double deltaX = 2*k[2]*x*y + k[3]*(r2 + 2*x*x)+ k[8]*r2+k[9]*r2*r2;

double deltaY = k[2]*(r2 + 2*y*y) + 2*k[3]*x*y+ k[10]*r2+k[11]*r2*r2;

x = (x0 - deltaX)*icdist;

y = (y0 - deltaY)*icdist;

if(criteria.type & cv::TermCriteria::EPS)

{

double r4, r6, a1, a2, a3, cdist, icdist2;

double xd, yd, xd0, yd0;

cv::Vec3d vecTilt;

r2 = x*x + y*y;

r4 = r2*r2;

r6 = r4*r2;

a1 = 2*x*y;

a2 = r2 + 2*x*x;

a3 = r2 + 2*y*y;

cdist = 1 + k[0]*r2 + k[1]*r4 + k[4]*r6;

icdist2 = 1./(1 + k[5]*r2 + k[6]*r4 + k[7]*r6);

xd0 = x*cdist*icdist2 + k[2]*a1 + k[3]*a2 + k[8]*r2+k[9]*r4;

yd0 = y*cdist*icdist2 + k[2]*a3 + k[3]*a1 + k[10]*r2+k[11]*r4;

vecTilt = matTilt*cv::Vec3d(xd0, yd0, 1);

invProj = vecTilt(2) ? 1./vecTilt(2) : 1;

xd = invProj * vecTilt(0);

yd = invProj * vecTilt(1);

double x_proj = xd*fx + cx;

double y_proj = yd*fy + cy;

error = sqrt( pow(x_proj - u, 2) + pow(y_proj - v, 2) );

}

}

}

double xx = RR[0][0]*x + RR[0][1]*y + RR[0][2];

double yy = RR[1][0]*x + RR[1][1]*y + RR[1][2];

double ww = 1./(RR[2][0]*x + RR[2][1]*y + RR[2][2]);

x = xx*ww;

y = yy*ww;

if( dtype == CV_32FC2 )

{

dstf[i*dstep].x = (float)x;

dstf[i*dstep].y = (float)y;

}

else

{

dstd[i*dstep].x = x;

dstd[i*dstep].y = y;

}

}

}

2.2 VINS去畸变

void

PinholeCamera::liftProjective(const Eigen::Vector2d& p, Eigen::Vector3d& P) const

{

double mx_d, my_d,mx2_d, mxy_d, my2_d, mx_u, my_u;

double rho2_d, rho4_d, radDist_d, Dx_d, Dy_d, inv_denom_d;

//double lambda;

// Lift points to normalised plane

mx_d = m_inv_K11 * p(0) + m_inv_K13;

my_d = m_inv_K22 * p(1) + m_inv_K23;

if (m_noDistortion)

{

mx_u = mx_d;

my_u = my_d;

}

else

{

if (0)

{

double k1 = mParameters.k1();

double k2 = mParameters.k2();

double p1 = mParameters.p1();

double p2 = mParameters.p2();

// Apply inverse distortion model

// proposed by Heikkila

mx2_d = mx_d*mx_d;

my2_d = my_d*my_d;

mxy_d = mx_d*my_d;

rho2_d = mx2_d+my2_d;

rho4_d = rho2_d*rho2_d;

radDist_d = k1*rho2_d+k2*rho4_d;

Dx_d = mx_d*radDist_d + p2*(rho2_d+2*mx2_d) + 2*p1*mxy_d;

Dy_d = my_d*radDist_d + p1*(rho2_d+2*my2_d) + 2*p2*mxy_d;

inv_denom_d = 1/(1+4*k1*rho2_d+6*k2*rho4_d+8*p1*my_d+8*p2*mx_d);

mx_u = mx_d - inv_denom_d*Dx_d;

my_u = my_d - inv_denom_d*Dy_d;

}

else

{

// Recursive distortion model

int n = 8;

Eigen::Vector2d d_u;

distortion(Eigen::Vector2d(mx_d, my_d), d_u);

// Approximate value

mx_u = mx_d - d_u(0);

my_u = my_d - d_u(1);

for (int i = 1; i < n; ++i)

{

distortion(Eigen::Vector2d(mx_u, my_u), d_u);

mx_u = mx_d - d_u(0);

my_u = my_d - d_u(1);

}

}

}

// Obtain a projective ray

P << mx_u, my_u, 1.0;

}

3 二者对比

在相机坐标系下随机生成了 20 个观测点,并将其归算到归一化坐标系下作为真值。

#include <iostream>

#include <vector>

#include <random>

#include <Eigen/Core>

#include <Eigen/Geometry>

#include <opencv2/opencv.hpp>

#include <opencv2/core/eigen.hpp>

#include <chrono>

#include "Camera.h"

using namespace std;

int main()

{

// 随机数生成 20 个 三维特征点

int featureNums=20;

default_random_engine generator;

vector<cv::Point2f> pts_truth; //归一化真值

vector<cv::Point2f> uv_pts; //像素坐标

vector<cv::Point2f> cv_un_pts, vins_un_pts; //归一化坐标

for(int i = 0; i < featureNums; ++i)

{

uniform_real_distribution<double> xy_rand(-4, 4.0);

uniform_real_distribution<double> z_rand(8., 10.);

double tx = xy_rand(generator);

double ty = xy_rand(generator);

double tz = z_rand(generator);

Eigen::Vector2d p(tx/tz, ty/tz);

Eigen::Vector2d p_distorted;

distortion(p, p_distorted); //归一化坐标畸变

p_distorted+=p;

pts_truth.push_back(cv::Point2f(p(0), p(1)));

cv::Point2f uv(fx*p_distorted(0)+cx, fy*p_distorted(1)+cy); //投影到像素坐标

uv_pts.push_back(uv);

}

//OpenCV去畸变,输入像素坐标,输出归一化坐标

chrono::steady_clock::time_point cv_t1 = chrono::steady_clock::now();

cv::undistortPoints(uv_pts, cv_un_pts, K, distCoeffs);

chrono::steady_clock::time_point cv_t2 = chrono::steady_clock::now();

double cv_time = chrono::duration_cast<chrono::duration<double,milli>>(cv_t2-cv_t1).count();

cout<<"OpenCV"<<endl;

cout<<"used time: "<<cv_time/cv_un_pts.size()<<"ms"<<endl;

cout<<"pixel error: "<<GetResidual(cv_un_pts, pts_truth)<<endl;

//VINS去畸变

chrono::steady_clock::time_point vins_t1 = chrono::steady_clock::now();

liftProjective(uv_pts, vins_un_pts);

chrono::steady_clock::time_point vins_t2 = chrono::steady_clock::now();

double vins_time = chrono::duration_cast<chrono::duration<double, milli>>(vins_t2-vins_t1).count();

cout<<"VINS"<<endl;

cout<<"used time: "<<vins_time/vins_un_pts.size()<<"ms"<<endl;

cout<<"pixel error: "<<GetResidual(vins_un_pts, pts_truth)<<endl;

return 0;

}

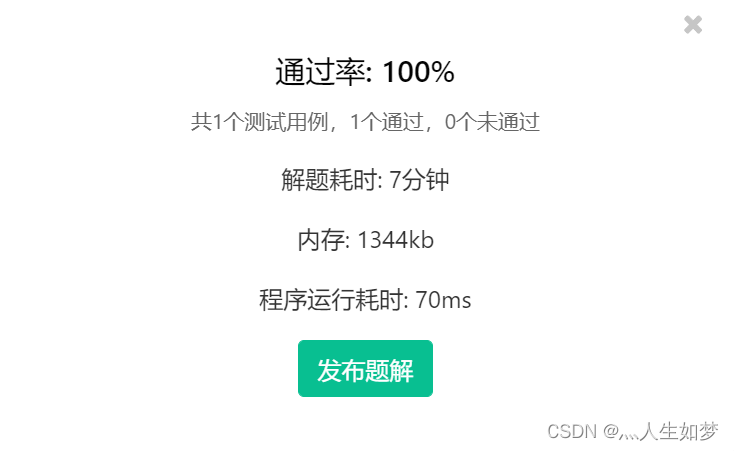

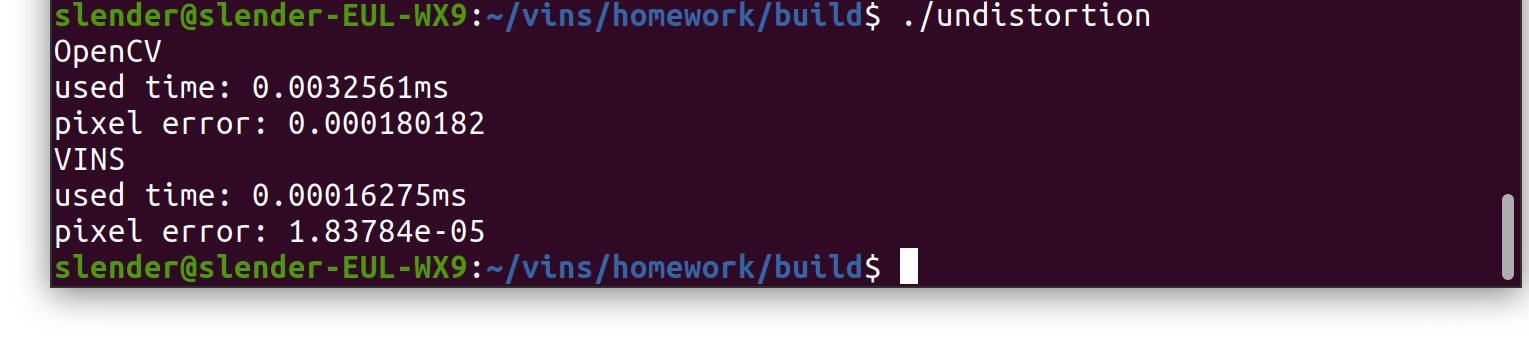

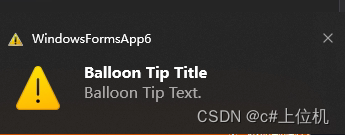

输出结果

给出了每个观测点的平均去畸变耗时和像素坐标系下的重投影误差。

VINS所采用的去畸变算法耗时更少,重投影误差平均值更小,opencv方法与其相差一个数量级。

![Reverse入门[不断记录]](https://img-blog.csdnimg.cn/4f5350c563364eac9cdf63440df5b863.png)