Table of contents

- Conv2d

  - Computation

  - Conv2d function reference

  - Code example

- MaxPool2d

  - Computation

  - Function description

- Convolution process animations

  - Convolution animations

  - Transposed convolution animations

Reference video: 土堆 on convolution computation

https://www.bilibili.com/video/BV1hE411t7RN

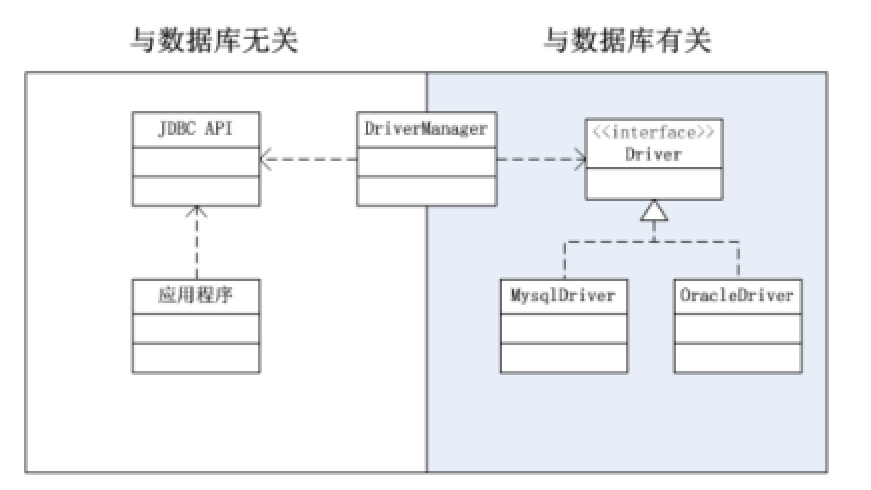

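About torch.nn and torch.nn.functional

torch.nn wraps the functions in torch.nn.functional as modules (layers that hold their own parameters), which makes it the more convenient of the two in practice.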
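The relationship between the two can be sketched as follows. This is a minimal illustration, not part of the original tutorial: the tensor sizes and channel counts are arbitrary choices made for the example.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
x = torch.randn(1, 3, 8, 8)  # (batch, channels, height, width), sizes chosen arbitrarily

# Module form: the layer creates and stores its own weight and bias.
conv = nn.Conv2d(in_channels=3, out_channels=4, kernel_size=3)
y_module = conv(x)

# Functional form: the caller passes the weight and bias explicitly.
y_functional = F.conv2d(x, conv.weight, conv.bias)

same = torch.allclose(y_module, y_functional)
print(same, tuple(y_module.shape))
```

Both calls should produce the same result, since the module delegates to the functional version with its stored parameters.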

Conv2d

The convolution process is shown in the animations at the end of this article.

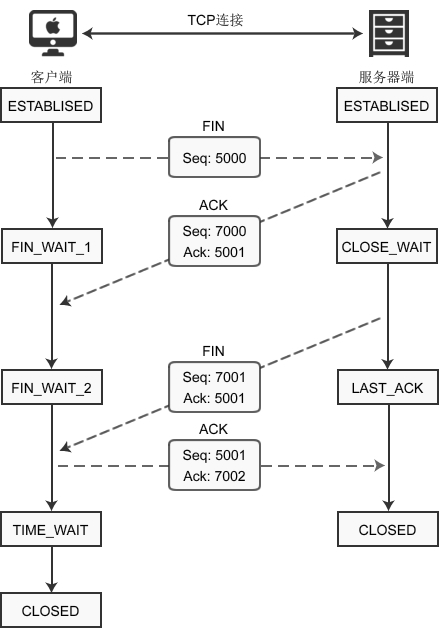

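Computation

The input feature map of a convolutional layer has size H_i × W_i × C_i:

- H_i: height of the input feature map

- W_i: width of the input feature map

- C_i: number of channels of the input feature map (for the first convolutional layer this is the number of channels of the input image; for an intermediate layer it is the number of output channels of the previous layer)

The convolutional layer has the following parameters:

- P: padding, the number of rows and columns of zeros added on each side

- F: side length of the square convolution kernel

- S: stride

- K: number of output channels

The output feature map has size H_o × W_o × C_o, where each term is computed as follows:

- H_o = ⌊(H_i + 2P − F)/S⌋ + 1

- W_o = ⌊(W_i + 2P − F)/S⌋ + 1

- C_o = K

- The division is rounded down: a position where the kernel would extend past the (padded) boundary is not computed.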
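The output-size formula can be written as a small helper. This is a sketch in plain Python; the function name `conv_output_size` is made up for illustration, and integer division (`//`) plays the role of skipping positions that would run past the boundary.

```python
def conv_output_size(size_in: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output length along one spatial axis: floor((size_in + 2P - F) / S) + 1."""
    return (size_in + 2 * padding - kernel) // stride + 1


print(conv_output_size(5, 3))                       # 3
print(conv_output_size(5, 2, stride=2))             # 2, since (5 - 2) // 2 = 1
print(conv_output_size(5, 3, stride=2, padding=2))  # 4
```

The same helper applies to height and width separately, so non-square inputs only need two calls.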

The parameter count is split into weights and biases:

First, the number of weight parameters: F × F × C_i × K

Next, the number of bias parameters: K

So the total parameter count is: F × F × C_i × K + K
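As a quick check, the formula can be compared against the parameters that an actual layer allocates. The layer sizes below (F = 5, C_i = 3, K = 16) are arbitrary example values, and `conv_param_count` is a hypothetical helper name.

```python
import torch.nn as nn


def conv_param_count(kernel: int, in_channels: int, out_channels: int, bias: bool = True) -> int:
    """F * F * C_i * K weights, plus K biases if bias is enabled."""
    weights = kernel * kernel * in_channels * out_channels
    biases = out_channels if bias else 0
    return weights + biases


layer = nn.Conv2d(in_channels=3, out_channels=16, kernel_size=5)
actual = sum(p.numel() for p in layer.parameters())
expected = conv_param_count(5, 3, 16)
print(expected, actual)  # 1216 1216
```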

Worked examples

| Input | Kernel | Stride | Padding | Output | Calculation |
|---|---|---|---|---|---|
| 5x5 | 2x2 | 1 | 0 | 4x4 | 4 = (5-2)/1 + 1 |
| 5x5 | 3x3 | 1 | 0 | 3x3 | 3 = (5-3)/1 + 1 |
| 5x5 | 2x2 | 2 | 0 | 2x2 | 2 = ⌊(5-2)/2⌋ + 1 |
| 6x6 | 2x2 | 2 | 0 | 3x3 | 3 = (6-2)/2 + 1 |
| 5x5 | 2x2 | 1 | 1 | 6x6 | 6 = (5 + 1*2 - 2)/1 + 1 |
| 5x5 | 3x3 | 1 | 1 | 5x5 | 5 = (5 + 1*2 - 3)/1 + 1 |
| 5x5 | 3x3 | 2 | 2 | 4x4 | 4 = (5 + 2*2 - 3)/2 + 1 |
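The rows of the table can also be checked against PyTorch itself. The sketch below feeds all-zero tensors through `F.conv2d` and compares only the output shapes; the `rows` list simply restates the table.

```python
import torch
import torch.nn.functional as F

# (input size, kernel size, stride, padding, expected output size), one tuple per table row
rows = [
    (5, 2, 1, 0, 4),
    (5, 3, 1, 0, 3),
    (5, 2, 2, 0, 2),
    (6, 2, 2, 0, 3),
    (5, 2, 1, 1, 6),
    (5, 3, 1, 1, 5),
    (5, 3, 2, 2, 4),
]

results = []
for size, k, s, p, expected in rows:
    x = torch.zeros(1, 1, size, size)  # (batch, channels, H, W)
    w = torch.zeros(1, 1, k, k)        # (out_channels, in_channels, kH, kW)
    out = F.conv2d(x, w, stride=s, padding=p)
    results.append(tuple(out.shape[-2:]) == (expected, expected))

print(all(results))
```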

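Conv2d function reference

Official documentation for torch.nn.functional.conv2d:

https://pytorch.org/docs/stable/generated/torch.nn.functional.conv2d.html#torch.nn.functional.conv2d

torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True, padding_mode='zeros', device=None, dtype=None)

- in_channels: number of channels of the input

- out_channels: number of channels of the output (K above)

- kernel_size: size of the convolution kernel; either a single number n (an n×n kernel) or a tuple. The values inside the kernel, not its size, are what training continuously adjusts.

- stride=1

- padding=0

- dilation=1: spacing between adjacent kernel elements

- groups=1: grouped convolution; usually left at 1 and rarely changed.

- bias=True: whether to add a learnable bias; usually True

- padding_mode='zeros': how the border is filled when padding is set. Defaults to 'zeros', i.e. padding with 0.

- device=None

- dtype=None

Usually only the first five parameters are set.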
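The less commonly used parameters are easiest to understand from the shapes they produce. The following sketch uses arbitrary example sizes (a 4-channel 7x7 input, 8 output channels) that are not from the original article.

```python
import torch
import torch.nn as nn

x = torch.randn(1, 4, 7, 7)  # (batch, channels, H, W), example sizes only

# dilation=2 spreads a 3x3 kernel over a 5x5 area: 2 * (3 - 1) + 1 = 5,
# so a 7x7 input shrinks to 3x3.
dilated = nn.Conv2d(4, 8, kernel_size=3, dilation=2)
dilated_shape = tuple(dilated(x).shape)

# groups=2 splits the 4 input channels into 2 groups of 2; each output
# channel only sees one group, so the weight has 2 input channels.
grouped = nn.Conv2d(4, 8, kernel_size=3, groups=2)
grouped_weight_shape = tuple(grouped.weight.shape)

# kernel_size may also be a tuple (height, width).
rect = nn.Conv2d(4, 8, kernel_size=(3, 5))
rect_shape = tuple(rect(x).shape)

print(dilated_shape, grouped_weight_shape, rect_shape)
```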

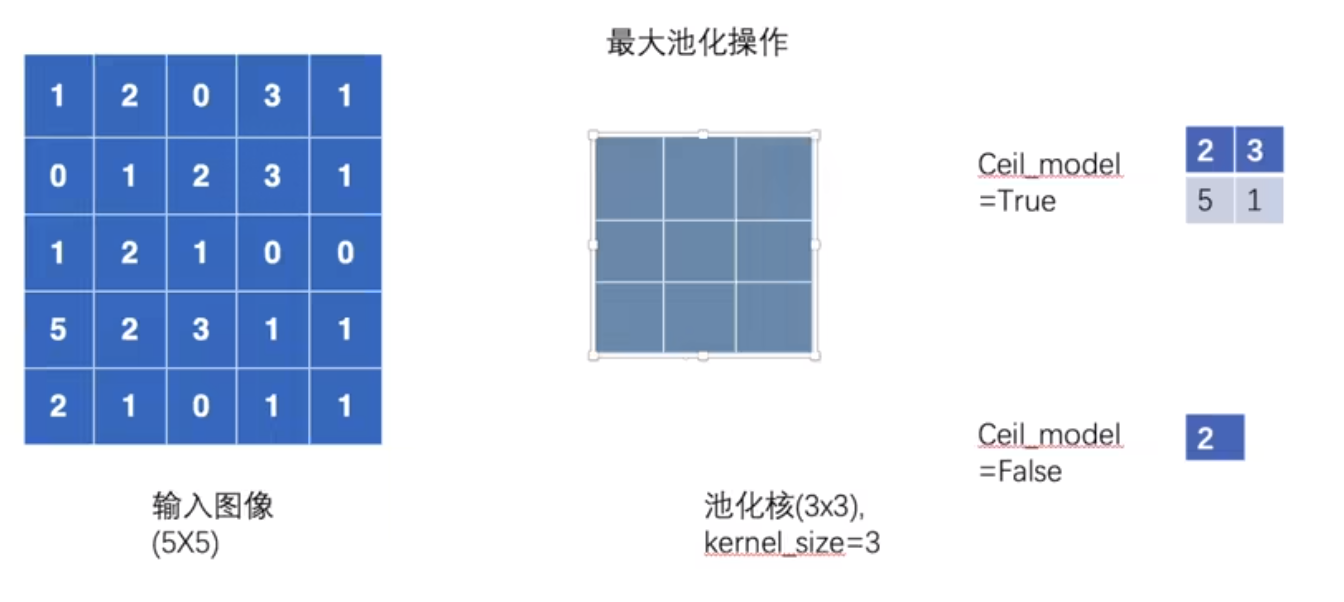

Code example

    import torch
    import torch.nn.functional as F

    # 5x5 input
    t1 = torch.tensor([[1, 2, 0, 3, 1],
                       [0, 1, 2, 3, 1],
                       [1, 2, 1, 0, 0],
                       [5, 2, 3, 1, 1],
                       [2, 1, 0, 1, 1]], dtype=torch.float32)

    # 3x3 kernel
    kernel = torch.tensor([[1, 2, 1],
                           [0, 1, 0],
                           [2, 1, 0]], dtype=torch.float32)

    print(t1.shape, kernel.shape)
    # torch.Size([5, 5]) torch.Size([3, 3])

    # F.conv2d expects 4-D tensors: input (batch, channels, H, W)
    # and weight (out_channels, in_channels, kH, kW).
    # Here the channel count and batch size are both 1.
    ip = torch.reshape(t1, (1, 1, 5, 5))
    kernel = torch.reshape(kernel, (1, 1, 3, 3))
    print(ip.shape, kernel.shape)
    # torch.Size([1, 1, 5, 5]) torch.Size([1, 1, 3, 3])

    op = F.conv2d(ip, kernel, stride=1)
    print(op)
    print(op.shape)
    # tensor([[[[10., 12., 12.],
    #           [18., 16., 16.],
    #           [13.,  9.,  3.]]]])
    # torch.Size([1, 1, 3, 3])

    # A different stride
    op = F.conv2d(ip, kernel, stride=2)
    print(op)
    print(op.shape)
    # tensor([[[[10., 12.],
    #           [13.,  3.]]]])
    # torch.Size([1, 1, 2, 2])

    # Add padding (stride is still 2)
    op = F.conv2d(ip, kernel, stride=2, padding=1)
    print(op)
    print(op.shape)
    # tensor([[[[ 1.,  4.,  8.],
    #           [ 7., 16.,  8.],
    #           [14.,  9.,  4.]]]])
    # torch.Size([1, 1, 3, 3])

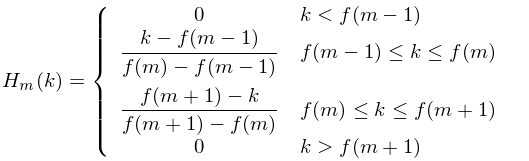

MaxPool2d

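The purpose of pooling is to keep the important features while reducing the amount of data.

Max pooling is a form of downsampling.

Pooling is applied to each depth slice independently, so the output depth equals the input depth.

Computation

- Input height, width, depth: H_i, W_i, D_i

- Filter width and height: f_w, f_h

- S: stride

The output is:

H_o = ⌊(H_i - f_h)/S⌋ + 1

W_o = ⌊(W_i - f_w)/S⌋ + 1

D_o = D_i

Example: the input has dimensions 4x4x5 (HxWxD)

The filter size is 2x2 (HxW)

The stride is 2 in both height and width (S)

The output is therefore 2x2x5: H_o = (4 - 2)/2 + 1 = 2, W_o = (4 - 2)/2 + 1 = 2, D_o = 5.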
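This example can be reproduced with `F.max_pool2d`. The sketch below assumes PyTorch's (batch, channels, H, W) layout, so the 4x4x5 (HxWxD) input becomes a tensor of shape (1, 5, 4, 4); the values 0..79 are arbitrary filler chosen so the maxima are easy to read.

```python
import torch
import torch.nn.functional as F

# Depth 5, height 4, width 4, with a leading batch dimension of 1.
x = torch.arange(5 * 4 * 4, dtype=torch.float32).reshape(1, 5, 4, 4)

out = F.max_pool2d(x, kernel_size=2, stride=2)

print(tuple(out.shape))  # (1, 5, 2, 2): depth unchanged, height and width halved
print(out[0, 0])         # first depth slice: [[5., 7.], [13., 15.]]
```

Each depth slice is pooled on its own, which is why the channel dimension stays at 5.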

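Function description

- Official documentation

https://pytorch.org/docs/stable/generated/torch.nn.MaxPool2d.html#torch.nn.MaxPool2d

torch.nn.MaxPool2d(kernel_size, stride=None, padding=0, dilation=1, return_indices=False, ceil_mode=False)

- stride=None: defaults to kernel_size

- ceil_mode: whether a window that extends past the input boundary is still computed. With ceil_mode=True the output size is rounded up and such partial windows are kept; with the default False it is rounded down and they are dropped.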

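Code implementation

    import torch
    import torch.nn as nn

    # Same 5x5 tensor as in the Conv2d example
    t1 = torch.tensor([[1, 2, 0, 3, 1],
                       [0, 1, 2, 3, 1],
                       [1, 2, 1, 0, 0],
                       [5, 2, 3, 1, 1],
                       [2, 1, 0, 1, 1]], dtype=torch.float32)

    # MaxPool2d expects input shaped (N, C, H, W) or (C, H, W),
    # so reshape the 5x5 tensor to 4-D.
    ip = torch.reshape(t1, (-1, 1, 5, 5))

    class Net(nn.Module):
        def __init__(self):
            super().__init__()
            # stride defaults to kernel_size, so the windows do not overlap
            self.maxpool = nn.MaxPool2d(kernel_size=3, ceil_mode=True)
            # self.maxpool = nn.MaxPool2d(kernel_size=3, ceil_mode=False)

        def forward(self, x):
            return self.maxpool(x)

    net = Net()
    ret = net(ip)
    print(ret)
    # tensor([[[[2., 3.],
    #           [5., 1.]]]])   with ceil_mode=True
    # tensor([[[[2.]]]])       with ceil_mode=False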
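Why the two settings give a 2x2 and a 1x1 result can be seen from the output-size arithmetic. The helper below is an illustrative sketch (the name `pool_output_size` is hypothetical, and dilation is assumed to be 1); it follows the rule in the PyTorch documentation that, with ceil_mode, a window is only kept if it starts inside the input or its left padding.

```python
def pool_output_size(size_in, kernel, stride=None, padding=0, ceil_mode=False):
    """Output length of a pooling layer along one axis (dilation assumed to be 1)."""
    stride = kernel if stride is None else stride  # MaxPool2d's default stride
    numerator = size_in + 2 * padding - kernel
    if ceil_mode:
        steps = -(-numerator // stride)  # integer ceiling division
    else:
        steps = numerator // stride      # floor division
    size_out = steps + 1
    # Drop the last window if it would start past the input plus left padding.
    if ceil_mode and (size_out - 1) * stride >= size_in + padding:
        size_out -= 1
    return size_out


print(pool_output_size(5, 3, ceil_mode=True))   # 2: the partial window at the edge is kept
print(pool_output_size(5, 3, ceil_mode=False))  # 1: only the one full 3x3 window fits
```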

Using it on a dataset

    import torch.nn as nn
    import torchvision
    from torch.utils.data import DataLoader
    from torch.utils.tensorboard import SummaryWriter

    data_path = '/xxxx/cifar10'

    # CIFAR-10 test split, with images converted to tensors
    dataset = torchvision.datasets.CIFAR10(data_path, train=False, download=True,
                                           transform=torchvision.transforms.ToTensor())
    data_loader = DataLoader(dataset, batch_size=64)

    class Net(nn.Module):
        def __init__(self):
            super().__init__()
            self.maxpool1 = nn.MaxPool2d(kernel_size=3, ceil_mode=False)

        def forward(self, x):
            return self.maxpool1(x)

    writer = SummaryWriter('logs_maxpool1')
    net = Net()

    # Log each input batch and its pooled output under the same step
    for step, (imgs, targets) in enumerate(data_loader):
        writer.add_images('input', imgs, step)
        output = net(imgs)
        writer.add_images('output', output, step)

    writer.close()

- Start TensorBoard:

tensorboard --logdir=logs_maxpool1

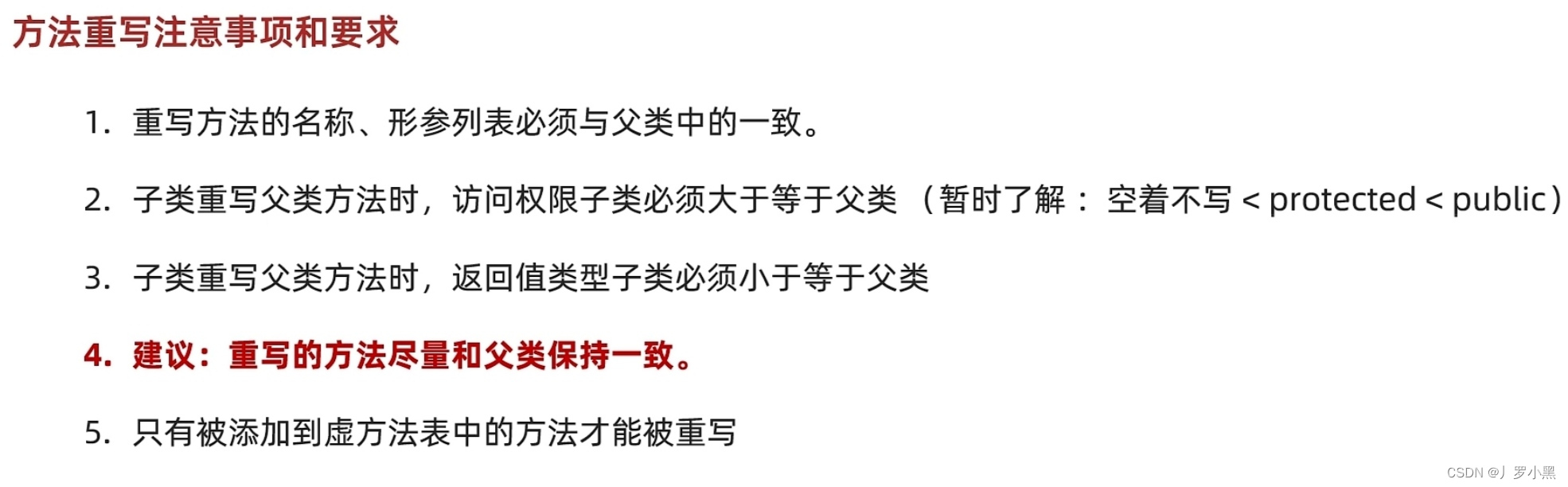
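The effect of the pooling layer on a CIFAR-10 batch can be checked without downloading the dataset. The sketch below uses a random tensor as a stand-in for one batch (64 RGB images of 32x32 with values in [0, 1], which is what ToTensor() would produce); the random data is an assumption for illustration only.

```python
import torch
import torch.nn as nn

# Stand-in for one CIFAR-10 batch after ToTensor(): (batch, channels, H, W)
imgs = torch.rand(64, 3, 32, 32)

pool = nn.MaxPool2d(kernel_size=3, ceil_mode=False)
output = pool(imgs)

print(tuple(output.shape))  # (64, 3, 10, 10), since (32 - 3) // 3 + 1 = 10
```

The output keeps 3 channels, which is why it can still be logged as RGB images with add_images; each image is simply about a third of the original resolution.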

Convolution process animations

Images from: https://github.com/vdumoulin/conv_arithmetic/blob/master/README.md

Convolution animations

No padding, no strides

Arbitrary padding, no strides

Half padding, no strides

Full padding, no strides

No padding, strides

Padding, strides

Padding, strides (odd)

Transposed convolution animations

No padding, no strides, transposed

Arbitrary padding, no strides, transposed

Half padding, no strides, transposed

Full padding, no strides, transposed

No padding, strides, transposed

Padding, strides, transposed

Padding, strides, transposed (odd)
