Change the hostname

// Change the hostname (on the other machines use ant151, ant152 and ant153)

[root@localhost ~]# hostnamectl set-hostname ant150

//快速生效

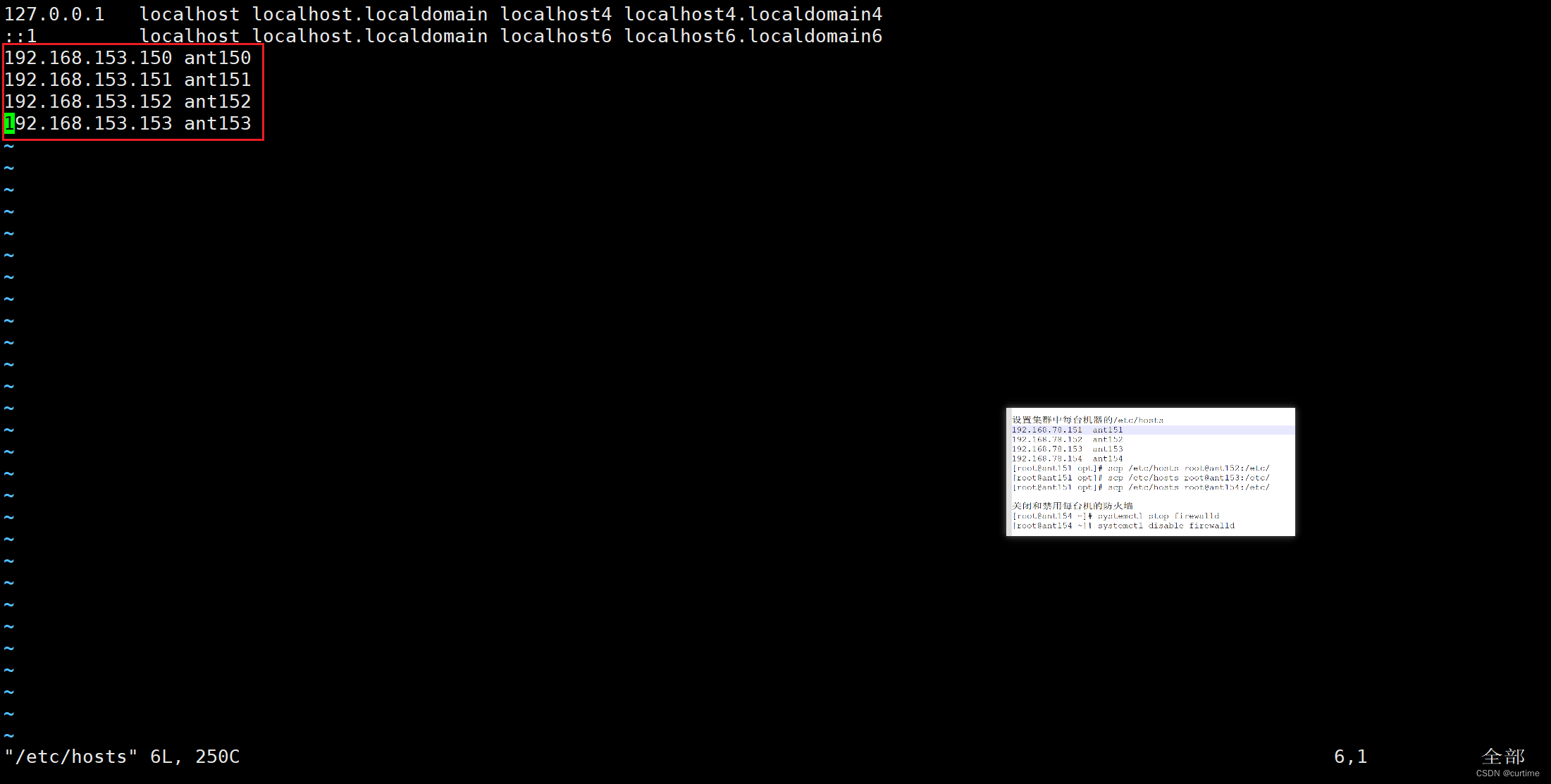

[root@localhost ~]# bash主机名称映射

[root@ant150 ~]# vim /etc/hosts

// Add the following entries

192.168.153.150 ant150

192.168.153.151 ant151

192.168.153.152 ant152

192.168.153.153 ant153
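Editing /etc/hosts by hand on several machines is easy to get wrong, and a rerun can add duplicate lines. The sketch below uses a hypothetical helper, `add_hosts`. It appends an "IP hostname" pair only when that hostname is not already mapped, so it is safe to run more than once. The check is a plain text match on the hostname, not a full hosts-file parser.

```shell
#!/bin/bash
# Hypothetical helper: append "IP hostname" pairs to a hosts file,
# skipping any hostname that already appears as a whole word in it.
# Usage: add_hosts <file> "IP name" ["IP name" ...]
add_hosts() {
  local file="$1"
  shift
  local entry ip name
  for entry in "$@"; do
    ip="${entry%% *}"     # text before the first space
    name="${entry##* }"   # text after the last space
    # already mapped if the name follows whitespace and ends the line or a field
    if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file"; then
      echo "$ip $name" >> "$file"
    fi
  done
}
```

Example: `add_hosts /etc/hosts "192.168.153.150 ant150" "192.168.153.151 ant151" "192.168.153.152 ant152" "192.168.153.153 ant153"`.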

Passwordless SSH login


[root@ant150 ~]# ssh-keygen -t rsa -P ''

[root@ant150 ~]# cd .ssh/

[root@ant150 .ssh]# cat id_rsa.pub >> ./authorized_keys

// Copy this machine's public key to every target machine that should accept passwordless login

[root@ant150 .ssh]# ssh-copy-id -i ./id_rsa.pub -p22 root@ant150

[root@ant150 .ssh]# ssh-copy-id -i ./id_rsa.pub -p22 root@ant151

[root@ant150 .ssh]# ssh-copy-id -i ./id_rsa.pub -p22 root@ant152

[root@ant150 .ssh]# ssh-copy-id -i ./id_rsa.pub -p22 root@ant153

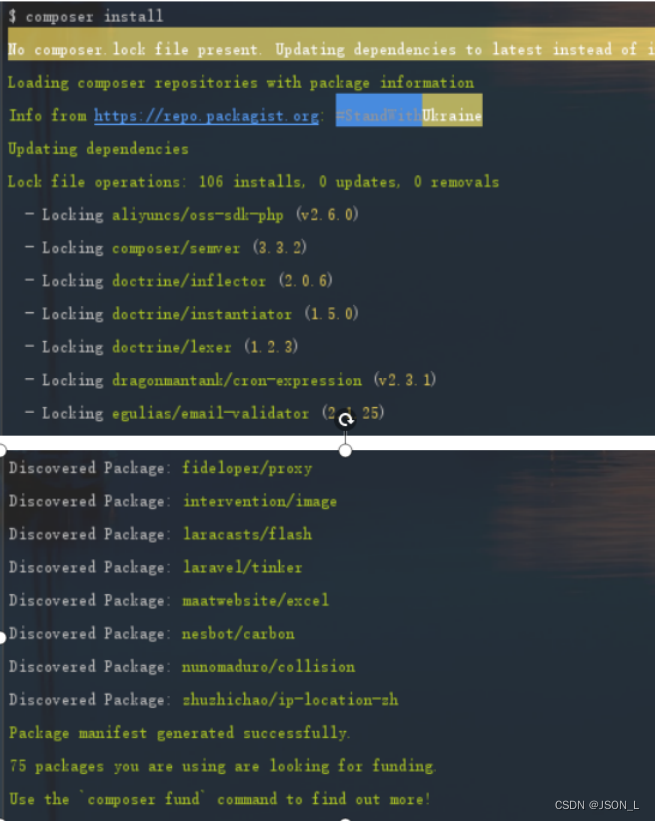
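The four ssh-copy-id calls can also be written as a loop. The sketch below is an illustration with assumed names: the functions and the `DRY_RUN` switch are hypothetical. `check_keys` runs `ssh -o BatchMode=yes`, which makes ssh fail instead of prompting, so any host that still asks for a password shows up as an error.

```shell
#!/bin/bash
# Hosts that should accept key-based login from this machine.
HOSTS="ant150 ant151 ant152 ant153"

# Run a command, or only print it when DRY_RUN=1 (hypothetical switch).
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Push the local public key to every host (prompts once per host).
copy_keys() {
  local h
  for h in $HOSTS; do
    run ssh-copy-id -i ~/.ssh/id_rsa.pub -p 22 "root@$h"
  done
}

# Verify login works without a password; BatchMode=yes disables prompts.
check_keys() {
  local h
  for h in $HOSTS; do
    run ssh -o BatchMode=yes "root@$h" hostname
  done
}
```

Run `DRY_RUN=1 copy_keys` first to see the commands, then `copy_keys` and `check_keys`.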

Install the JDK and ZooKeeper with a script

#!/bin/bash

echo 'auto install beginning....'

# global switches: set a component to false to skip it

jdk=true

zk=true

# tar -C requires the target directory to exist

mkdir -p /opt/soft

if [ "$jdk" = true ];then

echo 'jdk install set true'

echo 'setup jdk-8u321-linux-x64.tar.gz'

tar -zxf /opt/install/jdk-8u321-linux-x64.tar.gz -C /opt/soft/

mv /opt/soft/jdk1.8.0_321 /opt/soft/jdk180

# each '73a' inserts after line 73 of /etc/profile, so the lines are written in reverse;

# the resulting block reads: #jdk, JAVA_HOME, CLASSPATH, PATH

sed -i '73a\export PATH=$PATH:$JAVA_HOME/bin' /etc/profile

sed -i '73a\export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar' /etc/profile

sed -i '73a\export JAVA_HOME=/opt/soft/jdk180' /etc/profile

sed -i '73a\#jdk' /etc/profile

echo 'setup jdk 8 success!!!'

fi

hostname=$(hostname)

if [ "$zk" = true ];then

echo 'zookeeper install set true'

echo 'setup zookeeper-3.4.5-cdh5.14.2.tar.gz'

tar -zxf /opt/install/zookeeper-3.4.5-cdh5.14.2.tar.gz -C /opt/soft/

mv /opt/soft/zookeeper-3.4.5-cdh5.14.2 /opt/soft/zk345

cp /opt/soft/zk345/conf/zoo_sample.cfg /opt/soft/zk345/conf/zoo.cfg

mkdir -p /opt/soft/zk345/datas

# line 12 of zoo_sample.cfg is the default dataDir=/tmp/zookeeper

sed -i '12c dataDir=/opt/soft/zk345/datas' /opt/soft/zk345/conf/zoo.cfg

# this host becomes server.0 (2287: follower-to-leader port, 3387: leader election port)

echo "server.0=$hostname:2287:3387" >> /opt/soft/zk345/conf/zoo.cfg

echo "0" > /opt/soft/zk345/datas/myid

# same reverse-order insertion: the block reads #ZK, ZOOKEEPER_HOME, PATH

sed -i '73a\export PATH=$PATH:$ZOOKEEPER_HOME/bin' /etc/profile

sed -i '73a\export ZOOKEEPER_HOME=/opt/soft/zk345' /etc/profile

sed -i '73a\#ZK' /etc/profile

echo 'setup zookeeper success!!!'

fi

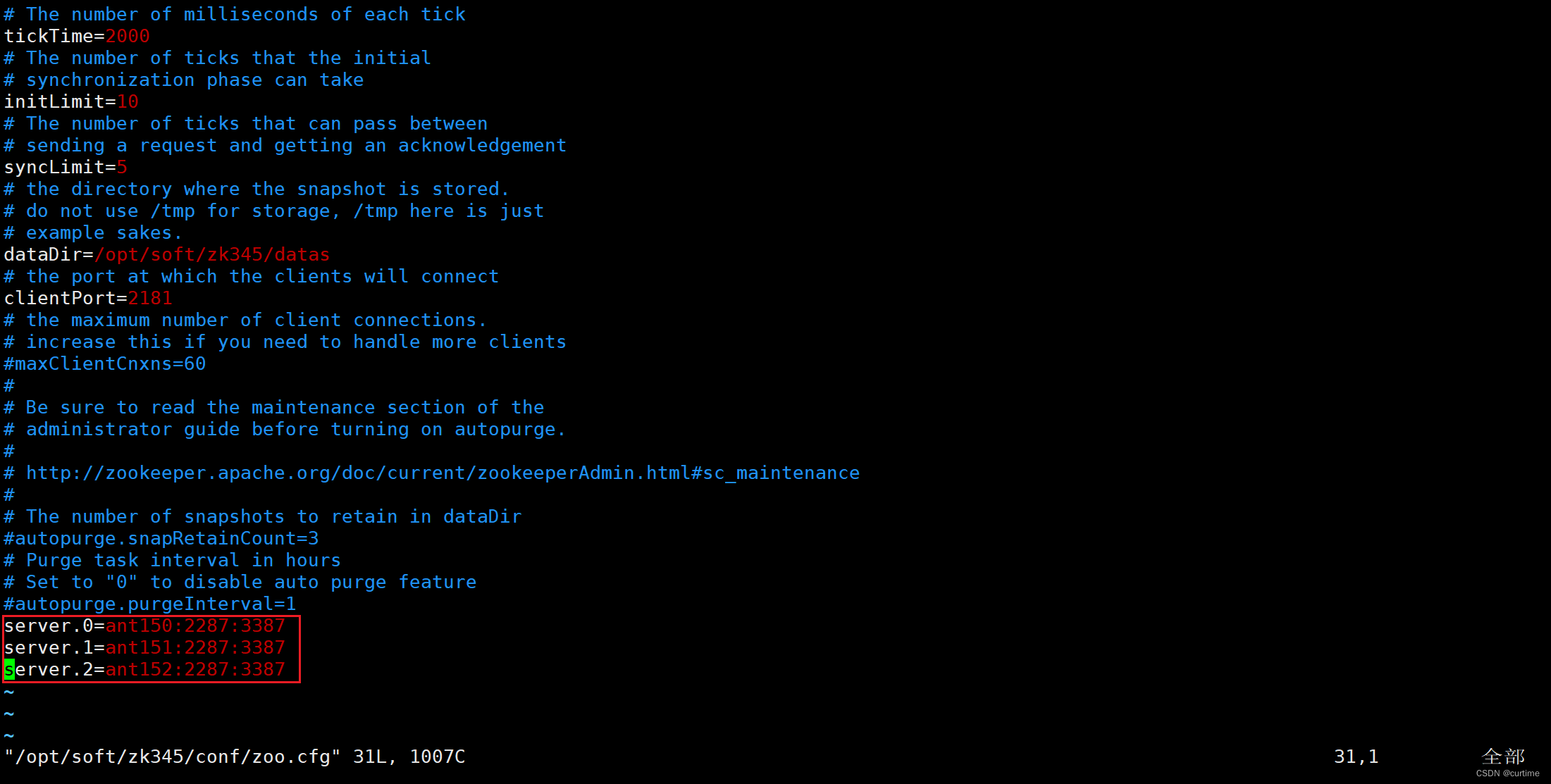
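The script assumes /etc/profile has at least 73 lines, and running it twice inserts the exports twice. One alternative, not the approach used in this walkthrough, is to give each component its own file under /etc/profile.d. CentOS's /etc/profile sources every /etc/profile.d/*.sh on login. Here is a sketch with a hypothetical `write_env` helper:

```shell
#!/bin/bash
# Directory sourced by /etc/profile on login (overridable for testing).
PROFILE_D="${PROFILE_D:-/etc/profile.d}"

# Hypothetical helper: write one "export KEY=VALUE" line per argument
# to $PROFILE_D/<name>.sh. ">" truncates the file, so rerunning
# replaces the block instead of appending a second copy.
write_env() {
  local name="$1" kv
  shift
  for kv in "$@"; do
    echo "export $kv"
  done > "$PROFILE_D/$name.sh"
}
```

Example: `write_env jdk 'JAVA_HOME=/opt/soft/jdk180' 'CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar' 'PATH=$PATH:$JAVA_HOME/bin'`. The single quotes keep `$PATH` and `$JAVA_HOME` unexpanded in the file. With this approach, copy the /etc/profile.d files to the other servers as well as /etc/profile.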

Make sure zoo.cfg lists all three servers (the install script already added server.0)

server.0=ant150:2287:3387

server.1=ant151:2287:3387

server.2=ant152:2287:3387
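The N in each server.N line must match the contents of that host's myid file. The sketch below takes the host list from this setup and uses hypothetical helper names. It generates the lines from an ordered host list, and it can also look up a host's ID in zoo.cfg, so myid need not be typed by hand. The hostname is used as a regular expression, which is fine for names like ant150.

```shell
#!/bin/bash
# ZooKeeper hosts in this guide; a host's position in the list is its ID.
ZK_HOSTS="ant150 ant151 ant152"

# Print the server.N lines for zoo.cfg.
# 2287: port followers use to reach the leader; 3387: leader election port.
zk_server_lines() {
  local id=0 h
  for h in $ZK_HOSTS; do
    echo "server.$id=$h:2287:3387"
    id=$((id + 1))
  done
}

# Print the ID that zoo.cfg ($1) assigns to host $2; empty if not listed.
zk_myid() {
  sed -n "s/^server\.\([0-9][0-9]*\)=$2:.*/\1/p" "$1"
}
```

On each ZooKeeper host: `zk_myid /opt/soft/zk345/conf/zoo.cfg "$(hostname)" > /opt/soft/zk345/datas/myid`.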

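Delete all files in the datas directory (each server's myid is written again after copying)

[root@ant150 datas]# rm -rf /opt/soft/zk345/datas/*

Copy the JDK, ZooKeeper and /etc/profile from ant150 to the other servers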

[root@ant150 zk345]# scp -r ../zk345/ root@ant151:/opt/soft/

[root@ant150 zk345]# scp -r ../zk345/ root@ant152:/opt/soft/

[root@ant150 zk345]# scp -r ../zk345/ root@ant153:/opt/soft/

[root@ant150 opt]# scp -r ./soft/jdk180/ root@ant151:/opt/soft/

[root@ant150 opt]# scp -r ./soft/jdk180/ root@ant152:/opt/soft/

[root@ant150 opt]# scp -r ./soft/jdk180/ root@ant153:/opt/soft/

[root@ant150 datas]# scp /etc/profile root@ant151:/etc/

[root@ant150 datas]# scp /etc/profile root@ant152:/etc/

[root@ant150 datas]# scp /etc/profile root@ant153:/etc/

[root@ant151 datas]# source /etc/profile

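[root@ant152 datas]# source /etc/profile

On ant150, ant151 and ant152, write each server's ID to myid in /opt/soft/zk345/datas (it must match the N of that host's server.N line in zoo.cfg)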

[root@ant150 datas]# echo "0" > myid

[root@ant151 datas]# echo "1" > myid

[root@ant152 datas]# echo "2" > myid

Start ZooKeeper with zkServer.sh start

[root@ant150 datas]# zkServer.sh start

[root@ant151 datas]# zkServer.sh start

[root@ant152 datas]# zkServer.sh start

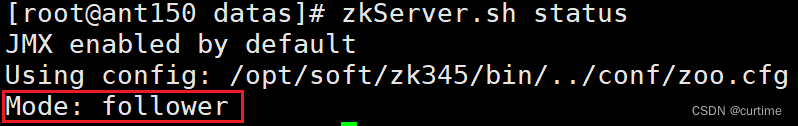

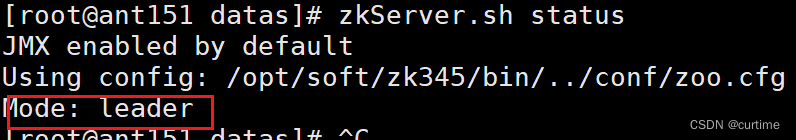

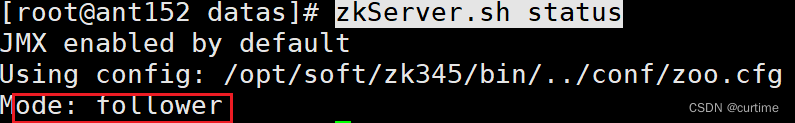

// Check the status

[root@ant150 datas]# zkServer.sh status

[root@ant151 datas]# zkServer.sh status

[root@ant152 datas]# zkServer.sh status

The installation succeeded if one node reports Mode: leader and the other two report Mode: follower.
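The leader/follower check can also be scripted. The sketch below uses a hypothetical `check_zk_modes` function. It reads the combined `zkServer.sh status` output on stdin and counts the `Mode:` lines. It succeeds only with exactly one leader and N-1 followers, where N is the ensemble size passed as the first argument (default 3).

```shell
#!/bin/bash
# Hypothetical helper: read zkServer.sh status output from all nodes on stdin,
# print the counts, and return success only for one leader + (N-1) followers.
check_zk_modes() {
  local expected="${1:-3}" out leaders followers
  out=$(cat)
  leaders=$(printf '%s\n' "$out" | grep -c '^Mode: leader')
  followers=$(printf '%s\n' "$out" | grep -c '^Mode: follower')
  echo "leaders=$leaders followers=$followers"
  [ "$leaders" -eq 1 ] && [ "$followers" -eq $((expected - 1)) ]
}
```

Combined with the zkop.sh batch script written later in this guide: `/opt/shell/zkop.sh status 2>&1 | check_zk_modes 3`.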

Time synchronization

// Install ntpdate

[root@ant150 datas]# yum install ntpdate -y

// Create a cron job that syncs the clock every 10 minutes

[root@ant150 conf]# crontab -e

*/10 * * * * /usr/sbin/ntpdate time.windows.com
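`crontab -e` opens an editor. You may want to add the same entry non-interactively instead, for example from a script run on every node, without duplicating it on a rerun. The sketch below uses a hypothetical filter, `merge_cron`. It copies the current crontab from stdin to stdout and appends the ntpdate line only if that exact line is not already there.

```shell
#!/bin/bash
# The cron entry used in this guide.
NTP_JOB='*/10 * * * * /usr/sbin/ntpdate time.windows.com'

# Hypothetical filter: echo the existing crontab (stdin) and append
# NTP_JOB once; -x -F matches the whole line as a fixed string.
merge_cron() {
  local current
  current=$(cat)
  [ -n "$current" ] && printf '%s\n' "$current"
  printf '%s\n' "$current" | grep -qxF "$NTP_JOB" || printf '%s\n' "$NTP_JOB"
}
```

Usage: `crontab -l 2>/dev/null | merge_cron | crontab -`. The `2>/dev/null` hides the "no crontab for root" message shown when the user has no crontab yet.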

[root@ant150 conf]# service crond reload

[root@ant150 conf]# service crond restart

Write a script that starts, stops or checks the status of ZooKeeper on all three nodes at once

[root@ant150 shell]# vim zkop.sh

[root@ant150 shell]# chmod 777 ./zkop.sh

#! /bin/bash

case $1 in

"start") {

for i in ant150 ant151 ant152

do

ssh $i "source /etc/profile; /opt/soft/zk345/bin/zkServer.sh start "

done

};;

"stop"){

for i in ant150 ant151 ant152

do

ssh $i "source /etc/profile; /opt/soft/zk345/bin/zkServer.sh stop "

done

};;

"status"){

for i in ant150 ant151 ant152

do

ssh $i "source /etc/profile; /opt/soft/zk345/bin/zkServer.sh status "

done

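};;

*)

echo "Usage: $0 {start|stop|status}"

;;

esac

Write a script that shows the running Java services (jps) on every server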

[root@ant150 shell]# vim showjps.sh

[root@ant150 shell]# chmod 777 ./showjps.sh

#! /bin/bash

for i in ant150 ant151 ant152 ant153

do

echo "-------- $i running services --------"

ssh $i "source /etc/profile; /opt/soft/jdk180/bin/jps"

done

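Install psmisc on every server (it provides fuser, which the sshfence fencing method configured below relies on)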

[root@ant150 shell]# yum install psmisc -y

[root@ant151 shell]# yum install psmisc -y

[root@ant152 shell]# yum install psmisc -y

[root@ant153 shell]# yum install psmisc -y

Extract hadoop-3.1.3.tar.gz

[root@ant150 install]# tar -zxf hadoop-3.1.3.tar.gz -C ../soft/

// Rename the directory

[root@ant150 install]# mv ../soft/hadoop-3.1.3/ ../soft/hadoop313

Edit the configuration files in /opt/soft/hadoop313/etc/hadoop

workers

ant150

ant151

ant152

ant153

hadoop-env.sh

export JAVA_HOME=/opt/soft/jdk180

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export HDFS_JOURNALNODE_USER=root

export HDFS_ZKFC_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://gky</value>

<description>Logical name; must match dfs.nameservices in hdfs-site.xml</description>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/soft/hadoop313/tmpdata</value>

<description>Local Hadoop temporary directory</description>

</property>

<property>

<name>hadoop.http.staticuser.user</name>

<value>root</value>

<description>Default (static) user for the web UIs</description>

</property>

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

<description>Buffer size for reading and writing files: 128 KB</description>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>ant150:2181,ant151:2181,ant152:2181</value>

<description></description>

</property>

<property>

<name>ha.zookeeper.session-timeout.ms</name>

<value>10000</value>

<description>Timeout for Hadoop's ZooKeeper session: 10 s</description>

</property>

</configuration>

hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>3</value>

<description>Number of replicas kept for each HDFS block</description>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/opt/soft/hadoop313/data/dfs/name</value>

<description>Directory on the NameNode that stores the HDFS namespace metadata</description>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/opt/soft/hadoop313/data/dfs/data</value>

<description>Directory on the DataNode where data blocks are physically stored</description>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>ant150:9869</value>

<description></description>

</property>

<property>

<name>dfs.nameservices</name>

<value>gky</value>

<description>HDFS nameservice ID; must match core-site.xml</description>

</property>

<property>

<name>dfs.ha.namenodes.gky</name>

<value>nn1,nn2</value>

<description>gky is the logical cluster name; this maps it to the logical names of the two NameNodes</description>

</property>

<property>

<name>dfs.namenode.rpc-address.gky.nn1</name>

<value>ant150:9000</value>

<description>RPC address of namenode1</description>

</property>

<property>

<name>dfs.namenode.http-address.gky.nn1</name>

<value>ant150:9870</value>

<description>HTTP address of namenode1</description>

</property>

<property>

<name>dfs.namenode.rpc-address.gky.nn2</name>

<value>ant151:9000</value>

<description>RPC address of namenode2</description>

</property>

<property>

<name>dfs.namenode.http-address.gky.nn2</name>

<value>ant151:9870</value>

<description>HTTP address of namenode2</description>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://ant150:8485;ant151:8485;ant152:8485/gky</value>

<description>Shared storage location for the NameNode edits (the list of JournalNodes)</description>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/opt/soft/hadoop313/data/journaldata</value>

<description>Local disk directory where the JournalNode stores its data</description>

</property>

<!--failover-->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

<description>Enable automatic NameNode failover</description>

</property>

<property>

<name>dfs.client.failover.proxy.provider.gky</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

<description>Class HDFS clients use to locate the active NameNode after a failover</description>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

<description>Fencing method that prevents split-brain</description>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_rsa</value>

<description>sshfence requires passwordless SSH login</description>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

<description>Disable HDFS permission checks</description>

</property>

<property>

<name>dfs.image.transfer.bandwidthPerSec</name>

<value>1048576</value>

<description></description>

</property>

<property>

<name>dfs.block.scanner.volume.bytes.per.second</name>

<value>1048576</value>

<description></description>

</property>

</configuration>
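fs.defaultFS in core-site.xml and dfs.nameservices in hdfs-site.xml must refer to the same nameservice (gky here). On a node where Hadoop is on the PATH, `hdfs getconf -confKey fs.defaultFS` prints the effective value. The sketch below is a text-level check of the two files before they are copied to the other servers. It assumes one `<name>` and one `<value>` per line, as in the files above, and it is not a real XML parser. The helper names are hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: print the <value> of property $2 in Hadoop config file $1.
get_prop() {
  awk -v key="$2" '
    /<name>/  { gsub(/.*<name>|<\/name>.*/, ""); name = $0 }
    /<value>/ && name == key { gsub(/.*<value>|<\/value>.*/, ""); print; exit }
  ' "$1"
}

# Succeeds when fs.defaultFS in core-site ($1) is hdfs://<dfs.nameservices in hdfs-site ($2)>.
check_nameservice() {
  local fs ns
  fs=$(get_prop "$1" fs.defaultFS)      # e.g. hdfs://gky
  ns=$(get_prop "$2" dfs.nameservices)  # e.g. gky
  [ -n "$ns" ] && [ "$fs" = "hdfs://$ns" ]
}
```

Example: `check_nameservice /opt/soft/hadoop313/etc/hadoop/core-site.xml /opt/soft/hadoop313/etc/hadoop/hdfs-site.xml && echo ok`.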

mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

<description>Framework that runs jobs: local, classic or yarn</description>

<final>true</final>

</property>

<property>

<name>mapreduce.application.classpath</name>

<value>/opt/soft/hadoop313/etc/hadoop:/opt/soft/hadoop313/share/hadoop/common/lib/*:/opt/soft/hadoop313/share/hadoop/common/*:/opt/soft/hadoop313/share/hadoop/hdfs/*:/opt/soft/hadoop313/share/hadoop/hdfs/lib/*:/opt/soft/hadoop313/share/hadoop/mapreduce/*:/opt/soft/hadoop313/share/hadoop/mapreduce/lib/*:/opt/soft/hadoop313/share/hadoop/yarn/*:/opt/soft/hadoop313/share/hadoop/yarn/lib/*</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>ant150:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>ant150:19888</value>

</property>

<property>

<name>mapreduce.map.memory.mb</name>

<value>1024</value>

<description>Memory for each map task</description>

</property>

<property>

<name>mapreduce.reduce.memory.mb</name>

<value>2048</value>

<description>Memory for each reduce task</description>

</property>

</configuration>

yarn-site.xml

<configuration>

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

<description>Enable ResourceManager high availability</description>

</property>

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>yrcabc</value>

<description>ID of the YARN cluster</description>

</property>

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

<description>Logical IDs of the ResourceManagers</description>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>ant152</value>

<description>Host of rm1</description>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>ant153</value>

<description>Host of rm2</description>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm1</name>

<value>ant152:8088</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm2</name>

<value>ant153:8088</value>

</property>

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>ant150:2181,ant151:2181,ant152:2181</value>

<description>Addresses of the ZooKeeper ensemble</description>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

<description>Auxiliary service required to run MapReduce programs</description>

</property>

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>/opt/soft/hadoop313/tmpdata/yarn/local</value>

<description>NodeManager local storage directory</description>

</property>

<property>

<name>yarn.nodemanager.log-dirs</name>

<value>/opt/soft/hadoop313/tmpdata/yarn/log</value>

<description>NodeManager local log directory</description>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>2048</value>

<description>Total memory (MB) the NodeManager can allocate to containers</description>

</property>

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>2</value>

<description>Number of CPU cores (vcores) the NodeManager can allocate to containers</description>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>256</value>

<description></description>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

<description>Enable YARN log aggregation</description>

</property>

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>86400</value>

<description>How long aggregated YARN logs are kept, in seconds (86400 = 1 day)</description>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

<description></description>

</property>

<property>

<name>yarn.application.classpath</name>

<value>/opt/soft/hadoop313/etc/hadoop:/opt/soft/hadoop313/share/hadoop/common/lib/*:/opt/soft/hadoop313/share/hadoop/common/*:/opt/soft/hadoop313/share/hadoop/hdfs/*:/opt/soft/hadoop313/share/hadoop/hdfs/lib/*:/opt/soft/hadoop313/share/hadoop/mapreduce/*:/opt/soft/hadoop313/share/hadoop/mapreduce/lib/*:/opt/soft/hadoop313/share/hadoop/yarn/*:/opt/soft/hadoop313/share/hadoop/yarn/lib/*</value>

<description></description>

</property>

<property>

<name>yarn.nodemanager.env-whitelist</name>

<value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>

<description></description>

</property>

</configuration>

Add environment variables on ant150

[root@ant150 ~]# vim /etc/profile

// Add the following

# HADOOP_HOME

export HADOOP_HOME=/opt/soft/hadoop313

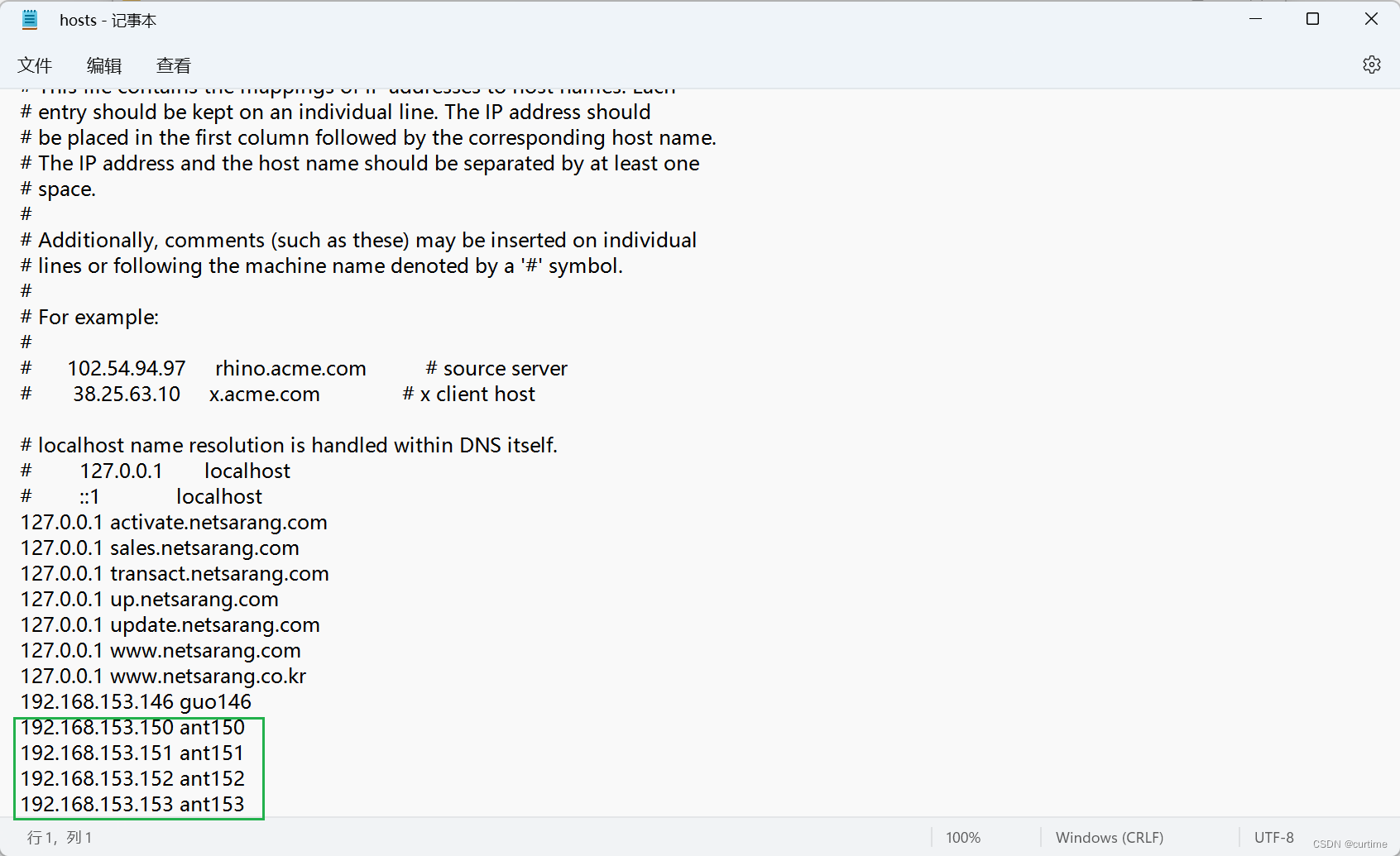

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HADOOP_HOME/lib在windows映射IP地址

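Add the same IP and hostname entries to the hosts file in C:\Windows\System32\drivers\etc, so the cluster hostnames also resolve from Windows (for example in a browser)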

Copy the modified hadoop313 directory and /etc/profile to the other three servers

[root@ant150 soft]# scp -r hadoop313/ root@ant151:/opt/soft/

[root@ant150 soft]# scp -r hadoop313/ root@ant152:/opt/soft/

[root@ant150 soft]# scp -r hadoop313/ root@ant153:/opt/soft/

[root@ant150 etc]# scp /etc/profile root@ant151:/etc/

[root@ant150 etc]# scp /etc/profile root@ant152:/etc/

[root@ant150 etc]# scp /etc/profile root@ant153:/etc/

First cluster startup

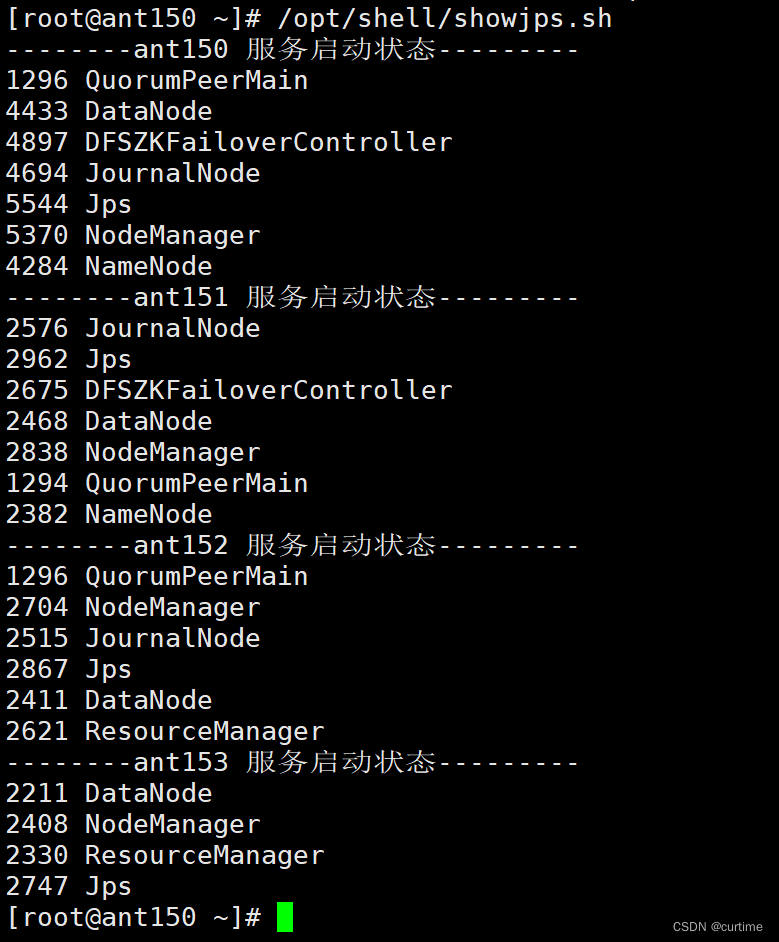

1. Start ZooKeeper with the script and check the services

[root@ant150 ~]# /opt/shell/zkop.sh start

[root@ant150 ~]# /opt/shell/showjps.sh

2. Start the JournalNode service on ant150, ant151 and ant152

[root@ant150 ~]# hdfs --daemon start journalnode

[root@ant151 ~]# hdfs --daemon start journalnode

[root@ant152 ~]# hdfs --daemon start journalnode

3. Format the HDFS NameNode on ant150

[root@ant150 ~]# hdfs namenode -format

4. Start the NameNode service on ant150

[root@ant150 ~]# hdfs --daemon start namenode

5. Sync the NameNode metadata on ant151

[root@ant151 soft]# hdfs namenode -bootstrapStandby

6. Start the NameNode service on ant151

[root@ant151 soft]# hdfs --daemon start namenode

// Check the NameNode states (both are expected to be standby here, because no ZKFC is running yet)

[root@ant150 soft]# hdfs haadmin -getServiceState nn1

[root@ant150 soft]# hdfs haadmin -getServiceState nn2

7. Stop all DFS-related services

[root@ant150 soft]# stop-dfs.sh

8. Format the HA state in ZooKeeper (creates the znode that ZKFC uses)

[root@ant150 soft]# hdfs zkfc -formatZK

9. Start DFS

[root@ant150 soft]# start-dfs.sh

10. Start YARN

[root@ant150 soft]# start-yarn.sh

// Check the ResourceManager states

[root@ant150 soft]# yarn rmadmin -getServiceState rm1

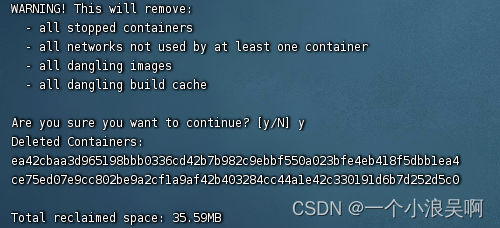

[root@ant150 soft]# yarn rmadmin -getServiceState rm2

Check the services with the script (showjps.sh). The configuration is complete when the following services appear:

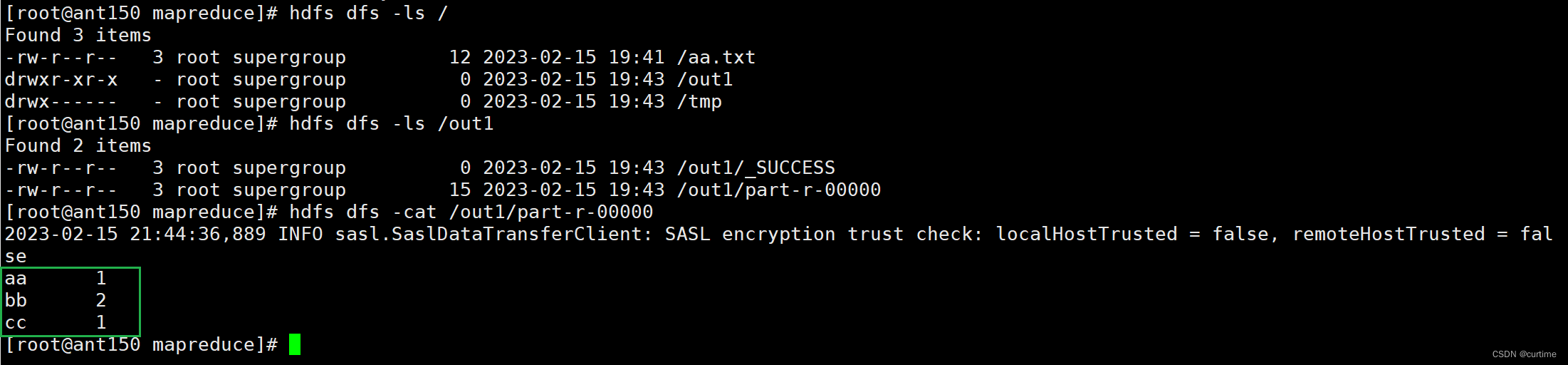
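With automatic failover working, exactly one NameNode and one ResourceManager should report `active`, and the other of each pair `standby`. The sketch below uses a hypothetical `check_ha` helper that takes the states printed by the getServiceState commands above.

```shell
#!/bin/bash
# Hypothetical helper: succeed when exactly one of the given HA states is "active".
check_ha() {
  local active=0 s
  for s in "$@"; do
    if [ "$s" = active ]; then
      active=$((active + 1))
    fi
  done
  echo "active=$active"
  [ "$active" -eq 1 ]
}
```

Usage:
`check_ha "$(hdfs haadmin -getServiceState nn1)" "$(hdfs haadmin -getServiceState nn2)"` and
`check_ha "$(yarn rmadmin -getServiceState rm1)" "$(yarn rmadmin -getServiceState rm2)"`.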

Test

[root@ant150 soft]# vim aa.txt

// Add the following content

aa bb bb cc

// Upload the file to the HDFS root directory

[root@ant150 soft]# hdfs dfs -put ./aa.txt /

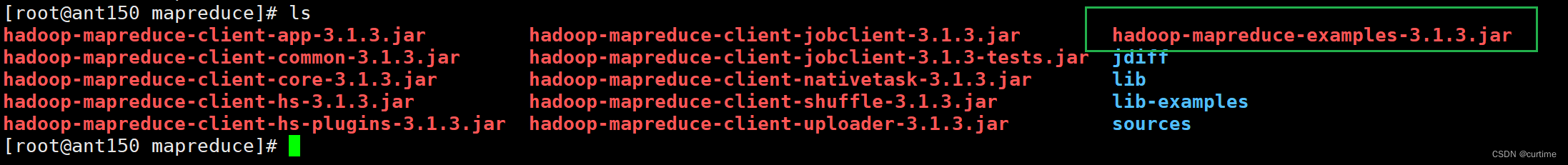

[root@ant150 soft]# cd /opt/soft/hadoop313/share/hadoop/mapreduce

// Run the wordcount example from the examples jar

[root@ant150 mapreduce]# hadoop jar ./hadoop-mapreduce-examples-3.1.3.jar wordcount /aa.txt /out1

// View the output

[root@ant150 mapreduce]# hdfs dfs -cat /out1/part-r-00000
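To confirm the job's result, the same count can be computed locally and compared. The sketch below assumes the example job splits lines on whitespace and writes one `word<TAB>count` line per word, sorted by word. `local_wordcount` is a hypothetical name.

```shell
#!/bin/bash
# Hypothetical helper: word counts for file $1 as "word<TAB>count", sorted by word.
local_wordcount() {
  # one word per line, drop empty lines, sort bytewise, count, then reorder columns
  tr -s '[:space:]' '\n' < "$1" | grep -v '^$' | LC_ALL=C sort | uniq -c |
    awk '{ print $2 "\t" $1 }'
}
```

For aa.txt this prints `aa 1`, `bb 2` and `cc 1` (tab-separated). To compare: `diff <(local_wordcount /opt/soft/aa.txt) <(hdfs dfs -cat /out1/part-r-00000) && echo match`. Rerunning the job requires a new output path or `hdfs dfs -rm -r /out1` first, because MapReduce refuses to write into an existing output directory.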