This article is based mainly on the analyses in 1, 2 and on the Linux source code 3.

A convenient website for browsing the Linux source code is bootlin 4.

Updated: 2022/02/11

Drivers | Linux | An Incomplete Summary of NVMe

- The origins of NVMe
- The NVMe driver from a system perspective
- NVMe Command
- PCI bus
- The NVMe driver from an architecture perspective
- Files that make up the NVMe driver
- How the NVMe driver works
- core.c
- nvme_core_init
- nvme_dev_fops
- nvme_dev_open
- nvme_dev_release
- nvme_dev_ioctl
- NVME_IOCTL_RESET
- NVME_IOCTL_SUBSYS_RESET
- pci.c
- nvme_init
- BUILD_BUG_ON
- pci_register_driver
- nvme_id_table
- nvme_probe
- nvme_pci_alloc_dev
- nvme_dev_map
- nvme_setup_prp_pools
- nvme_init_ctrl_finish
- DMA
- References

The origins of NVMe

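NVMe is inseparable from PCIe: an NVMe SSD is a PCIe endpoint. PCIe is a popular peripheral bus on the x86 platform. Thanks to its Plug and Play support, many peripherals communicate with the host over the PCI bus, and even many of the CPU's integrated peripherals, such as the APIC, are attached via the PCI bus.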

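NVMe SSDs use a new standard protocol, NVMe, over the PCIe interface. It was introduced by Intel, handed over to the NVM Express organization (nvmexpress) for promotion, and has since been adopted by most storage companies worldwide.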

The NVMe driver from a system perspective

NVMe Command

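The NVMe host (server) and the NVMe controller (SSD) exchange information through NVMe commands. The NVMe spec defines the NVMe command format, and each command occupies 64 bytes.

NVMe commands fall into two categories: Admin commands, used mainly for configuration, and I/O commands, used for data transfer.

The NVMe command is the basic unit of communication between the host and the SSD controller; application I/O requests must also be converted into NVMe commands.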
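For a concrete picture of those 64 bytes, here is a minimal userspace sketch of the common command layout. The struct name sketch_nvme_cmd is hypothetical; the field names loosely follow the kernel's struct nvme_common_command, with the data pointer shown as two PRP entries. Treat it as an illustration to check against the NVMe spec, not as the kernel definition.

```c
#include <stddef.h>
#include <stdint.h>

/* Simplified sketch of a 64-byte NVMe submission queue entry.
 * Not the kernel's definition; field names only loosely mirror it. */
struct sketch_nvme_cmd {
	uint8_t  opcode;      /* byte 0: command opcode */
	uint8_t  flags;       /* byte 1: fused operation, PRP vs. SGL */
	uint16_t command_id;  /* bytes 2-3: echoed back in the completion entry */
	uint32_t nsid;        /* bytes 4-7: namespace ID */
	uint32_t cdw2;        /* bytes 8-15: reserved / command specific */
	uint32_t cdw3;
	uint64_t metadata;    /* bytes 16-23: metadata pointer */
	uint64_t prp1;        /* bytes 24-31: PRP entry 1 */
	uint64_t prp2;        /* bytes 32-39: PRP entry 2 or PRP list pointer */
	uint32_t cdw10;       /* bytes 40-63: command-specific dwords */
	uint32_t cdw11;
	uint32_t cdw12;
	uint32_t cdw13;
	uint32_t cdw14;
	uint32_t cdw15;
};

/* Fail the build if the layout is not exactly 64 bytes. */
_Static_assert(sizeof(struct sketch_nvme_cmd) == 64,
	       "an NVMe command must be 64 bytes");
```

Because every field is naturally aligned, the compiler inserts no padding, which is why the static assertion holds.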

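As an analogy, think of NVMe commands as English, and of the host and the SSD controller as a Korean and a Japanese speaker who use English as their common language. Admin commands are like the grammar that sets up the structure of a sentence (subject, verb, object), and I/O commands are like the words that fill it in. A sentence needs both: grammar for its structure and words for its content.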

PCI bus

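- When the operating system boots, the BIOS enumerates the entire PCI bus and passes the discovered devices to the OS through the ACPI tables.
- When the OS loads, the PCI bus driver uses this information to read the PCI header configuration space of each PCI device and obtains an identifying value from the class code register.

For NVMe, the class code is the key criterion the PCI bus uses to choose which driver loads a device (an ID table can also match on vendor and device IDs).

The NVMe spec defines the class code 0x010802, and the PCIe header of the controller inside every NVMe SSD sets its class code to 0x010802.

The driver therefore has to declare class code 0x010802 by putting it into the id_table of the pci_driver nvme_driver.

Once nvme_driver is registered with the PCI bus, the bus knows that this driver is meant for devices with class code 0x010802.

nvme_driver has a probe function, nvme_probe(), which is the function that actually sets up the device.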
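The class code check can be sketched in userspace C. The assumption here is the standard PCI layout: the configuration dword at offset 0x08 holds the revision ID in bits 7:0 and the 24-bit class code in bits 31:8, which is how the PCI core derives the device's class. The helper names and the SKETCH_CLASS_NVME constant are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Base class 0x01 (mass storage), subclass 0x08 (NVM), prog-if 0x02 (NVMe). */
#define SKETCH_CLASS_NVME 0x010802u

/* Config dword at offset 0x08: revision ID in bits 7:0, class code in 31:8. */
static uint32_t class_from_config_dword(uint32_t dword08)
{
	return dword08 >> 8;
}

/* True when a 24-bit class code identifies an NVMe controller. */
static bool is_nvme_class(uint32_t class_code)
{
	return class_code == SKETCH_CLASS_NVME;
}
```

On a running Linux system, the class attribute under /sys/bus/pci/devices/<address>/ shows the same 24-bit value, for example 0x010802 for an NVMe controller.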

The NVMe driver from an architecture perspective

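Before studying the Linux NVMe driver, let's look at where the driver sits in the Linux architecture, as shown in the figure below:

The NVMe driver sits below the block layer and is responsible for interacting with NVMe devices.

To keep up with current trends, today's NVMe driver is quite capable and also supports NVMe over Fabrics devices, as shown in the figure below:

This article, however, focuses on NVMe over PCIe.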

Files that make up the NVMe driver

The latest code is in linux/drivers/nvme/ 5.

Its directory layout is as follows:

nvme ----

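When analyzing a driver, it is best to start with its Kconfig and Makefile to understand how its files are organized, and only then read the source code.

The Kconfig file:

- controls the configuration options that appear in make menuconfig;
- saves the user's choices from the configuration interface to the .config file, which the Makefile uses to decide which kernel components to build and how.

First, here is linux/drivers/nvme/host/Kconfig 6 (NVMe-oF content is omitted here and in later excerpts without further notice):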

```
# SPDX-License-Identifier: GPL-2.0-only
config NVME_CORE
	tristate
	select BLK_DEV_INTEGRITY_T10 if BLK_DEV_INTEGRITY
......
```

Next, here is linux/drivers/nvme/host/Makefile 7:

```makefile
# SPDX-License-Identifier: GPL-2.0

ccflags-y				+= -I$(src)

obj-$(CONFIG_NVME_CORE)			+= nvme-core.o
obj-$(CONFIG_BLK_DEV_NVME)		+= nvme.o
obj-$(CONFIG_NVME_FABRICS)		+= nvme-fabrics.o
obj-$(CONFIG_NVME_RDMA)			+= nvme-rdma.o
obj-$(CONFIG_NVME_FC)			+= nvme-fc.o
obj-$(CONFIG_NVME_TCP)			+= nvme-tcp.o
obj-$(CONFIG_NVME_APPLE)		+= nvme-apple.o
......
```

Judging from the Kconfig and Makefile, to understand NVMe over PCIe we mainly need to look at core.c and pci.c.

How the NVMe driver works

core.c

This file shows how the NVMe driver can operate NVMe devices.

nvme_core_init

In linux/drivers/nvme/host/core.c 8, the module entry point is module_init(nvme_core_init);, shown below:

```c
static int __init nvme_core_init(void)
{
	int result = -ENOMEM;

	......

	// 1. Register a character device region named "nvme"
	result = alloc_chrdev_region(&nvme_ctrl_base_chr_devt, 0,
			NVME_MINORS, "nvme");
	if (result < 0)
		goto destroy_delete_wq;

	// 2. Create the "nvme" class, owned by THIS_MODULE.
	//    On error, unregister the "nvme" character device region.
	nvme_class = class_create(THIS_MODULE, "nvme");
	if (IS_ERR(nvme_class)) {
		result = PTR_ERR(nvme_class);
		goto unregister_chrdev;
	}
	nvme_class->dev_uevent = nvme_class_uevent;

	nvme_subsys_class = class_create(THIS_MODULE, "nvme-subsystem");
	if (IS_ERR(nvme_subsys_class)) {
		result = PTR_ERR(nvme_subsys_class);
		goto destroy_class;
	}

	result = alloc_chrdev_region(&nvme_ns_chr_devt, 0, NVME_MINORS,
				     "nvme-generic");
	if (result < 0)
		goto destroy_subsys_class;

	nvme_ns_chr_class = class_create(THIS_MODULE, "nvme-generic");
	if (IS_ERR(nvme_ns_chr_class)) {
		result = PTR_ERR(nvme_ns_chr_class);
		goto unregister_generic_ns;
	}

	result = nvme_init_auth();
	if (result)
		goto destroy_ns_chr;
	return 0;

	......
}
```

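As shown above, nvme_core_init mainly does two kinds of work:

1. It calls alloc_chrdev_region 9 (shown below) to register character device regions: one named nvme, and another named nvme-generic.

```c
int alloc_chrdev_region(dev_t *dev, unsigned baseminor, unsigned count,
			const char *name)
{
	struct char_device_struct *cd;

	// A major of 0 asks for a free major number to be allocated dynamically
	cd = __register_chrdev_region(0, baseminor, count, name);
	if (IS_ERR(cd))
		return PTR_ERR(cd);
	*dev = MKDEV(cd->major, cd->baseminor);
	return 0;
}
```

2. It calls class_create 10 (shown below) to dynamically create the logical device classes (nvme, nvme-subsystem and nvme-generic), initialize some of their fields, and add them to the kernel. The created classes appear under /sys/class/.

```c
#define class_create(owner, name)			\
({							\
	static struct lock_class_key __key;		\
	__class_create(owner, name, &__key);		\
})
```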

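Registering a character device involves device numbers:

Every character or block device has a major number and a minor number. The major number identifies a particular driver, and the minor number identifies the individual devices handled by that driver. For example, with two NVMe SSDs attached, the controller character devices /dev/nvme0 and /dev/nvme1 share one dynamically allocated major number and get different minor numbers (0 and 1, taken from ctrl->instance in nvme_init_ctrl).

Inside the kernel, a device number (dev_t) is 32 bits wide: the high 12 bits hold the major number and the low 20 bits hold the minor number.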
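The 12/20 split can be reproduced with a few lines of userspace C. This sketch mirrors the kernel-internal MAJOR/MINOR/MKDEV macros (MINORBITS = 20); the sketch_* names are hypothetical, and note that the dev_t seen by userspace through stat() uses glibc's different makedev() encoding.

```c
#include <stdint.h>

/* Kernel-internal dev_t layout: 12-bit major above a 20-bit minor. */
#define SKETCH_MINORBITS 20
#define SKETCH_MINORMASK ((1u << SKETCH_MINORBITS) - 1)

static uint32_t sketch_mkdev(uint32_t major, uint32_t minor)
{
	return (major << SKETCH_MINORBITS) | (minor & SKETCH_MINORMASK);
}

static uint32_t sketch_major(uint32_t dev)
{
	return dev >> SKETCH_MINORBITS;
}

static uint32_t sketch_minor(uint32_t dev)
{
	return dev & SKETCH_MINORMASK;
}
```

For instance, nvme_init_ctrl builds each controller's number as MKDEV(MAJOR(nvme_ctrl_base_chr_devt), ctrl->instance), so splitting the result gives back the shared major and the per-controller instance.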

nvme_dev_fops

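After nvme_core_init has registered the character device region, the device can be operated through the open, ioctl and release interfaces in nvme_dev_fops.

The file operations structure of the nvme character device, nvme_dev_fops 8, is defined as follows: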

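```c
static const struct file_operations nvme_dev_fops = {
	.owner		= THIS_MODULE,
	// open() opens the device; device initialization can be done here.
	.open		= nvme_dev_open,
	// release() frees the resources acquired in open().
	.release	= nvme_dev_release,
	// nvme_dev_ioctl() provides a way to issue device-specific commands.
	// unlocked_ioctl handles ioctl() calls from native processes;
	// compat_ioctl handles calls from 32-bit processes on a 64-bit
	// kernel and is only used when CONFIG_COMPAT is enabled.
	// compat_ptr_ioctl converts the pointer argument and forwards
	// the call to unlocked_ioctl.
	.unlocked_ioctl	= nvme_dev_ioctl,
	.compat_ioctl	= compat_ptr_ioctl,
	// uring_cmd handles io_uring passthrough commands.
	.uring_cmd	= nvme_dev_uring_cmd,
};
```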

nvme_dev_open

nvme_dev_open 8 is defined as follows:

```c
static int nvme_dev_open(struct inode *inode, struct file *file)
{
	// Get the nvme_ctrl that embeds this inode's cdev
	struct nvme_ctrl *ctrl =
		container_of(inode->i_cdev, struct nvme_ctrl, cdev);

	switch (ctrl->state) {
	case NVME_CTRL_LIVE:
		break;
	default:
		// -EWOULDBLOCK: Operation would block
		return -EWOULDBLOCK;
	}

	nvme_get_ctrl(ctrl);
	if (!try_module_get(ctrl->ops->module)) {
		nvme_put_ctrl(ctrl);
		// -EINVAL: Invalid argument
		return -EINVAL;
	}

	file->private_data = ctrl;
	return 0;
}
```

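It uses container_of to find the nvme_ctrl that embeds inode->i_cdev, returns -EWOULDBLOCK unless the controller is in the NVME_CTRL_LIVE state, takes a reference on the controller and on the transport module (try_module_get), and finally stores the controller in file->private_data for later calls such as ioctl.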
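The container_of step is plain pointer arithmetic: subtract the member's offset from the member's address to get the enclosing structure. Here is a userspace sketch of the idea; the macro omits the type checking the kernel version adds, and fake_cdev/fake_ctrl are hypothetical stand-ins for struct cdev and struct nvme_ctrl.

```c
#include <stddef.h>

/* Step back from a member pointer to the structure that contains it. */
#define sketch_container_of(ptr, type, member) \
	((type *)((char *)(ptr) - offsetof(type, member)))

struct fake_cdev {
	int dummy;
};

struct fake_ctrl {
	int state;
	struct fake_cdev cdev;   /* embedded, like nvme_ctrl::cdev */
};

/* Equivalent of container_of(inode->i_cdev, struct nvme_ctrl, cdev). */
static struct fake_ctrl *ctrl_from_cdev(struct fake_cdev *cdev)
{
	return sketch_container_of(cdev, struct fake_ctrl, cdev);
}
```

The same trick is behind to_nvme_dev() in pci.c, which recovers a struct nvme_dev from its embedded struct nvme_ctrl.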

nvme_dev_release

nvme_dev_release is defined as follows:

```c
static int nvme_dev_release(struct inode *inode, struct file *file)
{
	struct nvme_ctrl *ctrl =
		container_of(inode->i_cdev, struct nvme_ctrl, cdev);

	module_put(ctrl->ops->module);
	nvme_put_ctrl(ctrl);
	return 0;
}
```

It releases the resources acquired in nvme_dev_open: the module reference and the controller reference.

nvme_dev_ioctl

nvme_dev_ioctl 11 is defined as follows:

```c
long nvme_dev_ioctl(struct file *file, unsigned int cmd,
		unsigned long arg)
{
	struct nvme_ctrl *ctrl = file->private_data;
	void __user *argp = (void __user *)arg;

	switch (cmd) {
	case NVME_IOCTL_ADMIN_CMD:
		return nvme_user_cmd(ctrl, NULL, argp, 0, file->f_mode);
	case NVME_IOCTL_ADMIN64_CMD:
		return nvme_user_cmd64(ctrl, NULL, argp, 0, file->f_mode);
	case NVME_IOCTL_IO_CMD:
		return nvme_dev_user_cmd(ctrl, argp, file->f_mode);
	case NVME_IOCTL_RESET:
		if (!capable(CAP_SYS_ADMIN))
			return -EACCES;
		dev_warn(ctrl->device, "resetting controller\n");
		return nvme_reset_ctrl_sync(ctrl);
	case NVME_IOCTL_SUBSYS_RESET:
		if (!capable(CAP_SYS_ADMIN))
			return -EACCES;
		return nvme_reset_subsystem(ctrl);
	case NVME_IOCTL_RESCAN:
		if (!capable(CAP_SYS_ADMIN))
			return -EACCES;
		nvme_queue_scan(ctrl);
		return 0;
	default:
		// Unknown ioctl command
		return -ENOTTY;
	}
}
```

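As shown above, nvme_dev_ioctl implements six commands:

| Command | Description |
|---|---|
| NVME_IOCTL_ADMIN_CMD | Admin command defined by the NVMe spec. |
| NVME_IOCTL_ADMIN64_CMD | Same as NVME_IOCTL_ADMIN_CMD, but with a 64-bit result field. |
| NVME_IOCTL_IO_CMD | I/O command defined by the NVMe spec. Not supported when the NVMe device has multiple namespaces. |
| NVME_IOCTL_RESET | Resets the controller through nvme_reset_ctrl_sync; requires CAP_SYS_ADMIN. |
| NVME_IOCTL_SUBSYS_RESET | Resets the NVM subsystem by writing the value 0x4E564D65 to the corresponding BAR register; requires CAP_SYS_ADMIN. |
| NVME_IOCTL_RESCAN | Rescans namespaces through nvme_queue_scan; requires CAP_SYS_ADMIN. |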
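From userspace, the NVME_IOCTL_ADMIN_CMD path is reached by filling a struct nvme_admin_cmd from the UAPI header linux/nvme_ioctl.h and calling ioctl() on the controller character device. This is a minimal sketch that builds an Identify Controller command (opcode 0x06, CNS = 1 in CDW10, 4096 bytes of returned data). The helper names and the "/dev/nvme0" path are assumptions, and issuing the command normally needs root.

```c
#include <fcntl.h>
#include <linux/nvme_ioctl.h>
#include <stdint.h>
#include <string.h>
#include <sys/ioctl.h>
#include <unistd.h>

/* Fill an Identify Controller admin command that reads into buf. */
static void build_identify_ctrl(struct nvme_admin_cmd *cmd, void *buf,
				uint32_t len)
{
	memset(cmd, 0, sizeof(*cmd));
	cmd->opcode = 0x06;                    /* Identify */
	cmd->addr = (uint64_t)(uintptr_t)buf;  /* user buffer for the data */
	cmd->data_len = len;                   /* Identify data is 4096 bytes */
	cmd->cdw10 = 1;                        /* CNS = 1: Identify Controller */
}

/* Send the command through the char device, e.g. "/dev/nvme0".
 * On that device the call lands in nvme_dev_ioctl(). */
int identify_ctrl(const char *path, void *buf, uint32_t len)
{
	struct nvme_admin_cmd cmd;
	int fd = open(path, O_RDONLY);

	if (fd < 0)
		return -1;
	build_identify_ctrl(&cmd, buf, len);
	int ret = ioctl(fd, NVME_IOCTL_ADMIN_CMD, &cmd);
	close(fd);
	return ret;
}
```

Calling identify_ctrl("/dev/nvme0", buf, 4096) with a 4096-byte buffer should fill it with the controller's Identify data when the call succeeds.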

NVME_IOCTL_RESET

First, the definition of nvme_reset_ctrl_sync:

```c
int nvme_reset_ctrl_sync(struct nvme_ctrl *ctrl)
{
	int ret;

	ret = nvme_reset_ctrl(ctrl);
	if (!ret) {
		flush_work(&ctrl->reset_work);
		if (ctrl->state != NVME_CTRL_LIVE)
			// ENETRESET: Network dropped connection because of reset
			ret = -ENETRESET;
	}

	return ret;
}
```

Next, nvme_reset_ctrl:

```c
int nvme_reset_ctrl(struct nvme_ctrl *ctrl)
{
	if (!nvme_change_ctrl_state(ctrl, NVME_CTRL_RESETTING))
		return -EBUSY;
	if (!queue_work(nvme_reset_wq, &ctrl->reset_work))
		// EBUSY: Device or resource busy
		return -EBUSY;
	return 0;
}
EXPORT_SYMBOL_GPL(nvme_reset_ctrl);
```

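From these definitions:

- nvme_reset_ctrl first tries to move the controller into the NVME_CTRL_RESETTING state. If that transition is not allowed (for example, because a reset is already in progress), or the reset work is already queued, it returns -EBUSY and no reset is performed.
- Otherwise it queues ctrl->reset_work on nvme_reset_wq; that work item performs the actual controller reset.
- nvme_reset_ctrl_sync then calls flush_work(&ctrl->reset_work) to wait for the reset work to finish, and returns -ENETRESET if the controller did not come back to the NVME_CTRL_LIVE state.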
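The control flow of nvme_reset_ctrl can be modelled with a tiny state machine. This is a hypothetical, heavily simplified sketch: only the transition into RESETTING is modelled (in recent kernels nvme_change_ctrl_state allows it from NEW and LIVE), and a boolean stands in for queue_work().

```c
#include <errno.h>
#include <stdbool.h>

/* Small subset of the controller states, for illustration only. */
enum sketch_state { S_NEW, S_LIVE, S_RESETTING, S_DELETING };

struct sketch_ctrl {
	enum sketch_state state;
	bool reset_work_queued;   /* stands in for the pending reset_work */
};

/* Only the move into RESETTING is modelled: allowed from NEW or LIVE. */
static bool sketch_change_state(struct sketch_ctrl *c, enum sketch_state next)
{
	if (next == S_RESETTING && (c->state == S_NEW || c->state == S_LIVE)) {
		c->state = next;
		return true;
	}
	return false;
}

/* Same shape as nvme_reset_ctrl(): change state first, then queue work. */
static int sketch_reset_ctrl(struct sketch_ctrl *c)
{
	if (!sketch_change_state(c, S_RESETTING))
		return -EBUSY;
	if (c->reset_work_queued)
		return -EBUSY;
	c->reset_work_queued = true;   /* stands in for queue_work() */
	return 0;
}
```

A second reset request while the first is still in RESETTING fails the state change and gets -EBUSY, which is exactly the "busy" case described above.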

Here is flush_work, together with its helper __flush_work:

```c
static bool __flush_work(struct work_struct *work, bool from_cancel)
{
	struct wq_barrier barr;

	if (WARN_ON(!wq_online))
		return false;

	if (WARN_ON(!work->func))
		return false;

	lock_map_acquire(&work->lockdep_map);
	lock_map_release(&work->lockdep_map);

	if (start_flush_work(work, &barr, from_cancel)) {
		wait_for_completion(&barr.done);
		destroy_work_on_stack(&barr.work);
		return true;
	} else {
		return false;
	}
}

/**
 * flush_work - wait for a work to finish executing the last queueing instance
 * @work: the work to flush
 *
 * Wait until @work has finished execution.  @work is guaranteed to be idle
 * on return if it hasn't been requeued since flush started.
 *
 * Return:
 * %true if flush_work() waited for the work to finish execution,
 * %false if it was already idle.
 */
bool flush_work(struct work_struct *work)
{
	return __flush_work(work, false);
}
EXPORT_SYMBOL_GPL(flush_work);
```

NVME_IOCTL_SUBSYS_RESET

First, the definition of nvme_reset_subsystem:

```c
static inline int nvme_reset_subsystem(struct nvme_ctrl *ctrl)
{
	int ret;

	if (!ctrl->subsystem)
		return -ENOTTY;
	if (!nvme_wait_reset(ctrl))
		// EBUSY: Device or resource busy
		return -EBUSY;

	ret = ctrl->ops->reg_write32(ctrl, NVME_REG_NSSR, 0x4E564D65);
	if (ret)
		return ret;

	return nvme_try_sched_reset(ctrl);
}
```

Next, the definition of nvme_wait_reset:

```c
/*
 * Waits for the controller state to be resetting, or returns false if it is
 * not possible to ever transition to that state.
 */
bool nvme_wait_reset(struct nvme_ctrl *ctrl)
{
	wait_event(ctrl->state_wq,
		   nvme_change_ctrl_state(ctrl, NVME_CTRL_RESETTING) ||
		   nvme_state_terminal(ctrl));
	return ctrl->state == NVME_CTRL_RESETTING;
}
EXPORT_SYMBOL_GPL(nvme_wait_reset);
```

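nvme_reset_subsystem therefore works as follows:

- If the controller does not belong to a subsystem (ctrl->subsystem is NULL), it returns -ENOTTY.
- nvme_wait_reset sleeps until the controller can be moved into the NVME_CTRL_RESETTING state, or until it reaches a terminal state. If the controller did not end up in NVME_CTRL_RESETTING, the subsystem reset cannot proceed and -EBUSY is returned.
- Once the controller is in NVME_CTRL_RESETTING, ctrl->ops->reg_write32 writes 0x4E564D65 to the NVME_REG_NSSR register, and nvme_try_sched_reset schedules the follow-up controller reset.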

Now let's see where reg_write32 is defined:

```c
struct nvme_ctrl_ops {
	const char *name;
	struct module *module;
	unsigned int flags;
#define NVME_F_FABRICS			(1 << 0)
#define NVME_F_METADATA_SUPPORTED	(1 << 1)
#define NVME_F_BLOCKING			(1 << 2)

	const struct attribute_group **dev_attr_groups;
	int (*reg_read32)(struct nvme_ctrl *ctrl, u32 off, u32 *val);
	int (*reg_write32)(struct nvme_ctrl *ctrl, u32 off, u32 val);
	int (*reg_read64)(struct nvme_ctrl *ctrl, u32 off, u64 *val);
	void (*free_ctrl)(struct nvme_ctrl *ctrl);
	void (*submit_async_event)(struct nvme_ctrl *ctrl);
	void (*delete_ctrl)(struct nvme_ctrl *ctrl);
	void (*stop_ctrl)(struct nvme_ctrl *ctrl);
	int (*get_address)(struct nvme_ctrl *ctrl, char *buf, int size);
	void (*print_device_info)(struct nvme_ctrl *ctrl);
	bool (*supports_pci_p2pdma)(struct nvme_ctrl *ctrl);
};
```

From this, it is easy to see that reg_write32 is one of the operations in nvme_ctrl_ops.

The PCIe transport provides its own instance of nvme_ctrl_ops, nvme_pci_ctrl_ops:

```c
static const struct nvme_ctrl_ops nvme_pci_ctrl_ops = {
	.name			= "pcie",
	.module			= THIS_MODULE,
	.flags			= NVME_F_METADATA_SUPPORTED,
	.dev_attr_groups	= nvme_pci_dev_attr_groups,
	.reg_read32		= nvme_pci_reg_read32,
	.reg_write32		= nvme_pci_reg_write32,
	.reg_read64		= nvme_pci_reg_read64,
	.free_ctrl		= nvme_pci_free_ctrl,
	.submit_async_event	= nvme_pci_submit_async_event,
	.get_address		= nvme_pci_get_address,
	.print_device_info	= nvme_pci_print_device_info,
	.supports_pci_p2pdma	= nvme_pci_supports_pci_p2pdma,
};
```

So for PCIe, reg_write32 is nvme_pci_reg_write32.

Here is the definition of nvme_pci_reg_write32:

```c
static int nvme_pci_reg_write32(struct nvme_ctrl *ctrl, u32 off, u32 val)
{
	writel(val, to_nvme_dev(ctrl)->bar + off);
	return 0;
}

static inline struct nvme_dev *to_nvme_dev(struct nvme_ctrl *ctrl)
{
	return container_of(ctrl, struct nvme_dev, ctrl);
}
```

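So nvme_pci_reg_write32 first calls to_nvme_dev, which uses container_of to get the nvme_dev that embeds the given nvme_ctrl, and then uses writel to write val to the memory-mapped register at dev->bar + off.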
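To see what that writel amounts to, here is a userspace sketch that writes the subsystem-reset value into a fake BAR buffer. Assumptions: NVME_REG_NSSR is offset 0x20 as in the NVMe register map, writel stores its value little-endian (as PCI MMIO does), and fake_writel/sketch_subsys_reset are hypothetical helpers. It also shows that 0x4E564D65 is simply the ASCII string "NVMe".

```c
#include <stdint.h>

#define SKETCH_REG_NSSR   0x20u        /* NVM Subsystem Reset register offset */
#define SKETCH_NSSR_MAGIC 0x4E564D65u  /* 'N' 'V' 'M' 'e' */

/* Stand-in for writel(): store a 32-bit value little-endian. */
static void fake_writel(uint32_t val, uint8_t *addr)
{
	addr[0] = val & 0xff;
	addr[1] = (val >> 8) & 0xff;
	addr[2] = (val >> 16) & 0xff;
	addr[3] = (val >> 24) & 0xff;
}

/* Same idea as reg_write32(ctrl, NVME_REG_NSSR, 0x4E564D65) on PCIe. */
static void sketch_subsys_reset(uint8_t *bar)
{
	fake_writel(SKETCH_NSSR_MAGIC, bar + SKETCH_REG_NSSR);
}
```

Because the store is little-endian, the bytes at offsets 0x20..0x23 read "eMVN" in memory order; read back as a 32-bit register they form 0x4E564D65.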

pci.c

This file shows how the NVMe driver is initialized.

nvme_init

In linux/drivers/nvme/host/pci.c 12, the module entry point is module_init(nvme_init);, shown below:

```c
static int __init nvme_init(void)
{
	BUILD_BUG_ON(sizeof(struct nvme_create_cq) != 64);
	BUILD_BUG_ON(sizeof(struct nvme_create_sq) != 64);
	BUILD_BUG_ON(sizeof(struct nvme_delete_queue) != 64);
	BUILD_BUG_ON(IRQ_AFFINITY_MAX_SETS < 2);
	BUILD_BUG_ON(DIV_ROUND_UP(nvme_pci_npages_prp(), NVME_CTRL_PAGE_SIZE) >
		     S8_MAX);

	// Register the NVMe PCI driver
	return pci_register_driver(&nvme_driver);
}
```

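Initialization takes only two steps:

- use BUILD_BUG_ON to check, at compile time, that some sizes and constants have the expected values;
- register the NVMe driver with pci_register_driver.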

BUILD_BUG_ON

Its definition 13:

```c
/**
 * BUILD_BUG_ON - break compile if a condition is true.
 * @condition: the condition which the compiler should know is false.
 *
 * If you have some code which relies on certain constants being equal, or
 * some other compile-time-evaluated condition, you should use BUILD_BUG_ON to
 * detect if someone changes it.
 */
#define BUILD_BUG_ON(condition) \
	BUILD_BUG_ON_MSG(condition, "BUILD_BUG_ON failed: " #condition)
```

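So during initialization, BUILD_BUG_ON checks that the sizes of structures such as nvme_create_cq, nvme_create_sq and nvme_delete_queue are exactly 64 bytes (the size of an NVMe command), along with a few other constants. These checks happen at compile time: if a condition is true, the build fails instead of the driver misbehaving at runtime.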
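In current kernels BUILD_BUG_ON_MSG is built on the compiletime_assert machinery; an older, simpler formulation relied on a negative array size. Here is a sketch of that classic trick next to its C11 _Static_assert equivalent. SKETCH_BUILD_BUG_ON and struct sketch_create_cq are hypothetical, and the array trick only works for constant conditions (a non-constant one would silently become a variable-length array).

```c
/* If cond is true, the array size becomes 1 - 2 = -1 and the build fails. */
#define SKETCH_BUILD_BUG_ON(cond) ((void)sizeof(char[1 - 2 * !!(cond)]))

/* Hypothetical 64-byte command structure. */
struct sketch_create_cq {
	unsigned char bytes[64];
};

static int sketch_init(void)
{
	/* Compiles, because the size really is 64. */
	SKETCH_BUILD_BUG_ON(sizeof(struct sketch_create_cq) != 64);
	/* SKETCH_BUILD_BUG_ON(sizeof(struct sketch_create_cq) != 32);
	 * would stop the build with a negative array size error. */

	/* The same check written with C11's static assertion. */
	_Static_assert(sizeof(struct sketch_create_cq) == 64,
		       "create_cq must be 64 bytes");
	return 0;
}
```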

pci_register_driver

Its definition 14:

```c
static inline int pci_register_driver(struct pci_driver *drv)
{ return 0; }

/* pci_register_driver() must be a macro so KBUILD_MODNAME can be expanded */
#define pci_register_driver(driver)		\
	__pci_register_driver(driver, THIS_MODULE, KBUILD_MODNAME)
```

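The static inline version that simply returns 0 is the stub used when the kernel is built without PCI support; otherwise the macro expands to __pci_register_driver. During initialization, pci_register_driver registers nvme_driver with the PCI bus.

How, then, does the PCI bus match the NVMe driver to the corresponding NVMe device?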

nvme_id_table

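At boot, the BIOS enumerates the whole PCI bus and passes the discovered devices to the operating system through the ACPI tables.

When the OS loads, the PCI bus driver uses this information to read the PCIe header configuration space of each PCI device and obtains an identifying value from the class code register.

For NVMe, the class code is the key criterion the PCI bus uses to choose which driver loads a device.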

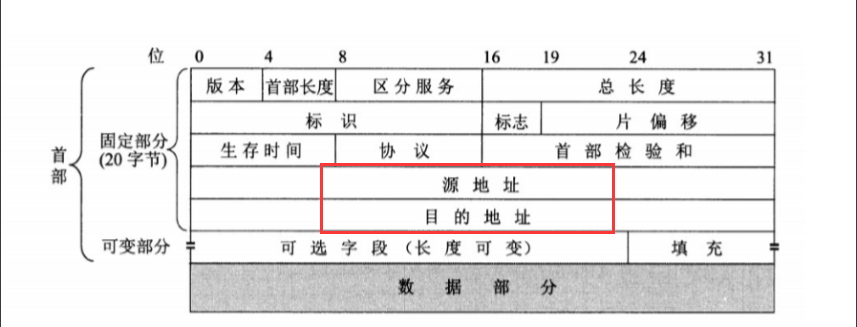

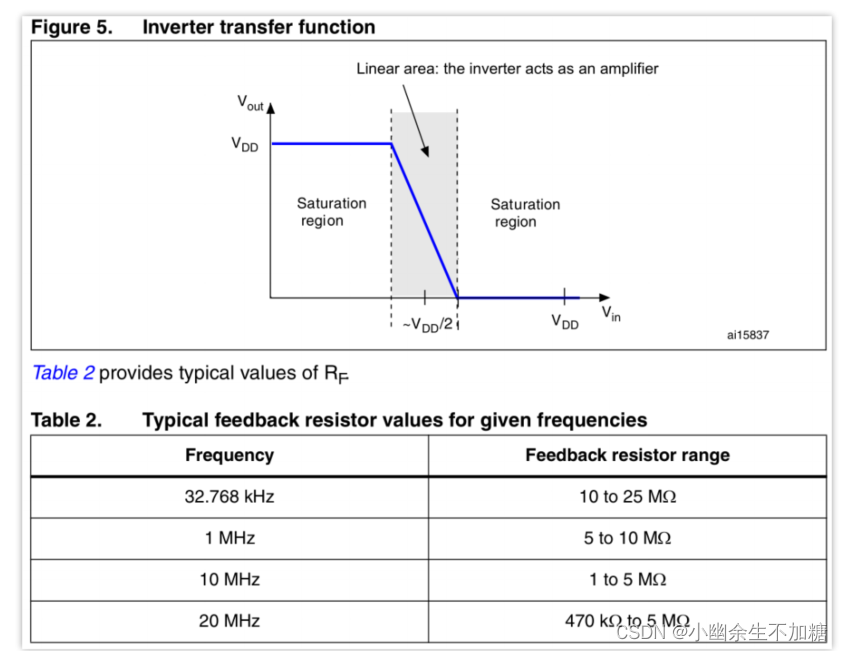

The NVMe spec defines the class code of NVMe devices as 0x010802, as shown in the figure.

The nvme driver then puts this class code into nvme_id_table 12, which nvme_driver references:

```c
static struct pci_driver nvme_driver = {
	.name		= "nvme",
	.id_table	= nvme_id_table,
	.probe		= nvme_probe,
	.remove		= nvme_remove,
	.shutdown	= nvme_shutdown,
	.driver		= {
		.probe_type	= PROBE_PREFER_ASYNCHRONOUS,
#ifdef CONFIG_PM_SLEEP
		.pm		= &nvme_dev_pm_ops,
#endif
	},
	.sriov_configure = pci_sriov_configure_simple,
	.err_handler	= &nvme_err_handler,
};
```

nvme_id_table contains the following entries:

```c
static const struct pci_device_id nvme_id_table[] = {
	{ PCI_VDEVICE(INTEL, 0x0953),	/* Intel 750/P3500/P3600/P3700 */
		.driver_data = NVME_QUIRK_STRIPE_SIZE |
				NVME_QUIRK_DEALLOCATE_ZEROES, },
	......
	{ PCI_DEVICE(PCI_VENDOR_ID_APPLE, 0x2005),
		.driver_data = NVME_QUIRK_SINGLE_VECTOR |
				NVME_QUIRK_128_BYTES_SQES |
				NVME_QUIRK_SHARED_TAGS |
				NVME_QUIRK_SKIP_CID_GEN |
				NVME_QUIRK_IDENTIFY_CNS },
	{ PCI_DEVICE_CLASS(PCI_CLASS_STORAGE_EXPRESS, 0xffffff) },
	{ 0, }
};
MODULE_DEVICE_TABLE(pci, nvme_id_table);
```

Now look at the PCI_DEVICE_CLASS macro used in the last entry:

```c
/**
 * PCI_DEVICE_CLASS - macro used to describe a specific PCI device class
 * @dev_class: the class, subclass, prog-if triple for this device
 * @dev_class_mask: the class mask for this device
 *
 * This macro is used to create a struct pci_device_id that matches a
 * specific PCI class.  The vendor, device, subvendor, and subdevice
 * fields will be set to PCI_ANY_ID.
 */
#define PCI_DEVICE_CLASS(dev_class,dev_class_mask) \
	.class = (dev_class), .class_mask = (dev_class_mask), \
	.vendor = PCI_ANY_ID, .device = PCI_ANY_ID, \
	.subvendor = PCI_ANY_ID, .subdevice = PCI_ANY_ID
```

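It is a macro that describes PCI devices of a specific class.

In PCI_DEVICE_CLASS(PCI_CLASS_STORAGE_EXPRESS, 0xffffff), the value of PCI_CLASS_STORAGE_EXPRESS follows the class code 0x010802 defined by the NVMe spec, as seen in linux/include/linux/pci_ids.h 15:

```c
#define PCI_CLASS_STORAGE_EXPRESS	0x010802
```

Therefore, once pci_register_driver has registered nvme_driver with the PCI bus, the bus knows that this driver is for NVMe devices (class code 0x010802). The mask 0xffffff means that all 24 bits of the class code must match.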
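The matching rule can be sketched as follows. It is modelled on the comparison in the PCI core's pci_match_one_device() (vendor/device wildcards plus a masked class comparison); the subvendor/subdevice checks are left out, and all names here are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

#define SKETCH_ANY_ID (~0u)   /* stands in for PCI_ANY_ID */

struct sketch_id {
	uint32_t vendor, device, class_code, class_mask;
};

struct sketch_dev {
	uint32_t vendor, device, class_code;
};

/* Vendor and device must match unless wildcarded, and the class bits
 * selected by class_mask must be identical. */
static bool sketch_match(const struct sketch_id *id, const struct sketch_dev *dev)
{
	if (id->vendor != SKETCH_ANY_ID && id->vendor != dev->vendor)
		return false;
	if (id->device != SKETCH_ANY_ID && id->device != dev->device)
		return false;
	return ((id->class_code ^ dev->class_code) & id->class_mask) == 0;
}
```

An entry built like PCI_DEVICE_CLASS(0x010802, 0xffffff) wildcards vendor and device and matches any device whose full class code is 0x010802. The vendor-specific entries above instead use a class mask of 0, so only the IDs matter.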

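At this point we have only established the correspondence between the driver and NVMe devices on the PCI bus.

After nvme_init returns, the nvme driver does nothing until the PCI bus enumerates an NVMe device. Then nvme_probe() is called and the real work begins.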

nvme_probe

Recall that the nvme_driver structure shown earlier sets .probe = nvme_probe, so nvme_probe is the callback the PCI core invokes for each matching device.

So what does nvme_probe do? See below:

```c
static int nvme_probe(struct pci_dev *pdev, const struct pci_device_id *id)
{
	struct nvme_dev *dev;
	int result = -ENOMEM;

	// 1. Allocate and initialize struct nvme_dev (including its nvme_ctrl)
	dev = nvme_pci_alloc_dev(pdev, id);
	if (IS_ERR(dev))
		return PTR_ERR(dev);

	// 2. Map the PCI BAR to obtain its virtual address
	result = nvme_dev_map(dev);
	if (result)
		goto out_uninit_ctrl;

	// 3. Set up the PRP memory pools needed for DMA
	result = nvme_setup_prp_pools(dev);
	if (result)
		goto out_dev_unmap;

	// 4. Allocate the mempool for per-request I/O descriptors (iod)
	result = nvme_pci_alloc_iod_mempool(dev);
	if (result)
		goto out_release_prp_pools;

	// 5. Log the PCI function
	dev_info(dev->ctrl.device, "pci function %s\n", dev_name(&pdev->dev));

	// 6. Enable the PCI device (memory decoding, bus mastering)
	//    and read the controller capabilities
	result = nvme_pci_enable(dev);
	if (result)
		goto out_release_iod_mempool;

	// 7. Allocate the blk-mq tag set for the admin queue
	result = nvme_alloc_admin_tag_set(&dev->ctrl, &dev->admin_tagset,
				&nvme_mq_admin_ops, sizeof(struct nvme_iod));
	if (result)
		goto out_disable;

	/*
	 * Mark the controller as connecting before sending admin commands to
	 * allow the timeout handler to do the right thing.
	 */
	// 8. Move the controller to the CONNECTING state
	if (!nvme_change_ctrl_state(&dev->ctrl, NVME_CTRL_CONNECTING)) {
		dev_warn(dev->ctrl.device,
			"failed to mark controller CONNECTING\n");
		result = -EBUSY;
		goto out_disable;
	}

	// 9. Finish initializing the NVMe controller structure
	result = nvme_init_ctrl_finish(&dev->ctrl, false);
	if (result)
		goto out_disable;

	// 10. Allocate the Doorbell Buffer Config (shadow doorbell) memory, if supported
	nvme_dbbuf_dma_alloc(dev);

	// 11. Set up the Host Memory Buffer, if the controller requests one
	result = nvme_setup_host_mem(dev);
	if (result < 0)
		goto out_disable;

	// 12. Create the I/O queues
	result = nvme_setup_io_queues(dev);
	if (result)
		goto out_disable;

	// 13. With I/O queues online, allocate the I/O tag set and set up dbbuf
	if (dev->online_queues > 1) {
		nvme_alloc_io_tag_set(&dev->ctrl, &dev->tagset, &nvme_mq_ops,
				nvme_pci_nr_maps(dev), sizeof(struct nvme_iod));
		nvme_dbbuf_set(dev);
	}

	// 14. Warn if no I/O queues were created
	if (!dev->ctrl.tagset)
		dev_warn(dev->ctrl.device, "IO queues not created\n");

	// 15. Move the controller to the LIVE state
	if (!nvme_change_ctrl_state(&dev->ctrl, NVME_CTRL_LIVE)) {
		dev_warn(dev->ctrl.device,
			"failed to mark controller live state\n");
		result = -ENODEV;
		goto out_disable;
	}

	// 16. Store the driver's private data pointer on the PCI device,
	//     start the controller, drop the probe reference and
	//     wait for the namespace scan to complete
	pci_set_drvdata(pdev, dev);

	nvme_start_ctrl(&dev->ctrl);
	nvme_put_ctrl(&dev->ctrl);
	flush_work(&dev->ctrl.scan_work);
	return 0;

out_disable:
	nvme_change_ctrl_state(&dev->ctrl, NVME_CTRL_DELETING);
	nvme_dev_disable(dev, true);
	nvme_free_host_mem(dev);
	nvme_dev_remove_admin(dev);
	nvme_dbbuf_dma_free(dev);
	nvme_free_queues(dev, 0);
out_release_iod_mempool:
	mempool_destroy(dev->iod_mempool);
out_release_prp_pools:
	nvme_release_prp_pools(dev);
out_dev_unmap:
	nvme_dev_unmap(dev);
out_uninit_ctrl:
	nvme_uninit_ctrl(&dev->ctrl);
	return result;
}
```

Below, some of the functions called during nvme_probe are analyzed further.

nvme_pci_alloc_dev

Its definition:

```c
static struct nvme_dev *nvme_pci_alloc_dev(struct pci_dev *pdev,
		const struct pci_device_id *id)
{
	unsigned long quirks = id->driver_data;
	// dev_to_node() returns the NUMA node of this pci_dev.
	// If none is set, the device is assigned first_memory_node
	// (the first NUMA node) below.
	int node = dev_to_node(&pdev->dev);
	struct nvme_dev *dev;
	int ret = -ENOMEM;

	if (node == NUMA_NO_NODE)
		set_dev_node(&pdev->dev, first_memory_node);

	// Allocate struct nvme_dev on that NUMA node
	dev = kzalloc_node(sizeof(*dev), GFP_KERNEL, node);
	if (!dev)
		return ERR_PTR(-ENOMEM);

	// Initialize the reset work item and the shutdown mutex
	INIT_WORK(&dev->ctrl.reset_work, nvme_reset_work);
	mutex_init(&dev->shutdown_lock);

	// Size and allocate the queue array (I/O queues plus the admin queue)
	dev->nr_write_queues = write_queues;
	dev->nr_poll_queues = poll_queues;
	dev->nr_allocated_queues = nvme_max_io_queues(dev) + 1;
	dev->queues = kcalloc_node(dev->nr_allocated_queues,
			sizeof(struct nvme_queue), GFP_KERNEL, node);
	if (!dev->queues)
		goto out_free_dev;

	// Take a reference on the PCI device
	dev->dev = get_device(&pdev->dev);

	quirks |= check_vendor_combination_bug(pdev);
	if (!noacpi && acpi_storage_d3(&pdev->dev)) {
		/*
		 * Some systems use a bios work around to ask for D3 on
		 * platforms that support kernel managed suspend.
		 */
		dev_info(&pdev->dev,
			 "platform quirk: setting simple suspend\n");
		quirks |= NVME_QUIRK_SIMPLE_SUSPEND;
	}

	// Initialize the NVMe controller structure
	ret = nvme_init_ctrl(&dev->ctrl, &pdev->dev, &nvme_pci_ctrl_ops,
			     quirks);
	if (ret)
		goto out_put_device;

	dma_set_min_align_mask(&pdev->dev, NVME_CTRL_PAGE_SIZE - 1);
	dma_set_max_seg_size(&pdev->dev, 0xffffffff);

	/*
	 * Limit the max command size to prevent iod->sg allocations going
	 * over a single page.
	 */
	dev->ctrl.max_hw_sectors = min_t(u32,
		NVME_MAX_KB_SZ << 1, dma_max_mapping_size(&pdev->dev) >> 9);
	dev->ctrl.max_segments = NVME_MAX_SEGS;

	/*
	 * There is no support for SGLs for metadata (yet), so we are limited to
	 * a single integrity segment for the separate metadata pointer.
	 */
	dev->ctrl.max_integrity_segments = 1;
	return dev;

out_put_device:
	put_device(dev->dev);
	kfree(dev->queues);
out_free_dev:
	kfree(dev);
	return ERR_PTR(ret);
}
```
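The max_hw_sectors line converts two limits into 512-byte sectors and keeps the smaller one. Here is a sketch of that arithmetic. Assumptions: max_kb stands for NVME_MAX_KB_SZ (its value varies between kernel versions, so it is a parameter here), dma_max stands for the byte count returned by dma_max_mapping_size(), and the function name is hypothetical.

```c
#include <stdint.h>

/* Both limits expressed in 512-byte sectors; the smaller one wins. */
static uint32_t sketch_max_hw_sectors(uint32_t max_kb, uint64_t dma_max)
{
	uint64_t from_kb = (uint64_t)max_kb << 1;  /* 1 KiB = 2 sectors */
	uint64_t from_dma = dma_max >> 9;          /* bytes -> sectors */

	return (uint32_t)(from_kb < from_dma ? from_kb : from_dma);
}
```

With a 4096 KiB driver limit and a 1 MiB DMA mapping limit, for example, the result is 2048 sectors; when the DMA layer imposes no practical limit, the driver's own limit (8192 sectors here) applies.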

Next, the definition of nvme_init_ctrl:

```c
/*
 * Initialize a NVMe controller structures.  This needs to be called during
 * earliest initialization so that we have the initialized structured around
 * during probing.
 */
int nvme_init_ctrl(struct nvme_ctrl *ctrl, struct device *dev,
		const struct nvme_ctrl_ops *ops, unsigned long quirks)
{
	int ret;

	ctrl->state = NVME_CTRL_NEW;
	clear_bit(NVME_CTRL_FAILFAST_EXPIRED, &ctrl->flags);
	spin_lock_init(&ctrl->lock);
	mutex_init(&ctrl->scan_lock);
	INIT_LIST_HEAD(&ctrl->namespaces);
	xa_init(&ctrl->cels);
	init_rwsem(&ctrl->namespaces_rwsem);
	ctrl->dev = dev;
	ctrl->ops = ops;
	ctrl->quirks = quirks;
	ctrl->numa_node = NUMA_NO_NODE;
	INIT_WORK(&ctrl->scan_work, nvme_scan_work);
	INIT_WORK(&ctrl->async_event_work, nvme_async_event_work);
	INIT_WORK(&ctrl->fw_act_work, nvme_fw_act_work);
	INIT_WORK(&ctrl->delete_work, nvme_delete_ctrl_work);
	init_waitqueue_head(&ctrl->state_wq);

	INIT_DELAYED_WORK(&ctrl->ka_work, nvme_keep_alive_work);
	INIT_DELAYED_WORK(&ctrl->failfast_work, nvme_failfast_work);
	memset(&ctrl->ka_cmd, 0, sizeof(ctrl->ka_cmd));
	ctrl->ka_cmd.common.opcode = nvme_admin_keep_alive;

	BUILD_BUG_ON(NVME_DSM_MAX_RANGES * sizeof(struct nvme_dsm_range) >
			PAGE_SIZE);
	ctrl->discard_page = alloc_page(GFP_KERNEL);
	if (!ctrl->discard_page) {
		ret = -ENOMEM;
		goto out;
	}

	ret = ida_alloc(&nvme_instance_ida, GFP_KERNEL);
	if (ret < 0)
		goto out;
	ctrl->instance = ret;

	device_initialize(&ctrl->ctrl_device);
	ctrl->device = &ctrl->ctrl_device;
	ctrl->device->devt = MKDEV(MAJOR(nvme_ctrl_base_chr_devt),
			ctrl->instance);
	ctrl->device->class = nvme_class;
	ctrl->device->parent = ctrl->dev;
	if (ops->dev_attr_groups)
		ctrl->device->groups = ops->dev_attr_groups;
	else
		ctrl->device->groups = nvme_dev_attr_groups;
	ctrl->device->release = nvme_free_ctrl;
	dev_set_drvdata(ctrl->device, ctrl);
	ret = dev_set_name(ctrl->device, "nvme%d", ctrl->instance);
	if (ret)
		goto out_release_instance;

	nvme_get_ctrl(ctrl);
	cdev_init(&ctrl->cdev, &nvme_dev_fops);
	ctrl->cdev.owner = ops->module;
	ret = cdev_device_add(&ctrl->cdev, ctrl->device);
	if (ret)
		goto out_free_name;

	/*
	 * Initialize latency tolerance controls.  The sysfs files won't
	 * be visible to userspace unless the device actually supports APST.
	 */
	ctrl->device->power.set_latency_tolerance = nvme_set_latency_tolerance;
	dev_pm_qos_update_user_latency_tolerance(ctrl->device,
		min(default_ps_max_latency_us, (unsigned long)S32_MAX));

	nvme_fault_inject_init(&ctrl->fault_inject, dev_name(ctrl->device));
	nvme_mpath_init_ctrl(ctrl);
	ret = nvme_auth_init_ctrl(ctrl);
	if (ret)
		goto out_free_cdev;

	return 0;
out_free_cdev:
	cdev_device_del(&ctrl->cdev, ctrl->device);
out_free_name:
	nvme_put_ctrl(ctrl);
	kfree_const(ctrl->device->kobj.name);
out_release_instance:
	ida_free(&nvme_instance_ida, ctrl->instance);
out:
	if (ctrl->discard_page)
		__free_page(ctrl->discard_page);
	return ret;
}
EXPORT_SYMBOL_GPL(nvme_init_ctrl);
```

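As the code shows, nvme_init_ctrl initializes the fields, locks and work items of the nvme_ctrl structure, allocates a unique instance number with ida_alloc, names the controller device nvmeX (X being that instance number) with dev_set_name, and registers the character device with cdev_init/cdev_device_add using nvme_dev_fops. This is what makes /dev/nvmeX appear.

Here is dev_set_name: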

```c
/**
 * dev_set_name - set a device name
 * @dev: device
 * @fmt: format string for the device's name
 */
int dev_set_name(struct device *dev, const char *fmt, ...)
{
	va_list vargs;
	int err;

	va_start(vargs, fmt);
	err = kobject_set_name_vargs(&dev->kobj, fmt, vargs);
	va_end(vargs);
	return err;
}
EXPORT_SYMBOL_GPL(dev_set_name);
```

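The X in nvmeX is not produced by kobject_set_name_vargs itself: it is ctrl->instance, the unique index allocated earlier by ida_alloc(&nvme_instance_ida, ...) and passed through the "nvme%d" format string. kobject_set_name_vargs only formats the string and stores it as the kobject's name.

Here is the implementation of kobject_set_name_vargs: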

```c
/**
 * kobject_set_name_vargs() - Set the name of a kobject.
 * @kobj: struct kobject to set the name of
 * @fmt: format string used to build the name
 * @vargs: vargs to format the string.
 */
int kobject_set_name_vargs(struct kobject *kobj, const char *fmt,
				  va_list vargs)
{
	const char *s;

	if (kobj->name && !fmt)
		return 0;

	s = kvasprintf_const(GFP_KERNEL, fmt, vargs);
	if (!s)
		return -ENOMEM;

	/*
	 * ewww... some of these buggers have '/' in the name ... If
	 * that's the case, we need to make sure we have an actual
	 * allocated copy to modify, since kvasprintf_const may have
	 * returned something from .rodata.
	 */
	if (strchr(s, '/')) {
		char *t;

		t = kstrdup(s, GFP_KERNEL);
		kfree_const(s);
		if (!t)
			return -ENOMEM;
		strreplace(t, '/', '!');
		s = t;
	}
	kfree_const(kobj->name);
	kobj->name = s;

	return 0;
}
```
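The two naming steps can be mimicked in userspace. In this sketch, the index is supplied by the caller, as ida_alloc does in nvme_init_ctrl. snprintf plays the role of kvasprintf_const, and every '/' is replaced with '!', as kobject_set_name_vargs does. Both function names are hypothetical.

```c
#include <stdio.h>
#include <string.h>

/* Format a controller name from an already-allocated instance number. */
static void sketch_set_name(char *out, size_t len, int instance)
{
	snprintf(out, len, "nvme%d", instance);
}

/* Replace every '/' with '!', like strreplace(t, '/', '!'). */
static void sketch_fix_slashes(char *s)
{
	for (char *p = strchr(s, '/'); p != NULL; p = strchr(p + 1, '/'))
		*p = '!';
}
```

sketch_set_name(buf, sizeof buf, 3) yields "nvme3". The slash replacement exists because '/' cannot appear in a sysfs directory name.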

nvme_dev_map

Its definition:

```c
static int nvme_dev_map(struct nvme_dev *dev)
{
	struct pci_dev *pdev = to_pci_dev(dev->dev);

	if (pci_request_mem_regions(pdev, "nvme"))
		return -ENODEV;

	if (nvme_remap_bar(dev, NVME_REG_DBS + 4096))
		goto release;

	return 0;
release:
	pci_release_mem_regions(pdev);
	return -ENODEV;
}
```

Next, pci_request_mem_regions 14:

```c
static inline int
pci_request_mem_regions(struct pci_dev *pdev, const char *name)
{
	return pci_request_selected_regions(pdev,
			    pci_select_bars(pdev, IORESOURCE_MEM), name);
}

/**
 * pci_select_bars - Make BAR mask from the type of resource
 * @dev: the PCI device for which BAR mask is made
 * @flags: resource type mask to be selected
 *
 * This helper routine makes bar mask from the type of resource.
 */
int pci_select_bars(struct pci_dev *dev, unsigned long flags)
{
	int i, bars = 0;
	for (i = 0; i < PCI_NUM_RESOURCES; i++)
		if (pci_resource_flags(dev, i) & flags)
			bars |= (1 << i);
	return bars;
}
EXPORT_SYMBOL(pci_select_bars);
```

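Putting this together, nvme_dev_map works in three steps:

1. Call pci_select_bars, which returns a mask. The configuration header of a PCI device has six 32-bit BAR registers (see the figure), and each bit of the mask indicates whether the corresponding resource is of the requested type (here IORESOURCE_MEM).
2. Call pci_request_selected_regions, passing the mask returned by pci_select_bars, to reserve those BARs so that nobody else can claim them.
3. Call nvme_remap_bar. Linux cannot access physical addresses directly; they must be mapped into virtual address space, and that is what nvme_remap_bar does. After mapping, accessing dev->bar operates directly on the registers of the NVMe device. Note that the code does not use the return value of pci_select_bars to decide which BAR to map; it always maps BAR0, because the NVMe specification requires the controller's memory-mapped registers to live at BAR0. The initial mapping size, NVME_REG_DBS + 4096, covers the controller registers plus the first 4 KiB of doorbell registers.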
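Step 1 can be illustrated with a small userspace version of the pci_select_bars loop. The flag values mirror IORESOURCE_IO (0x100) and IORESOURCE_MEM (0x200) from include/linux/ioport.h. The per-BAR flag array and the sketch_* names are hypothetical stand-ins for pci_resource_flags(), and only the six standard BARs are modelled.

```c
#include <stdint.h>

#define SKETCH_NUM_BARS 6
#define SKETCH_RES_IO   0x100u   /* mirrors IORESOURCE_IO */
#define SKETCH_RES_MEM  0x200u   /* mirrors IORESOURCE_MEM */

/* Bit i is set when BAR i has any of the requested resource flags. */
static int sketch_select_bars(const uint32_t flags_per_bar[SKETCH_NUM_BARS],
			      uint32_t want)
{
	int bars = 0;

	for (int i = 0; i < SKETCH_NUM_BARS; i++)
		if (flags_per_bar[i] & want)
			bars |= 1 << i;
	return bars;
}
```

For a typical NVMe controller with a 64-bit memory BAR0, BAR1 only holds the upper address bits and has no resource of its own, so the memory mask usually comes out as 0x1.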

nvme_setup_prp_pools

First, its definition:

```c
static int nvme_setup_prp_pools(struct nvme_dev *dev)
{
	dev->prp_page_pool = dma_pool_create("prp list page", dev->dev,
						NVME_CTRL_PAGE_SIZE,
						NVME_CTRL_PAGE_SIZE, 0);
	if (!dev->prp_page_pool)
		return -ENOMEM;

	/* Optimisation for I/Os between 4k and 128k */
	dev->prp_small_pool = dma_pool_create("prp list 256", dev->dev,
						256, 256, 0);
	if (!dev->prp_small_pool) {
		dma_pool_destroy(dev->prp_page_pool);
		return -ENOMEM;
	}
	return 0;
}
```

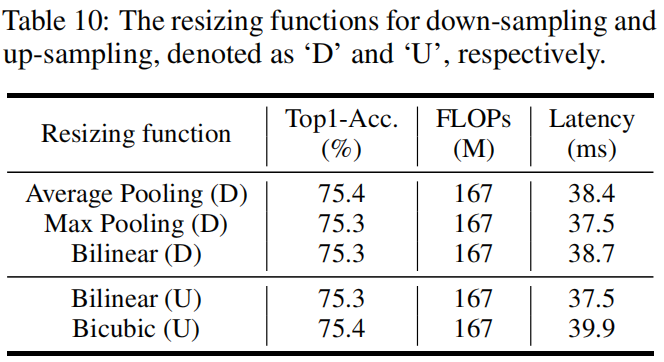

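As the code shows, nvme_setup_prp_pools mainly creates two DMA pools:

- prp_page_pool hands out blocks of NVME_CTRL_PAGE_SIZE (4 KiB in the Linux driver) and holds full-page PRP lists for larger I/Os;
- prp_small_pool hands out 256-byte blocks. A 256-byte block holds 32 eight-byte PRP entries, which is enough for I/Os between 4 KiB and 128 KiB (the optimisation named in the code comment), so small I/Os do not need a whole page for their PRP list.

Other DMA functions, such as dma_pool_alloc, can later obtain memory from these pools.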
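Which pool a request uses follows from the PRP rules. This sketch is a simplified reading of the logic in nvme_pci_setup_prps() from kernels of this era. PRP1 covers the rest of the first page, and PRP2 can point directly at a second page. Only longer transfers need a PRP list, which comes from the 256-byte pool when it needs at most 32 entries. The enum and function names are hypothetical, and 4 KiB pages are assumed.

```c
#include <stdint.h>

#define SKETCH_PAGE 4096u   /* NVME_CTRL_PAGE_SIZE in the Linux driver */

enum prp_choice { PRP1_ONLY, PRP1_PRP2, SMALL_POOL_LIST, PAGE_POOL_LIST };

/* offset: position of the data within its first 4 KiB page. */
static enum prp_choice sketch_prp_choice(uint32_t length, uint32_t offset)
{
	uint32_t first = SKETCH_PAGE - offset;   /* bytes covered by PRP1 */

	if (length <= first)
		return PRP1_ONLY;
	length -= first;
	if (length <= SKETCH_PAGE)
		return PRP1_PRP2;                /* PRP2 points at the 2nd page */

	/* Otherwise PRP2 points at a PRP list with one entry per page. */
	uint32_t nprps = (length + SKETCH_PAGE - 1) / SKETCH_PAGE;
	return nprps <= 256 / 8 ? SMALL_POOL_LIST : PAGE_POOL_LIST;
}
```

A page-aligned 128 KiB read needs 31 list entries after PRP1 and is served from the 256-byte pool; a 256 KiB read needs 63 and falls back to a full page.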

nvme_init_ctrl_finish

DMA

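PCIe has a Bus Master Enable bit. Once it is set to 1, the PCIe device can issue DMA memory read and DMA memory write requests to the host.

When the host driver needs to transfer data to or from a PCIe device, it only has to tell the device the address where the data is stored.

An NVMe command occupies 64 bytes, and the device's PCIe BAR space is mapped into the virtual address space. This includes the Doorbell registers used to tell the NVMe SSD controller to fetch commands.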
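The doorbell registers sit at fixed offsets after NVME_REG_DBS (0x1000). This sketch shows the address formula from the NVMe spec, where dstrd is the Doorbell Stride field of the CAP register. The function names are hypothetical.

```c
#include <stdint.h>

#define SKETCH_REG_DBS 0x1000u   /* doorbells start here (NVME_REG_DBS) */

/* Submission queue y tail doorbell: 0x1000 + (2y) * (4 << CAP.DSTRD). */
static uint32_t sq_tail_db(uint32_t qid, uint32_t dstrd)
{
	return SKETCH_REG_DBS + (2 * qid) * (4u << dstrd);
}

/* Completion queue y head doorbell: 0x1000 + (2y + 1) * (4 << CAP.DSTRD). */
static uint32_t cq_head_db(uint32_t qid, uint32_t dstrd)
{
	return SKETCH_REG_DBS + (2 * qid + 1) * (4u << dstrd);
}
```

With dstrd = 0 the doorbells are 4 bytes apart: queue 0 (admin) uses 0x1000 and 0x1004, and queue 1 uses 0x1008 and 0x100C. One 4 KiB page of doorbells therefore covers 512 SQ/CQ pairs.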

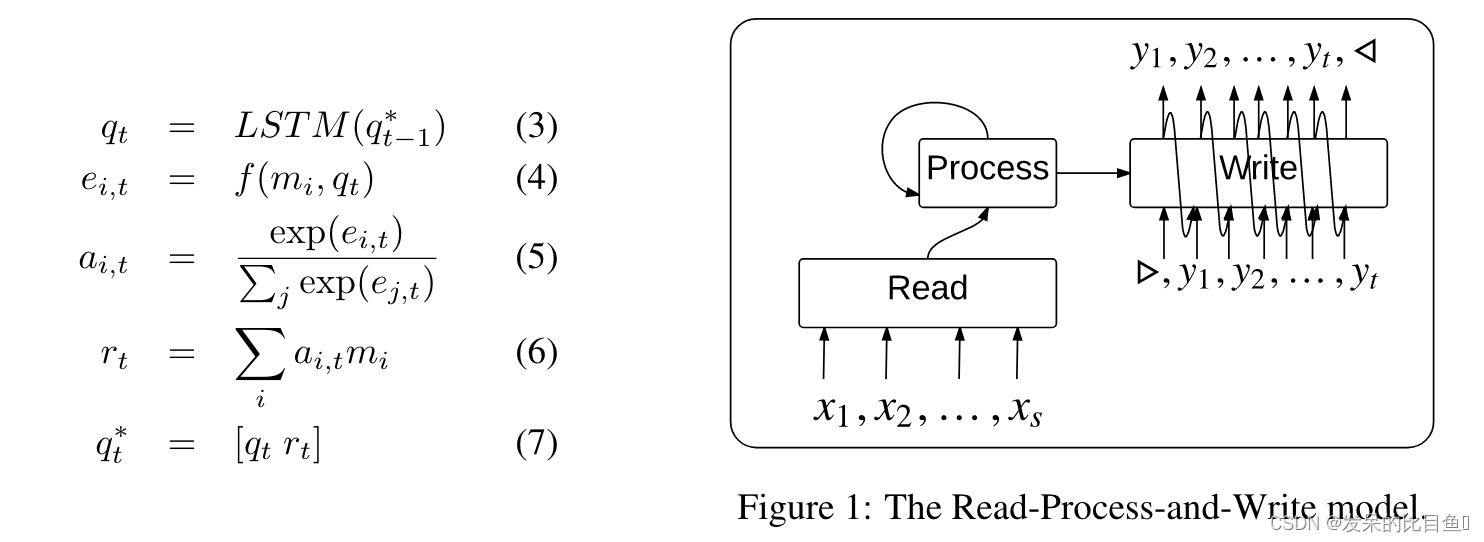

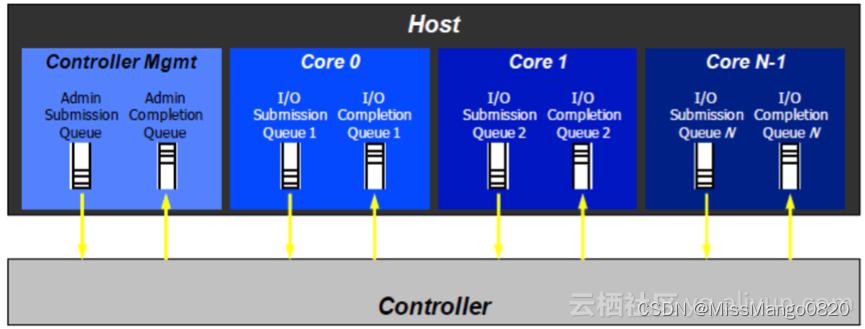

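All NVMe data transfers are driven by NVMe commands, and NVMe commands are stored in NVMe queues, configured as shown in the figure.

There are two kinds of queues: the Submission Queue (SQ), where the host places commands, and the Completion Queue (CQ), where the controller posts completions.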
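The submission side of a queue pair behaves like a ring buffer. The host copies a 64-byte command into the slot at the tail and advances the tail modulo the queue depth. It then writes the new tail to the SQ tail doorbell, which this sketch records instead of calling writel. As in the NVMe spec, the queue counts as full when advancing the tail would make it equal the head, so one slot always stays empty. This is a hypothetical, simplified sketch: the struct, the tiny depth of 4, and the function name are all made up for illustration.

```c
#include <stdint.h>
#include <string.h>

#define SKETCH_Q_DEPTH 4   /* tiny depth for illustration */

struct sketch_sq {
	uint8_t  slot[SKETCH_Q_DEPTH][64];  /* 64-byte command slots */
	uint16_t tail;                      /* next slot the host fills */
	uint16_t head;                      /* advanced as commands are consumed */
	uint32_t last_doorbell;             /* value a real driver would writel() */
};

/* Returns 0 on success, -1 when the queue is full. */
static int sketch_submit(struct sketch_sq *sq, const uint8_t cmd[64])
{
	uint16_t next = (sq->tail + 1) % SKETCH_Q_DEPTH;

	if (next == sq->head)
		return -1;                  /* full: one slot stays empty */
	memcpy(sq->slot[sq->tail], cmd, 64);
	sq->tail = next;
	sq->last_doorbell = sq->tail;       /* "ring" the SQ tail doorbell */
	return 0;
}
```

The completion side works in the opposite direction. The controller posts 16-byte entries to the CQ, and the host detects new entries through the phase tag. It then writes the updated CQ head to the CQ head doorbell.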

References

1. NVMe driver article series
2. Linux NVMe Driver study notes, part 1: overview and analysis of the nvme_core_init function
3. linux
4. bootlin
5. linux/drivers/nvme/
6. linux/drivers/nvme/host/Kconfig
7. linux/drivers/nvme/host/Makefile
8. linux/drivers/nvme/host/core.c
9. linux/fs/char_dev.c
10. linux/include/linux/device.h
11. linux/drivers/nvme/host/ioctl.c
12. linux/drivers/nvme/host/pci.c
13. linux/include/linux/build_bug.h
14. linux/include/linux/pci.h
15. linux/include/linux/pci_ids.h