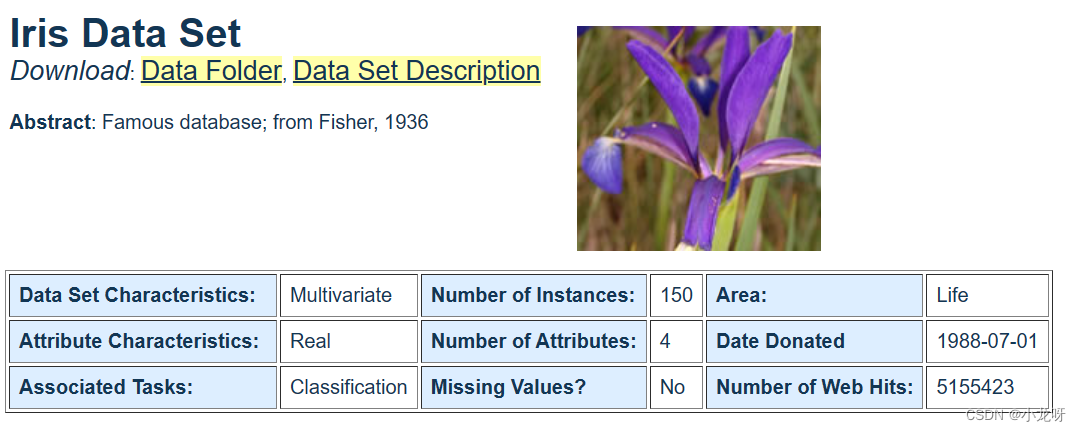

I. Dataset Overview

Data Set Information:

This is perhaps the best known database to be found in the pattern recognition literature. Fisher’s paper is a classic in the field and is referenced frequently to this day. (See Duda & Hart, for example.) The data set contains 3 classes of 50 instances each, where each class refers to a type of iris plant. One class is linearly separable from the other 2; the latter are NOT linearly separable from each other.

Attribute Information:

- sepal length in cm

- sepal width in cm

- petal length in cm

- petal width in cm

- class:
  - Iris Setosa
  - Iris Versicolor
  - Iris Virginica
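
As a quick check of the description above, the following sketch loads the copy of the dataset bundled with scikit-learn (the same `load_iris` call used later in this post) and confirms the class sizes and that Setosa can be separated from the other two classes. The petal-length threshold of 2.5 cm is an illustrative choice, not a value taken from the dataset description.

```python
import numpy as np
from sklearn import datasets

iris = datasets.load_iris()
X, y = iris.data, iris.target

print(iris.feature_names)   # the four measurements, in column order
print(iris.target_names)    # class names for labels 0, 1, 2
print(X.shape)

# Number of samples per class
class_counts = np.bincount(y)
print(class_counts)

# Petal length is column 2. Label 0 is Setosa.
petal_length = X[:, 2]
setosa_max = petal_length[y == 0].max()
others_min = petal_length[y != 0].min()

# Illustrative threshold (an assumption for this sketch): any value between
# setosa_max and others_min separates Setosa from the other two classes.
threshold = 2.5
setosa_separable = bool(np.all((petal_length < threshold) == (y == 0)))
print(setosa_max, others_min, setosa_separable)
```

A single threshold on one feature is enough for Setosa, which is the "linearly separable" class mentioned above; no such simple rule cleanly divides the other two classes.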

II. Classification with a Bayes Classifier

import torch

import torch.nn as nn

import torch.optim as optim

from sklearn import datasets

# 读取数据

iris = datasets.load_iris()

iris_data = iris.data

iris_target = iris.target

# 划分训练集和测试集

data_size = iris_data.shape[0]

train_data = iris_data[: int(data_size * 0.8)]

train_target = iris_target[: int(data_size * 0.8)]

test_data = iris_data[int(data_size * 0.8):]

test_target = iris_target[int(data_size * 0.8):]

# 定义模型

class BayesianModel(nn.Module):

def __init__(self, input_size, output_size):

super(BayesianModel, self).__init__()

self.fc = nn.Linear(input_size, output_size)

def forward(self, x):

x = self.fc(x)

return x

# 实例化模型

model = BayesianModel(input_size=4, output_size=3)

# 定义损失函数和优化器

criterion = nn.CrossEntropyLoss()

optimizer = optim.SGD(model.parameters(), lr=0.03)

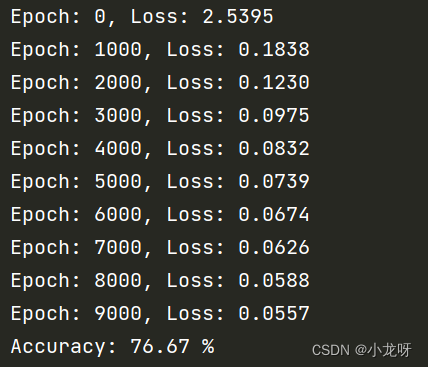

# 训练模型

for epoch in range(10000):

optimizer.zero_grad()

outputs = model(torch.tensor(train_data, dtype=torch.float32))

loss = criterion(outputs, torch.tensor(train_target, dtype=torch.long))

loss.backward()

optimizer.step()

if epoch % 1000 == 0:

print("Epoch: %d, Loss: %.4f" % (epoch, loss.item()))

# 评估模型

with torch.no_grad():

outputs = model(torch.tensor(test_data, dtype=torch.float32))

_, predicted = torch.max(outputs, 1)

accuracy = (predicted == torch.tensor(test_target, dtype=torch.long)).sum().item() / len(test_target)

print("Accuracy: %.2f %%" % (accuracy * 100))

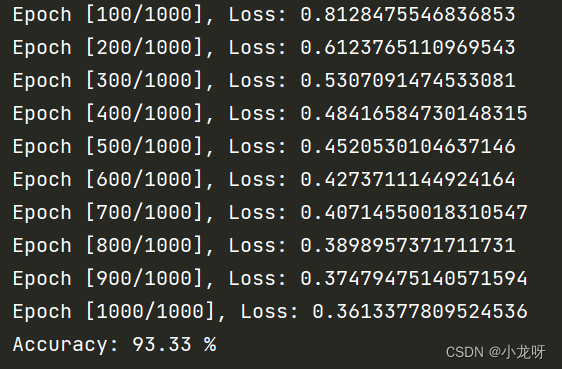
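
The model in this section is a single linear layer trained with cross-entropy, which is closer to multinomial logistic regression than to a Bayes classifier. For comparison, here is a minimal sketch of a Gaussian naive Bayes classifier using scikit-learn's `GaussianNB`. The shuffled, stratified 80/20 split and `random_state=0` are assumptions made for this example.

```python
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

iris = datasets.load_iris()

# Shuffled, stratified split (an assumption for this sketch)
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=0, stratify=iris.target
)

# Gaussian naive Bayes: fits one mean and variance per class and feature,
# then classifies with Bayes' rule under a feature-independence assumption.
nb = GaussianNB()
nb.fit(X_train, y_train)

nb_accuracy = nb.score(X_test, y_test)
class_probs = nb.predict_proba(X_test[:1])  # posterior P(class | x) for one sample

print("Accuracy: %.2f %%" % (nb_accuracy * 100))
print(class_probs)
```

Unlike the linear model, this classifier needs no training loop: fitting amounts to estimating per-class statistics from the training data.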

III. Classification with a Support Vector Machine

```python
import torch
import torch.nn as nn
import torch.optim as optim
from sklearn import datasets
from sklearn.model_selection import train_test_split

# Load the dataset
iris = datasets.load_iris()
X = iris["data"]
y = iris["target"]

# Split into training and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

# Convert to PyTorch tensors
X_train = torch.tensor(X_train, dtype=torch.float32)
y_train = torch.tensor(y_train, dtype=torch.long)
X_test = torch.tensor(X_test, dtype=torch.float32)
y_test = torch.tensor(y_test, dtype=torch.long)

# Define the SVM model: a linear scoring function, one score per class
class SVM(nn.Module):
    def __init__(self, input_dim, num_classes):
        super().__init__()
        self.linear = nn.Linear(input_dim, num_classes)

    def forward(self, x):
        return self.linear(x)

# Create the model instance
input_dim = X_train.shape[1]
num_classes = 3
model = SVM(input_dim, num_classes)

# Define the loss function and optimizer.
# A linear SVM is trained with a hinge (max-margin) loss; with cross-entropy
# the same model would be logistic regression. weight_decay adds the L2
# penalty of the soft-margin objective (1e-3 is an illustrative value).
criterion = nn.MultiMarginLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01, weight_decay=1e-3)

# Train the model
num_epochs = 1000
for epoch in range(num_epochs):
    optimizer.zero_grad()
    outputs = model(X_train)
    loss = criterion(outputs, y_train)
    loss.backward()
    optimizer.step()
    if (epoch + 1) % 100 == 0:
        print(f"Epoch [{epoch + 1}/{num_epochs}], Loss: {loss.item():.4f}")

# Evaluate the model
with torch.no_grad():
    outputs = model(X_test)
    _, pred = torch.max(outputs, 1)
    correct = (pred == y_test).sum().item()
    accuracy = correct / y_test.shape[0]
    print("Accuracy: %.2f %%" % (accuracy * 100))
```
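
A linear SVM is normally trained with a hinge (max-margin) loss rather than cross-entropy. The sketch below spells out the multi-class hinge loss by hand and checks it against PyTorch's `nn.MultiMarginLoss`. The helper name `multiclass_hinge` and the example scores are made up for illustration.

```python
import torch
import torch.nn as nn

def multiclass_hinge(scores, target, margin=1.0):
    """Mean over samples of sum_{i != y} max(0, margin - s_y + s_i) / num_classes."""
    n, num_classes = scores.shape
    rows = torch.arange(n)
    # Score of the true class for each sample, shaped (n, 1) for broadcasting
    correct = scores[rows, target].unsqueeze(1)
    # Margin violation of every class against the true class
    losses = torch.clamp(margin - correct + scores, min=0.0)
    # The true class does not compete with itself
    losses[rows, target] = 0.0
    return (losses.sum(dim=1) / num_classes).mean()

# Hypothetical class scores for two samples and three classes
scores = torch.tensor([[2.0, 0.5, 1.8],
                       [0.2, 1.5, 0.1]])
target = torch.tensor([0, 2])

manual = multiclass_hinge(scores, target)
builtin = nn.MultiMarginLoss()(scores, target)
print(manual.item(), builtin.item())
```

In the first sample the true class wins, but class 2 is within the margin, so it still contributes a small loss. In the second sample the true class has the lowest score, so both other classes are penalized.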

IV. Classification with a Neural Network

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from sklearn import datasets
import numpy as np

# Load the Iris dataset
iris = datasets.load_iris()
X = iris["data"].astype(np.float32)
y = iris["target"].astype(np.int64)

# Split the data into training and test sets using a random permutation
train_ratio = 0.8
index = np.random.permutation(X.shape[0])
train_index = index[:int(X.shape[0] * train_ratio)]
test_index = index[int(X.shape[0] * train_ratio):]
X_train, y_train = X[train_index], y[train_index]
X_test, y_test = X[test_index], y[test_index]

# Define the neural network: two hidden layers of 32 units with ReLU
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.fc1 = nn.Linear(4, 32)
        self.fc2 = nn.Linear(32, 32)
        self.fc3 = nn.Linear(32, 3)

    def forward(self, x):
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)  # raw logits; CrossEntropyLoss applies log-softmax
        return x

# Initialize the model, loss function, and optimizer
model = Net()
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.9)

# Train the model (full-batch gradient descent)
num_epochs = 100
inputs = torch.from_numpy(X_train)
labels = torch.from_numpy(y_train)
for epoch in range(num_epochs):
    optimizer.zero_grad()
    outputs = model(inputs)
    loss = criterion(outputs, labels)
    loss.backward()
    optimizer.step()

# Evaluate the model
with torch.no_grad():
    inputs = torch.from_numpy(X_test)
    labels = torch.from_numpy(y_test)
    outputs = model(inputs)
    _, predictions = torch.max(outputs, 1)
    accuracy = (predictions == labels).float().mean()
    print("Accuracy: %.2f %%" % (accuracy.item() * 100))
```
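
Because the split above uses `np.random.permutation` without a seed, the reported accuracy changes from run to run. A minimal sketch of a seeded split with feature standardization follows. The seed value `0` and the helper name `split_and_standardize` are assumptions for this example, not part of the original code.

```python
import numpy as np
import torch
from sklearn import datasets

def split_and_standardize(X, y, train_ratio=0.8, seed=0):
    """Seeded shuffle split; features are scaled with training-set statistics only."""
    rng = np.random.default_rng(seed)
    index = rng.permutation(X.shape[0])
    n_train = int(X.shape[0] * train_ratio)
    train_index, test_index = index[:n_train], index[n_train:]
    # Compute mean and standard deviation on the training portion only,
    # so no information from the test set leaks into preprocessing.
    mean = X[train_index].mean(axis=0)
    std = X[train_index].std(axis=0)
    X_train = (X[train_index] - mean) / std
    X_test = (X[test_index] - mean) / std
    return X_train, y[train_index], X_test, y[test_index]

torch.manual_seed(0)  # also fixes the random initialization of model weights

iris = datasets.load_iris()
X = iris["data"].astype(np.float32)
y = iris["target"].astype(np.int64)

X_train, y_train, X_test, y_test = split_and_standardize(X, y)
inputs = torch.from_numpy(X_train)
labels = torch.from_numpy(y_train)
print(inputs.shape, labels.shape)
```

The returned arrays can be passed to the training and evaluation code above in place of the unseeded split.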
