Preface:

These are my notes from studying the image projection and feature extraction parts of the LIO-SAM front end.

1. imageProjection.cpp

The main function:

int main(int argc, char** argv)

{

ros::init(argc, argv, "lio_sam");

ImageProjection IP;

ROS_INFO("\033[1;32m----> Image Projection Started.\033[0m");

ros::MultiThreadedSpinner spinner(3);

spinner.spin();

return 0;

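}

main creates an ImageProjection object named IP, and the actual work is implemented in that class.

The MultiThreadedSpinner is created with 3 threads, so callbacks can run concurrently.

The ImageProjection class:

Private variables: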

std::mutex imuLock;

std::mutex odoLock;

ros::Subscriber subLaserCloud;

ros::Publisher pubLaserCloud;

ros::Publisher pubExtractedCloud;

ros::Publisher pubLaserCloudInfo;

ros::Subscriber subImu;

std::deque<sensor_msgs::Imu> imuQueue;

ros::Subscriber subOdom;

std::deque<nav_msgs::Odometry> odomQueue;

std::deque<sensor_msgs::PointCloud2> cloudQueue;

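sensor_msgs::PointCloud2 currentCloudMsg;

Two mutexes (imuLock and odoLock);

three subscribers, for the point cloud, the IMU, and the odometry;

three publisher members: pubLaserCloud, pubExtractedCloud (the deskewed, extracted cloud), and pubLaserCloudInfo (the cloud info); note that the constructor below only advertises the latter two;

an IMU data queue;

an odometry queue;

a point cloud queue;

and the current point cloud message.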

double *imuTime = new double[queueLength];

double *imuRotX = new double[queueLength];

double *imuRotY = new double[queueLength];

double *imuRotZ = new double[queueLength];

Four arrays hold the IMU timestamps and the integrated rotation about the x, y, and z axes.

int imuPointerCur;

bool firstPointFlag;

Eigen::Affine3f transStartInverse;

pcl::PointCloud<PointXYZIRT>::Ptr laserCloudIn;

pcl::PointCloud<OusterPointXYZIRT>::Ptr tmpOusterCloudIn;

pcl::PointCloud<PointType>::Ptr fullCloud;

pcl::PointCloud<PointType>::Ptr extractedCloud;

Several point cloud pointers.

int deskewFlag;

cv::Mat rangeMat;

bool odomDeskewFlag;

float odomIncreX;

float odomIncreY;

float odomIncreZ;

lio_sam::cloud_info cloudInfo;

double timeScanCur;

double timeScanEnd;

std_msgs::Header cloudHeader;

vector<int> columnIdnCountVec;

Various flags and state variables used while processing a scan.

Constructor:

ImageProjection():

deskewFlag(0)

{

subImu = nh.subscribe<sensor_msgs::Imu>(imuTopic, 2000, &ImageProjection::imuHandler, this, ros::TransportHints().tcpNoDelay());

subOdom = nh.subscribe<nav_msgs::Odometry>(odomTopic+"_incremental", 2000, &ImageProjection::odometryHandler, this, ros::TransportHints().tcpNoDelay());

subLaserCloud = nh.subscribe<sensor_msgs::PointCloud2>(pointCloudTopic, 5, &ImageProjection::cloudHandler, this, ros::TransportHints().tcpNoDelay());

pubExtractedCloud = nh.advertise<sensor_msgs::PointCloud2> ("lio_sam/deskew/cloud_deskewed", 1);

pubLaserCloudInfo = nh.advertise<lio_sam::cloud_info> ("lio_sam/deskew/cloud_info", 1);

allocateMemory();

resetParameters();

pcl::console::setVerbosityLevel(pcl::console::L_ERROR);

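}

The constructor subscribes to three topics and advertises two.

imuHandler stores the coordinate-transformed IMU data in imuQueue;

odometryHandler works similarly and stores odometry messages in odomQueue;

cloudHandler processes the point cloud and is explained in detail below.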

allocateMemory:

void allocateMemory()

{

laserCloudIn.reset(new pcl::PointCloud<PointXYZIRT>());

tmpOusterCloudIn.reset(new pcl::PointCloud<OusterPointXYZIRT>());

fullCloud.reset(new pcl::PointCloud<PointType>());

extractedCloud.reset(new pcl::PointCloud<PointType>());

fullCloud->points.resize(N_SCAN*Horizon_SCAN);

cloudInfo.startRingIndex.assign(N_SCAN, 0);

cloudInfo.endRingIndex.assign(N_SCAN, 0);

cloudInfo.pointColInd.assign(N_SCAN*Horizon_SCAN, 0);

cloudInfo.pointRange.assign(N_SCAN*Horizon_SCAN, 0);

resetParameters();

}

It allocates memory for the point cloud pointers and the cloudInfo vectors, then resets the parameters.

resetParameters:

void resetParameters()

{

laserCloudIn->clear();

extractedCloud->clear();

// reset range matrix for range image projection

rangeMat = cv::Mat(N_SCAN, Horizon_SCAN, CV_32F, cv::Scalar::all(FLT_MAX));

imuPointerCur = 0;

firstPointFlag = true;

odomDeskewFlag = false;

for (int i = 0; i < queueLength; ++i)

{

imuTime[i] = 0;

imuRotX[i] = 0;

imuRotY[i] = 0;

imuRotZ[i] = 0;

}

columnIdnCountVec.assign(N_SCAN, 0);

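}

It clears laserCloudIn and extractedCloud;

reinitializes rangeMat, filling it with FLT_MAX to mark every cell as empty;

and zeroes the arrays that hold the IMU timestamps and the rotations about the three axes.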

cloudHandler:

void cloudHandler(const sensor_msgs::PointCloud2ConstPtr& laserCloudMsg)

{

if (!cachePointCloud(laserCloudMsg))

return;

if (!deskewInfo())

return;

projectPointCloud();

cloudExtraction();

publishClouds();

resetParameters();

}

The first step is cachePointCloud, which buffers the incoming point clouds.

// cache point cloud

cloudQueue.push_back(*laserCloudMsg);

if (cloudQueue.size() <= 2)

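return false;

The incoming cloud is pushed into cloudQueue, and the function returns early until more than two clouds are buffered.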
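The buffering pattern above can be looked at in isolation. The following is a minimal sketch, assuming a hypothetical Frame struct in place of sensor_msgs::PointCloud2 and a hypothetical FrameCache class; it only mirrors the push, size check, and pop-front sequence and is not the LIO-SAM code itself.

```cpp
#include <cstddef>
#include <deque>
#include <utility>

// Hypothetical stand-in for sensor_msgs::PointCloud2 (illustration only).
struct Frame {
    double stamp;
};

// Hypothetical helper that mirrors the cache-then-pop pattern of cachePointCloud.
class FrameCache {
public:
    // Buffers a new frame. Returns true and writes the oldest frame to `out`
    // only once more than two frames are buffered; otherwise returns false.
    bool push(const Frame& f, Frame& out) {
        queue_.push_back(f);
        if (queue_.size() <= 2)
            return false;                 // still filling the buffer
        out = std::move(queue_.front());  // hand out the oldest frame
        queue_.pop_front();
        return true;
    }

    std::size_t size() const { return queue_.size(); }

private:
    std::deque<Frame> queue_;
};
```

With this sketch the first two calls to push return false, and the third call returns the first frame, so processing always lags the newest data by two frames.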

// convert cloud

currentCloudMsg = std::move(cloudQueue.front());

cloudQueue.pop_front();

if (sensor == SensorType::VELODYNE || sensor == SensorType::LIVOX)

{

pcl::moveFromROSMsg(currentCloudMsg, *laserCloudIn);

}

else if (sensor == SensorType::OUSTER)

{

// Convert to Velodyne format

pcl::moveFromROSMsg(currentCloudMsg, *tmpOusterCloudIn);

laserCloudIn->points.resize(tmpOusterCloudIn->size());

laserCloudIn->is_dense = tmpOusterCloudIn->is_dense;

for (size_t i = 0; i < tmpOusterCloudIn->size(); i++)

{

auto &src = tmpOusterCloudIn->points[i];

auto &dst = laserCloudIn->points[i];

dst.x = src.x;

dst.y = src.y;

dst.z = src.z;

dst.intensity = src.intensity;

dst.ring = src.ring;

dst.time = src.t * 1e-9f;

}

}

else

{

ROS_ERROR_STREAM("Unknown sensor type: " << int(sensor));

ros::shutdown();

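}

The oldest buffered cloud is converted to the PCL point cloud format; Velodyne, Livox, and Ouster sensors are supported.

The following variables are filled in at this stage (the assignments for the last three are not included in the excerpt above):

laserCloudIn holds the point cloud;

cloudHeader is the header of the point cloud message;

timeScanCur is the start time of this scan;

timeScanEnd is the end time of this scan.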

// check dense flag

if (laserCloudIn->is_dense == false)

{

ROS_ERROR("Point cloud is not in dense format, please remove NaN points first!");

ros::shutdown();

}

// check ring channel

static int ringFlag = 0;

if (ringFlag == 0)

{

ringFlag = -1;

for (int i = 0; i < (int)currentCloudMsg.fields.size(); ++i)

{

if (currentCloudMsg.fields[i].name == "ring")

{

ringFlag = 1;

break;

}

}

if (ringFlag == -1)

{

ROS_ERROR("Point cloud ring channel not available, please configure your point cloud data!");

ros::shutdown();

}

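}

This checks that the cloud is dense, that is, that it contains no NaN points;

then it checks for the ring channel and shuts down if that channel is missing.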

// check point time

if (deskewFlag == 0)

{

deskewFlag = -1;

for (auto &field : currentCloudMsg.fields)

{

if (field.name == "time" || field.name == "t")

{

deskewFlag = 1;

break;

}

}

if (deskewFlag == -1)

ROS_WARN("Point cloud timestamp not available, deskew function disabled, system will drift significantly!");

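}

This checks whether per-point timestamps are available (a field named time or t). If they are missing, deskewing is disabled and only a warning is printed.

The deskewInfo function: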

bool deskewInfo()

{

std::lock_guard<std::mutex> lock1(imuLock);

std::lock_guard<std::mutex> lock2(odoLock);

// make sure IMU data available for the scan

if (imuQueue.empty() || imuQueue.front().header.stamp.toSec() > timeScanCur || imuQueue.back().header.stamp.toSec() < timeScanEnd)

{

ROS_DEBUG("Waiting for IMU data ...");

return false;

}

imuDeskewInfo();

odomDeskewInfo();

return true;

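}

This gathers the information needed for motion compensation.

It first makes sure the IMU queue covers the whole scan, from timeScanCur to timeScanEnd, and then collects the deskew information from the IMU and from the odometry.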
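The coverage check can be written as a small standalone function. This is a minimal sketch, assuming the IMU timestamps are already available as a sorted std::deque<double>; the function name imuCoversScan is hypothetical and does not appear in LIO-SAM.

```cpp
#include <deque>

// Returns true when the buffered IMU timestamps cover [scanStart, scanEnd].
// Assumption: imuStamps is sorted in ascending order, as a message queue would be.
bool imuCoversScan(const std::deque<double>& imuStamps,
                   double scanStart, double scanEnd) {
    if (imuStamps.empty())
        return false;                    // no IMU data at all
    if (imuStamps.front() > scanStart)
        return false;                    // oldest IMU sample is after the scan start
    if (imuStamps.back() < scanEnd)
        return false;                    // newest IMU sample is before the scan end
    return true;
}
```

When the check fails, the original code simply returns false and skips the scan, so a frame is only processed once IMU data is available on both sides of it.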

The imuDeskewInfo function:

while (!imuQueue.empty())

{

if (imuQueue.front().header.stamp.toSec() < timeScanCur - 0.01)

imuQueue.pop_front();

else

break;

}

if (imuQueue.empty())

return;

imuPointerCur = 0;

IMU messages older than timeScanCur - 0.01 s are discarded; if the queue is empty afterwards, the function returns immediately.

for (int i = 0; i < (int)imuQueue.size(); ++i)

{

sensor_msgs::Imu thisImuMsg = imuQueue[i];

double currentImuTime = thisImuMsg.header.stamp.toSec();

// get roll, pitch, and yaw estimation for this scan

if (currentImuTime <= timeScanCur)

imuRPY2rosRPY(&thisImuMsg, &cloudInfo.imuRollInit, &cloudInfo.imuPitchInit, &cloudInfo.imuYawInit);

if (currentImuTime > timeScanEnd + 0.01)

break;

if (imuPointerCur == 0){

imuRotX[0] = 0;

imuRotY[0] = 0;

imuRotZ[0] = 0;

imuTime[0] = currentImuTime;

++imuPointerCur;

continue;

}

// get angular velocity

double angular_x, angular_y, angular_z;

imuAngular2rosAngular(&thisImuMsg, &angular_x, &angular_y, &angular_z);

// integrate rotation

double timeDiff = currentImuTime - imuTime[imuPointerCur-1];

imuRotX[imuPointerCur] = imuRotX[imuPointerCur-1] + angular_x * timeDiff;

imuRotY[imuPointerCur] = imuRotY[imuPointerCur-1] + angular_y * timeDiff;

imuRotZ[imuPointerCur] = imuRotZ[imuPointerCur-1] + angular_z * timeDiff;

imuTime[imuPointerCur] = currentImuTime;

++imuPointerCur;

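}

For every IMU message that is not later than timeScanCur, imuRPY2rosRPY converts the orientation into roll, pitch, and yaw and stores them in cloudInfo as the initial attitude of this scan. imuAngular2rosAngular then extracts the angular velocity, which is multiplied by the time difference to the previous message and accumulated to fill the next entry of the four arrays (imuTime, imuRotX, imuRotY, imuRotZ).

The odomDeskewInfo function uses the odometry published by the IMU pre-integration node to provide an initial pose estimate.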

cloudInfo.odomAvailable = false;

while (!odomQueue.empty())

{

if (odomQueue.front().header.stamp.toSec() < timeScanCur - 0.01)

odomQueue.pop_front();

else

break;

}

Odometry messages older than timeScanCur - 0.01 s are discarded.

if (odomQueue.empty())

return;

if (odomQueue.front().header.stamp.toSec() > timeScanCur)

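return;

The function returns if no odometry is left, or if the earliest remaining message is later than the start of the scan.

// get start odometry at the beginning of the scan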

nav_msgs::Odometry startOdomMsg;

for (int i = 0; i < (int)odomQueue.size(); ++i)

{

startOdomMsg = odomQueue[i];

if (ROS_TIME(&startOdomMsg) < timeScanCur)

continue;

else

break;

}

This selects the first odometry message whose timestamp is not earlier than timeScanCur, the start of the scan.

tf::Quaternion orientation;

tf::quaternionMsgToTF(startOdomMsg.pose.pose.orientation, orientation);

double roll, pitch, yaw;

tf::Matrix3x3(orientation).getRPY(roll, pitch, yaw);

// Initial guess used in mapOptimization

cloudInfo.initialGuessX = startOdomMsg.pose.pose.position.x;

cloudInfo.initialGuessY = startOdomMsg.pose.pose.position.y;

cloudInfo.initialGuessZ = startOdomMsg.pose.pose.position.z;

cloudInfo.initialGuessRoll = roll;

cloudInfo.initialGuessPitch = pitch;

cloudInfo.initialGuessYaw = yaw;

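cloudInfo.odomAvailable = true;

The orientation quaternion is converted to roll, pitch, and yaw with tf, and the resulting pose is stored in cloudInfo as the initial guess.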

// get end odometry at the end of the scan

odomDeskewFlag = false;

if (odomQueue.back().header.stamp.toSec() < timeScanEnd)

return;

nav_msgs::Odometry endOdomMsg;

for (int i = 0; i < (int)odomQueue.size(); ++i)

{

endOdomMsg = odomQueue[i];

if (ROS_TIME(&endOdomMsg) < timeScanEnd)

continue;

else

break;

}

This finds the odometry message at the end of the scan, the first one whose timestamp is not earlier than timeScanEnd.

if (int(round(startOdomMsg.pose.covariance[0])) != int(round(endOdomMsg.pose.covariance[0])))

return;

Eigen::Affine3f transBegin = pcl::getTransformation(startOdomMsg.pose.pose.position.x, startOdomMsg.pose.pose.position.y, startOdomMsg.pose.pose.position.z, roll, pitch, yaw);

tf::quaternionMsgToTF(endOdomMsg.pose.pose.orientation, orientation);

tf::Matrix3x3(orientation).getRPY(roll, pitch, yaw);

Eigen::Affine3f transEnd = pcl::getTransformation(endOdomMsg.pose.pose.position.x, endOdomMsg.pose.pose.position.y, endOdomMsg.pose.pose.position.z, roll, pitch, yaw);

Eigen::Affine3f transBt = transBegin.inverse() * transEnd;

float rollIncre, pitchIncre, yawIncre;

pcl::getTranslationAndEulerAngles(transBt, odomIncreX, odomIncreY, odomIncreZ, rollIncre, pitchIncre, yawIncre);

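odomDeskewFlag = true;

The idea is similar to the IMU computation above. The start and end odometry poses are converted to the transforms transBegin and transEnd, the relative transform between them is computed as transBegin.inverse() * transEnd, and that transform is decomposed into translation increments (x, y, z) and rotation increments (roll, pitch, yaw). If the rounded covariance[0] values of the two messages differ, the function returns before computing the increments and odomDeskewFlag stays false.

The projectPointCloud function: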
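To make the relative-transform step concrete, here is a minimal sketch reduced to the planar case (x, y, yaw). This is a deliberate simplification: the original code works with full 3D transforms through pcl::getTransformation and pcl::getTranslationAndEulerAngles. Pose2D and relativePose are hypothetical names used only for this example.

```cpp
#include <cmath>

// Hypothetical planar pose: position (m) and heading (rad).
struct Pose2D {
    double x, y, yaw;
};

// Planar analogue of transBegin.inverse() * transEnd:
// the end pose expressed in the coordinate frame of the begin pose.
Pose2D relativePose(const Pose2D& begin, const Pose2D& end) {
    const double dx = end.x - begin.x;
    const double dy = end.y - begin.y;
    const double c = std::cos(begin.yaw);
    const double s = std::sin(begin.yaw);

    Pose2D inc;
    // Rotate the world-frame displacement by the inverse of the begin heading.
    inc.x = c * dx + s * dy;
    inc.y = -s * dx + c * dy;
    // Heading difference, wrapped to (-pi, pi].
    const double dyaw = end.yaw - begin.yaw;
    inc.yaw = std::atan2(std::sin(dyaw), std::cos(dyaw));
    return inc;
}
```

For example, a sensor that faces the +y direction and moves 2 m along +y gets an increment of 2 m along its own x axis, which is the kind of body-frame increment that odomIncreX, odomIncreY, and odomIncreZ hold in the 3D case.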

void projectPointCloud()

{

int cloudSize = laserCloudIn->points.size();

// range image projection

for (int i = 0; i < cloudSize; ++i)

{

PointType thisPoint;

thisPoint.x = laserCloudIn->points[i].x;

thisPoint.y = laserCloudIn->points[i].y;

thisPoint.z = laserCloudIn->points[i].z;

thisPoint.intensity = laserCloudIn->points[i].intensity;

float range = pointDistance(thisPoint);

if (range < lidarMinRange || range > lidarMaxRange)

continue;

int rowIdn = laserCloudIn->points[i].ring;

if (rowIdn < 0 || rowIdn >= N_SCAN)

continue;

if (rowIdn % downsampleRate != 0)

continue;

int columnIdn = -1;

if (sensor == SensorType::VELODYNE || sensor == SensorType::OUSTER)

{

float horizonAngle = atan2(thisPoint.x, thisPoint.y) * 180 / M_PI;

static float ang_res_x = 360.0/float(Horizon_SCAN);

columnIdn = -round((horizonAngle-90.0)/ang_res_x) + Horizon_SCAN/2;

if (columnIdn >= Horizon_SCAN)

columnIdn -= Horizon_SCAN;

}

else if (sensor == SensorType::LIVOX)

{

columnIdn = columnIdnCountVec[rowIdn];

columnIdnCountVec[rowIdn] += 1;

}

if (columnIdn < 0 || columnIdn >= Horizon_SCAN)

continue;

if (rangeMat.at<float>(rowIdn, columnIdn) != FLT_MAX)

continue;

thisPoint = deskewPoint(&thisPoint, laserCloudIn->points[i].time);

rangeMat.at<float>(rowIdn, columnIdn) = range;

int index = columnIdn + rowIdn * Horizon_SCAN;

fullCloud->points[index] = thisPoint;

}

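}

Each point is taken out, filtered by range, and assigned a row (its ring) and a column in the range image. For Velodyne and Ouster, the column is computed from the horizontal angle in the same way as in A-LOAM, with Horizon_SCAN columns per ring (for example 1800); for Livox, a per-ring counter is used instead. The point is then deskewed and written to fullCloud.

The deskewPoint function compensates each point for sensor motion according to its timestamp, mainly through rotation compensation.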
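The column computation for spinning lidars can be tried out on its own. The sketch below copies the arithmetic of the Velodyne/Ouster branch into a free function; the name columnIndex and the choice to return -1 for an invalid column are assumptions made for this example.

```cpp
#include <cmath>

// Maps a point's horizontal direction to a column of the range image.
// Returns a column in [0, horizonScan), or -1 if the result falls outside it.
int columnIndex(float x, float y, int horizonScan) {
    const double kPi = std::acos(-1.0);
    // Note the argument order atan2(x, y): the angle is measured from the +y axis.
    const float horizonAngle = static_cast<float>(std::atan2(x, y) * 180.0 / kPi);
    const float angResX = 360.0f / static_cast<float>(horizonScan);  // degrees per column

    int col = -static_cast<int>(std::round((horizonAngle - 90.0f) / angResX))
              + horizonScan / 2;
    if (col >= horizonScan)
        col -= horizonScan;              // wrap around once
    if (col < 0 || col >= horizonScan)
        return -1;                       // the original code skips such points
    return col;
}
```

With 1800 columns, a point straight ahead on the +x axis lands in the middle column (900), and a point directly behind the sensor on the -x axis wraps around to column 0.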

PointType deskewPoint(PointType *point, double relTime)

{

if (deskewFlag == -1 || cloudInfo.imuAvailable == false)

return *point;

double pointTime = timeScanCur + relTime;

float rotXCur, rotYCur, rotZCur;

findRotation(pointTime, &rotXCur, &rotYCur, &rotZCur);

float posXCur, posYCur, posZCur;

findPosition(relTime, &posXCur, &posYCur, &posZCur);

if (firstPointFlag == true)

{

transStartInverse = (pcl::getTransformation(posXCur, posYCur, posZCur, rotXCur, rotYCur, rotZCur)).inverse();

firstPointFlag = false;

}

// transform points to start

Eigen::Affine3f transFinal = pcl::getTransformation(posXCur, posYCur, posZCur, rotXCur, rotYCur, rotZCur);

Eigen::Affine3f transBt = transStartInverse * transFinal;

PointType newPoint;

newPoint.x = transBt(0,0) * point->x + transBt(0,1) * point->y + transBt(0,2) * point->z + transBt(0,3);

newPoint.y = transBt(1,0) * point->x + transBt(1,1) * point->y + transBt(1,2) * point->z + transBt(1,3);

newPoint.z = transBt(2,0) * point->x + transBt(2,1) * point->y + transBt(2,2) * point->z + transBt(2,3);

newPoint.intensity = point->intensity;

return newPoint;

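}

The rotation compensation is implemented in findRotation:

void findRotation(double pointTime, float *rotXCur, float *rotYCur, float *rotZCur)

{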

*rotXCur = 0; *rotYCur = 0; *rotZCur = 0;

int imuPointerFront = 0;

while (imuPointerFront < imuPointerCur)

{

if (pointTime < imuTime[imuPointerFront])

break;

++imuPointerFront;

}

if (pointTime > imuTime[imuPointerFront] || imuPointerFront == 0)

{

*rotXCur = imuRotX[imuPointerFront];

*rotYCur = imuRotY[imuPointerFront];

*rotZCur = imuRotZ[imuPointerFront];

} else {

int imuPointerBack = imuPointerFront - 1;

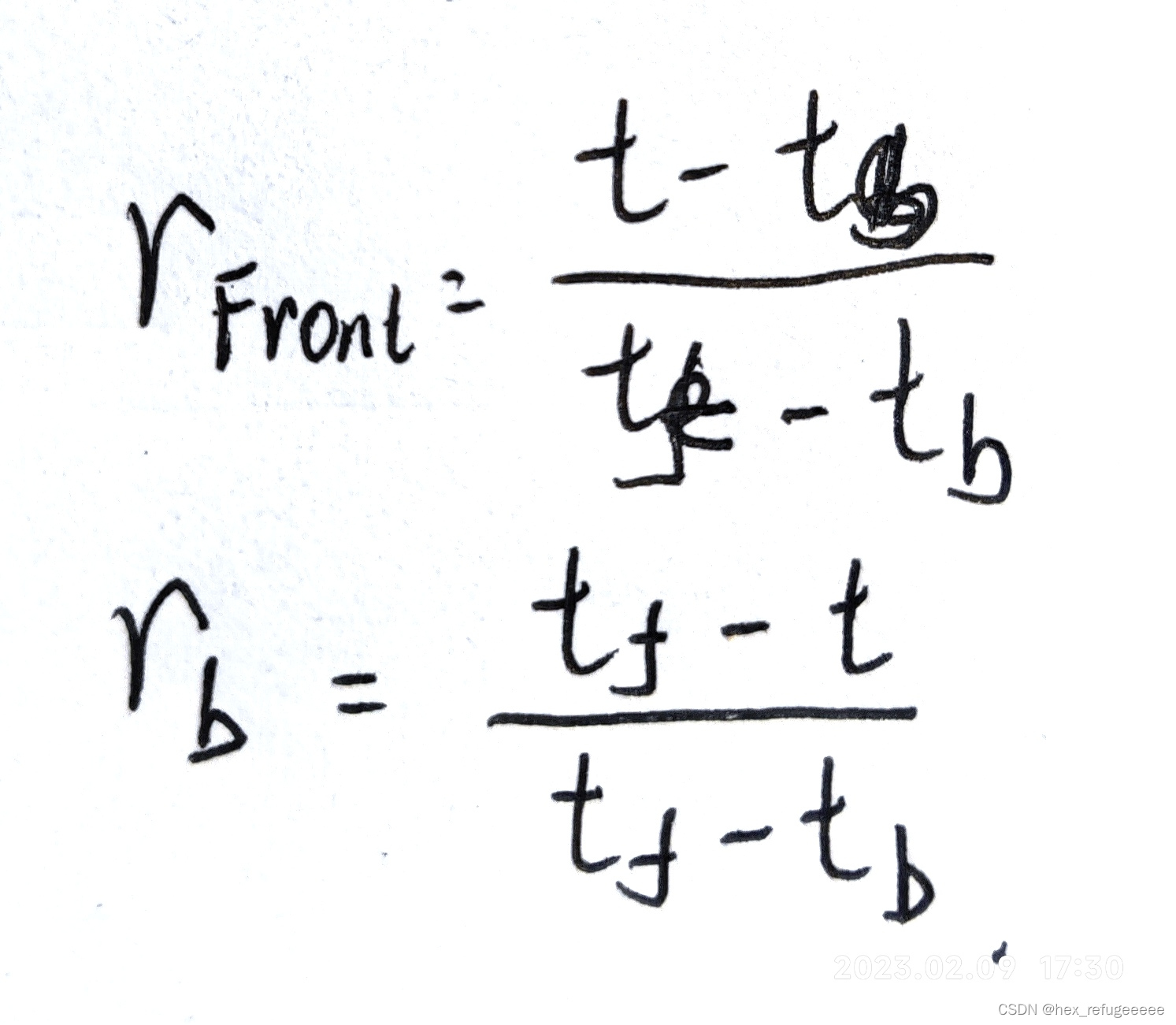

double ratioFront = (pointTime - imuTime[imuPointerBack]) / (imuTime[imuPointerFront] - imuTime[imuPointerBack]);

double ratioBack = (imuTime[imuPointerFront] - pointTime) / (imuTime[imuPointerFront] - imuTime[imuPointerBack]);

*rotXCur = imuRotX[imuPointerFront] * ratioFront + imuRotX[imuPointerBack] * ratioBack;

*rotYCur = imuRotY[imuPointerFront] * ratioFront + imuRotY[imuPointerBack] * ratioBack;

*rotZCur = imuRotZ[imuPointerFront] * ratioFront + imuRotZ[imuPointerBack] * ratioBack;

}

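}

The implementation is similar to the rotation compensation in A-LOAM.

It yields the rotation angles about the x, y, and z axes at the point's timestamp, linearly interpolated between the two neighboring IMU samples.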

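Finally, deskewPoint transforms every point into the coordinate frame of the start of the scan.

The cloudExtraction function: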

void cloudExtraction()

{

int count = 0;

// extract segmented cloud for lidar odometry

for (int i = 0; i < N_SCAN; ++i)

{

cloudInfo.startRingIndex[i] = count - 1 + 5;

for (int j = 0; j < Horizon_SCAN; ++j)

{

if (rangeMat.at<float>(i,j) != FLT_MAX)

{

// mark the points' column index for marking occlusion later

cloudInfo.pointColInd[count] = j;

// save range info

cloudInfo.pointRange[count] = rangeMat.at<float>(i,j);

// save extracted cloud

extractedCloud->push_back(fullCloud->points[j + i*Horizon_SCAN]);

// size of extracted cloud

++count;

}

}

cloudInfo.endRingIndex[i] = count -1 - 5;

}

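}

This collects the valid points together with their column index and range, and stores them in extractedCloud and cloudInfo, which are then published.

2. featureExtraction.cpp

This part is almost identical to the edge and planar feature extraction in A-LOAM, except that the less sharp edge points and the less flat planar points are no longer kept, so I will not analyze it here.

See my earlier notes:

A-LOAM Study Notes (2) (hex_refugeeeee's blog on CSDN)

3. Summary

After a quick first read, I feel that I have not fully understood parts of the source code, so I will need to go through it a few more times.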
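The flattening of the range image into one-dimensional arrays can be reproduced without ROS or OpenCV. The sketch below assumes a row-major std::vector<float> as the range image and a configurable margin in place of the constant 5; Extracted and extractRanges are hypothetical names. The margin is presumably there so that the later feature extraction, which looks at several neighbors on each side of a point, never reads across a ring boundary.

```cpp
#include <cfloat>
#include <cstddef>
#include <vector>

// Flattened result: per-ring start/end indices plus per-point column and range.
struct Extracted {
    std::vector<int> startRingIndex, endRingIndex;
    std::vector<int> pointColInd;
    std::vector<float> pointRange;
};

// rangeImage is row-major with rows * cols entries; FLT_MAX marks an empty cell.
// margin plays the role of the constant 5 in the original code.
Extracted extractRanges(const std::vector<float>& rangeImage,
                        int rows, int cols, int margin) {
    Extracted out;
    out.startRingIndex.assign(rows, 0);
    out.endRingIndex.assign(rows, 0);

    int count = 0;  // number of valid points collected so far
    for (int i = 0; i < rows; ++i) {
        out.startRingIndex[i] = count - 1 + margin;
        for (int j = 0; j < cols; ++j) {
            const float r = rangeImage[static_cast<std::size_t>(i) * cols + j];
            if (r != FLT_MAX) {
                out.pointColInd.push_back(j);  // column, used later for occlusion checks
                out.pointRange.push_back(r);   // measured range
                ++count;
            }
        }
        out.endRingIndex[i] = count - 1 - margin;
    }
    return out;
}
```

Because empty cells are skipped, the indices in startRingIndex and endRingIndex refer to positions in the compacted arrays, not to columns of the range image.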