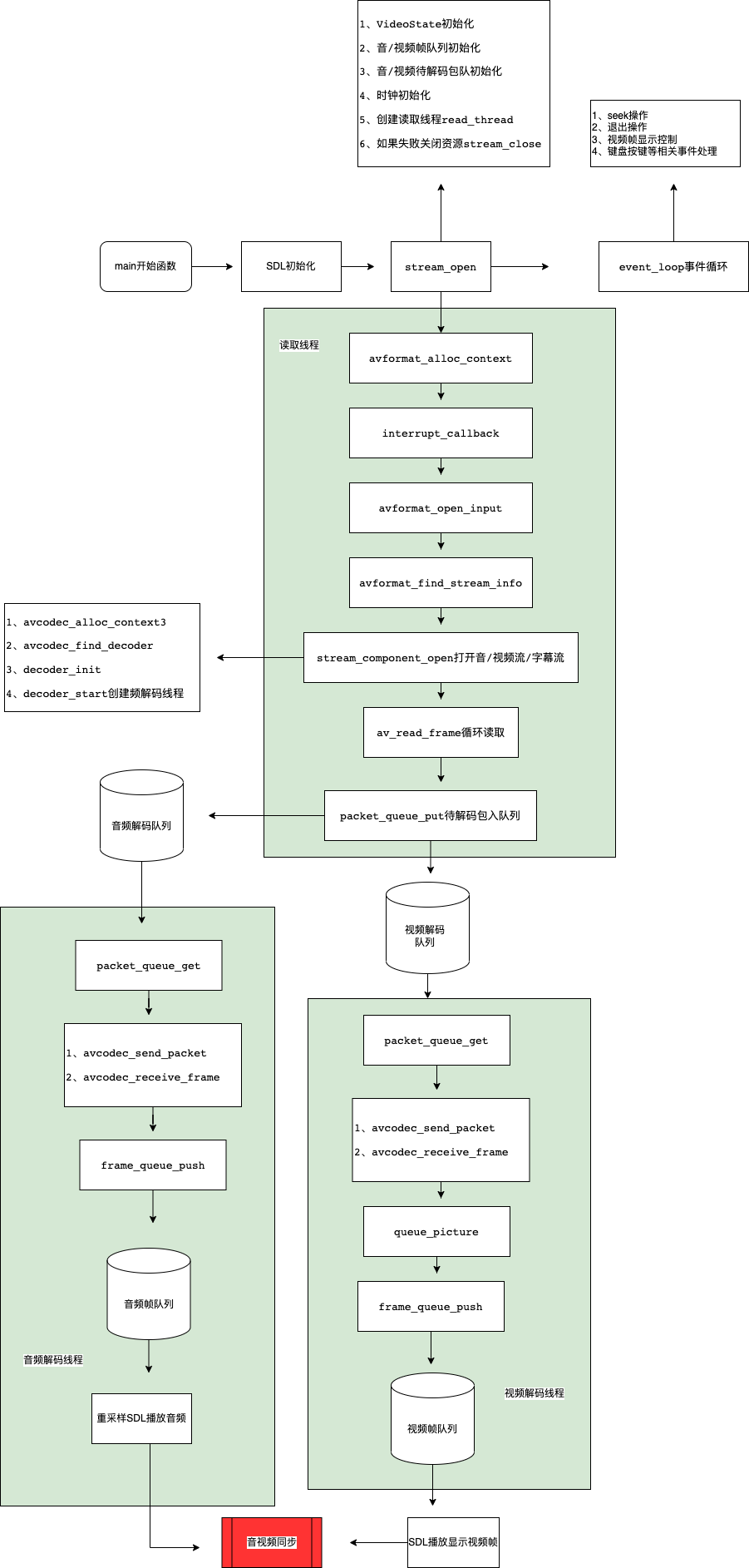

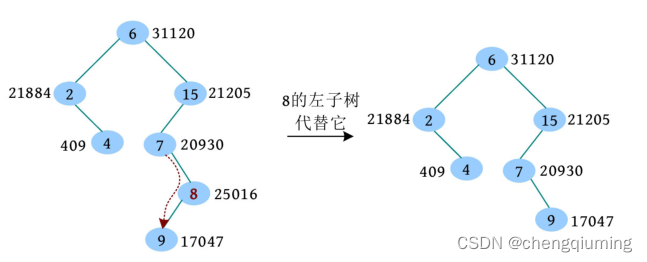

Starting from this diagram, this article walks through the decoding thread of ffplay.

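As the diagram shows, the decoding thread takes packets from the queue of packets waiting to be decoded, feeds them to the decoder, and puts the decoded frames into the frame queue, where they wait for SDL to pick them up for playback.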

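Decoding process

The decoding threads are created when a stream is opened, that is, in the function stream_component_open. The worker function of the video decoding thread is video_thread.

Below is video_thread. Once the filter-related handling is set aside, the function is very compact: inside a for loop it gets one frame of image data via get_video_frame, converts the frame's pts to seconds, and then puts the frame into the frame queue: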

```c
/**
 * Video decoding thread.
 * @param arg the VideoState
 * @return 0
 */
static int video_thread(void *arg)
{
    VideoState *is = arg;
    // Allocate the frame that receives the decoded data
    AVFrame *frame = av_frame_alloc();
    double pts;
    double duration;
    int ret;
    AVRational tb = is->video_st->time_base;
    AVRational frame_rate = av_guess_frame_rate(is->ic, is->video_st, NULL);

#if CONFIG_AVFILTER
    AVFilterGraph *graph = NULL;
    AVFilterContext *filt_out = NULL, *filt_in = NULL;
    int last_w = 0;
    int last_h = 0;
    enum AVPixelFormat last_format = -2;
    int last_serial = -1;
    int last_vfilter_idx = 0;
#endif

    if (!frame)
        return AVERROR(ENOMEM);

    for (;;) {
        // Get one decoded video frame. A negative value ends the thread;
        // it is returned when the packet queue has been aborted.
        ret = get_video_frame(is, frame);
        if (ret < 0)
            goto the_end;
        if (!ret)
            continue;

#if CONFIG_AVFILTER
        if (   last_w != frame->width
            || last_h != frame->height
            || last_format != frame->format
            || last_serial != is->viddec.pkt_serial
            || last_vfilter_idx != is->vfilter_idx) {
            av_log(NULL, AV_LOG_DEBUG,
                   "Video frame changed from size:%dx%d format:%s serial:%d to size:%dx%d format:%s serial:%d\n",
                   last_w, last_h,
                   (const char *)av_x_if_null(av_get_pix_fmt_name(last_format), "none"), last_serial,
                   frame->width, frame->height,
                   (const char *)av_x_if_null(av_get_pix_fmt_name(frame->format), "none"), is->viddec.pkt_serial);
            avfilter_graph_free(&graph);
            graph = avfilter_graph_alloc();
            if (!graph) {
                ret = AVERROR(ENOMEM);
                goto the_end;
            }
            graph->nb_threads = filter_nbthreads;
            if ((ret = configure_video_filters(graph, is, vfilters_list ? vfilters_list[is->vfilter_idx] : NULL, frame)) < 0) {
                SDL_Event event;
                event.type = FF_QUIT_EVENT;
                event.user.data1 = is;
                SDL_PushEvent(&event);
                goto the_end;
            }
            filt_in  = is->in_video_filter;
            filt_out = is->out_video_filter;
            last_w = frame->width;
            last_h = frame->height;
            last_format = frame->format;
            last_serial = is->viddec.pkt_serial;
            last_vfilter_idx = is->vfilter_idx;
            frame_rate = av_buffersink_get_frame_rate(filt_out);
        }

        ret = av_buffersrc_add_frame(filt_in, frame);
        if (ret < 0)
            goto the_end;

        while (ret >= 0) {
            is->frame_last_returned_time = av_gettime_relative() / 1000000.0;

            ret = av_buffersink_get_frame_flags(filt_out, frame, 0);
            if (ret < 0) {
                if (ret == AVERROR_EOF)
                    is->viddec.finished = is->viddec.pkt_serial;
                ret = 0;
                break;
            }

            is->frame_last_filter_delay = av_gettime_relative() / 1000000.0 - is->frame_last_returned_time;
            if (fabs(is->frame_last_filter_delay) > AV_NOSYNC_THRESHOLD / 10.0)
                is->frame_last_filter_delay = 0;
            tb = av_buffersink_get_time_base(filt_out);
#endif
            // Display duration of one frame: the inverse of the frame rate
            duration = (frame_rate.num && frame_rate.den ? av_q2d((AVRational){frame_rate.den, frame_rate.num}) : 0);
            pts = (frame->pts == AV_NOPTS_VALUE) ? NAN : frame->pts * av_q2d(tb);
            // Put the decoded frame into the frame queue
            ret = queue_picture(is, frame, pts, duration, frame->pkt_pos, is->viddec.pkt_serial);
            av_frame_unref(frame);
#if CONFIG_AVFILTER
            if (is->videoq.serial != is->viddec.pkt_serial)
                break;
        }
#endif

        if (ret < 0)
            goto the_end;
    }
the_end:
#if CONFIG_AVFILTER
    avfilter_graph_free(&graph);
#endif
    av_frame_free(&frame);
    return 0;
}
```
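The two timestamp calculations near the end of the loop are easy to overlook, so here is a minimal standalone sketch of them. It does not use FFmpeg: `Rational`, `NO_PTS`, `q2d`, `frame_duration`, and `pts_seconds` are hypothetical stand-ins for `AVRational`, `AV_NOPTS_VALUE`, `av_q2d`, and the two inline expressions in video_thread.

```c
#include <assert.h>
#include <math.h>
#include <stdint.h>

/* Hypothetical stand-in for FFmpeg's AVRational. */
typedef struct Rational { int num, den; } Rational;

/* Stand-in for AV_NOPTS_VALUE ("this frame has no timestamp"). */
#define NO_PTS INT64_MIN

/* Rational to double, in the spirit of av_q2d(). */
static double q2d(Rational q)
{
    assert(q.den != 0);
    return q.num / (double)q.den;
}

/* Seconds one frame stays on screen: 1 / frame_rate, or 0 if the rate is unknown. */
static double frame_duration(Rational frame_rate)
{
    if (!frame_rate.num || !frame_rate.den)
        return 0.0;
    return q2d((Rational){frame_rate.den, frame_rate.num});
}

/* Presentation time in seconds: pts counted in time_base units, or NAN if unset. */
static double pts_seconds(int64_t pts, Rational time_base)
{
    return pts == NO_PTS ? NAN : pts * q2d(time_base);
}
```

With a 25 fps stream, `frame_duration` gives 0.04 seconds; with a time base of 1/90000, a pts of 180000 corresponds to 2 seconds.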

Let's see what get_video_frame does:

```c
/**
 * Get one decoded picture.
 * @param is
 * @param frame
 * @return 1 if a frame was obtained, 0 if not (or it was dropped), negative on abort
 */
static int get_video_frame(VideoState *is, AVFrame *frame)
{
    int got_picture;

    // Get decoded data
    if ((got_picture = decoder_decode_frame(&is->viddec, frame, NULL)) < 0)
        return -1;

    // Decide whether the frame should be dropped, e.g. when the decoded
    // picture is already behind the audio that is being played
    if (got_picture) {
        double dpts = NAN;

        if (frame->pts != AV_NOPTS_VALUE)
            // pts in seconds
            dpts = av_q2d(is->video_st->time_base) * frame->pts;

        frame->sample_aspect_ratio = av_guess_sample_aspect_ratio(is->ic, is->video_st, frame);

        // Drop only if framedrop is forced, or enabled while the master clock is not the video clock
        if (framedrop>0 || (framedrop && get_master_sync_type(is) != AV_SYNC_VIDEO_MASTER)) {
            if (frame->pts != AV_NOPTS_VALUE) {
                // diff is the distance between this frame's pts and the master clock;
                // a negative value means the frame is already late
                double diff = dpts - get_master_clock(is);
                if (!isnan(diff) && fabs(diff) < AV_NOSYNC_THRESHOLD &&
                    diff - is->frame_last_filter_delay < 0 &&
                    is->viddec.pkt_serial == is->vidclk.serial &&
                    is->videoq.nb_packets) {
                    is->frame_drops_early++;
                    av_frame_unref(frame);
                    got_picture = 0;
                }
            }
        }
    }

    return got_picture;
}
```
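To make the early-drop condition in get_video_frame easier to read, the sketch below isolates it as a pure function. The name `should_drop_early` and its parameters are hypothetical; only the threshold value 10.0 is taken from `AV_NOSYNC_THRESHOLD` in ffplay.c.

```c
#include <assert.h>
#include <math.h>
#include <stdbool.h>

/* Same value as AV_NOSYNC_THRESHOLD in ffplay.c. */
#define NOSYNC_THRESHOLD 10.0

/*
 * Returns true when a decoded frame should be dropped before it is queued.
 * All parameter names are illustrative:
 *   frame_pts      - frame timestamp in seconds (NAN if unknown)
 *   master_clock   - current value of the master clock in seconds
 *   filter_delay   - time the last frame spent in the filter graph
 *   same_serial    - decoder serial equals the video clock serial
 *   packets_queued - packets still waiting in the video packet queue
 */
static bool should_drop_early(double frame_pts, double master_clock,
                              double filter_delay, bool same_serial,
                              int packets_queued)
{
    assert(packets_queued >= 0);
    double diff = frame_pts - master_clock;   /* negative: frame is late */

    return !isnan(diff)
        && diff > -NOSYNC_THRESHOLD && diff < NOSYNC_THRESHOLD /* |diff| small enough to trust */
        && diff - filter_delay < 0            /* late even after filter delay */
        && same_serial                        /* not in the middle of a serial change */
        && packets_queued > 0;                /* more data is available to catch up with */
}
```

Note the last condition: a late frame is dropped only when more packets are waiting, so the player does not throw away the only picture it has.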

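This function in turn calls decoder_decode_frame to obtain a decoded frame, and then uses the sync clock to decide whether the frame should be dropped. Next, let's look at decoder_decode_frame: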

```c
static int decoder_decode_frame(Decoder *d, AVFrame *frame, AVSubtitle *sub) {
    int ret = AVERROR(EAGAIN);

    for (;;) {
        // Only receive frames while the decoder's serial matches the packet queue's serial
        if (d->queue->serial == d->pkt_serial) {
            do {
                // Abort requested: return -1
                if (d->queue->abort_request)
                    return -1;

                switch (d->avctx->codec_type) {
                    case AVMEDIA_TYPE_VIDEO:
                        // Try to receive one decoded frame
                        ret = avcodec_receive_frame(d->avctx, frame);
                        if (ret >= 0) {
                            // Pick the frame pts according to decoder_reorder_pts
                            if (decoder_reorder_pts == -1) {
                                frame->pts = frame->best_effort_timestamp;
                            } else if (!decoder_reorder_pts) {
                                frame->pts = frame->pkt_dts;
                            }
                        }
                        break;
                    case AVMEDIA_TYPE_AUDIO:
                        ret = avcodec_receive_frame(d->avctx, frame);
                        if (ret >= 0) {
                            AVRational tb = (AVRational){1, frame->sample_rate};
                            if (frame->pts != AV_NOPTS_VALUE)
                                frame->pts = av_rescale_q(frame->pts, d->avctx->pkt_timebase, tb);
                            else if (d->next_pts != AV_NOPTS_VALUE)
                                frame->pts = av_rescale_q(d->next_pts, d->next_pts_tb, tb);
                            if (frame->pts != AV_NOPTS_VALUE) {
                                d->next_pts = frame->pts + frame->nb_samples;
                                d->next_pts_tb = tb;
                            }
                        }
                        break;
                }
                if (ret == AVERROR_EOF) {
                    d->finished = d->pkt_serial;
                    // Flush the decoder
                    avcodec_flush_buffers(d->avctx);
                    return 0;
                }
                if (ret >= 0)
                    return 1;
            } while (ret != AVERROR(EAGAIN));
        }

        do {
            if (d->queue->nb_packets == 0)
                // The queue is empty: wake the read thread so it fetches more data
                SDL_CondSignal(d->empty_queue_cond);
            if (d->packet_pending) {
                // Reuse the packet that could not be sent last time
                d->packet_pending = 0;
            } else {
                int old_serial = d->pkt_serial;
                if (packet_queue_get(d->queue, d->pkt, 1, &d->pkt_serial) < 0)
                    return -1;
                if (old_serial != d->pkt_serial) {
                    // Serial changed: flush the decoder and reset its state
                    avcodec_flush_buffers(d->avctx);
                    d->finished = 0;
                    d->next_pts = d->start_pts;
                    d->next_pts_tb = d->start_pts_tb;
                }
            }
            if (d->queue->serial == d->pkt_serial)
                break;
            av_packet_unref(d->pkt);
        } while (1);

        if (d->avctx->codec_type == AVMEDIA_TYPE_SUBTITLE) {
            int got_frame = 0;
            ret = avcodec_decode_subtitle2(d->avctx, sub, &got_frame, d->pkt);
            if (ret < 0) {
                ret = AVERROR(EAGAIN);
            } else {
                if (got_frame && !d->pkt->data) {
                    d->packet_pending = 1;
                }
                ret = got_frame ? 0 : (d->pkt->data ? AVERROR(EAGAIN) : AVERROR_EOF);
            }
            av_packet_unref(d->pkt);
        } else {
            // If sending fails with EAGAIN, keep the packet and retry it on the next pass
            if (avcodec_send_packet(d->avctx, d->pkt) == AVERROR(EAGAIN)) {
                av_log(d->avctx, AV_LOG_ERROR, "Receive_frame and send_packet both returned EAGAIN, which is an API violation.\n");
                d->packet_pending = 1;
            } else {
                av_packet_unref(d->pkt);
            }
        }
    }
}
```

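decoder_decode_frame turns out to be the real core of decoding: video, audio, and subtitles are all decoded through this function. Its main job is to keep taking packets off the queue inside a for loop and to call the FFmpeg API to decode them.

1. First, check whether the serial of the packet queue matches the serial of the decoder:

```c
if (d->queue->serial == d->pkt_serial)
```

If they match, avcodec_receive_frame is called to get a frame, and the frame's pts is then updated.

A question arises here: didn't we say earlier that decoding means calling avcodec_send_packet first and then calling avcodec_receive_frame n times to get the frames? Why does the code start with avcodec_receive_frame?

The reason is that decoder_decode_frame is called over and over and returns as soon as it has one frame, so the decoder may still hold frames produced from packets that were sent during earlier calls. Calling avcodec_receive_frame first drains those frames and prevents the decoder from buffering so much data internally that a new packet cannot be sent.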
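The effect of the receive-first ordering can be illustrated with a toy model. This is only a sketch under simplifying assumptions: `ToyDecoder`, its fixed capacity, and the rule "one packet yields one frame" are all hypothetical and do not reflect how a real FFmpeg decoder buffers data; `TOY_EAGAIN` merely stands in for `AVERROR(EAGAIN)`.

```c
#include <assert.h>

#define TOY_CAPACITY 2      /* hypothetical number of frames the decoder can hold */
#define TOY_EAGAIN   (-11)  /* stand-in for AVERROR(EAGAIN) */

typedef struct ToyDecoder { int buffered; } ToyDecoder;

/* Accepts a packet unless the internal buffer is full. */
static int toy_send_packet(ToyDecoder *d)
{
    assert(d);
    if (d->buffered >= TOY_CAPACITY)
        return TOY_EAGAIN;          /* output must be drained first */
    d->buffered++;
    return 0;
}

/* Hands out one buffered frame, or EAGAIN when more input is needed. */
static int toy_receive_frame(ToyDecoder *d)
{
    assert(d);
    if (d->buffered == 0)
        return TOY_EAGAIN;
    d->buffered--;
    return 0;
}

/*
 * Receive-first loop: drain until EAGAIN, then send one packet.
 * Returns how many sends were rejected; *frames_out counts received frames.
 */
static int run_receive_first(ToyDecoder *d, int packets, int *frames_out)
{
    int rejected = 0;
    *frames_out = 0;
    for (int i = 0; i < packets; i++) {
        while (toy_receive_frame(d) == 0)
            (*frames_out)++;
        if (toy_send_packet(d) == TOY_EAGAIN)
            rejected++;
    }
    while (toy_receive_frame(d) == 0)   /* drain what is left */
        (*frames_out)++;
    return rejected;
}
```

Even if the toy decoder starts out full, the receive-first loop never sees a rejected send, whereas sending to a full decoder right away fails with `TOY_EAGAIN`.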

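2. Check whether the packet queue still has data; if it is empty, wake the read thread:

```c
if (d->queue->nb_packets == 0)
    // The queue is empty: wake the read thread so it fetches more data
    SDL_CondSignal(d->empty_queue_cond);
```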

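3. Check whether there is a packet that previously failed to be sent to the decoder; if so, handle it first:

```c
if (d->packet_pending) {
    // Reuse the packet that could not be sent last time
    d->packet_pending = 0;
}
```

This is the packet that was kept when `avcodec_send_packet` returned `AVERROR(EAGAIN)`.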

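4. If there is no pending packet, get one from the queue with packet_queue_get. If the serial of the new packet differs from the previous one, the decoder is flushed and its state is reset:

```c
int old_serial = d->pkt_serial;
if (packet_queue_get(d->queue, d->pkt, 1, &d->pkt_serial) < 0)
    return -1;
if (old_serial != d->pkt_serial) {
    // Serial changed: flush the decoder and reset its state
    avcodec_flush_buffers(d->avctx);
    d->finished = 0;
    d->next_pts = d->start_pts;
    d->next_pts_tb = d->start_pts_tb;
}
```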
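The serial bookkeeping in this step can be sketched without FFmpeg. In ffplay the queue serial changes when the packet queue is flushed, for example after a seek. The types and the function `accept_packet` below are hypothetical; `flush_count` only counts how often `avcodec_flush_buffers` would have been called.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical packet: an id plus the serial it was queued under. */
typedef struct ToyPacket { int id; int serial; } ToyPacket;

/* Hypothetical decoder state: the last serial seen and a flush counter. */
typedef struct ToyDecoderState {
    int pkt_serial;
    int flush_count;
} ToyDecoderState;

/*
 * Mirrors the serial logic of step 4:
 *  - a packet with a new serial triggers a flush and updates pkt_serial;
 *  - the packet is decoded only if its serial equals the queue's current
 *    serial, otherwise it is stale and must be discarded.
 */
static bool accept_packet(ToyDecoderState *d, const ToyPacket *pkt, int queue_serial)
{
    assert(d && pkt);
    if (pkt->serial != d->pkt_serial) {
        d->pkt_serial = pkt->serial;
        d->flush_count++;            /* stands in for avcodec_flush_buffers() */
    }
    return d->pkt_serial == queue_serial;
}
```

A packet that was queued before the serial changed is rejected without a flush, the first packet with the new serial is accepted after exactly one flush, and later packets with the same serial pass straight through.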

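5. Send the packet to the decoder. If the return value is `AVERROR(EAGAIN)`, keep the packet so that it is sent first on the next pass.

This also explains why step 1 calls `avcodec_receive_frame` first: draining the decoder's output makes room so that the packet can be sent successfully.

```c
// If sending fails with EAGAIN, keep the packet and retry it on the next pass
if (avcodec_send_packet(d->avctx, d->pkt) == AVERROR(EAGAIN)) {
    av_log(d->avctx, AV_LOG_ERROR, "Receive_frame and send_packet both returned EAGAIN, which is an API violation.\n");
    d->packet_pending = 1;
} else {
    av_packet_unref(d->pkt);
}
```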
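Steps 3 to 5 together guarantee that a rejected packet is never lost. The sketch below models just that guarantee; `Feeder` and `feed_once` are hypothetical names, and whether the decoder accepts a packet is passed in as a flag instead of coming from a real `avcodec_send_packet` call.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical feeder state, loosely modelled on the Decoder fields used above. */
typedef struct Feeder {
    int  pkt_id;          /* packet currently held (like d->pkt) */
    bool packet_pending;  /* true if pkt_id still has to be sent */
    int  next_id;         /* next packet the queue would deliver */
} Feeder;

/*
 * One pass of "pick a packet, try to send it".
 * Returns the id of the packet that was offered to the decoder.
 */
static int feed_once(Feeder *f, bool decoder_accepts)
{
    assert(f);
    if (f->packet_pending) {
        f->packet_pending = false;     /* retry the packet kept earlier */
    } else {
        f->pkt_id = f->next_id++;      /* stands in for packet_queue_get() */
    }
    if (!decoder_accepts)
        f->packet_pending = true;      /* EAGAIN: keep it for the next pass */
    return f->pkt_id;
}
```

If the decoder accepts, rejects, accepts, and accepts in four consecutive passes, the packets offered are 0, 1, 1, 2: packet 1 is offered again instead of being skipped.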
