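While setting up a k8s cluster I ran into many pitfalls. Most of them came from the network plugin, so they were mainly problems with steps on the master node. I went through the whole process again and organized my notes.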

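Table of Contents

Virtual machine preparation

Virtual machine

System version information

Change the mirror address

Configure a static IP

Disable the firewall and swap

Forward IPv4 and let iptables see bridged traffic

k8s cluster installation

Install the container runtime containerd

Configure the systemd cgroup driver

Install kubeadm, kubelet and kubectl

Install the services

Initialization (master node only)

Result of a successful initialization

Follow the prompts

Post-initialization checks

Install the network plugin

Troubleshooting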

Virtual machine preparation

Virtual machine

System version information

[root@k8smaster ~]# hostnamectl

Static hostname: k8smaster

Icon name: computer-vm

Chassis: vm

Machine ID: 58f72fdf6d904baab18787a82e3d7dce

Boot ID: 38d7be129cb04282921c6d8e88d420dc

Virtualization: vmware

Operating System: CentOS Linux 7 (Core)

CPE OS Name: cpe:/o:centos:centos:7

Kernel: Linux 3.10.0-1160.71.1.el7.x86_64

Architecture: x86-64

Change the mirror address

Reference: Notes on handling "yum command not recognized" on a CentOS 7 virtual machine (CSDN blog)
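CentOS 7 reached end of life on June 30, 2024, and its default mirrors no longer serve packages, which is a common reason yum suddenly stops working on these VMs. A minimal sketch of the widely circulated fix, assuming the stock CentOS-Base.repo layout and vault.centos.org as the replacement host (an Aliyun or other mirror works the same way with a different URL); the function name point_repo_to_vault is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: repoint a stock CentOS 7 .repo file at vault.centos.org.
# Assumes active "mirrorlist=" lines and commented "#baseurl=http://mirror.centos.org/..."
# lines, as shipped in CentOS-Base.repo. Requires GNU sed for in-place editing.
point_repo_to_vault() {
    local repo_file="$1"
    cp "$repo_file" "$repo_file.bak"   # keep a backup of the original file
    sed -i \
        -e 's|^mirrorlist=|#mirrorlist=|' \
        -e 's|^#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|' \
        "$repo_file"
}
```

Usage: point_repo_to_vault /etc/yum.repos.d/CentOS-Base.repo, then rebuild the cache with yum clean all && yum makecache.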

Install commonly used command-line tools

yum install -y net-tools vim wget

Configure a static IP

# After editing the file below, restart the network service to apply the change:

systemctl restart network

# vim /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE="Ethernet"

PROXY_METHOD="none"

BROWSER_ONLY="no"

BOOTPROTO="static"

DEFROUTE="yes"

IPV4_FAILURE_FATAL="no"

IPV6INIT="yes"

IPV6_AUTOCONF="yes"

IPV6_DEFROUTE="yes"

IPV6_FAILURE_FATAL="no"

IPV6_ADDR_GEN_MODE="stable-privacy"

NAME="ens33"

DEVICE="ens33"

ONBOOT="yes"

IPADDR=192.168.110.143

NETMASK=255.255.255.0

GATEWAY=192.168.110.2

DNS1=8.8.8.8

DNS2=8.8.4.4

UUID="0539496e-433b-4973-9618-d721fb5592da"

3. Change the hostname and /etc/hosts

hostnamectl set-hostname xxxxx # change the hostname permanently

References:

[Kubernetes] (K8S) Detailed tutorial on completely uninstalling Kubernetes (CSDN blog)

Install and Set Up kubectl on Linux | Kubernetes

Installing kubeadm | Kubernetes

Disable the firewall and swap

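1. Make sure the MAC address and product_uuid are unique on every node

- You can get the MAC addresses of the network interfaces with ip link or ifconfig -a.

- You can check the product_uuid with sudo cat /sys/class/dmi/id/product_uuid.

Hardware devices generally have unique addresses, but some virtual machines may end up with duplicate values. Kubernetes uses these values to uniquely identify the nodes in the cluster; if they are not unique on every node, the installation may fail.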
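With several cloned VMs, comparing these values by eye is easy to get wrong. A minimal sketch, assuming you collect one "<node> <value>" line per node (for example the output of echo "$(hostname) $(sudo cat /sys/class/dmi/id/product_uuid)" run on each node) into a single list; the helper find_duplicate_ids is hypothetical and prints every value that appears on more than one node.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report identifiers (product_uuid or MAC) shared by several nodes.
# Input on stdin: one "<node> <value>" pair per line, gathered from every node.
# Output: "<value>: <node> <node> ..." for each duplicated value; nothing if all unique.
find_duplicate_ids() {
    awk '{ count[$2]++; nodes[$2] = nodes[$2] " " $1 }
         END { for (v in count) if (count[v] > 1) print v ":" nodes[v] }'
}
```

Usage: find_duplicate_ids < ids.txt; empty output means every value is unique. The same function works for lists of MAC addresses.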

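2. On all nodes, turn off the firewall and SELinux, and disable swap

- Swap configuration. By default the kubelet fails to start if it detects swap memory on the node. The kubelet has supported swap since v1.22. Since v1.28, swap is supported only with cgroup v2; the kubelet's NodeSwap feature gate is beta but disabled by default.

- If the kubelet is not properly configured to use swap, you must disable swap. For example, sudo swapoff -a disables swap temporarily. To keep the change across reboots, make sure swap is disabled in config files such as /etc/fstab or systemd.swap, depending on how your system is configured.

Source: Installing kubeadm | Kubernetes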

systemctl stop firewalld # stop the firewall

systemctl disable firewalld # do not start it at boot

# Turn off swap

swapoff -a # swap must be off; this does not survive a reboot

sed -ri 's/.*swap.*/#&/' /etc/fstab # disable swap permanently
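The sed command above comments out every line that merely contains the word swap, including lines that are already commented. A slightly more targeted sketch, assuming a standard fstab where the third field is the filesystem type; disable_swap_in_fstab is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Only uncommented lines whose third field (filesystem type) is "swap" are changed;
# comments and other mounts are left untouched. A backup is kept as <file>.bak.
disable_swap_in_fstab() {
    local fstab="$1"
    cp "$fstab" "$fstab.bak"
    awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
        "$fstab.bak" > "$fstab"
}
```

Usage: disable_swap_in_fstab /etc/fstab && swapoff -a; afterwards swapon --show should print nothing and free -h should report 0 swap.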

The following commands apply to Kubernetes 1.28.

# Set SELinux to permissive mode (effectively disabling it)

sudo setenforce 0

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

Forward IPv4 and let iptables see bridged traffic

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

# Set the required sysctl parameters; they persist across reboots

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

# Apply the sysctl parameters without rebooting

sudo sysctl --system

Verify that the br_netfilter and overlay modules are loaded by running:

lsmod | grep br_netfilter

lsmod | grep overlay

Verify that the net.bridge.bridge-nf-call-iptables, net.bridge.bridge-nf-call-ip6tables and net.ipv4.ip_forward system variables are set to 1 in your sysctl config by running:

sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward
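Reading this output by eye works, but a missing key is easy to overlook: if br_netfilter is not loaded, sysctl prints an error on stderr and the bridge keys simply do not appear on stdout. A minimal sketch, assuming the usual "key = value" output format; check_sysctl_output is a hypothetical helper that fails if any value is not 1 or if the number of keys differs from what was expected.

```shell
#!/usr/bin/env bash
# Hypothetical helper: validate "key = value" lines printed by sysctl on stdin.
# Fails (exit 1) if any value is not 1, or if fewer/more lines than expected arrive,
# e.g. when a bridge key is missing because br_netfilter is not loaded.
check_sysctl_output() {
    local expected="$1"
    awk -F' = ' -v expected="$expected" '
        $2 != "1" { print "not set to 1: " $1; bad = 1 }
        END {
            if (NR != expected) { print "expected " expected " keys, got " NR; bad = 1 }
            exit bad
        }'
}
```

Usage: sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward | check_sysctl_output 3 && echo OK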

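k8s cluster installation

Unless stated otherwise, run each step on all nodes.

Install the container runtime containerd

The container runtime requirements differ between Kubernetes versions. In short, the official docs say that v1.24 brought a major change: the built-in Docker integration (dockershim) was removed.

Container Runtimes | Kubernetes

Reference: Two ways to install the lightweight container management tool containerd - 只为心情愉悦 - cnblogs (博客园)

Remove any containerd already installed on this machine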

yum remove containerd.io

yum install -y yum-utils

# Add the Docker yum repository

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Install containerd

yum install -y containerd.io

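After the installation succeeds, move the packaged default configuration out of the way:

mv /etc/containerd/config.toml /tmp

Regenerate the full default containerd configuration, then set the parameters described below in it: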

containerd config default > /etc/containerd/config.toml

Configure the systemd cgroup driver

To use the systemd cgroup driver with runc, set the following in /etc/containerd/config.toml:

# vi /etc/containerd/config.toml

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]

...

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

SystemdCgroup = true

# Override the sandbox (pause) image

[plugins."io.containerd.grpc.v1.cri"]

# sandbox_image = "registry.k8s.io/pause:3.2" # comment out the default

sandbox_image = "registry.aliyuncs.com/k8sxio/pause:3.9" # replace with this

Reference: k8s installation guide for v1.28.10 (kubeadm 1.28.10), CSDN blog
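Both edits can be scripted instead of made by hand in vi. A minimal sketch, assuming a config.toml freshly generated by containerd config default (which contains SystemdCgroup = false and a single sandbox_image line) and GNU sed; patch_containerd_config is a hypothetical helper, and the pause image defaults to the value used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply the two config.toml edits above with GNU sed.
# Assumes a file generated by "containerd config default", i.e. it contains
# "SystemdCgroup = false" and exactly one "sandbox_image = ..." line.
patch_containerd_config() {
    local config="$1"
    local pause_image="${2:-registry.aliyuncs.com/k8sxio/pause:3.9}"
    sed -i \
        -e 's/SystemdCgroup = false/SystemdCgroup = true/' \
        -e "s#sandbox_image = \".*\"#sandbox_image = \"${pause_image}\"#" \
        "$config"
}
```

Usage: patch_containerd_config /etc/containerd/config.toml, then systemctl restart containerd and confirm with grep -nE 'SystemdCgroup|sandbox_image' /etc/containerd/config.toml.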

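Restart containerd: systemctl restart containerd

Check its status: systemctl status containerd

Enable it at boot: systemctl enable containerd

Note: if any errors are reported, fix them first; otherwise they will cause problems in later steps.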

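Install kubeadm, kubelet and kubectl

Install the services

You need to install the following packages on every machine:

- kubeadm: the command used to bootstrap the cluster.

- kubelet: runs on every node in the cluster and starts pods and containers.

- kubectl: the command-line tool for talking to the cluster.

Install with yum from a specified package repository, pinning version v1.28.15.

# This overwrites any existing configuration in /etc/yum.repos.d/kubernetes.repo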

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://pkgs.k8s.io/core:/stable:/v1.28/rpm/

enabled=1

gpgcheck=1

gpgkey=https://pkgs.k8s.io/core:/stable:/v1.28/rpm/repodata/repomd.xml.key

EOF

--------- Configure only one of these repositories; if it fails, try another one

cat <<EOF | tee /etc/yum.repos.d/kubernetes.repo

[Kubernetes]

name=Kubernetes v1.28 (Stable) (rpm)

type=rpm-md

baseurl=https://download.opensuse.org/repositories/isv:/kubernetes:/core:/stable:/v1.28/rpm/

gpgcheck=1

gpgkey=https://download.opensuse.org/repositories/isv:/kubernetes:/core:/stable:/v1.28/rpm/repodata/repomd.xml.key

enabled=1

EOF

-----------------------

cat <<EOF | tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.28/rpm/

enabled=1

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.28/rpm/repodata/repomd.xml.key

EOF

Original article: https://blog.csdn.net/weixin_40136446/article/details/137007671
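The three repository definitions above differ mainly in the base URL, and in each case the GPG key sits at repodata/repomd.xml.key under that URL. A minimal sketch that writes the file for whichever source you are trying, assuming that pattern holds; write_k8s_repo is a hypothetical helper. Like the variants above, it adds no exclude= line, so the plain yum install command works without --disableexcludes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a kubernetes.repo for one of the v1.28 sources above.
# $1: base URL ending in "/rpm/"; the GPG key is assumed to live under
#     repodata/repomd.xml.key of the same URL, as in all three variants.
# $2: target file (defaults to /etc/yum.repos.d/kubernetes.repo, which is overwritten).
write_k8s_repo() {
    local base_url="$1"
    local repo_file="${2:-/etc/yum.repos.d/kubernetes.repo}"
    cat > "$repo_file" <<EOF
[kubernetes]
name=Kubernetes
baseurl=${base_url}
enabled=1
gpgcheck=1
gpgkey=${base_url}repodata/repomd.xml.key
EOF
}
```

Usage: write_k8s_repo "https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.28/rpm/", then yum clean all && yum makecache before installing.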

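# Install command. It may fail with the Aliyun repository as well as with the official one; retry a few times. All three repositories have worked for me at some point.

yum install -y kubelet-1.28.15 kubeadm-1.28.15 kubectl-1.28.15

Note: yum install -y kubelet kubeadm kubectl (without a version) installs the newest 1.28 release, which at the time of writing is 1.28.15.

# If it fails, add --disableexcludes=kubernetes and try again

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

If all of the above fail, run yum update first and then retry the installation.

List the available versions: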

[root@k8smaster2 ~]# yum list kubelet --showduplicates |grep 1.28

kubelet.x86_64 1.28.0-150500.1.1 kubernetes

kubelet.x86_64 1.28.1-150500.1.1 kubernetes

kubelet.x86_64 1.28.2-150500.1.1 kubernetes

kubelet.x86_64 1.28.3-150500.1.1 kubernetes

kubelet.x86_64 1.28.4-150500.1.1 kubernetes

kubelet.x86_64 1.28.5-150500.1.1 kubernetes

kubelet.x86_64 1.28.6-150500.1.1 kubernetes

kubelet.x86_64 1.28.7-150500.1.1 kubernetes

kubelet.x86_64 1.28.8-150500.1.1 kubernetes

kubelet.x86_64 1.28.9-150500.2.1 kubernetes

kubelet.x86_64 1.28.10-150500.1.1 kubernetes

kubelet.x86_64 1.28.11-150500.1.1 kubernetes

kubelet.x86_64 1.28.12-150500.1.1 kubernetes

kubelet.x86_64 1.28.13-150500.1.1 kubernetes

kubelet.x86_64 1.28.14-150500.2.1 kubernetes

kubelet.x86_64 1.28.15-150500.1.1 kubernetes
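Rather than copying the version by hand, the newest patch release can be picked from this list. A minimal sketch, assuming the three-column yum list output shown above; latest_k8s_version is a hypothetical helper. sort -V is used because a plain lexical sort would place 1.28.9 after 1.28.15.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "yum list <pkg> --showduplicates" output on stdin and
# print the newest version within the given minor release (e.g. 1.28 -> 1.28.15).
latest_k8s_version() {
    local minor="$1"
    # Column 2 looks like "1.28.15-150500.1.1"; keep the part before the first "-".
    awk -v prefix="${minor}." 'index($2, prefix) == 1 { split($2, v, "-"); print v[1] }' \
        | sort -uV | tail -n 1   # version sort: 1.28.9 < 1.28.10 < 1.28.15
}
```

Usage: v=$(yum list kubelet --showduplicates | latest_k8s_version 1.28) && yum install -y kubelet-$v kubeadm-$v kubectl-$v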

Appending the architecture suffix .x86_64 to the package names made the installation succeed:

yum install -y kubelet.x86_64

yum install -y kubeadm.x86_64

yum install -y kubectl.x86_64

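# Enable at boot and start

Enable at boot: systemctl enable kubelet

Start the service: systemctl start kubelet # the service cannot run normally yet; it keeps restarting until kubeadm init gives it a configuration

Original article: https://blog.csdn.net/qq_36838700/article/details/141165373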

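Initialization (master node only)

Method 1:

Run: kubectl edit cm kubelet-config -n kube-system (this needs a running API server, so it only applies to a cluster that has already been initialized)

Change or add: cgroupDriver: systemd

Edit /etc/sysconfig/kubelet:

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"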

kubeadm init --apiserver-advertise-address=192.168.110.135 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version=1.28.15 --service-cidr=10.96.0.0/12 --pod-network-cidr=10.244.0.0/16 --cri-socket=unix:///run/containerd/containerd.sock
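The kubelet and containerd must use the same cgroup driver; the Kubernetes docs note that the kubelet fails otherwise. A minimal check, assuming the default file locations (/etc/containerd/config.toml, and /var/lib/kubelet/config.yaml, which kubeadm init writes); check_cgroup_drivers is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that containerd and the kubelet both use the systemd
# cgroup driver. Prints what is wrong and returns 1 on a mismatch.
check_cgroup_drivers() {
    local containerd_conf="${1:-/etc/containerd/config.toml}"
    local kubelet_conf="${2:-/var/lib/kubelet/config.yaml}"
    local status=0
    if ! grep -Eq '^[[:space:]]*SystemdCgroup = true' "$containerd_conf"; then
        echo "containerd: SystemdCgroup is not true in $containerd_conf"
        status=1
    fi
    if ! grep -Eq '^cgroupDriver: systemd' "$kubelet_conf"; then
        echo "kubelet: cgroupDriver is not systemd in $kubelet_conf"
        status=1
    fi
    return "$status"
}
```

Usage: run check_cgroup_drivers on the master after kubeadm init; no output and exit status 0 mean both sides use systemd.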

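Method 2: generate the default configuration file and modify it

# Generate the default kubeadm configuration file

kubeadm config print init-defaults --component-configs KubeletConfiguration > kubeadm.yaml

After editing the file as shown below, run: kubeadm init --config kubeadm.yaml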

# Most settings can stay unchanged

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.110.135 # change to your own master node IP
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock # may need changing: containerd was installed as the runtime above, so point this at its socket
  imagePullPolicy: IfNotPresent
  name: k8smaster ## master node name
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers ## switch to the Aliyun mirror
kind: ClusterConfiguration
kubernetesVersion: 1.28.15 ## must match the version you actually installed
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
scheduler: {}
---
apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.crt
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 0s
    cacheUnauthorizedTTL: 0s
cgroupDriver: systemd ## change to systemd, or add the line if it is missing
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
containerRuntimeEndpoint: ""
cpuManagerReconcilePeriod: 0s
evictionPressureTransitionPeriod: 0s
fileCheckFrequency: 0s
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 0s
imageMinimumGCAge: 0s
kind: KubeletConfiguration
logging:
  flushFrequency: 0
  options:
    json:
      infoBufferSize: "0"
  verbosity: 0
memorySwap: {}
nodeStatusReportFrequency: 0s
nodeStatusUpdateFrequency: 0s
rotateCertificates: true
runtimeRequestTimeout: 0s
shutdownGracePeriod: 0s
shutdownGracePeriodCriticalPods: 0s
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 0s
syncFrequency: 0s
volumeStatsAggPeriod: 0s
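The edits marked in the file above can also be applied with sed. A minimal sketch, assuming GNU sed, the two-space indentation of the generated file, and that it does not yet contain a podSubnet line; patch_kubeadm_config is a hypothetical helper whose defaults are the values used in this guide. If the generated file has no cgroupDriver line, add it by hand, since the sed only rewrites an existing one.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply the edits shown above to the output of
# "kubeadm config print init-defaults --component-configs KubeletConfiguration".
# Assumes GNU sed, the generated two-space indentation, and no existing podSubnet line.
patch_kubeadm_config() {
    local file="$1" ip="$2" node_name="$3"
    local version="${4:-1.28.15}"
    local repo="${5:-registry.aliyuncs.com/google_containers}"
    local pod_cidr="${6:-10.244.0.0/16}"
    sed -i \
        -e "s|^  advertiseAddress: .*|  advertiseAddress: ${ip}|" \
        -e "s|^  name: .*|  name: ${node_name}|" \
        -e "s|^imageRepository: .*|imageRepository: ${repo}|" \
        -e "s|^kubernetesVersion: .*|kubernetesVersion: ${version}|" \
        -e "s|^\(  serviceSubnet: .*\)|\1\n  podSubnet: ${pod_cidr}|" \
        -e "s|^cgroupDriver: .*|cgroupDriver: systemd|" \
        "$file"
}
```

Usage: patch_kubeadm_config kubeadm.yaml 192.168.110.135 k8smaster, then review the file before running kubeadm init --config kubeadm.yaml.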

If you used the configuration file, you can pre-pull the images with the following command first and then run the init command:

[root@k8smaster2 ~]# kubeadm config images pull --config kubeadm.yaml

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.28.15

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.28.15

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.28.15

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.28.15

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.9

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.15-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.10.1

[root@k8smaster2 ~]#

Result of a successful initialization

Follow the prompts

Original article: https://blog.csdn.net/qq_36838700/article/details/141165373

###### Continue as prompted ######

## Step 1 after init: copy the kubeconfig file

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

## Or, as root, export the environment variable

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

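If the join command has expired (the bootstrap token ttl is 24h0m0s in the configuration above), print a new command for adding nodes to the cluster with: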

kubeadm token create --print-join-command

Post-initialization checks

Check the service status

systemctl status kubelet

[root@k8smaster2 ~]# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: active (running) since 三 2025-01-08 18:05:03 CST; 3min 15s ago

Docs: https://kubernetes.io/docs/

Main PID: 21616 (kubelet)

Tasks: 11

Memory: 34.9M

CGroup: /system.slice/kubelet.service

└─21616 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config...

1月 08 18:07:33 k8smaster2 kubelet[21616]: E0108 18:07:33.542971 21616 kubelet.go:2874] "Container runtime network not ready" networkReady="NetworkReady=false...nitialized"

1月 08 18:07:38 k8smaster2 kubelet[21616]: E0108 18:07:38.545528 21616 kubelet.go:2874] "Container runtime network not ready" networkReady="NetworkReady=false...nitialized"

1月 08 18:07:43 k8smaster2 kubelet[21616]: E0108 18:07:43.546429 21616 kubelet.go:2874] "Container runtime network not ready" networkReady="NetworkReady=false...nitialized"

1月 08 18:07:48 k8smaster2 kubelet[21616]: E0108 18:07:48.548064 21616 kubelet.go:2874] "Container runtime network not ready" networkReady="NetworkReady=false...nitialized"

1月 08 18:07:53 k8smaster2 kubelet[21616]: E0108 18:07:53.549266 21616 kubelet.go:2874] "Container runtime network not ready" networkReady="NetworkReady=false...nitialized"

1月 08 18:07:58 k8smaster2 kubelet[21616]: E0108 18:07:58.550236 21616 kubelet.go:2874] "Container runtime network not ready" networkReady="NetworkReady=false...nitialized"

1月 08 18:08:03 k8smaster2 kubelet[21616]: E0108 18:08:03.551508 21616 kubelet.go:2874] "Container runtime network not ready" networkReady="NetworkReady=false...nitialized"

1月 08 18:08:08 k8smaster2 kubelet[21616]: E0108 18:08:08.554728 21616 kubelet.go:2874] "Container runtime network not ready" networkReady="NetworkReady=false...nitialized"

1月 08 18:08:13 k8smaster2 kubelet[21616]: E0108 18:08:13.556942 21616 kubelet.go:2874] "Container runtime network not ready" networkReady="NetworkReady=false...nitialized"

1月 08 18:08:18 k8smaster2 kubelet[21616]: E0108 18:08:18.559070 21616 kubelet.go:2874] "Container runtime network not ready" networkReady="NetworkReady=false...nitialized"

Hint: Some lines were ellipsized, use -l to show in full.

[root@k8smaster2 ~]#

kubectl get nodes

kubectl get pods -n kube-system

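The node status is NotReady: no network plugin is installed yet, which matches the "Container runtime network not ready" messages in the kubelet log above.

To view a pod's events: kubectl describe po calico-node-2kr9c -n kube-system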

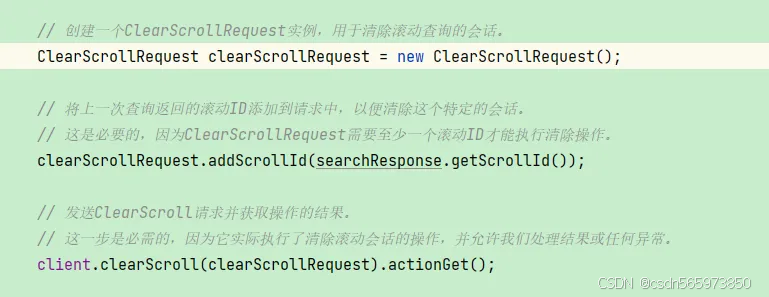

Install the network plugin

# Pull the required images

vi images.sh

#!/bin/bash

images=(

flannel:v0.25.5

flannel-cni-plugin:v1.5.1-flannel1

)

for imageName in "${images[@]}" ; do

ctr -n k8s.io image pull registry.cn-shenzhen.aliyuncs.com/kube-image-dongdong/$imageName

done

chmod 755 images.sh

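Run the script with ./images.sh, then continue with the steps below

# Retag the images with the docker.io names referenced by kube-flannel.yml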

ctr -n k8s.io image tag registry.cn-shenzhen.aliyuncs.com/kube-image-dongdong/flannel-cni-plugin:v1.5.1-flannel1 docker.io/flannel/flannel-cni-plugin:v1.5.1-flannel1

ctr -n k8s.io image tag registry.cn-shenzhen.aliyuncs.com/kube-image-dongdong/flannel:v0.25.5 docker.io/flannel/flannel:v0.25.5

Original article: https://blog.csdn.net/qq_36838700/article/details/141165373 (Complete deployment of a k8s v1.28.0 cluster, CSDN blog)
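The pull script and the two ctr image tag commands can be combined so that every pulled image is retagged in the same loop. A minimal sketch, assuming the third-party mirror namespace and image versions used above; pull_and_tag_flannel and the DRY_RUN switch are hypothetical additions, with DRY_RUN=1 only printing the ctr commands.

```shell
#!/usr/bin/env bash
# Pull the flannel images from the mirror used in images.sh and retag them with the
# docker.io/flannel/... names that kube-flannel.yml references.
# MIRROR and FLANNEL_IMAGES are taken from the steps above; DRY_RUN=1 only prints commands.
MIRROR="registry.cn-shenzhen.aliyuncs.com/kube-image-dongdong"
FLANNEL_IMAGES=(
    "flannel:v0.25.5"
    "flannel-cni-plugin:v1.5.1-flannel1"
)

run_or_echo() {
    if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

pull_and_tag_flannel() {
    local image
    for image in "${FLANNEL_IMAGES[@]}"; do
        run_or_echo ctr -n k8s.io image pull "${MIRROR}/${image}"
        run_or_echo ctr -n k8s.io image tag "${MIRROR}/${image}" "docker.io/flannel/${image}"
    done
}
```

Usage: DRY_RUN=1 pull_and_tag_flannel to preview, then pull_and_tag_flannel to run it; ctr -n k8s.io image ls | grep flannel should then list both names.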

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl apply -f kube-flannel.yml

## Check the pod status: coredns is still Pending and flannel fails with Init:ErrImagePull

[root@k8smaster2 ~]# kubectl get pods -A

NAMESPACE      NAME                                 READY   STATUS              RESTARTS   AGE
kube-flannel   kube-flannel-ds-6sdld                0/1     Init:ErrImagePull   0          75s
kube-system    coredns-66f779496c-5d6sr             0/1     Pending             0          22m
kube-system    coredns-66f779496c-lwdgc             0/1     Pending             0          22m
kube-system    etcd-k8smaster2                      1/1     Running             0          22m
kube-system    kube-apiserver-k8smaster2            1/1     Running             0          22m
kube-system    kube-controller-manager-k8smaster2   1/1     Running             0          22m
kube-system    kube-proxy-vtxcc                     1/1     Running             0          22m
kube-system    kube-scheduler-k8smaster2            1/1     Running             0          22m

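Troubleshooting

View the pod's events

kubectl describe po kube-flannel-ds-6sdld -n kube-flannel

1. The required image version is not available locally (note: the images.sh script above pulled this component, but a different version). The manifest asks for flannel-cni-plugin:v1.6.0-flannel1, and docker.io cannot be reached (connection refused):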

Failed 84s kubelet Failed to pull image "docker.io/flannel/flannel-cni-plugin:v1.6.0-flannel1": failed to pull and unpack image "docker.io/flannel/flannel-cni-plugin:v1.6.0-flannel1": failed to resolve reference "docker.io/flannel/flannel-cni-plugin:v1.6.0-flannel1": failed to do request: Head "https://registry-1.docker.io/v2/flannel/flannel-cni-plugin/manifests/v1.6.0-flannel1": dial tcp 128.242.245.244:443: connect: connection refused

Normal BackOff 44s (x4 over 2m) kubelet Back-off pulling image "docker.io/flannel/flannel-cni-plugin:v1.6.0-flannel1"

Warning Failed 44s (x4 over 2m) kubelet Error: ImagePullBackOff

Normal Pulling 32s (x4 over 3m11s) kubelet Pulling image "docker.io/flannel/flannel-cni-plugin:v1.6.0-flannel1"

Warning Failed 10s (x4 over 2m50s) kubelet Error: ErrImagePull

Warning Failed 10s kubelet Failed to pull image "docker.io/flannel/flannel-cni-plugin:v1.6.0-flannel1": failed to pull and unpack image "docker.io/flannel/flannel-cni-plugin:v1.6.0-flannel1": failed to resolve reference "docker.io/flannel/flannel-cni-plugin:v1.6.0-flannel1": failed to do request: Head "https://registry-1.docker.io/v2/flannel/flannel-cni-plugin/manifests/v1.6.0-flannel1": dial tcp 98.159.108.58:443: connect: connection refused

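Solution:

1. Change the image versions in kube-flannel.yml to match the ones pulled by images.sh.

2. Make the pod use the locally available images instead of pulling them from docker.io.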

vim kube-flannel.yml
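Instead of editing in vim, the image tags can be rewritten with sed. A minimal sketch, assuming the manifest references images as image: docker.io/flannel/<name>:<tag>, as the error messages above show; pin_flannel_images is a hypothetical helper whose defaults are the versions pulled by images.sh. When imagePullPolicy is not set and the tag is not latest, Kubernetes defaults to IfNotPresent, so the kubelet uses the retagged local images.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the flannel image tags in kube-flannel.yml to the
# versions that images.sh pulled, so the pod can start from the local images.
# Assumes image lines of the form "image: docker.io/flannel/<name>:<tag>".
pin_flannel_images() {
    local manifest="$1"
    local flannel_tag="${2:-v0.25.5}"
    local cni_tag="${3:-v1.5.1-flannel1}"
    sed -i \
        -e "s|\(image: docker.io/flannel/flannel-cni-plugin:\).*|\1${cni_tag}|" \
        -e "s|\(image: docker.io/flannel/flannel:\).*|\1${flannel_tag}|" \
        "$manifest"
}
```

Usage: pin_flannel_images kube-flannel.yml && grep -n 'image:' kube-flannel.yml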

After editing, delete the resources and apply the manifest again:

kubectl delete -f kube-flannel.yml

kubectl apply -f kube-flannel.yml

After re-applying, the pods run normally.

Commands for viewing logs

journalctl -xeu kubelet

View details of the failing pod

kubectl describe po calico-node-2kr9c -n kube-system

If the pod status is ErrImagePull or ImagePullBackOff, the image pull failed.

Other references:

kubernetes (1.28) flannel configuration: kubelet cannot pull images (NotReady ImagePullBackOff), plus configuring a private Harbor registry for k8s (CSDN blog)

Upgrading the CentOS 7 kernel to 5.19.0 - sky_cheng - cnblogs (博客园)

Kubernetes [k8s] with containerd: installing 1.27.3 - 1.28.0 (CSDN blog)

This system is not registered with an entitlement server. You can use subscription-manager to regist (CSDN blog)