Advanced Task 4: Deploying and Fine-Tuning the InternVL Multimodal Model in Practice

1 Introduction to InternVL

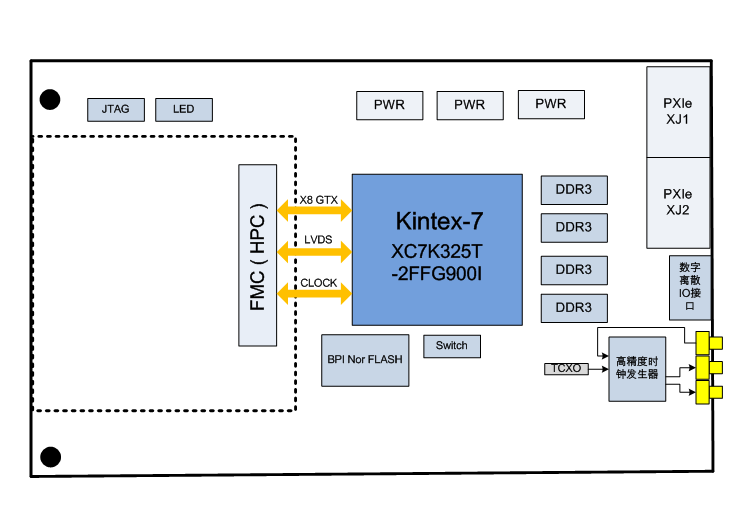

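InternVL is a deep learning model for multimodal tasks, designed to process and understand several kinds of input, such as images and text. It combines a vision model with a language model to carry out cross-modal tasks such as image-text matching and image captioning. By fusing visual features with language information, InternVL aims for stronger performance on multimodal tasks.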

InternVL's vision module is a fine-tuned ViT, and its LLM module is an InternLM model. What sets the vision module apart is Dynamic High Resolution.

1.1 Dynamic High Resolution

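InternVL uses a distinctive preprocessing module: Dynamic High Resolution.

It lets the ViT capture as much fine-grained image detail as possible, which improves the expressiveness of the visual features. An input image is first resized so that its sides are multiples of 448, and is then cropped into regions according to a predefined set of aspect ratios. The details are shown in the figure below: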
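The resize-and-crop step can be sketched in a few lines of Python. This is a simplified illustration, not InternVL's actual preprocessing code: the function name `plan_tiles`, the `max_tiles=12` limit, and the tie-breaking rule (prefer the grid with fewer tiles) are assumptions made for this example.

```python
def plan_tiles(width, height, tile=448, max_tiles=12):
    """Pick a tile grid for an image; return the resize target and crop boxes.

    Hypothetical helper: max_tiles and the tie-break are illustrative assumptions.
    """
    aspect = width / height
    # All (cols, rows) grids that use at most max_tiles tiles, fewest tiles first.
    grids = sorted(
        ((c, r)
         for c in range(1, max_tiles + 1)
         for r in range(1, max_tiles + 1)
         if c * r <= max_tiles),
        key=lambda g: g[0] * g[1],
    )
    # Choose the grid whose aspect ratio is closest to the image's.
    # min() keeps the first best match, i.e. the one with the fewest tiles.
    cols, rows = min(grids, key=lambda g: abs(aspect - g[0] / g[1]))
    # The image is resized to a multiple of the tile size ...
    target_size = (cols * tile, rows * tile)
    # ... and then cut into tile x tile crops (left, top, right, bottom).
    boxes = [
        (c * tile, r * tile, (c + 1) * tile, (r + 1) * tile)
        for r in range(rows)
        for c in range(cols)
    ]
    return target_size, boxes


size, boxes = plan_tiles(1600, 800)
print(size, len(boxes))  # (896, 448) 2
```

A 1600x800 image has aspect ratio 2:1, so the sketch resizes it to 896x448 and produces two 448x448 crops.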

1.2 Pixel Shuffle

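Pixel Shuffle is a common operation in super-resolution tasks; the official PyTorch implementation is nn.PixelShuffle(upscale_factor). It rearranges the elements of a tensor: for an input of shape [B, C·r², H, W] and upscale factor r, the output has shape [B, C, H·r, W·r], so the operation changes both the number of channels and the spatial size of the feature map.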
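To make the rearrangement concrete, here is a minimal pure-Python sketch for a single sample (no batch dimension), using nested lists instead of tensors. It follows the index mapping documented for nn.PixelShuffle; the function name `pixel_shuffle` is chosen for this example, and real code would call the PyTorch layer instead.

```python
def pixel_shuffle(x, r):
    """Rearrange a [C*r*r][H][W] nested list into [C][H*r][W*r].

    Index mapping: out[c][h*r + i][w*r + j] = x[c*r*r + i*r + j][h][w]
    """
    channels_in, height, width = len(x), len(x[0]), len(x[0][0])
    if channels_in % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    channels_out = channels_in // (r * r)
    out = [[[0] * (width * r) for _ in range(height * r)]
           for _ in range(channels_out)]
    for c in range(channels_out):
        for i in range(r):
            for j in range(r):
                # Each group of r*r input channels fills one output channel.
                src = x[c * r * r + i * r + j]
                for h in range(height):
                    for w in range(width):
                        out[c][h * r + i][w * r + j] = src[h][w]
    return out


# Four 1x1 channels become one 2x2 channel.
x = [[[0]], [[1]], [[2]], [[3]]]
print(pixel_shuffle(x, 2))  # [[[0, 1], [2, 3]]]
```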

2 InternVL Deployment and Fine-Tuning in Practice

Main task:

Have InternVL2-2B generate text-to-image prompts; this task requires the VLM to produce a formatted description of an image. InternVL is fine-tuned with XTuner and deployed with LMDeploy.

2.1 Environment Setup and Tool Installation

First, prepare the InternVL2-2B model that is pre-mounted on the development machine:

cd /root

mkdir -p model

# Copy the model

cp -r /root/share/new_models/OpenGVLab/InternVL2-2B /root/model/

Next, use the following commands to set up a virtual environment and install XTuner, LMDeploy, and the other tools:

conda create --name xtuner python=3.10 -y

# Activate the virtual environment (note: all subsequent steps must be run inside it)

conda activate xtuner

# Install the required libraries

conda install pytorch==2.1.2 torchvision==0.16.2 torchaudio==2.1.2 pytorch-cuda=12.1 -c pytorch -c nvidia -y

# Install other dependencies

apt install libaio-dev

pip install transformers==4.39.3

pip install streamlit==1.36.0

pip install flash-attn

# Create a directory for the source code

mkdir -p /root/InternLM/code

cd /root/InternLM/code

# Clone the XTuner source

git clone -b v0.1.23 https://github.com/InternLM/XTuner

# Install XTuner

cd /root/InternLM/code/XTuner

pip install -e '.[deepspeed]'

# Install LMDeploy

pip install lmdeploy==0.5.3

# Verify the installation

xtuner version

# List the available commands

xtuner help
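Beyond the two xtuner commands, a small script can confirm that the pinned versions were actually installed. The following is a sketch using only the standard library; the helper names `installed_versions` and `report` are made up for this example, and it assumes each package is visible to `importlib.metadata` under the distribution name shown (which may not hold for every conda-installed package).

```python
from importlib.metadata import PackageNotFoundError, version

# Versions pinned by the install commands above.
EXPECTED = {
    "torch": "2.1.2",
    "transformers": "4.39.3",
    "streamlit": "1.36.0",
    "lmdeploy": "0.5.3",
}


def installed_versions(names):
    """Map each distribution name to its installed version, or None if absent."""
    result = {}
    for name in names:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


def report(expected=EXPECTED):
    """Return one human-readable status line per expected package."""
    found = installed_versions(expected)
    lines = []
    for name, want in expected.items():
        got = found[name]
        # startswith() tolerates local suffixes such as "2.1.2+cu121".
        ok = got is not None and got.startswith(want)
        lines.append(f"{name}: expected {want}, found {got} [{'ok' if ok else 'MISMATCH'}]")
    return lines


if __name__ == "__main__":
    print("\n".join(report()))
```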

2.2 Preparing the Fine-Tuning Dataset

Next, prepare the fine-tuning dataset. We use the zhongshsh/CLoT-Oogiri-GO dataset from Hugging Face:

@misc{zhong2023clot,

title={Let's Think Outside the Box: Exploring Leap-of-Thought in Large Language Models with Creative Humor Generation},

author={Zhong, Shanshan and Huang, Zhongzhan and Gao, Shanghua and Wen, Wushao and Lin, Liang and Zitnik, Marinka and Zhou, Pan},

journal={arXiv preprint arXiv:2312.02439},

year={2023}

}

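We downloaded the dataset from the official source, deduplicated it, kept only the Chinese samples, and converted it into the format XTuner expects.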
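The preparation steps described above (deduplicate, keep Chinese text, convert) might look roughly like the sketch below. The raw dataset's field layout is not shown in this tutorial, so the input shape (a list of `(image_path, caption)` tuples) and the helper name `to_xtuner_records` are assumptions. The language filter is also only a heuristic: it keeps captions containing CJK ideographs, which Japanese text with kanji would pass as well.

```python
import json
import re

# Prompt used for every sample in this tutorial's dataset.
PROMPT = "<image>\n请你根据这张图片,讲一个脑洞大开的梗"
# Basic CJK Unified Ideographs block; a rough stand-in for "is Chinese".
CJK = re.compile(r"[\u4e00-\u9fff]")


def to_xtuner_records(pairs):
    """Convert (image_path, caption) tuples (hypothetical input) to XTuner-style records."""
    seen = set()
    records = []
    for image_path, caption in pairs:
        caption = caption.strip()
        if not CJK.search(caption):
            continue  # drop captions without any CJK characters
        key = (image_path, caption)
        if key in seen:
            continue  # drop exact duplicates
        seen.add(key)
        records.append({
            "image": image_path,
            "conversations": [
                {"from": "human", "value": PROMPT},
                {"from": "gpt", "value": caption},
            ],
        })
    return records


pairs = [
    ("ex_images/a.jpg", "家长会上,爸爸来晚了"),
    ("ex_images/a.jpg", "家长会上,爸爸来晚了"),  # duplicate, removed
    ("ex_images/b.jpg", "Too late"),               # no CJK text, removed
]
print(json.dumps(to_xtuner_records(pairs), ensure_ascii=False, indent=2))
```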

## First, install the required packages

pip install datasets matplotlib Pillow timm

## Copy the dataset out of the shared directory

cp -r /root/share/new_models/datasets/CLoT_cn_2000 /root/InternLM/datasets/

Next, we can take a quick look at some of the images in the dataset:

The labels for these images are text annotations of the memes, for example:

{

"image": "ex_images/MjxjVcrFf9TFLbr2BKR4Py1L5qAic8K4VzEQAsTph0ztWe9vj3d8DGDdAC3tJV0aiaOrSBcsKpBIXIAh6O1CDXcA.jpg",

"conversations": [

{

"from": "human",

"value": "<image>\n请你根据这张图片,讲一个脑洞大开的梗"

},

{

"from": "gpt",

"value": "果然!大家都会把鼻屎抹在课桌下面"

}

]

},

{

"image": "ex_images/MjxjVcrFf9ReweWibHwWQsM2iaiajJd0GQvFPsMPic79PnZGffSNfdVTQV9Z5J4FW1XciaIibRoosGuIfSPaFBuADCQA.jpg",

"conversations": [

{

"from": "human",

"value": "<image>\n请你根据这张图片,讲一个脑洞大开的梗"

},

{

"from": "gpt",

"value": "你嘴里啃的是什么!我问你嘴里啃的是什么!"

}

]

},

{

"image": "ex_images/007aPnLRgy1hb39z0im50j30ci0el0wm.jpg",

"conversations": [

{

"from": "human",

"value": "<image>\n请你根据这张图片,讲一个脑洞大开的梗"

},

{

"from": "gpt",

"value": "『住手!现在的你还不是那家伙的对手!先撤吧!!"

}

]

}
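Before training, it can be worth checking that every record follows the shape shown in these samples. The checker below is a sketch: the rules are inferred from the examples above rather than taken from an XTuner schema, and `validate_record` is a name invented for this example.

```python
def validate_record(record):
    """Return a list of problems found in one record (an empty list means OK)."""
    problems = []
    image = record.get("image")
    if not isinstance(image, str) or not image:
        problems.append("missing 'image' path")
    turns = record.get("conversations") or []
    if len(turns) < 2 or len(turns) % 2 != 0:
        problems.append("conversations should be human/gpt pairs")
    for index, turn in enumerate(turns):
        # Turns alternate: human first, then gpt.
        expected = "human" if index % 2 == 0 else "gpt"
        if turn.get("from") != expected:
            problems.append(f"turn {index}: expected 'from' == {expected!r}")
        value = turn.get("value")
        if not isinstance(value, str) or not value.strip():
            problems.append(f"turn {index}: empty 'value'")
    if turns and "<image>" not in str(turns[0].get("value", "")):
        problems.append("first human turn lacks the <image> placeholder")
    return problems


sample = {
    "image": "ex_images/007aPnLRgy1hb39z0im50j30ci0el0wm.jpg",
    "conversations": [
        {"from": "human", "value": "<image>\n请你根据这张图片,讲一个脑洞大开的梗"},
        {"from": "gpt", "value": "家长会上,爸爸来晚了"},
    ],
}
print(validate_record(sample))  # []
```

To check the whole file, load it with `json.load` and run `validate_record` on each entry.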

2.3 InternVL Deployment and Inference: Before Fine-Tuning

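We use LMDeploy to run inference on an image. The image we picked is 007aPnLRgy1hb39z0im50j30ci0el0wm.jpg. Use the following command to copy it to a convenient folder for viewing, then look up its entry in the JSON annotation file: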

cp /root/InternLM/datasets/ex_images/007aPnLRgy1hb39z0im50j30ci0el0wm.jpg /root/InternLM/

{

"image": "ex_images/007aPnLRgy1hb39z0im50j30ci0el0wm.jpg",

"conversations": [

{

"from": "human",

"value": "<image>\n请你根据这张图片,讲一个脑洞大开的梗"

},

{

"from": "gpt",

"value": "『住手!现在的你还不是那家伙的对手!先撤吧!!"

}

]

}

We use LMDeploy's built-in pipeline tool for out-of-the-box inference. First, create a new file:

touch /root/InternLM/code/test_lmdeploy.py

cd /root/InternLM/code/

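Its contents are as follows. Note that we use the 2B multimodal model, InternVL2-2B, and that the prompt asks the model to tell a wildly imaginative joke based on the image: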

from lmdeploy import pipeline

from lmdeploy.vl import load_image

pipe = pipeline('/root/model/InternVL2-2B')

image = load_image('/root/InternLM/007aPnLRgy1hb39z0im50j30ci0el0wm.jpg')

response = pipe(('请你根据这张图片,讲一个脑洞大开的梗', image))

print(response.text)

Then run the inference script:

python3 test_lmdeploy.py

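The result is shown below. Before fine-tuning, InternVL can recognize and interpret the image to some extent, but it does not produce the kind of deadpan joke we are looking for: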

2.4 Fine-Tuning InternVL on the Joke Dataset

With the fine-tuning dataset ready, we can now set the fine-tuning parameters. The config file is /root/InternLM/code/XTuner/xtuner/configs/internvl/v2/internvl_v2_internlm2_2b_qlora_finetune.py:

The modified config file is:

# Copyright (c) OpenMMLab. All rights reserved.

from mmengine.hooks import (CheckpointHook, DistSamplerSeedHook, IterTimerHook,

LoggerHook, ParamSchedulerHook)

from mmengine.optim import AmpOptimWrapper, CosineAnnealingLR, LinearLR

from peft import LoraConfig

from torch.optim import AdamW

from transformers import AutoTokenizer

from xtuner.dataset import InternVL_V1_5_Dataset

from xtuner.dataset.collate_fns import default_collate_fn

from xtuner.dataset.samplers import LengthGroupedSampler

from xtuner.engine.hooks import DatasetInfoHook

from xtuner.engine.runner import TrainLoop

from xtuner.model import InternVL_V1_5

from xtuner.utils import PROMPT_TEMPLATE

#######################################################################

# PART 1 Settings #

#######################################################################

# Model

path = '/root/model/InternVL2-2B'

# Data

# data_root = '/root/InternLM/datasets/CLoT_cn_2000/'

data_root = '/root/InternLM/datasets/'

data_path = data_root + 'ex_cn.json'

image_folder = data_root

prompt_template = PROMPT_TEMPLATE.internlm2_chat

max_length = 6656

# Scheduler & Optimizer

batch_size = 4 # per_device

accumulative_counts = 4

dataloader_num_workers = 4

max_epochs = 6

optim_type = AdamW

# official 1024 -> 4e-5

lr = 2e-5

betas = (0.9, 0.999)

weight_decay = 0.05

max_norm = 1 # grad clip

warmup_ratio = 0.03

# Save

save_steps = 1000

save_total_limit = 1 # Maximum checkpoints to keep (-1 means unlimited)

#######################################################################

# PART 2 Model & Tokenizer & Image Processor #

#######################################################################

model = dict(

type=InternVL_V1_5,

model_path=path,

freeze_llm=True,

freeze_visual_encoder=True,

quantization_llm=True, # or False

quantization_vit=False, # or True and uncomment visual_encoder_lora

# comment the following lines if you don't want to use Lora in llm

llm_lora=dict(

type=LoraConfig,

r=128,

lora_alpha=256,

lora_dropout=0.05,

target_modules=None,

task_type='CAUSAL_LM'),

# uncomment the following lines if you want to use Lora in visual encoder # noqa

# visual_encoder_lora=dict(

# type=LoraConfig, r=64, lora_alpha=16, lora_dropout=0.05,

# target_modules=['attn.qkv', 'attn.proj', 'mlp.fc1', 'mlp.fc2'])

)

#######################################################################

# PART 3 Dataset & Dataloader #

#######################################################################

llava_dataset = dict(

type=InternVL_V1_5_Dataset,

model_path=path,

data_paths=data_path,

image_folders=image_folder,

template=prompt_template,

max_length=max_length)

train_dataloader = dict(

batch_size=batch_size,

num_workers=dataloader_num_workers,

dataset=llava_dataset,

sampler=dict(

type=LengthGroupedSampler,

length_property='modality_length',

per_device_batch_size=batch_size * accumulative_counts),

collate_fn=dict(type=default_collate_fn))

#######################################################################

# PART 4 Scheduler & Optimizer #

#######################################################################

# optimizer

optim_wrapper = dict(

type=AmpOptimWrapper,

optimizer=dict(

type=optim_type, lr=lr, betas=betas, weight_decay=weight_decay),

clip_grad=dict(max_norm=max_norm, error_if_nonfinite=False),

accumulative_counts=accumulative_counts,

loss_scale='dynamic',

dtype='float16')

# learning policy

# More information: https://github.com/open-mmlab/mmengine/blob/main/docs/en/tutorials/param_scheduler.md # noqa: E501

param_scheduler = [

dict(

type=LinearLR,

start_factor=1e-5,

by_epoch=True,

begin=0,

end=warmup_ratio * max_epochs,

convert_to_iter_based=True),

dict(

type=CosineAnnealingLR,

eta_min=0.0,

by_epoch=True,

begin=warmup_ratio * max_epochs,

end=max_epochs,

convert_to_iter_based=True)

]

# train, val, test setting

train_cfg = dict(type=TrainLoop, max_epochs=max_epochs)

#######################################################################

# PART 5 Runtime #

#######################################################################

# Log the dialogue periodically during the training process, optional

tokenizer = dict(

type=AutoTokenizer.from_pretrained,

pretrained_model_name_or_path=path,

trust_remote_code=True)

custom_hooks = [

dict(type=DatasetInfoHook, tokenizer=tokenizer),

]

# configure default hooks

default_hooks = dict(

# record the time of every iteration.

timer=dict(type=IterTimerHook),

# print log every 10 iterations.

logger=dict(type=LoggerHook, log_metric_by_epoch=False, interval=10),

# enable the parameter scheduler.

param_scheduler=dict(type=ParamSchedulerHook),

# save checkpoint per `save_steps`.

checkpoint=dict(

type=CheckpointHook,

save_optimizer=False,

by_epoch=False,

interval=save_steps,

max_keep_ckpts=save_total_limit),

# set sampler seed in distributed environment.

sampler_seed=dict(type=DistSamplerSeedHook),

)

# configure environment

env_cfg = dict(

# whether to enable cudnn benchmark

cudnn_benchmark=False,

# set multi process parameters

mp_cfg=dict(mp_start_method='fork', opencv_num_threads=0),

# set distributed parameters

dist_cfg=dict(backend='nccl'),

)

# set visualizer

visualizer = None

# set log level

log_level = 'INFO'

# load from which checkpoint

load_from = None

# whether to resume training from the loaded checkpoint

resume = False

# Defaults to use random seed and disable `deterministic`

randomness = dict(seed=None, deterministic=False)

# set log processor

log_processor = dict(by_epoch=False)
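The scheduler and checkpoint settings in this config interact, and a quick calculation shows how. The sketch below is plain arithmetic, not XTuner code. It assumes the dataset holds 2000 samples (inferred only from the directory name CLoT_cn_2000), a single GPU, and that one iteration corresponds to one dataloader batch; the function name `training_schedule` is invented for this example.

```python
import math


def training_schedule(num_samples, batch_size=4, accumulative_counts=4,
                      max_epochs=6, warmup_ratio=0.03, save_steps=1000,
                      num_gpus=1):
    """Derive iteration counts from the config values (defaults mirror the config)."""
    # One iteration is assumed to consume one per-device batch on each GPU.
    iters_per_epoch = math.ceil(num_samples / (batch_size * num_gpus))
    total_iters = iters_per_epoch * max_epochs
    return {
        # Samples contributing to each optimizer update.
        "effective_batch_size": batch_size * accumulative_counts * num_gpus,
        "iters_per_epoch": iters_per_epoch,
        "total_iters": total_iters,
        # Gradients are accumulated over accumulative_counts iterations.
        "optimizer_steps": math.ceil(total_iters / accumulative_counts),
        # Warmup lasts warmup_ratio * max_epochs epochs, converted to iterations.
        "warmup_iters": round(warmup_ratio * max_epochs * iters_per_epoch),
        # Iterations at which a periodic checkpoint falls.
        "checkpoint_iters": list(range(save_steps, total_iters + 1, save_steps)),
    }


for key, value in training_schedule(2000).items():
    print(f"{key}: {value}")
```

Under these assumptions a 6-epoch run ends at iteration 3000 while a 4-epoch run ends at iteration 2000, which is why the checkpoint file name depends on how long training actually ran.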

After editing the config file, start training with the following commands. We use the QLoRA fine-tuning method and adjust the batch size:

cd /root/InternLM/code/XTuner

NPROC_PER_NODE=1 xtuner train /root/InternLM/code/XTuner/xtuner/configs/internvl/v2/internvl_v2_internlm2_2b_qlora_finetune.py --work-dir /root/InternLM/work_dir/internvl_ft_run_8_filter --deepspeed deepspeed_zero1

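The fine-tuning process is illustrated in the figure below:

On a 50% A100 allocation, fine-tuning with this config takes close to 3 hours.

Because QLoRA does not fine-tune the entire model backbone but trains adapters instead, after fine-tuning we still need to merge the weights and convert the model. We use the official script for both: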

cd /root/InternLM/code/XTuner

# transfer weights

python3 xtuner/configs/internvl/v1_5/convert_to_official.py xtuner/configs/internvl/v2/internvl_v2_internlm2_2b_qlora_finetune.py /root/InternLM/work_dir/internvl_ft_run_8_filter/iter_2000.pth /root/InternLM/InternVL2-2B/

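The checkpoint name depends on how many iterations were actually run. If the .pth file named in the command does not exist under internvl_ft_run_8_filter (for example, because you trained for fewer than 6 epochs), replace it with the .pth file that is actually present in that directory.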
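To see why a merge step is needed at all, recall that a LoRA adapter stores a low-rank update next to each frozen weight matrix. The sketch below shows the standard LoRA merge formula on toy matrices in pure Python. It illustrates the idea only and is not what convert_to_official.py does line by line; with QLoRA the base weights are also quantized during training, which this toy example ignores.

```python
def matmul(a, b):
    """Multiply two matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def merge_lora(weight, lora_a, lora_b, r, lora_alpha):
    """Standard LoRA merge: W_merged = W + (lora_alpha / r) * B @ A."""
    scale = lora_alpha / r
    delta = matmul(lora_b, lora_a)  # [out, r] @ [r, in] -> [out, in]
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(weight, delta)]


# Toy example: 2x2 weight and a rank-1 adapter. The scale alpha / r = 2
# matches the ratio in the config above (lora_alpha=256, r=128).
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 2.0]]    # shape [r, in]
B = [[0.5], [0.0]]  # shape [out, r]
print(merge_lora(W, A, B, r=1, lora_alpha=2))  # [[2.0, 2.0], [0.0, 1.0]]
```

After merging, the adapter matrices are no longer needed at inference time, so the result can be saved in the same layout as the original model.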

The fine-tuned, merged, and converted model ends up under /root/InternLM/InternVL2-2B/, with the following file structure:

(xtuner) root@intern-studio-50055:~/InternLM/InternVL2-2B# tree -l

.

|-- added_tokens.json

|-- config.json

|-- configuration_intern_vit.py

|-- configuration_internlm2.py

|-- configuration_internvl_chat.py

|-- conversation.py

|-- generation_config.json

|-- model.safetensors

|-- modeling_intern_vit.py

|-- modeling_internlm2.py

|-- modeling_internvl_chat.py

|-- special_tokens_map.json

|-- tokenization_internlm2.py

|-- tokenizer.model

`-- tokenizer_config.json

0 directories, 15 files

With fine-tuning, weight merging, and model conversion done, we try inference with the fine-tuned InternVL. The inference code becomes:

from lmdeploy import pipeline

from lmdeploy.vl import load_image

# pipe = pipeline('/root/model/InternVL2-2B') # before fine-tuning

pipe = pipeline('/root/InternLM/InternVL2-2B') # after fine-tuning

image = load_image('/root/InternLM/007aPnLRgy1hb39z0im50j30ci0el0wm.jpg')

# image = load_image('/root/InternLM/datasets/ex_images/004atEXYgy1gpmpsexl8jj60u00x4jvj02.jpg')

# image = load_image('/root/InternLM/datasets/ex_images/004atEXYgy1gpsh1yszp5j60lx0x1q7302.jpg')

response = pipe(('请你根据这张图片,讲一个脑洞大开的梗', image))

print(response.text)

Run the following commands to test inference:

cd /root/InternLM/code

python3 test_lmdeploy.py

The 3 meme images used for testing have the following ground truth in the training set:

{

"image": "ex_images/007aPnLRgy1hb39z0im50j30ci0el0wm.jpg",

"conversations": [

{

"from": "human",

"value": "<image>\n请你根据这张图片,讲一个脑洞大开的梗"

},

{

"from": "gpt",

"value": "『住手!现在的你还不是那家伙的对手!先撤吧!!"

}

]

},

... ...

{

"image": "ex_images/004atEXYgy1gpmpsexl8jj60u00x4jvj02.jpg",

"conversations": [

{

"from": "human",

"value": "<image>\n请你根据这张图片,讲一个脑洞大开的梗"

},

{

"from": "gpt",

"value": "割腕次数过多"

}

]

},

... ...

{

"image": "ex_images/004atEXYgy1gpsh1yszp5j60lx0x1q7302.jpg",

"conversations": [

{

"from": "human",

"value": "<image>\n请你根据这张图片,讲一个脑洞大开的梗"

},

{

"from": "gpt",

"value": "家长会上,爸爸来晚了"

}

]

}

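For these three training-set images, the outputs of the fine-tuned model do not exactly match the ground truth in the training set, which at least suggests that the model has not simply memorized the training data.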

![[GKCTF 2021]excel 骚操作1](https://i-blog.csdnimg.cn/direct/515b2d8cac0e47fc87c24c51b5f59f7b.png)
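The comparison with the ground truth can be turned into a rough number by counting how often the model reproduces a training caption verbatim. The sketch below is one possible way to do that; `exact_match_rate` is a name invented here, and the predictions are placeholders rather than real model outputs. A handful of samples is too few to draw firm conclusions, and images held out from training would be a better test of overfitting.

```python
def exact_match_rate(predictions, references):
    """Fraction of predictions identical to their reference, ignoring outer whitespace."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0
    matches = sum(p.strip() == r.strip() for p, r in zip(predictions, references))
    return matches / len(references)


# Two of the ground-truth captions listed above.
references = [
    "『住手!现在的你还不是那家伙的对手!先撤吧!!",
    "家长会上,爸爸来晚了",
]
# Placeholder strings, NOT real model outputs: replace with response.text values.
predictions = ["<model output 1>", "<model output 2>"]
print(exact_match_rate(predictions, references))  # 0.0 for these placeholders
```

A rate close to 1.0 on training images would point to memorization, while a low rate is consistent with the observation above.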