1.Start with a grid world

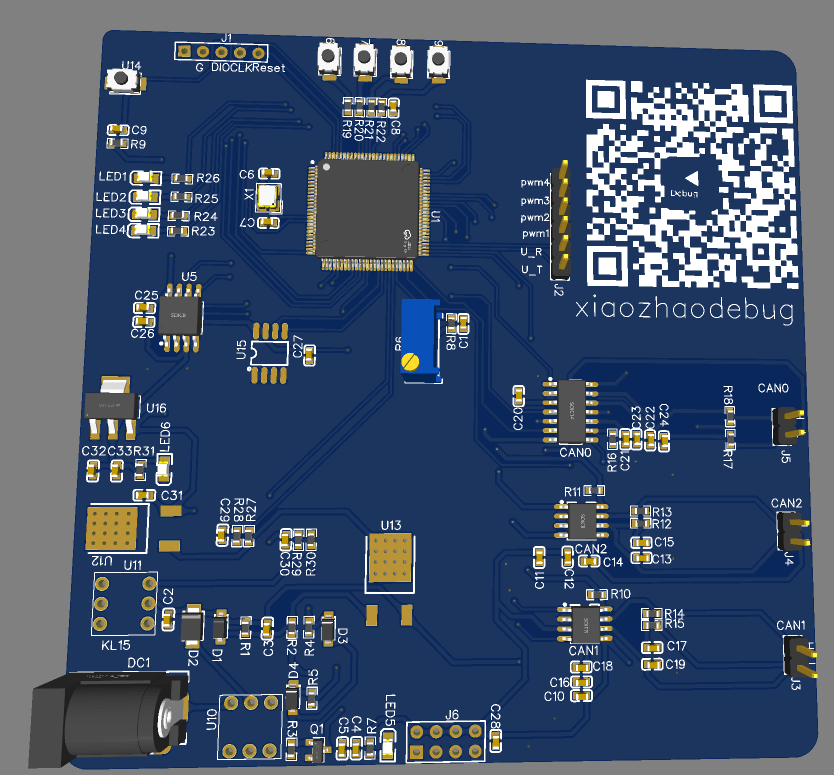

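The grid world the robot moves in contains four kinds of elements: accessible cells, forbidden cells, target cells, and the boundary.

The task: find a "good" way to reach the target.

What counts as good: reaching the target along the shortest path, without hitting the boundary or entering a forbidden cell.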

2.state

State: The status of the agent with respect to the environment.

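In the grid world, the robot has nine states, s1, . . . , s9.

State space: the set of all states, S = {s1, . . . , s9}.

For a walking biped robot, the states could be divided into four: hip raise, knee flexion, hip lowering, and knee extension.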

3.Action

Action: For each state, there are five possible actions: a1, . . . , a5

• a1: move upward;

• a2: move rightward;

• a3: move downward;

• a4: move leftward;

• a5: stay unchanged;

Action space of a state: the set of all possible actions of a state.
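As a minimal sketch, the state space and the action space of the grid world could be written down as follows. It assumes a 3×3 grid whose cells are numbered s1 to s9 row by row, which the notes do not state explicitly; the names `STATES`, `ACTIONS`, and `action_space` are illustrative.

```python
# Assumption: a 3x3 grid, cells numbered s1..s9 row by row (not stated in the notes).
N_ROWS, N_COLS = 3, 3

# State space: the set of all states.
STATES = [f"s{i}" for i in range(1, N_ROWS * N_COLS + 1)]

# Each action is a (row offset, column offset) pair; rows grow downward.
ACTIONS = {
    "a1": (-1, 0),  # move upward
    "a2": (0, 1),   # move rightward
    "a3": (1, 0),   # move downward
    "a4": (0, -1),  # move leftward
    "a5": (0, 0),   # stay unchanged
}


def action_space(state):
    """Return the actions available in `state`; here every state has all five."""
    return list(ACTIONS)


print(len(STATES), action_space("s1"))
```

Writing `action_space` as a function of the state leaves room for models in which different states offer different actions, as in the biped example.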

For the biped robot, two actions are available in the hip-raise state: flex the knee or lower the hip.

Question: can different states have different sets of actions?

4.State transition

When taking an action, the agent may move from one state to another. Such a process is called state transition.

Example: At state s1, if we choose action a2, then what is the next state?

Example: At state s1, if we choose action a1, then what is the next state?

State transition describes the interaction with the environment.

Question: Can we define the state transition in other ways? Simulation vs physics

In simulation, we can define the state transition however we like.

In a physical system, we cannot.

Pay attention to forbidden areas: Example: at state s5, if we choose action a2, then what is the next state?

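Case 1: the forbidden area is accessible, but entering it incurs a penalty. Then the agent moves into the forbidden cell to the right of s5.

Case 2: the forbidden area is inaccessible (e.g., surrounded by a wall). Then the agent stays at s5.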

We consider the first case, which is more general and challenging.
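A deterministic transition function for Case 1 might look like the sketch below. The 3×3 row-by-row numbering and the rule that an attempt to leave the grid leaves the agent where it was are assumptions of this sketch; `next_state` is a hypothetical helper name.

```python
# Assumptions (not given in the notes): 3x3 grid numbered s1..s9 row by row,
# actions encoded as (row offset, column offset) pairs.
N_ROWS, N_COLS = 3, 3
ACTIONS = {"a1": (-1, 0), "a2": (0, 1), "a3": (1, 0), "a4": (0, -1), "a5": (0, 0)}


def next_state(state, action):
    """Deterministic transition for Case 1: forbidden cells can be entered."""
    index = int(state[1:]) - 1  # "s5" -> 4
    row, col = divmod(index, N_COLS)
    d_row, d_col = ACTIONS[action]
    new_row, new_col = row + d_row, col + d_col
    # Assumed rule: trying to leave the grid leaves the agent where it was.
    if not (0 <= new_row < N_ROWS and 0 <= new_col < N_COLS):
        return state
    return f"s{new_row * N_COLS + new_col + 1}"


print(next_state("s1", "a2"))  # s2
print(next_state("s1", "a1"))  # s1 (blocked by the boundary)
print(next_state("s5", "a2"))  # s6 (the cell to the right of s5)
```

Because forbidden cells are treated as ordinary cells in Case 1, the function needs no special branch for them; the penalty is handled by the reward instead.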

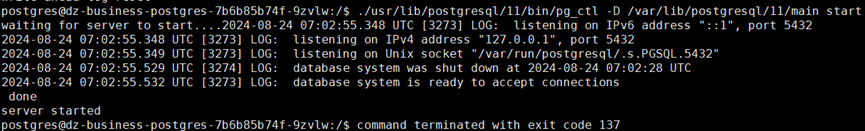

Tabular representation: We can use a table to describe the state transition. A table can only represent deterministic cases.

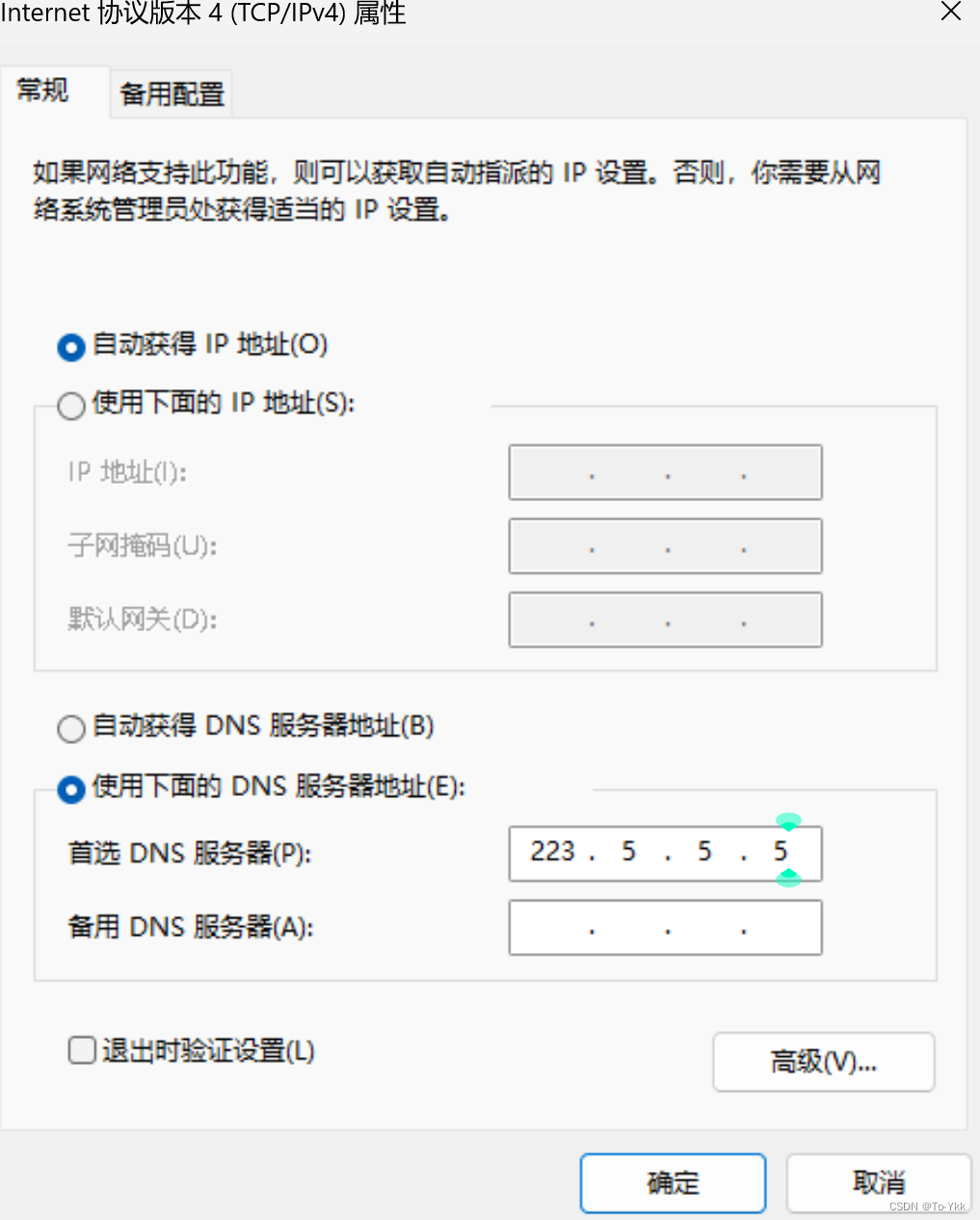

State transition probability: use probability to describe state transition!

Intuition: At state s1, if we choose action a2, the next state is s2.

Math: p(s2|s1, a2) = 1 and p(si|s1, a2) = 0 for all i ≠ 2

Here it is a deterministic case. The state transition could be stochastic (for example, wind gust).
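One possible way to store p(s′|s, a) is a nested dictionary, sketched below. The deterministic entry follows the example above; the stochastic entry and its 0.8 / 0.2 split are invented purely to illustrate a wind gust, and `transition_prob` is a hypothetical helper.

```python
# p(s' | s, a) stored as {(s, a): {s': probability}}.
# Deterministic case from the notes: at s1, action a2 always leads to s2.
deterministic = {("s1", "a2"): {"s2": 1.0}}

# Hypothetical stochastic case: a wind gust sometimes makes the agent land
# in s5 instead. The 0.8 / 0.2 split is made up for illustration.
stochastic = {("s1", "a2"): {"s2": 0.8, "s5": 0.2}}


def transition_prob(model, s, a, s_next):
    """Look up p(s_next | s, a); outcomes not listed have probability 0."""
    return model[(s, a)].get(s_next, 0.0)


for model in (deterministic, stochastic):
    # For a fixed (s, a), the probabilities over next states must sum to 1.
    assert abs(sum(model[("s1", "a2")].values()) - 1.0) < 1e-12

print(transition_prob(deterministic, "s1", "a2", "s2"))  # 1.0
print(transition_prob(stochastic, "s1", "a2", "s5"))     # 0.2
```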

5.Policy

Policy tells the agent what actions to take at a state.

For the biped robot, for example, the policy says that after raising the hip the robot should flex the knee.

Intuitive representation: We use arrows to describe a policy.

Based on this policy, we get the following trajectories with different starting points.

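A policy specifies how the agent should move in every state. For the biped robot in the hip-raise state, for instance, the next action should be knee flexion rather than knee extension.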
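To make the link between a policy and its trajectories concrete, here is a small sketch that follows a deterministic policy from different starting states. The policy table is hypothetical (the arrows in the figure are not reproduced in these notes), as are the 3×3 row-by-row numbering and the helper names `next_state` and `rollout`.

```python
# Assumptions: 3x3 grid numbered s1..s9 row by row; actions are offsets.
N_ROWS, N_COLS = 3, 3
ACTIONS = {"a1": (-1, 0), "a2": (0, 1), "a3": (1, 0), "a4": (0, -1), "a5": (0, 0)}

# Hypothetical deterministic policy: one action per state.
POLICY = {
    "s1": "a2", "s2": "a3", "s3": "a4",
    "s4": "a2", "s5": "a3", "s6": "a3",
    "s7": "a2", "s8": "a2", "s9": "a5",
}


def next_state(state, action):
    """Move on the grid; an attempt to leave it keeps the agent in place."""
    row, col = divmod(int(state[1:]) - 1, N_COLS)
    d_row, d_col = ACTIONS[action]
    new_row, new_col = row + d_row, col + d_col
    if not (0 <= new_row < N_ROWS and 0 <= new_col < N_COLS):
        return state
    return f"s{new_row * N_COLS + new_col + 1}"


def rollout(start, policy, max_steps=10):
    """Follow `policy` from `start` until it says 'stay' or steps run out."""
    path, state = [start], start
    for _ in range(max_steps):
        action = policy[state]
        if action == "a5":
            break
        state = next_state(state, action)
        path.append(state)
    return path


print(rollout("s1", POLICY))  # ['s1', 's2', 's5', 's8', 's9']
print(rollout("s7", POLICY))  # ['s7', 's8', 's9']
```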

Mathematical representation: using conditional probability. For example, for state s1:

It is a deterministic policy, as are all the policies shown above.

There are stochastic policies. For example:

In this policy, for s1:

Tabular representation of a policy: how to use this table.

This table can represent either deterministic or stochastic cases.
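A policy table π(a|s) can be sketched as a nested dictionary with one probability row per state. The probabilities below are illustrative and are not the policy drawn in the figures; `sample_action` is a hypothetical helper built on the standard-library `random.choices`.

```python
import random

# pi(a | s) stored as {state: {action: probability}}; each row sums to 1.
# The numbers are illustrative, not the policy drawn in the figures.
policy = {
    "s1": {"a2": 0.5, "a3": 0.5},  # stochastic row: right or down, equally likely
    "s2": {"a3": 1.0},             # deterministic row: always move down
}


def sample_action(policy, state, rng=random):
    """Draw an action for `state` according to pi(. | state)."""
    actions = list(policy[state])
    weights = [policy[state][a] for a in actions]
    return rng.choices(actions, weights=weights, k=1)[0]


rng = random.Random(0)  # fixed seed so the run is repeatable
print([sample_action(policy, "s1", rng) for _ in range(5)])
print(sample_action(policy, "s2", rng))  # always a3
```

Using the table means looking up the row for the current state and drawing an action from it; a deterministic policy is simply a row with a single probability of 1.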

6.Reward

Reward is one of the most distinctive concepts of RL.

Reward: a real number we get after taking an action.

• A positive reward represents encouragement to take such actions.

• A negative reward represents punishment for taking such actions.

Note: the reward refers to this particular action, the one just taken.

Questions:

• What about a zero reward? No punishment.

• Can positive mean punishment? Yes.

In the grid-world example, the rewards are designed as follows:

• If the agent attempts to get out of the boundary, let rbound = −1

• If the agent attempts to enter a forbidden cell, let rforbid = −1

• If the agent reaches the target cell, let rtarget = +1

• Otherwise, the agent gets a reward of r = 0.
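The four reward rules above can be sketched as a single function. The grid layout is an assumption of this sketch: a 3×3 grid numbered row by row, with hypothetical forbidden cells s6 and s7 and target cell s9. The sketch also assumes that staying on a cell counts as landing on it again.

```python
# Assumed layout (the figure is not reproduced here): 3x3 grid numbered row by
# row, with hypothetical forbidden cells s6 and s7 and target cell s9.
N_ROWS, N_COLS = 3, 3
FORBIDDEN, TARGET = {"s6", "s7"}, "s9"
ACTIONS = {"a1": (-1, 0), "a2": (0, 1), "a3": (1, 0), "a4": (0, -1), "a5": (0, 0)}

R_BOUND, R_FORBID, R_TARGET, R_OTHER = -1, -1, 1, 0


def reward(state, action):
    """Reward for taking `action` in `state`, following the four rules."""
    row, col = divmod(int(state[1:]) - 1, N_COLS)
    d_row, d_col = ACTIONS[action]
    new_row, new_col = row + d_row, col + d_col
    if not (0 <= new_row < N_ROWS and 0 <= new_col < N_COLS):
        return R_BOUND  # attempted to get out of the boundary
    landing = f"s{new_row * N_COLS + new_col + 1}"
    if landing in FORBIDDEN:
        return R_FORBID  # attempted to enter a forbidden cell
    if landing == TARGET:
        return R_TARGET  # reached the target cell
    return R_OTHER


print(reward("s1", "a1"))  # -1: hits the boundary
print(reward("s5", "a2"))  # -1: enters the assumed forbidden cell s6
print(reward("s8", "a2"))  # 1: reaches the assumed target s9
print(reward("s1", "a2"))  # 0: ordinary move
```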

Reward can be interpreted as a human-machine interface, with which we can guide the agent to behave as we expect.

For example, with the above designed rewards, the agent will try to avoid getting out of the boundary or stepping into the forbidden cells.

Tabular representation of reward transition: how to use the table?

Can only represent deterministic cases.

Mathematical description: conditional probability

• Intuition: At state s1, if we choose action a1, the reward is −1.

• Math: p(r = −1|s1, a1) = 1 and p(r ≠ −1|s1, a1) = 0

Remarks:

Here it is a deterministic case. The reward transition could be stochastic. For example, if you study hard, you will get rewards. But how much is uncertain.

7.Trajectory and return

A trajectory is a state-action-reward chain:

The return of this trajectory is the sum of all the rewards collected along the trajectory:

return = 0 + 0 + 0 + 1 = 1

A different policy gives a different trajectory:

The return of this path is: return = 0 − 1 + 0 + 1 = 0

Which policy is better?

• Intuition: the first is better, because it avoids the forbidden areas.

• Mathematics: the first one is better, since it has a greater return!

• Return could be used to evaluate whether a policy is good or not (see details in the next lecture)!
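The comparison can be checked with a few lines of code. The two reward lists are the sequences summed above; `trajectory_return` is an illustrative helper name.

```python
# Reward sequences of the two trajectories discussed above.
rewards_first = [0, 0, 0, 1]
rewards_second = [0, -1, 0, 1]


def trajectory_return(rewards):
    """Undiscounted return: the plain sum of the rewards along a trajectory."""
    return sum(rewards)


print(trajectory_return(rewards_first))   # 1
print(trajectory_return(rewards_second))  # 0
```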

8.Discounted return

A trajectory may be infinite:

The return is

return = 0 + 0 + 0 + 1 + 1 + 1 + . . . = ∞

The definition is invalid since the return diverges!

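We need to introduce a discount rate γ ∈ [0, 1). The discounted return is then

discounted return = 0 + γ0 + γ²0 + γ³1 + γ⁴1 + γ⁵1 + . . . = γ³(1 + γ + γ² + . . .) = γ³/(1 − γ)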

Roles: 1) the sum becomes finite; 2) balance the far and near future rewards:

• If γ is close to 0, the value of the discounted return is dominated by the rewards obtained in the near future.

• If γ is close to 1, the value of the discounted return is dominated by the rewards obtained in the far future.
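As a numerical sanity check, the sketch below discounts the infinite reward sequence 0, 0, 0, 1, 1, 1, . . . from this section, truncated after 1000 steps, and compares the result with the geometric-series value γ³/(1 − γ). The value γ = 0.9 and the truncation length are arbitrary choices for illustration.

```python
def discounted_return(rewards, gamma):
    """Sum of gamma**t * r_t over a finite list of rewards."""
    return sum((gamma ** t) * r for t, r in enumerate(rewards))


gamma = 0.9  # illustrative value; any gamma in [0, 1) works

# Truncate the infinite trajectory 0, 0, 0, 1, 1, 1, ... after 1000 steps.
rewards = [0, 0, 0] + [1] * 997
approx = discounted_return(rewards, gamma)

# Closed form from the geometric series: gamma^3 * (1 + gamma + gamma^2 + ...).
closed_form = gamma ** 3 / (1 - gamma)

print(approx, closed_form)
```

The two numbers agree up to floating-point error because the truncated tail is weighted by γ raised to the 1000th power, which is negligible.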

9.Episode

When interacting with the environment following a policy, the agent may stop at some terminal states. The resulting trajectory is called an episode (or a trial).

Example: episode

An episode is usually assumed to be a finite trajectory. Tasks with episodes are called episodic tasks.

Some tasks may have no terminal states, meaning the interaction with the environment will never end. Such tasks are called continuing tasks.

In the grid-world example, should we stop after arriving at the target?

In fact, we can treat episodic and continuing tasks in a unified mathematical way by converting episodic tasks to continuing tasks.

• Option 1: Treat the target state as a special absorbing state. Once the agent reaches an absorbing state, it will never leave. All subsequent rewards are r = 0.

• Option 2: Treat the target state as a normal state with a policy. The agent can still leave the target state, and it gains r = +1 whenever it enters the target state. With a good policy the agent stays at the target; with a poor policy it may still leave.

We consider option 2 in this course so that we don’t need to distinguish the target state from the others and can treat it as a normal state.
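The difference between the two options can be sketched as a single step taken at the target. The layout is hypothetical (target s9, with action a4 leading to s8), only two actions are modelled, and treating "stay" as entering the target again is this sketch's reading of option 2.

```python
def target_step(action, absorbing):
    """Return (next_state, reward) for one step taken at the target.

    Hypothetical layout: the target is "s9" and action "a4" (left) leads to "s8".
    """
    if absorbing:
        # Option 1: the agent never leaves and collects r = 0 from now on.
        return "s9", 0
    if action == "a5":
        # Option 2: staying counts as entering the target again, r = +1.
        return "s9", 1
    if action == "a4":
        # Option 2: the agent is free to walk away (an ordinary move, r = 0).
        return "s8", 0
    raise ValueError("only a4 and a5 are modelled in this sketch")


print(target_step("a5", absorbing=True))   # ('s9', 0)
print(target_step("a5", absorbing=False))  # ('s9', 1)
print(target_step("a4", absorbing=False))  # ('s8', 0)
```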

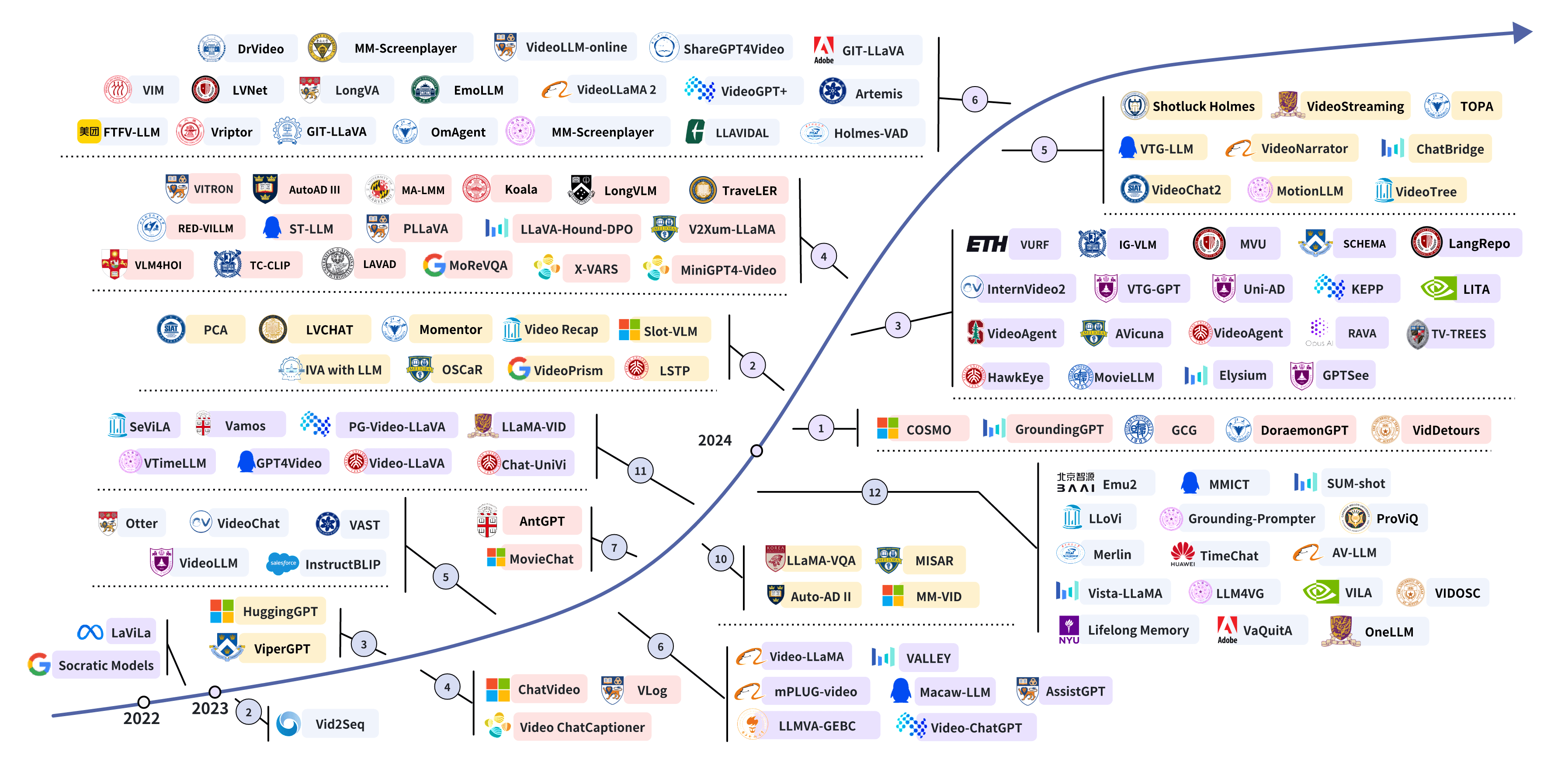

10.Markov decision process (MDP)

Key elements of MDP:

• Sets:

• State: the set of states S

• Action: the set of actions A(s) associated with state s ∈ S.

• Reward: the set of rewards R(s, a).

• Probability distribution (also called the system model):

• State transition probability: at state s, taking action a, the probability of transitioning to state s′ is p(s′|s, a)

• Reward probability: at state s, taking action a, the probability of getting reward r is p(r|s, a)

• Policy: at state s, the probability to choose action a is π(a|s)

• Markov property: memoryless property

All the concepts introduced in this lecture can be put in the framework of MDPs.
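One way to see how the pieces fit together is to bundle the sets and the probability distributions into a single container, as in the sketch below. The class name `MDP`, its field names, and the two-state toy model are all invented for illustration and are unrelated to the grid-world figures; the policy π(a|s) is kept separate from the model.

```python
from dataclasses import dataclass


@dataclass
class MDP:
    states: list      # S
    actions: dict     # A(s): state -> list of actions
    transition: dict  # p(s'|s, a): (s, a) -> {s': probability}
    reward: dict      # p(r|s, a):  (s, a) -> {r: probability}

    def is_valid(self, tol=1e-9):
        """Check that every distribution sums to 1 for each (s, a) pair."""
        for s in self.states:
            for a in self.actions[s]:
                for table in (self.transition, self.reward):
                    if abs(sum(table[(s, a)].values()) - 1.0) > tol:
                        return False
        return True


# Tiny two-state toy model (made up for illustration).
toy = MDP(
    states=["s1", "s2"],
    actions={"s1": ["stay", "go"], "s2": ["stay"]},
    transition={
        ("s1", "stay"): {"s1": 1.0},
        ("s1", "go"): {"s2": 1.0},
        ("s2", "stay"): {"s2": 1.0},
    },
    reward={
        ("s1", "stay"): {0: 1.0},
        ("s1", "go"): {1: 1.0},
        ("s2", "stay"): {1: 1.0},
    },
)

print(toy.is_valid())  # True
```

Note that the two states in the toy model have different action sets, which the A(s) notation allows.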

The grid world could be abstracted as a more general model, a Markov process.

The circles represent states and the links with arrows represent the state transition.

11.Summary

By using grid-world examples, we demonstrated the following key concepts:

• State

• Action

• State transition, state transition probability p(s′|s, a)

• Reward, reward probability p(r|s, a)

• Trajectory, episode, return, discounted return

• Markov decision process
