ml_movice_recommend_flask

- http://127.0.0.1:5000/recommend

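- In the previous note, 【ai】Study notes: Movie Recommendation 1: Collaborative Filtering, TF-IDF, Cosine Similarity, I studied and understood the approach and got the project running.

- This post analyzes it further.

- Does it bring in any semantic analysis?

- Or is it purely a matching algorithm?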

Common algorithms (including deep learning) used in movie recommendation

- Movie recommendation systems often leverage a variety of machine learning algorithms to analyze user preferences and predict what movies users might like. Here are some common algorithms used:

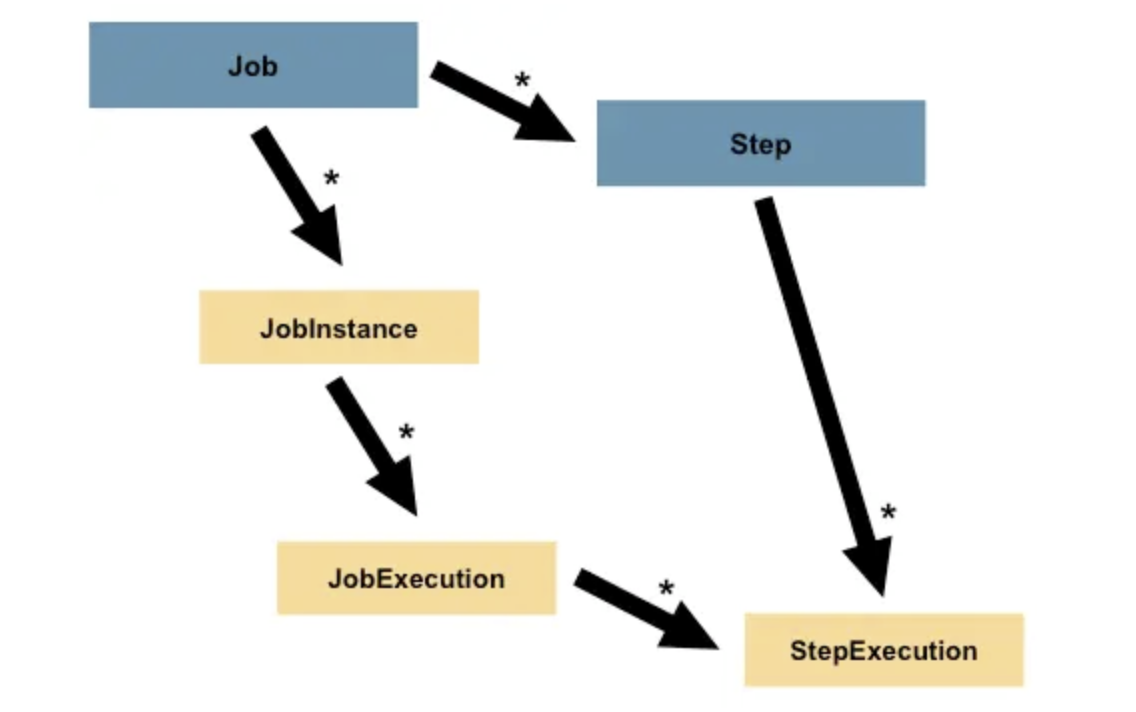

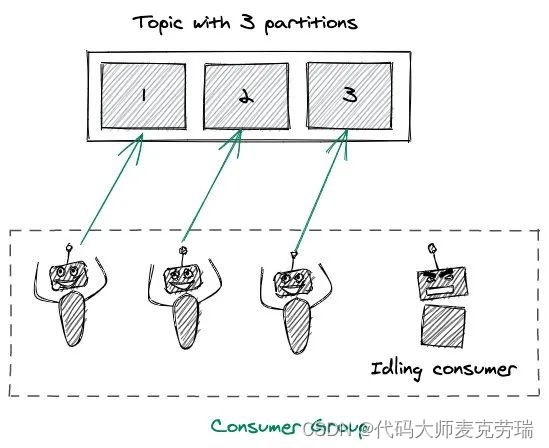

- Collaborative Filtering: This method makes recommendations based on the collective preferences of users. It can be further divided into:

- User-based Collaborative Filtering: Finds users whose ratings resemble the target user's ratings and recommends the items those similar users have liked.

- Item-based Collaborative Filtering: Recommends items that are similar to items the user has liked in the past. Similarity is determined by the rating patterns of the users.

- Content-Based Filtering: This approach recommends items by comparing the content of the items and a user profile. The content of each item is represented as a set of descriptors or terms, typically the words that describe the item best, and the user profile is built based on the content of items the user has liked.

- Matrix Factorization Techniques: Methods such as Singular Value Decomposition (SVD) and Alternating Least Squares (ALS) factor the user-item rating matrix into low-dimensional user and item vectors. The products of these vectors predict the missing ratings in the matrix, and those predictions are the basis for recommendations.

- Deep Learning: Neural networks, especially autoencoders and convolutional neural networks (CNNs), have been used for feature learning in recommendation systems. They can capture the nonlinear relationships between users and items.


- Hybrid Models: Combining collaborative filtering, content-based filtering, and other methods to improve recommendation quality. Hybrid models can leverage the strengths of multiple recommendation approaches to provide more accurate recommendations.

- Each of these algorithms has its strengths and is chosen based on the specific requirements and characteristics of the recommendation system being developed.
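The following is a minimal item-based collaborative filtering sketch that makes the description above concrete. The rating matrix is made up. Unrated entries count as 0 when computing cosine similarity, and a missing rating is predicted as a similarity-weighted average of the user's own ratings. This is one common formulation, shown for illustration only. It is not necessarily what ml_movice_recommend_flask does.

```python
import numpy as np

# Toy user-item rating matrix (rows = users, columns = movies); 0 means "not rated".
# The values are made up purely for illustration.
ratings = np.array([
    [5, 4, 0, 1],
    [4, 5, 1, 0],
    [1, 0, 5, 4],
    [0, 1, 4, 5],
], dtype=float)


def item_similarity(r):
    """Cosine similarity between item columns (unrated entries count as 0)."""
    norms = np.linalg.norm(r, axis=0)
    norms[norms == 0] = 1.0  # avoid division by zero for items nobody rated
    normalized = r / norms
    return normalized.T @ normalized


def predict_rating(r, sim, user, item):
    """Predict r[user, item] as a similarity-weighted average of the user's other ratings."""
    rated = np.nonzero(r[user])[0]
    rated = rated[rated != item]
    weights = sim[item, rated]
    if weights.sum() == 0:
        return 0.0
    return float(weights @ r[user, rated] / weights.sum())


sim = item_similarity(ratings)
print(round(predict_rating(ratings, sim, user=0, item=2), 2))  # 2.09
```

User 0 rated movie 3 low, and movie 2 is most similar to movie 3, so the predicted rating for movie 2 also comes out low.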

Results

Why can TfidfVectorizer run fit_transform more than once?


- The TfidfVectorizer from scikit-learn’s feature extraction module converts a collection of raw documents into a matrix of TF-IDF features. It can be used with fit_transform multiple times on different sets of documents because of its design and functionality:

Fit Phase: During the fit phase, TfidfVectorizer learns the vocabulary and idf (inverse document frequency) from the training set. It determines the parameters needed to transform the text data into feature vectors. The vocabulary is a mapping of terms to feature indices.

Transform Phase: In the transform phase, it uses the learned vocabulary and idf values to transform the documents into a matrix of TF-IDF features. This means that for any new set of documents, as long as the transform method is called, it can convert these documents into the corresponding TF-IDF feature space based on the learned parameters.

Refitting Replaces the Learned State: The fit_transform method is essentially fit followed by transform. It fits the model to the data and then transforms the data with the fitted model. The vectorizer does keep state (its vocabulary_ and idf_ attributes), but every call to fit_transform overwrites it. When you call fit_transform on a new set of documents, it discards what it learned before and learns a new vocabulary and new idf values from the new documents. Successive calls are therefore independent of each other.

Flexibility: This design allows for flexibility in processing documents. You can fit the vectorizer on one set of documents to learn the vocabulary and then transform other sets of documents into the same feature space using the learned vocabulary. Alternatively, you can use fit_transform on different sets of documents if you need to treat them as separate collections with their vocabularies and idf values.

Use Cases: This feature is particularly useful in scenarios where you might have different collections of documents that need to be vectorized independently (e.g., documents in different languages or from different domains).

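In summary, the ability to call fit_transform multiple times allows versatile preprocessing of text data and covers a range of scenarios and requirements in text analysis and machine learning. The flip side is that vectors produced by separate fits are not comparable. To score new text against an existing TF-IDF matrix, call transform on the vectorizer that produced that matrix.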
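Both behaviours can be seen in a minimal sketch with two made-up document sets. Refitting the same vectorizer replaces its vocabulary, while transform reuses the vocabulary from the most recent fit.

```python
from sklearn.feature_extraction.text import TfidfVectorizer

docs_a = ["action hero saves city", "hero fights villain"]
docs_b = ["romantic comedy in paris", "comedy about a wedding"]

vectorizer = TfidfVectorizer()

# First fit: vocabulary_ and idf_ are learned from docs_a
matrix_a = vectorizer.fit_transform(docs_a)
vocab_a = set(vectorizer.vocabulary_)

# Second fit on the same object: the old vocabulary is discarded and relearned from docs_b
matrix_b = vectorizer.fit_transform(docs_b)
vocab_b = set(vectorizer.vocabulary_)

# No shared terms: matrix_a and matrix_b live in different feature spaces
print(sorted(vocab_a & vocab_b))  # []

# To compare new text with docs_b, reuse the fitted vocabulary via transform()
query = vectorizer.transform(["a comedy wedding"])
print(query.shape[1] == matrix_b.shape[1])  # True
```

The single-character word "a" is dropped by the default token_pattern, so the query keeps only "comedy" and "wedding".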

Introduction

- To analyze the ml_movice_recommend_flask project and understand how it makes recommendations, we need to look at the available code excerpts and the project's characteristics. From this information, the project most likely consists of a Flask application (Flask is a Python web framework) that serves movie recommendations. It uses machine learning libraries such as pandas, scikit-learn, and numpy for data processing and model training.


The recommendation process uses metadata stored in a CSV file

- The tmdb_5000_credits.csv file suggests that movie metadata is used as part of the recommendation process.

General steps of a movie recommendation system:

- Data Loading: Load movie data from tmdb_5000_credits.csv using pandas. This file contains movie metadata that is crucial for making recommendations.

- Data Preprocessing: Clean and preprocess the data. This might involve handling missing values, extracting relevant features (e.g., genres, keywords), and encoding categorical variables.

- Feature Engineering: Create a feature matrix from the movie metadata. Techniques like TF-IDF (Term Frequency-Inverse Document Frequency) or word embeddings can turn the text into numerical vectors that machine learning models can use.

- Model Training: Train a machine learning model using scikit-learn. The model could be a content-based or a collaborative filtering system. Content-based systems recommend movies whose features resemble those of movies the user likes. Collaborative filtering recommends movies by finding users with similar preferences.

- Recommendation: Implement a function that generates movie recommendations. It takes user input (e.g., a favorite movie) and **outputs a list of recommended movies based on the trained model.**

- Flask Application: Develop a Flask application that serves the recommendation system. It would include routes that accept user input (e.g., through a web form) and display the recommended movies.

- Integration with Frontend: Use HTML/CSS/JavaScript to build a user-friendly interface where users can enter their preferences and view recommendations.
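The Data Preprocessing and Feature Engineering steps can be sketched as follows. The sketch makes three assumptions. First, the TMDB CSVs store list-valued fields such as genres and keywords as JSON strings of {"id", "name"} objects; check this against your copy. Second, the column names title, genres and keywords are assumed. Third, a two-row in-memory DataFrame stands in for the real file. The sketch parses those columns into name lists and joins them into one text "soup" per movie, ready for TF-IDF.

```python
import json

import pandas as pd

# Toy stand-in for a TMDB-style CSV: list-valued columns are JSON strings (assumed layout)
movies = pd.DataFrame({
    "title": ["Movie A", "Movie B"],
    "genres": ['[{"id": 28, "name": "Action"}, {"id": 878, "name": "Science Fiction"}]',
               '[{"id": 35, "name": "Comedy"}]'],
    "keywords": ['[{"id": 1, "name": "space"}]', None],
})


def names_from_json(cell):
    """Turn a JSON list of {"id", "name"} objects into a list of names; missing -> []."""
    if not isinstance(cell, str):
        return []
    return [item["name"] for item in json.loads(cell)]


def build_soup(row):
    """Join genre and keyword names into one text field. Spaces inside a name are
    removed ('Science Fiction' -> 'ScienceFiction') so each name stays one token."""
    tokens = [name.replace(" ", "") for name in row["genres"] + row["keywords"]]
    return " ".join(tokens)


for col in ["genres", "keywords"]:
    movies[col] = movies[col].apply(names_from_json)
movies["soup"] = movies.apply(build_soup, axis=1)
print(movies[["title", "soup"]])
```

The soup column can then be fed to TfidfVectorizer exactly like the description columns used later in this post.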

Overall flow

    from flask import Flask, request, render_template
    import pandas as pd

    # Assume recommendation_function is a function that takes user input and returns recommendations
    from recommendation_system import recommendation_function

    app = Flask(__name__)

    @app.route('/', methods=['GET', 'POST'])
    def recommend():
        if request.method == 'POST':
            user_input = request.form['user_input']
            recommendations = recommendation_function(user_input)
            return render_template('recommendations.html', recommendations=recommendations)
        return render_template('index.html')

    if __name__ == '__main__':
        app.run(debug=True)

- This code snippet outlines a basic Flask application setup. The actual implementation of recommendation_function would depend on the specifics of the recommendation logic, which involves data preprocessing, feature engineering, and model training as described in the general steps.

- recommendation_function is supplied by the specific recommendation logic.

- It runs through all of the preceding steps.
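The Flask skeleton imports recommendation_function from a module named recommendation_system, which the post only assumes exists. Below is a minimal sketch of what such a module could contain, assuming content-based TF-IDF plus cosine similarity over the movie titles. The catalogue, its titles and "soup" texts, the top_n parameter, and returning an empty list for unknown titles are all hypothetical choices.

```python
import pandas as pd
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity

# Hypothetical catalogue; a real module would build this from the CSV metadata
CATALOGUE = pd.DataFrame({
    "title": ["Space Wars", "Galaxy Quest", "Love in Paris", "Wedding Crashers"],
    "soup": ["scifi space battle", "scifi space comedy",
             "romance paris", "comedy romance wedding"],
})

# Fit once at import time so each request only does a lookup
_vectorizer = TfidfVectorizer()
_matrix = _vectorizer.fit_transform(CATALOGUE["soup"])
_similarity = cosine_similarity(_matrix)
_title_to_pos = {title.lower(): pos for pos, title in enumerate(CATALOGUE["title"])}


def recommendation_function(user_input, top_n=2):
    """Return up to top_n titles most similar to the movie named in user_input."""
    pos = _title_to_pos.get(user_input.strip().lower())
    if pos is None:
        return []  # unknown title: the template can show an empty list
    # Skip the movie itself and anything with no overlapping terms at all
    candidates = [p for p in range(len(CATALOGUE)) if p != pos and _similarity[pos, p] > 0]
    ranked = sorted(candidates, key=lambda p: _similarity[pos, p], reverse=True)
    return [CATALOGUE["title"].iloc[p] for p in ranked[:top_n]]


print(recommendation_function("Space Wars"))  # ['Galaxy Quest']
```

Fitting at import time keeps each request cheap. The cost is that the catalogue is fixed until the Flask process restarts.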

Use one row (position 2) as the input and find similar rows

    import pandas as pd
    from sklearn.feature_extraction.text import TfidfVectorizer
    from sklearn.metrics.pairwise import cosine_similarity
    # Note: pd.read_excel needs the openpyxl package installed for .xlsx files (no import required)

    def load_and_preprocess_data(filepath):
        data = pd.read_excel(filepath)
        # Skip the first data row and keep columns by position:
        # 2 = 'Unnamed: 2', 4 = '格式', 5 = '公共标签', 19 = 'Unnamed: 19'
        # (position 6 is '分类标签', which the rename below does not cover)
        data_cleaned = data.iloc[1:, [2, 4, 5, 19]].rename(columns={
            'Unnamed: 2': 'Resource Name',
            '格式': 'Format',
            '公共标签': 'Public Tags',
            'Unnamed: 19': 'Description'
        })
        # Drop rows with missing descriptions
        data_cleaned = data_cleaned.dropna(subset=['Description'])
        return data_cleaned

    def create_similarity_matrix(data_cleaned):
        # Initialize TF-IDF Vectorizer
        tfidf_vectorizer = TfidfVectorizer(stop_words='english')
        # Generate TF-IDF vectors for resource descriptions
        tfidf_matrix = tfidf_vectorizer.fit_transform(data_cleaned['Description'])
        # Compute the pairwise cosine similarity matrix (n_rows x n_rows)
        cosine_sim_matrix = cosine_similarity(tfidf_matrix)
        return cosine_sim_matrix

    def recommend_materials(cosine_sim_matrix, data_cleaned, base_index, num_recommendations=5):
        # Pair each row position with its similarity to the input row, highest first
        similarity_scores = list(enumerate(cosine_sim_matrix[base_index]))
        similarity_scores = sorted(similarity_scores, key=lambda x: x[1], reverse=True)
        # Exclude the input row explicitly: rows with identical descriptions tie at 1.0,
        # so the input row is not guaranteed to come first after sorting
        similarity_scores = [(i, s) for i, s in similarity_scores if i != base_index]
        most_similar_materials = similarity_scores[:num_recommendations]
        # Print recommended materials
        print("Recommended Materials:")
        for i, score in most_similar_materials:
            print(f"{data_cleaned.iloc[i]['Resource Name']} - Similarity Score: {score:.2f}")

    def main():
        # Load and preprocess data
        filepath = 'D:\\XTRANS\\cuda\\03-graph-db\\RoleCamera.xlsx'  # Update this to your file path
        data_cleaned = load_and_preprocess_data(filepath)
        # Create similarity matrix
        cosine_sim_matrix = create_similarity_matrix(data_cleaned)
        # Recommend materials for the row at position 2 (0-based, after cleaning)
        recommend_materials(cosine_sim_matrix, data_cleaned, 2)

    if __name__ == "__main__":
        main()
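Skipping the first entry after sorting assumes the input row always sorts first. In this dataset several resources share exactly the same description (for example 展示角色与环境关系,突出展示人物姿态 appears on more than one row). Those rows tie at a similarity of 1.0, and a stable sort keeps them in their original order. The sketch below contrasts the two approaches on a made-up similarity matrix. The helper name top_k_excluding_self is hypothetical.

```python
import numpy as np


def top_k_excluding_self(sim_matrix, base_index, k):
    """Return (position, score) pairs for the k rows most similar to base_index,
    never including base_index itself, even when other rows tie at 1.0."""
    scores = np.asarray(sim_matrix[base_index], dtype=float).copy()
    scores[base_index] = -np.inf  # remove the input row from the ranking
    order = np.argsort(-scores, kind="stable")[:k]
    return [(int(i), float(scores[i])) for i in order]


# Made-up similarities: rows 0, 1 and 2 have identical descriptions
sim = np.array([
    [1.0, 1.0, 1.0, 0.2],
    [1.0, 1.0, 1.0, 0.3],
    [1.0, 1.0, 1.0, 0.1],
    [0.2, 0.3, 0.1, 1.0],
])

# "Sort, then skip the first entry": for row 1, row 0 sorts first and is the one skipped
naive = sorted(enumerate(sim[1]), key=lambda x: x[1], reverse=True)[1:3]
print([i for i, _ in naive])            # [1, 2]: row 1 recommends itself
print(top_k_excluding_self(sim, 1, 2))  # [(0, 1.0), (2, 1.0)]
```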

Print the content of the input row

    def recommend_materials(cosine_sim_matrix, data_cleaned, base_index, num_recommendations=5):
        # Print the content of the material at the given position
        print(f"Content at index {base_index}:")
        print(f"Resource Name: {data_cleaned.iloc[base_index]['Resource Name']}")
        print(f"Description: {data_cleaned.iloc[base_index]['Description']}\n")
        # Get similarity scores, highest first
        similarity_scores = list(enumerate(cosine_sim_matrix[base_index]))
        similarity_scores = sorted(similarity_scores, key=lambda x: x[1], reverse=True)
        # Get the most similar materials, excluding the input row itself explicitly
        # (rows with identical descriptions tie at 1.0, so it is not always first)
        most_similar_materials = [(i, s) for i, s in similarity_scores if i != base_index][:num_recommendations]
        # Print recommended materials
        print("Recommended Materials:")
        for i, score in most_similar_materials:
            print(f"{data_cleaned.iloc[i]['Resource Name']} - Similarity Score: {score:.2f}")

Print the index as well

    def recommend_materials(cosine_sim_matrix, data_cleaned, base_index, num_recommendations=5):
        # Show the input row first
        print(f"Content at index {base_index}:")
        print(f"Resource Name: {data_cleaned.iloc[base_index]['Resource Name']}")
        print(f"Description: {data_cleaned.iloc[base_index]['Description']}\n")
        # Rank all rows by similarity to the input row, highest first
        similarity_scores = list(enumerate(cosine_sim_matrix[base_index]))
        similarity_scores = sorted(similarity_scores, key=lambda x: x[1], reverse=True)
        # Exclude the input row explicitly instead of assuming it sorts first
        most_similar_materials = [(i, s) for i, s in similarity_scores if i != base_index][:num_recommendations]
        print("Recommended Materials:")
        for i, score in most_similar_materials:
            resource_name = data_cleaned.iloc[i]['Resource Name']
            description = data_cleaned.iloc[i]['Description']
            # i is the row position within data_cleaned (and the similarity matrix)
            print(f"Index: {i} - Resource Name: {resource_name} - Description: {description} - Similarity Score: {score:.2f}")
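The Index value printed above is a position inside data_cleaned, matching the rows of the similarity matrix. It is not the DataFrame's row label. Because iloc[1:] and dropna remove rows, positions and labels drift apart. The toy frame below is made up to mimic that cleaning. The sketch shows how to recover the label with data_cleaned.index[i].

```python
import pandas as pd

# Toy frame mimicking data_cleaned: the first data row is skipped (iloc[1:])
# and a row with a missing description is dropped, so labels are no longer 0..n-1
raw = pd.DataFrame({
    "Resource Name": ["skipped row", "shot A", "shot B", "shot C"],
    "Description": ["x", "push in", None, "orbit"],
})
data_cleaned = raw.iloc[1:].dropna(subset=["Description"])

for pos in range(len(data_cleaned)):
    label = data_cleaned.index[pos]  # original row label in the DataFrame
    name = data_cleaned.iloc[pos]["Resource Name"]
    print(pos, label, name)
# 0 1 shot A
# 1 3 shot C
```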

Computing similarity from tags and descriptions together

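- To compute similarity from tags and descriptions together, merge them into a single text field and vectorize the merged text with a TF-IDF vectorizer. The similarity calculation then accounts for the public tags and category tags as well as the resource description. The implementation steps and the complete Python script follow.

Implementation steps

Load and preprocess the data:

Load the data and clean up the column names.

Merge the resource description, public tags, and category tags into a single text field.

Create the TF-IDF matrix:

Vectorize the merged text with TfidfVectorizer to produce the TF-IDF matrix.

Compute similarity:

Compute the cosine similarity between the user's input description and every resource.

Recommendation:

Recommend the most similar materials based on the computed similarity.

Explanation

- Load and preprocess the data:

Print the column names to confirm what they actually are.

Strip whitespace from the column names.

Rename the relevant columns to standardized names.

Merge the relevant columns (resource description, public tags, and category tags) into a combined text column.

- Create the TF-IDF matrix:

Initialize the TF-IDF vectorizer and generate the TF-IDF matrix.

- Recommendation:

Print the user's input description.

Transform the user's input description into a TF-IDF vector.

Compute the cosine similarity between the user's description and all resource descriptions.

- Recommend the most similar materials based on the similarity scores and print the results.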

Test with the code below. If problems remain, collect the error details for further troubleshooting.

    import pandas as pd
    from sklearn.feature_extraction.text import TfidfVectorizer
    from sklearn.metrics.pairwise import cosine_similarity

    def load_and_preprocess_data(filepath):
        print("Loading data from file...")
        data = pd.read_excel(filepath)
        # Check the actual column names
        print("Columns in the dataset:", data.columns)
        # Skip the first data row and strip whitespace from column names
        data_cleaned = data.iloc[1:, :].rename(columns=lambda x: x.strip())
        # Give the relevant columns standardized names
        data_cleaned = data_cleaned.rename(columns={
            'Unnamed: 2': 'Resource Name',
            '格式': 'Format',
            '公共标签': 'Public Tags',
            'Unnamed: 19': 'Description'
        })
        # Drop rows with missing descriptions
        data_cleaned = data_cleaned.dropna(subset=['Description'])
        # Combine description, public tags and category tags into a single text column
        data_cleaned['Combined'] = data_cleaned['Description'].astype(str) + ' ' + \
            data_cleaned['Public Tags'].astype(str) + ' ' + \
            data_cleaned['分类标签'].astype(str)
        print("Data loaded and preprocessed successfully.")
        print("Sample data:")
        print(data_cleaned.head())
        return data_cleaned

    def create_similarity_matrix(data_cleaned):
        print("Creating TF-IDF matrix...")
        # Initialize TF-IDF Vectorizer
        tfidf_vectorizer = TfidfVectorizer(stop_words='english')
        # Generate TF-IDF vectors for combined text
        tfidf_matrix = tfidf_vectorizer.fit_transform(data_cleaned['Combined'])
        print("TF-IDF matrix created successfully.")
        return tfidf_vectorizer, tfidf_matrix

    def recommend_materials_based_on_description(tfidf_vectorizer, tfidf_matrix, data_cleaned, user_description, num_recommendations=5):
        # Pad the description; the user input has no tags to append
        combined_user_input = user_description + ' '
        print(f"User input description: {combined_user_input}")
        # Transform the user input description into a TF-IDF vector
        user_tfidf_vector = tfidf_vectorizer.transform([combined_user_input])
        # Compute cosine similarity between user description and all resource descriptions
        cosine_similarities = cosine_similarity(user_tfidf_vector, tfidf_matrix).flatten()
        # Rank all resources by similarity, highest first
        similarity_scores = list(enumerate(cosine_similarities))
        similarity_scores = sorted(similarity_scores, key=lambda x: x[1], reverse=True)
        most_similar_materials = similarity_scores[:num_recommendations]
        # Print recommended materials
        print("Recommended Materials:")
        for i, score in most_similar_materials:
            print(f"Resource Name: {data_cleaned.iloc[i]['Resource Name']} - Similarity Score: {score:.2f}")

    def main():
        # Load and preprocess data
        filepath = 'D:\\XTRANS\\cuda\\03-graph-db\\RoleCamera.xlsx'  # Update this to your file path if needed
        data_cleaned = load_and_preprocess_data(filepath)
        # Create similarity matrix
        tfidf_vectorizer, tfidf_matrix = create_similarity_matrix(data_cleaned)
        # User input description
        user_description = "描述一个角色在环境中的动态镜头"  # Replace with actual user input
        # Recommend materials based on user description
        recommend_materials_based_on_description(tfidf_vectorizer, tfidf_matrix, data_cleaned, user_description)

    if __name__ == "__main__":
        main()
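The script above builds Combined with astype(str). For cells that pandas reads as missing (NaN), astype(str) produces the literal string "nan". That string then becomes a vocabulary term shared by every row with a missing tag. Below is a minimal sketch of a NaN-safe combination. The helper name combine_text_columns is hypothetical. The toy rows are adapted from the sample output in this post, with one tag blanked out.

```python
import numpy as np
import pandas as pd


def combine_text_columns(df, columns):
    """Join several text columns into one string per row, treating missing values as empty."""
    parts = df[columns].fillna("").astype(str)
    return parts.apply(lambda row: " ".join(v for v in row if v.strip()), axis=1)


df = pd.DataFrame({
    "Description": ["突出角色", "高潮部分"],
    "Public Tags": ["ZYK", np.nan],
    "分类标签": ["资源类型", "资源类型"],
})

naive = df["Description"].astype(str) + " " + df["Public Tags"].astype(str) + " " + df["分类标签"].astype(str)
print(naive.tolist())  # the missing tag becomes the literal token "nan"
print(combine_text_columns(df, ["Description", "Public Tags", "分类标签"]).tolist())
```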

All the scores are 0:

    D:\Users\zhangbin\anaconda3\python.exe D:\XTRANS\cuda\03-graph-db\04-cmkg\zhb_learn\多维度2.py
    Loading data from file...
    Columns in the dataset: Index(['Unnamed: 0', 'Unnamed: 1', 'Unnamed: 2', 'Unnamed: 3', '格式', '公共标签',
    '分类标签', 'Unnamed: 7', 'Unnamed: 8', 'Unnamed: 9', 'Unnamed: 10',
    'Unnamed: 11', 'Unnamed: 12', '筛选标签', 'Unnamed: 14', 'Unnamed: 15',
    '特殊标签', 'Unnamed: 17', 'Unnamed: 18', 'Unnamed: 19', 'Unnamed: 20',
    'Unnamed: 21', 'Unnamed: 22', 'Unnamed: 23', 'Unnamed: 24',
    'Unnamed: 25', 'Unnamed: 26', 'Unnamed: 27', 'Unnamed: 28'],
    dtype='object')
    Data loaded and preprocessed successfully.
    Sample data:
    Unnamed: 0 ... Combined
    2 c356b439-d6be-4a6a-b28c-c5c04ef2a98c ... 突出角色 ZYK 资源类型
    3 99bd7474-e62f-4d47-9945-c2775a9965fd ... 高潮部分,时间不宜长留,适合3~5s ZYK 资源类型
    4 cc6d168e-bc95-47f7-8787-b140091ffde6 ... 展示角色与环境关系,突出展示人物姿态 ZYK 资源类型
    5 c89d039d-b455-486e-bf94-bf6eeaf74637 ... 展示角色与环境关系,突出展示人物姿态 ZYK 资源类型
    6 68431200-5ffe-4059-8aca-323ca568fd14 ... 展示角色与环境关系,突出展示人物姿态 ZYK 资源类型
    [5 rows x 30 columns]
    Creating TF-IDF matrix...
    TF-IDF matrix created successfully.
    User input description: 描述一个角色在环境中的动态镜头
    Recommended Materials:
    Resource Name: 正面全身推半身 - Similarity Score: 0.00
    Resource Name: 正面半身推脸特写 - Similarity Score: 0.00
    Resource Name: 左边半身环绕到右边半身 - Similarity Score: 0.00
    Resource Name: 左侧全景向右环拍至正面 - Similarity Score: 0.00
    Resource Name: 右侧大全景仰拍-摇镜 - Similarity Score: 0.00

    Process finished with exit code 0

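Fixing the all-zero scores

- The scores can all be 0 for the following reasons:

The user's description does not match the descriptions in the dataset: it may contain words or phrases that never appear in the dataset, so its TF-IDF vector is all zeros.

TfidfVectorizer does not handle Chinese correctly out of the box: it assumes words are separated by spaces or punctuation, as in English. Its default token_pattern, r"(?u)\b\w\w+\b", treats an unbroken run of Chinese characters as a single token. The whole query 描述一个角色在环境中的动态镜头 therefore becomes one token that is not in the learned vocabulary. Chinese text needs preprocessing such as word segmentation first. stop_words='english' has no effect on Chinese either.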
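This diagnosis can be checked directly by asking the fitted vectorizer how it tokenizes the query. In the sketch below, the corpus strings are taken from the sample output above, and the third string is shortened to one phrase. Only the default settings are used.

```python
from sklearn.feature_extraction.text import TfidfVectorizer

corpus = [
    "展示角色与环境关系,突出展示人物姿态",
    "高潮部分,时间不宜长留,适合3~5s",
    "突出角色",
]
query = "描述一个角色在环境中的动态镜头"

vectorizer = TfidfVectorizer()  # default token_pattern r"(?u)\b\w\w+\b"
vectorizer.fit(corpus)
analyzer = vectorizer.build_analyzer()

# The whole query is a single token, because no spaces or punctuation separate its characters
print(analyzer(query))
# That token is not in the vocabulary, so the query vector is empty and every cosine score is 0
print(vectorizer.transform([query]).nnz)  # 0
```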

Solution

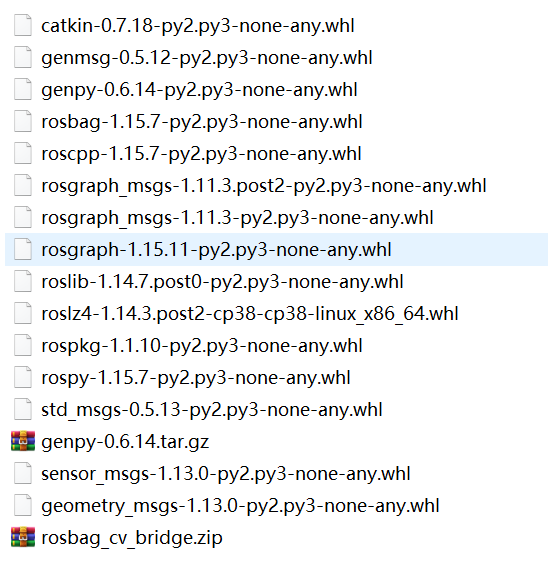

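We need to segment the Chinese text into words so that the vocabulary contains all the necessary terms. A Chinese word-segmentation library such as jieba can tokenize the text before TfidfVectorizer vectorizes it.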

    import pandas as pd
    import jieba
    from sklearn.feature_extraction.text import TfidfVectorizer
    from sklearn.metrics.pairwise import cosine_similarity

    def chinese_tokenizer(text):
        # Segment Chinese text into words with jieba, dropping whitespace-only tokens
        return [token for token in jieba.lcut(text) if token.strip()]

    def load_and_preprocess_data(filepath):
        print("Loading data from file...")
        data = pd.read_excel(filepath)
        # Check the actual column names
        print("Columns in the dataset:", data.columns)
        # Skip the first data row and strip whitespace from column names
        data_cleaned = data.iloc[1:, :].rename(columns=lambda x: x.strip())
        # Give the relevant columns standardized names
        data_cleaned = data_cleaned.rename(columns={
            'Unnamed: 2': 'Resource Name',
            '格式': 'Format',
            '公共标签': 'Public Tags',
            'Unnamed: 19': 'Description'
        })
        # Drop rows with missing descriptions
        data_cleaned = data_cleaned.dropna(subset=['Description'])
        # Combine description, public tags and category tags into one text column;
        # fillna('') keeps missing tags from becoming the literal token "nan"
        data_cleaned['Combined'] = (data_cleaned['Description'].astype(str) + ' '
                                    + data_cleaned['Public Tags'].fillna('').astype(str) + ' '
                                    + data_cleaned['分类标签'].fillna('').astype(str))
        # Print the combined column to debug
        print("Combined column for TF-IDF processing:")
        print(data_cleaned['Combined'].head())
        print("Data loaded and preprocessed successfully.")
        print("Sample data:")
        print(data_cleaned.head())
        return data_cleaned

    def create_similarity_matrix(data_cleaned):
        print("Creating TF-IDF matrix...")
        # Use the jieba tokenizer instead of the default regex. token_pattern=None avoids the
        # "token_pattern will not be used" warning; stop_words='english' is dropped because
        # English stop words do not apply to Chinese tokens
        tfidf_vectorizer = TfidfVectorizer(tokenizer=chinese_tokenizer, token_pattern=None)
        # Generate TF-IDF vectors for combined text
        tfidf_matrix = tfidf_vectorizer.fit_transform(data_cleaned['Combined'])
        # Print the first rows of the TF-IDF matrix to debug (densify only those rows)
        print("TF-IDF matrix sample values:")
        print(tfidf_matrix[:5].toarray())
        print("TF-IDF matrix created successfully.")
        return tfidf_vectorizer, tfidf_matrix

    def recommend_materials_based_on_description(tfidf_vectorizer, tfidf_matrix, data_cleaned, user_description, num_recommendations=5):
        print(f"User input description: {user_description}")
        # transform (not fit_transform) so the query uses the vocabulary learned from the data
        user_tfidf_vector = tfidf_vectorizer.transform([user_description])
        # Print the user TF-IDF vector to debug
        print("User TF-IDF vector:")
        print(user_tfidf_vector.toarray())
        # Compute cosine similarity between user description and all resource descriptions
        cosine_similarities = cosine_similarity(user_tfidf_vector, tfidf_matrix).flatten()
        # Print the cosine similarity scores to debug
        print("Cosine similarity scores:")
        print(cosine_similarities)
        # Rank all resources by similarity, highest first
        similarity_scores = sorted(enumerate(cosine_similarities), key=lambda x: x[1], reverse=True)
        most_similar_materials = similarity_scores[:num_recommendations]
        # Print recommended materials
        print("Recommended Materials:")
        for i, score in most_similar_materials:
            print(f"Resource Name: {data_cleaned.iloc[i]['Resource Name']} - Similarity Score: {score:.2f}")

    def main():
        # Load and preprocess data
        filepath = 'D:\\XTRANS\\cuda\\03-graph-db\\RoleCamera.xlsx'  # Update this to your file path if needed
        data_cleaned = load_and_preprocess_data(filepath)
        # Create similarity matrix
        tfidf_vectorizer, tfidf_matrix = create_similarity_matrix(data_cleaned)
        # User input description
        user_description = "描述一个角色在环境中的动态镜头"  # Replace with actual user input
        # Recommend materials based on user description
        recommend_materials_based_on_description(tfidf_vectorizer, tfidf_matrix, data_cleaned, user_description)

    if __name__ == "__main__":
        main()

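- Explanation

Chinese segmentation with jieba: the TF-IDF vectorizer uses a custom Chinese tokenizer.

Merging the relevant columns: all relevant columns are merged into the combined text column, which is then segmented.

Checking the user description's TF-IDF vector: the user's description is segmented and vectorized the same way as the data.

This approach handles Chinese text more accurately and therefore improves the accuracy of the similarity calculation. If the problem persists, inspect the debug output for further analysis.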
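jieba is an extra dependency. If it cannot be installed, character n-gram TF-IDF is a swapped-in alternative; it is not the author's method. TfidfVectorizer's analyzer="char" with ngram_range=(1, 2) uses single characters and character bigrams as features, so no word segmenter is needed. The sketch below reuses strings from the sample output and recommendation list above. It only shows that the query now gets a non-zero score where characters overlap.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity

corpus = [
    "展示角色与环境关系,突出展示人物姿态",
    "高潮部分,时间不宜长留,适合3~5s",
    "正面全身推半身",
]
query = "描述一个角色在环境中的动态镜头"

# Features are single characters and character bigrams (e.g. "角色", "环境"),
# so Chinese text needs no word segmentation
vectorizer = TfidfVectorizer(analyzer="char", ngram_range=(1, 2))
matrix = vectorizer.fit_transform(corpus)
scores = cosine_similarity(vectorizer.transform([query]), matrix).flatten()

for text, score in sorted(zip(corpus, scores), key=lambda x: x[1], reverse=True):
    print(f"{score:.2f}  {text}")
```

Character n-grams are cruder than real word segmentation: any shared character counts, even a common one that carries little meaning. Word segmentation with jieba is usually the better choice when it is available.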

User input description: 描述一个角色在环境中的动态镜头