
💡💡💡 All code in this column has been tested and runs successfully 💡💡💡


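This article introduces ASF-YOLO, a one-stage detection framework based on Attentional Scale Sequence Fusion that combines spatial and scale features for accurate, fast cell instance segmentation. Building on the YOLO segmentation framework, it uses a Scale Sequence Feature Fusion (SSFF) module to strengthen multi-scale information extraction and a Triple Feature Encoder (TFE) module to fuse feature maps of different scales and add detail. A Channel and Position Attention Mechanism (CPAM) then integrates the SSFF and TFE outputs, focusing on informative channels and on the spatial positions of small objects to improve detection and segmentation. After covering the main principles, the article walks step by step through adding and modifying the module code. The complete modified code is provided at the end so that it can be run directly, even by beginners, and readers who want more can try the advanced section. The material is meant to help with practical work on YOLO-series object detection.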


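Contents

1. Principle

2. ASF-YOLO code implementation

2.1 Adding ASF-YOLO to YOLOv8

2.2 Modifying the __init__.py file

2.3 Adding the yaml file

2.4 Registering in tasks.py

2.5 Running the program

3. Complete code

4. GFLOPs

5. Going further

6. Summary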

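1. Principle

Paper: ASF-YOLO: A Novel YOLO Model with Attentional Scale Sequence Fusion for Cell Instance Segmentation

Official code: official code repository

ASF-YOLO is an improved model built on the YOLO (You Only Look Once) framework and designed for cell instance segmentation. By combining spatial and scale features it achieves more accurate and faster segmentation. Its main ideas are as follows:

1. Basic structure of the YOLO framework

ASF-YOLO follows the YOLO layout of three parts: backbone, neck, and head. The backbone extracts image features at different granularities, the neck fuses multi-scale features, and the head predicts bounding boxes and generates segmentation masks.

2. Scale Sequence Feature Fusion (SSFF) module

The SSFF module combines global semantic information across scales by normalizing the multi-scale feature maps, upsampling them to a common resolution, and applying a 3D convolution over the stacked scales. This helps with objects of different sizes, orientations, and aspect ratios and improves segmentation performance.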
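As a rough illustration of the SSFF idea, the sketch below upsamples three feature maps to the P3 resolution, stacks them along a new scale axis, and fuses them with a 3D convolution followed by max pooling over that axis. It loosely follows the ScalSeq module given later in this article but leaves out the 1x1 channel alignment, batch normalization, and activation. The function name ssff_fuse and the tensor sizes are assumptions made for this example only.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def ssff_fuse(p3, p4, p5, conv3d, pool):
    """Fuse three same-channel feature maps along a scale axis (simplified sketch)."""
    size = p3.shape[2:]
    # Bring the coarser maps up to the P3 spatial resolution.
    p4 = F.interpolate(p4, size=size, mode="nearest")
    p5 = F.interpolate(p5, size=size, mode="nearest")
    # Stack along a new "scale" dimension: (B, C, 3, H, W).
    stacked = torch.stack([p3, p4, p5], dim=2)
    # The 3D convolution sees the scale axis; pooling then collapses it to size 1.
    fused = pool(conv3d(stacked))
    return fused.squeeze(2)  # back to (B, C, H, W)


channels = 8  # assumed small channel count for the demo
p3 = torch.randn(1, channels, 32, 32)
p4 = torch.randn(1, channels, 16, 16)
p5 = torch.randn(1, channels, 8, 8)
conv3d = nn.Conv3d(channels, channels, kernel_size=1)
pool = nn.MaxPool3d(kernel_size=(3, 1, 1))

fused = ssff_fuse(p3, p4, p5, conv3d, pool)
print(fused.shape)  # torch.Size([1, 8, 32, 32])
```

The output keeps the P3 resolution, which is why the fused map can later be combined with the P3 branch of the head.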

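3. Triple Feature Encoder (TFE) module

The TFE module fuses feature maps at three scales (large, medium, small) to capture fine detail of small objects. Injecting these detailed features into each feature branch strengthens detection of small objects in densely packed cells.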
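The fusion described here can be sketched in a few lines of PyTorch: the large (high-resolution) map is pooled down, the small (low-resolution) map is upsampled, and all three are concatenated at the medium scale. This mirrors the Zoom_cat module listed later in the article; the function name tfe_fuse and the tensor sizes are assumptions for illustration.

```python
import torch
import torch.nn.functional as F


def tfe_fuse(large, medium, small):
    """Bring three feature maps to the medium resolution and concatenate them."""
    size = medium.shape[2:]
    # Downsample the large map; max + average pooling keeps both peaks and context.
    large = F.adaptive_max_pool2d(large, size) + F.adaptive_avg_pool2d(large, size)
    # Upsample the small map with nearest-neighbour interpolation.
    small = F.interpolate(small, size=size, mode="nearest")
    return torch.cat([large, medium, small], dim=1)


channels = 8  # assumed channel count for the demo
large = torch.randn(1, channels, 32, 32)
medium = torch.randn(1, channels, 16, 16)
small = torch.randn(1, channels, 8, 8)

out = tfe_fuse(large, medium, small)
print(out.shape)  # torch.Size([1, 24, 16, 16])
```

Because the three maps are concatenated, the output has three times the channel count, which the following C2f block then reduces.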

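4. Channel and Position Attention Mechanism (CPAM)

CPAM integrates the feature information from SSFF and TFE. It adaptively re-weights relevant channels and spatial positions, which improves detection and segmentation accuracy for small objects.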
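One concrete detail of the channel branch is how it sizes its 1D convolution. In the channel_att code given later in this article, the kernel size is derived from the channel count and forced to be odd. The helper below reproduces that arithmetic on its own; the name channel_att_kernel_size is made up for this example, and math.log2 is used in place of math.log(channel, 2).

```python
import math


def channel_att_kernel_size(channels: int, b: int = 1, gamma: int = 2) -> int:
    """Kernel size grows with log2(channels) and is rounded up to an odd number."""
    k = int(abs((math.log2(channels) + b) / gamma))
    return k if k % 2 else k + 1


for c in (64, 128, 256, 512):
    print(c, channel_att_kernel_size(c))
# 64 3
# 128 5
# 256 5
# 512 5
```

An odd kernel allows symmetric padding of (k - 1) // 2, so the 1D convolution returns one weight per channel.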

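5. Loss function and post-processing

During training ASF-YOLO uses the EIoU (Efficient IoU) loss. Compared with CIoU (Complete IoU), EIoU penalizes width and height differences directly, so it captures the position of small objects better. In post-processing ASF-YOLO uses Soft-NMS (soft non-maximum suppression), which further improves detection of densely overlapping cells.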
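To make the two terms concrete, here is a plain-Python sketch of the commonly cited EIoU formulation (1 - IoU plus center, width, and height penalties) and of Gaussian Soft-NMS. It is not the code used by ASF-YOLO; the function names, the (x1, y1, x2, y2) box format, and the sigma and threshold defaults are assumptions for illustration.

```python
import math


def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def eiou_loss(pred, target, eps=1e-9):
    """1 - IoU + center-distance term + width term + height term."""
    cw = max(pred[2], target[2]) - min(pred[0], target[0])  # enclosing box width
    ch = max(pred[3], target[3]) - min(pred[1], target[1])  # enclosing box height
    dx = ((pred[0] + pred[2]) - (target[0] + target[2])) / 2  # center offset in x
    dy = ((pred[1] + pred[3]) - (target[1] + target[3])) / 2  # center offset in y
    dw = (pred[2] - pred[0]) - (target[2] - target[0])  # width difference
    dh = (pred[3] - pred[1]) - (target[3] - target[1])  # height difference
    return (1 - iou(pred, target)
            + (dx * dx + dy * dy) / (cw * cw + ch * ch + eps)
            + dw * dw / (cw * cw + eps)
            + dh * dh / (ch * ch + eps))


def soft_nms(boxes, scores, sigma=0.5, score_thresh=0.001):
    """Gaussian Soft-NMS: decay scores of overlapping boxes instead of dropping them."""
    remaining = list(zip(boxes, scores))
    kept = []
    while remaining:
        best = max(range(len(remaining)), key=lambda i: remaining[i][1])
        box, score = remaining.pop(best)
        kept.append((box, score))
        decayed = [(b, s * math.exp(-iou(box, b) ** 2 / sigma)) for b, s in remaining]
        remaining = [(b, s) for b, s in decayed if s >= score_thresh]
    return kept


same = eiou_loss((0, 0, 2, 2), (0, 0, 2, 2))     # identical boxes
shifted = eiou_loss((1, 0, 3, 2), (0, 0, 2, 2))  # same size, shifted right
kept = soft_nms([(0, 0, 2, 2), (0.2, 0, 2.2, 2), (5, 5, 6, 6)], [0.9, 0.8, 0.7])
print(same, shifted)
print(kept)
```

In the Soft-NMS call, the heavily overlapping second box is kept with a reduced score rather than discarded, which is the behaviour that helps when cells overlap densely.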

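Conclusion

By introducing the SSFF, TFE, and CPAM modules, ASF-YOLO improves the YOLO framework for cell instance segmentation, particularly for small, dense, and overlapping objects. This makes it a promising option for medical image analysis and cell biology.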

2. ASF-YOLO code implementation

2.1 Adding ASF-YOLO to YOLOv8

Key step 1: paste the code below into /ultralytics/ultralytics/nn/modules/block.py, and add ['Zoom_cat', 'ScalSeq', 'Add', 'channel_att', 'attention_model'] to that file's __all__.

import math

import torch
import torch.nn as nn
import torch.nn.functional as F


def autopad(k, p=None, d=1):  # kernel, padding, dilation
    # Pad to 'same' shape outputs
    if d > 1:
        k = d * (k - 1) + 1 if isinstance(k, int) else [d * (x - 1) + 1 for x in k]  # actual kernel-size
    if p is None:
        p = k // 2 if isinstance(k, int) else [x // 2 for x in k]  # auto-pad
    return p


class Conv(nn.Module):
    # Standard convolution with args(ch_in, ch_out, kernel, stride, padding, groups, dilation, activation)
    default_act = nn.SiLU()  # default activation

    def __init__(self, c1, c2, k=1, s=1, p=None, g=1, d=1, act=True):
        super().__init__()
        self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p, d), groups=g, dilation=d, bias=False)
        self.bn = nn.BatchNorm2d(c2)
        self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

    def forward(self, x):
        return self.act(self.bn(self.conv(x)))

    def forward_fuse(self, x):
        return self.act(self.conv(x))


class Zoom_cat(nn.Module):
    # TFE: resize three feature maps to the medium scale and concatenate them
    def __init__(self):
        super().__init__()
        # self.conv_l_post_down = Conv(in_dim, 2*in_dim, 3, 1, 1)

    def forward(self, x):
        """l, m, s are the large, medium, and small scales; all are merged at the m scale."""
        l, m, s = x[0], x[1], x[2]
        tgt_size = m.shape[2:]
        l = F.adaptive_max_pool2d(l, tgt_size) + F.adaptive_avg_pool2d(l, tgt_size)
        # l = self.conv_l_post_down(l)
        # m = self.conv_m(m)
        # s = self.conv_s_pre_up(s)
        s = F.interpolate(s, m.shape[2:], mode='nearest')
        # s = self.conv_s_post_up(s)
        lms = torch.cat([l, m, s], dim=1)
        return lms


class ScalSeq(nn.Module):
    # SSFF: stack P3, P4, P5 along a scale axis and fuse them with a 3D convolution
    def __init__(self, inc, channel):
        super(ScalSeq, self).__init__()
        self.conv0 = Conv(inc[0], channel, 1)
        self.conv1 = Conv(inc[1], channel, 1)
        self.conv2 = Conv(inc[2], channel, 1)
        self.conv3d = nn.Conv3d(channel, channel, kernel_size=(1, 1, 1))
        self.bn = nn.BatchNorm3d(channel)
        self.act = nn.LeakyReLU(0.1)
        self.pool_3d = nn.MaxPool3d(kernel_size=(3, 1, 1))

    def forward(self, x):
        p3, p4, p5 = x[0], x[1], x[2]
        p3 = self.conv0(p3)
        p4_2 = self.conv1(p4)
        p4_2 = F.interpolate(p4_2, p3.size()[2:], mode='nearest')
        p5_2 = self.conv2(p5)
        p5_2 = F.interpolate(p5_2, p3.size()[2:], mode='nearest')
        p3_3d = torch.unsqueeze(p3, -3)  # (B, C, 1, H, W)
        p4_3d = torch.unsqueeze(p4_2, -3)
        p5_3d = torch.unsqueeze(p5_2, -3)
        combine = torch.cat([p3_3d, p4_3d, p5_3d], dim=2)  # (B, C, 3, H, W)
        conv_3d = self.conv3d(combine)
        bn = self.bn(conv_3d)
        act = self.act(bn)
        x = self.pool_3d(act)  # pool over the scale axis -> (B, C, 1, H, W)
        x = torch.squeeze(x, 2)
        return x


class Add(nn.Module):
    # Element-wise sum of two feature maps
    def __init__(self, ch=256):
        super().__init__()

    def forward(self, x):
        input1, input2 = x[0], x[1]
        x = input1 + input2
        return x


class channel_att(nn.Module):
    # Channel attention: 1D convolution over globally pooled channel descriptors
    def __init__(self, channel, b=1, gamma=2):
        super(channel_att, self).__init__()
        kernel_size = int(abs((math.log(channel, 2) + b) / gamma))
        kernel_size = kernel_size if kernel_size % 2 else kernel_size + 1
        self.avg_pool = nn.AdaptiveAvgPool2d(1)
        self.conv = nn.Conv1d(1, 1, kernel_size=kernel_size, padding=(kernel_size - 1) // 2, bias=False)
        self.sigmoid = nn.Sigmoid()

    def forward(self, x):
        y = self.avg_pool(x)
        y = y.squeeze(-1)
        y = y.transpose(-1, -2)
        y = self.conv(y).transpose(-1, -2).unsqueeze(-1)
        y = self.sigmoid(y)
        return x * y.expand_as(x)


class local_att(nn.Module):
    # Position attention: separate gates along the height and width axes
    def __init__(self, channel, reduction=16):
        super(local_att, self).__init__()
        self.conv_1x1 = nn.Conv2d(in_channels=channel, out_channels=channel // reduction, kernel_size=1, stride=1,
                                  bias=False)
        self.relu = nn.ReLU()
        self.bn = nn.BatchNorm2d(channel // reduction)
        self.F_h = nn.Conv2d(in_channels=channel // reduction, out_channels=channel, kernel_size=1, stride=1,
                             bias=False)
        self.F_w = nn.Conv2d(in_channels=channel // reduction, out_channels=channel, kernel_size=1, stride=1,
                             bias=False)
        self.sigmoid_h = nn.Sigmoid()
        self.sigmoid_w = nn.Sigmoid()

    def forward(self, x):
        _, _, h, w = x.size()
        x_h = torch.mean(x, dim=3, keepdim=True).permute(0, 1, 3, 2)
        x_w = torch.mean(x, dim=2, keepdim=True)
        x_cat_conv_relu = self.relu(self.bn(self.conv_1x1(torch.cat((x_h, x_w), 3))))
        x_cat_conv_split_h, x_cat_conv_split_w = x_cat_conv_relu.split([h, w], 3)
        s_h = self.sigmoid_h(self.F_h(x_cat_conv_split_h.permute(0, 1, 3, 2)))
        s_w = self.sigmoid_w(self.F_w(x_cat_conv_split_w))
        out = x * s_h.expand_as(x) * s_w.expand_as(x)
        return out


class attention_model(nn.Module):
    # CPAM: channel attention on input1, add input2, then position attention
    def __init__(self, ch=256):
        super().__init__()
        self.channel_att = channel_att(ch)
        self.local_att = local_att(ch)

    def forward(self, x):
        input1, input2 = x[0], x[1]
        input1 = self.channel_att(input1)
        x = input1 + input2
        x = self.local_att(x)
        return x
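To see what the channel-attention path does to tensor shapes, the steps of channel_att.forward can be traced on a random input. The snippet below re-implements those steps inline so that it runs without the Ultralytics package; the batch size, channel count, and kernel size of 3 are assumptions chosen for the demo.

```python
import torch
import torch.nn as nn

x = torch.randn(2, 64, 20, 20)  # (B, C, H, W)
conv = nn.Conv1d(1, 1, kernel_size=3, padding=1, bias=False)

y = nn.AdaptiveAvgPool2d(1)(x)         # (B, C, 1, 1): one average per channel
y = y.squeeze(-1).transpose(-1, -2)    # (B, 1, C): channels become a 1D sequence
y = conv(y)                            # (B, 1, C): mixes neighbouring channels
y = y.transpose(-1, -2).unsqueeze(-1)  # (B, C, 1, 1)
weights = torch.sigmoid(y)             # per-channel gate between 0 and 1
out = x * weights.expand_as(x)         # re-weighted features, same shape as x

print(weights.shape, out.shape)
# torch.Size([2, 64, 1, 1]) torch.Size([2, 64, 20, 20])
```

The gate has one value per channel and is broadcast over all spatial positions, so the module changes channel emphasis without changing the feature-map size.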

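2.2 Modifying the __init__.py file

Key step 2: edit the __init__.py file in the modules folder. First import the new classes, that is, add Zoom_cat, ScalSeq, Add, channel_att, and attention_model to the existing from .block import (...) statement.

Then declare the same names in the __all__ below it.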

2.3 Adding the yaml file

Key step 3: create a new file named yolov8_ASF.yaml under /ultralytics/ultralytics/cfg/models/v8 and paste in the content below.

- Improvement 1

# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters
nc: 80 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'
  # [depth, width, max_channels]
  n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs
  s: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPs
  m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs
  l: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPs
  x: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs

# YOLOv8.0n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
  - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
  - [-1, 3, C2f, [128, True]]
  - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
  - [-1, 6, C2f, [256, True]]
  - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16
  - [-1, 6, C2f, [512, True]]
  - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32
  - [-1, 3, C2f, [1024, True]]
  - [-1, 1, SPPF, [1024, 5]] # 9

# YOLOv8.0n head
head:
  - [-1, 1, Conv, [512, 1, 1]] # 10
  - [4, 1, Conv, [512, 1, 1]] # 11
  - [[-1, 6, -2], 1, Zoom_cat, []] # 12 cat backbone P4
  - [-1, 3, C2f, [512]] # 13

  - [-1, 1, Conv, [256, 1, 1]] # 14
  - [2, 1, Conv, [256, 1, 1]] # 15
  - [[-1, 4, -2], 1, Zoom_cat, []] # 16 cat backbone P3
  - [-1, 3, C2f, [256]] # 17 (P3/8-small)

  - [-1, 1, Conv, [256, 3, 2]] # 18
  - [[-1, 14], 1, Concat, [1]] # 19 cat head P4
  - [-1, 3, C2f, [512]] # 20 (P4/16-medium)

  - [-1, 1, Conv, [512, 3, 2]] # 21
  - [[-1, 10], 1, Concat, [1]] # 22 cat head P5
  - [-1, 3, C2f, [1024]] # 23 (P5/32-large)

  - [[4, 6, 8], 1, ScalSeq, [256]] # 24 SSFF over backbone P3, P4, P5
  - [[17, -1], 1, Add, [64]] # 25

  - [[25, 20, 23], 1, Detect, [nc]] # Detect(P3, P4, P5)

- Improvement 2

# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters
nc: 80 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'
  # [depth, width, max_channels]
  n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs
  s: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPs
  m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs
  l: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPs
  x: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs

# YOLOv8.0n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
  - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
  - [-1, 3, C2f, [128, True]]
  - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
  - [-1, 6, C2f, [256, True]]
  - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16
  - [-1, 6, C2f, [512, True]]
  - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32
  - [-1, 3, C2f, [1024, True]]
  - [-1, 1, SPPF, [1024, 5]] # 9

# YOLOv8.0n head
head:
  - [-1, 1, Conv, [512, 1, 1]] # 10
  - [4, 1, Conv, [512, 1, 1]] # 11
  - [[-1, 6, -2], 1, Zoom_cat, []] # 12 cat backbone P4
  - [-1, 3, C2f, [512]] # 13

  - [-1, 1, Conv, [256, 1, 1]] # 14
  - [2, 1, Conv, [256, 1, 1]] # 15
  - [[-1, 4, -2], 1, Zoom_cat, []] # 16 cat backbone P3
  - [-1, 3, C2f, [256]] # 17 (P3/8-small)

  - [-1, 1, Conv, [256, 3, 2]] # 18
  - [[-1, 14], 1, Concat, [1]] # 19 cat head P4
  - [-1, 3, C2f, [512]] # 20 (P4/16-medium)

  - [-1, 1, Conv, [512, 3, 2]] # 21
  - [[-1, 10], 1, Concat, [1]] # 22 cat head P5
  - [-1, 3, C2f, [1024]] # 23 (P5/32-large)

  - [[4, 6, 8], 1, ScalSeq, [256]] # 24 SSFF over backbone P3, P4, P5
  - [[17, -1], 1, attention_model, [256]] # 25

  - [[25, 20, 23], 1, Detect, [nc]] # Detect(P3, P4, P5)

Note: this article only adds the modules on top of yolov8. To apply them to yolov8n/l/m/x, you only need to specify the corresponding depth_multiple and width_multiple.

The values below are the depth and width entries of the matching rows of the scales block in the yaml above.

# YOLOv8n
depth_multiple: 0.33 # model depth multiple
width_multiple: 0.25 # layer channel multiple

# YOLOv8s
depth_multiple: 0.33 # model depth multiple
width_multiple: 0.50 # layer channel multiple

# YOLOv8l
depth_multiple: 1.0 # model depth multiple
width_multiple: 1.0 # layer channel multiple

# YOLOv8m
depth_multiple: 0.67 # model depth multiple
width_multiple: 0.75 # layer channel multiple

# YOLOv8x
depth_multiple: 1.00 # model depth multiple (1.00 in the scales block above, not 1.33)
width_multiple: 1.25 # layer channel multiple

2.4 Registering in tasks.py
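As background for the registration step in this section, it can help to see how the depth and width multiples listed above turn into the repeat counts and channel widths that parse_model works with. The sketch below re-implements that arithmetic outside the library. The helper names are written for this example, and the rounding rules are an assumption about how Ultralytics scales models, checked here only against the n-scale run output shown in section 2.5.

```python
import math


def make_divisible(x, divisor=8):
    """Round x up to the nearest multiple of divisor."""
    return math.ceil(x / divisor) * divisor


def scaled_channels(c2, width, max_channels):
    """Channel count after applying the width multiple and the channel cap."""
    return make_divisible(min(c2, max_channels) * width, 8)


def scaled_repeats(n, depth):
    """Repeat count after applying the depth multiple (never below 1)."""
    return max(round(n * depth), 1) if n > 1 else n


depth, width, max_channels = 0.33, 0.25, 1024  # scale "n"

print(scaled_channels(64, width, max_channels))    # 16, layer 0 Conv
print(scaled_channels(1024, width, max_channels))  # 256, layer 7 Conv
print(scaled_repeats(6, depth))                    # 2, layer 4 C2f
print(scaled_repeats(3, depth))                    # 1, layer 2 C2f

# Zoom_cat concatenates its three inputs, so its output width is their sum.
zoom_cat_12 = sum(scaled_channels(512, width, max_channels) for _ in range(3))
print(zoom_cat_12)                                 # 384, input width of layer 13

# ScalSeq output width as computed in its parse_model branch.
scalseq_out = make_divisible(256 * width, 8)
print(scalseq_out)                                 # 64
```

These numbers agree with the argument column of the run output in section 2.5, for example [3, 16, 3, 2] for layer 0 and [384, 128, 1] for layer 13.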

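Key step 4: register the modules in the parse_model function of tasks.py. The new classes also have to be imported at the top of tasks.py (in its from ultralytics.nn.modules import (...) statement) so that the names used in the branches below resolve.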

elif m is Zoom_cat:

c2 = sum(ch[x] for x in f)

elif m is Add:

c2 = ch[f[-1]]

elif m is ScalSeq:

c1 = [ch[x] for x in f]

c2 = make_divisible(args[0] * width, 8)

args = [c1, c2]

elif m is attention_model:

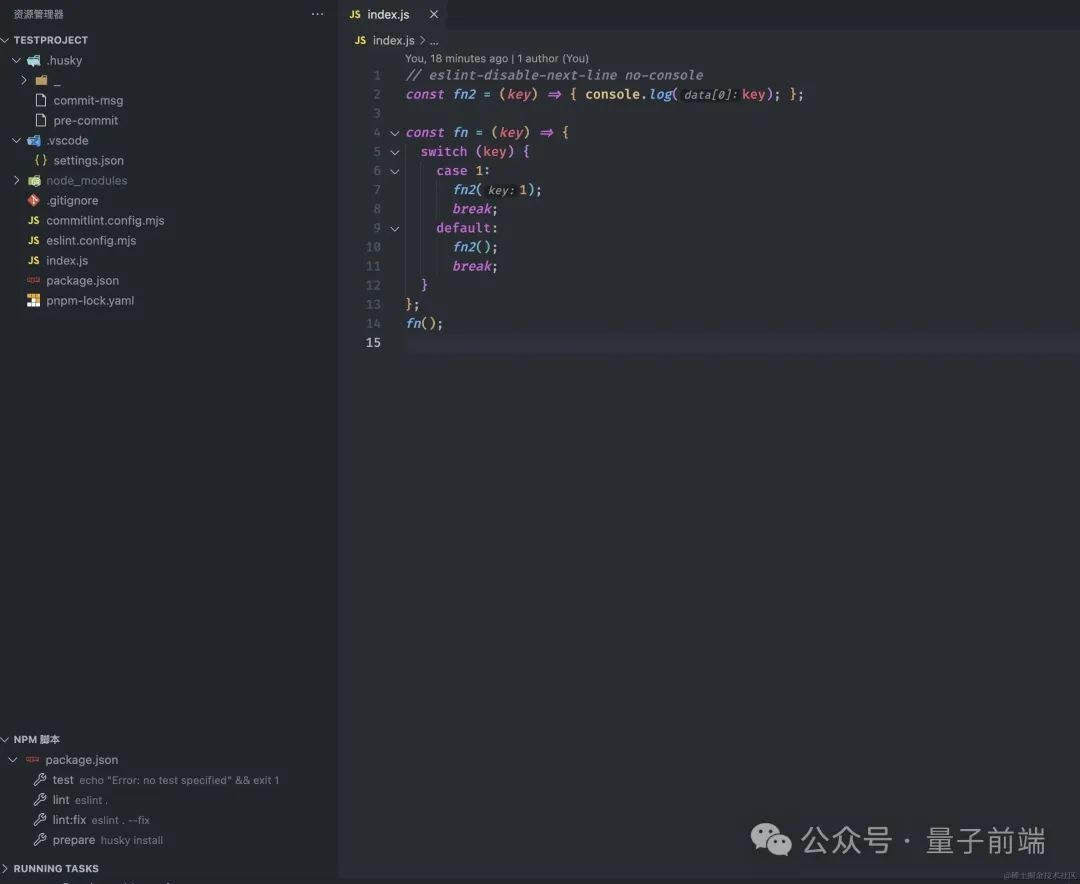

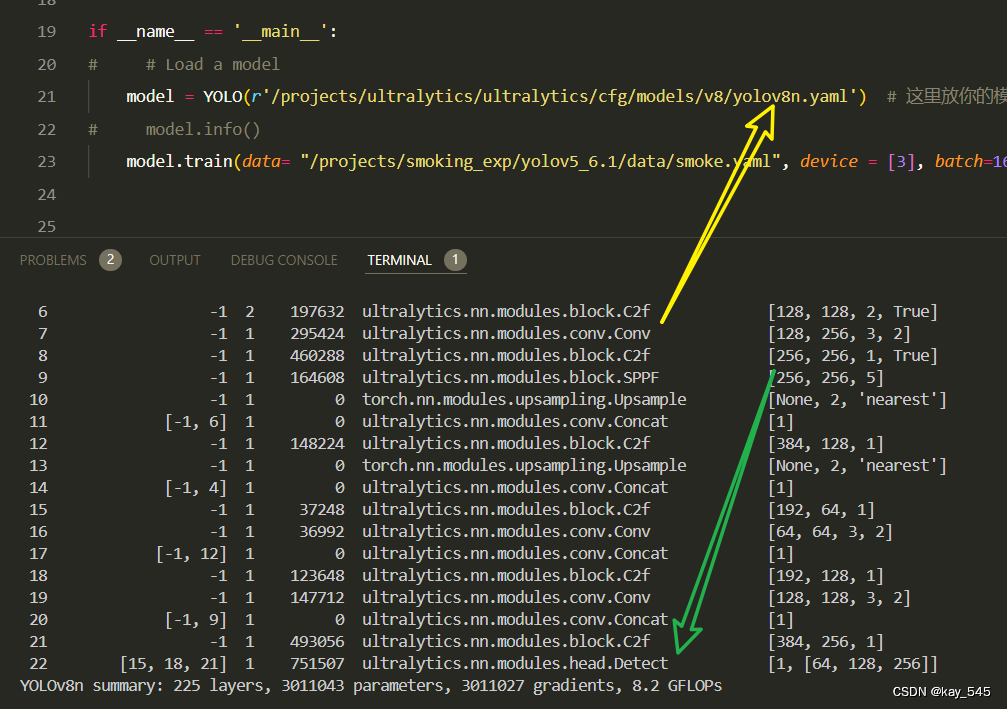

args = [ch[f[-1]]]2.5 执行程序

Key step 5: create train.py in the ultralytics folder and set the model path to the path of yolov8_ASF.yaml.

Using an absolute path is recommended so that the file is always found.

from ultralytics import YOLO

# Load a model
# model = YOLO('yolov8n.yaml')  # build a new model from YAML
# model = YOLO('yolov8n.pt')  # load a pretrained model (recommended for training)
model = YOLO(r'/projects/ultralytics/ultralytics/cfg/models/v8/yolov8_ASF.yaml')  # build the ASF model from YAML

# Train the model (add data=<path to your dataset yaml> to train on your own dataset)
model.train(batch=16)

🚀 Run the program. If output like the following appears, the modules were added successfully 🚀

             from  n   params  module                                  arguments
  0            -1  1      464  ultralytics.nn.modules.conv.Conv        [3, 16, 3, 2]
  1            -1  1     4672  ultralytics.nn.modules.conv.Conv        [16, 32, 3, 2]
  2            -1  1     7360  ultralytics.nn.modules.block.C2f        [32, 32, 1, True]
  3            -1  1    18560  ultralytics.nn.modules.conv.Conv        [32, 64, 3, 2]
  4            -1  2    49664  ultralytics.nn.modules.block.C2f        [64, 64, 2, True]
  5            -1  1    73984  ultralytics.nn.modules.conv.Conv        [64, 128, 3, 2]
  6            -1  2   197632  ultralytics.nn.modules.block.C2f        [128, 128, 2, True]
  7            -1  1   295424  ultralytics.nn.modules.conv.Conv        [128, 256, 3, 2]
  8            -1  1   460288  ultralytics.nn.modules.block.C2f        [256, 256, 1, True]
  9            -1  1   164608  ultralytics.nn.modules.block.SPPF       [256, 256, 5]
 10            -1  1    33024  ultralytics.nn.modules.conv.Conv        [256, 128, 1, 1]
 11             4  1     8448  ultralytics.nn.modules.conv.Conv        [64, 128, 1, 1]
 12   [-1, 6, -2]  1        0  ultralytics.nn.modules.block.Zoom_cat   []
 13            -1  1   148224  ultralytics.nn.modules.block.C2f        [384, 128, 1]
 14            -1  1     8320  ultralytics.nn.modules.conv.Conv        [128, 64, 1, 1]
 15             2  1     2176  ultralytics.nn.modules.conv.Conv        [32, 64, 1, 1]
 16   [-1, 4, -2]  1        0  ultralytics.nn.modules.block.Zoom_cat   []
 17            -1  1    37248  ultralytics.nn.modules.block.C2f        [192, 64, 1]
 18            -1  1    36992  ultralytics.nn.modules.conv.Conv        [64, 64, 3, 2]
 19      [-1, 14]  1        0  ultralytics.nn.modules.conv.Concat      [1]
 20            -1  1   115456  ultralytics.nn.modules.block.C2f        [128, 128, 1]
 21            -1  1   147712  ultralytics.nn.modules.conv.Conv        [128, 128, 3, 2]
 22      [-1, 10]  1        0  ultralytics.nn.modules.conv.Concat      [1]
 23            -1  1   460288  ultralytics.nn.modules.block.C2f        [256, 256, 1]
 24     [4, 6, 8]  1    33344  ultralytics.nn.modules.block.ScalSeq    [[64, 128, 256], 64]
 25      [17, -1]  1        0  ultralytics.nn.modules.block.Add        [64]
 26  [25, 20, 23]  1   751507  ultralytics.nn.modules.head.Detect      [1, [64, 128, 256]]

YOLOv8_ASF summary: 252 layers, 3,055,395 parameters, 3,055,379 gradients, 8.7 GFLOPs

3. Complete code

https://pan.baidu.com/s/12QFr3ntMIYv7Ni88AwAtRA?pwd=c8k6 (extraction code: c8k6)

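4. GFLOPs

For how GFLOPs are calculated, see: 100 Interview Questions for Algorithm Engineers | Convolution Basics (Convolution)

GFLOPs of the unmodified YOLOv8n: 8.9 according to the scale comment in the yaml above (nc=80)

GFLOPs after the improvement: 8.7 according to the model summary printed in section 2.5 (that run used nc=1)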
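As a small worked example of where such numbers come from, the parameter count and approximate cost of a single Conv layer can be computed by hand. The sketch below assumes a bias-free convolution followed by BatchNorm (as in the Conv class of section 2.1), a 640x640 input, and 2 FLOPs per multiply-accumulate; the function names are made up for this example, and profiling tools may count slightly differently.

```python
def conv_bn_params(c_in, c_out, k):
    """Weights of a bias-free k x k convolution plus BatchNorm scale and shift."""
    return c_in * c_out * k * k + 2 * c_out


def conv_gflops(c_in, c_out, k, h_out, w_out):
    """Approximate GFLOPs of one convolution, counting 2 FLOPs per multiply-accumulate."""
    macs = c_in * c_out * k * k * h_out * w_out
    return 2 * macs / 1e9


# Layer 0: Conv [3, 16, 3, 2] on an assumed 640x640 input gives a 320x320 output.
print(conv_bn_params(3, 16, 3))     # 464
print(conv_bn_params(16, 32, 3))    # 4672
print(conv_bn_params(256, 128, 1))  # 33024
print(conv_gflops(3, 16, 3, 320, 320))
```

The three parameter counts match the params column for layers 0, 1, and 10 in the run output of section 2.5.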

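5. Going further

These changes can be combined with loss-function and attention-mechanism improvements.

6. Summary

ASF-YOLO is an improved model built on the YOLO (You Only Look Once) framework and designed for cell instance segmentation. It uses a CSPDarknet53 backbone for multi-scale feature extraction, then fuses multi-scale and detail features with the Scale Sequence Feature Fusion (SSFF) module and the Triple Feature Encoder (TFE) module. A Channel and Position Attention Mechanism (CPAM) adaptively re-weights relevant channels and spatial positions, which strengthens detection and segmentation of small objects. For detection and segmentation, ASF-YOLO optimizes bounding-box regression with the EIoU loss and generates segmentation masks in the head. In post-processing it applies Soft-NMS (soft non-maximum suppression) to densely overlapping boxes, improving detection accuracy and segmentation performance. Together these changes give ASF-YOLO strong results on medical image analysis and cell instance segmentation tasks.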