Author: Dora. Original source: https://tidb.net/blog/8ee8f295

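Background

- The cluster's TLS certificates were lost earlier because the TiUP directory was deleted, so TLS had to be enabled again.

- While rehearsing the TLS enablement steps in a test environment, the later scale-out of two PDs failed. The steps were: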

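- Scale in two PDs

- Enable TLS

- Scale out the original two PDs again; the PDs reported errors at startup

- Restart the cluster

After the cluster restart, all three PDs went down, and recovery required either pd-recover or destroying and rebuilding the cluster.

Note: enabling TLS requires that only one PD be kept; if the cluster has several PDs, scale in to one first.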

Troubleshooting

TiUP log inspection

- The TiUP log shows an error at the time TLS was enabled

2024-01-04T16:11:00.718+0800 DEBUG TaskFinish {"task": "Restart Cluster", "error": "failed to stop: failed to stop: xxxx node_exporter-9100.service, please check the instance's log() for more detail.: timed out waiting for port 9100 to be stopped after 2m0s", "errorVerbose": "timed out waiting for port 9100 to be stopped after 2m0s\ngithub.com/pingcap/tiup/pkg/cluster/module.(*WaitFor).Execute\n\tgithub.com/pingcap/tiup/pkg/cluster/module/wait_for.go:91\ngithub.com/pingcap/tiup/pkg/cluster/spec.PortStopped\n\tgithub.com/pingcap/tiup/pkg/cluster/spec/instance.go:130\ngithub.com/pingcap/tiup/pkg/cluster/operation.systemctlMonitor.func1\n\tgithub.com/pingcap/tiup/pkg/cluster/operation/action.go:338\ngolang.org/x/sync/errgroup.(*Group).Go.func1\n\tgolang.org/x/sync@v0.0.0-20220819030929-7fc1605a5dde/errgroup/errgroup.go:75\nruntime.goexit\n\truntime/asm_amd64.s:1594\nfailed to stop: 10.196.32.49 node_exporter-9100.service, please check the instance's log() for more detail.\nfailed to stop"}

2024-01-04T16:11:00.718+0800 INFO Execute command finished {"code": 1, "error": "failed to stop: failed to stop: xxxx node_exporter-9100.service, please check the instance's log() for more detail.: timed out waiting for port 9100 to be stopped after 2m0s", "errorVerbose": "timed out waiting for port 9100 to be stopped after 2m0s\ngithub.com/pingcap/tiup/pkg/cluster/module.(*WaitFor).Execute\n\tgithub.com/pingcap/tiup/pkg/cluster/module/wait_for.go:91\ngithub.com/pingcap/tiup/pkg/cluster/spec.PortStopped\n\tgithub.com/pingcap/tiup/pkg/cluster/spec/instance.go:130\ngithub.com/pingcap/tiup/pkg/cluster/operation.systemctlMonitor.func1\n\tgithub.com/pingcap/tiup/pkg/cluster/operation/action.go:338\ngolang.org/x/sync/errgroup.(*Group).Go.func1\n\tgolang.org/x/sync@v0.0.0-20220819030929-7fc1605a5dde/errgroup/errgroup.go:75\nruntime.goexit\n\truntime/asm_amd64.s:1594\nfailed to stop: 10.196.32.49 node_exporter-9100.service, please check the instance's log() for more detail.\nfailed to stop"}

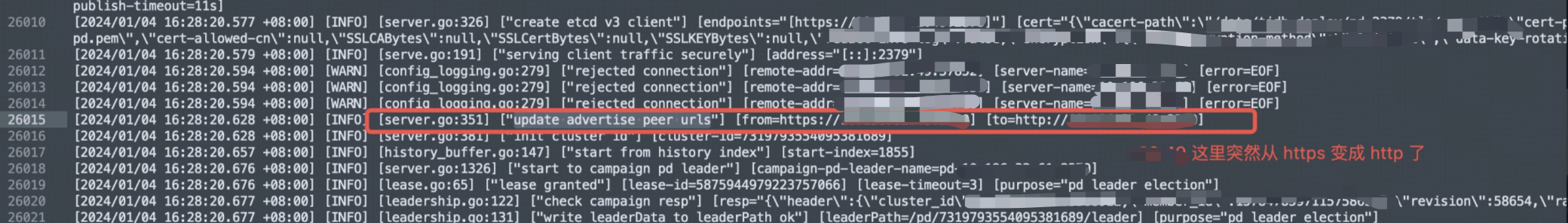
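A failed step like this is easy to miss in a long TiUP log. As a minimal sketch (not part of TiUP), the helper below scans log lines shaped like the two above and returns every entry whose JSON payload carries a non-empty `error` field. The name `find_failed_steps` and the assumed line layout `timestamp level message {json}` are illustrative; the real log file may separate the fields with tabs, which the whitespace split also tolerates.

```python
import json


def find_failed_steps(log_lines):
    """Return (timestamp, message, error) for each log line whose JSON payload has an error."""
    failures = []
    for line in log_lines:
        brace = line.find("{")
        if brace == -1:
            continue  # no JSON payload on this line
        header = line[:brace].split()
        if len(header) < 3:
            continue  # expected: timestamp, level, message words
        try:
            payload = json.loads(line[brace:])
        except json.JSONDecodeError:
            continue  # payload is not valid JSON, skip it
        if not isinstance(payload, dict):
            continue
        error = payload.get("error")
        if error:
            # header[0] is the timestamp, header[1] the level, the rest is the message
            failures.append((header[0], " ".join(header[2:]), error))
    return failures
```

Run over the lines of the TiUP log, this would surface both the `TaskFinish` entry for `Restart Cluster` and the `Execute command finished` entry with `"code": 1`, which is the signal that the TLS operation did not complete.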

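PD log inspection

One of the PDs started with http rather than https

The picture is now mostly clear: the error during TLS enablement left the PD configuration in a bad state

Reproduction

Scale in PD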

tiup cluster scale-in tidb-cc -N 172.16.201.159:52379

Enable TLS

While enabling TLS, the last step turns out to be an update of the PD member configuration

+ [ Serial ] - Reload PD Members Update pd-172.16.201.73-52379 peerURLs [ https://172.16.201.73:52380 ]

[tidb@vm172-16-201-73 /tidb-deploy/cc/pd-52379/scripts]$ tiup cluster tls tidb-cc enable

tiup is checking updates for component cluster ...

A new version of cluster is available:

The latest version: v1.14.1

Local installed version: v1.12.3

Update current component: tiup update cluster

Update all components: tiup update --all

Starting component `cluster`: /home/tidb/.tiup/components/cluster/v1.12.3/tiup-cluster tls tidb-cc enable

Enable/Disable TLS will stop and restart the cluster `tidb-cc`

Do you want to continue? [y/N]:(default=N) y

Generate certificate: /home/tidb/.tiup/storage/cluster/clusters/tidb-cc/tls

+ [ Serial ] - SSHKeySet: privateKey=/home/tidb/.tiup/storage/cluster/clusters/tidb-cc/ssh/id_rsa, publicKey=/home/tidb/.tiup/storage/cluster/clusters/tidb-cc/ssh/id_rsa.pub

+ [Parallel] - UserSSH: user=tidb, host=172.16.201.73

+ [Parallel] - UserSSH: user=tidb, host=172.16.201.159

+ [Parallel] - UserSSH: user=tidb, host=172.16.201.73

+ [Parallel] - UserSSH: user=tidb, host=172.16.201.73

+ [Parallel] - UserSSH: user=tidb, host=172.16.201.159

+ [Parallel] - UserSSH: user=tidb, host=172.16.201.99

+ [Parallel] - UserSSH: user=tidb, host=172.16.201.73

+ [Parallel] - UserSSH: user=tidb, host=172.16.201.73

+ [Parallel] - UserSSH: user=tidb, host=172.16.201.73

+ Copy certificate to remote host

- Generate certificate pd -> 172.16.201.73:52379 ... Done

- Generate certificate tikv -> 172.16.201.73:25160 ... Done

- Generate certificate tikv -> 172.16.201.159:25160 ... Done

- Generate certificate tikv -> 172.16.201.99:25160 ... Done

- Generate certificate tidb -> 172.16.201.73:54000 ... Done

- Generate certificate tidb -> 172.16.201.159:54000 ... Done

- Generate certificate prometheus -> 172.16.201.73:59090 ... Done

- Generate certificate grafana -> 172.16.201.73:43000 ... Done

- Generate certificate alertmanager -> 172.16.201.73:59093 ... Done

+ Copy monitor certificate to remote host

- Generate certificate node_exporter -> 172.16.201.159 ... Done

- Generate certificate node_exporter -> 172.16.201.99 ... Done

- Generate certificate node_exporter -> 172.16.201.73 ... Done

- Generate certificate blackbox_exporter -> 172.16.201.73 ... Done

- Generate certificate blackbox_exporter -> 172.16.201.159 ... Done

- Generate certificate blackbox_exporter -> 172.16.201.99 ... Done

+ Refresh instance configs

- Generate config pd -> 172.16.201.73:52379 ... Done

- Generate config tikv -> 172.16.201.73:25160 ... Done

- Generate config tikv -> 172.16.201.159:25160 ... Done

- Generate config tikv -> 172.16.201.99:25160 ... Done

- Generate config tidb -> 172.16.201.73:54000 ... Done

- Generate config tidb -> 172.16.201.159:54000 ... Done

- Generate config prometheus -> 172.16.201.73:59090 ... Done

- Generate config grafana -> 172.16.201.73:43000 ... Done

- Generate config alertmanager -> 172.16.201.73:59093 ... Done

+ Refresh monitor configs

- Generate config node_exporter -> 172.16.201.73 ... Done

- Generate config node_exporter -> 172.16.201.159 ... Done

- Generate config node_exporter -> 172.16.201.99 ... Done

- Generate config blackbox_exporter -> 172.16.201.73 ... Done

- Generate config blackbox_exporter -> 172.16.201.159 ... Done

- Generate config blackbox_exporter -> 172.16.201.99 ... Done

+ [ Serial ] - Save meta

+ [ Serial ] - Restart Cluster

Stopping component alertmanager

Stopping instance 172.16.201.73

Stop alertmanager 172.16.201.73:59093 success

Stopping component grafana

Stopping instance 172.16.201.73

Stop grafana 172.16.201.73:43000 success

Stopping component prometheus

Stopping instance 172.16.201.73

Stop prometheus 172.16.201.73:59090 success

Stopping component tidb

Stopping instance 172.16.201.159

Stopping instance 172.16.201.73

Stop tidb 172.16.201.159:54000 success

Stop tidb 172.16.201.73:54000 success

Stopping component tikv

Stopping instance 172.16.201.99

Stopping instance 172.16.201.73

Stopping instance 172.16.201.159

Stop tikv 172.16.201.73:25160 success

Stop tikv 172.16.201.99:25160 success

Stop tikv 172.16.201.159:25160 success

Stopping component pd

Stopping instance 172.16.201.73

Stop pd 172.16.201.73:52379 success

Stopping component node_exporter

Stopping instance 172.16.201.99

Stopping instance 172.16.201.73

Stopping instance 172.16.201.159

Stop 172.16.201.73 success

Stop 172.16.201.99 success

Stop 172.16.201.159 success

Stopping component blackbox_exporter

Stopping instance 172.16.201.99

Stopping instance 172.16.201.73

Stopping instance 172.16.201.159

Stop 172.16.201.73 success

Stop 172.16.201.99 success

Stop 172.16.201.159 success

Starting component pd

Starting instance 172.16.201.73:52379

Start instance 172.16.201.73:52379 success

Starting component tikv

Starting instance 172.16.201.99:25160

Starting instance 172.16.201.73:25160

Starting instance 172.16.201.159:25160

Start instance 172.16.201.99:25160 success

Start instance 172.16.201.73:25160 success

Start instance 172.16.201.159:25160 success

Starting component tidb

Starting instance 172.16.201.159:54000

Starting instance 172.16.201.73:54000

Start instance 172.16.201.159:54000 success

Start instance 172.16.201.73:54000 success

Starting component prometheus

Starting instance 172.16.201.73:59090

Start instance 172.16.201.73:59090 success

Starting component grafana

Starting instance 172.16.201.73:43000

Start instance 172.16.201.73:43000 success

Starting component alertmanager

Starting instance 172.16.201.73:59093

Start instance 172.16.201.73:59093 success

Starting component node_exporter

Starting instance 172.16.201.159

Starting instance 172.16.201.99

Starting instance 172.16.201.73

Start 172.16.201.73 success

Start 172.16.201.99 success

Start 172.16.201.159 success

Starting component blackbox_exporter

Starting instance 172.16.201.159

Starting instance 172.16.201.99

Starting instance 172.16.201.73

Start 172.16.201.73 success

Start 172.16.201.99 success

Start 172.16.201.159 success

+ [ Serial ] - Reload PD Members

Update pd-172.16.201.73-52379 peerURLs: [https://172.16.201.73:52380]

Enabled TLS between TiDB components for cluster `tidb-cc` successfully
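The last lines of this transcript are what a complete run looks like: the `Reload PD Members` step rewrites the peer URL to https, and only then is the success message printed. A small hedged check, assuming the wording printed by tiup-cluster v1.12.3 above (other versions may phrase it differently), can confirm that a captured transcript reached both points. `tls_enable_finished` is a hypothetical helper name, not a TiUP feature.

```python
def tls_enable_finished(transcript):
    """Return True only if the transcript shows the PD member update and the success line."""
    lines = [line.strip() for line in transcript.splitlines()]
    # The serial step that rewrites PD member URLs must have started.
    reload_step = any("Reload PD Members" in line for line in lines)
    # At least one member must have been updated to an https peer URL.
    https_peer = any(
        line.startswith("Update ") and "peerURLs" in line and "https://" in line
        for line in lines
    )
    # The closing success message must be present.
    success = any(
        line.startswith("Enabled TLS between TiDB components")
        and line.endswith("successfully")
        for line in lines
    )
    return reload_step and https_peer and success
```

A transcript that stops during `Restart Cluster`, as in the failing run, fails all three checks, so the function returns `False`.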

Simulate a failure to stop node_exporter while TLS is being enabled

Check the member information

[tidb@vm172-16-201-73 ~]$ tiup ctl:v7.1.1 pd -u https://172.16.201.73:52379 --cacert=/home/tidb/.tiup/storage/cluster/clusters/tidb-cc/tls/ca.crt --key=/home/tidb/.tiup/storage/cluster/clusters/tidb-cc/tls/client.pem --cert=/home/tidb/.tiup/storage/cluster/clusters/tidb-cc/tls/client.crt member

{

"header": {

"cluster_id": 7299695983639846267

},

"members": [

{

"name": "pd-172.16.201.73-52379",

"member_id": 781118713925753452,

"peer_urls": [

"http://172.16.201.73:52380"

],

"client_urls": [

"https://172.16.201.73:52379"

],

The peer_urls entry under members is indeed http rather than https, so the problem is reproduced.
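The same inspection can be automated. The sketch below assumes the complete JSON printed by the `member` command has been captured as a string, and lists every `peer_urls` or `client_urls` entry whose scheme is not https. The function name `non_tls_urls` is hypothetical, and the `SAMPLE` string is a trimmed, closed-off copy of the truncated output above, kept only for illustration.

```python
import json
from urllib.parse import urlsplit


def non_tls_urls(member_json):
    """Return (member name, field, url) for every member URL that is not https."""
    doc = json.loads(member_json)
    offenders = []
    for member in doc.get("members", []):
        for field in ("peer_urls", "client_urls"):
            for url in member.get(field, []):
                if urlsplit(url).scheme != "https":
                    offenders.append((member.get("name"), field, url))
    return offenders


# Trimmed sample built from the values shown above (not the full pd-ctl output).
SAMPLE = """
{
  "header": {"cluster_id": 7299695983639846267},
  "members": [
    {
      "name": "pd-172.16.201.73-52379",
      "member_id": 781118713925753452,
      "peer_urls": ["http://172.16.201.73:52380"],
      "client_urls": ["https://172.16.201.73:52379"]
    }
  ]
}
"""

if __name__ == "__main__":
    for name, field, url in non_tls_urls(SAMPLE):
        print(f"{name}: {field} still uses {url}")
```

An empty result is the condition to wait for before scaling PD out again; any entry returned means the member configuration was not switched to TLS.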

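Summary

- Enabling TLS failed because stopping node_exporter failed. The failure was judged unimportant, so the remaining steps were carried out anyway

- The last step of enabling TLS updates the PD member configuration; because stopping node_exporter failed, that step never ran properly

- From now on, confirm that TLS was enabled successfully before moving to the next step; pd-ctl can also be used to check the member status

- If this situation occurs again, disable TLS first and then enable it again. If stopping node_exporter still takes too long during enablement, add the --wait-timeout parameter when running tiup and manually kill the node_exporter process on the machine so that TLS enablement can proceed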
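The recovery path in the last point can be sketched as a short script. It is an illustration under assumptions, not a tested procedure: the 600-second value for `--wait-timeout` is arbitrary, the helper names are hypothetical, killing a stuck node_exporter process is left as a manual step, and tiup will still ask for confirmation as in the transcript earlier. The point it encodes is the lesson of this incident: stop at the first command that exits non-zero instead of continuing.

```python
import shlex
import subprocess


def tls_reenable_commands(cluster, wait_timeout=600):
    """Build the disable-then-enable command pair, each with --wait-timeout."""
    common = ["tiup", "cluster", "tls", cluster]
    timeout = ["--wait-timeout", str(wait_timeout)]
    return [common + ["disable"] + timeout, common + ["enable"] + timeout]


def run_until_failure(commands, run=subprocess.run):
    """Run commands in order and stop at the first non-zero exit code.

    Returns a list of (command string, return code) for the commands that ran.
    """
    results = []
    for argv in commands:
        code = run(argv).returncode
        results.append((shlex.join(argv), code))
        if code != 0:
            break  # do not continue past a failed step
    return results


if __name__ == "__main__":
    # Print the commands only; pass them to run_until_failure to execute them.
    for argv in tls_reenable_commands("tidb-cc"):
        print(shlex.join(argv))
```

The `run` parameter exists so the sequencing can be exercised with a stand-in before it touches a real cluster.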