索引示例二:创建本地索引

-

需求

在程序中,我们可能会根据订单ID、订单状态、支付金额、支付方式、用户ID来查询订单。所以,我们需要在这些列上来查询订单。

针对这种场景,我们可以使用本地索引来提高查询效率。 -

创建本地索引

create local index LOCAL_IDX_ORDER_DTL on ORDER_DTL("id", "status", "money", "pay_way", "user_id") ;

通过查看WebUI,我们并没有发现创建名为:LOCAL_IDX_ORDER_DTL 的表。那索引数据是存储在哪儿呢?我们可以通过HBase shellhbase(main):031:0> scan "ORDER_DTL", {LIMIT => 1 ROW COLUMN+CELL \x00\x00\x0402602f66-adc7-40d4-8485-76 column=L#0:\x00\x00\x00\x00, timestamp=1589350314539, value=\x00\x00\x00\x00 b5632b5b53\x00\xE5\xB7\xB2\xE6\x8F\x90 \xE4\xBA\xA4\x00\xC2)G\x00\xC1\x02\x00 4944191 1 row(s) Took 0.0155 seconds可以看到Phoenix对数据进行处理,原有的数据发生了变化。建立了本地二级索引表,不能再使用Hbase的Java API查询,只能通过JDBC来查询。

-

查看数据

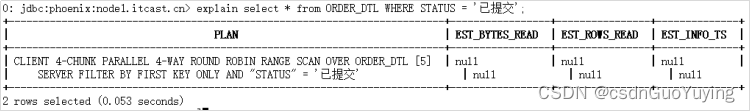

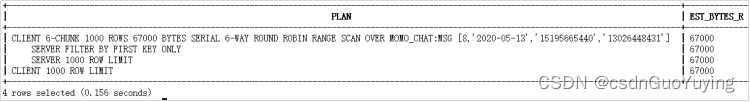

explain select * from ORDER_DTL WHERE "status" = '已提交';

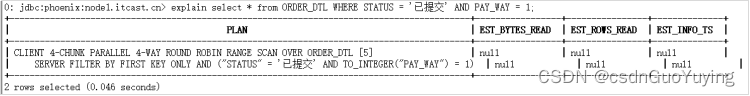

explain select * from ORDER_DTL WHERE "status" = '已提交' AND "pay_way" = 1;

通过观察上面的两个执行计划发现,两个查询都是通过RANGE SCAN来实现的。说明本地索引生效。 -

删除本地索引

drop index LOCAL_IDX_ORDER_DTL on ORDER_DTL;

重新执行一次扫描,你会发现数据变魔术般的恢复出来了。hbase(main):007:0> scan "ORDER_DTL", {LIMIT => 1} ROW COLUMN+CELL \x000f46d542-34cb-4ef4-b7fe-6dcfa5f14751 column=C1:\x00\x00\x00\x00, timestamp=1599542260011, value=x \x000f46d542-34cb-4ef4-b7fe-6dcfa5f14751 column=C1:\x80\x0B, timestamp=1599542260011, value=\xE5\xB7\xB2\xE4\xBB\x98\xE6\xAC\xBE \x000f46d542-34cb-4ef4-b7fe-6dcfa5f14751 column=C1:\x80\x0C, timestamp=1599542260011, value=\xC6\x12\x90\x01 \x000f46d542-34cb-4ef4-b7fe-6dcfa5f14751 column=C1:\x80\x0D, timestamp=1599542260011, value=\x80\x00\x00\x01 \x000f46d542-34cb-4ef4-b7fe-6dcfa5f14751 column=C1:\x80\x0E, timestamp=1599542260011, value=2993700 \x000f46d542-34cb-4ef4-b7fe-6dcfa5f14751 column=C1:\x80\x0F, timestamp=1599542260011, value=2020-04-25 12:09:46 \x000f46d542-34cb-4ef4-b7fe-6dcfa5f14751 column=C1:\x80\x10, timestamp=1599542260011, value=\xE7\xBB\xB4\xE4\xBF\xAE;\xE6\x89\x8B\xE6\x9C\xBA; 1 row(s) Took 0.0266 seconds

使用Phoenix建立二级索引高效查询

- 创建本地函数索引

CREATE LOCAL INDEX LOCAL_IDX_MOMO_MSG ON MOMO_CHAT.MSG(substr("msg_time", 0, 10), "sender_account", "receiver_account"); - 执行数据查询

SELECT * FROM "MOMO_CHAT"."MSG" T WHERE substr("msg_time", 0, 10) = '2020-08-29' AND T."sender_account" = '13504113666' AND T."receiver_account" = '18182767005' LIMIT 100;

可以看到,查询速度非常快,0.1秒就查询出来了数据。

8. 常见问题

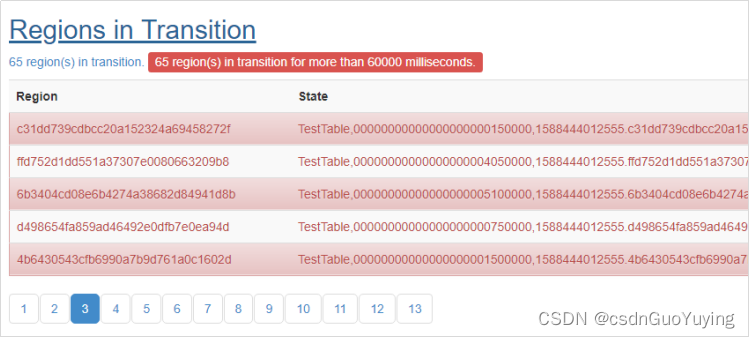

Regions In Transition

错误信息如下:

2020-05-09 12:14:22,760 WARN [RS_OPEN_REGION-regionserver/node1:16020-2] handler.AssignRegionHandler: Failed to open region TestTable,00000000000000000006900000,1588444012555.8a72d1ccdadd3b14284a24ec01918023., will report to master

java.io.IOException: Missing table descriptor for TestTable,00000000000000000006900000,1588444012555.8a72d1ccdadd3b14284a24ec01918023.

at org.apache.hadoop.hbase.regionserver.handler.AssignRegionHandler.process(AssignRegionHandler.java:129)

at org.apache.hadoop.hbase.executor.EventHandler.run(EventHandler.java:104)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

- 问题解析

在执行Region Split时,因为系统中断或者HDFS中的Region文件已经被删除。

Region的状态由master跟踪,包括以下状态:

| State | Description |

|---|---|

| Offline | Region is offline |

| Pending Open | A request to open the region was sent to the server |

| Opening | The server has started opening the region |

| Open | The region is open and is fully operational |

| Pending Close | A request to close the region has been sent to the server |

| Closing | The server has started closing the region |

| Closed | The region is closed |

| Splitting | The server started splitting the region |

| Split | The region has been split by the server |

Region在这些状态之间的迁移(transition)可以由master引发,也可以由region server引发。

- 解决方案

1.使用 hbase hbck 找到哪些Region出现Error

2.使用以下命令将失效的Region删除

deleteall "hbase:meta","TestTable,00000000000000000005850000,1588444012555.89e1c07384a56c77761e490ae3f34a8d."

3.重启hbase即可

Phoenix: Table is read only

Error: ERROR 505 (42000): Table is read only. (state=42000,code=505)

org.apache.phoenix.schema.ReadOnlyTableException: ERROR 505 (42000): Table is read only.

at org.apache.phoenix.query.ConnectionQueryServicesImpl.ensureTableCreated(ConnectionQueryServicesImpl.java:1126)

at org.apache.phoenix.query.ConnectionQueryServicesImpl.createTable(ConnectionQueryServicesImpl.java:1501)

at org.apache.phoenix.schema.MetaDataClient.createTableInternal(MetaDataClient.java:2721)

at org.apache.phoenix.schema.MetaDataClient.createTable(MetaDataClient.java:1114)

at org.apache.phoenix.compile.CreateTableCompiler$1.execute(CreateTableCompiler.java:192)

at org.apache.phoenix.jdbc.PhoenixStatement$2.call(PhoenixStatement.java:408)

at org.apache.phoenix.jdbc.PhoenixStatement$2.call(PhoenixStatement.java:391)

at org.apache.phoenix.call.CallRunner.run(CallRunner.java:53)

at org.apache.phoenix.jdbc.PhoenixStatement.executeMutation(PhoenixStatement.java:390)

at org.apache.phoenix.jdbc.PhoenixStatement.executeMutation(PhoenixStatement.java:378)

at org.apache.phoenix.jdbc.PhoenixStatement.execute(PhoenixStatement.java:1825)

at sqlline.Commands.execute(Commands.java:822)

at sqlline.Commands.sql(Commands.java:732)

at sqlline.SqlLine.dispatch(SqlLine.java:813)

at sqlline.SqlLine.begin(SqlLine.java:686)

at sqlline.SqlLine.start(SqlLine.java:398)

at sqlline.SqlLine.main(SqlLine.java:291)

phoenix连接hbase数据库,创建二级索引报错:Error: org.apache.phoenix.exception.PhoenixIOException: Failed after attempts=36, exceptions: Tue Mar 06 10:32:02 CST 2018, null, java.net.SocketTimeoutException: callTimeout

修改phoenix的hbase-site.xml配置文件为

<property>

<name>phoenix.query.timeoutMs</name>

<value>1800000</value>

</property>

<property>

<name>hbase.regionserver.lease.period</name>

<value>1200000</value>

</property>

<property>

<name>hbase.rpc.timeout</name>

<value>1200000</value>

</property>

<property>

<name>hbase.client.scanner.caching</name>

<value>1000</value>

</property>

<property>

<name>hbase.client.scanner.timeout.period</name>

<value>1200000</value>

</property>

设置完以上内容后,重新通过sqlline.py连接hbase