1. SSRF vulnerability audit points

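Server-Side Request Forgery (SSRF) is a vulnerability in which an attacker crafts a payload and passes it to the server, and the server uses it to issue a request without validating it. It is typically used to probe or attack services on the internal network.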

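Typical uses: scanning the internal network, sending crafted attack payloads to any port on any internal host, attacking internal web applications, reading files, and denial-of-service attacks.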

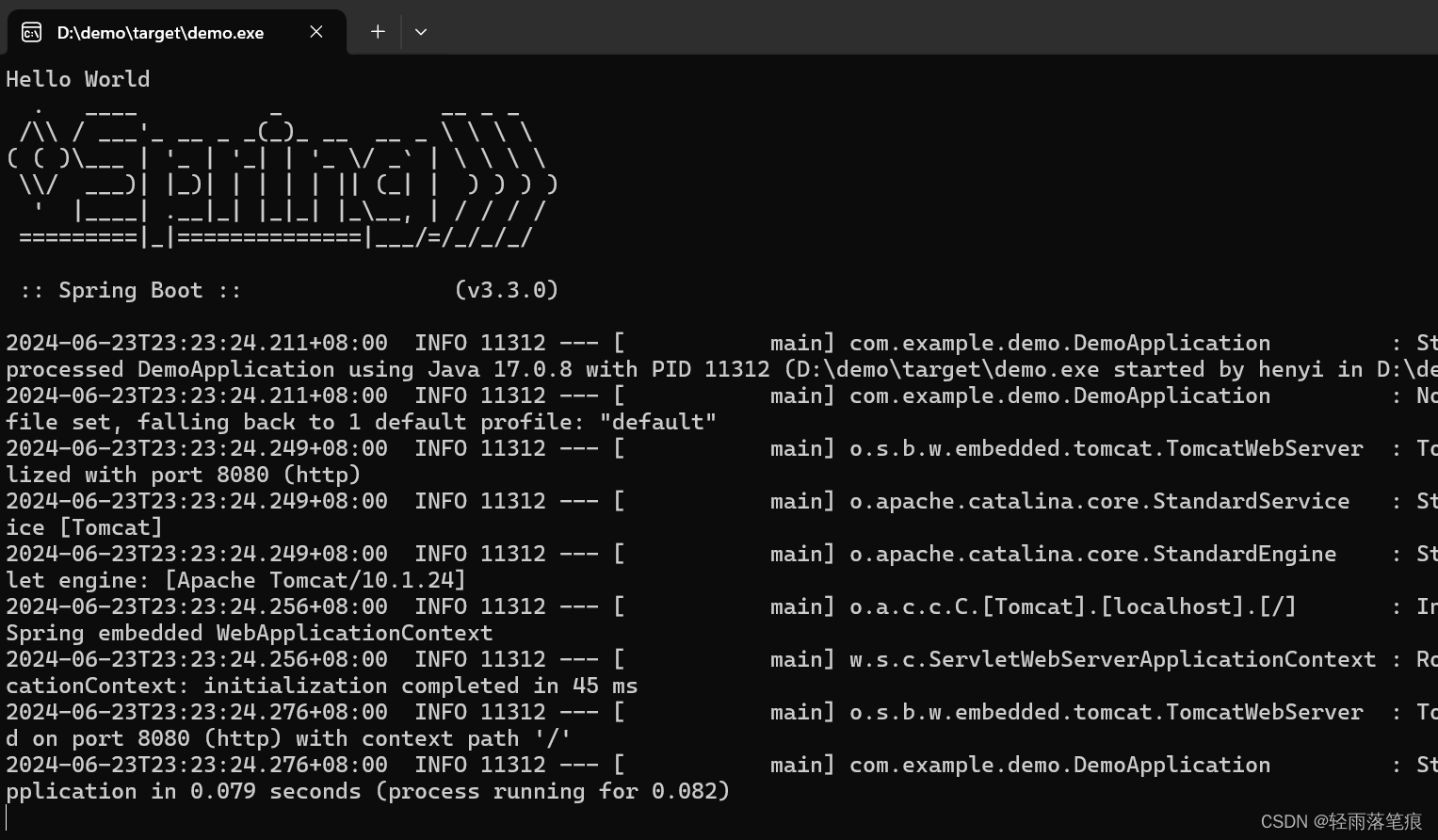

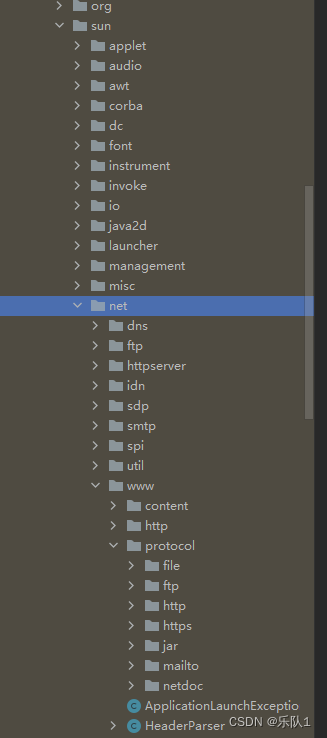

Java's network request classes support many protocols, including http, https, file, ftp, mailto, jar, and netdoc. The supported protocols can be seen under the sun.net.www.protocol package in rt.jar (JDK 1.7).

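Gopher is a well-known Internet information retrieval system that organizes files on the Internet into an index, making it easy to take users from one place on the Internet to another. The gopher protocol can carry GET and POST requests: capture the GET or POST request packet first, then convert it into a request that conforms to the gopher protocol. In later JDK 7 releases, although a gopher package still exists under sun.net.www.protocol, it can no longer actually be used and throws java.net.MalformedURLException: unknown protocol: gopher, so it is only usable on JDK versions below 7.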

In JDK 1.8, gopher has been removed from the package entirely.

Compared with PHP, Java supports fewer protocols for SSRF, and some of them, such as gopher, are restricted, so overall SSRF in Java is generally less damaging than in PHP.

Where the vulnerability tends to appear:

Essentially anywhere the server issues a request to a URL:

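- Sharing: sharing web page content via a URL
- Transcoding services
- Online translation
- Image loading and downloading: loading or downloading an image from a URL
- Image and article bookmarking features
- Unpublished API implementations and other features that call a URL
- Searching for URL keywords: share, wap, url, link, src, source, target, u, 3g, display, sourceURI, imageURL, domain
- Cloud service providers (various website database operations)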

Common URL parameter keywords: share, wap, url, link, src, source, target, u, display, sourceURI, imageURL, domain...
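When reviewing request handlers, the keyword list above can be turned into a quick triage filter for parameter names. The following is a minimal sketch; the class name `ParamKeywordCheck`, the case-insensitive matching, and the rule that short keywords (`u`, `3g`) must match exactly are all assumptions made for illustration. A hit only marks a parameter for manual review, not a confirmed SSRF.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Locale;

public class ParamKeywordCheck {
    // Keyword list taken from the text above, lower-cased for case-insensitive matching
    private static final List<String> KEYWORDS = Arrays.asList(
            "share", "wap", "url", "link", "src", "source", "target", "u",
            "3g", "display", "sourceuri", "imageurl", "domain");

    // Hypothetical rule: a parameter is suspicious if its name equals a keyword,
    // or contains a keyword longer than two characters (e.g. "callbackUrl").
    // Short keywords such as "u" must match exactly to avoid flagging "user".
    public static boolean isSuspicious(String paramName) {
        String name = paramName.toLowerCase(Locale.ROOT);
        for (String k : KEYWORDS) {
            if (name.equals(k) || (k.length() > 2 && name.contains(k))) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        for (String p : new String[] {"callbackUrl", "imageURL", "u", "user", "id"}) {
            System.out.println(p + " -> " + isSuspicious(p));
        }
    }
}
```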

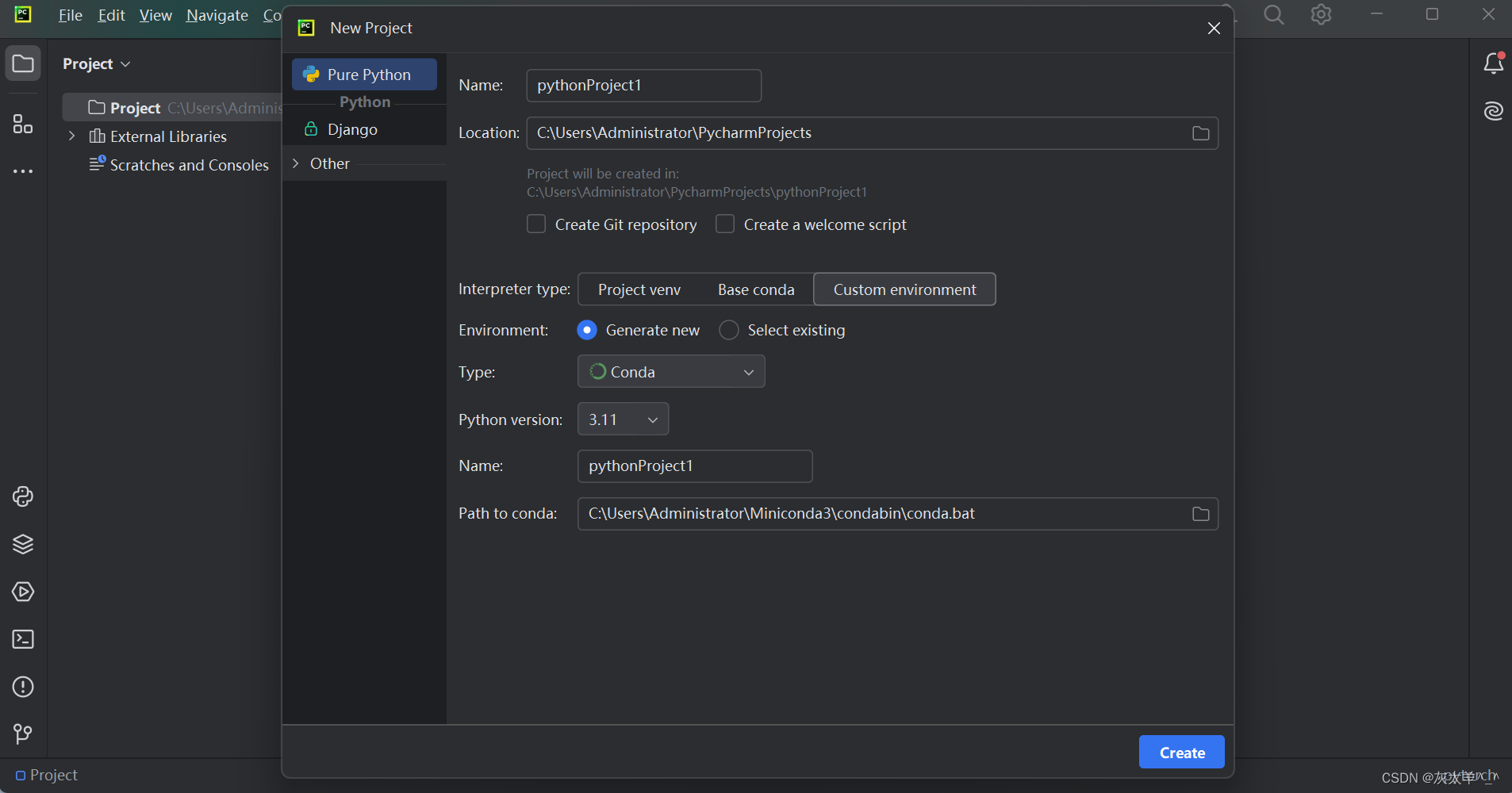

When auditing code, focus on the classes and methods that issue HTTP requests. Some of them are:

- HttpURLConnection.getInputStream
- URLConnection.getInputStream
- Request.Get.execute
- Request.Post.execute
- URL.openStream
- ImageIO.read
- OkHttpClient.newCall.execute
- HttpClients.execute
- HttpClient.execute
- BasicHttpEntityEnclosingRequest()
- DefaultBHttpClientConnection()
- BasicHttpRequest()
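A first pass over a codebase can simply search for these call sites line by line. The sketch below is a deliberately rough, regex-based grep; the class name `SinkGrep` and the exact patterns are assumptions, it covers only part of the list above, and the `.execute(` pattern in particular will also match unrelated code (e.g. executors), so every hit still needs manual review of where the URL comes from.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

public class SinkGrep {
    // Hypothetical, intentionally loose patterns for some of the request sinks listed above
    private static final Pattern[] SINKS = {
        Pattern.compile("\\.openConnection\\s*\\("),
        Pattern.compile("\\.openStream\\s*\\("),
        Pattern.compile("ImageIO\\.read\\s*\\("),
        Pattern.compile("Request\\.(Get|Post)\\s*\\("),
        Pattern.compile("\\.newCall\\s*\\("),
        Pattern.compile("\\.execute\\s*\\(") // noisy: also matches non-HTTP execute() calls
    };

    // Returns the 1-based numbers of lines containing at least one sink pattern
    public static List<Integer> findSinks(String source) {
        List<Integer> hits = new ArrayList<>();
        String[] lines = source.split("\n", -1);
        for (int i = 0; i < lines.length; i++) {
            for (Pattern p : SINKS) {
                if (p.matcher(lines[i]).find()) {
                    hits.add(i + 1);
                    break; // one hit per line is enough
                }
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        String code = "URL u = new URL(url);\nInputStream in = u.openStream();\nint x = 1;";
        System.out.println(findSinks(code));
    }
}
```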

1.1 URLConnection.getInputStream

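URLConnection is an abstract class representing a communication link to the resource specified by a URL; it relies on the Socket class for the underlying network connection. Supported protocols: file, ftp, mailto, http, https, jar, netdoc, and gopher (older JDKs only).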

Reading file contents via a URL:

For example:

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.URL;
import java.net.URLConnection;

/**
 * Read a local file into the program via URLConnection
 */
public class Demo01 {
    public static void main(String[] args) throws IOException {
        String url = "file:///1.txt";
        // Construct a URL object; the protocol ("file") selects the Handler
        URL u = new URL(url);
        // URL.openConnection() returns a URLConnection instance (no I/O yet)
        URLConnection urlConnection = u.openConnection();
        // getInputStream() connects implicitly and returns the response stream
        BufferedReader in = new BufferedReader(new InputStreamReader(urlConnection.getInputStream())); // send the request
        String inputLine;
        StringBuffer html = new StringBuffer();
        while ((inputLine = in.readLine()) != null) {
            html.append(inputLine);
        }
        System.out.println("html:" + html.toString());
        in.close();
    }
}

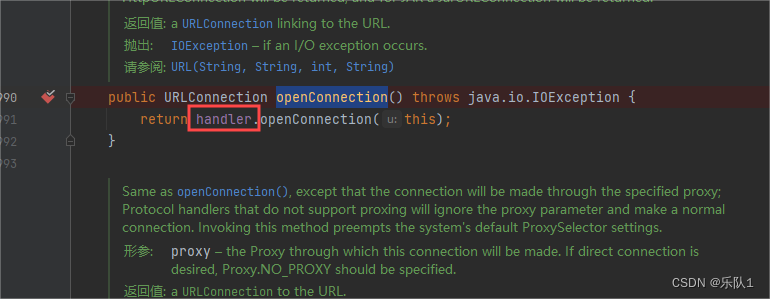

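URLStreamHandler is an abstract class; each protocol has a subclass of it named Handler (for example sun.net.www.protocol.http.Handler). The Handler defines how to open a connection, i.e. openConnection(). When a URL string is passed in, the Handler matching its protocol is created automatically while the URL object is being constructed.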

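Calling URL.openConnection() to obtain a URLConnection instance does not yet establish a real network connection; the connection is established only when URLConnection.connect() is called, or implicitly by methods such as getInputStream().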
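Both behaviors can be observed without sending any traffic. In this sketch (the class and method names are hypothetical), an unsupported protocol is rejected with MalformedURLException while the URL object is being constructed, because no Handler exists for it, and openConnection() succeeds against a port where nothing is listening, because it only builds the connection object. The gopher result is expected to be `false` on JDK 8 and later, consistent with the text above.

```java
import java.io.IOException;
import java.net.MalformedURLException;
import java.net.URL;
import java.net.URLConnection;

public class HandlerDemo {
    // new URL(...) looks up a URLStreamHandler for the protocol at construction
    // time; an unknown protocol fails here with MalformedURLException.
    public static boolean isProtocolSupported(String protocol) {
        try {
            new URL(protocol + "://example.invalid/");
            return true;
        } catch (MalformedURLException e) {
            return false; // e.g. "unknown protocol: gopher"
        }
    }

    // openConnection() only builds the URLConnection object; no socket is opened,
    // so this succeeds even if nothing is listening at the target.
    public static boolean openConnectionIsLazy(String url) {
        try {
            URLConnection c = new URL(url).openConnection();
            return c != null;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        for (String p : new String[] {"http", "https", "ftp", "file", "gopher"}) {
            System.out.println(p + " supported: " + isProtocolSupported(p));
        }
        System.out.println("openConnection lazy: " + openConnectionIsLazy("http://127.0.0.1:1/"));
    }
}
```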

Printing the response to the console:

For example:

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.URL;
import java.net.URLConnection;

/**
 * Request a network resource over http(s) via URLConnection
 */
public class Demo02 {
    public static void main(String[] args) throws IOException {
        String htmlContent;
        // String url = "http://www.baidu.com";
        String url = "https://www.baidu.com";
        // String url = "file:///etc/passwd";
        URL u = new URL(url);
        URLConnection urlConnection = u.openConnection(); // create the URLConnection; not connected yet
        // getInputStream() establishes the connection and sends the request
        BufferedReader base = new BufferedReader(new InputStreamReader(urlConnection.getInputStream(), "UTF-8"));
        StringBuffer html = new StringBuffer();
        while ((htmlContent = base.readLine()) != null) {
            System.out.println(htmlContent);
            html.append(htmlContent); // append htmlContent to html
        }
        base.close();
        // System.out.println("Probing: " + url);
        System.out.println("----------Response------------");
    }
}

File read:

http://127.0.0.1:8080/ssrf/urlConnection/vuln?url=file:///1.txt

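File download:

In SSRF, file download differs from file read in the response header: the attachment disposition tells the browser to save the response as a file.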

response.setHeader("content-disposition", "attachment;fileName=" + filename);

https://developer.mozilla.org/zh-CN/docs/Web/HTTP/Headers/Content-Disposition

For example:

@RequestMapping(value = "/urlConnection/download", method = {RequestMethod.POST, RequestMethod.GET})
public void downloadServlet(HttpServletRequest request, HttpServletResponse response) throws ServletException, IOException {
    String filename = "1.txt";
    String url = request.getParameter("url"); // attacker-controlled, not validated
    // attachment: the browser saves the response as a file instead of displaying it
    response.setHeader("content-disposition", "attachment;fileName=" + filename);
    int len;
    OutputStream outputStream = response.getOutputStream();
    URL file = new URL(url);
    byte[] bytes = new byte[1024];
    try (InputStream inputStream = file.openStream()) { // the request is sent here
        while ((len = inputStream.read(bytes)) > 0) {
            outputStream.write(bytes, 0, len);
        }
    }
}

/**
 * Download the url file.
 * http://localhost:8080/ssrf/openStream?url=file:///etc/passwd
 * <p>
 * new URL(String url).openConnection()
 * new URL(String url).openStream()
 * new URL(String url).getContent()
 */
@GetMapping("/openStream")
public void openStream(@RequestParam String url, HttpServletResponse response) throws IOException {
    InputStream inputStream = null;
    OutputStream outputStream = null;
    try {
        String downLoadImgFileName = WebUtils.getNameWithoutExtension(url) + "." + WebUtils.getFileExtension(url);
        // download
        response.setHeader("content-disposition", "attachment;fileName=" + downLoadImgFileName);
        URL u = new URL(url);
        int length;
        byte[] bytes = new byte[1024];
        inputStream = u.openStream(); // send request
        outputStream = response.getOutputStream();
        while ((length = inputStream.read(bytes)) > 0) {
            outputStream.write(bytes, 0, length);
        }
    } catch (Exception e) {
        logger.error(e.toString());
    } finally {
        if (inputStream != null) {
            inputStream.close();
        }
        if (outputStream != null) {
            outputStream.close();
        }
    }
}

http://127.0.0.1:8080/ssrf/openStream?url=file:///1.txt

1.2 HttpURLConnection.getInputStream

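HttpURLConnection is a subclass of URLConnection that implements request and response handling for HTTP URLs. Each HttpURLConnection instance is used to make a single request, and it supports methods such as GET, POST, PUT, and DELETE.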

For example:

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.HttpURLConnection;
import java.net.URL;
import java.net.URLConnection;

/**
 * Request a network resource over http(s) via HttpURLConnection
 */
public class Demo03 {
    public static void main(String[] args) throws IOException {
        String htmlContent;
        // String url = "http://www.baidu.com";
        String url = "https://www.baidu.com";
        URL u = new URL(url);
        URLConnection urlConnection = u.openConnection();
        // The cast succeeds only for http/https URLs
        HttpURLConnection httpUrl = (HttpURLConnection) urlConnection;
        BufferedReader base = new BufferedReader(new InputStreamReader(httpUrl.getInputStream(), "UTF-8"));
        StringBuffer html = new StringBuffer();
        while ((htmlContent = base.readLine()) != null) {
            html.append(htmlContent);
        }
        base.close();
        System.out.println("Probing: " + url);
        System.out.println("----------Response------------");
        System.out.println(html);
    }
}

HttpURLConnection does not support the file protocol. For example, reading file:///etc/passwd this way fails with a ClassCastException, because a FileURLConnection cannot be cast to HttpURLConnection.
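The cast failure happens before any I/O, so it can be checked up front with `instanceof`. A minimal sketch with a hypothetical helper `opensAsHttp`: HttpsURLConnection extends HttpURLConnection, so https URLs pass as well. Note that this only narrows the sink to http/https; it does nothing to stop requests to internal hosts such as 127.0.0.1.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.URL;
import java.net.URLConnection;

public class HttpOnlyCast {
    // Hypothetical helper: true only when the protocol's Handler produced an
    // HttpURLConnection (http or https). openConnection() performs no I/O here.
    public static boolean opensAsHttp(String url) {
        try {
            URLConnection c = new URL(url).openConnection();
            return c instanceof HttpURLConnection;
        } catch (IOException e) {
            return false; // includes MalformedURLException for unknown protocols
        }
    }

    public static void main(String[] args) throws IOException {
        URLConnection c = new URL("file:///etc/passwd").openConnection(); // file is not opened yet
        try {
            HttpURLConnection http = (HttpURLConnection) c;
            System.out.println("cast succeeded: " + http);
        } catch (ClassCastException e) {
            System.out.println("ClassCastException: " + e.getMessage());
        }
    }
}
```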

1.3 Request.Get/Post.execute

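The Request class (org.apache.http.client.fluent.Request from the fluent-hc module) wraps HttpClient in a fluent API, similar to Python's requests library. For example: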

Request.Get(url).execute().returnContent().toString();

Add the dependency:

<dependency>
    <groupId>org.apache.httpcomponents</groupId>
    <artifactId>fluent-hc</artifactId>
    <version>4.5.13</version>
</dependency>

Requesting Baidu:

import java.io.IOException;
import org.apache.http.client.fluent.Request;

/**
 * Request a network resource via Request.Get/Post.execute
 */
public class Demo04 {
    public static void main(String[] args) throws IOException {
        // String html = Request.Get("http://www.baidu.com").execute().returnContent().toString();
        String html = Request.Get("https://www.baidu.com/").execute().returnContent().toString();
        System.out.println(html);
    }
}

1.4 URL.openStream

String url = request.getParameter("url");

URL u = new URL(url);

InputStream inputStream = u.openStream();

For example:

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.URL;

/**
 * URL.openStream
 */
public class Demo05 {
    public static void main(String[] args) throws IOException {
        String htmlContent;
        // String url = "http://www.baidu.com";
        String url = "https://www.baidu.com";
        // String url = "file:///etc/passwd";
        URL u = new URL(url);
        System.out.println("Probing: " + url);
        System.out.println("----------Response------------");
        BufferedReader base = new BufferedReader(new InputStreamReader(u.openStream(), "UTF-8")); // fetch the resource at the URL
        StringBuffer html = new StringBuffer();
        while ((htmlContent = base.readLine()) != null) {
            html.append(htmlContent); // append htmlContent to html
        }
        base.close();
        System.out.println(html);
    }
}
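URL.openStream() is shorthand for openConnection().getInputStream(), so it inherits the file protocol along with everything else. The sketch below reads a temporary local file through a file: URL to show that no HTTP is involved at all; the class and method names are hypothetical, and the temp file stands in for something like /etc/passwd.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.net.URL;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

public class OpenStreamFileDemo {
    // Reads every byte behind a URL, whatever its protocol
    public static String readAll(String url) throws IOException {
        try (InputStream in = new URL(url).openStream();
             ByteArrayOutputStream out = new ByteArrayOutputStream()) {
            byte[] buf = new byte[1024];
            int len;
            while ((len = in.read(buf)) != -1) {
                out.write(buf, 0, len);
            }
            return new String(out.toByteArray(), StandardCharsets.UTF_8);
        }
    }

    public static void main(String[] args) throws IOException {
        Path tmp = Files.createTempFile("ssrf-demo", ".txt");
        Files.write(tmp, "secret".getBytes(StandardCharsets.UTF_8));
        String url = tmp.toUri().toString(); // a file:/// URL pointing at the temp file
        System.out.println(url + " -> " + readAll(url));
        Files.delete(tmp);
    }
}
```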

1.5 HttpClients.execute

String url = request.getParameter("url");

CloseableHttpClient client = HttpClients.createDefault();

HttpGet httpGet = new HttpGet(url);

HttpResponse httpResponse = client.execute(httpGet); // send the request

For example:

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import org.apache.http.HttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;

/**
 * HttpGet
 */
public class Demo06 {
    public static void main(String[] args) throws IOException {
        String htmlContent;
        // String url = "http://www.baidu.com";
        String url = "https://www.baidu.com";
        CloseableHttpClient client = HttpClients.createDefault();
        HttpGet httpGet = new HttpGet(url);
        System.out.println("Probing: " + url);
        System.out.println("----------Response------------");
        HttpResponse httpResponse = client.execute(httpGet); // send the request
        BufferedReader base = new BufferedReader(new InputStreamReader(
                httpResponse.getEntity().getContent()));
        StringBuffer html = new StringBuffer();
        while ((htmlContent = base.readLine()) != null) {
            html.append(htmlContent); // append htmlContent to html
        }
        System.out.println(html);
        base.close();
        client.close();
    }
}

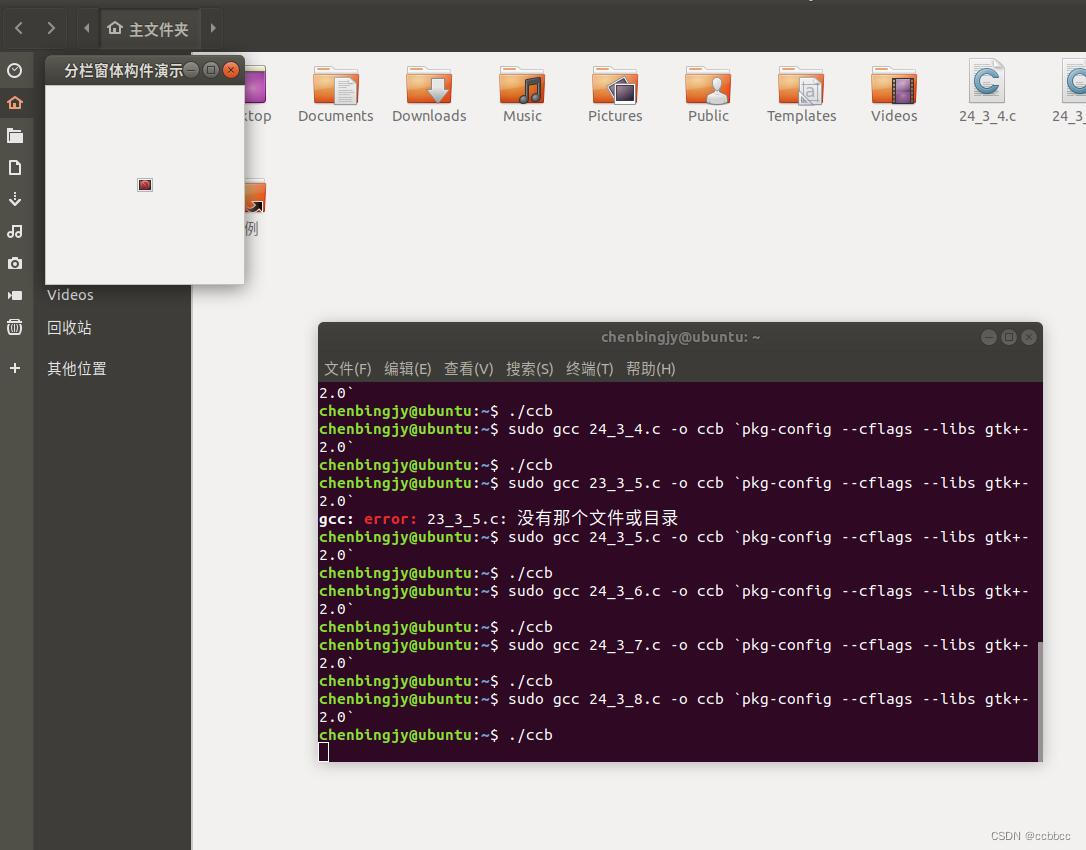

1.6 ImageIO.read

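javax.imageio.ImageIO is a class that ships with the JDK; its read() method loads images. It accepts a URL object and places no restriction on the protocol, so the request is sent even when the response is not an image (in that case read() simply returns null).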

String url = request.getParameter("url");

URL u = new URL(url);

BufferedImage img = ImageIO.read(u);

For example:

@GetMapping("/ImageIO/vul")
public void ImageIO(@RequestParam String url, HttpServletResponse response) {
    try {
        ServletOutputStream outputStream = response.getOutputStream();
        ByteArrayOutputStream os = new ByteArrayOutputStream();
        URL u = new URL(url);
        InputStream istream = u.openStream(); // the request is sent here, for any protocol
        ImageInputStream stream = ImageIO.createImageInputStream(istream); // wrap the stream for ImageIO
        BufferedImage bi = ImageIO.read(stream); // decode into a BufferedImage; null if not an image
        if (bi == null) {
            return; // ImageIO.write would throw IllegalArgumentException on null
        }
        ImageIO.write(bi, "png", os); // re-encode as PNG
        InputStream input = new ByteArrayInputStream(os.toByteArray());
        int len;
        byte[] bytes = new byte[1024];
        while ((len = input.read(bytes)) > 0) {
            outputStream.write(bytes, 0, len);
        }
    } catch (MalformedURLException e) {
        e.printStackTrace();
    } catch (IOException e) {
        e.printStackTrace();
    }
}
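Even when an image endpoint never echoes non-image content, its behavior can still leak information: ImageIO.read(URL) returns null when the resource was fetched but no registered reader can decode it, and throws an IIOException when the resource cannot be opened at all. A minimal sketch of that oracle, with a hypothetical `probe` helper that classifies the outcome for a given URL:

```java
import java.awt.image.BufferedImage;
import java.io.IOException;
import java.net.URL;
import javax.imageio.ImageIO;

public class ImageIOProbe {
    // Hypothetical helper: reports what ImageIO.read(URL) did with the URL
    public static String probe(String url) {
        try {
            BufferedImage img = ImageIO.read(new URL(url)); // fetches the URL regardless of content
            return img == null ? "not-image" : "image";    // null: fetched, but not decodable
        } catch (IOException e) {
            // e.g. IIOException when the resource cannot be opened at all
            return "error: " + e.getMessage();
        }
    }

    public static void main(String[] args) {
        System.out.println(probe(args.length > 0 ? args[0] : "file:///etc/hostname"));
    }
}
```

An attacker who can distinguish these three outcomes (for example through status codes or response times) can tell whether a file exists or a port answers, even without seeing the content.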

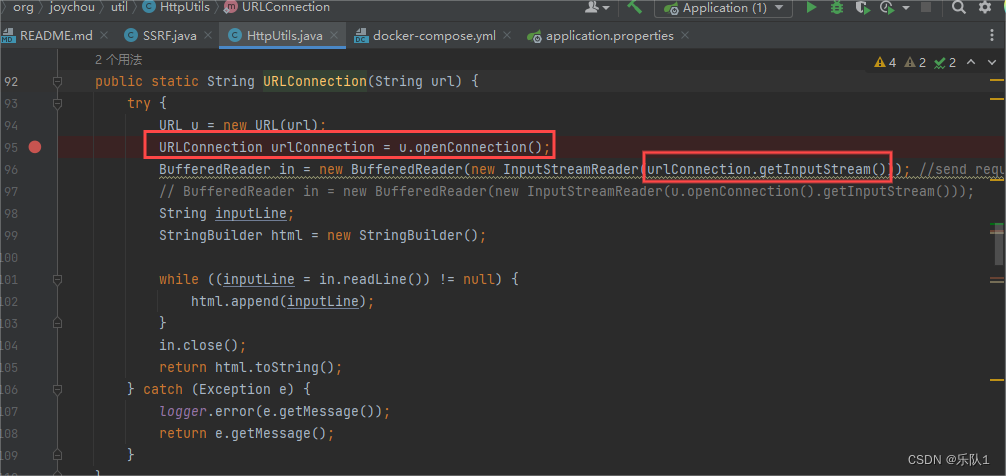

1.7 HttpUtils.URLConnection(url)

This helper uses URLConnection.getInputStream internally.

2 SSRF remediation

/**
 * Check for SSRF (the logic checks whether the URL's IP is an internal IP).
 * If it is an internal IP, return false, meaning checkSSRF fails; otherwise return true (a valid URL returns true).
 * Only the HTTP protocol is supported.
 * The connection timeout is set to 3 s.
 */
public static Boolean checkSSRF(String url) {
    HttpURLConnection connection;
    String finalUrl = url;
    try {
        do {
            // Check whether the current request URL points to an internal IP
            Boolean bRet = isInnerIpFromUrl(finalUrl);
            if (bRet) {
                return false;
            }
            connection = (HttpURLConnection) new URL(finalUrl).openConnection();
            // Disable automatic redirects and handle them manually, so every redirect URL can be checked
            connection.setInstanceFollowRedirects(false);
            connection.setUseCaches(false);
            connection.setConnectTimeout(3 * 1000); // set the connection timeout to 3 s
            connection.setRequestMethod("GET");
            connection.connect(); // send dns request
            int responseCode = connection.getResponseCode(); // send the network request
            if (responseCode >= 300 && responseCode <= 307 && responseCode != 304 && responseCode != 306) {
                String redirectedUrl = connection.getHeaderField("Location");
                if (null == redirectedUrl)
                    break;
                // Resolve a relative Location value against the current URL
                finalUrl = new URL(new URL(finalUrl), redirectedUrl).toString();
                System.out.println("redirected url: " + finalUrl);
            } else
                break;
        } while (connection.getResponseCode() != HttpURLConnection.HTTP_OK);
        connection.disconnect();
    } catch (Exception e) {
        // Note: any exception (e.g. a timeout) is treated as passing the check
        return true;
    }
    return true;
}
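Location headers may be relative (for example `/admin`), and a redirect from an external host to 127.0.0.1 is the classic way to bypass a check that only validates the first URL, so each hop needs to be turned into an absolute URL and checked again. A minimal sketch of the resolution step using the two-argument URL(URL context, String spec) constructor; the class and method names are hypothetical:

```java
import java.net.MalformedURLException;
import java.net.URL;

public class RedirectHop {
    // Resolves a Location header against the URL that returned it, so a
    // relative redirect becomes an absolute URL that can be re-checked.
    public static String nextHop(String currentUrl, String location) throws MalformedURLException {
        return new URL(new URL(currentUrl), location).toString();
    }

    public static void main(String[] args) throws MalformedURLException {
        System.out.println(nextHop("http://example.com/a/b", "/c"));
        System.out.println(nextHop("http://example.com/a/b", "c"));
        System.out.println(nextHop("http://example.com/a/b", "http://127.0.0.1:8080/admin"));
    }
}
```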

2.1 Whitelist validation of the URL and IP

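/**
 * Determine whether the IP of a URL is an internal IP.
 * Internal IP: return true.
 * Non-internal IP: return false.
 */
public static boolean isInnerIpFromUrl(String url) throws Exception {
    String domain = getUrlDomain(url);
    if (domain.equals("")) {
        return true; // treat malformed URLs like internal IPs, i.e. as illegal
    }
    // DomainToIP is not shown here; it is assumed to resolve the domain to an IP string ("" on failure)
    String ip = DomainToIP(domain);
    if (ip.equals("")) {
        return true; // if the domain cannot be resolved to an IP, treat the URL as illegal
    }
    return isInnerIp(ip);
}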

/**
 * Internal IP ranges:
 * 10.0.0.1 - 10.255.255.254 (10.0.0.0/8)
 * 192.168.0.1 - 192.168.255.254 (192.168.0.0/16)
 * 127.0.0.1 - 127.255.255.254 (127.0.0.0/8)
 * 172.16.0.1 - 172.31.255.254 (172.16.0.0/12)
 */

public static boolean isInnerIp(String strIP) throws IOException {

try {

String[] ipArr = strIP.split("\\.");

if (ipArr.length != 4) {

return false;

}

int ip_split1 = Integer.parseInt(ipArr[1]);

return (ipArr[0].equals("10") || ipArr[0].equals("127") ||

(ipArr[0].equals("172") && ip_split1 >= 16 && ip_split1 <= 31) ||

(ipArr[0].equals("192") && ipArr[1].equals("168")));

} catch (Exception e) {

return false;

}

}
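The string-based check above only knows four IPv4 ranges, so it misses addresses such as 0.0.0.0, the link-local range 169.254.0.0/16 (which includes the common cloud metadata address 169.254.169.254), and IPv6 loopback. A sketch of an alternative that relies on the JDK's own InetAddress classification and rejects a host if any of its resolved addresses is internal; the class name is hypothetical, and IPv6 unique-local addresses (fc00::/7) are not covered by these methods. Because the host is resolved here and again when the real request is made, a DNS-rebinding attacker can still return a different address the second time; pinning the connection to the validated address is the usual countermeasure.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

public class InnerIpCheck {
    // Internal if ANY resolved address is loopback (127/8, ::1), site-local
    // (10/8, 172.16/12, 192.168/16), link-local (169.254/16) or wildcard (0.0.0.0).
    // IP literals are parsed without a DNS lookup.
    public static boolean isInnerHost(String host) {
        try {
            for (InetAddress addr : InetAddress.getAllByName(host)) {
                if (addr.isLoopbackAddress() || addr.isSiteLocalAddress()
                        || addr.isLinkLocalAddress() || addr.isAnyLocalAddress()) {
                    return true;
                }
            }
            return false;
        } catch (UnknownHostException e) {
            return true; // unresolvable: reject, like the "treat as illegal" policy above
        }
    }

    public static void main(String[] args) {
        for (String h : new String[] {"10.1.2.3", "169.254.169.254", "0.0.0.0", "::1", "8.8.8.8"}) {
            System.out.println(h + " internal: " + isInnerHost(h));
        }
    }
}
```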

2.2 Restricting protocol and port

/**
 * Get the domain from a URL.
 * Only the http/https protocols are allowed.
 */
public static String getUrlDomain(String url) throws IOException {
    try {
        URL u = new URL(url);
        // Compare exactly: startsWith("http") would accept any protocol beginning with "http"
        if (!u.getProtocol().equals("http") && !u.getProtocol().equals("https")) {
            throw new IOException("Protocol error: " + u.getProtocol());
        }
        return u.getHost();
    } catch (Exception e) {
        return "";
    }
}
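getUrlDomain restricts the protocol but not the port, so a URL such as http://internal-host:6379/ would still pass the protocol check. A minimal sketch of a combined protocol and port allowlist; the class name and the assumption that only the default ports 80 and 443 are legitimate are illustrative and would need to match the real application's needs.

```java
import java.net.MalformedURLException;
import java.net.URL;
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

public class UrlPolicy {
    // Assumed policy: http/https only, on their default ports
    private static final Set<String> ALLOWED_PROTOCOLS = new HashSet<>(Arrays.asList("http", "https"));
    private static final Set<Integer> ALLOWED_PORTS = new HashSet<>(Arrays.asList(80, 443));

    public static boolean isAllowed(String url) {
        try {
            URL u = new URL(url);
            if (!ALLOWED_PROTOCOLS.contains(u.getProtocol())) {
                return false;
            }
            // getPort() is -1 when the URL has no explicit port; use the protocol default instead
            int port = u.getPort() == -1 ? u.getDefaultPort() : u.getPort();
            return ALLOWED_PORTS.contains(port);
        } catch (MalformedURLException e) {
            return false; // unparsable or unknown protocol (e.g. gopher on JDK 8+)
        }
    }

    public static void main(String[] args) {
        for (String s : new String[] {"http://example.com/", "http://example.com:6379/", "file:///etc/passwd"}) {
            System.out.println(s + " allowed: " + isAllowed(s));
        }
    }
}
```

This check is complementary to the internal-IP check: it limits which services can be reached, while the IP check limits which hosts can be reached.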