2023.1.29

在机器学习的过程中,过拟合是一个常见的问题。过拟合指的是只能够拟合训练数据,但不能很好的拟合测试数据;机器学习的目的就是提高泛化能力,即便是没有包括在训练数据里的测试数据,也希望神经网络模型可以正确识别。

关于过拟合现象可能出现的情景在这篇文章:https://blog.csdn.net/m0_72675651/article/details/128671496

对应的,学习抑制过拟合的技巧是非常重要的

一、过拟合:

原因:1,模型拥有大量的参数;2,训练数据很少;

根据教材内容,利用MNIST数据集模拟过拟合现象。基本情况:7层神经网络,每层100个神经元,激活函数为ReLU函数,只用300个训练数据;

实验代码:

from dataset.mnist import load_mnist

import numpy as np

from collections import OrderedDict

import sys

import os

import matplotlib.pyplot as plt

sys.path.append(os.pardir)

def softmax(x):

if x.ndim == 2:

x = x.T

x = x - np.max(x, axis=0)

y = np.exp(x) / np.sum(np.exp(x), axis=0)

return y.T

x = x - np.max(x)

return np.exp(x) / np.sum(np.exp(x))

def sigmoid(x):

return 1 / (1 + np.exp(-x))

class Relu:

def __init__(self):

self.mask = None

def forward(self, x):

self.mask = (x <= 0)

out = x.copy()

out[self.mask] = 0

return out

def backward(self, dout):

dout[self.mask] = 0

dx = dout

return dx

class Sigmoid:

def __init__(self):

self.out = None

def forward(self, x):

out = sigmoid(x)

self.out = out

return out

def backward(self, dout):

dx = dout * (1.0 - self.out) * self.out

return dx

def cross_entropy_error(y, t):

if y.ndim == 1:

t = t.reshape(1, t.size)

y = y.reshape(1, y.size)

if t.size == y.size:

t = t.argmax(axis=1)

batch_size = y.shape[0]

return -np.sum(np.log(y[np.arange(batch_size), t] + 1e-7)) / batch_size

class Affine:

def __init__(self, W, b):

self.W = W

self.b = b

self.x = None

self.original_x_shape = None

# 权重和偏置参数的导数

self.dW = None

self.db = None

def forward(self, x):

# 对应张量

self.original_x_shape = x.shape

x = x.reshape(x.shape[0], -1)

self.x = x

out = np.dot(self.x, self.W) + self.b

return out

def backward(self, dout):

dx = np.dot(dout, self.W.T)

self.dW = np.dot(self.x.T, dout)

self.db = np.sum(dout, axis=0)

dx = dx.reshape(*self.original_x_shape) # 还原输入数据的形状(对应张量)

return dx

class SoftmaxWithLoss:

def __init__(self):

self.loss = None

self.y = None # softmax的输出

self.t = None # 监督数据

def forward(self, x, t):

self.t = t

self.y = softmax(x)

self.loss = cross_entropy_error(self.y, self.t)

return self.loss

def backward(self, dout=1):

batch_size = self.t.shape[0]

if self.t.size == self.y.size: # 监督数据是one-hot-vector的情况

dx = (self.y - self.t) / batch_size

else:

dx = self.y.copy()

dx[np.arange(batch_size), self.t] -= 1

dx = dx / batch_size

return dx

def numerical_gradient(f, x):

h = 1e-4 # 0.0001

grad = np.zeros_like(x)

it = np.nditer(x, flags=['multi_index'], op_flags=['readwrite'])

while not it.finished:

idx = it.multi_index

tmp_val = x[idx]

x[idx] = float(tmp_val) + h

fxh1 = f(x) # f(x+h)

x[idx] = tmp_val - h

fxh2 = f(x) # f(x-h)

grad[idx] = (fxh1 - fxh2) / (2 * h)

x[idx] = tmp_val # 还原值

it.iternext()

return grad

class MultiLayerNet:

"""全连接的多层神经网络

Parameters

----------

input : 输入大小(MNIST的情况下为784)

hidden_list : 隐藏层的神经元数量的列表(e.g. [100, 100, 100])

output : 输出大小(MNIST的情况下为10)

activation : 'relu' or 'sigmoid'

weight_init_std : 指定权重的标准差(e.g. 0.01)

指定'relu'或'he'的情况下设定“He的初始值”

指定'sigmoid'或'xavier'的情况下设定“Xavier的初始值”

weight_decay_lambda : Weight Decay(L2范数)的强度

"""

def __init__(self, input, hidden_list, output,

activation='relu', weight_init_std='relu', weight_decay_lambda=0): # 权值衰减设置为0

self.input_size = input

self.output_size = output

self.hidden_size_list = hidden_list

self.hidden_layer_num = len(hidden_list)

self.weight_decay_lambda = weight_decay_lambda

self.params = {}

# 初始化权重

self.__init_weight(weight_init_std)

# 生成层

activation_layer = {'sigmoid': Sigmoid, 'relu': Relu}

self.layers = OrderedDict()

for idx in range(1, self.hidden_layer_num + 1):

self.layers['Affine' + str(idx)] = Affine(self.params['W' + str(idx)],

self.params['b' + str(idx)])

self.layers['Activation_function' + str(idx)] = activation_layer[activation]()

idx = self.hidden_layer_num + 1

self.layers['Affine' + str(idx)] = Affine(self.params['W' + str(idx)],

self.params['b' + str(idx)])

self.last_layer = SoftmaxWithLoss()

def __init_weight(self, weight_init_std):

"""设定权重的初始值

Parameters

----------

weight_init_std : 指定权重的标准差(e.g. 0.01)

指定'relu'或'he'的情况下设定“He的初始值”

指定'sigmoid'或'xavier'的情况下设定“Xavier的初始值”

"""

all_size_list = [self.input_size] + self.hidden_size_list + [self.output_size]

for idx in range(1, len(all_size_list)):

scale = weight_init_std

if str(weight_init_std).lower() in ('relu', 'he'):

scale = np.sqrt(2.0 / all_size_list[idx - 1]) # 使用ReLU的情况下推荐的初始值

elif str(weight_init_std).lower() in ('sigmoid', 'xavier'):

scale = np.sqrt(1.0 / all_size_list[idx - 1]) # 使用sigmoid的情况下推荐的初始值

self.params['W' + str(idx)] = scale * np.random.randn(all_size_list[idx - 1], all_size_list[idx])

self.params['b' + str(idx)] = np.zeros(all_size_list[idx])

def predict(self, x):

for layer in self.layers.values():

x = layer.forward(x)

return x

def loss(self, x, t):

"""求损失函数

Parameters

----------

x : 输入数据

t : 监督标签

Returns

-------

损失函数的值

"""

y = self.predict(x)

weight_decay = 0

for idx in range(1, self.hidden_layer_num + 2):

W = self.params['W' + str(idx)]

weight_decay += 0.5 * self.weight_decay_lambda * np.sum(W ** 2)

return self.last_layer.forward(y, t) + weight_decay

def accuracy(self, x, t):

y = self.predict(x)

y = np.argmax(y, axis=1)

if t.ndim != 1: t = np.argmax(t, axis=1)

accuracy = np.sum(y == t) / float(x.shape[0])

return accuracy

def numerical_gradient(self, x, t):

"""求梯度(数值微分)

Parameters

----------

x : 输入数据

t : 监督标签

Returns

-------

具有各层的梯度的字典变量

grads['W1']、grads['W2']、...是各层的权重

grads['b1']、grads['b2']、...是各层的偏置

"""

loss_W = lambda W: self.loss(x, t)

grads = {}

for idx in range(1, self.hidden_layer_num + 2):

grads['W' + str(idx)] = numerical_gradient(loss_W, self.params['W' + str(idx)])

grads['b' + str(idx)] = numerical_gradient(loss_W, self.params['b' + str(idx)])

return grads

def gradient(self, x, t):

"""求梯度(误差反向传播法)

Parameters

----------

x : 输入数据

t : 教师标签

Returns

-------

具有各层的梯度的字典变量

grads['W1']、grads['W2']、...是各层的权重

grads['b1']、grads['b2']、...是各层的偏置

"""

# forward

self.loss(x, t)

# backward

dout = 1

dout = self.last_layer.backward(dout)

layers = list(self.layers.values())

layers.reverse()

for layer in layers:

dout = layer.backward(dout)

# 设定

grads = {}

for idx in range(1, self.hidden_layer_num + 2):

grads['W' + str(idx)] = self.layers['Affine' + str(idx)].dW + self.weight_decay_lambda * self.layers[

'Affine' + str(idx)].W

grads['b' + str(idx)] = self.layers['Affine' + str(idx)].db

return grads

class SGD:

"""随机梯度下降法(Stochastic Gradient Descent)"""

def __init__(self, lr=0.01):

self.lr = lr

def update(self, params, grads):

for key in params.keys():

params[key] -= self.lr * grads[key]

(x_train, t_train), (x_test, t_test) = load_mnist(normalize=True)

# 满足训练数据少的条件

# 为了再现过拟合,减少学习数据

x_train = x_train[:300]

t_train = t_train[:300] # 方便观察测试数据也用300张MINIST图片

network = MultiLayerNet(input=784, hidden_list=[100, 100, 100, 100, 100, 100], output=10,

weight_decay_lambda=0) # 满足模型有大量参数的条件

# 超参数

lr = 0.01

optimizer = SGD(lr)

# 按epoch分别算出所有训练数据和所以测试数据的识别精度

max_epochs = 201

train_size = x_train.shape[0]

batch_size = 100

train_loss_list = []

train_acc_list = []

test_acc_list = []

iter_per_epoch = max(train_size / batch_size, 1)

epoch_cnt = 0

for i in range(1000000000):

batch_mask = np.random.choice(train_size, batch_size) # mini_batch 处理

x_batch = x_train[batch_mask]

t_batch = t_train[batch_mask]

grads = network.gradient(x_batch, t_batch)

optimizer.update(network.params, grads) # 更新参数 神经网络的学习

if i % iter_per_epoch == 0:

train_acc = network.accuracy(x_train, t_train) # 计算识别精度

test_acc = network.accuracy(x_test, t_test)

train_acc_list.append(train_acc)

test_acc_list.append(test_acc)

print("epoch:" + str(epoch_cnt) + ", train acc:" + str(train_acc) + ", test acc:" + str(test_acc))

epoch_cnt += 1

if epoch_cnt >= max_epochs:

break

# 3.绘制图形==========

markers = {'train': 'o', 'test': 's'}

x = np.arange(max_epochs)

plt.plot(x, train_acc_list, marker='o', label='train', markevery=10)

plt.plot(x, test_acc_list, marker='s', label='test', markevery=10)

plt.xlabel("epochs")

plt.ylabel("accuracy")

plt.ylim(0, 1.0)

plt.legend(loc='lower right')

plt.show()

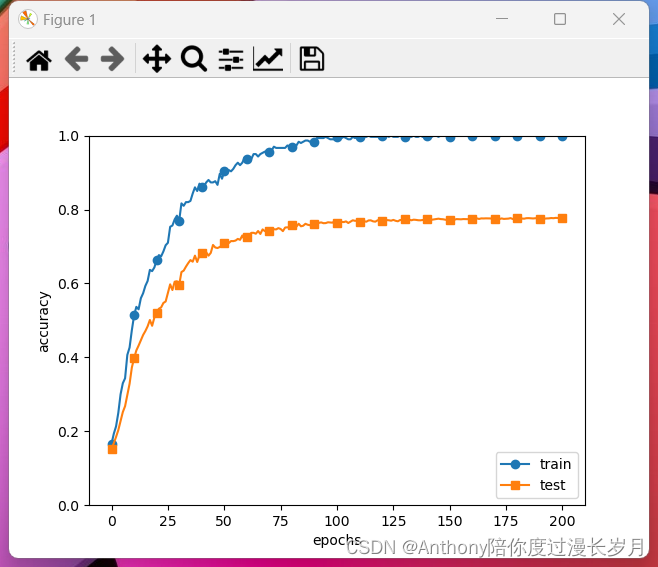

观察结果:

可以看出模型对测试数据的拟合效果不是很好,出现了过拟合现象;

二,权值衰减:

权值衰减是一种用来抑制过拟合的方法,其作用是通过在学习的过程中对大的权重进行惩罚,来抑制过拟合现象(防止权重值更大产生过拟合现象);

神经网络的学习的目的是为了减小损失函数的值。这时,将权重的范数加到损失函数的上,这样就可以抑制权重变大。

计算过程:

有权重 ,其平方范数的权值衰减就是

,然后将这个值,加到损失函数值上

其中

是正则化的超参数,其值越大则衰减效果越强。

用于方便计算反向传播的导数

# weight decay(权值衰减)的设定

# weight_decay_lambda = 0 # 不使用权值衰减的情况 出现过拟合

# weight_decay_lambda = 0.1

# 设定

grads = {}

for idx in range(1, self.hidden_layer_num + 2):

grads['W' + str(idx)] = self.layers['Affine' + str(idx)].dW + self.weight_decay_lambda * self.layers['Affine' + str(idx)].W

grads['b' + str(idx)] = self.layers['Affine' + str(idx)].db

return grads

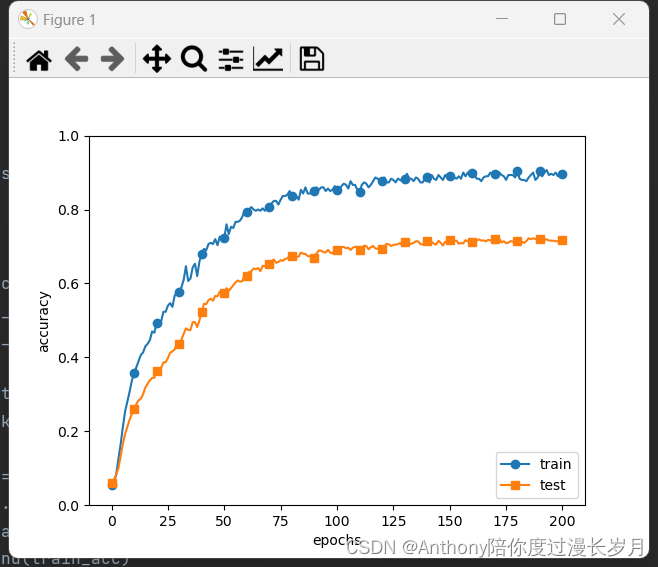

设置 weight_decay_lambda = 0.1 ,带入上一段代码

network = MultiLayerNet(input_size=784, hidden_size_list=[100, 100, 100, 100, 100, 100], output_size=10, weight_decay_lambda=weight_decay_lambda) 实验结果:

在没有使用权值衰减时,训练数据的精度达到了100%,训练数据的精度和测试数据的精度相差很大;使用以后,训练数据的精度并没有达到100%,而且测试数据与训练数据的精度差距也变小。

MNIST数据集的导入代码:

代码需要在一个命名为命名为dataset的文件夹下命名为mnist,并且与上个代码在同一个文件夹;

# coding: utf-8

try:

import urllib.request

except ImportError:

raise ImportError('You should use Python 3.x')

import os.path

import gzip

import pickle

import os

import numpy as np

url_base = 'http://yann.lecun.com/exdb/mnist/'

key_file = {

'train_img':'train-images-idx3-ubyte.gz',

'train_label':'train-labels-idx1-ubyte.gz',

'test_img':'t10k-images-idx3-ubyte.gz',

'test_label':'t10k-labels-idx1-ubyte.gz'

}

dataset_dir = os.path.dirname(os.path.abspath(__file__))

save_file = dataset_dir + "/mnist.pkl"

train_num = 60000

test_num = 10000

img_dim = (1, 28, 28)

img_size = 784

def _download(file_name):

file_path = dataset_dir + "/" + file_name

if os.path.exists(file_path):

return

print("Downloading " + file_name + " ... ")

urllib.request.urlretrieve(url_base + file_name, file_path)

print("Done")

def download_mnist():

for v in key_file.values():

_download(v)

def _load_label(file_name):

file_path = dataset_dir + "/" + file_name

print("Converting " + file_name + " to NumPy Array ...")

with gzip.open(file_path, 'rb') as f:

labels = np.frombuffer(f.read(), np.uint8, offset=8)

print("Done")

return labels

def _load_img(file_name):

file_path = dataset_dir + "/" + file_name

print("Converting " + file_name + " to NumPy Array ...")

with gzip.open(file_path, 'rb') as f:

data = np.frombuffer(f.read(), np.uint8, offset=16)

data = data.reshape(-1, img_size)

print("Done")

return data

def _convert_numpy():

dataset = {}

dataset['train_img'] = _load_img(key_file['train_img'])

dataset['train_label'] = _load_label(key_file['train_label'])

dataset['test_img'] = _load_img(key_file['test_img'])

dataset['test_label'] = _load_label(key_file['test_label'])

return dataset

def init_mnist():

download_mnist()

dataset = _convert_numpy()

print("Creating pickle file ...")

with open(save_file, 'wb') as f:

pickle.dump(dataset, f, -1)

print("Done!")

def _change_one_hot_label(X):

T = np.zeros((X.size, 10))

for idx, row in enumerate(T):

row[X[idx]] = 1

return T

def load_mnist(normalize=True, flatten=True, one_hot_label=False):

"""读入MNIST数据集

Parameters

----------

normalize : 将图像的像素值正规化为0.0~1.0

one_hot_label :

one_hot_label为True的情况下,标签作为one-hot数组返回

one-hot数组是指[0,0,1,0,0,0,0,0,0,0]这样的数组

flatten : 是否将图像展开为一维数组

Returns

-------

(训练图像, 训练标签), (测试图像, 测试标签)

"""

if not os.path.exists(save_file):

init_mnist()

with open(save_file, 'rb') as f:

dataset = pickle.load(f)

if normalize:

for key in ('train_img', 'test_img'):

dataset[key] = dataset[key].astype(np.float32)

dataset[key] /= 255.0

if one_hot_label:

dataset['train_label'] = _change_one_hot_label(dataset['train_label'])

dataset['test_label'] = _change_one_hot_label(dataset['test_label'])

if not flatten:

for key in ('train_img', 'test_img'):

dataset[key] = dataset[key].reshape(-1, 1, 28, 28)

return (dataset['train_img'], dataset['train_label']), (dataset['test_img'], dataset['test_label'])

if __name__ == '__main__':

init_mnist()