Contents

- Preface

- I. Overview

- II. Worked Example

- 1. Program description

- 2. Code example

- Summary

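Preface

This post introduces the basic concepts of convolutional neural networks (CNNs) and walks through a concrete example.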

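I. Overview

1. A network built entirely by chaining linear layers in series is a fully connected network.

2. A fully connected network loses some of the spatial information in an image, because the image is flattened and stored as a one-dimensional vector. A CNN keeps the data in the image's original layout, so the spatial information is preserved.

3. After a convolution, the image is still a three-dimensional tensor (channels × height × width).

4. After subsampling (downsampling), the number of channels is unchanged, but the height and width of the image shrink. This reduces the amount of data and lowers the computational cost.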

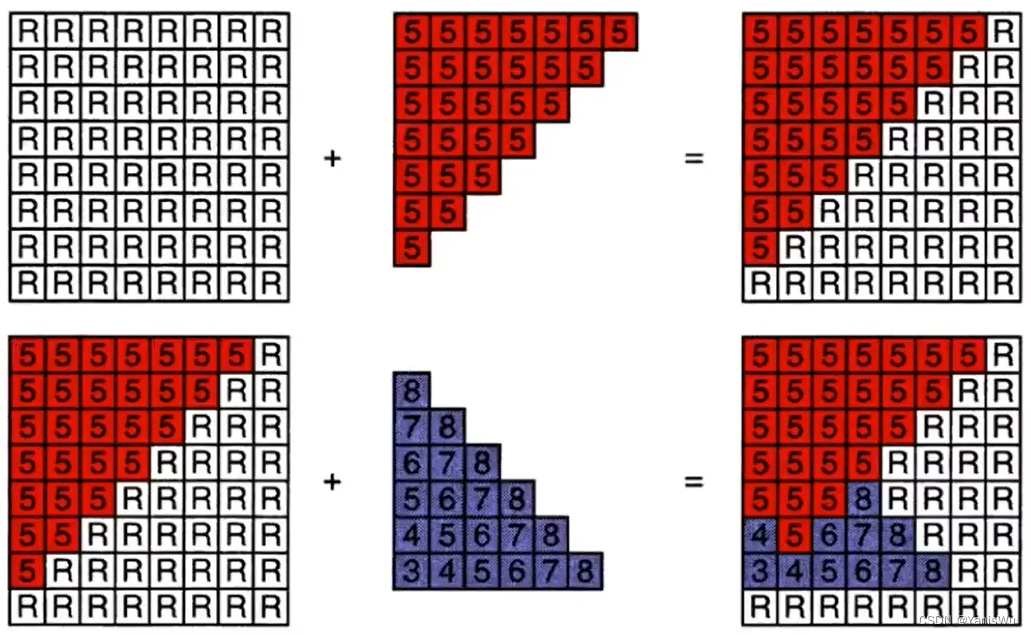

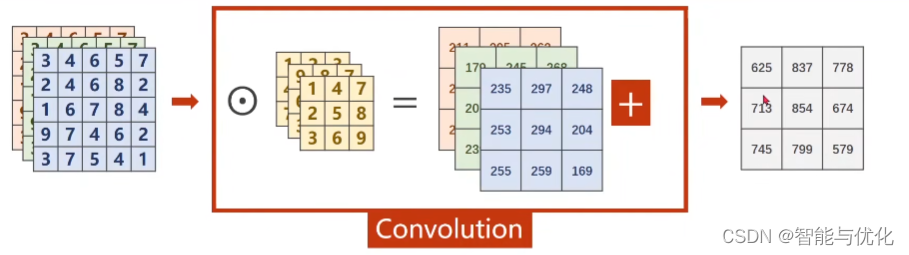

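5. Diagram of the convolution operation.

6. The padding parameter: wrap extra rings around the outside of the input and fill them with 0.

7. The stride parameter: the step by which the kernel moves during the convolution.

8. Downsampling is usually done with a max pooling layer. The number of channels stays the same, while the width and height of the image change.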
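The points about convolution, padding, stride, and max pooling can be made concrete with a small pure-Python sketch. This is only an illustration under simplifying assumptions (a single channel, a square kernel, nested lists instead of tensors); the helper names `pad2d`, `conv2d`, and `max_pool2d` are hypothetical and are not the PyTorch API. Like `torch.nn.Conv2d`, the sketch computes a cross-correlation, meaning the kernel is not flipped.

```python
def pad2d(image, padding):
    """Surround a 2D list with `padding` rings of zeros."""
    width = len(image[0]) + 2 * padding
    padded = [[0] * width for _ in range(padding)]
    for row in image:
        padded.append([0] * padding + list(row) + [0] * padding)
    padded.extend([0] * width for _ in range(padding))
    return padded


def conv2d(image, kernel, stride=1, padding=0):
    """Single-channel 2D cross-correlation with a square kernel."""
    x = pad2d(image, padding)
    k = len(kernel)
    out_h = (len(x) - k) // stride + 1
    out_w = (len(x[0]) - k) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            top, left = i * stride, j * stride
            # Multiply the window element-wise with the kernel and sum.
            acc = 0
            for di in range(k):
                for dj in range(k):
                    acc += x[top + di][left + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


def max_pool2d(image, size=2):
    """Non-overlapping max pooling with a size x size window."""
    out_h, out_w = len(image) // size, len(image[0]) // size
    return [
        [
            max(image[i * size + di][j * size + dj]
                for di in range(size) for dj in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
kernel = [[1, 0], [0, 1]]  # adds the top-left and bottom-right of each window

print(conv2d(image, kernel))            # [[7, 9, 11], [15, 17, 19], [23, 25, 27]]
print(conv2d(image, kernel, stride=2))  # [[7, 11], [23, 27]]
print(len(conv2d(image, kernel, padding=1)))  # 5 (padding enlarges the output)
print(max_pool2d(image))                # [[6, 8], [14, 16]]
```

A 4×4 input with a 2×2 kernel gives a 3×3 output at stride 1, a 2×2 output at stride 2, and a 5×5 output once one ring of zeros is added. Max pooling with a 2×2 window halves the height and width.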

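II. Worked Example

1. Program description

The input has shape 1×28×28. Ten 1×5×5 convolution kernels turn it into 10×24×24, and a 2×2 max pooling reduces that to 10×12×12. Twenty 10×5×5 convolution kernels then produce 20×8×8, and a second 2×2 max pooling gives 20×4×4. The result is flattened into a one-dimensional vector of 320 elements, which a fully connected layer maps to a vector of 10 outputs.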
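The shape arithmetic above can be checked with a few lines of plain Python. This is a minimal sketch that assumes the standard output-size rule for a convolution, floor((size + 2·padding − kernel) / stride) + 1, and non-overlapping pooling; the helper names `conv_out`, `pool_out`, and `trace_shapes` are made up for illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output height/width of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, window):
    """Output height/width of non-overlapping pooling along one dimension."""
    return size // window


def trace_shapes(size=28):
    """Follow a 1 x size x size input through conv-pool-conv-pool."""
    shapes = [(1, size, size)]
    size = conv_out(size, kernel=5)   # 10 kernels of 1x5x5
    shapes.append((10, size, size))
    size = pool_out(size, window=2)   # 2x2 max pooling
    shapes.append((10, size, size))
    size = conv_out(size, kernel=5)   # 20 kernels of 10x5x5
    shapes.append((20, size, size))
    size = pool_out(size, window=2)   # 2x2 max pooling
    shapes.append((20, size, size))
    return shapes


shapes = trace_shapes()
for shape in shapes:
    print(shape)

channels, height, width = shapes[-1]
flat_features = channels * height * width
print(flat_features)  # 320, the input size of the fully connected layer
```

The final product 20 × 4 × 4 = 320 is why the fully connected layer in the code takes 320 input features.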

2. Code example

The code is as follows (example):

```python
import os
import pickle

import torch
import torch.nn.functional as F
import torch.optim as optim
from torch.utils.data import DataLoader
from torchvision import datasets, transforms


# design model using class
class Net(torch.nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = torch.nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = torch.nn.Conv2d(10, 20, kernel_size=5)
        self.pooling = torch.nn.MaxPool2d(2)
        self.fc = torch.nn.Linear(320, 10)

    def forward(self, x):
        # x has shape (n, 1, 28, 28)
        batch_size = x.size(0)
        x = F.relu(self.pooling(self.conv1(x)))  # -> (n, 10, 12, 12)
        x = F.relu(self.pooling(self.conv2(x)))  # -> (n, 20, 4, 4)
        x = x.view(batch_size, -1)  # flatten; -1 is inferred as 320 here
        x = self.fc(x)  # -> (n, 10), raw scores for CrossEntropyLoss
        return x


# training cycle: forward, backward, update
def train(epoch):
    running_loss = 0.0
    loss_s = 0.0
    for batch_idx, data in enumerate(train_loader, 0):
        inputs, target = data
        optimizer.zero_grad()

        outputs = model(inputs)
        loss = criterion(outputs, target)
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        loss_s += loss.item()
        if batch_idx % 300 == 299:
            print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))
            running_loss = 0.0
    # mean loss over all batches of this epoch
    return loss_s / len(train_loader)


def test():
    correct = 0
    total = 0
    with torch.no_grad():
        for data in test_loader:
            images, labels = data
            outputs = model(images)
            # index of the largest score along dim 1 is the predicted class
            _, predicted = torch.max(outputs, dim=1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print('accuracy on test set: %d %% ' % (100 * correct / total))
    return 100 * correct / total


if __name__ == '__main__':
    # prepare dataset
    batch_size = 64
    transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])
    train_dataset = datasets.MNIST(root='../dataset/mnist/', train=True, download=True, transform=transform)
    train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)
    test_dataset = datasets.MNIST(root='../dataset/mnist/', train=False, download=True, transform=transform)
    test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)

    model = Net()

    # construct loss and optimizer
    criterion = torch.nn.CrossEntropyLoss()
    optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)

    epoch_list = []
    loss_list = []
    accuracy_list = []
    for epoch in range(10):
        epoch_list.append(epoch)
        loss_lis = train(epoch)
        loss_list.append(loss_lis)
        tes = test()
        accuracy_list.append(tes)

    # create the output directory first; open() fails if '9/' does not exist
    os.makedirs('9', exist_ok=True)
    with open('9/epoch_list.pkl', 'wb') as f:
        pickle.dump(epoch_list, f)
    with open('9/loss_list.pkl', 'wb') as f:
        pickle.dump(loss_list, f)
    with open('9/accuracy_list.pkl', 'wb') as f:
        pickle.dump(accuracy_list, f)
```

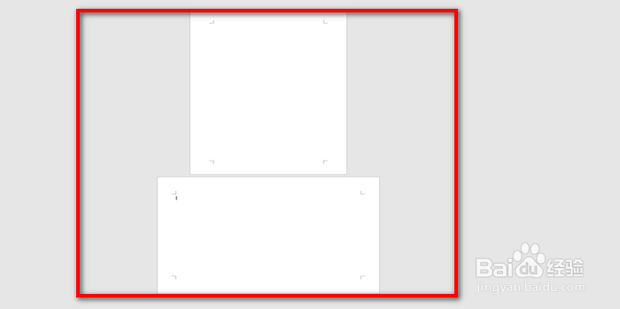

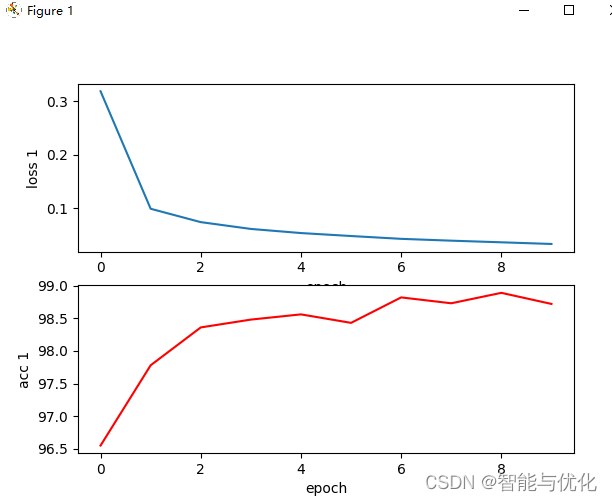

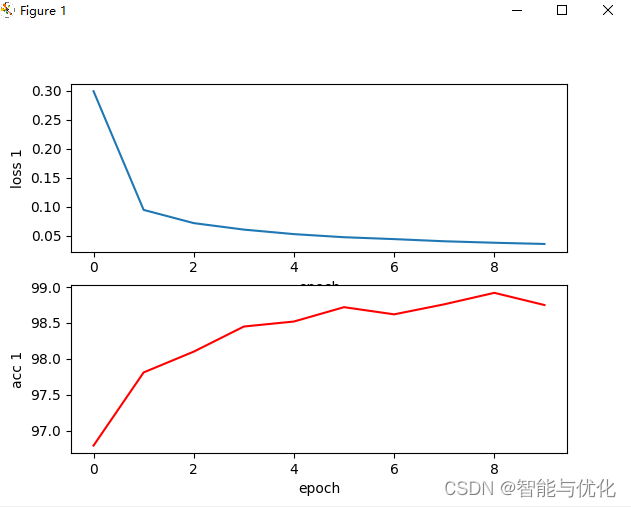

The plotting program is as follows:

```python
import pickle

import matplotlib.pyplot as plt

with open('9/epoch_list.pkl', 'rb') as f:
    loaded_epoch_list = pickle.load(f)
with open('9/loss_list.pkl', 'rb') as f:
    loaded_loss_list = pickle.load(f)
with open('9/accuracy_list.pkl', 'rb') as f:
    loaded_acc_list = pickle.load(f)

plt.subplot(2, 1, 1)  # create subplots: 2 rows, 1 column, first subplot
plt.plot(loaded_epoch_list, loaded_loss_list)
plt.xlabel('epoch')
plt.ylabel('loss 1')

plt.subplot(2, 1, 2)  # create subplots: 2 rows, 1 column, second subplot
plt.plot(loaded_epoch_list, loaded_acc_list, 'r')
plt.xlabel('epoch')
plt.ylabel('acc 1')

plt.show()
```

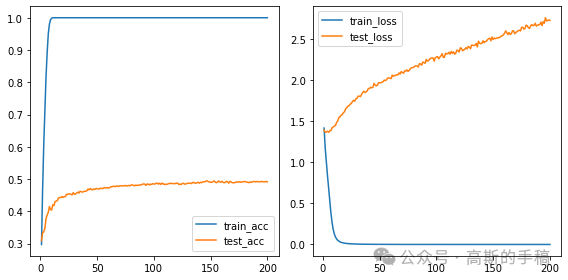

得到如下结果:

The program for running on a GPU is as follows:

```python
import os
import pickle
import time

import torch
import torch.nn.functional as F
import torch.optim as optim
from torch.utils.data import DataLoader
from torchvision import datasets, transforms


# design model using class
class Net(torch.nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = torch.nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = torch.nn.Conv2d(10, 20, kernel_size=5)
        self.pooling = torch.nn.MaxPool2d(2)
        self.fc = torch.nn.Linear(320, 10)

    def forward(self, x):
        # x has shape (n, 1, 28, 28)
        batch_size = x.size(0)
        x = F.relu(self.pooling(self.conv1(x)))  # -> (n, 10, 12, 12)
        x = F.relu(self.pooling(self.conv2(x)))  # -> (n, 20, 4, 4)
        x = x.view(batch_size, -1)  # flatten; -1 is inferred as 320 here
        x = self.fc(x)  # -> (n, 10), raw scores for CrossEntropyLoss
        return x


# training cycle: forward, backward, update
def train(epoch):
    running_loss = 0.0
    loss_s = 0.0
    for batch_idx, data in enumerate(train_loader, 0):
        inputs, target = data
        # move the batch to the same device as the model
        inputs, target = inputs.to(device), target.to(device)
        optimizer.zero_grad()

        outputs = model(inputs)
        loss = criterion(outputs, target)
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        loss_s += loss.item()
        if batch_idx % 300 == 299:
            print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))
            running_loss = 0.0
    # mean loss over all batches of this epoch
    return loss_s / len(train_loader)


def test():
    correct = 0
    total = 0
    with torch.no_grad():
        for data in test_loader:
            images, labels = data
            images, labels = images.to(device), labels.to(device)
            outputs = model(images)
            # index of the largest score along dim 1 is the predicted class
            _, predicted = torch.max(outputs, dim=1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print('accuracy on test set: %d %% ' % (100 * correct / total))
    return 100 * correct / total


if __name__ == '__main__':
    start_time = time.time()

    # prepare dataset
    batch_size = 64
    transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.1307,), (0.3081,))])
    train_dataset = datasets.MNIST(root='../dataset/mnist/', train=True, download=True, transform=transform)
    train_loader = DataLoader(train_dataset, shuffle=True, batch_size=batch_size)
    test_dataset = datasets.MNIST(root='../dataset/mnist/', train=False, download=True, transform=transform)
    test_loader = DataLoader(test_dataset, shuffle=False, batch_size=batch_size)

    model = Net()
    # use the GPU when one is available, otherwise fall back to the CPU
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    model.to(device)

    # construct loss and optimizer
    criterion = torch.nn.CrossEntropyLoss()
    optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)

    epoch_list = []
    loss_list = []
    accuracy_list = []
    for epoch in range(10):
        epoch_list.append(epoch)
        loss_lis = train(epoch)
        loss_list.append(loss_lis)
        tes = test()
        accuracy_list.append(tes)

    # create the output directory first; open() fails if '9/' does not exist
    os.makedirs('9', exist_ok=True)
    with open('9/epoch_list.pkl', 'wb') as f:
        pickle.dump(epoch_list, f)
    with open('9/loss_list.pkl', 'wb') as f:
        pickle.dump(loss_list, f)
    with open('9/accuracy_list.pkl', 'wb') as f:
        pickle.dump(accuracy_list, f)

    end_time = time.time()
    # elapsed time covers dataset loading, training, testing, and saving
    print('training time: %.2f s' % (end_time - start_time))
```

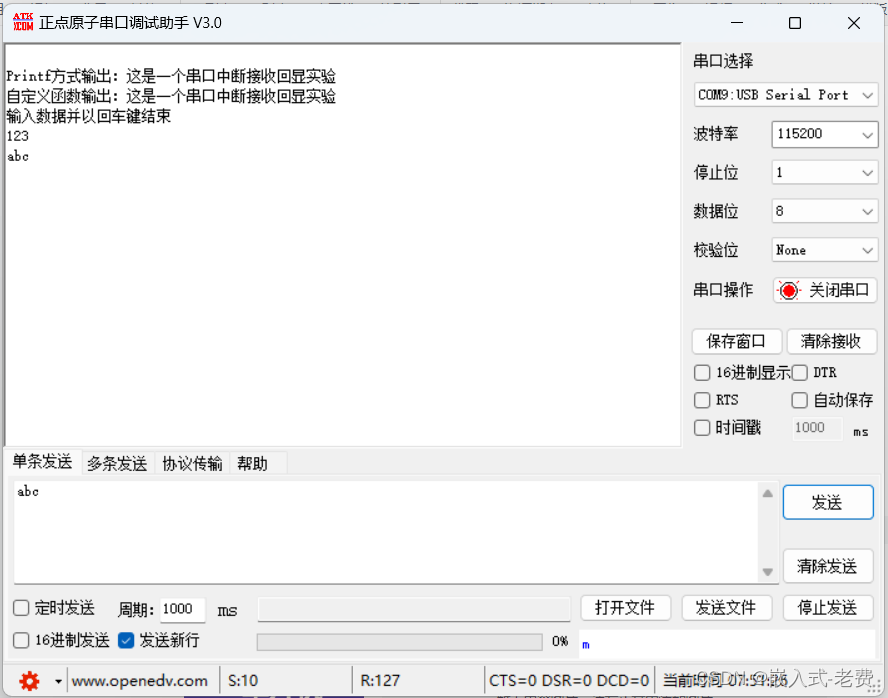

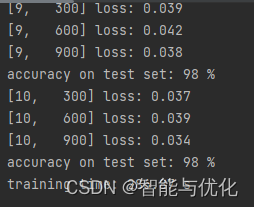

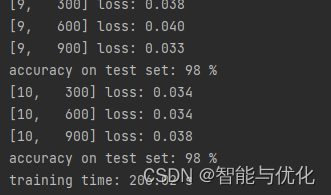

得到如下结果:

Summary

PyTorch Study Notes 9: Convolutional Neural Networks
