Sentiment Analysis (1): A Naive Bayes Implementation with NLTK

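A naive Bayes classifier can be used to estimate the probability that an input text belongs to each of a set of classes, for example to predict whether a review is positive or negative.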

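It is "naive" because it assumes that the words in a text are independent of one another (whereas in real natural language, word order conveys contextual information). Despite this assumption, naive Bayes can reach high accuracy when predicting classes from a small training set.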
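To make the independence assumption concrete, here is a minimal from-scratch sketch. It is a multinomial word-count variant with add-one smoothing, not NLTK's implementation, and the four training sentences are made up for illustration. Each class score is the log prior plus the sum of per-word log probabilities, which is exactly what treating words as independent means.

```python
import math
from collections import Counter

# Hypothetical toy training data, for illustration only
train = [
    ("great fun great cast", "positive"),
    ("wonderful moving story", "positive"),
    ("dull plot awful acting", "negative"),
    ("awful waste of time", "negative"),
]

def train_nb(samples):
    """Count documents per label and word occurrences per label."""
    label_counts = Counter()
    word_counts = {}
    vocab = set()
    for text, label in samples:
        label_counts[label] += 1
        words = text.split()
        word_counts.setdefault(label, Counter()).update(words)
        vocab.update(words)
    return label_counts, word_counts, vocab

def predict_nb(text, label_counts, word_counts, vocab):
    """Return the label with the highest log posterior score."""
    total_docs = sum(label_counts.values())
    scores = {}
    for label, n_docs in label_counts.items():
        total_words = sum(word_counts[label].values())
        score = math.log(n_docs / total_docs)  # log prior
        for word in text.split():
            if word not in vocab:
                continue  # ignore words never seen in training
            # add-one (Laplace) smoothing avoids zero probabilities
            p = (word_counts[label][word] + 1) / (total_words + len(vocab))
            score += math.log(p)  # independence: log probabilities add up
        scores[label] = score
    return max(scores, key=scores.get)

model = train_nb(train)
print(predict_nb("awful dull story", *model))
```

Word order plays no role here: shuffling the words of the input leaves every score unchanged.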

Recommended reading: Baines, O., Naive Bayes: Machine Learning and Text Classification Application of Bayes' Theorem.

The code for this article has been uploaded to my GitHub; download it from there if you need it.

1. Dataset

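We use the imdb_reviews dataset provided by tensorflow-datasets. It is a large movie review dataset for binary sentiment classification and contains substantially more data than earlier benchmark datasets. It provides 25000 highly polar movie reviews for training and 25000 for testing, plus additional unlabeled data.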

2. Environment setup

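Install tensorflow and tensorflow-datasets, paying attention to version compatibility. I ran into trouble here: it is best not to use very recent versions, otherwise you will hit more incompatibility problems.

First, create a dedicated virtual environment.

Install tensorflow.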

pip install tensorflow==2.0 -i https://pypi.tuna.tsinghua.edu.cn/simple/

Install tensorflow-datasets.

pip install tensorflow-datasets==2.0.0 -i https://pypi.tuna.tsinghua.edu.cn/simple/

Install nltk.

pip install nltk -i https://pypi.tuna.tsinghua.edu.cn/simple/

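If importing nltk raises an error that suggests running nltk.download('omw-1.4'), follow the prompt to download the resource, or download the file manually from the NLTK Corpora site and place it in the corresponding directory.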

The remaining packages install without trouble.

Before writing code in Jupyter Notebook, make sure the notebook is running in the correct virtual environment. You can check as follows.

import sys

sys.executable

The output should be the interpreter path inside the virtual environment chosen for this project.
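As a complement to reading the path by eye, the check can be scripted. The sketch below assumes the environment was created with venv or virtualenv, where sys.prefix differs from sys.base_prefix; conda environments do not follow this rule, so there the printed path remains the thing to inspect. The helper name in_virtualenv is made up for this example.

```python
import sys

def in_virtualenv():
    """Return True when the interpreter runs inside a venv/virtualenv."""
    # In a venv, sys.prefix points at the environment while
    # sys.base_prefix points at the base Python installation.
    base = getattr(sys, "base_prefix", sys.prefix)
    return sys.prefix != base

print("interpreter:", sys.executable)
print("inside a virtual environment:", in_virtualenv())
```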

3. Importing packages

import nltk
from nltk.metrics.scores import precision, recall, f_measure
import pandas as pd
import collections

import sys
sys.path.append("..")  # Adds higher directory to python modules path.

from NLPmoviereviews.data import load_data_sent
from NLPmoviereviews.utilities import preprocessing

Here, NLPmoviereviews.data wraps the dataset download using tensorflow-datasets. (Note: NLPmoviereviews is a package I wrote myself.)

import tensorflow_datasets as tfds
from tensorflow.keras.preprocessing.text import text_to_word_sequence

def load_data(percentage_of_sentences=10):
    """
    Load the imdb_reviews dataset for given percentage of the dataset.
    Returns train-test sets
    X--> returned as list of words in lower case
    y--> returned as two classes 0 and 1 for bad and good reviews
    """
    train_data, test_data = tfds.load(name="imdb_reviews", split=["train", "test"], batch_size=-1, as_supervised=True)
    train_sentences, y_train = tfds.as_numpy(train_data)
    test_sentences, y_test = tfds.as_numpy(test_data)

    # Take only a given percentage of the entire data
    if percentage_of_sentences is not None:
        assert(percentage_of_sentences> 0 and percentage_of_sentences<=100)
        len_train = int(percentage_of_sentences/100*len(train_sentences))
        train_sentences, y_train = train_sentences[:len_train], y_train[:len_train]
        len_test = int(percentage_of_sentences/100*len(test_sentences))
        test_sentences, y_test = test_sentences[:len_test], y_test[:len_test]

    X_train = [text_to_word_sequence(_.decode("utf-8")) for _ in train_sentences]
    X_test = [text_to_word_sequence(_.decode("utf-8")) for _ in test_sentences]
    return X_train, y_train, X_test, y_test

def load_data_sent(percentage_of_sentences=10):
    """
    Load the imdb_reviews dataset for given percentage of the dataset.
    Returns train-test sets
    X--> returned as sentences in lower case
    y--> returned as two classes 0 and 1 for bad and good reviews
    """
    X_train, y_train, X_test, y_test = load_data(percentage_of_sentences)
    X_train = [' '.join(_) for _ in X_train]
    X_test = [' '.join(_) for _ in X_test]
    return X_train, y_train, X_test, y_test

NLPmoviereviews.utilities contains helper functions such as preprocessing and embed_sentence_with_TF.

import string
from nltk.corpus import stopwords
from nltk import word_tokenize
from nltk.stem import WordNetLemmatizer

def preprocessing(sentence):
    """
    Use NLTK to clean text: remove numbers, stop words, and lemmatize verbs and nouns
    """
    # Basic cleaning
    sentence = sentence.strip()  # remove whitespaces
    sentence = sentence.lower()  # lowercasing
    sentence = ''.join(char for char in sentence if not char.isdigit())  # removing numbers

    # Advanced cleaning
    for punctuation in string.punctuation:
        sentence = sentence.replace(punctuation, '')  # removing punctuation
    tokenized_sentence = word_tokenize(sentence)  # tokenizing
    stop_words = set(stopwords.words('english'))  # defining stopwords
    tokenized_sentence_cleaned = [w for w in tokenized_sentence
                                  if not w in stop_words]  # remove stopwords

    # 1 - Lemmatizing the verbs
    verb_lemmatized = [WordNetLemmatizer().lemmatize(word, pos = "v")  # v --> verbs
                       for word in tokenized_sentence_cleaned]

    # 2 - Lemmatizing the nouns
    noun_lemmatized = [WordNetLemmatizer().lemmatize(word, pos = "n")  # n --> nouns
                       for word in verb_lemmatized]

    cleaned_sentence= ' '.join(w for w in noun_lemmatized)
    return cleaned_sentence
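To see what the basic cleaning steps of preprocessing do without installing NLTK, here is a dependency-free sketch. It covers only stripping, lowercasing, and removing digits and punctuation; stop-word removal and lemmatization are left out. The name basic_clean is made up for this example, and unlike the char.isdigit() test above it removes only ASCII digits.

```python
import string

def basic_clean(sentence):
    """Strip, lowercase, and drop ASCII digits and punctuation."""
    sentence = sentence.strip().lower()
    # Translation table that deletes every digit and punctuation character
    table = str.maketrans("", "", string.digits + string.punctuation)
    return sentence.translate(table)

cleaned = basic_clean("  It's been 19 years, and I STILL remember it!  ")
print(cleaned.split())
```

Because punctuation is deleted rather than replaced by a space, contractions such as "it's" collapse into "its"; the same happens in the NLTK-based function above.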

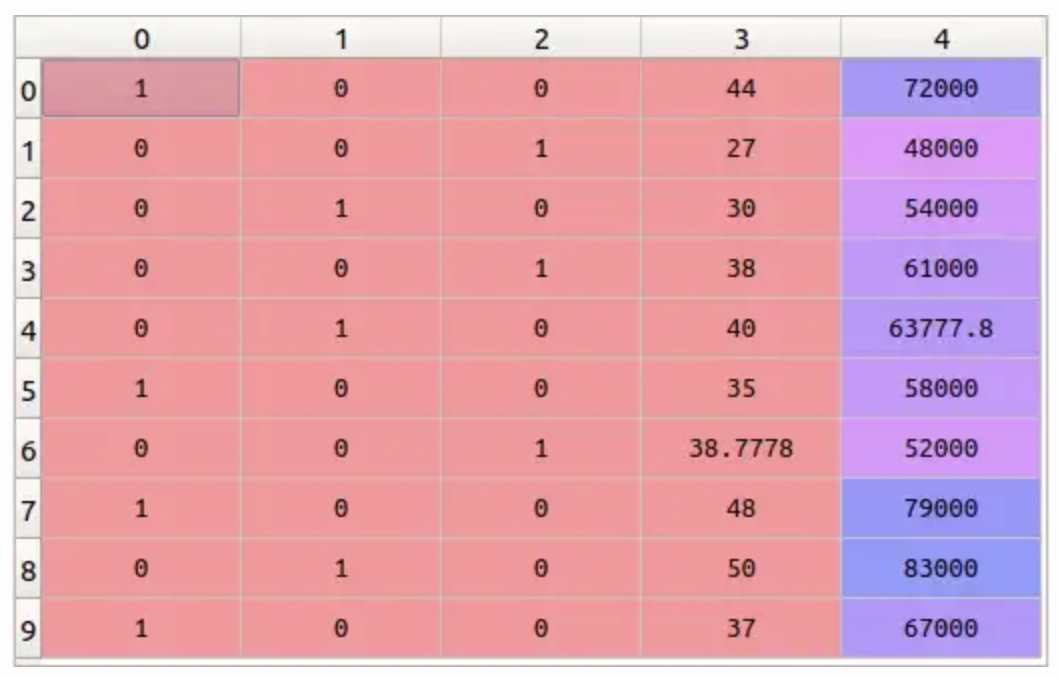

4. Loading the data

# load data

X_train, y_train, X_test, y_test = load_data_sent(percentage_of_sentences=10)

X_train

X_train is a list holding the review texts one by one, as shown below.

["this is a big step down after the surprisingly enjoyable original this sequel isn't nearly as fun as part one and it instead spends too much time on plot development tim thomerson is still the best thing about this series but his wisecracking is toned down in this entry the performances are all adequate but this time the script lets us down the action is merely routine and the plot is only mildly interesting so i need lots of silly laughs in order to stay entertained during a trancers movie unfortunately the laughs are few and far between and so this film is watchable at best",

"perhaps because i was so young innocent and brainwashed when i saw it this movie was the cause of many sleepless nights for me i haven't seen it since i was in seventh grade at a presbyterian school so i am not sure what effect it would have on me now however i will say that it left an impression on me and most of my friends it did serve its purpose at least until we were old enough and knowledgeable enough to analyze and create our own opinions i was particularly terrified of what the newly converted post rapture christians had to endure when not receiving the mark of the beast i don't want to spoil the movie for those who haven't seen it so i will not mention details of the scenes but i can still picture them in my head and it's been 19 years",

...]

y_train stores the polarity of each text: 0 (negative) or 1 (positive).

y_train

5. Data preprocessing

The rm_custom_stops function removes custom stop words.

# remove custom stop-words
def rm_custom_stops(sentence):
    '''
    Custom stop word remover

    Parameters:
        sentence (str): a string of words

    Returns:
        list_of_words (list): cleaned sentence as a list of words
    '''
    words = sentence.split()
    stop_words = {'br', 'movie', 'film'}
    return [w for w in words if not w in stop_words]
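A quick usage sketch of the function above on a made-up sentence. The function is repeated so the snippet runs on its own. The 'br' entry is there because IMDB reviews contain HTML line breaks (`<br />`), which turn into the bare token "br" once punctuation has been stripped; "movie" and "film" appear in reviews of either polarity, so they are presumably dropped as uninformative.

```python
def rm_custom_stops(sentence):
    """Split on whitespace and drop the custom stop words."""
    words = sentence.split()
    stop_words = {'br', 'movie', 'film'}
    return [w for w in words if w not in stop_words]

# Hypothetical cleaned review text, for illustration only
sample = "great film br br the movie has a moving story"
print(rm_custom_stops(sample))
```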

The process_df function cleans the data and converts its format.

# perform preprocessing (cleaning) & transform to dataframe
def process_df(X, y):
    '''
    Transform texts and labels into dataframe of
    cleaned texts (as list of words) and human readable target labels

    Parameters:
        X (list): list of strings (reviews)
        y (list): list of target labels (0/1)

    Returns:
        df (dataframe): dataframe of processed reviews (as list of words)
                        and corresponding sentiment label (positive/negative)
    '''
    # create dataframe from data
    d = {'text': X, 'sentiment': y}
    df = pd.DataFrame(d)

    # make sentiment human-readable
    df['sentiment'] = df.sentiment.map(lambda x: 'positive' if x==1 else 'negative')

    # clean and split text into list of words
    df['text'] = df.text.apply(preprocessing)
    df['text'] = df.text.apply(rm_custom_stops)

    # Generate the feature sets for the movie review documents one by one
    return df

Now process the data.

# process data

train_df = process_df(X_train, y_train)

test_df = process_df(X_test, y_test)

Inspect the converted training data, train_df.

# inspect dataframe

train_df.head()

6. Getting the most common words

Get the frequency distribution of the words in the corpus and select the 2000 most common words.

# get frequency distribution of words in corpus & select 2000 most common words
def most_common(df, n=2000):
    '''
    Get n most common words from data frame of text reviews

    Parameters:
        df (dataframe): dataframe with column of processed text reviews
        n (int): number of most common words to get

    Returns:
        most_common_words (list): list of n most common words
    '''
    # create list of all words in the train data
    complete_corpus = df.text.sum()

    # Construct a frequency dict of all words in the overall corpus
    all_words = nltk.FreqDist(w.lower() for w in complete_corpus)

    # select the 2,000 most frequent words (incl. frequency)
    most_common_words = all_words.most_common(n)
    return [item[0] for item in most_common_words]

# get 2000 most common words

most_common_2000 = most_common(train_df)

# inspect first 10 most common words

most_common_2000[0:10]
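nltk.FreqDist is a subclass of collections.Counter, so the same selection can be sketched with the standard library alone. The example below uses three made-up token lists and a hypothetical helper name, most_common_words. It also updates the counter one review at a time instead of first concatenating all lists, which may be gentler on large corpora.

```python
from collections import Counter

# Hypothetical tokenized reviews, for illustration only
reviews = [
    ["great", "story", "great", "cast"],
    ["dull", "story"],
    ["great", "ending"],
]

def most_common_words(token_lists, n):
    """Return the n most frequent words across all token lists."""
    counts = Counter()
    for tokens in token_lists:
        counts.update(w.lower() for w in tokens)
    # most_common returns (word, count) pairs; keep only the words
    return [word for word, _ in counts.most_common(n)]

print(most_common_words(reviews, 2))
```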

7. Creating NLTK feature sets

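For the NLTK naive Bayes classifier, we must tokenize each sentence and find which words it shares with all_words / most_common_words; these shared words form the sentence's features. (Note: this is simply building features with a bag-of-words model.)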

# for a given text, create a featureset (dict of features - {'word': True/False})
def review_features(review, most_common_words):
    '''
    Feature extractor that checks whether each of the most
    common words is present in a given review

    Parameters:
        review (list): text reviews as list of words
        most_common_words (list): list of n most common words

    Returns:
        features (dict): dict of most common words & corresponding True/False
    '''
    review_words = set(review)
    features = {}
    for word in most_common_words:
        features['contains(%s)' % word] = (word in review_words)
    return features

# create featureset for each text in a given dataframe
def make_set(df, most_common_words):
    '''
    Generates nltk featuresets for each movie review in dataframe.
    Feature sets are composed of a dict describing whether each of the most
    common words is present in the text review or not

    Parameters:
        df (dataframe): processed dataframe of text reviews
        most_common_words (list): list of most common words

    Returns:
        feature_set (list): list of dicts of most common words & corresponding True/False
    '''
    return [(review_features(df.text[i], most_common_words), df.sentiment[i]) for i in range(len(df.sentiment))]

# make data into featuresets (for nltk naive bayes classifier)

train_set = make_set(train_df, most_common_2000)

test_set = make_set(test_df, most_common_2000)

# inspect first train featureset

train_set[0]

({'contains(one)': True,

'contains(make)': False,

'contains(like)': False,

'contains(see)': False,

'contains(get)': False,

'contains(time)': True,

'contains(good)': False,

'contains(watch)': False,

'contains(character)': False,

'contains(story)': False,

'contains(go)': False,

'contains(even)': False,

'contains(think)': False,

'contains(really)': False,

'contains(well)': False,

'contains(show)': False,

'contains(would)': False,

'contains(scene)': False,

'contains(end)': False,

'contains(look)': False,

'contains(much)': True,

'contains(say)': False,

'contains(know)': False,

...},

'negative')
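The same feature extraction is easier to follow on a tiny vocabulary. The sketch below restates review_features compactly so it runs on its own; the three-word vocabulary and the sample review are made up for illustration.

```python
def review_features(review, most_common_words):
    """Map each vocabulary word to True/False depending on its presence."""
    review_words = set(review)
    return {'contains(%s)' % w: (w in review_words) for w in most_common_words}

vocab = ["good", "time", "story"]              # hypothetical vocabulary
features = review_features(["no", "time", "for", "this"], vocab)
print(features)
```

Every review is mapped to a dict with exactly one key per vocabulary word, no matter how long the review is; words outside the vocabulary are ignored, and repeated words count only once.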

8. Training and evaluating the model

We use the naive Bayes classifier provided by nltk (NaiveBayesClassifier).

# Train a naive bayes classifier with train set by nltk

classifier = nltk.NaiveBayesClassifier.train(train_set)

# Get the accuracy of the naive bayes classifier with test set

accuracy = nltk.classify.accuracy(classifier, test_set)

accuracy

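# build reference and test set of observed values (for each label)
# note: this loop iterates over train_set, so the scores printed below describe
# the training data; iterate over test_set instead for held-out metrics
refsets = collections.defaultdict(set)
testsets = collections.defaultdict(set)
for i, (feats, label) in enumerate(train_set):
    refsets[label].add(i)  # indices of samples grouped by their true label
    observed = classifier.classify(feats)  # classify the sample from its features
    testsets[observed].add(i)  # indices of samples grouped by their predicted label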

# print precision, recall, and f-measure

print('pos precision:', precision(refsets['positive'], testsets['positive']))

print('pos recall:', recall(refsets['positive'], testsets['positive']))

print('pos F-measure:', f_measure(refsets['positive'], testsets['positive']))

print('neg precision:', precision(refsets['negative'], testsets['negative']))

print('neg recall:', recall(refsets['negative'], testsets['negative']))

print('neg F-measure:', f_measure(refsets['negative'], testsets['negative']))
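The scores above are computed on sets of sample indices: one set holding the samples that truly carry a label, one holding the samples predicted to carry it. The sketch below re-implements the three measures in plain Python to show the arithmetic, as I understand the set-based definitions. The function names and the two index sets are made up for this example, and the alpha=0.5 weighting (the usual harmonic mean) is an assumption.

```python
def set_precision(reference, test):
    """Share of predicted items that are correct."""
    return len(reference & test) / len(test) if test else None

def set_recall(reference, test):
    """Share of true items that were found."""
    return len(reference & test) / len(reference) if reference else None

def set_f_measure(reference, test, alpha=0.5):
    """Weighted harmonic mean of precision and recall."""
    p = set_precision(reference, test)
    r = set_recall(reference, test)
    if p is None or r is None:
        return None
    if p == 0 or r == 0:
        return 0.0
    return 1.0 / (alpha / p + (1 - alpha) / r)

ref_pos = {0, 1, 2, 3}   # hypothetical indices of truly positive samples
pred_pos = {2, 3, 4}     # hypothetical indices predicted positive

print("precision:", set_precision(ref_pos, pred_pos))
print("recall:", set_recall(ref_pos, pred_pos))
print("F-measure:", set_f_measure(ref_pos, pred_pos))
```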

Show the top n most informative features:

# show top n most informative features

classifier.show_most_informative_features(10)

9. Prediction

# predict on new review (from mubi.com)
new_review = "Surprisingly effective and moving, The Balcony Movie takes the Front Up \
concept of talking to strangers, but here attaches it to a fixed perspective \
in order to create a strong sense of the stream of life passing us by. \
It's possible to not only witness the subtle changing of seasons \
but also the gradual opening of trust and confidence in Lozinski's \
repeating characters. A Pandemic movie, pre-pandemic. 3.5 stars"

# perform preprocessing (cleaning & featureset transformation)
processed_review = rm_custom_stops(preprocessing(new_review))
processed_review = review_features(processed_review, most_common_2000)

# predict label
classifier.classify(processed_review)

Get the probability of each word under each label:

# to get individual probability for each label and word, taken from:
# https://stackoverflow.com/questions/20773200/python-nltk-naive-bayes-probabilities
for label in classifier.labels():
    print(f'\n\n{label}:')
    for (fname, fval) in classifier.most_informative_features(50):
        print(f" {fname}({fval}): ", end="")
        print("{0:.2f}%".format(100*classifier._feature_probdist[label, fname].prob(fval)))

negative:

contains(delightful)(True): 0.12%

contains(absurd)(True): 2.51%

contains(beautifully)(True): 0.28%

contains(noir)(True): 0.20%

contains(unfunny)(True): 2.03%

contains(magnificent)(True): 0.20%

contains(poorly)(True): 4.49%

contains(dreadful)(True): 1.71%

contains(worst)(True): 15.63%

contains(waste)(True): 12.29%

contains(turkey)(True): 1.47%

contains(vietnam)(True): 1.47%

contains(restore)(True): 0.20%

contains(lame)(True): 4.73%

contains(brilliantly)(True): 0.28%

contains(awful)(True): 8.15%

contains(garbage)(True): 3.14%

contains(worse)(True): 8.39%

contains(intense)(True): 0.44%

contains(wonderfully)(True): 0.36%

contains(laughable)(True): 2.59%

contains(unbelievable)(True): 2.90%

contains(finest)(True): 0.36%

contains(pointless)(True): 3.30%

contains(crap)(True): 5.85%

contains(trial)(True): 0.28%

contains(disappointment)(True): 3.62%

contains(warm)(True): 0.36%

contains(unconvincing)(True): 1.47%

contains(lincoln)(True): 0.12%

contains(underrate)(True): 0.36%

contains(pathetic)(True): 2.98%

contains(unfold)(True): 0.36%

contains(zero)(True): 2.11%

contains(existent)(True): 1.71%

contains(shallow)(True): 1.71%

contains(dull)(True): 5.37%

contains(cheap)(True): 4.18%

contains(mess)(True): 4.89%

contains(perfectly)(True): 0.91%

contains(ridiculous)(True): 5.85%

contains(excuse)(True): 3.70%

contains(che)(True): 0.12%

contains(gritty)(True): 0.36%

contains(pleasant)(True): 0.36%

contains(mediocre)(True): 2.59%

contains(rubbish)(True): 1.55%

contains(insult)(True): 2.90%

contains(porn)(True): 1.87%

contains(douglas)(True): 0.36%

positive:

contains(delightful)(True): 1.97%

contains(absurd)(True): 0.20%

contains(beautifully)(True): 3.33%

contains(noir)(True): 2.37%

contains(unfunny)(True): 0.20%

contains(magnificent)(True): 1.73%

contains(poorly)(True): 0.52%

contains(dreadful)(True): 0.20%

contains(worst)(True): 1.89%

contains(waste)(True): 1.65%

contains(turkey)(True): 0.20%

contains(vietnam)(True): 0.20%

contains(restore)(True): 1.33%

contains(lame)(True): 0.76%

contains(brilliantly)(True): 1.73%

contains(awful)(True): 1.33%

contains(garbage)(True): 0.52%

contains(worse)(True): 1.41%

contains(intense)(True): 2.61%

contains(wonderfully)(True): 2.13%

contains(laughable)(True): 0.44%

contains(unbelievable)(True): 0.52%

contains(finest)(True): 1.97%

contains(pointless)(True): 0.60%

contains(crap)(True): 1.08%

contains(trial)(True): 1.49%

contains(disappointment)(True): 0.68%

contains(warm)(True): 1.89%

contains(unconvincing)(True): 0.28%

contains(lincoln)(True): 0.60%

contains(underrate)(True): 1.81%

contains(pathetic)(True): 0.60%

contains(unfold)(True): 1.73%

contains(zero)(True): 0.44%

contains(existent)(True): 0.36%

contains(shallow)(True): 0.36%

contains(dull)(True): 1.16%

contains(cheap)(True): 0.92%

contains(mess)(True): 1.08%

contains(perfectly)(True): 4.06%

contains(ridiculous)(True): 1.33%

contains(excuse)(True): 0.84%

contains(che)(True): 0.52%

contains(gritty)(True): 1.57%

contains(pleasant)(True): 1.57%

contains(mediocre)(True): 0.60%

contains(rubbish)(True): 0.36%

contains(insult)(True): 0.68%

contains(porn)(True): 0.44%

contains(douglas)(True): 1.49%

For example, delightful has a probability of 0.12% under negative and 1.97% under positive, so the positive-to-negative ratio is 1.97% : 0.12% ≈ 16.5 : 1.0 (the rounded percentages shown here give about 16.4).
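The ratio can be recomputed from the printed percentages. The sketch below copies two rows from the output above; the helper name likelihood_ratio is made up for this example. Since the printed values are rounded to two decimals, the result for delightful comes out at about 16.4 rather than exactly 16.5, presumably because of that rounding.

```python
# Percentages copied from the printed output above (rounded to two decimals)
probs = {
    "delightful": {"negative": 0.12, "positive": 1.97},
    "worst": {"negative": 15.63, "positive": 1.89},
}

def likelihood_ratio(word):
    """Return (likelier label, other label, ratio of their probabilities)."""
    p = probs[word]
    hi_label, lo_label = sorted(p, key=p.get, reverse=True)
    return hi_label, lo_label, p[hi_label] / p[lo_label]

for word in probs:
    hi, lo, ratio = likelihood_ratio(word)
    print(f"{word}: {hi} : {lo} = {ratio:.1f} : 1.0")
```

A large ratio in either direction marks a word as a strong signal: delightful points towards positive, worst towards negative.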
