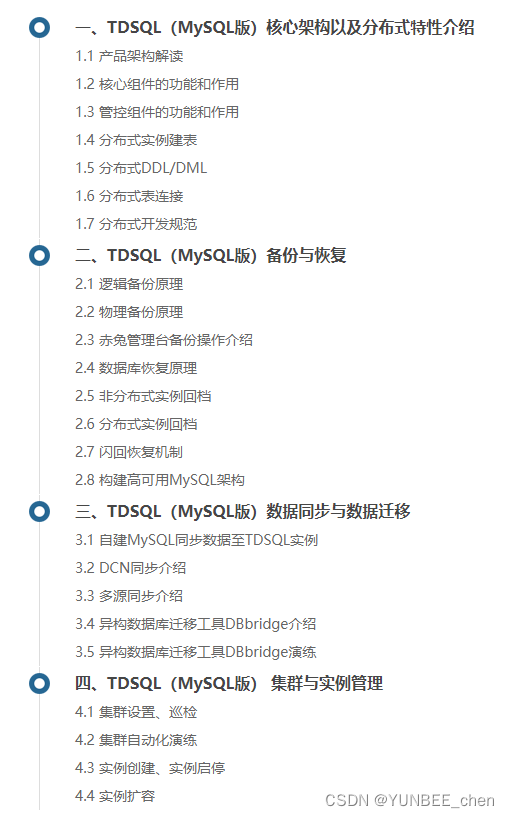

文章目录

- 1、概览

- 为什么我们需要链?

- 2、快速入门 (Get started) - Using `LLMChain`

- 多个变量 使用字典输入

- 在 `LLMChain` 中使用聊天模型:

- 3、异步 API

- 4、不同的调用方法

- `__call__`调用

- 仅返回输出键值 return_only_outputs

- 只有一个输出键 run

- 只有一个输入键

- 5、自定义chain

- 6、调试链 (Debugging chains)

- 7、从 LangChainHub 加载

- 8、添加记忆(state)

- 9、序列化

- 将chain 保存到磁盘

- 从磁盘加载

- 分开保存组件

- 基础

- 10、LLM

- 基本使用

- LLM 链的其他运行方式

- apply 允许您对一组输入运行链逻辑

- generate 返回 LLMResult

- predict 输入键为关键字

- 解析输出 apply_and_parse

- 从字符串初始化 LLMChain

- 11、Router

- LLMRouterChain

- EmbeddingRouterChain

- 12、序列(Sequential)

- 顺序链(Sequential Chain)

- 顺序链中的记忆(Memory in Sequential Chains)

- 13、转换

- 14、文档

- Stuff documents

- Refine

- Map reduce

- Map re-rank

- 热门(Popular)

- 15、检索型问答(Retrieval QA)

- 链类型 (Chain Type)

- from_chain_type 指定链类型

- combine_documents_chain 直接加载链

- 自定义提示 (Custom Prompts)

- 返回源文档

- RetrievalQAWithSourceChain

- 16、对话式检索问答(Conversational Retrieval QA)

- 传入聊天历史

- 使用不同的模型来压缩问题

- 返回源文档

- ConversationalRetrievalChain 与 `search_distance`

- ConversationalRetrievalChain with `map_reduce`

- ConversationalRetrievalChain 与带有来源的问答

- ConversationalRetrievalChain 与流式传输至 `stdout`

- get_chat_history 函数

- 17、SQL

- 使用查询检查器 Query Checker

- 自定义提示

- 返回中间步骤 (Return Intermediate Steps)

- 选择如何限制返回的行数

- 添加每个表的示例行

- 自定义表信息 (Custom Table Info)

- SQLDatabaseSequentialChain

- 使用本地语言模型

- 18、摘要 summarize

- 准备数据

- 快速开始

- The `stuff` Chain

- The `map_reduce` Chain

- 自定义 `MapReduceChain`

- The `refine` Chain

- 附加 ( Additional )

- 19、分析文档 (Analyze Document)

- 总结

- 问答

- 20、ConstitutionalChain 自我批判链

- UnifiedObjective

- Custom Principles

- 运行多个 principle

- Intermediate Steps - ConstitutionalChain

- No revision necessary

- All Principles

- 21、抽取

- 抽取实体

- Pydantic示例

- 22、Graph QA

- 创建 graph

- 查询图

- Save the graph

- 23、虚拟文档嵌入

- 多次生成

- 使用我们自己的提示

- 使用HyDE

- 24、Bash chain

- Customize Prompt

- Persistent Terminal

- 25、自检链

- 26、数学链

- 27、HTTP request chain

- 28、Summarization checker chain

- 审查 Moderation

- 如何使用审核链

- 如何将审核链附加到 LLMChain

- 29、动态从多个提示中选择 multi_prompt_router

- 30、动态选择多个检索器 multi_retrieval_qa_router

- 31、使用OpenAI函数进行检索问答

- 使用 Pydantic

- 在ConversationalRetrievalChain中使用

- 32、OpenAPI chain

- Load the spec

- Select the Operation

- Construct the chain

- Return raw response

- Example POST message

- 33、Program-aided language model (PAL) chain

- Math Prompt

- Colored Objects

- Intermediate Steps

- 34、Question-Answering Citations

- 35、文档问答 qa_with_sources

- 准备数据

- 快速入门

- The `stuff` Chain

- The `map_reduce` Chain

- 中间步骤 (Intermediate Steps)

- The `refine` Chain

- The `map-rerank` Chain

- 带有来源的文档问答

- 36、标记

- 最简单的方法,只指定类型

- 更多控制

- 使用 Pydantic 指定模式

- 37、Vector store-augmented text generation

- Prepare Data

- Set Up Vector DB

- Set Up LLM Chain with Custom Prompt

- Generate Text

本文转载改编自:

https://python.langchain.com.cn/docs/modules/chains/

1、概览

在简单应用中,单独使用LLM是可以的, 但更复杂的应用需要将LLM进行链接 - 要么相互链接,要么与其他组件链接。

LangChain为这种"链接"应用提供了Chain接口。

我们将链定义得非常通用,它是对组件调用的序列,可以包含其他链。基本接口很简单:

class Chain(BaseModel, ABC):

"""Base interface that all chains should implement."""

memory: BaseMemory

callbacks: Callbacks

def __call__(

self,

inputs: Any,

return_only_outputs: bool = False,

callbacks: Callbacks = None,

) -> Dict[str, Any]:

...

将组件组合成链的思想简单而强大。

它极大地简化了复杂应用的实现,并使应用更模块化,从而更容易调试、维护和改进应用。

更多具体信息请查看:

- 如何使用,了解不同链特性的详细步骤

- 基础,熟悉核心构建块链

- 文档,了解如何将文档纳入链中

- 常用 链,用于最常见的用例

- 附加,查看一些更高级的链和集成,可以直接使用

为什么我们需要链?

链允许我们将 多个组件组合在一起 创建一个单一的、连贯的应用。

例如,我们可以创建一个链,该链接收用户输入,使用PromptTemplate对其进行格式化,然后将格式化后的响应传递给LLM。

我们可以通过将多个链 组合在一起 或将 链与其他组件组合来构建更复杂的链。

2、快速入门 (Get started) - Using LLMChain

LLMChain 是最基本的构建块链。

它接受一个提示模板,将其与用户输入进行格式化,并返回 LLM 的响应。

要使用 LLMChain,首先创建一个提示模板。

from langchain.llms import OpenAI

from langchain.prompts import PromptTemplate

llm = OpenAI(temperature=0.9)

prompt = PromptTemplate(

input_variables=["product"],

template="What is a good name for a company that makes {product}?",

)

现在我们可以创建一个非常简单的链,它将接受用户输入,使用它格式化提示,然后将其发送到 LLM。

from langchain.chains import LLMChain

chain = LLMChain(llm=llm, prompt=prompt)

# Run the chain only specifying the input variable.

print(chain.run("colorful socks"))

Colorful Toes Co.

多个变量 使用字典输入

如果有多个变量,您可以使用字典一次性输入它们。

prompt = PromptTemplate(

input_variables=["company", "product"],

template="What is a good name for {company} that makes {product}?",

)

chain = LLMChain(llm=llm, prompt=prompt)

print(chain.run({

'company': "ABC Startup",

'product': "colorful socks"

}))

Socktopia Colourful Creations.

在 LLMChain 中使用聊天模型:

from langchain.chat_models import ChatOpenAI

from langchain.prompts.chat import (

ChatPromptTemplate,

HumanMessagePromptTemplate,

)

human_message_prompt = HumanMessagePromptTemplate(

prompt=PromptTemplate(

template="What is a good name for a company that makes {product}?",

input_variables=["product"],

)

)

chat_prompt_template = ChatPromptTemplate.from_messages([human_message_prompt])

chat = ChatOpenAI(temperature=0.9)

chain = LLMChain(llm=chat, prompt=chat_prompt_template)

print(chain.run("colorful socks"))

Rainbow Socks Co.

3、异步 API

LangChain通过利用 asyncio 库为链提供了异步支持。

目前在 LLMChain(通过 arun、apredict、acall)和 LLMMathChain(通过 arun 和 acall)、ChatVectorDBChain 和 QA chains 中支持异步方法。

其他链的异步支持正在路上。

import asyncio

import time

from langchain.llms import OpenAI

from langchain.prompts import PromptTemplate

from langchain.chains import LLMChain

def generate_serially():

llm = OpenAI(temperature=0.9)

prompt = PromptTemplate(

input_variables=["product"],

template="What is a good name for a company that makes {product}?",

)

chain = LLMChain(llm=llm, prompt=prompt)

for _ in range(5):

resp = chain.run(product="toothpaste")

print(resp)

async def async_generate(chain):

resp = await chain.arun(product="toothpaste")

print(resp)

async def generate_concurrently():

llm = OpenAI(temperature=0.9)

prompt = PromptTemplate(

input_variables=["product"],

template="What is a good name for a company that makes {product}?",

)

chain = LLMChain(llm=llm, prompt=prompt)

tasks = [async_generate(chain) for _ in range(5)]

await asyncio.gather(*tasks)

s = time.perf_counter()

# 如果在 Jupyter 之外运行,请使用 asyncio.run(generate_concurrently())

await generate_concurrently()

elapsed = time.perf_counter() - s

print("\033[1m" + f"并发执行花费了 {elapsed:0.2f} 秒." + "\033[0m")

s = time.perf_counter()

generate_serially()

elapsed = time.perf_counter() - s

print("\033[1m" + f"串行执行花费了 {elapsed:0.2f} 秒." + "\033[0m")

BrightSmile Toothpaste Company

...

BrightSmile Toothpaste.

4、不同的调用方法

__call__调用

所有从 Chain 继承的类都提供了几种运行链逻辑的方式。最直接的一种是使用 __call__:

chat = ChatOpenAI(temperature=0)

prompt_template = "Tell me a {adjective} joke"

llm_chain = LLMChain(llm=chat, prompt=PromptTemplate.from_template(prompt_template) )

llm_chain(inputs={"adjective": "corny"})

{'adjective': 'corny',

'text': 'Why did the tomato turn red? Because it saw the salad dressing!'}

仅返回输出键值 return_only_outputs

默认情况下,__call__ 返回输入和输出键值。

您可以通过将 return_only_outputs 设置为 True 来配置它 仅返回输出键值。

llm_chain("corny", return_only_outputs=True)

{'text': 'Why did the tomato turn red? Because it saw the salad dressing!'}

只有一个输出键 run

如果 Chain 只输出一个输出键(即其 output_keys 中只有一个元素),则可以使用 run 方法。

注意,run 输出的是字符串而不是字典。

# llm_chain 只有一个输出键,所以我们可以使用 run

llm_chain.output_keys

# -> ['text']

llm_chain.run({"adjective": "corny"})

# -> 'Why did the tomato turn red? Because it saw the salad dressing!'

只有一个输入键

在只有一个输入键的情况下,您可以直接输入字符串 而不指定输入映射。

# 这两种方式是等效的

llm_chain.run({"adjective": "corny"})

llm_chain.run("corny")

# 这两种方式也是等效的

llm_chain("corny")

llm_chain({"adjective": "corny"})

{'adjective': 'corny',

'text': 'Why did the tomato turn red? Because it saw the salad dressing!'}

提示:您可以通过其 run 方法轻松地将 Chain 对象作为 Agent 中的 Tool 集成。在此处查看一个示例 here。

5、自定义chain

要实现自己的自定义chain ,您可以继承 Chain 并实现以下方法:

from __future__ import annotations

from typing import Any, Dict, List, Optional

from pydantic import Extra

from langchain.base_language import BaseLanguageModel

from langchain.callbacks.manager import (

AsyncCallbackManagerForChainRun,

CallbackManagerForChainRun,

)

from langchain.chains.base import Chain

from langchain.prompts.base import BasePromptTemplate

class MyCustomChain(Chain):

prompt: BasePromptTemplate

"""Prompt object to use."""

llm: BaseLanguageModel

output_key: str = "text" #: :meta private:

class Config:

"""Configuration for this pydantic object."""

extra = Extra.forbid

arbitrary_types_allowed = True

@property

def input_keys(self) -> List[str]:

"""Will be whatever keys the prompt expects.

:meta private:

"""

return self.prompt.input_variables

@property

def output_keys(self) -> List[str]:

"""Will always return text key.

:meta private:

"""

return [self.output_key]

def _call(

self,

inputs: Dict[str, Any],

run_manager: Optional[CallbackManagerForChainRun] = None,

) -> Dict[str, str]:

# Your custom chain logic goes here

# This is just an example that mimics LLMChain

prompt_value = self.prompt.format_prompt(**inputs)

# Whenever you call a language model, or another chain, you should pass

# a callback manager to it. This allows the inner run to be tracked by

# any callbacks that are registered on the outer run.

# You can always obtain a callback manager for this by calling

# `run_manager.get_child()` as shown below.

response = self.llm.generate_prompt(

[prompt_value], callbacks=run_manager.get_child() if run_manager else None

)

# If you want to log something about this run, you can do so by calling

# methods on the `run_manager`, as shown below. This will trigger any

# callbacks that are registered for that event.

if run_manager:

run_manager.on_text("Log something about this run")

return {self.output_key: response.generations[0][0].text}

async def _acall(

self,

inputs: Dict[str, Any],

run_manager: Optional[AsyncCallbackManagerForChainRun] = None,

) -> Dict[str, str]:

# Your custom chain logic goes here

# This is just an example that mimics LLMChain

prompt_value = self.prompt.format_prompt(**inputs)

# Whenever you call a language model, or another chain, you should pass

# a callback manager to it. This allows the inner run to be tracked by

# any callbacks that are registered on the outer run.

# You can always obtain a callback manager for this by calling

# `run_manager.get_child()` as shown below.

response = await self.llm.agenerate_prompt(

[prompt_value], callbacks=run_manager.get_child() if run_manager else None

)

# If you want to log something about this run, you can do so by calling

# methods on the `run_manager`, as shown below. This will trigger any

# callbacks that are registered for that event.

if run_manager:

await run_manager.on_text("Log something about this run")

return {self.output_key: response.generations[0][0].text}

@property

def _chain_type(self) -> str:

return "my_custom_chain"

from langchain.callbacks.stdout import StdOutCallbackHandler

from langchain.chat_models.openai import ChatOpenAI

from langchain.prompts.prompt import PromptTemplate

chain = MyCustomChain(

prompt=PromptTemplate.from_template("tell us a joke about {topic}"),

llm=ChatOpenAI(),

)

chain.run({"topic": "callbacks"}, callbacks=[StdOutCallbackHandler()])

[1m> Entering new MyCustomChain chain...[0m

Log something about this run

[1m> Finished chain.[0m

'Why did the callback function feel lonely? Because it was always waiting for someone to call it back!'

6、调试链 (Debugging chains)

从输出中仅仅通过Chain对象来调试可能很困难,因为大多数Chain对象涉及到大量的 输入提示预处理 和 LLM输出后处理。

将 verbose 设置为 True ,将在运行过程中打印出 Chain 对象的一些内部状态。

conversation = ConversationChain(

llm=chat,

memory=ConversationBufferMemory(),

verbose=True

)

conversation.run("What is ChatGPT?")

> Entering new ConversationChain chain...

Prompt after formatting:

The following is a ... says it does not know.

Current conversation:

Human: What is ChatGPT?

AI:

> Finished chain.

'ChatGPT is an AI ... AI applications.'

7、从 LangChainHub 加载

本例介绍了如何从 LangChainHub 加载chain 。

from langchain.chains import load_chain

chain = load_chain("lc://chains/llm-math/chain.json")

chain.run("whats 2 raised to .12")

[1m> Entering new LLMMathChain chain...[0m

whats 2 raised to .12[32;1m[1;3m

Answer: 1.0791812460476249[0m

[1m> Finished chain.[0m

'Answer: 1.0791812460476249'

有时,链将需要一些额外参数,没有和链一起序列化。

例如,在向量数据库上进行问答的链,将需要向量数据库。

from langchain.embeddings.openai import OpenAIEmbeddings

from langchain.vectorstores import Chroma

from langchain.text_splitter import CharacterTextSplitter

from langchain import OpenAI, VectorDBQA

from langchain.document_loaders import TextLoader

loader = TextLoader("../../state_of_the_union.txt")

documents = loader.load()

text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0)

texts = text_splitter.split_documents(documents)

embeddings = OpenAIEmbeddings()

vectorstore = Chroma.from_documents(texts, embeddings)

Running Chroma using direct local API.

Using DuckDB in-memory for database. Data will be transient.

chain = load_chain("lc://chains/vector-db-qa/stuff/chain.json", vectorstore=vectorstore)

query = "What did the president say about Ketanji Brown Jackson"

chain.run(query)

" The president said that Ketanji ... legacy of excellence."

8、添加记忆(state)

链组件可以使用 Memory 对象进行初始化,该对象将在 对链组件的多次调用之间 保留数据。这使得链组件具有状态。

from langchain.chains import ConversationChain

from langchain.memory import ConversationBufferMemory

conversation = ConversationChain(

llm=chat,

memory=ConversationBufferMemory()

)

conversation.run("Answer briefly. What are the first 3 colors of a rainbow?")

# -> The first three colors of a rainbow are red, orange, and yellow.

conversation.run("And the next 4?")

# -> The next four colors of a rainbow are green, blue, indigo, and violet.

基本上,BaseMemory 定义了 langchain 存储内存的接口。

它通过 load_memory_variables 方法读取存储的数据,并通过 save_context 方法存储新数据。

您可以在 Memory 部分了解更多信息。

9、序列化

本例介绍了 如何将chain 序列化到 磁盘,并从磁盘中反序列化。

我们使用的序列化格式是 JSON 或 YAML。

目前,只有一些chain 支持这种类型的序列化。随着时间的推移,我们将增加支持的chain 数量。

将chain 保存到磁盘

可以使用 .save 方法来实现,需要指定一个具有 JSON 或 YAML 扩展名的文件路径。

from langchain import PromptTemplate, OpenAI, LLMChain

template = """Question: {question}

Answer: Let's think step by step."""

prompt = PromptTemplate(template=template,

input_variables=["question"])

llm_chain = LLMChain(prompt=prompt,

llm=OpenAI(temperature=0),

verbose=True)

llm_chain.save("llm_chain.json")

查看保存的文件:

!cat llm_chain.json

{

"memory": null,

"verbose": true,

"prompt": {

"input_variables": [

"question"

],

"output_parser": null,

"template": "Question: {question}\n\nAnswer: Let's think step by step.",

"template_format": "f-string"

},

"llm": {

"model_name": "text-davinci-003",

"temperature": 0.0,

"max_tokens": 256,

"top_p": 1,

"frequency_penalty": 0,

"presence_penalty": 0,

"n": 1,

"best_of": 1,

"request_timeout": null,

"logit_bias": {},

"_type": "openai"

},

"output_key": "text",

"_type": "llm_chain"

}

从磁盘加载

We can load a chain from disk by using the load_chain method.

from langchain.chains import load_chain

chain = load_chain("llm_chain.json")

chain.run("whats 2 + 2")

分开保存组件

在上面的例子中,我们可以看到 prompt 和 llm 配置信息,保存在与整个链相同的json中。

我们也可以将它们拆分并单独保存。这通常有助于使保存的组件更加模块化。

为了做到这一点,我们只需要指定llm_path而不是llm组件,以及prompt_path而不是 prompt组件。

prompt

llm_chain.prompt.save("prompt.json")

!cat prompt.json

{

"input_variables": [

"question"

],

"output_parser": null,

"template": "Question: {question}\n\nAnswer: Let's think step by step.",

"template_format": "f-string"

}

llm

llm_chain.llm.save("llm.json")

!cat llm.json

{

"model_name": "text-davinci-003",

"temperature": 0.0,

"max_tokens": 256,

"top_p": 1,

"frequency_penalty": 0,

"presence_penalty": 0,

"n": 1,

"best_of": 1,

"request_timeout": null,

"logit_bias": {},

"_type": "openai"

}

config

config = {

"memory": None,

"verbose": True,

"prompt_path": "prompt.json",

"llm_path": "llm.json",

"output_key": "text",

"_type": "llm_chain",

}

import json

with open("llm_chain_separate.json", "w") as f:

json.dump(config, f, indent=2)

!cat llm_chain_separate.json

{

"memory": null,

"verbose": true,

"prompt_path": "prompt.json",

"llm_path": "llm.json",

"output_key": "text",

"_type": "llm_chain"

}

加载所有

chain = load_chain("llm_chain_separate.json")

chain.run("whats 2 + 2")

' 2 + 2 = 4'

基础

10、LLM

LLMChain是一个简单的链式结构,它在语言模型周围添加了一些功能。

它广泛用于LangChain中,包括其他链式结构和代理程序。

LLMChain由PromptTemplate和语言模型(LLM或聊天模型)组成。

它使用提供的输入键值(如果有的话,还包括内存键值)格式化提示模板,将格式化的字符串传递给LLM并返回LLM输出。

基本使用

from langchain import PromptTemplate, OpenAI, LLMChain

prompt_template = "What is a good name for a company that makes {product}?"

llm = OpenAI(temperature=0)

llm_chain = LLMChain(

llm=llm,

prompt=PromptTemplate.from_template(prompt_template)

)

llm_chain("colorful socks")

# -> {'product': 'colorful socks', 'text': '\n\nSocktastic!'}

LLM 链的其他运行方式

除了所有 Chain 对象共享的 __call__ 和 run 方法之外,LLMChain还提供了几种调用链逻辑的方式:

apply 允许您对一组输入运行链逻辑

input_list = [

{"product": "socks"},

{"product": "computer"},

{"product": "shoes"}

]

llm_chain.apply(input_list)

[{'text': '\n\nSocktastic!'},

{'text': '\n\nTechCore Solutions.'},

{'text': '\n\nFootwear Factory.'}]

generate 返回 LLMResult

generate 与 apply 类似,但是它返回一个 LLMResult 而不是字符串。

LLMResult 通常包含有用的生成信息,如令牌使用情况和完成原因。

llm_chain.generate(input_list)

LLMResult(generations=[

[Generation(

text='\n\nSocktastic!',

generation_info={'finish_reason': 'stop', 'logprobs': None})],

[Generation(

text='\n\nTechCore Solutions.',

generation_info={'finish_reason': 'stop', 'logprobs': None})],

[Generation(

text='\n\nFootwear Factory.',

generation_info={'finish_reason': 'stop', 'logprobs': None})]],

llm_output={

'token_usage': {'prompt_tokens': 36, 'total_tokens': 55, 'completion_tokens': 19},

'model_name': 'text-davinci-003'})

predict 输入键为关键字

predict 与 run 方法类似,区别在于输入键是指定为关键字参数而不是 Python 字典。

Single input example

llm_chain.predict(product="colorful socks")

# -> '\n\nSocktastic!'

多输入示例:

template = """Tell me a {adjective} joke about {subject}."""

prompt = PromptTemplate(template=template,

input_variables=["adjective", "subject"])

llm_chain = LLMChain(prompt=prompt, llm=OpenAI(temperature=0))

llm_chain.predict(adjective="sad", subject="ducks")

# -> '\n\nQ: What did the duck say when his friend died?\nA: Quack, quack, goodbye.'

解析输出 apply_and_parse

默认情况下,LLMChain 不会解析输出,即使底层的 prompt 对象具有输出解析器。

如果您想在 LLM 输出上 应用该输出解析器,请使用 predict_and_parse 而不是 predict,使用 apply_and_parse 而不是 apply。

使用 predict:

from langchain.output_parsers import CommaSeparatedListOutputParser

output_parser = CommaSeparatedListOutputParser()

template = """List all the colors in a rainbow"""

prompt = PromptTemplate(template=template,

input_variables=[],

output_parser=output_parser)

llm_chain = LLMChain(prompt=prompt, llm=llm)

llm_chain.predict()

# -> '\n\nRed, orange, yellow, green, blue, indigo, violet'

使用 predict_and_parser:

llm_chain.predict_and_parse()

# -> ['Red', 'orange', 'yellow', 'green', 'blue', 'indigo', 'violet']

从字符串初始化 LLMChain

您还可以直接从字符串模板 构建 LLMChain。

template = """Tell me a {adjective} joke about {subject}."""

llm_chain = LLMChain.from_string(llm=llm, template=template)

llm_chain.predict(adjective="sad", subject="ducks")

# -> '\n\nQ: What did the duck say when his friend died?\nA: Quack, quack, goodbye.'

11、Router

RouterChain:根据给定输入动态 选择下一个chain 的chain 。

路由器chain 由两个组件组成:

- RouterChain (负责 选择 下一个要调用 的chain )

- destination_chains: 路由器chain 可以路由到的chain

在本例中,我们将重点讨论不同类型的 路由chain 。

我们将展示这些 路由chain 在 MultiPromptChain 中的使用方式,以创建一个问答chain ,该chain 根据给定的问题选择最相关的提示,并使用该提示回答问题。

from langchain.chains.router import MultiPromptChain

from langchain.llms import OpenAI

from langchain.chains import ConversationChain

from langchain.chains.llm import LLMChain

from langchain.prompts import PromptTemplate

physics_template = """You are a very smart physics professor.

You are great at answering questions about physics in a concise and easy to understand manner.

When you don't know the answer to a question you admit that you don't know.

Here is a question:

{input}"""

math_template = """You are a very good mathematician. You are great at answering math questions.

You are so good because you are able to break down hard problems into their component parts,

answer the component parts, and then put them together to answer the broader question.

Here is a question:

{input}"""

prompt_infos = [

{

"name": "physics",

"description": "Good for answering questions about physics",

"prompt_template": physics_template,

},

{

"name": "math",

"description": "Good for answering math questions",

"prompt_template": math_template,

},

]

llm = OpenAI()

destination_chains = {}

for p_info in prompt_infos:

name = p_info["name"]

prompt_template = p_info["prompt_template"]

prompt = PromptTemplate(template=prompt_template, input_variables=["input"])

chain = LLMChain(llm=llm, prompt=prompt)

destination_chains[name] = chain

default_chain = ConversationChain(llm=llm, output_key="text")

LLMRouterChain

此chain 使用LLM来 确定 如何路由事物。

from langchain.chains.router.llm_router import LLMRouterChain, RouterOutputParser

from langchain.chains.router.multi_prompt_prompt import MULTI_PROMPT_ROUTER_TEMPLATE

destinations = [f"{p['name']}: {p['description']}" for p in prompt_infos]

destinations_str = "\n".join(destinations)

router_template = MULTI_PROMPT_ROUTER_TEMPLATE.format(destinations=destinations_str)

router_prompt = PromptTemplate(

template=router_template,

input_variables=["input"],

output_parser=RouterOutputParser(),

)

router_chain = LLMRouterChain.from_llm(llm, router_prompt)

chain = MultiPromptChain(

router_chain=router_chain,

destination_chains=destination_chains,

default_chain=default_chain,

verbose=True,

)

print(chain.run("What is black body radiation?"))

Black body radiation is the term used to describe the electromagnetic radiation emitted by a “black body”—an object that absorbs all radiation incident upon it. A black body is an idealized physical body that absorbs all incident electromagnetic radiation, regardless of frequency or angle of incidence. It does not reflect, emit or transmit energy. This type of radiation is the result of the thermal motion of the body's atoms and molecules, and it is emitted at all wavelengths. The spectrum of radiation emitted is described by Planck's law and is known as the black body spectrum.

text = "What is the first prime number greater than 40 such that one plus the prime number is divisible by 3"

print(chain.run(text))

# -> The answer is 43. One plus 43 is 44 which is divisible by 3.

text = "What is the name of the type of cloud that rins"

print(chain.run(text))

[1m> Entering new MultiPromptChain chain...[0m

None: {'input': 'What is the name of the type of cloud that rains?'}

[1m> Finished chain.[0m

The type of cloud that rains is called a cumulonimbus cloud. It is a tall and dense cloud that is often accompanied by thunder and lightning.

EmbeddingRouterChain

EmbeddingRouterChain 使用嵌入和相似性在目标chain 之间进行路由。

from langchain.chains.router.embedding_router import EmbeddingRouterChain

from langchain.embeddings import CohereEmbeddings

from langchain.vectorstores import Chroma

names_and_descriptions = [

("physics", ["for questions about physics"]),

("math", ["for questions about math"]),

]

router_chain = EmbeddingRouterChain.from_names_and_descriptions(

names_and_descriptions, Chroma, CohereEmbeddings(), routing_keys=["input"]

)

chain = MultiPromptChain(

router_chain=router_chain,

destination_chains=destination_chains,

default_chain=default_chain,

verbose=True,

)

print(chain.run("What is black body radiation?"))

[1m> Entering new MultiPromptChain chain...[0m

physics: {'input': 'What is black body radiation?'}

[1m> Finished chain.[0m

Black body radiation is the emission of energy from an idealized physical body (known as a black body) that is in thermal equilibrium with its environment. It is emitted in a characteristic pattern of frequencies known as a black-body spectrum, which depends only on the temperature of the body. The study of black body radiation is an important part of astrophysics and atmospheric physics, as the thermal radiation emitted by stars and planets can often be approximated as black body radiation.

text = "What is the first prime number greater than 40 such that one plus the prime number is divisible by 3"

print(chain.run(text))

[1m> Entering new MultiPromptChain chain...[0m

math: {'input': 'What is the first prime number greater than 40 such that one plus the prime number is divisible by 3'}

[1m> Finished chain.[0m

?

Answer: The first prime number greater than 40 such that one plus the prime number is divisible by 3 is 43.

12、序列(Sequential)

接下来,在调用语言模型之后,要对语言模型进行一系列的调用。

当您希望将 一个调用的输出 作为 另一个调用的输入时,这尤其有用。

在这个笔记本中,我们将通过一些示例来演示如何使用顺序链来实现这一点。

顺序链允许您 连接多个链,并将它们 组合成执行某个特定场景的管道。

有两种类型的顺序链:

SimpleSequentialChain:最简单的顺序链形式,每个步骤都有一个单一的输入/输出,一个步骤的输出是下一个步骤的输入。SequentialChain:更一般的顺序链形式,允许多个输入/输出。

from langchain.llms import OpenAI

from langchain.chains import LLMChain

from langchain.prompts import PromptTemplate

# This is an LLMChain to write a synopsis given a title of a play.

llm = OpenAI(temperature=.7)

template = """You are a playwright. Given the title of play, it is your job to write a synopsis for that title.

Title: {title}

Playwright: This is a synopsis for the above play:"""

prompt_template = PromptTemplate(input_variables=["title"], template=template)

synopsis_chain = LLMChain(llm=llm, prompt=prompt_template)

这是一个LLMChain,用于在给定剧情简介的情况下 撰写戏剧评论。

llm = OpenAI(temperature=.7)

template = """You are a play critic from the New York Times. Given the synopsis of play, it is your job to write a review for that play.

Play Synopsis:

{synopsis}

Review from a New York Times play critic of the above play:"""

prompt_template = PromptTemplate(input_variables=["synopsis"], template=template)

review_chain = LLMChain(llm=llm, prompt=prompt_template)

This is the overall chain where we run these two chains in sequence.

from langchain.chains import SimpleSequentialChain

overall_chain = SimpleSequentialChain(chains=[synopsis_chain, review_chain], verbose=True)

review = overall_chain.run("Tragedy at sunset on the beach")

> Entering new SimpleSequentialChain chain...

Tragedy at ...leave audiences feeling inspired and hopeful.

> Finished chain.

print(review)

Tragedy at ... hopeful.

顺序链(Sequential Chain)

下例尝试使用 涉及多个输入 和 多个最终输出 的更复杂的链。

特别重要的是 如何命名 输入/输出变量名。

如下示例 一个LLMChain,用于在 给定戏剧标题 及 其所处时代的情况下 编写简介。

synopsis

llm = OpenAI(temperature=.7)

template = """You are a playwright. Given the title of play and the era it is set in, it is your job to write a synopsis for that title.

Title: {title}

Era: {era}

Playwright: This is a synopsis for the above play:"""

prompt_template = PromptTemplate(

input_variables=["title", 'era'],

template=template)

synopsis_chain = LLMChain(

llm=llm,

prompt=prompt_template,

output_key="synopsis")

review

llm = OpenAI(temperature=.7)

template = """You are a play critic from the New York Times. Given the synopsis of play, it is your job to write a review for that play.

Play Synopsis:

{synopsis}

Review from a New York Times play critic of the above play:"""

prompt_template = PromptTemplate(

input_variables=["synopsis"],

template=template)

review_chain = LLMChain(

llm=llm,

prompt=prompt_template,

output_key="review")

这是我们 按顺序 运行这两个链的 整个链。

overall

from langchain.chains import SequentialChain

overall_chain = SequentialChain(

chains=[synopsis_chain, review_chain],

input_variables=["era", "title"],

output_variables=["synopsis", "review"], # 这里返回多个变量

verbose=True)

overall_chain({"title":"Tragedy at sunset on the beach", "era": "Victorian England"})

> Entering new SequentialChain chain...

> Finished chain.

{'title': 'Tragedy at sunset on the beach',

'era': 'Victorian England',

'synopsis': "\n\nThe play ... backdrop of 19th century England.",

'review': "\n\nThe latest production ... recommended."}

顺序链中的记忆(Memory in Sequential Chains)

有时,您可能希望在 链的每个步骤 或 链的后面的某个部分 传递一些上下文,但是维护和链接输入/输出变量可能会很快变得混乱。

使用 SimpleMemory 是一种方便的方法来 管理这个上下文 并简化您的链。

例如,使用前面的 playwright 顺序链,假设您想要包含有关剧本的日期、时间和位置的某些上下文,并使用生成的简介 和评论 创建一些社交媒体发布文本。

您可以将这些新的上下文变量添加为 input_variables,或者我们可以在链中添加一个 SimpleMemory 来管理这个上下文:

from langchain.chains import SequentialChain

from langchain.memory import SimpleMemory

llm = OpenAI(temperature=.7)

template = """You are a social media manager for a theater company. Given the title of play, the era it is set in, the date,time and location, the synopsis of the play, and the review of the play, it is your job to write a social media post for that play.

Here is some context about the time and location of the play:

Date and Time: {time}

Location: {location}

Play Synopsis:

{synopsis}

Review from a New York Times play critic of the above play:

{review}

Social Media Post:

"""

prompt_template = PromptTemplate(

input_variables=["synopsis", "review", "time", "location"],

template=template

)

social_chain = LLMChain(

llm=llm,

prompt=prompt_template,

output_key="social_post_text")

overall_chain = SequentialChain(

memory=SimpleMemory(

memories={"time": "December 25th, 8pm PST", "location": "Theater in the Park"}),

chains=[synopsis_chain, review_chain, social_chain],

input_variables=["era", "title"],

output_variables=["social_post_text"],

verbose=True)

overall_chain({"title":"Tragedy at sunset on the beach",

"era": "Victorian England"})

> Entering new SequentialChain chain...

> Finished chain.

{'title': 'Tragedy at sunset on the beach',

'era': 'Victorian England',

'time': 'December 25th, 8pm PST',

'location': 'Theater in the Park',

'social_post_text': "\nSpend your Christmas night with us at Theater in the Park and experience the heartbreaking story of love and loss that is 'A Walk on the Beach'. Set in Victorian England, this romantic tragedy follows the story of Frances and Edward, a young couple whose love is tragically cut short. Don't miss this emotional and thought-provoking production that is sure to leave you in tears. #AWalkOnTheBeach #LoveAndLoss #TheaterInThePark #VictorianEngland"}

13、转换

本例展示了如何使用通用的转换链。

作为示例,我们将创建一个虚拟转换,它接收一个超长的文本,将文本过滤为仅保留前三个段落,然后将其传递给 LLMChain 进行摘要生成。

from langchain.chains import TransformChain, LLMChain, SimpleSequentialChain

from langchain.llms import OpenAI

from langchain.prompts import PromptTemplate

with open("../../state_of_the_union.txt") as f:

state_of_the_union = f.read()

def transform_func(inputs: dict) -> dict:

text = inputs["text"]

shortened_text = "\n\n".join(text.split("\n\n")[:3])

return {"output_text": shortened_text}

transform_chain = TransformChain(

input_variables=["text"],

output_variables=["output_text"],

transform=transform_func

)

template = """Summarize this text:

{output_text}

Summary:"""

prompt = PromptTemplate(input_variables=["output_text"],

template=template)

llm_chain = LLMChain(llm=OpenAI(), prompt=prompt)

sequential_chain = SimpleSequentialChain(

chains=[transform_chain, llm_chain])

sequential_chain.run(state_of_the_union)

' The speaker addresses the nation, noting that while last year they were kept apart due to COVID-19, this year they are together again. They are reminded that regardless of their political affiliations, they are all Americans.'

14、文档

这些是处理文档的核心链组件。

它们用于对文档进行 总结、回答关于文档的问题、从文档中提取信息等等。

这些链组件都实现了一个公共接口:

class BaseCombineDocumentsChain(Chain, ABC):

"""Base interface for chains combining documents."""

@abstractmethod

def combine_docs(self, docs: List[Document], **kwargs: Any) -> Tuple[str, dict]:

"""Combine documents into a single string."""

Stuff documents

东西文档链(“东西” 的意思是 “填充” 或 “填充”)是最直接的文档链之一。

它接受一个文档列表,将它们全部插入到提示中,并将该提示传递给 LLM。

此链适用于 文档较小 且大多数调用 仅传递少数文档 的应用程序。

Refine

Refine 文档链通过循环遍历输入文档 并迭代更新其答案 来构建响应。

对于每个文档,它将所有非文档输入、当前文档和最新的中间答案传递给LLM链以获得新的答案。

由于精化链 每次只向 LLM传递单个文档,因此非常适合需要分析 超出模型上下文范围 的文档数量的任务。

显而易见的权衡是,与“Stuff documents chain”等链相比,该链会进行更多的LLM调用。

还有某些任务在迭代执行时很难完成。

例如,当文档经常相互引用 或 任务需要从多个文档中获取详细信息时,精化链的性能可能较差。

Map reduce

map reduce 文档链首先将 LLM 链应用于 每个单独的文档(Map 步骤),将链的输出视为新文档。

然后将所有新文档传递给 单独的合并文档链,以获得单个输出(Reduce 步骤)。

如果需要,它可以选择先压缩或折叠映射的文档,以确保它们适合合并文档链(通常将它们传递给 LLM)。

如果需要,此压缩步骤会递归执行。

Map re-rank

该链组件在每个文档上 运行一个初始提示;

该提示不仅尝试完成任务,而且还给出了答案的确定性得分。

返回得分最高的响应。

热门(Popular)

15、检索型问答(Retrieval QA)

这个示例展示了在索引上进行问答的过程。

from langchain.chains import RetrievalQA

from langchain.document_loaders import TextLoader

from langchain.embeddings.openai import OpenAIEmbeddings

from langchain.llms import OpenAI

from langchain.text_splitter import CharacterTextSplitter

from langchain.vectorstores import Chroma

loader = TextLoader("../../state_of_the_union.txt")

documents = loader.load()

text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0)

texts = text_splitter.split_documents(documents)

embeddings = OpenAIEmbeddings()

docsearch = Chroma.from_documents(texts, embeddings)

qa = RetrievalQA.from_chain_type(llm=OpenAI(),

chain_type="stuff",

retriever=docsearch.as_retriever())

query = "What did the president say about Ketanji Brown Jackson"

qa.run(query)

" The president said that she is one of the nation's top legal minds, a former top litigator in private practice, a former federal public defender, and from a family of public school educators and police officers. He also said that she is a consensus builder and has received a broad range of support, from the Fraternal Order of Police to former judges appointed by Democrats and Republicans."

链类型 (Chain Type)

您可以轻松指定 要在 RetrievalQA 链中 加载和使用的不同链类型。

有关这些类型的更详细的步骤,请参见 此笔记本。

有两种加载不同链类型的方法。

from_chain_type 指定链类型

首先,您可以在 from_chain_type 方法中指定链类型参数。

这允许您传递 要使用的链类型的名称。

例如,在下面的示例中,我们将链类型更改为 map_reduce。

qa = RetrievalQA.from_chain_type(

llm=OpenAI(),

chain_type="map_reduce",

retriever=docsearch.as_retriever()

)

query = "What did the president say about Ketanji Brown Jackson"

qa.run(query)

# -> " The president said that Judge ... Republicans."

combine_documents_chain 直接加载链

上述方法允许您非常简单地更改链类型,但它确实在链类型的参数上提供了大量的灵活性。

如果您想要控制这些参数,您可以直接加载链(就像在 此笔记本 中所做的那样),然后将其直接传递给 RetrievalQA 链,使用 combine_documents_chain 参数。例如:

from langchain.chains.question_answering import load_qa_chain

qa_chain = load_qa_chain(

OpenAI(temperature=0),

chain_type="stuff"

)

qa = RetrievalQA(combine_documents_chain=qa_chain,

retriever=docsearch.as_retriever())

query = "What did the president say about Ketanji Brown Jackson"

qa.run(query)

# -> " The president ... Democrats and Republicans."

自定义提示 (Custom Prompts)

您可以传递 自定义提示 来进行问题回答。

这些提示与您可以传递给 基本问题回答链 的提示相同。

from langchain.prompts import PromptTemplate

prompt_template = """Use the following pieces of context to answer the question at the end. If you don't know the answer, just say that you don't know, don't try to make up an answer.

{context}

Question: {question}

Answer in Italian:"""

PROMPT = PromptTemplate(

template=prompt_template,

input_variables=["context", "question"]

)

chain_type_kwargs = {"prompt": PROMPT}

qa = RetrievalQA.from_chain_type(

llm=OpenAI(),

chain_type="stuff",

retriever=docsearch.as_retriever(),

chain_type_kwargs=chain_type_kwargs

)

query = "What did the president say about Ketanji Brown Jackson"

qa.run(query)

" Il presidente ha detto che Ketanji Brown Jackson è una delle menti legali più importanti del paese, che continuerà l'eccellenza di Justice Breyer e che ha ricevuto un ampio sostegno, da Fraternal Order of Police a ex giudici nominati da democratici e repubblicani."

返回源文档

此外,我们可以在构建链时指定一个可选参数来返回用于回答问题的源文档。

qa = RetrievalQA.from_chain_type(

llm=OpenAI(),

chain_type="stuff",

retriever=docsearch.as_retriever(),

return_source_documents=True)

query = "What did the president say about Ketanji Brown Jackson"

result = qa({"query": query})

result["result"]

" The president said that Ketanji Brown Jackson is one of the nation's top legal minds, a former top litigator in private practice and a former federal public defender from a family of public school educators and police officers, and that she has received a broad range of support from the Fraternal Order of Police to former judges appointed by Democrats and Republicans."

result["source_documents"]

[

Document(page_content='Tonight. I call on the Senate to: Pass the Freedom to Vote Act. Pass the John Lewis Voting Rights Act. And while you’re at it, pass the Disclose Act ... excellence.',

lookup_str='',

metadata={'source': '../../state_of_the_union.txt'},

lookup_index=0),

Document(page_content='A former top ... their own borders.',

lookup_str='',

metadata={'source': '../../state_of_the_union.txt'},

lookup_index=0),

...

]

RetrievalQAWithSourceChain

或者,如果我们的文档有一个 “source” 元数据键,我们可以使用 RetrievalQAWithSourceChain 来引用我们的来源:

docsearch = Chroma.from_texts(texts, embeddings,

metadatas=[{"source": f"{i}-pl"} for i in range(len(texts))])

from langchain.chains import RetrievalQAWithSourcesChain

from langchain import OpenAI

chain = RetrievalQAWithSourcesChain.from_chain_type(

OpenAI(temperature=0),

chain_type="stuff",

retriever=docsearch.as_retriever()

)

chain({"question": "What did the president say about Justice Breyer"}, return_only_outputs=True)

{'answer': ' The president honored Justice Breyer for his service and mentioned his legacy of excellence.\n',

'sources': '31-pl'}

16、对话式检索问答(Conversational Retrieval QA)

对话式检索问答链(ConversationalRetrievalQA chain)是在检索问答链(RetrievalQAChain)的基础上提供了一个聊天历史组件。

它首先将聊天历史(可以是显式传入的或从提供的内存中检索到的)和问题合并成一个独立的问题,然后从检索器中查找相关文档,最后将这些文档和问题传递给问答链以返回一个响应。

要创建一个对话式检索问答链,您需要一个检索器。在下面的示例中,我们将从一个向量存储中创建一个检索器,这个向量存储可以由嵌入向量创建。

from langchain.embeddings.openai import OpenAIEmbeddings

from langchain.vectorstores import Chroma

from langchain.text_splitter import CharacterTextSplitter

from langchain.llms import OpenAI

from langchain.chains import ConversationalRetrievalChain

from langchain.document_loaders import TextLoader

# 加载文档。您可以将其替换为任何类型数据的加载器

loader = TextLoader("../../state_of_the_union.txt")

documents = loader.load()

# 如果您有多个要合并的加载器,可以这样做:

loaders = [....]

# docs = []

# for loader in loaders:

# docs.extend(loader.load())

# 我们现在拆分文档,为它们创建嵌入,并将它们放在向量库中。这让我们可以对它们进行语义搜索。

text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0)

documents = text_splitter.split_documents(documents)

embeddings = OpenAIEmbeddings()

vectorstore = Chroma.from_documents(documents, embeddings)

# -> Using embedded DuckDB without persistence: data will be transient

# 现在我们可以创建一个内存对象,这对于跟踪输入/输出并进行对话是必要的。

from langchain.memory import ConversationBufferMemory

memory = ConversationBufferMemory(

memory_key="chat_history",

return_messages=True

)

qa = ConversationalRetrievalChain.from_llm(

OpenAI(temperature=0),

vectorstore.as_retriever(),

memory=memory)

query = "What did the president say about Ketanji Brown Jackson"

result = qa({"question": query})

result["answer"]

" The president said that Ketanji Brown Jackson is one of the nation's top legal minds, a former top litigator in private practice, a former federal public defender, and from a family of public school educators and police officers. He also said that she is a consensus builder and has received a broad range of support from the Fraternal Order of Police to former judges appointed by Democrats and Republicans."

query = "Did he mention who she suceeded"

result = qa({"question": query})

result['answer']

' Ketanji Brown Jackson succeeded Justice Stephen Breyer on the United States Supreme Court.'

传入聊天历史

在上面的示例中,我们使用了一个内存对象来跟踪聊天历史。我们也可以直接将其 传递进去。

为了做到这一点,我们需要初始化一个没有任何内存对象的链。

qa = ConversationalRetrievalChain.from_llm(

OpenAI(temperature=0),

vectorstore.as_retriever()

)

以下是没有聊天历史的问题示例

chat_history = []

query = "What did the president say about Ketanji Brown Jackson"

result = qa({"question": query, "chat_history": chat_history})

result["answer"]

" The president said that Ketanji Brown Jackson is one of the nation's top legal minds, a former top litigator in private practice, a former federal public defender, and from a family of public school educators and police officers. He also said that she is a consensus builder and has received a broad range of support from the Fraternal Order of Police to former judges appointed by Democrats and Republicans."

如下示例 使用一些聊天记录 提问

chat_history = [(query, result["answer"])]

query = "Did he mention who she suceeded"

result = qa({"question": query, "chat_history": chat_history})

result['answer']

' Ketanji Brown Jackson succeeded Justice Stephen Breyer on the United States Supreme Court.'

使用不同的模型来压缩问题

该链有两个步骤:

首先,它将当前问题和聊天历史 压缩为一个独立的问题。

这是创建用于检索的独立向量的必要步骤。

之后,它进行检索,然后使用单独的模型 进行检索增强生成来回答问题。

LangChain 声明性的特性之一是 您可以轻松为每个调用 使用单独的语言模型。

这对于在简化问题 的较简单任务中使用 更便宜和更快的模型,然后在回答问题时使用 更昂贵的模型非常有用。

下面是一个示例。

from langchain.chat_models import ChatOpenAI

qa = ConversationalRetrievalChain.from_llm(

ChatOpenAI(temperature=0, model="gpt-4"),

vectorstore.as_retriever(),

condense_question_llm = ChatOpenAI(temperature=0, model='gpt-3.5-turbo'),

)

chat_history = []

query = "What did the president say about Ketanji Brown Jackson"

result = qa({"question": query, "chat_history": chat_history})

chat_history = [(query, result["answer"])]

query = "Did he mention who she suceeded"

result = qa({"question": query, "chat_history": chat_history})

返回源文档

您还可以轻松地从 ConversationalRetrievalChain 返回源文档。

这在您想要检查 返回了哪些文档时非常有用。

qa = ConversationalRetrievalChain.from_llm(

OpenAI(temperature=0),

vectorstore.as_retriever(),

return_source_documents=True

)

chat_history = []

query = "What did the president say about Ketanji Brown Jackson"

result = qa({"question": query, "chat_history": chat_history})

result['source_documents'][0]

Document(page_content='Tonight. I call on the Senate to: Pass the Freedom to Vote Act. Pass the John Lewis Voting Rights Act. And while you’re at it, pass the Disclose Act so Americans can know who is funding our elections. \n\nTonight, I’d like to honor someone who has dedicated his life to serve this country: Justice Stephen Breyer—an Army veteran, Constitutional scholar, and retiring Justice of the United States Supreme Court. Justice Breyer, thank you for your service. \n\nOne of the most serious constitutional responsibilities a President has is nominating someone to serve on the United States Supreme Court. \n\nAnd I did that 4 days ago, when I nominated Circuit Court of Appeals Judge Ketanji Brown Jackson. One of our nation’s top legal minds, who will continue Justice Breyer’s legacy of excellence.', metadata={'source': '../../state_of_the_union.txt'})

ConversationalRetrievalChain 与 search_distance

如果您正在使用支持 按搜索距离进行过滤 的向量存储,可以添加 阈值参数。

vectordbkwargs = {"search_distance": 0.9}

qa = ConversationalRetrievalChain.from_llm(

OpenAI(temperature=0),

vectorstore.as_retriever(),

return_source_documents=True

)

chat_history = []

query = "What did the president say about Ketanji Brown Jackson"

result = qa({

"question": query,

"chat_history": chat_history,

"vectordbkwargs": vectordbkwargs

})

ConversationalRetrievalChain with map_reduce

我们也可以通过 ConversationalRetrievalChain 使用不同类型的混合文档链。

from langchain.chains import LLMChain

from langchain.chains.question_answering import load_qa_chain

from langchain.chains.conversational_retrieval.prompts import CONDENSE_QUESTION_PROMPT

llm = OpenAI(temperature=0)

question_generator = LLMChain(llm=llm, prompt=CONDENSE_QUESTION_PROMPT)

doc_chain = load_qa_chain(llm, chain_type="map_reduce")

chain = ConversationalRetrievalChain(

retriever=vectorstore.as_retriever(),

question_generator=question_generator,

combine_docs_chain=doc_chain,

)

chat_history = []

query = "What did the president say about Ketanji Brown Jackson"

result = chain({"question": query, "chat_history": chat_history})

result['answer']

" The president said that Ketanji Brown Jackson is one of the nation's top legal minds, a former top litigator in private practice, a former federal public defender, from a family of public school educators and police officers, a consensus builder, and has received a broad range of support from the Fraternal Order of Police to former judges appointed by Democrats and Republicans."

ConversationalRetrievalChain 与带有来源的问答

您还可以将此链 与带有来源的问答链一起使用。

from langchain.chains.qa_with_sources import load_qa_with_sources_chain

llm = OpenAI(temperature=0)

question_generator = LLMChain(llm=llm, prompt=CONDENSE_QUESTION_PROMPT)

doc_chain = load_qa_with_sources_chain(llm, chain_type="map_reduce")

chain = ConversationalRetrievalChain(

retriever=vectorstore.as_retriever(),

question_generator=question_generator,

combine_docs_chain=doc_chain,

)

chat_history = []

query = "What did the president say about Ketanji Brown Jackson"

result = chain({"question": query, "chat_history": chat_history})

result['answer']

" The president said that Ketanji Brown Jackson is one of the nation's top legal minds, a former top litigator in private practice, a former federal public defender, from a family of public school educators and police officers, a consensus builder, and has received a broad range of support from the Fraternal Order of Police to former judges appointed by Democrats and Republicans. \nSOURCES: ../../state_of_the_union.txt"

ConversationalRetrievalChain 与流式传输至 stdout

在此示例中,链的输出将逐个令牌流式传输到 stdout。

from langchain.chains.llm import LLMChain

from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler

from langchain.chains.conversational_retrieval.prompts import CONDENSE_QUESTION_PROMPT, QA_PROMPT

from langchain.chains.question_answering import load_qa_chain

# Construct a ConversationalRetrievalChain with a streaming llm for combine docs

# and a separate, non-streaming llm for question generation

llm = OpenAI(temperature=0)

streaming_llm = OpenAI(streaming=True, callbacks=[StreamingStdOutCallbackHandler()], temperature=0)

question_generator = LLMChain(llm=llm, prompt=CONDENSE_QUESTION_PROMPT)

doc_chain = load_qa_chain(streaming_llm, chain_type="stuff", prompt=QA_PROMPT)

qa = ConversationalRetrievalChain(

retriever=vectorstore.as_retriever(),

combine_docs_chain=doc_chain,

question_generator=question_generator

)

chat_history = []

query = "What did the president say about Ketanji Brown Jackson"

result = qa({"question": query, "chat_history": chat_history})

The president said that Ketanji Brown Jackson is one of the nation's top legal minds, a former top litigator in private practice, a former federal public defender, and from a family of public school educators and police officers. He also said that she is a consensus builder and has received a broad range of support from the Fraternal Order of Police to former judges appointed by Democrats and Republicans.

chat_history = [(query, result["answer"])]

query = "Did he mention who she suceeded"

result = qa({"question": query, "chat_history": chat_history})

# -> Ketanji Brown Jackson succeeded Justice Stephen Breyer on the United States Supreme Court.

get_chat_history 函数

您还可以指定一个 get_chat_history 函数,用于格式化聊天历史字符串。

def get_chat_history(inputs) -> str:

res = []

for human, ai in inputs:

res.append(f"Human:{human}\nAI:{ai}")

return "\n".join(res)

qa = ConversationalRetrievalChain.from_llm(OpenAI(temperature=0), vectorstore.as_retriever(), get_chat_history=get_chat_history)

chat_history = []

query = "What did the president say about Ketanji Brown Jackson"

result = qa({"question": query, "chat_history": chat_history})

result['answer']

" The president said that Ketanji Brown Jackson is one of the nation's top legal minds, a former top litigator in private practice, a former federal public defender, and from a family of public school educators and police officers. He also said that she is a consensus builder and has received a broad range of support from the Fraternal Order of Police to former judges appointed by Democrats and Republicans."

17、SQL

这个示例演示了如何使用 SQLDatabaseChain 来对 SQL 数据库进行问题回答。

在底层,LangChain 使用 SQLAlchemy 连接 SQL 数据库。

因此,SQLDatabaseChain 可与 SQLAlchemy 支持的任何 SQL 方言一起使用,例如 MS SQL、MySQL、MariaDB、PostgreSQL、Oracle SQL、Databricks 和 SQLite。

有关连接到数据库的要求的详细信息,请参阅 SQLAlchemy 文档。

例如,连接到 MySQL 需要适当的连接器,如 PyMySQL。

MySQL 连接的 URI 可能如下所示:mysql+pymysql://user:pass@some_mysql_db_address/db_name。

此演示使用 SQLite 和示例 Chinook 数据库。

要设置它,请按照 https://database.guide/2-sample-databases-sqlite/ 上的说明,在此存储库根目录的 notebooks 文件夹中放置 .db 文件。

**注意:**对于数据敏感项目,您可以在 SQLDatabaseChain 初始化中指定 return_direct=True,以直接返回SQL查询的输出,而不需要任何其他格式。

这将阻止LLM查看数据库中的任何内容。但是,请注意,默认情况下,LLM仍然可以访问数据库方案(即方言、表和关键字名称)。

from langchain import OpenAI, SQLDatabase, SQLDatabaseChain

db = SQLDatabase.from_uri("sqlite:///../../../../notebooks/Chinook.db")

llm = OpenAI(temperature=0, verbose=True)

db_chain = SQLDatabaseChain.from_llm(llm, db, verbose=True)

db_chain.run("How many employees are there?")

> Entering new SQLDatabaseChain chain...

How many employees are there?

SQLQuery:

/workspace/langchain/langchain/sql_database.py:191: SAWarning: Dialect sqlite+pysqlite does *not* support Decimal objects natively, and SQLAlchemy must convert from floating point - rounding errors and other issues may occur. Please consider storing Decimal numbers as strings or integers on this platform for lossless storage.

sample_rows = connection.execute(command)

SELECT COUNT(*) FROM "Employee";

SQLResult: [(8,)]

Answer:There are 8 employees.

> Finished chain.

'There are 8 employees.'

使用查询检查器 Query Checker

有时,语言模型会生成具有小错误的无效 SQL,可以使用 SQL Database Agent 使用的相同技术来自行纠正 SQL。

您只需在创建链时指定此选项即可:

db_chain = SQLDatabaseChain.from_llm(llm, db, verbose=True, use_query_checker=True)

db_chain.run("How many albums by Aerosmith?")

> Entering new SQLDatabaseChain chain...

How many albums by Aerosmith?

SQLQuery:SELECT COUNT(*) FROM Album WHERE ArtistId = 3;

SQLResult: [(1,)]

Answer:There is 1 album by Aerosmith.

> Finished chain.

'There is 1 album by Aerosmith.'

自定义提示

您还可以自定义使用的提示。这是一个将其提示为理解 foobar 与 Employee 表相同的示例:

from langchain.prompts.prompt import PromptTemplate

_DEFAULT_TEMPLATE = """Given an input question, first create a syntactically correct {dialect} query to run, then look at the results of the query and return the answer.

Use the following format:

Question: "Question here"

SQLQuery: "SQL Query to run"

SQLResult: "Result of the SQLQuery"

Answer: "Final answer here"

Only use the following tables:

{table_info}

If someone asks for the table foobar, they really mean the employee table.

Question: {input}"""

PROMPT = PromptTemplate(

input_variables=["input", "table_info", "dialect"],

template=_DEFAULT_TEMPLATE

)

db_chain = SQLDatabaseChain.from_llm(llm, db, prompt=PROMPT, verbose=True)

db_chain.run("How many employees are there in the foobar table?")

> Entering new SQLDatabaseChain chain...

How many employees are there in the foobar table?

SQLQuery:SELECT COUNT(*) FROM Employee;

SQLResult: [(8,)]

Answer:There are 8 employees in the foobar table.

> Finished chain.

'There are 8 employees in the foobar table.'

返回中间步骤 (Return Intermediate Steps)

您还可以返回 SQLDatabaseChain 的中间步骤。

这允许您访问生成的 SQL 语句 以及针对 SQL 数据库运行的结果。

db_chain = SQLDatabaseChain.from_llm(llm, db, prompt=PROMPT, verbose=True, use_query_checker=True, return_intermediate_steps=True)

result = db_chain("How many employees are there in the foobar table?")

result["intermediate_steps"]

> Entering new SQLDatabaseChain chain...

How many employees are there in the foobar table?

SQLQuery:SELECT COUNT(*) FROM Employee;

SQLResult: [(8,)]

Answer:There are 8 employees in the foobar table.

> Finished chain.

[{'input': 'How many employees are there in the foobar table?\nSQLQuery:SELECT COUNT(*) FROM Employee;\nSQLResult: [(8,)]\nAnswer:',

'top_k': '5',

'dialect': 'sqlite',

'table_info': '\nCREATE TABLE "Artist" (\n\t"ArtistId" INTEGER NOT NULL, \n\t"Name" NVARCHAR(120), \n\tPRIMARY KEY ("ArtistId")\n)\n\n/*\n3 rows from Artist table:\nArtistId\tName\n1...89\n1\t3390\n*/',

'stop': ['\nSQLResult:']},

'SELECT COUNT(*) FROM Employee;',

{'query': 'SELECT COUNT(*) FROM Employee;', 'dialect': 'sqlite'},

'SELECT COUNT(*) FROM Employee;',

'[(8,)]']

选择如何限制返回的行数

如果您查询表的多行,可以使用 top_k 参数(默认为 10)选择要获取的最大结果数。

这对于避免查询结果 超过提示的最大长度 或不必要地消耗令牌很有用。

db_chain = SQLDatabaseChain.from_llm(llm, db, verbose=True, use_query_checker=True, top_k=3)

db_chain.run("What are some example tracks by composer Johann Sebastian Bach?")

> Entering new SQLDatabaseChain chain...

What are some example tracks by composer Johann Sebastian Bach?

SQLQuery:SELECT Name FROM Track WHERE Composer = 'Johann Sebastian Bach' LIMIT 3

SQLResult: [('Concerto for 2 Violins in D Minor, BWV 1043: I. Vivace',), ('Aria Mit 30 Veränderungen, BWV 988 "Goldberg Variations": Aria',), ('Suite for Solo Cello No. 1 in G Major, BWV 1007: I. Prélude',)]

Answer:Examples of tracks by Johann Sebastian Bach are Concerto for 2 Violins in D Minor, BWV 1043: I. Vivace, Aria Mit 30 Veränderungen, BWV 988 "Goldberg Variations": Aria, and Suite for Solo Cello No. 1 in G Major, BWV 1007: I. Prélude.

> Finished chain.

'Examples of tracks by Johann Sebastian Bach are Concerto for 2 Violins in D Minor, BWV 1043: I. Vivace, Aria Mit 30 Veränderungen, BWV 988 "Goldberg Variations": Aria, and Suite for Solo Cello No. 1 in G Major, BWV 1007: I. Prélude.'

添加每个表的示例行

有时,数据的格式并不明显,最佳选择是在提示中包含来自表中的行的样本,以便 LLM 在提供最终查询之前了解数据。

在这里,我们将使用此功能,通过从 Track 表中提供两行来让 LLM 知道艺术家是以他们的全名保存的。

db = SQLDatabase.from_uri(

"sqlite:///../../../../notebooks/Chinook.db",

include_tables=['Track'], # we include only one table to save tokens in the prompt :)

sample_rows_in_table_info=2)

样本行将在每个相应表的列信息之后添加到提示中:

print(db.table_info)

CREATE TABLE "Track" (

"TrackId" INTEGER NOT NULL,

"Name" NVARCHAR(200) NOT NULL,

"AlbumId" INTEGER,

"MediaTypeId" INTEGER NOT NULL,

"GenreId" INTEGER,

"Composer" NVARCHAR(220),

"Milliseconds" INTEGER NOT NULL,

"Bytes" INTEGER,

"UnitPrice" NUMERIC(10, 2) NOT NULL,

PRIMARY KEY ("TrackId"),

FOREIGN KEY("MediaTypeId") REFERENCES "MediaType" ("MediaTypeId"),

FOREIGN KEY("GenreId") REFERENCES "Genre" ("GenreId"),

FOREIGN KEY("AlbumId") REFERENCES "Album" ("AlbumId")

)

/*

2 rows from Track table:

TrackId Name AlbumId MediaTypeId GenreId Composer Milliseconds Bytes UnitPrice

1 For Those About To Rock (We Salute You) 1 1 1 Angus Young, Malcolm Young, Brian Johnson 343719 11170334 0.99

2 Balls to the Wall 2 2 1 None 342562 5510424 0.99

*/

db_chain = SQLDatabaseChain.from_llm(llm, db, use_query_checker=True, verbose=True)

db_chain.run("What are some example tracks by Bach?")

> Entering new SQLDatabaseChain chain...

What are some example tracks by Bach?

SQLQuery:SELECT "Name", "Composer" FROM "Track" WHERE "Composer" LIKE '%Bach%' LIMIT 5

SQLResult: [('American Woman', 'B. Cummings/G. Peterson/M.J. Kale/R. Bachman'), ('Concerto for 2 Violins in D Minor, BWV 1043: I. Vivace', 'Johann Sebastian Bach'), ('Aria Mit 30 Veränderungen, BWV 988 "Goldberg Variations": Aria', 'Johann Sebastian Bach'), ('Suite for Solo Cello No. 1 in G Major, BWV 1007: I. Prélude', 'Johann Sebastian Bach'), ('Toccata and Fugue in D Minor, BWV 565: I. Toccata', 'Johann Sebastian Bach')]

Answer:Tracks by Bach include 'American Woman', 'Concerto for 2 Violins in D Minor, BWV 1043: I. Vivace', 'Aria Mit 30 Veränderungen, BWV 988 "Goldberg Variations": Aria', 'Suite for Solo Cello No. 1 in G Major, BWV 1007: I. Prélude', and 'Toccata and Fugue in D Minor, BWV 565: I. Toccata'.

> Finished chain.

'Tracks by Bach include \'American Woman\', \'Concerto for 2 Violins in D Minor, BWV 1043: I. Vivace\', \'Aria Mit 30 Veränderungen, BWV 988 "Goldberg Variations": Aria\', \'Suite for Solo Cello No. 1 in G Major, BWV 1007: I. Prélude\', and \'Toccata and Fugue in D Minor, BWV 565: I. Toccata\'.'

自定义表信息 (Custom Table Info)

在某些情况下,提供自定义表信息而不是使用自动生成的表定义和第一个 sample_rows_in_table_info 示例行可能很有用。

例如,如果您知道表的前几行无关紧要,手动提供更多样化的示例行或为模型提供更多信息可能会有所帮助。

如果存在不必要的列,还可以限制模型可见的列。

此信息可以作为字典提供,其中表名称为键,表信息为值。

例如,让我们为仅有几列的 Track 表提供自定义定义和示例行:

custom_table_info = {

"Track": """CREATE TABLE Track (

"TrackId" INTEGER NOT NULL,

"Name" NVARCHAR(200) NOT NULL,

"Composer" NVARCHAR(220),

PRIMARY KEY ("TrackId")

)

/*

3 rows from Track table:

TrackId Name Composer

1 For Those About To Rock (We Salute You) Angus Young, Malcolm Young, Brian Johnson

2 Balls to the Wall None

3 My favorite song ever The coolest composer of all time

*/"""

}

db = SQLDatabase.from_uri(

"sqlite:///../../../../notebooks/Chinook.db",

include_tables=['Track', 'Playlist'],

sample_rows_in_table_info=2,

custom_table_info=custom_table_info)

print(db.table_info)

CREATE TABLE "Playlist" (

"PlaylistId" INTEGER NOT NULL,

"Name" NVARCHAR(120),

PRIMARY KEY ("PlaylistId")

)

/*

2 rows from Playlist table:

PlaylistId Name

1 Music

2 Movies

*/

CREATE TABLE Track (

"TrackId" INTEGER NOT NULL,

"Name" NVARCHAR(200) NOT NULL,

"Composer" NVARCHAR(220),

PRIMARY KEY ("TrackId")

)

/*

3 rows from Track table:

TrackId Name Composer

1 For Those About To Rock (We Salute You) Angus Young, Malcolm Young, Brian Johnson

2 Balls to the Wall None

3 My favorite song ever The coolest composer of all time

*/

请注意,我们为 Track 的自定义表定义和示例行覆盖了 sample_rows_in_table_info 参数。

未被 custom_table_info 覆盖的表(在此示例中为 Playlist)将像往常一样自动收集其表信息。

db_chain = SQLDatabaseChain.from_llm(llm, db, verbose=True)

db_chain.run("What are some example tracks by Bach?")

SQLDatabaseSequentialChain

用于查询 SQL 数据库的顺序链。

链的顺序如下:

- 基于查询确定要使用的表。

- 基于这些表,调用正常的 SQL 数据库链。

这在数据库中的表数量很大的情况下 非常有用。

from langchain.chains import SQLDatabaseSequentialChain

db = SQLDatabase.from_uri("sqlite:///../../../../notebooks/Chinook.db")

chain = SQLDatabaseSequentialChain.from_llm(llm, db, verbose=True)

chain.run("How many employees are also customers?")

> Entering new SQLDatabaseSequentialChain chain...

Table names to use:

['Employee', 'Customer']

> Entering new SQLDatabaseChain chain...

How many employees are also customers?

SQLQuery:SELECT COUNT(*) FROM Employee e INNER JOIN Customer c ON e.EmployeeId = c.SupportRepId;

SQLResult: [(59,)]

Answer:59 employees are also customers.

> Finished chain.

> Finished chain.

'59 employees are also customers.'

使用本地语言模型

有时,您可能无法使用 OpenAI 或其他托管服务的大型语言模型。

您当然可以尝试使用 SQLDatabaseChain 与本地模型一起使用,但很快会意识到,即使使用大型 GPU 运行本地模型,大多数模型仍然难以生成正确的输出。

import logging

import torch

from transformers import AutoTokenizer, GPT2TokenizerFast, pipeline, AutoModelForSeq2SeqLM, AutoModelForCausalLM

from langchain import HuggingFacePipeline

# Note: This model requires a large GPU, e.g. an 80GB A100. See documentation for other ways to run private non-OpenAI models.

model_id = "google/flan-ul2"

model = AutoModelForSeq2SeqLM.from_pretrained(model_id, temperature=0)

device_id = -1 # default to no-GPU, but use GPU and half precision mode if available

if torch.cuda.is_available():

device_id = 0

try:

model = model.half()

except RuntimeError as exc:

logging.warn(f"Could not run model in half precision mode: {str(exc)}")

tokenizer = AutoTokenizer.from_pretrained(model_id)

pipe = pipeline(task="text2text-generation", model=model, tokenizer=tokenizer, max_length=1024, device=device_id)

local_llm = HuggingFacePipeline(pipeline=pipe)

/workspace/langchain/.venv/lib/python3.9/site-packages/tqdm/auto.py:21: TqdmWarning: IProgress not found. Please update jupyter and ipywidgets. See https://ipywidgets.readthedocs.io/en/stable/user_install.html

from .autonotebook import tqdm as notebook_tqdm

Loading checkpoint shards: 100%|██████████| 8/8 [00:32<00:00, 4.11s/it]

from langchain import SQLDatabase, SQLDatabaseChain

db = SQLDatabase.from_uri("sqlite:///../../../../notebooks/Chinook.db", include_tables=['Customer'])

local_chain = SQLDatabaseChain.from_llm(local_llm, db, verbose=True, return_intermediate_steps=True, use_query_checker=True)

# 使用查询检查器

local_chain("How many customers are there?")

即使对于相对复杂的 SQL,这个相对较大的模型也很可能无法独立生成。

但是,您可以记录其输入和输出,以便手动纠正它们,并将纠正后的示例用于后续的 few shot prompt 示例。

实际上,您可以记录任何引发异常的链执行(如下例所示),或在结果不正确的情况下直接获得用户反馈(但没有引发异常)。

poetry run pip install pyyaml chromadb

import yaml

from typing import Dict

QUERY = "List all the customer first names that start with 'a'"

def _parse_example(result: Dict) -> Dict:

sql_cmd_key = "sql_cmd"

sql_result_key = "sql_result"

table_info_key = "table_info"

input_key = "input"

final_answer_key = "answer"

_example = {

"input": result.get("query"),

}

steps = result.get("intermediate_steps")

answer_key = sql_cmd_key # the first one

for step in steps:

# The steps are in pairs, a dict (input) followed by a string (output).

# Unfortunately there is no schema but you can look at the input key of the

# dict to see what the output is supposed to be

if isinstance(step, dict):

# Grab the table info from input dicts in the intermediate steps once

if table_info_key not in _example:

_example[table_info_key] = step.get(table_info_key)

if input_key in step:

if step[input_key].endswith("SQLQuery:"):

answer_key = sql_cmd_key # this is the SQL generation input

if step[input_key].endswith("Answer:"):

answer_key = final_answer_key # this is the final answer input

elif sql_cmd_key in step:

_example[sql_cmd_key] = step[sql_cmd_key]

answer_key = sql_result_key # this is SQL execution input

elif isinstance(step, str):

# The preceding element should have set the answer_key

_example[answer_key] = step

return _example

example: any

try:

result = local_chain(QUERY)

print("*** Query succeeded")

example = _parse_example(result)

except Exception as exc:

print("*** Query failed")

result = {

"query": QUERY,

"intermediate_steps": exc.intermediate_steps

}

example = _parse_example(result)

# print for now, in reality you may want to write this out to a YAML file or database for manual fix-ups offline

yaml_example = yaml.dump(example, allow_unicode=True)

print("\n" + yaml_example)

多次运行上面的片段,或在部署环境中记录异常,以收集大量由语言模型生成的输入、table_info 和 sql_cmd 的示例。

sql_cmd 的值将是不正确的,您可以手动修正它们以建立示例集合。

例如,在这里,我们使用 YAML 来保持我们的输入和纠正后的 SQL 输出的整洁记录,以便随着时间的推移逐步建立它们。

YAML_EXAMPLES = """

- input: How many customers are not from Brazil?

table_info: |

CREATE TABLE "Customer" (

"CustomerId" INTEGER NOT NULL,

"FirstName" NVARCHAR(40) NOT NULL,

"LastName" NVARCHAR(20) NOT NULL,

"Company" NVARCHAR(80),

"Address" NVARCHAR(70),

"City" NVARCHAR(40),

"State" NVARCHAR(40),

"Country" NVARCHAR(40),

"PostalCode" NVARCHAR(10),

"Phone" NVARCHAR(24),

"Fax" NVARCHAR(24),

"Email" NVARCHAR(60) NOT NULL,

"SupportRepId" INTEGER,

PRIMARY KEY ("CustomerId"),

FOREIGN KEY("SupportRepId") REFERENCES "Employee" ("EmployeeId")

)

sql_cmd: SELECT COUNT(*) FROM "Customer" WHERE NOT "Country" = "Brazil";

sql_result: "[(54,)]"

answer: 54 customers are not from Brazil.

- input: list all the genres that start with 'r'

table_info: |

CREATE TABLE "Genre" (

"GenreId" INTEGER NOT NULL,

"Name" NVARCHAR(120),

PRIMARY KEY ("GenreId")

)

/*

3 rows from Genre table:

GenreId Name

1 Rock

2 Jazz

3 Metal

*/

sql_cmd: SELECT "Name" FROM "Genre" WHERE "Name" LIKE 'r%';

sql_result: "[('Rock',), ('Rock and Roll',), ('Reggae',), ('R&B/Soul',)]"

answer: The genres that start with 'r' are Rock, Rock and Roll, Reggae and R&B/Soul.

"""

现在您有了一些示例(具有手动纠正的输出 SQL),您可以按通常的方式执行 few shot prompt 播种:

from langchain import FewShotPromptTemplate, PromptTemplate

from langchain.chains.sql_database.prompt import _sqlite_prompt, PROMPT_SUFFIX

from langchain.embeddings.huggingface import HuggingFaceEmbeddings

from langchain.prompts.example_selector.semantic_similarity import SemanticSimilarityExampleSelector

from langchain.vectorstores import Chroma

example_prompt = PromptTemplate(

input_variables=["table_info", "input", "sql_cmd", "sql_result", "answer"],

template="{table_info}\n\nQuestion: {input}\nSQLQuery: {sql_cmd}\nSQLResult: {sql_result}\nAnswer: {answer}",

)

examples_dict = yaml.safe_load(YAML_EXAMPLES)

local_embeddings = HuggingFaceEmbeddings(model_name="sentence-transformers/all-MiniLM-L6-v2")

example_selector = SemanticSimilarityExampleSelector.from_examples(

# This is the list of examples available to select from.

examples_dict,

# This is the embedding class used to produce embeddings which are used to measure semantic similarity.

local_embeddings,

# This is the VectorStore class that is used to store the embeddings and do a similarity search over.

Chroma, # type: ignore

# This is the number of examples to produce and include per prompt

k=min(3, len(examples_dict)),

)

few_shot_prompt = FewShotPromptTemplate(

example_selector=example_selector,

example_prompt=example_prompt,

prefix=_sqlite_prompt + "Here are some examples:",

suffix=PROMPT_SUFFIX,

input_variables=["table_info", "input", "top_k"],

)

Using embedded DuckDB without persistence: data will be transient

现在,使用这个 few shot prompt,模型应该表现得更好,特别是对于与您种子相似的输入。

local_chain = SQLDatabaseChain.from_llm(local_llm, db, prompt=few_shot_prompt, use_query_checker=True, verbose=True, return_intermediate_steps=True)

result = local_chain("How many customers are from Brazil?")

> Entering new SQLDatabaseChain chain...

How many customers are from Brazil?

SQLQuery:SELECT count(*) FROM Customer WHERE Country = "Brazil";

SQLResult: [(5,)]

Answer:[5]

> Finished chain.

result = local_chain("How many customers are not from Brazil?")

> Entering new SQLDatabaseChain chain...

How many customers are not from Brazil?

SQLQuery:SELECT count(*) FROM customer WHERE country NOT IN (SELECT country FROM customer WHERE country = 'Brazil')

SQLResult: [(54,)]

Answer:54 customers are not from Brazil.

> Finished chain.

result = local_chain("How many customers are there in total?")

> Entering new SQLDatabaseChain chain...

How many customers are there in total?

SQLQuery:SELECT count(*) FROM Customer;

SQLResult: [(59,)]

Answer:There are 59 customers in total.

> Finished chain.

18、摘要 summarize

摘要链可以用于对多个文档进行摘要。

一种方法是将多个较小的文档输入,将它们划分为块,并使用 MapReduceDocumentsChain 对其进行操作。

您还可以选择将摘要链设置为 StuffDocumentsChain 或 RefineDocumentsChain。

准备数据

本例从一个长文档中创建多个文档,但是这些文档可以以任何方式获取(这个笔记本的重点是强调在获取文档之后要做什么)。

from langchain import OpenAI, PromptTemplate, LLMChain

from langchain.text_splitter import CharacterTextSplitter

from langchain.chains.mapreduce import MapReduceChain

from langchain.prompts import PromptTemplate

llm = OpenAI(temperature=0)

text_splitter = CharacterTextSplitter()

with open("../../state_of_the_union.txt") as f:

state_of_the_union = f.read()

texts = text_splitter.split_text(state_of_the_union)

from langchain.docstore.document import Document

docs = [Document(page_content=t) for t in texts[:3]]

快速开始

from langchain.chains.summarize import load_summarize_chain

chain = load_summarize_chain(llm, chain_type="map_reduce")

chain.run(docs)

' In response to Russian aggression in Ukraine, the United States and its allies are taking action to hold Putin accountable, including economic sanctions, asset seizures, and military assistance. The US is also providing economic and humanitarian aid to Ukraine, and has passed the American Rescue Plan and the Bipartisan Infrastructure Law to help struggling families and create jobs. The US remains unified and determined to protect Ukraine and the free world.'

如果你想对发生了什么,有更多控制,可以看以下信息。

The stuff Chain

这部分展示,使用 stuff Chain 来做摘要的结果。

chain = load_summarize_chain(llm, chain_type="stuff")

chain.run(docs)

' In his speech, President ... America.'

自定义 Prompts

你也可以在这个chain 中使用你自己的 prompts。本例中,我们将使用 Italian 回复。

prompt_template = """Write a concise summary of the following:

{text}

CONCISE SUMMARY IN ITALIAN:"""

PROMPT = PromptTemplate(template=prompt_template, input_variables=["text"])

chain = load_summarize_chain(llm, chain_type="stuff", prompt=PROMPT)

chain.run(docs)

"\n\nIn questa serata, .... Questo porterà a creare posti"

The map_reduce Chain

这里展示使用 map_reduce Chain 来做摘要。

This sections shows results of using the map_reduce Chain to do summarization.

chain = load_summarize_chain(llm, chain_type="map_reduce")

chain.run(docs)

" In response to Russia's ...infrastructure."

Intermediate Steps

如果我们想检查map_reduce链,我们也可以返回它们的中间步骤。这是通过return_map_steps变量完成的。

chain = load_summarize_chain(

OpenAI(temperature=0),

chain_type="map_reduce",

return_intermediate_steps=True

)

chain({"input_documents": docs}, return_only_outputs=True)

{'map_steps': [" In response ... ill-gotten gains.",

' The United ...Ukrainian-American citizens.',

" President Biden and... support American jobs."],

'output_text': " In response to ...Ukrainian-American citizens."}

Custom Prompts

您还可以使用自己的提示来使用此链。在这个例子中,我们将以意大利语回答。

prompt_template = """Write a concise summary of the following:

{text}

CONCISE SUMMARY IN ITALIAN:"""

PROMPT = PromptTemplate(template=prompt_template, input_variables=["text"])

chain = load_summarize_chain(OpenAI(temperature=0), chain_type="map_reduce", return_intermediate_steps=True, map_prompt=PROMPT, combine_prompt=PROMPT)

chain({"input_documents": docs}, return_only_outputs=True)

{'intermediate_steps': ["\n\nQuesta ... oligarchi russi.",

"\n\nStiamo unendo... per la libertà.",

"\n\nIl Presidente...navigabili in"],

'output_text': "\n\nIl Pre...bertà."}

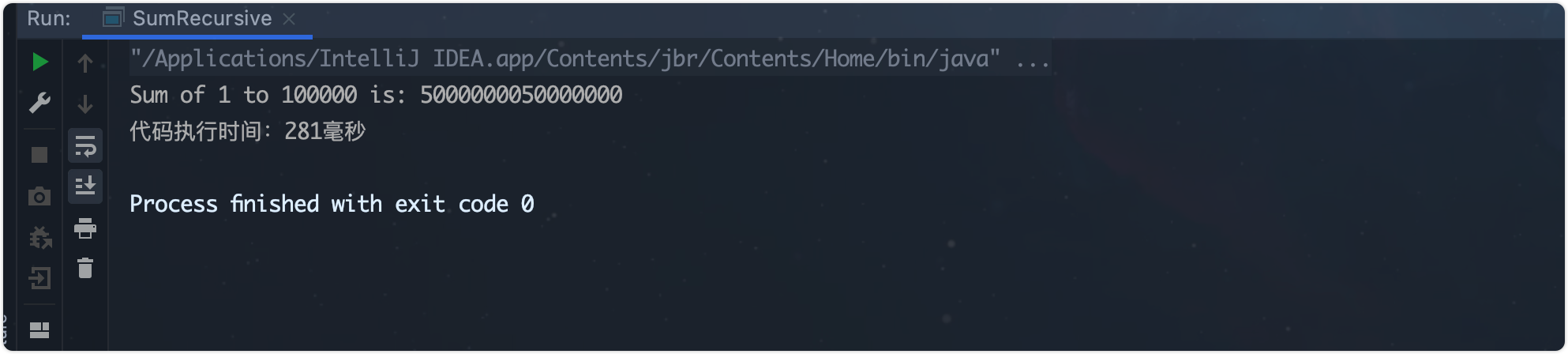

自定义 MapReduceChain

多样化输入提示 (Multi input prompt)

from langchain.chains.combine_documents.map_reduce import MapReduceDocumentsChain

from langchain.chains.combine_documents.stuff import StuffDocumentsChain

map_template_string = """Give the following python code information, generate a description that explains what the code does and also mention the time complexity.

Code:

{code}

Return the the description in the following format:

name of the function: description of the function

"""

reduce_template_string = """Given the following python function names and descriptions, answer the following question

{code_description}

Question: {question}

Answer:

"""

MAP_PROMPT = PromptTemplate(input_variables=["code"], template=map_template_string)

REDUCE_PROMPT = PromptTemplate(input_variables=["code_description", "question"], template=reduce_template_string)

llm = OpenAI()

map_llm_chain = LLMChain(llm=llm, prompt=MAP_PROMPT)

reduce_llm_chain = LLMChain(llm=llm, prompt=REDUCE_PROMPT)

generative_result_reduce_chain = StuffDocumentsChain(

llm_chain=reduce_llm_chain,

document_variable_name="code_description",

)

combine_documents = MapReduceDocumentsChain(

llm_chain=map_llm_chain,

combine_document_chain=generative_result_reduce_chain,

document_variable_name="code",

)

map_reduce = MapReduceChain(

combine_documents_chain=combine_documents,

text_splitter=CharacterTextSplitter(separator="\n##\n ", chunk_size = 100, chunk_overlap = 0),

)

code = """

def bubblesort(list):

for iter_num in range(len(list)-1,0,-1):

for idx in range(iter_num):

if list[idx]>list[idx+1]:

temp = list[idx]

list[idx] = list[idx+1]

list[idx+1] = temp

return list

##

def insertion_sort(InputList):

for i in range(1, len(InputList)):

j = i-1

nxt_element = InputList[i]