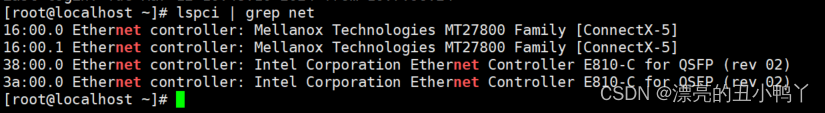

This is a dual-port 100G card built on two controller chips. Each QSFP28 port runs at 100 Gb/s, for 200 Gb/s across both ports.

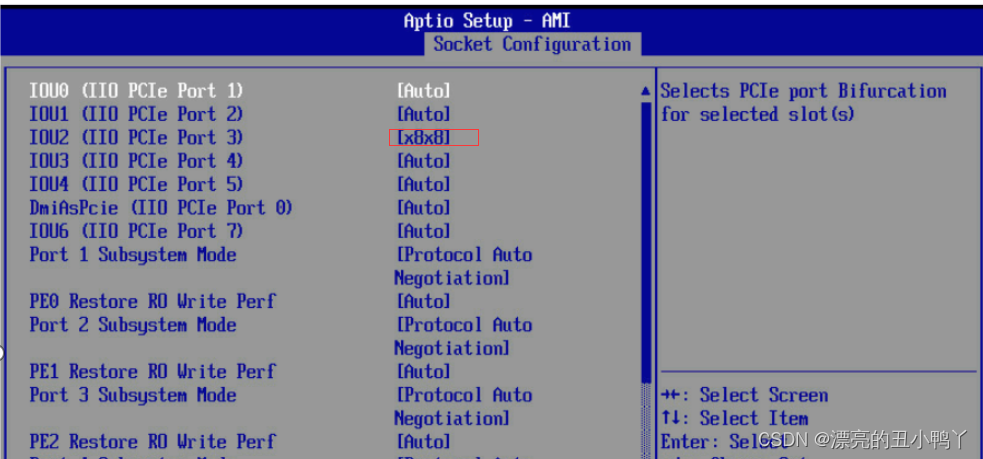

1. PCIe link width setting in the BIOS

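The server BIOS must support setting the PCIe link width of the card's slot to x8x8; otherwise only one network port is visible, as shown below. The E810-2CQDA2 requires this x8x8 setting on its slot.

At the POST screen, press Del to enter the BIOS and set the PCIe port where the card is installed to x8x8. Some server models do not offer this setting, so first confirm that the BIOS supports it and that the card sits in an x16 slot.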

BIOS menu path: Socket Configuration -> IIO Configuration

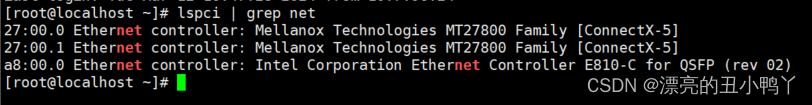

Without the x8x8 link width setting, the OS recognizes only one port.

Set the PCIe slot link width to x8x8.

Save the change and reboot; the OS then recognizes both ports.

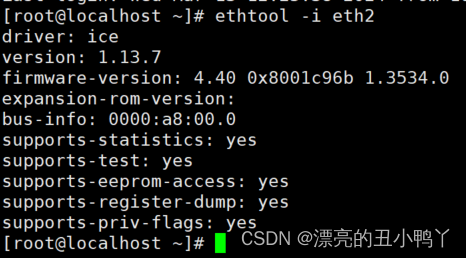
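The x8x8 split can also be confirmed from the OS side without screenshots. The two bash functions below count E810 functions in `lspci` output and pull the negotiated width out of the `LnkSta` line. This is a sketch with a few assumptions:

- Your `pci.ids` database names the device with "E810". The exact description text varies by version.
- `lspci -vv` must run as root; otherwise the capability lines are hidden.
- The PCI address in the usage comment is a placeholder.

```shell
# Count E810 Ethernet functions in `lspci` output read from stdin.
# Assumption: each port appears as one "Ethernet controller" line whose
# description contains "E810".
count_e810_ports() {
    grep -c 'Ethernet controller.*E810' || true
}

# Print the negotiated link width (e.g. "x8") from `lspci -vv` output on stdin.
# Only the LnkSta line is used; LnkCap shows the capability, not the result.
link_width() {
    sed -n 's/.*LnkSta:.*Width \(x[0-9]*\).*/\1/p' | head -n 1
}

# Usage (3b:00.0 is a placeholder address):
#   lspci -d 8086: | count_e810_ports      # expect 2 after setting x8x8
#   lspci -vv -s 3b:00.0 | link_width      # expect x8 on each function
```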

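2. Driver and firmware installation

Install the ice driver first and update the firmware afterwards; otherwise the firmware update reports a failure. Download page:

https://www.intel.com/content/www/us/en/download/15084/intel-ethernet-adapter-complete-driver-pack.html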

cd ice-1.13.7/src/

make install

rmmod ice

modprobe ice

ethtool -i eth0

cd E810/Linux_x64/

./nvmupdate64e

ethtool -i eth0

Check the kernel log: dmesg | grep -w ice
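`ethtool -i` is run before and after `nvmupdate64e`. A small helper can pull out the driver `version` and `firmware-version` fields so the two runs are easy to compare. This is a minimal bash sketch; `eth0` is just the interface name used above. A reboot may be needed before the new NVM version shows up.

```shell
# Print the value of one field (e.g. "version" or "firmware-version")
# from `ethtool -i` output on stdin.
ethtool_field() {
    awk -F': ' -v key="$1" '$1 == key { sub(/^[^:]*: /, ""); print; exit }'
}

# Usage:
#   before=$(ethtool -i eth0 | ethtool_field firmware-version)
#   ./nvmupdate64e
#   after=$(ethtool -i eth0 | ethtool_field firmware-version)
#   echo "firmware: $before -> $after"
```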

3. iperf bandwidth test

A single port reaches about 99 Gb/s, and both ports together about 198 Gb/s.

Installing iperf

1. Download the source package:

2. Extract: tar -zxvf iperf-2.0.9-source.tar.gz

3. cd iperf-2.0.9

4. ./configure && make && make install && cd …

Note: when building netperf on ARM machines, specify the build platform:

./configure --build=arm-linux   # --build= the build platform

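Single-port bandwidth test

Server: iperf -s

Client: iperf -c 10.1.1.1 -w 64k -t 600 -i 1 -P 80

The client options mean:

- -w 64k sets the TCP window size.
- -t 600 runs the test for 600 seconds.
- -i 1 reports every second.
- -P 80 opens 80 parallel streams.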
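With `-P 80`, iperf2 prints a `[SUM]` line for every interval plus a final one covering the whole run, so the aggregate figure is the last `[SUM]` line. The bash sketch below extracts it. It assumes the rate sits in the last two fields, as in iperf 2.0.x output without `-e`; enhanced reports may add columns.

```shell
# Print the aggregate rate (e.g. "99.1 Gbits/sec") from the last "[SUM]" line
# of iperf2 client output on stdin.
iperf_total() {
    awk '$1 == "[SUM]" { last = $(NF-1) " " $NF } END { if (last != "") print last }'
}

# Usage:
#   iperf -c 10.1.1.1 -w 64k -t 600 -i 1 -P 80 | tee iperf.log | iperf_total
```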

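Dual-port bond test (bonding mode 4, 802.3ad)

Configure the bond (ifcfg files under /etc/sysconfig/network-scripts/):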

vim ifcfg-bond4

DEVICE=bond4

BOOTPROTO=static

ONBOOT=yes

TYPE=Bond

USERCTL=no

IPV6INIT=no

PEERDNS=yes

BONDING_MASTER=yes

BONDING_OPTS="mode=4 miimon=100 xmit_hash_policy=layer3+4"

IPADDR=10.10.0.2

NETMASK=255.255.255.0

GATEWAY=10.10.0.1

vim ifcfg-ens25f0

DEVICE=ens25f0

BOOTPROTO=static

MTU=9000

ONBOOT=yes

TYPE=Ethernet

USERCTL=no

IPV6INIT=no

PEERDNS=yes

SLAVE=yes

MASTER=bond4

vim ifcfg-ens25f1

DEVICE=ens25f1

BOOTPROTO=static

MTU=9000

ONBOOT=yes

TYPE=Ethernet

USERCTL=no

IPV6INIT=no

PEERDNS=yes

SLAVE=yes

MASTER=bond4
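The three files above can also be generated with a script, which avoids typos when repeating the setup on the peer server. This bash sketch writes the same keys and values into a directory of your choice, so they can be reviewed before copying them to /etc/sysconfig/network-scripts/. The function name and argument order are hypothetical.

Note that `xmit_hash_policy=layer3+4` hashes each TCP flow onto a single member link. A single stream therefore never exceeds 100 Gb/s, and the bond only approaches 200 Gb/s with many parallel streams such as `-P 80`.

```shell
# Write ifcfg-bond4 and one ifcfg file per slave port into directory $1.
# Arguments: DIR IPADDR GATEWAY SLAVE...
# The keys and values mirror the bond4 / ens25f0 / ens25f1 files above.
write_bond_ifcfg() {
    local dir=$1 ip=$2 gw=$3 nic
    shift 3
    mkdir -p "$dir"
    cat > "$dir/ifcfg-bond4" <<EOF
DEVICE=bond4
BOOTPROTO=static
ONBOOT=yes
TYPE=Bond
USERCTL=no
IPV6INIT=no
PEERDNS=yes
BONDING_MASTER=yes
BONDING_OPTS="mode=4 miimon=100 xmit_hash_policy=layer3+4"
IPADDR=$ip
NETMASK=255.255.255.0
GATEWAY=$gw
EOF
    for nic in "$@"; do
        cat > "$dir/ifcfg-$nic" <<EOF
DEVICE=$nic
BOOTPROTO=static
MTU=9000
ONBOOT=yes
TYPE=Ethernet
USERCTL=no
IPV6INIT=no
PEERDNS=yes
SLAVE=yes
MASTER=bond4
EOF
    done
}

# Usage:
#   write_bond_ifcfg /tmp/ifcfg-review 10.10.0.2 10.10.0.1 ens25f0 ens25f1
```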

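The test commands are the same as for the single port.

After setting up the bond, stop the firewall and restart the network service:

systemctl stop firewalld.service

systemctl restart network.service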
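Once the bond is up, /proc/net/bonding/bond4 shows whether LACP actually negotiated and whether both ports joined. The bash sketch below summarizes that file: the bonding mode line, plus the number of slave interfaces whose `MII Status` is `up`. The file path is a parameter so the check can also run on a saved copy.

```shell
# Summarize a bonding status file (default /proc/net/bonding/bond4):
# print the bonding mode and how many slave interfaces report "MII Status: up".
bond_status() {
    local f=${1:-/proc/net/bonding/bond4}
    awk '
        /^Bonding Mode:/    { mode = substr($0, index($0, ":") + 2) }
        /^Slave Interface:/ { in_slave = 1; next }
        in_slave && /^MII Status:/ { if ($3 == "up") up++; in_slave = 0 }
        END { printf "mode=%s slaves_up=%d\n", mode, up }
    ' "$f"
}

# Usage: bond_status    # expect "mode=IEEE 802.3ad Dynamic link aggregation slaves_up=2"
```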

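Bidirectional test (iperf2 client options):

-r, --tradeoff: run the transmit test first, then the receive test (from the client's point of view)

-d, --dualtest: transmit and receive simultaneously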

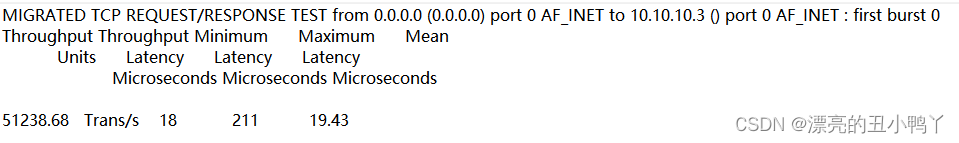

4. netperf latency test

Server: netserver

Client: netperf -H 10.10.10.4 -t TCP_RR -- -d rr -O "THROUGHPUT,THROUGHPUT_UNITS,MIN_LATENCY,MAX_LATENCY,MEAN_LATENCY"
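For TCP_RR, netperf's THROUGHPUT is a transaction rate R (transactions per second). With one transaction in flight, each transaction is one request/response round trip, so the mean round-trip time is roughly 1/R. This gives a quick cross-check of MEAN_LATENCY, which netperf reports in microseconds. A bash sketch of the arithmetic:

```shell
# Approximate mean round-trip latency in microseconds from a TCP_RR
# transaction rate, assuming one outstanding transaction (no burst option).
rr_rate_to_us() {
    awk -v r="$1" 'BEGIN { printf "%.2f\n", 1e6 / r }'
}

# Usage: rr_rate_to_us 40000    # prints 25.00
```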

5. RDMA test

5.1 The driver and firmware were already installed in step 2.

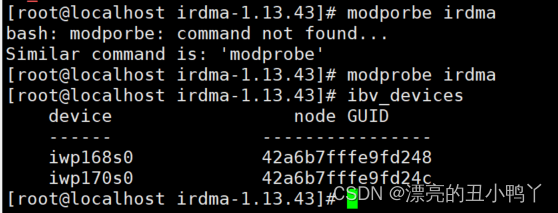

5.2 Install irdma, then rdma-core

Download the irdma package:

https://www.intel.cn/content/www/cn/zh/download/19632/30368/linux-rdma-driver-for-the-e810-and-x722-intel-ethernet-controllers.html

Download rdma-core-46.0.tar.gz.

Install the dependency packages, then extract and build:

yum install -y cmake

yum install -y libudev-devel

yum install -y python3-devel

yum install -y python3-docutils

yum install -y systemd-devel

yum install -y pkgconf-pkg-config

Download the remaining packages (ninja-build, pandoc, python3-Cython) from: https://developer.aliyun.com/packageSearch?word=python3-Cython

rpm -ivh ninja-build-1.8.2-1.el8.x86_64.rpm

rpm -ivh pandoc-2.0.6-5.el8.x86_64.rpm pandoc-common-2.0.6-5.el8.noarch.rpm

rpm -ivh python3-Cython-0.29.2-1.el8.x86_64.rpm

tar zxf irdma-1.12.55.tgz

cd irdma-1.12.55

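./build.sh

./build_core.sh -y && ./install_core.sh

To build against the manually downloaded rdma-core tarball instead, pass its path with -t. The path below is from the test machine; adjust it as needed:

./build_core.sh -t /root/RDMA/Linux/irdma-1.13.43/rdma-core-46.0.tar.gz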

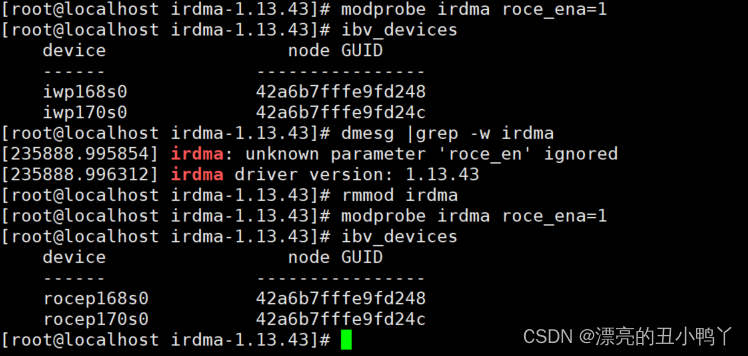

modprobe irdma

ibv_devices

iWARP is the default mode. To switch to RoCEv2, reload irdma with roce_ena=1:

rmmod irdma && modprobe irdma roce_ena=1

To switch back to iWARP, reload it without the parameter:

rmmod irdma && modprobe irdma
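After switching modes, the device names printed by `ibv_devices` give a quick hint of which transport is active. The bash sketch below tags each device accordingly. It assumes the devices are named by rdma-core's udev rename policy, which uses a `roce` prefix for RoCE devices (like `rocep168s0` in this article) and an `iw` prefix for iWARP devices. Names assigned any other way come out as `unknown`.

```shell
# Read `ibv_devices` output on stdin (two header lines, then "name GUID" rows)
# and print each device name with the transport implied by its prefix.
rdma_modes() {
    awk 'NR > 2 && NF >= 2 {
        mode = "unknown"
        if ($1 ~ /^roce/) mode = "RoCE"
        else if ($1 ~ /^iw/) mode = "iWARP"
        print $1, mode
    }'
}

# Usage: ibv_devices | rdma_modes
```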

5.3 Configure the IP address (for example in ifcfg-eth1)

TYPE=Ethernet

DEVICE=eth1

BOOTPROTO=static

IPADDR=10.10.0.1

NETMASK=255.255.255.0

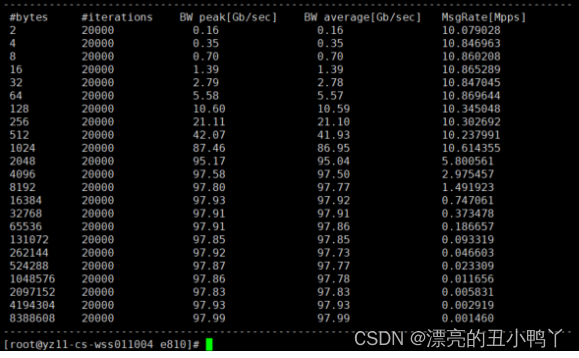

5.4 RDMA testing

Tuning for the bandwidth test

tuned-adm profile network-throughput

systemctl stop irqbalance.service

ifconfig eth1 mtu 9000

cpupower frequency-set -g performance

ulimit -s unlimited

ethtool -A eth1 tx on rx on

cd /root/Desktop/fw/ice-1.13.7/scripts/

./set_irq_affinity -x local eth1
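`cpupower frequency-set -g performance` should leave every core on the performance governor. The bash sketch below lists any CPU that is not, reading the standard cpufreq sysfs files. The sysfs root is a hypothetical convenience parameter so the check can run against a copy of the tree. The function returns non-zero when it finds an offender.

```shell
# List CPUs whose cpufreq scaling governor is not "performance".
# Returns 1 if any were found, 0 otherwise. Default root is the live sysfs tree.
check_governor() {
    local root=${1:-/sys/devices/system/cpu} f cpu gov bad=0
    for f in "$root"/cpu[0-9]*/cpufreq/scaling_governor; do
        [ -r "$f" ] || continue
        gov=$(cat "$f")
        if [ "$gov" != performance ]; then
            cpu=${f#"$root"/}
            echo "${cpu%%/*} $gov"
            bad=1
        fi
    done
    return "$bad"
}

# Usage: check_governor || echo "some CPUs are not on the performance governor"
```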

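Stop the firewall and flush the iptables rules (setenforce 0 also switches SELinux to permissive mode until the next reboot):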

systemctl stop firewalld.service

setenforce 0

iptables -F

iptables -L

Disable SELinux permanently (takes effect after a reboot):

vim /etc/selinux/config

Set SELINUX to disabled:

SELINUX=disabled
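The same edit can be made without opening an editor. This bash sketch rewrites the SELINUX= line with `sed -i` and keeps a .bak copy. The pattern only matches the `SELINUX=` key, so `SELINUXTYPE=` is left alone. As with the manual edit, the change takes effect after a reboot.

```shell
# Set SELINUX=disabled in the given config file (default /etc/selinux/config),
# keeping the original as <file>.bak.
disable_selinux_config() {
    local cfg=${1:-/etc/selinux/config}
    sed -i.bak 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Usage: disable_selinux_config && grep '^SELINUX=' /etc/selinux/config
```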

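Bandwidth test commands. Pick one of ib_read_bw, ib_write_bw, or ib_send_bw; the first line runs on the server and the second on the client: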

ib_read_bw/ib_write_bw/ib_send_bw -F -R -q 4 -s 4096 -d rocep56s0 -a --report_gbits

ib_read_bw/ib_write_bw/ib_send_bw -F -S 1 -R -q 4 -s 4096 -d rocep168s0 10.10.10.3 -a --report_gbits

Latency test commands (same pattern: server first, client second):

ib_read_lat/ib_write_lat/ib_send_lat -s 2 -I 96 -a -d rocep58s0 -p 22341 -F

ib_read_lat/ib_write_lat/ib_send_lat -s 2 -I 96 -a -d rocep168s0 -p 22341 10.10.11.3 -F
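With `-a`, the bandwidth tools print one row per message size, which makes the peak easy to miss in a long log. This bash sketch scans that table and prints the message size with the highest `BW average`. It assumes perftest's usual five-column data rows (`#bytes`, `#iterations`, `BW peak`, `BW average`, `MsgRate`), which are in Gb/sec when `--report_gbits` is given.

```shell
# From ib_*_bw output on stdin, print "<bytes> <BW average>" for the row
# with the highest BW average. Data rows start with a numeric message size.
best_bw() {
    awk 'BEGIN { best = -1 }
         $1 ~ /^[0-9]+$/ && NF >= 5 { if ($4 + 0 > best) { best = $4 + 0; size = $1 } }
         END { if (size != "") print size, best }'
}

# Usage (client side):
#   ib_write_bw -F -S 1 -R -q 4 -s 4096 -d rocep168s0 10.10.10.3 -a --report_gbits | tee bw.log | best_bw
```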

Vendor-recommended tuning settings

Throughput

Driver/FW:

Linux*: ice 1.8.3

irdma: 1.8.45

NVM: 0x8000d846

Adapter Tuning:

systemctl stop irqbalance

scripts/set_irq_affinity -x all {interface}

RDMA Latency:

systemctl stop irqbalance

scripts/set_irq_affinity -x local {interface}

Enable push mode:

mkdir -p /sys/kernel/config/irdma/{rdmadev} && echo 1 > /sys/kernel/config/irdma/{rdmadev}/push_mode

RDMA Bandwidth:

Enable Link Level Flow Control:

ethtool -A {interface} tx on rx on

scripts/set_irq_affinity -x local {interface}

Latency:

tuned-adm profile network-latency

systemctl stop irqbalance

ethtool -L {interface} combined {num of local CPUs}

ethtool -C {interface} adaptive-rx off adaptive-tx off

ethtool -C {interface} rx-usecs 0 tx-usecs 0

scripts/set_irq_affinity -x local {interface}

echo 16384 > /proc/sys/net/core/rps_sock_flow_entries

Enable aRFS:

for file in /sys/class/net/{interface}/queues/rx-*/rps_flow_cnt; do echo {16384/num local cpus} > $file; done
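The vendor list leaves `{num of local CPUs}` and `{16384/num local cpus}` as placeholders. "Local" means the CPUs on the NIC's NUMA node, which the kernel exposes in /sys/class/net/<interface>/device/numa_node and /sys/devices/system/node/node<N>/cpulist.

The bash sketch below counts the CPUs in such a cpulist and applies the vendor's 16384/N formula with integer division. The helper names are hypothetical. `numa_node` can read -1 on systems without NUMA information, in which case the example needs a fallback. The NIC may also allow fewer combined channels than there are local CPUs.

```shell
# Count the CPUs in a Linux cpulist string such as "0-17,36-53".
cpulist_count() {
    local n=0 part IFS=,
    for part in $1; do
        if [[ $part == *-* ]]; then
            n=$(( n + ${part#*-} - ${part%-*} + 1 ))
        else
            n=$(( n + 1 ))
        fi
    done
    echo "$n"
}

# Per-queue rps_flow_cnt value following the vendor formula
# 16384 / (number of local CPUs), using integer division.
rps_flow_per_queue() {
    echo $(( 16384 / $1 ))
}

# Example on a live system (eth1 as in section 5.4; assumes numa_node is not -1):
#   node=$(cat /sys/class/net/eth1/device/numa_node)
#   ncpu=$(cpulist_count "$(cat /sys/devices/system/node/node$node/cpulist)")
#   ethtool -L eth1 combined "$ncpu"
#   for f in /sys/class/net/eth1/queues/rx-*/rps_flow_cnt; do
#       rps_flow_per_queue "$ncpu" > "$f"
#   done
```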

Busy poll disabled:

sysctl -w net.core.busy_poll=0

sysctl -w net.core.busy_read=0

Busy Poll enabled:

sysctl -w net.core.busy_poll=50

sysctl -w net.core.busy_read=50

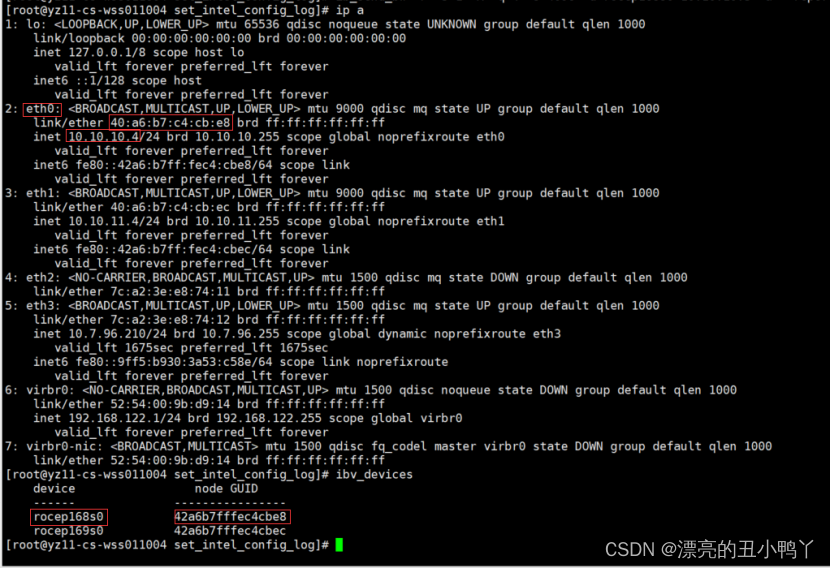

Interface mapping (netdev -> IP -> RDMA device -> node GUID):

eth0 -> 10.10.10.4 -> rocep168s0 -> 42a6b7fffec4cbe8
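The node GUID in this mapping appears to be derived from the port's MAC address in EUI-64 style:

1. Flip the universal/local bit (0x02) of the first octet.
2. Insert `ff:fe` between the third and fourth octets.

Applied in reverse, 42a6b7fffec4cbe8 corresponds to MAC 40:a6:b7:c4:cb:e8. This MAC is inferred here and not stated in the original. The bash sketch below does the forward conversion, which helps match `ip link` MACs to `ibv_devices` GUIDs. On systems with iproute2's `rdma` tool, `rdma link show` also prints the netdev for each RDMA device directly.

```shell
# Convert a MAC address (aa:bb:cc:dd:ee:ff) to an EUI-64 style node GUID:
# flip bit 0x02 of the first octet and insert "fffe" in the middle.
mac_to_guid() {
    local IFS=: b
    read -r -a b <<< "${1,,}"
    printf '%02x%s%s%s%s%s%s\n' $(( 0x${b[0]} ^ 0x02 )) \
        "${b[1]}" "${b[2]}" fffe "${b[3]}" "${b[4]}" "${b[5]}"
}

# Usage: mac_to_guid 40:a6:b7:c4:cb:e8    # prints 42a6b7fffec4cbe8
```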
