Contents

- Introduction

- The KnowAgent Approach

- Preliminaries

- Definition of Action Knowledge

- Planning Path Generation with Action Knowledge

- Planning Path Refinement via Knowledgeable Self-Learning

- Experimental Results

- Summary

- References

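Introduction

"KnowAgent: Knowledge-Augmented Planning for LLM-Based Agents" is a paper published in March 2024.

The problem it addresses is planning hallucination: when an LLM interacts with an environment and generates actions to execute, it may produce unnecessary or conflicting action sequences, such as "attempting to look up information without performing a search operation" or "trying to pick an apple from a table without verifying the presence of both the table and the apple".

The figure below gives an overview of KnowAgent.

The KnowAgent framework is shown in the figure below; the animation after it (from the paper's GitHub repository) illustrates the steps of the framework.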

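The KnowAgent Approach

Preliminaries

KnowAgent is trained and evaluated on top of the planning trajectory format proposed by ReAct.

Formally, a ReAct trajectory $\tau$ is a sequence of Thought-Action-Observation triples $(\mathcal{T}, \mathcal{A}, \mathcal{O})$, where $\mathcal{T}$ is the agent's thought, $\mathcal{A}$ is an executable action, and $\mathcal{O}$ is the feedback obtained from the environment.

The history trajectory at time $t$ is: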

$$
\mathcal{H}_t = (\mathcal{T}_0, \mathcal{A}_0, \mathcal{O}_0, \mathcal{T}_1, \cdots, \mathcal{T}_{t-1}, \mathcal{A}_{t-1}, \mathcal{O}_{t-1})
$$

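Based on the history trajectory, the agent generates a new thought and a new action. For an agent $\pi$ with parameters $\theta$, generating the thought $\mathcal{T}_t$ from the history trajectory can be written as follows, where $\mathcal{T}_t^i$ and $|\mathcal{T}_t|$ are the $i$-th token of $\mathcal{T}_t$ and its length, respectively: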

$$
p\left(\mathcal{T}_t \mid \mathcal{H}_t\right)=\prod_{i=1}^{\left|\mathcal{T}_t\right|} \pi_\theta\left(\mathcal{T}_t^i \mid \mathcal{H}_t, \mathcal{T}_t^{<i}\right)
$$

Next, the action $\mathcal{A}_t$ is determined by $\mathcal{T}_t$ and $\mathcal{H}_t$, where $\mathcal{A}_t^j$ and $|\mathcal{A}_t|$ are the $j$-th token of $\mathcal{A}_t$ and its length, respectively:

$$
p\left(\mathcal{A}_t \mid \mathcal{H}_t, \mathcal{T}_t\right)=\prod_{j=1}^{\left|\mathcal{A}_t\right|} \pi_\theta\left(\mathcal{A}_t^j \mid \mathcal{H}_t, \mathcal{T}_t, \mathcal{A}_t^{<j}\right)
$$

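Finally, the feedback returned for the action $\mathcal{A}_t$ is treated as the observation $\mathcal{O}_t$ and appended to the trajectory, yielding $\mathcal{H}_{t+1}$.

Note: later in the paper the action set is written $E_a$, and its actions $a_i$ denote the same thing as $\mathcal{A}_i$.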
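The Thought-Action-Observation loop described above can be sketched in a few lines of Python. This is only an illustration of how the history $\mathcal{H}_t$ grows step by step. The names `react_rollout`, `think`, `act`, and `observe`, as well as the toy stand-ins for the model and the environment, are hypothetical and do not come from the paper or its code.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, str, str]  # (thought T_t, action A_t, observation O_t)


def react_rollout(
    think: Callable[[List[Step]], str],
    act: Callable[[List[Step], str], str],
    observe: Callable[[str], str],
    max_steps: int = 5,
) -> List[Step]:
    """Build the history H_t step by step in the ReAct format."""
    history: List[Step] = []
    for _ in range(max_steps):
        thought = think(history)        # T_t depends on H_t
        action = act(history, thought)  # A_t depends on H_t and T_t
        observation = observe(action)   # O_t is the environment feedback
        history.append((thought, action, observation))  # this gives H_{t+1}
        if action.startswith("Finish"):
            break
    return history


# Toy stand-ins for the model and the environment (purely illustrative).
def toy_think(history: List[Step]) -> str:
    return "I should search first." if not history else "I can answer now."


def toy_act(history: List[Step], thought: str) -> str:
    return "Search[KnowAgent]" if not history else "Finish[done]"


def toy_observe(action: str) -> str:
    return f"feedback for {action}"


trajectory = react_rollout(toy_think, toy_act, toy_observe)
```

In a real agent, `think` and `act` would both be calls to the same language model $\pi_\theta$, and `observe` would query the task environment.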

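Definition of Action Knowledge

Action: $E_a = \{a_1, \cdots, a_{N-1}\}$ is the action set, i.e. the discrete actions the LLM needs to execute to complete a specific task.

Action Rules: $\mathcal{R} = \{r_1, \cdots, r_{N-1}\}$ defines the logic and order of action transitions. Each rule $r_k: a_i \rightarrow a_j$ specifies a feasible transition from one action to another.

Action Knowledge: written $(E_a, \mathcal{R})$, it consists of the action set $E_a$ and the rule set $\mathcal{R}$. The action knowledge of different tasks together forms the Action Knowledge Base (Action KB).

Strategy for extracting action knowledge: building it entirely by hand is time-consuming and laborious, so GPT-4 is used for the initial construction, followed by manual correction.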
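One way to picture the pair $(E_a, \mathcal{R})$ is as a small data structure: a set of action names plus a set of allowed transitions. The sketch below is an assumption about how this could be represented, not the paper's implementation. The class `ActionKnowledge`, the toy task `toy_qa`, and its rules are made up for illustration.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple


@dataclass(frozen=True)
class ActionKnowledge:
    """Action knowledge (E_a, R): an action set plus allowed transitions."""

    actions: FrozenSet[str]            # E_a
    rules: FrozenSet[Tuple[str, str]]  # R, each rule r_k: a_i -> a_j

    def allows(self, src: str, dst: str) -> bool:
        """Return True if the transition src -> dst is covered by a rule."""
        return (src, dst) in self.rules


# Hypothetical toy task with three actions.
toy = ActionKnowledge(
    actions=frozenset({"Search", "Lookup", "Finish"}),
    rules=frozenset({
        ("Search", "Search"),
        ("Search", "Lookup"),
        ("Search", "Finish"),
        ("Lookup", "Finish"),
    }),
)

# An Action KB maps each task name to its own action knowledge.
action_kb = {"toy_qa": toy}
```

Storing rules as `(source, target)` pairs mirrors the notation $r_k: a_i \rightarrow a_j$ directly and makes a transition check a single set lookup.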

Planning Path Generation with Action Knowledge

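The first step in using action knowledge for planning path generation is converting it to text (Action Knowledge to Text). For example, Search: (Search, Retrieve, Lookup, Finish) means that the action following Search can be any of Search, Retrieve, Lookup, or Finish.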
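As a minimal sketch of this conversion, the function below renders a mapping from each action to its allowed successors as one line of text per action. The function name `action_knowledge_to_text` is hypothetical. The rules themselves are copied from the HotpotQA prompt (Table 4) quoted later in this article.

```python
from typing import Dict, List


def action_knowledge_to_text(rules: Dict[str, List[str]]) -> str:
    """Render each action and its allowed successors as one line of text."""
    lines = []
    for action, successors in rules.items():
        lines.append(f"{action}:({', '.join(successors)})")
    return "\n".join(lines)


# Transition rules as they appear in the HotpotQA prompt (Table 4).
hotpotqa_rules = {
    "Start": ["Search", "Retrieve"],
    "Retrieve": ["Retrieve", "Search", "Lookup", "Finish"],
    "Search": ["Search", "Retrieve", "Lookup", "Finish"],
    "Lookup": ["Lookup", "Search", "Retrieve", "Finish"],
    "Finish": [],
}

text = action_knowledge_to_text(hotpotqa_rules)
print(text)
```

The printed lines have the same `Node:(successors)` shape as the graph section of the prompt, so the text can be pasted into a prompt template as-is.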

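The second step is path generation guided by action knowledge (Path Generation). The authors design a prompt, shown on the right side of the figure above, that consists of four parts:

- An overview of the action knowledge base, which defines the basic concepts and rules

- A definition of each action, covering its meaning and operational details

- The principles of planning path generation, which constrain the output process

- Several examples of actual planning paths

An example of the prompt used on HotpotQA (Table 4):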

"""

Your task is to answer a question using a specific graph-based method. You must navigate from the "Start" node to the "Finish" node by following the paths outlined in the graph. The correct path is a series of actions that will lead you to the answer.

The decision graph is constructed upon a set of principles known as "Action Knowledge", outlined as follows:

Start:(Search, Retrieve)

Retrieve:(Retrieve, Search, Lookup, Finish)

Search:(Search, Retrieve, Lookup, Finish)

Lookup:(Lookup, Search, Retrieve, Finish)

Finish:()

Here's how to interpret the graph's Action Knowledge:

From "Start", you can initiate with either a "Search" or a "Retrieve" action.

At the "Retrieve" node, you have the options to persist with "Retrieve", shift to "Search", experiment with "Lookup", or advance to "Finish".

At the "Search" node, you can repeat "Search", switch to "Retrieve" or "Lookup", or proceed to "Finish".

At the "Lookup" node, you have the choice to keep using "Lookup", switch to "Search" or "Retrieve", or complete the task by going to "Finish".

The "Finish" node is the final action where you provide the answer and the task is completed. Each node action is defined as follows:

(1) Retrieve[entity]: Retrieve the exact entity on Wikipedia and return the first paragraph if it exists. If not, return some similar entities for searching.

(2) Search[topic]: Use Bing Search to find relevant information on a specified topic, question, or term.

(3) Lookup[keyword]: Return the next sentence that contains the keyword in the last passage successfully found by Search or Retrieve.

(4) Finish[answer]: Return the answer and conclude the task.

As you solve the question using the above graph structure, interleave ActionPath, Thought, Action, and Observation steps. ActionPath documents the sequence of nodes you have traversed within the graph. Thought analyzes the current node to reveal potential next steps and reasons for the current situation.

You may take as many steps as necessary.

Here are some examples:

{examples}

(END OF EXAMPLES)

Question: {question}{scratchpad}

"""
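The graph section of this prompt is regular enough to be read back into a data structure, which makes it possible to check whether a proposed ActionPath really runs from "Start" to "Finish" along allowed edges. The following is a sketch under that assumption. `parse_graph` and `is_valid_path` are hypothetical helpers, not part of the KnowAgent code.

```python
import re
from typing import Dict, List

# The five graph lines, copied from the prompt above.
GRAPH_TEXT = """
Start:(Search, Retrieve)
Retrieve:(Retrieve, Search, Lookup, Finish)
Search:(Search, Retrieve, Lookup, Finish)
Lookup:(Lookup, Search, Retrieve, Finish)
Finish:()
"""


def parse_graph(text: str) -> Dict[str, List[str]]:
    """Parse 'Node:(A, B, ...)' lines into an adjacency mapping."""
    graph: Dict[str, List[str]] = {}
    for match in re.finditer(r"^(\w+):\((.*?)\)\s*$", text, flags=re.MULTILINE):
        node, successors = match.group(1), match.group(2)
        graph[node] = [s.strip() for s in successors.split(",") if s.strip()]
    return graph


def is_valid_path(graph: Dict[str, List[str]], path: List[str]) -> bool:
    """Check that the path goes from Start to Finish along graph edges."""
    if not path or path[0] != "Start" or path[-1] != "Finish":
        return False
    return all(dst in graph.get(src, []) for src, dst in zip(path, path[1:]))


graph = parse_graph(GRAPH_TEXT)
ok = is_valid_path(graph, ["Start", "Search", "Lookup", "Finish"])
bad = is_valid_path(graph, ["Start", "Lookup", "Finish"])  # Start -> Lookup is not an edge
```

Here `ok` is `True` and `bad` is `False`, because the graph only allows "Search" or "Retrieve" directly after "Start".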

An example of the prompt the paper uses for the Pick task on ALFWorld (Table 5):

"""

Interact with a household to solve a task by following the structured "Action Knowledge". The guidelines are:

Goto(receptacle) -> Open(receptacle)

[Goto(receptacle), Open(receptacle)] -> Take(object, from: receptacle)

Take(object, from: receptacle) -> Goto(receptacle)

[Goto(receptacle), Take(object, from: receptacle)] -> Put(object, in/on: receptacle)

Here's how to interpret the Action Knowledge:

Before you open a receptacle, you must first go to it. This rule applies when the receptacle is closed. To take an object from a receptacle, you either need to be at the receptacle's location, or if it's closed, you need to open it first.

Before you go to the new receptacle where the object is to be placed, you should take it. Putting an object in or on a receptacle can follow either going to the location of the receptacle or after taking an object with you.

The actions are as follows:

1) go to receptacle

2) take object from receptacle

3) put object in/on receptacle

4) open receptacle

As you tackle the question with Action Knowledge, utilize both the ActionPath and Think steps. ActionPath records the series of actions you've taken, and the Think step understands the current situation and guides your next moves.

Here are two examples.

{examples}

Here is the task.

"""
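The four guidelines in this prompt can be read as "which action must come directly before which". The sketch below encodes that reading and reports the first step of a plan that breaks it. It is a simplification under stated assumptions: receptacle and object arguments are ignored, "Goto" is treated as unconstrained, and `REQUIRED_PREDECESSORS` and `first_violation` are hypothetical names.

```python
from typing import Dict, List, Optional, Set

# Required direct predecessors, read off the four rules in the prompt above.
# Assumption: "Goto" is left unconstrained, because the prompt only states
# that Take may be followed by Goto and does not restrict other moves.
REQUIRED_PREDECESSORS: Dict[str, Set[str]] = {
    "Open": {"Goto"},
    "Take": {"Goto", "Open"},
    "Put": {"Goto", "Take"},
}


def first_violation(actions: List[str]) -> Optional[int]:
    """Return the index of the first action that breaks a rule, or None."""
    previous: Optional[str] = None
    for index, action in enumerate(actions):
        required = REQUIRED_PREDECESSORS.get(action)
        if required is not None and previous not in required:
            return index
        previous = action
    return None


good_plan = ["Goto", "Open", "Take", "Goto", "Put"]
bad_plan = ["Goto", "Take", "Open"]  # Open must directly follow Goto

good_result = first_violation(good_plan)
bad_result = first_violation(bad_plan)
```

For `good_plan` the function returns `None`. For `bad_plan` it returns `2`, the index of the misplaced "Open".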

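The difference between a path and a trajectory is as follows:

- A path is the sequence of actions taken by the agent.

- A trajectory is the model's complete output while solving the problem, so the path is part of the trajectory.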
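To make the distinction concrete, the short sketch below stores a trajectory as a list of steps and derives the path from it by keeping only the action names. The dictionary layout, the example contents, and the helper `extract_path` are illustrative assumptions.

```python
from typing import Dict, List

# A trajectory keeps everything the model produced, plus the observations.
trajectory: List[Dict[str, str]] = [
    {"thought": "I need background first.", "action": "Search[KnowAgent]", "observation": "a passage"},
    {"thought": "Find the key sentence.", "action": "Lookup[planning]", "observation": "a sentence"},
    {"thought": "I have the answer.", "action": "Finish[answer]", "observation": ""},
]


def extract_path(steps: List[Dict[str, str]]) -> List[str]:
    """Keep only the action names, e.g. 'Search[x]' becomes 'Search'."""
    return [step["action"].split("[", 1)[0] for step in steps]


path = extract_path(trajectory)
```

Here `path` is `["Search", "Lookup", "Finish"]`, while `trajectory` still holds the thoughts and observations around each action.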

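Using the same formal notation as in the Preliminaries, a KnowAgent trajectory $\tau$ is a sequence of quadruples $(\mathcal{P}, \mathcal{T}, \mathcal{A}, \mathcal{O})$, where $\mathcal{P}$ is the action path, $\mathcal{T}$ is the agent's thought, $\mathcal{A}$ is an executable action, and $\mathcal{O}$ is the feedback obtained from the environment. The history trajectory at time $t$ is: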

$$
\mathcal{H}_t = (\mathcal{P}_0, \mathcal{T}_0, \mathcal{A}_0, \mathcal{O}_0, \cdots, \mathcal{P}_{t-1}, \mathcal{T}_{t-1}, \mathcal{A}_{t-1}, \mathcal{O}_{t-1})
$$

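Based on the history trajectory, the agent generates a new action path, thought, and action. For an agent $\pi$ with parameters $\theta$, generating the next action path $\mathcal{P}_t$ from the history trajectory can be written as follows, where $\mathcal{P}_t^k$ and $|\mathcal{P}_t|$ are the $k$-th token of $\mathcal{P}_t$ and its length, respectively: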

$$
p\left(\mathcal{P}_t \mid \mathcal{H}_t\right)=\prod_{k=1}^{\left|\mathcal{P}_t\right|} \pi_\theta\left(\mathcal{P}_t^k \mid \mathcal{H}_t, \mathcal{P}_t^{<k}\right)
$$

As in the ReAct formulation from the Preliminaries, the subsequent thought and action are generated as:

$$
\begin{aligned}
p\left(\mathcal{T}_t \mid \mathcal{H}_t, \mathcal{P}_t\right) &= \prod_{i=1}^{\left|\mathcal{T}_t\right|} \pi_\theta\left(\mathcal{T}_t^i \mid \mathcal{H}_t, \mathcal{P}_t, \mathcal{T}_t^{<i}\right) \\
p\left(\mathcal{A}_t \mid \mathcal{H}_t, \mathcal{P}_t, \mathcal{T}_t\right) &= \prod_{j=1}^{\left|\mathcal{A}_t\right|} \pi_\theta\left(\mathcal{A}_t^j \mid \mathcal{H}_t, \mathcal{P}_t, \mathcal{T}_t, \mathcal{A}_t^{<j}\right)
\end{aligned}
$$
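These formulas are plain autoregressive factorizations: each segment's probability is the product of its token probabilities, and by the chain rule the probability of the whole step is the product of the three segments. A small numeric sketch, with made-up token probabilities and a hypothetical helper `sequence_log_prob`, shows the bookkeeping in log space:

```python
import math
from typing import List


def sequence_log_prob(token_probs: List[float]) -> float:
    """Log of the product of per-token probabilities for one segment."""
    return sum(math.log(p) for p in token_probs)


# Made-up per-token probabilities for one step t (illustrative numbers only).
path_probs = [0.9, 0.8]     # tokens of P_t given H_t
thought_probs = [0.5, 0.5]  # tokens of T_t given H_t and P_t
action_probs = [0.25]       # tokens of A_t given H_t, P_t and T_t

# Chain rule: p(P_t, T_t, A_t | H_t) = p(P_t | H_t) p(T_t | H_t, P_t) p(A_t | H_t, P_t, T_t)
step_log_prob = (
    sequence_log_prob(path_probs)
    + sequence_log_prob(thought_probs)
    + sequence_log_prob(action_probs)
)
step_prob = math.exp(step_log_prob)  # 0.72 * 0.25 * 0.25 = 0.045
```

Summing logarithms instead of multiplying probabilities is the usual way to avoid numerical underflow on long token sequences.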

Planning Path Refinement via Knowledgeable Self-Learning

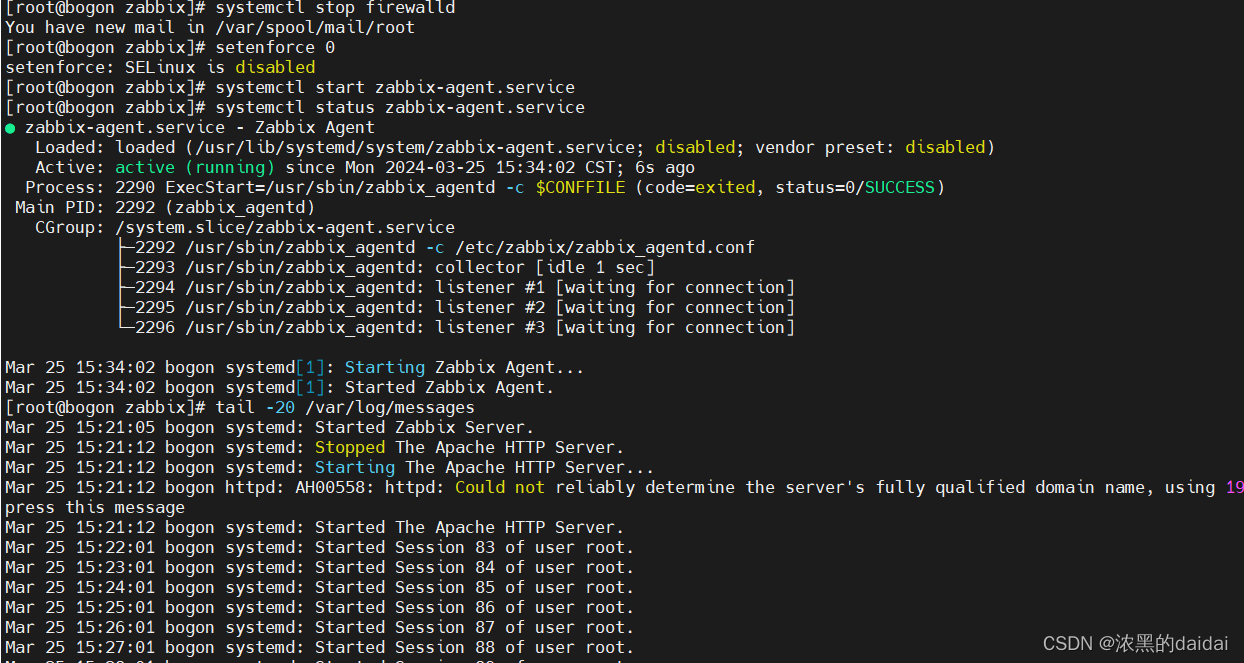

The goal of Knowledgeable Self-Learning is to make the model understand action knowledge better through iterative fine-tuning. The algorithm is shown in the figure below:

Concretely, the procedure is:

- The inputs are the initial training set $D_0$, the action knowledge $AK_m$, and the untrained model $M_0$. The model generates an initial set of trajectories $T_0=\{\tau_1, \tau_2, \cdots, \tau_n\}$; after filtering, $T_0$ is used to fine-tune $M_0$, yielding $M_1$.

- $M_1$ is evaluated on $D_0$ to produce a new set of trajectories $T_1=\{\tau_1^{\prime}, \tau_2^{\prime}, \cdots, \tau_n^{\prime}\}$. $T_1$ and $T_0$ are filtered and merged, and the result is used to fine-tune $M_1$, yielding $M_2$.

- The second step is repeated until the improvement on the test set $D_{test}$ becomes very small.
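The three steps above can be sketched as a generic loop. This is an outline under stated assumptions rather than the paper's algorithm: the model, trajectory generation, filtering, merging, fine-tuning, and evaluation are all passed in as hypothetical callables, and the stopping threshold `min_gain` and the cap `max_rounds` are invented parameters.

```python
from typing import Callable, Dict, List

Trajectories = Dict[str, List[str]]  # task id -> action path of one trajectory


def self_learning(
    model: object,
    generate: Callable[[object], Trajectories],
    keep_valid: Callable[[Trajectories], Trajectories],
    merge: Callable[[Trajectories, Trajectories], Trajectories],
    finetune: Callable[[object, Trajectories], object],
    evaluate: Callable[[object], float],
    min_gain: float = 0.01,
    max_rounds: int = 10,
) -> object:
    """Iterate generate -> filter -> merge -> fine-tune until gains stall."""
    pool: Trajectories = {}
    best = evaluate(model)
    for _ in range(max_rounds):
        fresh = keep_valid(generate(model))  # filtered trajectories of this round
        pool = merge(pool, fresh)            # combine with earlier rounds
        model = finetune(model, pool)        # M_i becomes M_{i+1}
        score = evaluate(model)
        if score - best < min_gain:          # improvement has become small
            break
        best = score
    return model


# Toy run: the "model" is just an integer and its score saturates at 1.0.
final_model = self_learning(
    model=0,
    generate=lambda m: {f"task{m}": ["Search", "Finish"]},
    keep_valid=lambda t: t,
    merge=lambda old, new: {**old, **new},
    finetune=lambda m, pool: m + 1,
    evaluate=lambda m: min(m, 2) / 2,
)
```

In the toy run the score rises from 0.0 to 0.5 to 1.0 and then stops improving, so the loop ends after the third fine-tuning round and `final_model` is `3`.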

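The two operations are defined as follows:

- Filtering: first select the correct trajectories $T_{correct}$ based on the generated results, then use the action knowledge base $AK_m$ to discard trajectories that are not aligned with it, mainly those containing invalid actions or misordered action sequences.

  - An invalid action is one that does not conform to the action rules.

  - A misordered sequence is one whose logical order of actions differs from the action knowledge base.

- Merging: merge the trajectories from different iterations; when several trajectories solve the same task, keep the more efficient (shorter) one.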
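Filtering and merging can be illustrated on trajectories reduced to their action paths. The sketch below is one possible reading of the two operations: an unknown action name counts as invalid, and a transition not listed in the rules counts as misordered. The `Trajectory` type, the helper names, and the example data are hypothetical. The rules are the HotpotQA ones from Table 4.

```python
from typing import Dict, List, NamedTuple


class Trajectory(NamedTuple):
    task: str
    path: List[str]  # action names only
    correct: bool    # whether the final result was right


# HotpotQA transition rules from the prompt in Table 4.
RULES: Dict[str, List[str]] = {
    "Start": ["Search", "Retrieve"],
    "Retrieve": ["Retrieve", "Search", "Lookup", "Finish"],
    "Search": ["Search", "Retrieve", "Lookup", "Finish"],
    "Lookup": ["Lookup", "Search", "Retrieve", "Finish"],
    "Finish": [],
}


def follows_rules(path: List[str]) -> bool:
    """Reject unknown (invalid) actions and disallowed (misordered) transitions."""
    previous = "Start"
    for action in path:
        if action not in RULES or action not in RULES[previous]:
            return False
        previous = action
    return True


def filter_trajectories(trajs: List[Trajectory]) -> List[Trajectory]:
    """Keep correct trajectories whose paths agree with the action knowledge."""
    return [t for t in trajs if t.correct and follows_rules(t.path)]


def merge_trajectories(old: List[Trajectory], new: List[Trajectory]) -> Dict[str, Trajectory]:
    """Per task, keep the shortest trajectory seen in any round."""
    best: Dict[str, Trajectory] = {}
    for t in old + new:
        if t.task not in best or len(t.path) < len(best[t.task].path):
            best[t.task] = t
    return best


round0 = [
    Trajectory("q1", ["Search", "Search", "Lookup", "Finish"], True),
    Trajectory("q2", ["Lookup", "Finish"], True),   # misordered: Start -> Lookup
    Trajectory("q3", ["Search", "Finish"], False),  # wrong final result
]
round1 = [Trajectory("q1", ["Search", "Finish"], True)]

merged = merge_trajectories(filter_trajectories(round0), filter_trajectories(round1))
```

After merging, only task `q1` remains, and its kept path is the shorter `["Search", "Finish"]` from the second round.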

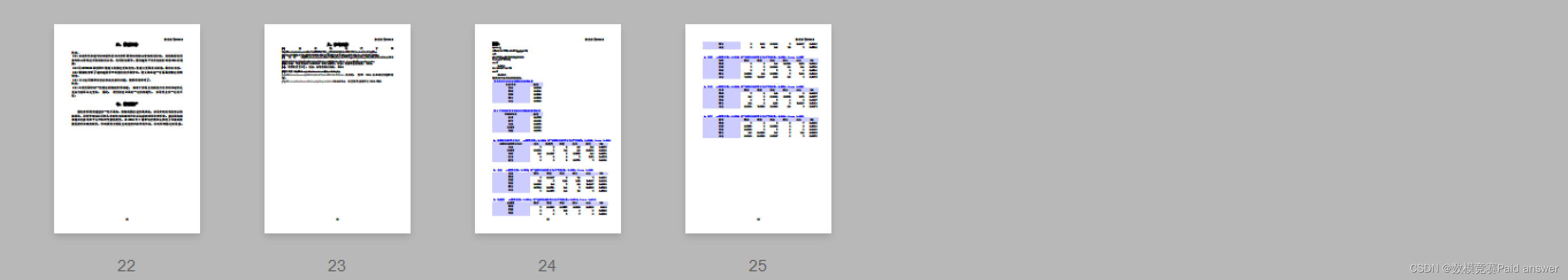

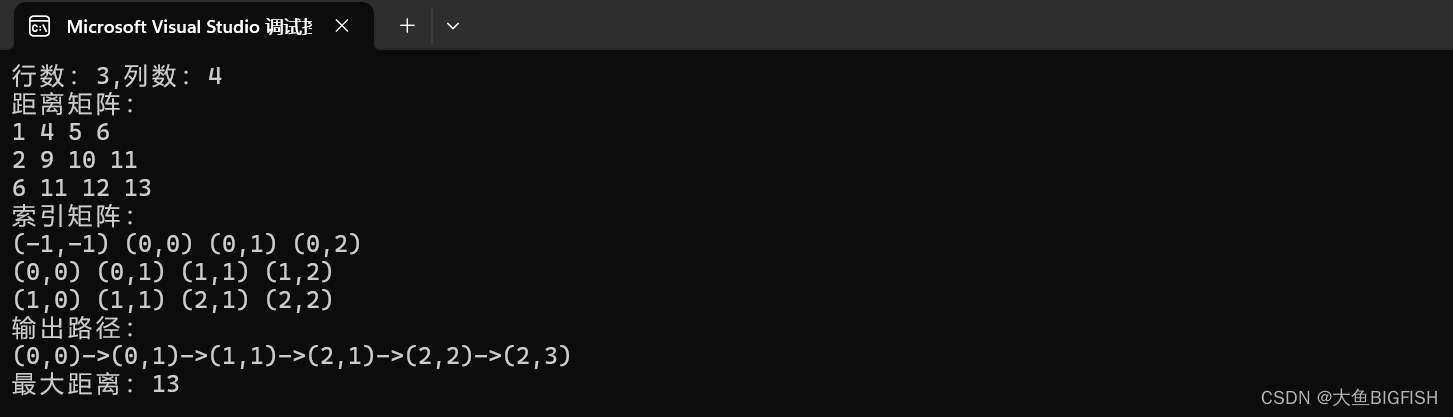

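Experimental Results

KnowAgent is evaluated on HotpotQA and ALFWorld, with Llama2-{7,13,70}b-chat as the base models; Vicuna and Mistral are tested as well.

The baselines compared against KnowAgent are CoT, ReAct, Reflexion, and FireAct. The results are shown in the figure below.

Error analysis: KnowAgent struggles to distill key information effectively and often fails to give an accurate answer. The main cause is insufficient reasoning and memory capability when handling long context windows.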

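Summary

KnowAgent extends ReAct with the concept of a path and builds an action knowledge base that guides the agent to plan tasks better, reducing planning hallucination. In my view, introducing an action knowledge base is similar in spirit to how RAG reduces hallucination, and KnowAgent's elaborate prompt is also a part of the method that should not be overlooked.

The other important idea in KnowAgent is self-learning: the model is fine-tuned iteratively so that it understands action knowledge better.

The formulas in the paper mainly describe the generation process, so it does not matter if they are not fully understood. Personally, I think the approach would be just as complete without them.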

References

- Zhu, Yuqi, Shuofei Qiao, Yixin Ou, Shumin Deng, Ningyu Zhang, Shiwei Lyu, Yue Shen, et al. 2024. "KnowAgent: Knowledge-Augmented Planning for LLM-Based Agents."

- KnowAgent GitHub repository
