目录

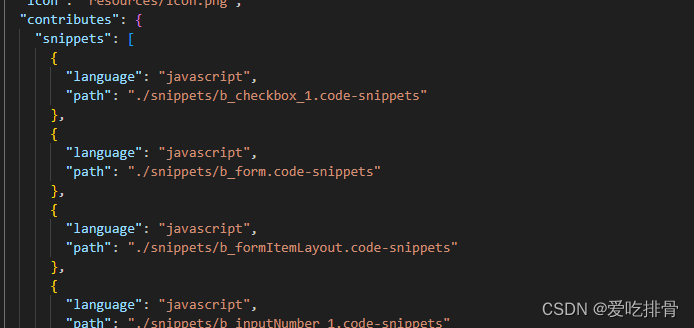

1.安装partainer

2.安装myql

3.安装redis

4.安装Minio

5.安装zibkin

6.安装nacos

7.安装RabbitMq

8.安装RocketMq

8.1启动service

8.2修改对应配置

8.3启动broker

8.4启动控制台

9.安装sentinel

10.安装elasticsearch

11.安装Kibana

12.安装logstash/filebeat

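Prerequisite: Docker must already be installed (see the CSDN blog post on installing Docker and Docker Compose).

The host needs at least 8 GB of memory.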
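The memory requirement can be checked before any container is started. The sketch below assumes a Linux host that exposes `/proc/meminfo`; the helper name `mem_total_gib` and its optional file argument are illustrative and not part of the original guide.

```shell
# Print total memory in GiB, rounded to the nearest integer.
# Hypothetical helper; the optional argument (a meminfo-style file) exists to ease testing.
mem_total_gib() {
    meminfo_file="${1:-/proc/meminfo}"
    # MemTotal is reported in kB; 1048576 kB = 1 GiB. Adding 0.5 rounds to nearest.
    awk '/^MemTotal:/ { printf "%d\n", ($2 / 1048576) + 0.5 }' "$meminfo_file"
}

# Example check against the 8 GB minimum:
# [ "$(mem_total_gib)" -ge 8 ] || echo "warning: less than 8 GB of memory" >&2
```

Rounding is used because a machine sold as 8 GB typically reports slightly less than 8 GiB in `MemTotal`.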

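Pitfall 1: network errors and similar startup failures.

Remove the container that failed to start, restart Docker, and run the container again:

sudo systemctl restart docker

1. Install Portainer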

docker run -d \

-p 8000:8000 -p 9000:9000 \

--name portainer \

--restart=always \

-v /var/run/docker.sock:/var/run/docker.sock \

-v portainer_data:/data \

portainer/portainer

After startup, open ip:9000 in a browser.

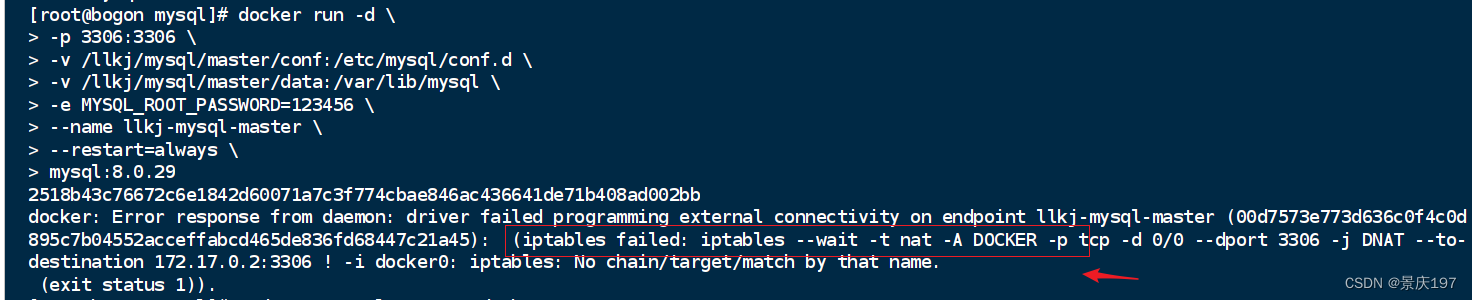

2. Install MySQL

docker run -d \

-p 3306:3306 \

-v /llkj/mysql/master/conf:/etc/mysql/conf.d \

-v /llkj/mysql/master/data:/var/lib/mysql \

-e MYSQL_ROOT_PASSWORD=123456 \

--name llkj-mysql-master \

--restart=always \

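mysql:8.0.29

Edit the configuration file:

# Open the configuration file

vim /llkj/mysql/master/conf/my.cnf

# Configuration file contents

[mysqld]

# Unique server ID, default 1

server-id=1

# Binary log format, default ROW

binlog_format=STATEMENT

# Binary log base name, default binlog

# log-bin=binlog

# Databases to replicate; all databases are replicated by default

#binlog-do-db=mytestdb1

#binlog-do-db=mytestdb2

# Databases to exclude from replication

#binlog-ignore-db=mysql

#binlog-ignore-db=information_schema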
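The active settings above can also be written without opening an editor, which helps when the setup is scripted. This is a minimal sketch: the helper name `write_mysql_master_conf` is hypothetical, and only the two uncommented settings from the file above are written.

```shell
# Write the master my.cnf into the given directory, creating it if needed.
# write_mysql_master_conf is a hypothetical helper name.
write_mysql_master_conf() {
    conf_dir="$1"
    mkdir -p "$conf_dir"
    cat > "$conf_dir/my.cnf" <<'EOF'
[mysqld]
# Unique server ID
server-id=1
# Binary log format
binlog_format=STATEMENT
EOF
}

# Example, using the host directory mounted into the container above:
# write_mysql_master_conf /llkj/mysql/master/conf
```

The container still has to be restarted afterwards for the settings to take effect.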

# Restart the container

docker restart llkj-mysql-master

3. Install Redis

docker pull redis:6.2.5

docker run -d \

--name=redis -p 6379:6379 \

--restart=always \

redis:6.2.5

4. Install MinIO

docker run -p 9002:9000 -p 9003:9001 \

--name=minio -d --restart=always \

-e "MINIO_ROOT_USER=admin" \

-e "MINIO_ROOT_PASSWORD=admin123456" \

-v /home/minio/data:/data \

-v /home/minio/config:/root/.minio \

minio/minio server /data --console-address ":9001"

5. Install Zipkin

docker pull openzipkin/zipkin

docker run --name zipkin --restart=always -d -p 9411:9411 openzipkin/zipkin

Access URL: http://ip:9411/zipkin/
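Containers started with `-d` return immediately, so the web UI may not answer for a few seconds. A small polling helper, sketched below, can confirm that a service such as Zipkin is reachable. It assumes `curl` is installed; the name `wait_for_http` and its default of 30 attempts two seconds apart are illustrative choices.

```shell
# Poll a URL until it returns a successful HTTP status or the attempts run out.
# wait_for_http is a hypothetical helper name; curl must be installed.
wait_for_http() {
    url="$1"
    attempts="${2:-30}"
    delay="${3:-2}"
    i=0
    while [ "$i" -lt "$attempts" ]; do
        # -f: treat HTTP errors as failure, -s: silent, -o /dev/null: discard the body
        if curl -fs -o /dev/null "$url"; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}

# Example (replace ip with the host address):
# wait_for_http "http://ip:9411/zipkin/" && echo "Zipkin is reachable"
```

The same helper can be pointed at the other web consoles in this guide by changing the URL.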

6. Install Nacos

docker pull nacos/nacos-server:1.4.1

docker run --env MODE=standalone \

--name nacos \

--restart=always -d -p 8848:8848 \

-e JVM_XMS=512m -e JVM_XMX=512m \

nacos/nacos-server:1.4.1

URL: http://ip:8848/nacos/

7. Install RabbitMQ

Login: guest / guest

docker pull rabbitmq:3.9.11-management

docker run -d -p 5672:5672 -p 15672:15672 \

--restart=always --name rabbitmq \

rabbitmq:3.9.11-management

8. Install RocketMQ

8.1 Start the name server

docker pull rocketmqinc/rocketmq

mkdir -p /home/rocketmq/data/namesrv/logs /home/rocketmq/data/namesrv/store

docker run -d --name rmqnamesrv \

-p 9876:9876 --restart=always \

-v /home/rocketmq/data/namesrv/logs:/root/logs \

-v /home/rocketmq/data/namesrv/store:/root/store \

-e "MAX_POSSIBLE_HEAP=100000000" rocketmqinc/rocketmq sh mqnamesrv

8.2 Edit the broker configuration

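mkdir -p /home/rocketmq/data/broker/logs /home/rocketmq/data/broker/store /home/rocketmq/conf

vim /home/rocketmq/conf/broker.conf

# Name of the cluster this broker belongs to; with many nodes, several clusters can be configured

brokerClusterName = DefaultCluster

# Broker name; a master and its slaves share the same name, which marks them as one master/slave group

brokerName = broker-a

# 0 means master; a value greater than 0 identifies a slave

brokerId = 0

# Hour of day at which expired messages are deleted; the default is 4 a.m.

deleteWhen = 04

# How long messages are kept on disk, in hours

fileReservedTime = 48

# One of SYNC_MASTER, ASYNC_MASTER, SLAVE; SYNC and ASYNC describe how the master replicates data to its slaves

brokerRole = ASYNC_MASTER

# Flush policy: ASYNC_FLUSH or SYNC_FLUSH. SYNC_FLUSH reports success only after the message is written to disk; ASYNC_FLUSH does not wait

flushDiskType = ASYNC_FLUSH

# Create topics automatically

autoCreateTopicEnable=true

# IP address of the server hosting this broker

brokerIP1 = <your server's public IP>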
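Because `brokerIP1` is the only value that differs between machines, the file can be generated from a small function that takes the IP as a parameter. This is a sketch under that assumption: `write_broker_conf` is a hypothetical helper name, and `203.0.113.10` in the example is a documentation-only placeholder address.

```shell
# Write broker.conf with the settings listed above, substituting the broker IP.
# write_broker_conf is a hypothetical helper name.
write_broker_conf() {
    conf_path="$1"
    broker_ip="$2"
    # Make sure the target directory exists before writing the file.
    mkdir -p "$(dirname "$conf_path")"
    cat > "$conf_path" <<EOF
brokerClusterName = DefaultCluster
brokerName = broker-a
brokerId = 0
deleteWhen = 04
fileReservedTime = 48
brokerRole = ASYNC_MASTER
flushDiskType = ASYNC_FLUSH
autoCreateTopicEnable=true
brokerIP1 = ${broker_ip}
EOF
}

# Example (placeholder IP; use the server's public address):
# write_broker_conf /home/rocketmq/conf/broker.conf 203.0.113.10
```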

8.3 Start the broker

docker run -d --name rmqbroker --link rmqnamesrv:namesrv \

-p 10911:10911 -p 10909:10909 --restart=always \

-v /home/rocketmq/data/broker/logs:/root/logs \

-v /home/rocketmq/data/broker/store:/root/store \

-v /home/rocketmq/conf/broker.conf:/opt/rocketmq-4.4.0/conf/broker.conf \

--privileged=true -e "NAMESRV_ADDR=namesrv:9876" \

-e "MAX_POSSIBLE_HEAP=200000000" rocketmqinc/rocketmq sh mqbroker \

-c /opt/rocketmq-4.4.0/conf/broker.conf

8.4 Start the console

# Pull the console image; the console is served at ip:9999

docker pull styletang/rocketmq-console-ng

docker run -d --name rocketmqConsule --restart=always \

-e "JAVA_OPTS=-Drocketmq.namesrv.addr=ip:9876 -Dcom.rocketmq.sendMessageWithVIPChannel=false -Duser.timezone='Asia/Shanghai'" \

-v /etc/localtime:/etc/localtime \

-p 9999:8080 styletang/rocketmq-console-ng

9. Install Sentinel

Access: ip:8858, login sentinel / sentinel

docker pull bladex/sentinel-dashboard

docker run --name=sentinel --restart=always \

-p 8858:8858 -d bladex/sentinel-dashboard:latest

10. Install Elasticsearch

docker pull elasticsearch:7.8.0

mkdir -p /home/elasticsearch/plugins

mkdir -p /home/elasticsearch/data

chmod 777 /home/elasticsearch/data

docker run -p 9200:9200 -p 9300:9300 --name es --restart=always \

-e "discovery.type=single-node" \

-e ES_JAVA_OPTS="-Xms512m -Xmx512m" \

-v /home/elasticsearch/plugins:/usr/share/elasticsearch/plugins \

-v /home/elasticsearch/data:/usr/share/elasticsearch/data \

-v /home/elasticsearch/conf:/usr/share/elasticsearch/conf \

-d elasticsearch:7.8.0

11. Install Kibana

docker pull kibana:7.8.0

# The IP below is the address at which the virtual machine reaches Elasticsearch

docker run --name kibana --restart=always \

-e ELASTICSEARCH_HOSTS=http://192.168.10.100:9200 \

-p 5601:5601 -d kibana:7.8.0

docker exec -it kibana /bin/bash

# Set the Elasticsearch address

vi config/kibana.yml

elasticsearch.hosts: [ "http://192.168.10.100:9200" ]

docker restart kibana

http://ip:5601

12. Install Logstash/Filebeat

To be updated.

docker pull logstash:7.8.0

vim /home/logstash/logstash.conf

input {

tcp {

mode => "server"

host => "0.0.0.0"

port => 5044

codec => json_lines

}

}

filter{

}

output {

elasticsearch {

hosts => "ip:9200"

index => "gmall-%{+YYYY.MM.dd}"

}

}

docker run --name logstash -p 5044:5044 \

--restart=always \

--link es \

-v /home/logstash/logstash.conf:/usr/share/logstash/pipeline/logstash.conf \

-d logstash:7.8.0
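To check the pipeline end to end, a single test event can be sent to the TCP input. The `json_lines` codec expects one JSON object per line, which the sketch below produces. `make_log_event` is a hypothetical helper and the `level` and `message` field names are illustrative; it does not escape quotes or backslashes, so it suits only simple test strings.

```shell
# Build one newline-terminated JSON event for a json_lines TCP input.
# make_log_event is a hypothetical helper; arguments must not contain quotes or backslashes.
make_log_event() {
    printf '{"level":"%s","message":"%s"}\n' "$1" "$2"
}

# Example (assumes nc is installed; replace ip with the Logstash host):
# make_log_event INFO "hello from shell" | nc ip 5044
```

If the event arrives, it should appear in the `gmall-*` index for the current day.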