👨🎓Homepage: 研学社's blog


📋📋📋Contents of this post:🎁🎁🎁

Contents

💥1 Overview

📚2 Results

🎉3 References

🌈4 Matlab Implementation

💥1 Overview

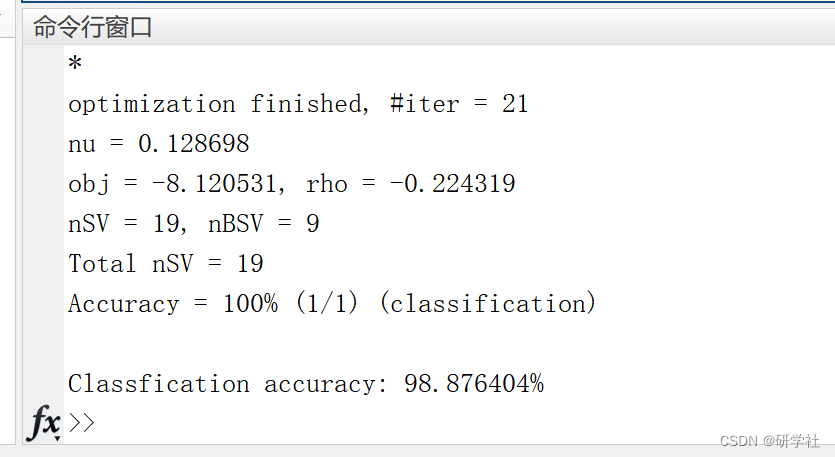

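Metric learning and support vector machines (SVMs) have borrowed ideas from each other in much recent work. A classifier trained with the SVM algorithm has several advantages, including a maximum-margin decision boundary and the kernel trick. A metric learning algorithm, in turn, produces a Mahalanobis distance function that emphasizes relevant features and reduces the influence of uninformative ones. Combining the two gives an SVM with a Mahalanobis distance-based radial basis function (MDRBF) kernel, which appears to be a good solution for most classification problems. This post presents an algorithm that learns a Mahalanobis distance kernel for SVM classification.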

Source:

Jiangyuan Mei, Xianqiang Yang, and Huijun Gao, Learning a Mahalanobis distance kernel for Support Vector Machine classification, Journal of The Franklin Institute, in review.
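To make the MDRBF kernel described above more concrete, here is a minimal Matlab sketch. It assumes the kernel has the commonly used form k(x, y) = exp(-gamma * (x - y) * M * (x - y)'), where M is a symmetric positive semidefinite matrix produced by metric learning. The data `X`, the matrix `M`, and the width `gamma` are made-up illustrative values, and this is not the code inside `DAGSVMMDRBF_train`.

```matlab
% Hypothetical data: 3 samples (rows) with 2 features each.
X = [0 0; 1 0; 0 2];

% Assumed learned Mahalanobis matrix (symmetric positive semidefinite).
% It weights feature 1 more heavily than feature 2.
M = [2 0; 0 0.5];

gamma = 0.5;   % assumed kernel width parameter

n = size(X, 1);
K = zeros(n, n);
for i = 1:n
    for j = 1:n
        d = X(i, :) - X(j, :);                 % difference as a row vector
        K(i, j) = exp(-gamma * (d * M * d'));  % RBF of the squared Mahalanobis distance
    end
end

disp(K)
```

With `M = eye(2)` the same loop reduces to the ordinary RBF kernel, so the learned `M` is what lets the kernel stretch informative features and shrink uninformative ones.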

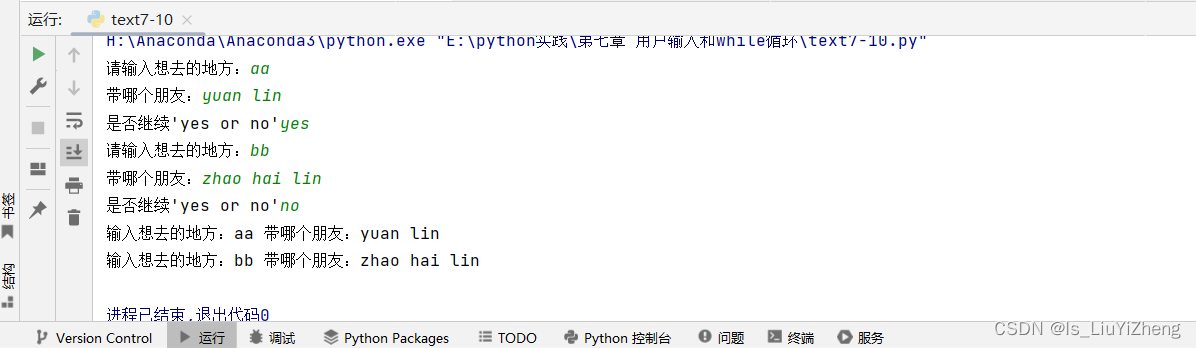

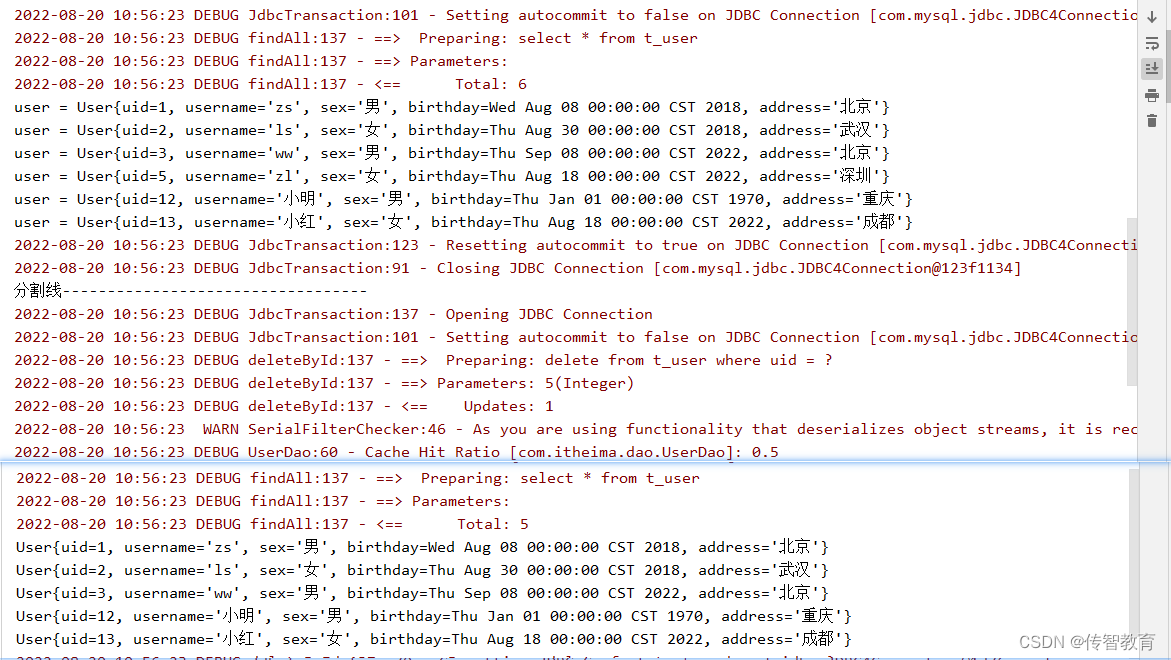

📚2 Results

Partial code:

    disp('Cross-validating...')
    for cross = 1:k_fold
        %% Cross-validation fold
        fprintf('\nFold %d\n', cross);

        % Row range of the held-out test block for this fold
        test_start = ceil(n_samples/k_fold * (cross-1)) + 1;
        test_end   = ceil(n_samples/k_fold * cross);

        % Training set: every row before and after the test block
        Y_train = [];
        X_train = zeros(0, n_features);
        if cross > 1
            Y_train = Y_index(1:test_start-1);
            X_train = X(1:test_start-1, :);
        end
        if cross < k_fold
            Y_train = [Y_train; Y_index(test_end+1:n_samples)];
            X_train = [X_train; X(test_end+1:n_samples, :)];
        end
        X_test = X(test_start:test_end, :);
        Y_test = Y_index(test_start:test_end);

        % Reorder the training data, learn the metric and the SVM models, then predict
        [X_train, Y_train] = data_rank(X_train, Y_train);
        [M_struct, SVM_model_struct] = DAGSVMMDRBF_train(X_train, Y_train);
        Predict = DAGSVMMDRBF_predict(M_struct, SVM_model_struct, X_train, Y_train, X_test, Y_test);
        Predict_all(test_start:test_end, 1) = Predict;
    end

    % Overall accuracy across all folds, in percent
    accuracy = sum(Predict_all == Y_index) / n_samples * 100;
    fprintf('\nClassification accuracy: %f%%\n', accuracy);
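The fold boundaries in the loop above are easiest to check on a small case. The sketch below reuses the same `test_start` / `test_end` formulas with hypothetical values `n_samples = 10` and `k_fold = 4`, and only prints the resulting test ranges. The `bounds` variable is introduced here for illustration.

```matlab
n_samples = 10;   % hypothetical number of samples
k_fold    = 4;    % hypothetical number of folds

bounds = zeros(k_fold, 2);   % one [test_start, test_end] pair per fold
for cross = 1:k_fold
    test_start = ceil(n_samples/k_fold * (cross-1)) + 1;
    test_end   = ceil(n_samples/k_fold * cross);
    bounds(cross, :) = [test_start, test_end];
    fprintf('Fold %d: test rows %d to %d\n', cross, test_start, test_end);
end
```

The folds cover rows 1 to 3, 4 to 5, 6 to 8, and 9 to 10, so every sample is tested exactly once even though `n_samples` is not divisible by `k_fold`. Because each test block is contiguous, this scheme presumably relies on the rows of `X` being in random order; if the data set is stored sorted by class, permuting `X` and `Y_index` with the same `randperm(n_samples)` index beforehand would be one way to avoid folds dominated by a single class.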

🎉3 References


[1] Jiangyuan Mei, Xianqiang Yang, and Huijun Gao, Learning a Mahalanobis distance kernel for Support Vector Machine classification, Journal of The Franklin Institute, in review.
