20240202 Deploying faster-whisper on Windows 10

2024/2/2 12:15

Prerequisite: you can reach the international internet by technical means.

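1. First, you need an NVIDIA graphics card. Mine is a second-hand GTX 1080 bought on Pinduoduo (PDD) for ¥800, and it is very likely an ex-mining card.

2. Install the latest NVIDIA driver (version 545) together with CUDA and cuDNN.

3. Install Torch.

4. Configure whisper.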

https://developer.aliyun.com/article/1366662

2023-11-03 Continuous evolution, fast transcription: producing bilingual subtitles for video with Faster-Whisper (Python 3.10)

https://zhuanlan.zhihu.com/p/664892334

Continuous evolution, fast transcription: producing bilingual subtitles for video with Faster-Whisper (Python 3.10)
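Before building the environment, the prerequisites listed above can be checked from Python. This is a minimal sketch, not taken from the linked articles: `check_prerequisites` is a name made up here, and it assumes the NVIDIA driver installer put `nvidia-smi` on PATH. Torch is imported only if it is installed, so the script also runs on a machine without it.

```python
import importlib.util
import shutil
import sys


def check_prerequisites() -> dict:
    """Collect basic facts about the local Python / GPU setup."""
    report = {
        "python_ok": sys.version_info >= (3, 10),
        # Assumption: the driver installer placed nvidia-smi on PATH.
        "nvidia_smi_found": shutil.which("nvidia-smi") is not None,
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "cuda_available": None,  # stays None when torch is not installed
    }
    if report["torch_installed"]:
        import torch  # imported lazily so the script also runs without torch

        report["cuda_available"] = torch.cuda.is_available()
    return report


if __name__ == "__main__":
    for name, value in check_prerequisites().items():
        print(f"{name}: {value}")
```

If `cuda_available` prints `False` even though the card is present, the driver or the CUDA build of Torch is the first thing to look at.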

Building the Faster-Whisper transcription environment

First make sure Python 3.10 or later is installed locally, then clone the project:

git clone https://github.com/ycyy/faster-whisper-webui.git

Enter the project directory:

cd faster-whisper-webui

Install the project dependencies:

pip3 install -r requirements.txt

Note that, besides the base dependencies, the faster-whisper dependencies must also be installed:

pip3 install -r requirements-fasterWhisper.txt

With these installed, transcription runs faster.
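To confirm that the two `pip3 install` steps actually landed in the interpreter you are using, the installed versions can be queried from Python. A minimal sketch using only the standard library; the package names are the ones that appear in the pip output later in these notes, and `installed_version` is a helper name introduced here for illustration.

```python
from importlib import metadata


def installed_version(package: str):
    """Return the installed version string, or None if the package is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # Package names taken from the pip output recorded in these notes.
    for name in ("faster-whisper", "ctranslate2", "onnxruntime", "av"):
        print(f"{name}: {installed_version(name) or 'NOT INSTALLED'}")
```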

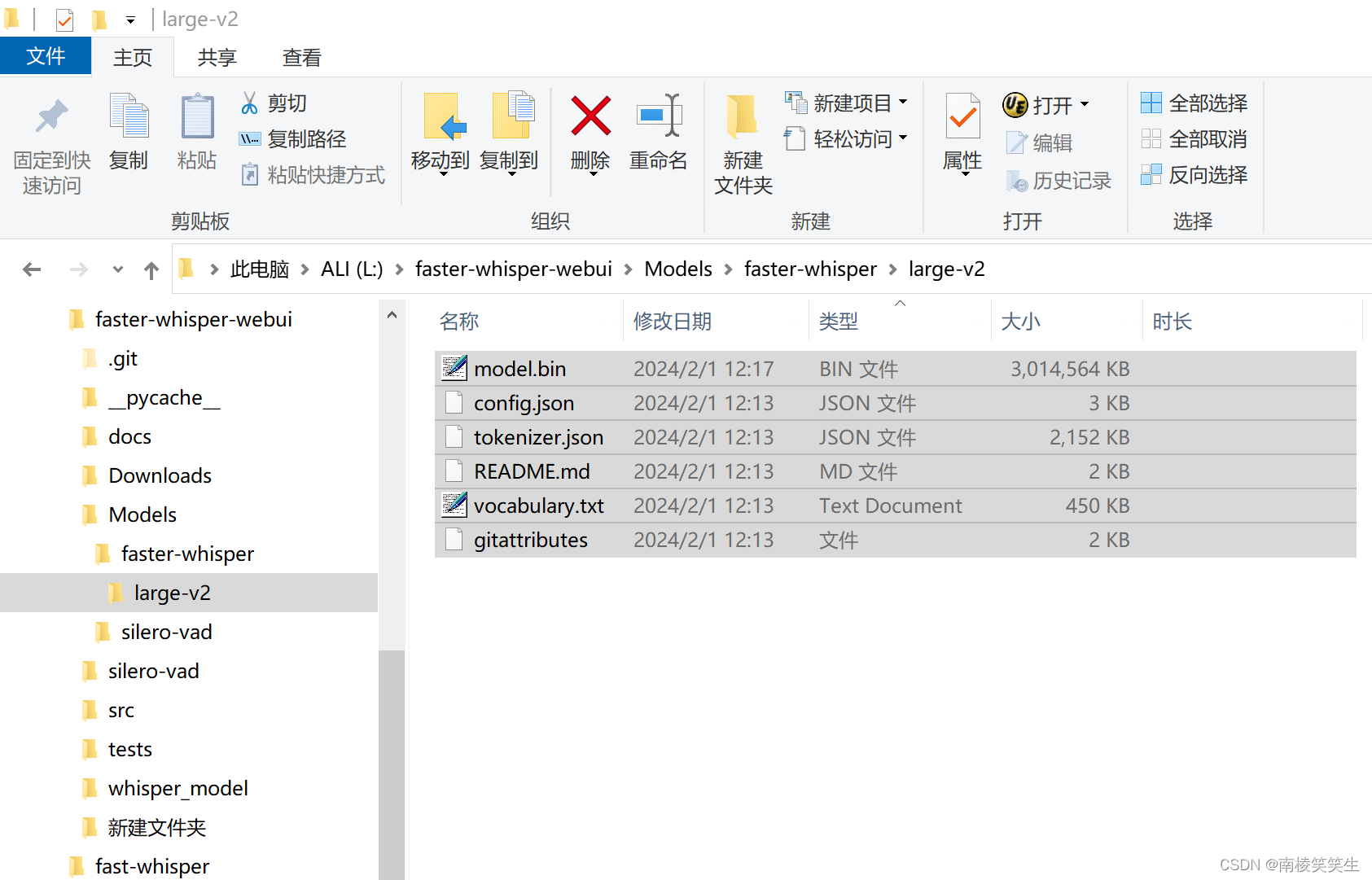

Downloading and configuring the models

First create a model folder in the project directory:

mkdir Models

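The faster-whisper project has a VAD algorithm built in. VAD (voice activity detection) detects which parts of the audio contain speech; it separates each utterance accurately and lets whisper locate where speech starts and ends more precisely.

So the VAD model has to be set up first: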

git clone https://github.com/snakers4/silero-vad

Then place the cloned VAD model in the Models folder created above.
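To make the idea of VAD concrete, here is a toy detector. It is explicitly not what Silero VAD does (Silero uses a trained neural network); it is a simple energy threshold, shown only to illustrate how audio is cut into "speech from t1 to t2" spans. The function name, frame size and threshold are illustrative choices.

```python
import math


def energy_vad(samples, sample_rate, frame_ms=30, threshold=0.02):
    """Return (start_s, end_s) spans whose frame RMS energy exceeds threshold."""
    frame_len = int(sample_rate * frame_ms / 1000)
    segments = []
    start = None
    for i in range(0, len(samples), frame_len):
        frame = samples[i:i + frame_len]
        rms = math.sqrt(sum(x * x for x in frame) / len(frame))
        if rms >= threshold and start is None:
            start = i / sample_rate                      # speech begins
        elif rms < threshold and start is not None:
            segments.append((start, i / sample_rate))    # speech ends
            start = None
    if start is not None:                                # audio ended mid-speech
        segments.append((start, len(samples) / sample_rate))
    return segments


if __name__ == "__main__":
    rate = 16000
    silence = [0.0] * rate                               # 1 s of silence
    tone = [0.5 * math.sin(2 * math.pi * 220 * n / rate) for n in range(rate)]
    # Silence, then a 1 s tone standing in for speech, then silence again.
    print(energy_vad(silence + tone + silence, rate))
```

The detected span is only accurate to one frame (30 ms here), which is why real VAD models matter for precise subtitle timing.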

Next, download the faster-whisper model from:

https://huggingface.co/guillaumekln/faster-whisper-large-v2

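I recommend downloading only the faster-whisper-large-v2 model, that is, the second version of the large model. Since faster-whisper is already faster than whisper, its advantage is most visible with the large model.

Put the model in the faster-whisper directory under the models folder. The final directory structure looks like this: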

models
├─faster-whisper
│  ├─large-v2
└─silero-vad
    ├─examples
    │  ├─cpp
    │  ├─microphone_and_webRTC_integration
    │  └─pyaudio-streaming
    ├─files
    └─__pycache__

The models are now configured.
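The layout above is easy to get wrong by one folder level, so a small check can help. A sketch under the assumption that the script is run from the project root and that the two folders named in the tree are the ones that matter; `missing_model_dirs` is a name invented for this example.

```python
from pathlib import Path


def missing_model_dirs(project_root):
    """Return the expected model sub-directories that do not exist yet."""
    expected = [
        Path("models") / "faster-whisper" / "large-v2",
        Path("models") / "silero-vad",
    ]
    root = Path(project_root)
    return [str(rel) for rel in expected if not (root / rel).is_dir()]


if __name__ == "__main__":
    missing = missing_model_dirs(".")
    print("all model folders present" if not missing else f"missing: {missing}")
```

On Windows the check is case-insensitive, so a folder created as `Models` also satisfies it.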

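Transcribing with local inference

Now we can try out faster-whisper. As an example, take the Japanese-language Genshin Impact video of Kamisato Ayaka, 《谁能拒绝一只蝴蝶忍呢?》 ("Who could say no to a Kocho Shinobu?"). The original video is at: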

https://www.bilibili.com/video/BV1fG4y1b74e/

Run this command from the project root:

python cli.py --model large-v2 --vad silero-vad --language Japanese --output_dir d:/whisper_model d:/Downloads/test.mp4

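Here:

--model selects the large-v2 model,

--vad uses the silero-vad algorithm,

--language sets the language to Japanese,

the output directory is d:/whisper_model,

and the video to transcribe is d:/Downloads/test.mp4.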
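The same job can also be done by calling the faster-whisper library directly instead of the web UI's `cli.py`. The sketch below is an alternative route, not how `cli.py` works internally: it uses `WhisperModel` and `transcribe(..., vad_filter=True)` from the faster-whisper package and writes SRT text by hand. `srt_timestamp`, `to_srt` and `transcribe_to_srt` are helper names made up for this example, and `compute_type="float32"` simply mirrors the value used in the Python session later in these notes.

```python
def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp (HH:MM:SS,mmm)."""
    total_ms = int(round(seconds * 1000))
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, ms = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments) -> str:
    """Render (start, end, text) tuples as the body of an .srt file."""
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        blocks.append(
            f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text.strip()}\n"
        )
    return "\n".join(blocks)


def transcribe_to_srt(media_path: str, model_name: str = "large-v2") -> str:
    """Transcribe Japanese speech with faster-whisper and return SRT text."""
    from faster_whisper import WhisperModel  # lazy: needs the package and a model

    model = WhisperModel(model_name, device="cuda", compute_type="float32")
    # vad_filter=True turns on the Silero VAD that ships with faster-whisper.
    segments, _info = model.transcribe(media_path, language="ja", vad_filter=True)
    return to_srt((s.start, s.end, s.text) for s in segments)
```

Calling `transcribe_to_srt("d:/Downloads/test.mp4")` and writing the result to a file with `encoding="utf-8"` would give a subtitle file comparable to the one `cli.py` produces, assuming the model can be loaded.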

References:

https://blog.csdn.net/qq_43907505/article/details/135048613

Open-source speech recognition: faster-whisper deployment tutorial

Japanese source video:

https://www.bilibili.com/video/BV1fG4y1b74e/?vd_source=4a6b675fa22dfa306da59f67b1f22616

Genshin Impact, Kamisato Ayaka with Japanese voice acting: 谁能拒绝一只蝴蝶忍呢? ("Who could say no to a Kocho Shinobu?")

C:\faster-whisper-webui\Models\faster-whisper\large-v2

Microsoft Windows [Version 10.0.19045.3930]

(c) Microsoft Corporation. All rights reserved.

C:\Users\wb491>pip install faster-whisper

Requirement already satisfied: faster-whisper in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (0.10.0)

Requirement already satisfied: av==10.* in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from faster-whisper) (10.0.0)

Requirement already satisfied: ctranslate2<4,>=3.22 in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from faster-whisper) (3.24.0)

Requirement already satisfied: huggingface-hub>=0.13 in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from faster-whisper) (0.20.3)

Requirement already satisfied: tokenizers<0.16,>=0.13 in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from faster-whisper) (0.15.1)

Requirement already satisfied: onnxruntime<2,>=1.14 in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from faster-whisper) (1.17.0)

Requirement already satisfied: setuptools in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from ctranslate2<4,>=3.22->faster-whisper) (65.5.0)

Requirement already satisfied: numpy in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from ctranslate2<4,>=3.22->faster-whisper) (1.26.3)

Requirement already satisfied: pyyaml<7,>=5.3 in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from ctranslate2<4,>=3.22->faster-whisper) (6.0.1)

Requirement already satisfied: filelock in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from huggingface-hub>=0.13->faster-whisper) (3.13.1)

Requirement already satisfied: fsspec>=2023.5.0 in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from huggingface-hub>=0.13->faster-whisper) (2023.12.2)

Requirement already satisfied: requests in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from huggingface-hub>=0.13->faster-whisper) (2.31.0)

Requirement already satisfied: tqdm>=4.42.1 in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from huggingface-hub>=0.13->faster-whisper) (4.66.1)

Requirement already satisfied: typing-extensions>=3.7.4.3 in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from huggingface-hub>=0.13->faster-whisper) (4.9.0)

Requirement already satisfied: packaging>=20.9 in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from huggingface-hub>=0.13->faster-whisper) (23.2)

Requirement already satisfied: coloredlogs in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from onnxruntime<2,>=1.14->faster-whisper) (15.0.1)

Requirement already satisfied: flatbuffers in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from onnxruntime<2,>=1.14->faster-whisper) (23.5.26)

Requirement already satisfied: protobuf in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from onnxruntime<2,>=1.14->faster-whisper) (4.25.2)

Requirement already satisfied: sympy in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from onnxruntime<2,>=1.14->faster-whisper) (1.12)

Requirement already satisfied: colorama in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from tqdm>=4.42.1->huggingface-hub>=0.13->faster-whisper) (0.4.6)

Requirement already satisfied: humanfriendly>=9.1 in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from coloredlogs->onnxruntime<2,>=1.14->faster-whisper) (10.0)

Requirement already satisfied: charset-normalizer<4,>=2 in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from requests->huggingface-hub>=0.13->faster-whisper) (3.3.2)

Requirement already satisfied: idna<4,>=2.5 in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from requests->huggingface-hub>=0.13->faster-whisper) (3.6)

Requirement already satisfied: urllib3<3,>=1.21.1 in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from requests->huggingface-hub>=0.13->faster-whisper) (2.2.0)

Requirement already satisfied: certifi>=2017.4.17 in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from requests->huggingface-hub>=0.13->faster-whisper) (2023.11.17)

Requirement already satisfied: mpmath>=0.19 in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from sympy->onnxruntime<2,>=1.14->faster-whisper) (1.3.0)

Requirement already satisfied: pyreadline3 in c:\users\wb491\appdata\local\programs\python\python310\lib\site-packages (from humanfriendly>=9.1->coloredlogs->onnxruntime<2,>=1.14->faster-whisper) (3.4.1)

C:\Users\wb491>python

Python 3.10.11 (tags/v3.10.11:7d4cc5a, Apr 5 2023, 00:38:17) [MSC v.1929 64 bit (AMD64)] on win32

Type "help", "copyright", "credits" or "license" for more information.

>>> from faster_whisper import WhisperModel

>>> model_size = "large-v2"

>>> model = WhisperModel(model_size, device="cuda", compute_type="float32")

An error occured while synchronizing the model Systran/faster-whisper-large-v2 from the Hugging Face Hub:

An error happened while trying to locate the files on the Hub and we cannot find the appropriate snapshot folder for the specified revision on the local disk. Please check your internet connection and try again.

Trying to load the model directly from the local cache, if it exists.

Traceback (most recent call last):

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\connection.py", line 198, in _new_conn

sock = connection.create_connection(

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\util\connection.py", line 85, in create_connection

raise err

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\util\connection.py", line 73, in create_connection

sock.connect(sa)

ConnectionRefusedError: [WinError 10061] No connection could be made because the target machine actively refused it.

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\connectionpool.py", line 793, in urlopen

response = self._make_request(

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\connectionpool.py", line 491, in _make_request

raise new_e

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\connectionpool.py", line 467, in _make_request

self._validate_conn(conn)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\connectionpool.py", line 1099, in _validate_conn

conn.connect()

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\connection.py", line 616, in connect

self.sock = sock = self._new_conn()

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\connection.py", line 213, in _new_conn

raise NewConnectionError(

urllib3.exceptions.NewConnectionError: <urllib3.connection.HTTPSConnection object at 0x00000297A6C0A530>: Failed to establish a new connection: [WinError 10061] No connection could be made because the target machine actively refused it.

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\requests\adapters.py", line 486, in send

resp = conn.urlopen(

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\connectionpool.py", line 847, in urlopen

retries = retries.increment(

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\util\retry.py", line 515, in increment

raise MaxRetryError(_pool, url, reason) from reason # type: ignore[arg-type]

urllib3.exceptions.MaxRetryError: HTTPSConnectionPool(host='huggingface.co', port=443): Max retries exceeded with url: /api/models/Systran/faster-whisper-large-v2/revision/main (Caused by NewConnectionError('<urllib3.connection.HTTPSConnection object at 0x00000297A6C0A530>: Failed to establish a new connection: [WinError 10061] No connection could be made because the target machine actively refused it.'))

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\huggingface_hub\_snapshot_download.py", line 179, in snapshot_download

repo_info = api.repo_info(repo_id=repo_id, repo_type=repo_type, revision=revision, token=token)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\huggingface_hub\utils\_validators.py", line 118, in _inner_fn

return fn(*args, **kwargs)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\huggingface_hub\hf_api.py", line 2275, in repo_info

return method(

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\huggingface_hub\utils\_validators.py", line 118, in _inner_fn

return fn(*args, **kwargs)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\huggingface_hub\hf_api.py", line 2084, in model_info

r = get_session().get(path, headers=headers, timeout=timeout, params=params)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\requests\sessions.py", line 602, in get

return self.request("GET", url, **kwargs)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\requests\sessions.py", line 589, in request

resp = self.send(prep, **send_kwargs)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\requests\sessions.py", line 703, in send

r = adapter.send(request, **kwargs)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\huggingface_hub\utils\_http.py", line 67, in send

return super().send(request, *args, **kwargs)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\requests\adapters.py", line 519, in send

raise ConnectionError(e, request=request)

requests.exceptions.ConnectionError: (MaxRetryError("HTTPSConnectionPool(host='huggingface.co', port=443): Max retries exceeded with url: /api/models/Systran/faster-whisper-large-v2/revision/main (Caused by NewConnectionError('<urllib3.connection.HTTPSConnection object at 0x00000297A6C0A530>: Failed to establish a new connection: [WinError 10061] No connection could be made because the target machine actively refused it.'))"), '(Request ID: b9f1826a-095f-4bd5-bb64-a1edad8b66d9)')

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\faster_whisper\utils.py", line 100, in download_model

return huggingface_hub.snapshot_download(repo_id, **kwargs)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\huggingface_hub\utils\_validators.py", line 118, in _inner_fn

return fn(*args, **kwargs)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\huggingface_hub\_snapshot_download.py", line 251, in snapshot_download

raise LocalEntryNotFoundError(

huggingface_hub.utils._errors.LocalEntryNotFoundError: An error happened while trying to locate the files on the Hub and we cannot find the appropriate snapshot folder for the specified revision on the local disk. Please check your internet connection and try again.

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\faster_whisper\transcribe.py", line 124, in __init__

model_path = download_model(

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\faster_whisper\utils.py", line 116, in download_model

return huggingface_hub.snapshot_download(repo_id, **kwargs)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\huggingface_hub\utils\_validators.py", line 118, in _inner_fn

return fn(*args, **kwargs)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\huggingface_hub\_snapshot_download.py", line 235, in snapshot_download

raise LocalEntryNotFoundError(

huggingface_hub.utils._errors.LocalEntryNotFoundError: Cannot find an appropriate cached snapshot folder for the specified revision on the local disk and outgoing traffic has been disabled. To enable repo look-ups and downloads online, pass 'local_files_only=False' as input.

>>>

>>> model = WhisperModel(model_size, device="cuda", compute_type="float32")

An error occured while synchronizing the model Systran/faster-whisper-large-v2 from the Hugging Face Hub:

An error happened while trying to locate the files on the Hub and we cannot find the appropriate snapshot folder for the specified revision on the local disk. Please check your internet connection and try again.

Trying to load the model directly from the local cache, if it exists.

Traceback (most recent call last):

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\connection.py", line 198, in _new_conn

sock = connection.create_connection(

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\util\connection.py", line 85, in create_connection

raise err

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\util\connection.py", line 73, in create_connection

sock.connect(sa)

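TimeoutError: [WinError 10060] A connection attempt failed because the connected party did not properly respond after a period of time, or established connection failed because connected host has failed to respond.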

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\connectionpool.py", line 793, in urlopen

response = self._make_request(

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\connectionpool.py", line 491, in _make_request

raise new_e

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\connectionpool.py", line 467, in _make_request

self._validate_conn(conn)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\connectionpool.py", line 1099, in _validate_conn

conn.connect()

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\connection.py", line 616, in connect

self.sock = sock = self._new_conn()

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\connection.py", line 207, in _new_conn

raise ConnectTimeoutError(

urllib3.exceptions.ConnectTimeoutError: (<urllib3.connection.HTTPSConnection object at 0x00000297A6C08AF0>, 'Connection to huggingface.co timed out. (connect timeout=None)')

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\requests\adapters.py", line 486, in send

resp = conn.urlopen(

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\connectionpool.py", line 847, in urlopen

retries = retries.increment(

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\urllib3\util\retry.py", line 515, in increment

raise MaxRetryError(_pool, url, reason) from reason # type: ignore[arg-type]

urllib3.exceptions.MaxRetryError: HTTPSConnectionPool(host='huggingface.co', port=443): Max retries exceeded with url: /api/models/Systran/faster-whisper-large-v2/revision/main (Caused by ConnectTimeoutError(<urllib3.connection.HTTPSConnection object at 0x00000297A6C08AF0>, 'Connection to huggingface.co timed out. (connect timeout=None)'))

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\huggingface_hub\_snapshot_download.py", line 179, in snapshot_download

repo_info = api.repo_info(repo_id=repo_id, repo_type=repo_type, revision=revision, token=token)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\huggingface_hub\utils\_validators.py", line 118, in _inner_fn

return fn(*args, **kwargs)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\huggingface_hub\hf_api.py", line 2275, in repo_info

return method(

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\huggingface_hub\utils\_validators.py", line 118, in _inner_fn

return fn(*args, **kwargs)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\huggingface_hub\hf_api.py", line 2084, in model_info

r = get_session().get(path, headers=headers, timeout=timeout, params=params)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\requests\sessions.py", line 602, in get

return self.request("GET", url, **kwargs)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\requests\sessions.py", line 589, in request

resp = self.send(prep, **send_kwargs)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\requests\sessions.py", line 703, in send

r = adapter.send(request, **kwargs)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\huggingface_hub\utils\_http.py", line 67, in send

return super().send(request, *args, **kwargs)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\requests\adapters.py", line 507, in send

raise ConnectTimeout(e, request=request)

requests.exceptions.ConnectTimeout: (MaxRetryError("HTTPSConnectionPool(host='huggingface.co', port=443): Max retries exceeded with url: /api/models/Systran/faster-whisper-large-v2/revision/main (Caused by ConnectTimeoutError(<urllib3.connection.HTTPSConnection object at 0x00000297A6C08AF0>, 'Connection to huggingface.co timed out. (connect timeout=None)'))"), '(Request ID: fdc269a6-6cab-4569-8f49-98b602682277)')

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\faster_whisper\utils.py", line 100, in download_model

return huggingface_hub.snapshot_download(repo_id, **kwargs)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\huggingface_hub\utils\_validators.py", line 118, in _inner_fn

return fn(*args, **kwargs)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\huggingface_hub\_snapshot_download.py", line 251, in snapshot_download

raise LocalEntryNotFoundError(

huggingface_hub.utils._errors.LocalEntryNotFoundError: An error happened while trying to locate the files on the Hub and we cannot find the appropriate snapshot folder for the specified revision on the local disk. Please check your internet connection and try again.

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\faster_whisper\transcribe.py", line 124, in __init__

model_path = download_model(

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\faster_whisper\utils.py", line 116, in download_model

return huggingface_hub.snapshot_download(repo_id, **kwargs)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\huggingface_hub\utils\_validators.py", line 118, in _inner_fn

return fn(*args, **kwargs)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\huggingface_hub\_snapshot_download.py", line 235, in snapshot_download

raise LocalEntryNotFoundError(

huggingface_hub.utils._errors.LocalEntryNotFoundError: Cannot find an appropriate cached snapshot folder for the specified revision on the local disk and outgoing traffic has been disabled. To enable repo look-ups and downloads online, pass 'local_files_only=False' as input.

>>> exit()
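Both attempts above fail for the same reason: `WhisperModel("large-v2", ...)` tries to fetch the model from huggingface.co, and the connection is first refused and then times out. One way around this, assuming the converted model files are already on disk (these notes list C:\faster-whisper-webui\Models\faster-whisper\large-v2), is to pass that directory to `WhisperModel` instead of a size name, which skips the download. The sketch below assumes the folder contains a `model.bin` file, as converted CTranslate2 models do; `looks_like_ct2_model` and `load_local_model` are names invented here.

```python
from pathlib import Path


def looks_like_ct2_model(model_dir) -> bool:
    """True if the folder contains the model.bin weight file of a converted model."""
    return (Path(model_dir) / "model.bin").is_file()


def load_local_model(model_dir):
    """Load a faster-whisper model from disk without contacting the Hub."""
    if not looks_like_ct2_model(model_dir):
        raise FileNotFoundError(f"no model.bin found in {model_dir}")
    from faster_whisper import WhisperModel  # lazy import: needs the package

    # Passing a directory instead of a size name skips the Hub download.
    return WhisperModel(str(model_dir), device="cuda", compute_type="float32")


if __name__ == "__main__":
    # Path noted earlier in these notes; adjust it to your own layout.
    local_dir = r"C:\faster-whisper-webui\Models\faster-whisper\large-v2"
    print("usable local model:", looks_like_ct2_model(local_dir))
```

If the download itself is wanted, the other option is to fix connectivity first, for example by making the proxy visible to Python; that depends on the local setup and is not shown here.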

C:\Users\wb491>

C:\Users\wb491>

C:\Users\wb491>CD C:\2024-01-05 1106国产冲锋衣杀疯了!百元骆驼如何营销卖爆?-IC实验室-SAW2\temp2

C:\2024-01-05 1106国产冲锋衣杀疯了!百元骆驼如何营销卖爆?-IC实验室-SAW2\temp2>

C:\2024-01-05 1106国产冲锋衣杀疯了!百元骆驼如何营销卖爆?-IC实验室-SAW2\temp2>dir

Volume in drive C is WIN10

Volume Serial Number is 9273-D6A8

Directory of C:\2024-01-05 1106国产冲锋衣杀疯了!百元骆驼如何营销卖爆?-IC实验室-SAW2\temp2

2024/01/31 18:00 <DIR> .

2024/01/31 18:00 <DIR> ..

2024/01/31 18:00 119,183 下载.json

2024/01/12 01:28 3,465,644 下载.mp4

2024/01/31 18:00 19,296 下载.srt

2024/01/31 18:00 12,500 下载.tsv

2024/01/31 18:00 8,650 下载.txt

2024/01/31 18:00 16,301 下载.vtt

6 File(s) 3,641,574 bytes

2 Dir(s) 189,758,509,056 bytes free

C:\2024-01-05 1106国产冲锋衣杀疯了!百元骆驼如何营销卖爆?-IC实验室-SAW2\temp2>

C:\2024-01-05 1106国产冲锋衣杀疯了!百元骆驼如何营销卖爆?-IC实验室-SAW2\temp2>ffmpeg -i 下载.mp4 output.wav

ffmpeg version git-2020-06-28-4cfcfb3 Copyright (c) 2000-2020 the FFmpeg developers

built with gcc 9.3.1 (GCC) 20200621

configuration: --enable-gpl --enable-version3 --enable-sdl2 --enable-fontconfig --enable-gnutls --enable-iconv --enable-libass --enable-libdav1d --enable-libbluray --enable-libfreetype --enable-libmp3lame --enable-libopencore-amrnb --enable-libopencore-amrwb --enable-libopenjpeg --enable-libopus --enable-libshine --enable-libsnappy --enable-libsoxr --enable-libsrt --enable-libtheora --enable-libtwolame --enable-libvpx --enable-libwavpack --enable-libwebp --enable-libx264 --enable-libx265 --enable-libxml2 --enable-libzimg --enable-lzma --enable-zlib --enable-gmp --enable-libvidstab --enable-libvmaf --enable-libvorbis --enable-libvo-amrwbenc --enable-libmysofa --enable-libspeex --enable-libxvid --enable-libaom --enable-libgsm --disable-w32threads --enable-libmfx --enable-ffnvcodec --enable-cuda-llvm --enable-cuvid --enable-d3d11va --enable-nvenc --enable-nvdec --enable-dxva2 --enable-avisynth --enable-libopenmpt --enable-amf

libavutil 56. 55.100 / 56. 55.100

libavcodec 58. 93.100 / 58. 93.100

libavformat 58. 47.100 / 58. 47.100

libavdevice 58. 11.100 / 58. 11.100

libavfilter 7. 86.100 / 7. 86.100

libswscale 5. 8.100 / 5. 8.100

libswresample 3. 8.100 / 3. 8.100

libpostproc 55. 8.100 / 55. 8.100

Input #0, mov,mp4,m4a,3gp,3g2,mj2, from '下载.mp4':

Metadata:

major_brand : isom

minor_version : 512

compatible_brands: isomiso2avc1mp41iso5

comment : vid:v0d004g10000cmbmsrjc77ubc8r79ssg

encoder : Lavf58.76.100

Duration: 00:07:01.78, start: -0.042667, bitrate: 65 kb/s

Stream #0:0(und): Audio: aac (LC) (mp4a / 0x6134706D), 48000 Hz, stereo, fltp, 64 kb/s (default)

Metadata:

handler_name : Bento4 Sound Handler

Stream mapping:

Stream #0:0 -> #0:0 (aac (native) -> pcm_s16le (native))

Press [q] to stop, [?] for help

Output #0, wav, to 'output.wav':

Metadata:

major_brand : isom

minor_version : 512

compatible_brands: isomiso2avc1mp41iso5

ICMT : vid:v0d004g10000cmbmsrjc77ubc8r79ssg

ISFT : Lavf58.47.100

Stream #0:0(und): Audio: pcm_s16le ([1][0][0][0] / 0x0001), 48000 Hz, stereo, s16, 1536 kb/s (default)

Metadata:

handler_name : Bento4 Sound Handler

encoder : Lavc58.93.100 pcm_s16le

size= 79084kB time=00:07:01.78 bitrate=1536.0kbits/s speed= 879x

video:0kB audio:79084kB subtitle:0kB other streams:0kB global headers:0kB muxing overhead: 0.000153%
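The conversion above keeps the source's 48 kHz stereo format, which is why a 7-minute clip becomes about 79 MB. Whisper models work on 16 kHz mono audio, so the WAV can be made much smaller without losing anything the model uses. A minimal sketch that builds such an ffmpeg call from Python; it assumes `ffmpeg` is on PATH, the file names are placeholders, and `build_ffmpeg_command` / `extract_audio` are names introduced here.

```python
import subprocess


def build_ffmpeg_command(src: str, dst: str) -> list:
    """Build an ffmpeg call that extracts 16 kHz mono audio from src."""
    return [
        "ffmpeg",
        "-y",            # overwrite dst if it already exists
        "-i", src,
        "-vn",           # ignore any video stream
        "-ac", "1",      # downmix to mono
        "-ar", "16000",  # resample to 16 kHz
        dst,
    ]


def extract_audio(src: str, dst: str) -> None:
    """Run ffmpeg and raise CalledProcessError if it fails."""
    subprocess.run(build_ffmpeg_command(src, dst), check=True)


if __name__ == "__main__":
    # Placeholder file names; only prints the command, does not run ffmpeg.
    print(" ".join(build_ffmpeg_command("input.mp4", "output.wav")))
```

Passing the command as a list rather than a single string avoids quoting problems with paths that contain spaces or non-ASCII characters, like the working directory used above.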

C:\2024-01-05 1106国产冲锋衣杀疯了!百元骆驼如何营销卖爆?-IC实验室-SAW2\temp2>cd c:\faster-whisper-webui

c:\faster-whisper-webui>dir

Volume in drive C is WIN10

Volume Serial Number is 9273-D6A8

Directory of c:\faster-whisper-webui

2024/02/01 16:31 <DIR> .

2024/02/01 16:31 <DIR> ..

2024/02/01 14:07 1,412 .gitattributes

2024/02/01 14:07 3,255 .gitignore

2024/02/01 14:07 190 app-local.py

2024/02/01 14:07 251 app-network.py

2024/02/01 14:07 202 app-shared.py

2024/02/01 14:07 34,314 app.py

2024/02/01 14:07 11,411 cli.py

2024/02/01 14:07 6,226 config.json5

2024/02/01 14:07 1,093 dockerfile

2024/02/01 14:07 <DIR> docs

2024/02/01 14:17 <DIR> Downloads

2024/02/01 14:07 11,558 LICENSE

2024/02/01 14:07 10,690 LICENSE.md

2024/02/01 14:16 <DIR> Models

2024/02/01 14:07 8,823 options.md

2024/02/01 14:07 8,893 README.md

2024/02/01 14:07 3,084 README_zh_CN.md

2024/02/01 14:07 116 requirements-fasterWhisper.txt

2024/02/01 14:07 177 requirements-whisper.txt

2024/02/01 14:07 116 requirements.txt

2024/02/01 14:07 1,789 setup.py

2024/02/01 14:18 <DIR> src

2024/02/01 12:29 19,337,093 test.mp4

2024/02/01 14:07 <DIR> tests

2024/02/01 14:07 418 webui-start.bat

2024/02/01 14:17 <DIR> whisper_model

2024/02/01 14:29 <DIR> __pycache__

20 File(s) 19,441,111 bytes

9 Dir(s) 187,358,117,888 bytes free

c:\faster-whisper-webui>python cli.py --model large-v2 --vad silero-vad --language Japanese --output_dir c:\faster-whisper-webui\whisper_model c:\faster-whisper-webui\Downloads\test.mp4

Using faster-whisper for Whisper

[Auto parallel] Using GPU devices None and 8 CPU cores for VAD/transcription.

Creating whisper container for faster-whisper

Using parallel devices: None

Created Silerio model

Parallel VAD: Executing chunk from 0 to 74.072 on CPU device 0

Loaded Silerio model from cache.

Getting timestamps from audio file: c:\faster-whisper-webui\Downloads\test.mp4, start: 0, duration: 74.072

Processing VAD in chunk from 00:00.000 to 01:14.072

VAD processing took 4.941727200000059 seconds

Transcribing non-speech:

[{'end': 75.0716875, 'start': 0.0}]

Parallel VAD processing took 13.50156190000007 seconds

Device None (index 0) has 1 segments

(get_merged_timestamps) Using override timestamps of size 1

Processing timestamps:

[{'end': 75.0716875, 'start': 0.0}]

Running whisper from 00:00.000 to 01:15.072 , duration: 75.0716875 expanded: 0 prompt: None language: None

Loading faster whisper model large-v2 for device None

WARNING: fp16 option is ignored by faster-whisper - use compute_type instead.

multiprocessing.pool.RemoteTraceback:

"""

Traceback (most recent call last):

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\multiprocessing\pool.py", line 125, in worker

result = (True, func(*args, **kwds))

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\multiprocessing\pool.py", line 51, in starmapstar

return list(itertools.starmap(args[0], args[1]))

File "c:\faster-whisper-webui\src\vadParallel.py", line 292, in transcribe

return super().transcribe(audio, whisperCallable, config, progressListener)

File "c:\faster-whisper-webui\src\vad.py", line 213, in transcribe

segment_result = whisperCallable.invoke(segment_audio, segment_index, segment_prompt, detected_language, progress_listener=scaled_progress_listener)

File "c:\faster-whisper-webui\src\whisper\fasterWhisperContainer.py", line 148, in invoke

for segment in segments_generator:

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\faster_whisper\transcribe.py", line 445, in generate_segments

encoder_output = self.encode(segment)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\faster_whisper\transcribe.py", line 629, in encode

return self.model.encode(features, to_cpu=to_cpu)

RuntimeError: Library cublas64_11.dll is not found or cannot be loaded

"""

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "c:\faster-whisper-webui\cli.py", line 173, in <module>

cli()

File "c:\faster-whisper-webui\cli.py", line 159, in cli

result = transcriber.transcribe_file(model, source_path, temperature=temperature, vadOptions=vadOptions, **args)

File "c:\faster-whisper-webui\app.py", line 266, in transcribe_file

result = self.process_vad(audio_path, whisperCallable, self.vad_model, process_gaps, progressListener=progressListener)

File "c:\faster-whisper-webui\app.py", line 334, in process_vad

return parallel_vad.transcribe_parallel(transcription=vadModel, audio=audio_path, whisperCallable=whisperCallable,

File "c:\faster-whisper-webui\src\vadParallel.py", line 183, in transcribe_parallel

results = results_async.get()

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\multiprocessing\pool.py", line 774, in get

raise self._value

RuntimeError: Library cublas64_11.dll is not found or cannot be loaded
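The run fails because `cublas64_11.dll` cannot be loaded. The name points to the cuBLAS library from CUDA 11, and the pip output earlier shows ctranslate2 3.24.0 is installed. One plausible cause, stated here as an assumption rather than a diagnosis, is that only a CUDA 12 toolkit is installed, which ships `cublas64_12.dll` instead. The sketch below only checks which of the two DLLs is visible on PATH; Windows searches other locations as well, so treat the result as a hint. `find_on_path` is a helper name made up for this example.

```python
import os
from pathlib import Path


def find_on_path(filename: str, path_value=None) -> list:
    """Return every directory on PATH that contains the given file."""
    if path_value is None:
        path_value = os.environ.get("PATH", "")
    hits = []
    for entry in path_value.split(os.pathsep):
        if entry and (Path(entry) / filename).is_file():
            hits.append(entry)
    return hits


if __name__ == "__main__":
    for dll in ("cublas64_11.dll", "cublas64_12.dll"):
        print(dll, "->", find_on_path(dll) or "not found on PATH")
```

If only the CUDA 12 DLL shows up, installing the CUDA 11 cuBLAS and cuDNN libraries (or adding their folder to PATH) is the direction to investigate.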

c:\faster-whisper-webui>python cli.py --model large-v2 --vad silero-vad --language Japanese --output_dir c:\faster-whisper-webui\whisper_model c:\faster-whisper-webui\Downloads\test.mp4

Using faster-whisper for Whisper

[Auto parallel] Using GPU devices None and 8 CPU cores for VAD/transcription.

Creating whisper container for faster-whisper

Using parallel devices: None

Created Silerio model

Parallel VAD: Executing chunk from 0 to 74.072 on CPU device 0

Loaded Silerio model from cache.

Getting timestamps from audio file: c:\faster-whisper-webui\Downloads\test.mp4, start: 0, duration: 74.072

Processing VAD in chunk from 00:00.000 to 01:14.072

VAD processing took 4.8260292999998455 seconds

Transcribing non-speech:

[{'end': 75.0716875, 'start': 0.0}]

Parallel VAD processing took 13.406057099999998 seconds

Device None (index 0) has 1 segments

(get_merged_timestamps) Using override timestamps of size 1

Processing timestamps:

[{'end': 75.0716875, 'start': 0.0}]

Running whisper from 00:00.000 to 01:15.072 , duration: 75.0716875 expanded: 0 prompt: None language: None

Loading faster whisper model large-v2 for device None

WARNING: fp16 option is ignored by faster-whisper - use compute_type instead.

multiprocessing.pool.RemoteTraceback:

"""

Traceback (most recent call last):

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\multiprocessing\pool.py", line 125, in worker

result = (True, func(*args, **kwds))

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\multiprocessing\pool.py", line 51, in starmapstar

return list(itertools.starmap(args[0], args[1]))

File "c:\faster-whisper-webui\src\vadParallel.py", line 292, in transcribe

return super().transcribe(audio, whisperCallable, config, progressListener)

File "c:\faster-whisper-webui\src\vad.py", line 213, in transcribe

segment_result = whisperCallable.invoke(segment_audio, segment_index, segment_prompt, detected_language, progress_listener=scaled_progress_listener)

File "c:\faster-whisper-webui\src\whisper\fasterWhisperContainer.py", line 148, in invoke

for segment in segments_generator:

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\faster_whisper\transcribe.py", line 445, in generate_segments

encoder_output = self.encode(segment)

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\site-packages\faster_whisper\transcribe.py", line 629, in encode

return self.model.encode(features, to_cpu=to_cpu)

RuntimeError: Library cublas64_11.dll is not found or cannot be loaded

"""

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "c:\faster-whisper-webui\cli.py", line 173, in <module>

cli()

File "c:\faster-whisper-webui\cli.py", line 159, in cli

result = transcriber.transcribe_file(model, source_path, temperature=temperature, vadOptions=vadOptions, **args)

File "c:\faster-whisper-webui\app.py", line 266, in transcribe_file

result = self.process_vad(audio_path, whisperCallable, self.vad_model, process_gaps, progressListener=progressListener)

File "c:\faster-whisper-webui\app.py", line 334, in process_vad

return parallel_vad.transcribe_parallel(transcription=vadModel, audio=audio_path, whisperCallable=whisperCallable,

File "c:\faster-whisper-webui\src\vadParallel.py", line 183, in transcribe_parallel

results = results_async.get()

File "C:\Users\wb491\AppData\Local\Programs\Python\Python310\lib\multiprocessing\pool.py", line 774, in get

raise self._value

RuntimeError: Library cublas64_11.dll is not found or cannot be loaded
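The `RuntimeError` above names `cublas64_11.dll`, the cuBLAS library shipped with CUDA 11.x, which the process could not locate or load. The log does not record what was changed before the next, successful run. As a hedged diagnostic sketch (the helper name `find_dll` is hypothetical and not part of faster-whisper or the web UI), the following checks whether the DLL sits in a directory on `PATH` and, on Windows, whether it actually loads. `PATH` is assumed here to be one of the places searched; a DLL copied elsewhere would not be found by this check.

```python
import ctypes
import os
import sys


def find_dll(dll_name, search_path=None):
    """Return the full path of the first dll_name found on search_path, else None."""
    if search_path is None:
        search_path = os.environ.get("PATH", "")
    for directory in search_path.split(os.pathsep):
        if not directory:
            continue  # skip empty PATH entries
        candidate = os.path.join(directory, dll_name)
        if os.path.isfile(candidate):
            return candidate
    return None


if __name__ == "__main__":
    name = "cublas64_11.dll"
    location = find_dll(name)
    print(f"{name} on PATH: {location}")
    # Loading is only attempted on Windows, where ctypes.WinDLL exists.
    if sys.platform == "win32" and location is not None:
        try:
            ctypes.WinDLL(location)
            print("loaded successfully")
        except OSError as exc:
            print(f"found but failed to load: {exc}")
```

If the file is reported as missing, a CUDA 12-only installation is one possible explanation, since that toolkit ships `cublas64_12.dll` instead.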

c:\faster-whisper-webui>python cli.py --model large-v2 --vad silero-vad --language Japanese --output_dir c:\faster-whisper-webui\whisper_model c:\faster-whisper-webui\Downloads\test.mp4

Using faster-whisper for Whisper

[Auto parallel] Using GPU devices None and 8 CPU cores for VAD/transcription.

Creating whisper container for faster-whisper

Using parallel devices: None

Created Silerio model

Parallel VAD: Executing chunk from 0 to 74.072 on CPU device 0

Loaded Silerio model from cache.

Getting timestamps from audio file: c:\faster-whisper-webui\Downloads\test.mp4, start: 0, duration: 74.072

Processing VAD in chunk from 00:00.000 to 01:14.072

VAD processing took 4.756216799999947 seconds

Transcribing non-speech:

[{'end': 75.0716875, 'start': 0.0}]

Parallel VAD processing took 13.169814100000167 seconds

Device None (index 0) has 1 segments

(get_merged_timestamps) Using override timestamps of size 1

Processing timestamps:

[{'end': 75.0716875, 'start': 0.0}]

Running whisper from 00:00.000 to 01:15.072 , duration: 75.0716875 expanded: 0 prompt: None language: None

Loading faster whisper model large-v2 for device None

WARNING: fp16 option is ignored by faster-whisper - use compute_type instead.

[00:00:00.000->00:00:03.200] 稲妻神里流太刀術免許開伝

[00:00:03.200->00:00:04.500] 神里綾香

[00:00:04.500->00:00:05.500] 参ります!

[00:00:06.600->00:00:08.200] よろしくお願いします

[00:00:08.200->00:00:12.600] こののどかな時間がもっと増えると嬉しいのですが

[00:00:13.600->00:00:15.900] わたくしって欲張りですね

[00:00:15.900->00:00:18.100] 神里家の宿命や

[00:00:18.100->00:00:19.900] 社部業の重りは

[00:00:19.900->00:00:23.600] お兄様が一人で背負うべきものではありません

[00:00:23.600->00:00:27.600] 多くの方々がわたくしを継承してくださるのは

[00:00:27.600->00:00:28.700] わたくしを

[00:00:28.700->00:00:30.900] 白鷺の姫君や

[00:00:30.900->00:00:34.500] 社部業神里家の霊章として見ているからです

[00:00:34.500->00:00:38.400] 彼らが継承しているのはわたくしの立場であって

[00:00:38.400->00:00:41.600] 綾香という一戸人とは関係ございません

[00:00:41.600->00:00:43.300] 今のわたくしは

[00:00:43.300->00:00:47.300] みなさんから信頼される人になりたいと思っています

[00:00:47.300->00:00:49.700] その気持ちを鼓舞するものは

[00:00:49.700->00:00:52.300] 肩にのしかかる銃石でも

[00:00:52.300->00:00:54.700] 他人からの期待でもございません

[00:00:54.700->00:00:56.700] あなたがすでに

[00:00:56.800->00:00:58.800] そのようなお方だからです

[00:00:58.800->00:01:00.500] 今から言うことは

[00:01:00.500->00:01:03.900] 稲妻幕府社部業神里家の肩書きに

[00:01:03.900->00:01:06.200] ふさわしくないものかもしれません

[00:01:06.200->00:01:11.100] あなたはわたくしのわがままを受け入れてくださる方だと信じています

[00:01:11.100->00:01:12.500] 神里流

[00:01:12.500->00:01:14.000] 壮烈

Whisper took 39.726560900000095 seconds

Parallel transcription took 51.383910800000194 seconds

Max line width 80

Closing parallel contexts

Closing pool of 1 processes

Closing pool of 8 processes
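To compare runs, the timing lines in the log can be turned into a throughput figure (audio seconds per second of Whisper time). The sketch below is a small log parser, assuming the `Running whisper from ... duration: ...` and `Whisper took ... seconds` line formats shown above; `speed_summary` is a hypothetical helper name.

```python
import re

# Patterns follow the console lines printed in the log above.
DURATION_RE = re.compile(r"Running whisper from .*?duration: ([0-9.]+)")
TOOK_RE = re.compile(r"^Whisper took ([0-9.]+) seconds")


def speed_summary(log_text):
    """Return (total audio seconds, total Whisper seconds) found in log_text."""
    audio = 0.0
    compute = 0.0
    for line in log_text.splitlines():
        line = line.strip()
        match = DURATION_RE.search(line)
        if match:
            audio += float(match.group(1))
            continue
        match = TOOK_RE.match(line)
        if match:
            compute += float(match.group(1))
    return audio, compute


if __name__ == "__main__":
    sample = (
        "Running whisper from 00:00.000 to 01:15.072 , duration: 75.0716875 "
        "expanded: 0 prompt: None language: None\n"
        "Whisper took 39.726560900000095 seconds\n"
    )
    audio, compute = speed_summary(sample)
    print(f"{audio:.1f} s of audio in {compute:.1f} s: {audio / compute:.2f}x real time")
```

This counts only the `Whisper took` time; the VAD pass and model loading reported on other lines are not included.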

c:\faster-whisper-webui>

c:\faster-whisper-webui>python cli.py --model large-v2 --vad silero-vad --language Japanese --output_dir c:\faster-whisper-webui\whisper_model c:\faster-whisper-webui\Downloads\下载.mp4

Using faster-whisper for Whisper

[Auto parallel] Using GPU devices None and 8 CPU cores for VAD/transcription.

Creating whisper container for faster-whisper

Using parallel devices: None

Created Silerio model

Parallel VAD: Executing chunk from 0 to 120 on CPU device 0

Parallel VAD: Executing chunk from 120 to 240 on CPU device 1

Parallel VAD: Executing chunk from 240 to 360 on CPU device 2

Parallel VAD: Executing chunk from 360 to 421.781333 on CPU device 3

Loaded Silerio model from cache.

Getting timestamps from audio file: c:\faster-whisper-webui\Downloads\下载.mp4, start: 0, duration: 120

Processing VAD in chunk from 00:00.000 to 02:00.000

Loaded Silerio model from cache.

Getting timestamps from audio file: c:\faster-whisper-webui\Downloads\下载.mp4, start: 120, duration: 240

Processing VAD in chunk from 02:00.000 to 04:00.000

Loaded Silerio model from cache.

Getting timestamps from audio file: c:\faster-whisper-webui\Downloads\下载.mp4, start: 240, duration: 360

Processing VAD in chunk from 04:00.000 to 06:00.000

Loaded Silerio model from cache.

Getting timestamps from audio file: c:\faster-whisper-webui\Downloads\下载.mp4, start: 360, duration: 421.781333

Processing VAD in chunk from 06:00.000 to 07:01.781

VAD processing took 4.5075393000001895 seconds

VAD processing took 8.374324500000057 seconds

VAD processing took 8.246470000000045 seconds

VAD processing took 8.29483479999999 seconds

Transcribing non-speech:

[{'end': 29.520000000000003, 'expand_amount': 0.0, 'start': 0.0},

{'end': 58.08, 'expand_amount': 0.0, 'start': 29.520000000000003},

{'end': 64.75200000000001, 'expand_amount': 0.0, 'start': 58.08},

{'end': 120.033, 'expand_amount': 0.0, 'start': 64.75200000000001},

{'end': 240.049, 'expand_amount': 0.0, 'start': 120.033},

{'end': 360.033, 'expand_amount': 0.0, 'start': 240.049},

{'end': 422.47, 'start': 360.033}]

Parallel VAD processing took 16.871334999999817 seconds

Device None (index 0) has 7 segments

(get_merged_timestamps) Using override timestamps of size 7

Processing timestamps:

[{'end': 29.520000000000003, 'expand_amount': 0.0, 'start': 0.0},

{'end': 58.08, 'expand_amount': 0.0, 'start': 29.520000000000003},

{'end': 64.75200000000001, 'expand_amount': 0.0, 'start': 58.08},

{'end': 120.033, 'expand_amount': 0.0, 'start': 64.75200000000001},

{'end': 240.049, 'expand_amount': 0.0, 'start': 120.033},

{'end': 360.033, 'expand_amount': 0.0, 'start': 240.049},

{'end': 422.47, 'start': 360.033}]

Running whisper from 00:00.000 to 00:29.520 , duration: 29.520000000000003 expanded: 0.0 prompt: None language: None

Loading faster whisper model large-v2 for device None

WARNING: fp16 option is ignored by faster-whisper - use compute_type instead.

[00:00:00.000->00:00:01.400] 前段時間有個巨勢橫幅

[00:00:01.400->00:00:03.000] 某某是男人最好衣媚

[00:00:03.000->00:00:03.800] 這裏的某某

[00:00:03.800->00:00:05.800] 可以替換為減肥、長髮、西裝、考研、

[00:00:05.800->00:00:07.800] 舒暢、永結無間等等等等

[00:00:07.800->00:00:09.300] 我聽到最新的壹個說法是

[00:00:09.300->00:00:12.000] 微分碎蓋加口罩加半框眼鏡加春風衣

[00:00:12.000->00:00:13.300] 等於男人最好衣媚

[00:00:13.300->00:00:14.400] 大概也就前幾年

[00:00:14.400->00:00:16.200] 春風衣還和格子襯衫並列為

[00:00:16.200->00:00:17.400] 程序員穿搭精華

[00:00:17.400->00:00:18.800] 紫紅色春風衣還被譽為

[00:00:18.800->00:00:20.000] 廣場舞大媽標配

[00:00:20.000->00:00:21.600] 駱駝牌還是我爹這個年紀的人

[00:00:21.600->00:00:22.800] 才會願意買的牌子

[00:00:22.900->00:00:24.500] 不知道風向為啥變得這麽快

[00:00:24.500->00:00:26.800] 為啥這東西突然變成男生逆襲神器

[00:00:26.800->00:00:27.900] 時尚潮流單品

[00:00:27.900->00:00:29.500] 後來我翻了壹下小紅書就懂了

Whisper took 34.66302619999988 seconds

Running whisper from 00:29.520 to 00:58.080 , duration: 28.559999999999995 expanded: 0.0 prompt: 為啥這東西突然變成男生逆襲神器 時尚潮流單品 後來我翻了壹下小紅書就懂了 language: ja

[00:00:00.000->00:00:02.800] 時尚這個時期 重點不在於衣服 在於人

[00:00:02.800->00:00:05.120] 現在小紅書上面和春風衣相關的筆記

[00:00:05.120->00:00:06.840] 照片裏的男生都是這樣的

[00:00:06.840->00:00:08.560] 這樣的 還有這樣的

[00:00:08.560->00:00:11.080] 妳們哪裏是看穿搭的 妳們明明是看臉

[00:00:11.080->00:00:12.360] 就這個造型 這個年齡

[00:00:12.360->00:00:14.560] 妳換上老頭衫也能穿出氛圍感好嗎

[00:00:14.560->00:00:17.200] 我又想起了當年郭德綱老師穿季帆西的殘劇

[00:00:17.200->00:00:19.080] 這個世界對我們這些長得不好看的人

[00:00:19.080->00:00:20.160] 還真是苛刻呢

[00:00:20.160->00:00:22.560] 所以說 我總結了壹下春風衣傳達的要領

[00:00:22.560->00:00:24.800] 大概就是壹張白凈且人畜無憾的臉

[00:00:24.800->00:00:26.560] 充足的髮量 纖細的體型

[00:00:26.560->00:00:28.800] 當然 身上的春風衣還得是駱駝的

Whisper took 10.559502200000225 seconds

Running whisper from 00:58.080 to 01:04.752 , duration: 6.672000000000011 expanded: 0.0 prompt: 充足的髮量 纖細的體型 當然 身上的春風衣還得是駱駝的 language: ja

[00:00:00.000->00:00:06.560] 去年在戶外用品界 最頂流的既不是鳥像術 也不是有校服之稱的北面 或者老牌頂流哥倫比亞 而是駱駝

Whisper took 2.5670344999998633 seconds

Running whisper from 01:04.752 to 02:00.033 , duration: 55.28099999999999 expanded: 0.0 prompt: 去年在戶外用品界 最頂流的既不是鳥像術 也不是有校服之稱的北面 或者老牌頂流哥倫比亞 而是駱駝 language: ja

[00:00:00.000->00:00:05.320] 雙十一 駱駝在天貓戶外服飾品類 拿下銷售額和銷量雙料冠軍 銷量達到百萬級

[00:00:05.320->00:00:11.240] 在抖音 駱駝銷售同比增幅高達296% 旗下主打的三合一高性價比春風衣成為爆品

[00:00:11.240->00:00:16.400] 哪怕不看雙十一 隨手一搜 駱駝在春風衣的七日銷售榜上都是土榜的存在

[00:00:16.400->00:00:19.520] 這是線上的銷售表現 至於線下 還是網友總結的好

[00:00:19.520->00:00:25.160] 如今在南方街頭的駱駝比沙漠裡的都多 爬個華山 滿山的駱駝 隨便逛個街撞山了

[00:00:25.200->00:00:30.680] 至於駱駝為啥這麼火 便宜呀 拿賣得最好的釘針同款 幻影黑三合一春風衣舉個例子

[00:00:30.680->00:00:35.880] 線下買 標牌價格2198 但是跑到網上看一下 標價就變成了699

[00:00:35.880->00:00:40.360] 至於折扣 日常也都是有的 400出頭就能買到 甚至有時候能低到300價

[00:00:40.360->00:00:46.920] 要是你還嫌貴 路上還有200塊出頭的單層春風衣 就這個價格 割上海恐怕還不夠兩次Citywalk的報名費

[00:00:46.920->00:00:53.480] 看來這個價格 再對比一下北面 1000塊錢起步 你就能理解為啥北面這麼快就被大學生踢出了校服序列了

[00:00:53.480->00:00:55.640] 我不知道現在大學生每個月生活

Whisper took 20.24641240000028 seconds

Running whisper from 02:00.033 to 04:00.049 , duration: 120.016 expanded: 0.0 prompt: 看來這個價格 再對比一下北面 1000塊錢起步 你就能理解為啥北面這麼快就被大學生踢出了校服序列了 我不知道現在大學生每個月生活 language: ja

[00:00:00.000->00:00:02.000] 反正我上學時候的生活費

[00:00:02.000->00:00:04.000] 一個月不吃不喝 也就買得起倆袖子 加一個帽子

[00:00:04.000->00:00:08.000] 難怪當年全是假北面 現在都是真駱駝 至少人家是正品啊

[00:00:08.000->00:00:13.000] 我翻了一下社交媒體 發現對駱駝的吐槽和買了駱駝的 基本上是1比1的比例

[00:00:13.000->00:00:15.000] 吐槽最多的就是衣服會掉色 還會串色

[00:00:15.000->00:00:18.000] 比如徒增洗個幾次 穿個兩天就掉光了

[00:00:18.000->00:00:22.000] 比如不同倉庫發的貨 質量參差不齊 買衣服還得看戶口 拼出身

[00:00:22.000->00:00:26.000] 至於什麼做工比較差 內檔薄 走線糙 不防水之類的 就更多了

[00:00:26.000->00:00:32.000] 但是這些吐槽並不意味著會影響駱駝的銷量 甚至還會有不少自來水表示 就這價格 要啥自行車

[00:00:32.000->00:00:37.000] 所謂性價比性價比 脫離價位談性能 這就不符合消費者的需求嘛

[00:00:37.000->00:00:41.000] 無數次價格戰告訴我們 只要肯降價 就沒有賣不出去的產品

[00:00:41.000->00:00:46.000] 一件春風衣1000多 你覺得平平無奇 500多你覺得差點意思 200塊你就秒下單了

[00:00:46.000->00:00:48.000] 到99恐怕就要拼點手速了

[00:00:48.000->00:00:50.000] 像春風衣這個品類 本來價格跨度就大

[00:00:51.000->00:00:56.000] 北面最便宜的GORE-TEX春風衣 價格3000起步 大概是同品牌最便宜春風衣的三倍價格

[00:00:56.000->00:01:00.000] 至於十足鳥 搭載了GORE-TEX的硬殼起步價就要到4500

[00:01:00.000->00:01:05.000] 而且同樣是GORE-TEX 內部也有不同的系列和檔次 做成衣服 中間的差價恐怕就夠買兩件駱駝了

[00:01:05.000->00:01:11.000] 至於智能控溫 防水拉鍊 全壓膠 更加不可能出現在駱駝這裡了 至少不會是三四百的駱駝身上會有的

[00:01:11.000->00:01:16.000] 有的價位的衣服 買的就是一個放棄幻想 吃到肚子裏的科技魚很活 是能給你省錢的

[00:01:20.000->00:01:26.000] 所以正如羅曼羅蘭所說 這世界上只有一種英雄主義 就是在認清了駱駝的本質以後 依然選擇買駱駝

[00:01:26.000->00:01:32.000] 關於駱駝的火爆 我有一些小小的看法 駱駝這個東西 它其實就是個潮牌 看看它的行銷方式就知道了

[00:01:32.000->00:01:37.000] 現在打開小紅書 日常可以看到駱駝穿搭是這樣的 加一點氛圍感 是這樣的

[00:01:37.000->00:01:40.000] 對比一下 其他品牌的風格是這樣的 這樣的

[00:01:40.000->00:01:46.000] 其實對比一下就知道了 其他品牌突出一個時程 能防風就一定要講防風 能扛動就一定要講扛動

[00:01:46.000->00:01:52.000] 但駱駝在行銷的時候 主打的就是一個城市戶外風 雖然造型是春風衣 但場景往往是在城市裏

[00:01:52.000->00:01:58.000] 哪怕在野外也要突出一個風和日麗 陽光明媚 至少不會在明顯的嚴寒 高海拔或是惡劣氣候下

[00:01:58.000->00:02:00.000] 如果用一個詞形容駱駝的行銷風格

Whisper took 44.35973899999999 seconds

Running whisper from 04:00.049 to 06:00.033 , duration: 119.98400000000001 expanded: 0.0 prompt: 哪怕在野外也要突出一個風和日麗 陽光明媚 至少不會在明顯的嚴寒 高海拔或是惡劣氣候下 如果用一個詞形容駱駝的行銷風格 language: ja

[00:00:00.000->00:00:03.900] 那就是星系 或者說他很理解自己的消費者是誰 需要什麼產品

[00:00:03.900->00:00:08.600] 從使用場景來說 駱駝的消費者買春風衣 不是真的有什麼大風大雨要去應對

[00:00:08.600->00:00:13.500] 春風衣的作用是下雨沒帶傘的時候 臨時頂個幾分鐘 讓你能圖書館跑回宿舍

[00:00:13.500->00:00:18.300] 或者是冬天騎電動車 被風吹得不行的時候 稍微扛一下風 不至於體感太冷

[00:00:18.300->00:00:23.900] 當然 他們也會出門 但大部分時候也都是去別的城市 或者在城市周邊搞搞簡單的徒步

[00:00:23.900->00:00:29.200] 這種情況下 穿個駱駝已經夠了 從購買動機來說 駱駝就更沒有必要上那些硬核科技了

[00:00:29.300->00:00:33.500] 消費者買駱駝買的是個什麼呢 不是春風衣的功能性 而是春風衣的造型

[00:00:33.500->00:00:39.500] 寬鬆的版型 能精準遮住微微隆起的小肚子 棱角分明的質感 能隱藏一切不完美的整體線條

[00:00:39.500->00:00:45.200] 選售的副作用就是顯年輕 再配上一條牛仔褲 配上一雙大黃靴 大學生的氣質就出來了

[00:00:45.200->00:00:50.700] 要是自拍的時候再配上大學宿舍洗漱台 那永遠擦不乾淨的鏡子 瞬間青春無敵了

[00:00:50.700->00:00:55.900] 說得更直白一點 人家買的是個減齡神器 所以說 吐槽穿駱駝都是假戶外愛好者的人

[00:00:55.900->00:01:03.000] 其實並沒有理解駱駝的定位 駱駝其實是給了想要入門山西穿搭 想要追逐流行的人一個最平價 決策成本最低的選擇

[00:01:03.000->00:01:07.100] 至於那些真正的硬核戶外愛好者 駱駝既沒有能力 也沒有打算觸打他們

[00:01:07.100->00:01:11.700] 反過來說 那些自駕穿越邊疆國道 或者去阿爾卑斯山區登山探險的人

[00:01:11.700->00:01:16.500] 也不太可能在戶外服飾上省錢 畢竟光是交通住宿 請假出行 成本就不低了

[00:01:16.500->00:01:21.000] 對他們來說 戶外裝備很多時候是保命用的 也就不存在跟風凹造型的必要了

[00:01:21.000->00:01:25.800] 最後我再說個題外話 年輕人追捧駱駝 一個隱藏的原因 其實是羽絨服越來越貴了

[00:01:25.800->00:01:31.900] 有媒體統計 現在國產羽絨服的平均售價已經高達881元 波斯登均價最高 接近2000元

[00:01:31.900->00:01:38.400] 而且過去幾年 國產羽絨服品牌都在轉向高端化 羽絨服市場分為8000元以上的奢侈級 2000元以下的大眾級

[00:01:38.400->00:01:43.500] 而在中間的高端級 國產品牌一直沒有存在感 所以過去幾年 波斯登天空人這些品牌

[00:01:43.500->00:01:46.600] 都把2000元到8000元這個市場當成未來的發展趨勢

[00:01:46.700->00:01:52.100] 東新證券研報顯示 從2018到2021年 波斯登均價4年漲幅達到60%以上

[00:01:52.100->00:01:55.900] 過去5個財年 這個品牌的營銷開支從20多億漲到了60多億

[00:01:55.900->00:01:59.900] 羽絨服價格往上走 年輕消費者就開始拋棄羽絨服 購買平價衝刺

Whisper took 45.460721999999805 seconds

Running whisper from 06:00.033 to 07:02.470 , duration: 62.43700000000001 expanded: 0 prompt: 羽絨服價格往上走 年輕消費者就開始拋棄羽絨服 購買平價衝刺 language: ja

[00:00:00.000->00:00:05.000] 裏面で普通の羽絨服や羽絨小夾克を着ても 大きな羽絨服よりは少ない

[00:00:05.000->00:00:07.000] 結局 今は消費社会が発達している

[00:00:07.000->00:00:11.000] 必要はありません 特定の解決方案 特定の価格の商品が実現できる

[00:00:11.000->00:00:15.000] 暖かい羽絨服はもちろん良いが 羽絨服に内装もとても暖かい

[00:00:15.000->00:00:18.000] ファッション的には 大きな羽絨服のデザイナーの品牌はとても良い

[00:00:18.000->00:00:20.000] しかし3510のピンドドーフは 大きな羽絨服でも販売できる

[00:00:20.000->00:00:23.000] 野外を走るために 56000円の羽絨服を買ってもいい

[00:00:23.000->00:00:25.000] しかし ディカノンは大きな羽絨服を扱うことができる

[00:00:25.000->00:00:27.000] だから 高価な羽絨服を買ってもいい

[00:00:27.000->00:00:29.000] 3400円のヌードルを買ってもいい

[00:00:29.000->00:00:31.000] ヌードルは少しだけ効能性がある

[00:00:31.000->00:00:33.000] しかし どうしても羽絨服なのに

[00:00:33.000->00:00:36.000] このことを理解すると 知識税の価格が分かりやすくなる

[00:00:36.000->00:00:39.000] あなたに依頼された品牌を使わないと

[00:00:39.000->00:00:41.000] あなたに依頼された商品だけが満足する

[00:00:41.000->00:00:44.000] あなたの品牌はあなたの品類の絶対の比試鏈の頂点

[00:00:44.000->00:00:46.000] このような銀行の知識税の量は必ず高い

[00:00:46.000->00:00:48.000] 目的はあなたの選択の権利を奪うこと

[00:00:48.000->00:00:51.000] あなたは比値を放棄し 平坦な考えを探し

[00:00:51.000->00:00:53.000] 他の品牌と競争しない

[00:00:53.000->00:00:56.000] 競争のない市場は知識税の量が最も高い市場

[00:00:56.000->00:00:58.000] 消費 商業動線 近在IC實驗室

[00:00:58.000->00:01:00.000] 私は館長 次回お会いしましょう

Whisper took 25.157735799999955 seconds

Parallel transcription took 196.19273870000006 seconds

Max line width 40

Closing parallel contexts

Closing pool of 1 processes

Closing pool of 8 processes

c:\faster-whisper-webui>
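In the run above, `--language Japanese` was passed for a video whose speech is Mandarin. The log shows `language: None` for the first chunk and `language: ja` for the following ones; most chunks still came out as Chinese text, but the last chunk (06:00.033 to 07:02.470) was emitted in Japanese. A quick way to spot such chunks in a long transcript is to look for kana, which Chinese text normally does not contain. This is a rough heuristic sketch with hypothetical helper names, not a language detector.

```python
def contains_kana(text):
    """True if text has any character in the Hiragana or Katakana blocks.

    Note: U+30FB (middle dot) and U+30FC (long vowel mark) also fall in this
    range and can occasionally appear in non-Japanese text.
    """
    return any("\u3040" <= ch <= "\u30ff" for ch in text)


def flag_kana_lines(lines):
    """Return the indexes of the lines that contain kana."""
    return [index for index, line in enumerate(lines) if contains_kana(line)]


if __name__ == "__main__":
    # Two lines taken from the transcript output above.
    transcript = [
        "羽絨服價格往上走 年輕消費者就開始拋棄羽絨服 購買平價衝刺",
        "結局 今は消費社会が発達している",
    ]
    print(flag_kana_lines(transcript))
```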

c:\faster-whisper-webui>python cli.py --model large-v2 --vad silero-vad --language Chinese --output_dir c:\faster-whisper-webui\whisper_model c:\faster-whisper-webui\Downloads\chi.mp4

Using faster-whisper for Whisper

[Auto parallel] Using GPU devices None and 8 CPU cores for VAD/transcription.

Creating whisper container for faster-whisper

Using parallel devices: None

Created Silerio model

Parallel VAD: Executing chunk from 0 to 120 on CPU device 0

Parallel VAD: Executing chunk from 120 to 240 on CPU device 1

Parallel VAD: Executing chunk from 240 to 360 on CPU device 2

Parallel VAD: Executing chunk from 360 to 421.781333 on CPU device 3

Loaded Silerio model from cache.

Getting timestamps from audio file: c:\faster-whisper-webui\Downloads\chi.mp4, start: 0, duration: 120

Processing VAD in chunk from 00:00.000 to 02:00.000

Loaded Silerio model from cache.

Getting timestamps from audio file: c:\faster-whisper-webui\Downloads\chi.mp4, start: 120, duration: 240

Processing VAD in chunk from 02:00.000 to 04:00.000

Loaded Silerio model from cache.

Getting timestamps from audio file: c:\faster-whisper-webui\Downloads\chi.mp4, start: 360, duration: 421.781333

Loaded Silerio model from cache.

Processing VAD in chunk from 06:00.000 to 07:01.781

Getting timestamps from audio file: c:\faster-whisper-webui\Downloads\chi.mp4, start: 240, duration: 360

Processing VAD in chunk from 04:00.000 to 06:00.000

VAD processing took 4.639110800000253 seconds

VAD processing took 8.159098900000117 seconds

VAD processing took 8.465097299999798 seconds

VAD processing took 8.469800100000157 seconds

Transcribing non-speech:

[{'end': 29.520000000000003, 'expand_amount': 0.0, 'start': 0.0},

{'end': 58.08, 'expand_amount': 0.0, 'start': 29.520000000000003},

{'end': 64.75200000000001, 'expand_amount': 0.0, 'start': 58.08},

{'end': 120.033, 'expand_amount': 0.0, 'start': 64.75200000000001},

{'end': 240.049, 'expand_amount': 0.0, 'start': 120.033},

{'end': 360.033, 'expand_amount': 0.0, 'start': 240.049},

{'end': 422.47, 'start': 360.033}]

Parallel VAD processing took 17.05337690000033 seconds

Device None (index 0) has 7 segments

(get_merged_timestamps) Using override timestamps of size 7

Processing timestamps:

[{'end': 29.520000000000003, 'expand_amount': 0.0, 'start': 0.0},

{'end': 58.08, 'expand_amount': 0.0, 'start': 29.520000000000003},

{'end': 64.75200000000001, 'expand_amount': 0.0, 'start': 58.08},

{'end': 120.033, 'expand_amount': 0.0, 'start': 64.75200000000001},

{'end': 240.049, 'expand_amount': 0.0, 'start': 120.033},

{'end': 360.033, 'expand_amount': 0.0, 'start': 240.049},

{'end': 422.47, 'start': 360.033}]

Running whisper from 00:00.000 to 00:29.520 , duration: 29.520000000000003 expanded: 0.0 prompt: None language: None

Loading faster whisper model large-v2 for device None

WARNING: fp16 option is ignored by faster-whisper - use compute_type instead.

[00:00:00.000->00:00:01.400] 前段時間有個句式很火

[00:00:01.400->00:00:03.000] 某某是男人最好的衣媚

[00:00:03.000->00:00:03.800] 這裏的某某

[00:00:03.800->00:00:05.600] 可以替換為減肥 長髮 西裝

[00:00:05.600->00:00:07.800] 考研 舒暢 永結無間等等等等

[00:00:07.800->00:00:09.200] 我聽到最新的一個說法是

[00:00:09.200->00:00:12.000] 微分碎蓋加口罩加半框眼鏡加春風衣

[00:00:12.000->00:00:13.400] 等於男人最好的衣媚

[00:00:13.400->00:00:14.400] 大概也就前幾年

[00:00:14.400->00:00:16.200] 春風衣還和格子襯衫並列為

[00:00:16.200->00:00:17.400] 程序員穿搭精華

[00:00:17.400->00:00:18.800] 紫紅色春風衣還被譽為

[00:00:18.800->00:00:20.000] 廣場舞大媽標配

[00:00:20.000->00:00:21.600] 駱駝牌還是我爹這個年紀的人

[00:00:21.600->00:00:22.800] 才會願意買的牌子

[00:00:22.800->00:00:24.400] 不知道風向為啥變得這麽快

[00:00:24.600->00:00:26.800] 為啥這東西突然變成男生逆襲神器

[00:00:26.800->00:00:27.800] 時尚潮流單品

[00:00:27.800->00:00:29.400] 後來我翻了一下小紅書就懂了

Whisper took 40.29062799999974 seconds

Running whisper from 00:29.520 to 00:58.080 , duration: 28.559999999999995 expanded: 0.0 prompt: 為啥這東西突然變成男生逆襲神器 時尚潮流單品 後來我翻了一下小紅書就懂了 language: zh

[00:00:00.000->00:00:02.800] 時尚這個時期 重點不在於衣服 在於人

[00:00:02.800->00:00:05.120] 現在小紅書上面和春風衣相關的筆記

[00:00:05.120->00:00:06.680] 照片裏的男生都是這樣的

[00:00:06.680->00:00:07.560] 這樣的

[00:00:07.560->00:00:08.560] 還有這樣的

[00:00:08.560->00:00:09.920] 你們哪裏是看穿搭的

[00:00:09.920->00:00:11.080] 你們明明是看臉

[00:00:11.080->00:00:12.360] 就這個造型 這個年齡

[00:00:12.360->00:00:14.560] 你換上老頭衫也能穿出氛圍感好嗎

[00:00:14.560->00:00:17.200] 我又想起了當年郭德綱老師穿季帆西的殘劇

[00:00:17.200->00:00:19.080] 這個世界對我們這些長得不好看的人

[00:00:19.080->00:00:20.160] 還真是苛刻呢

[00:00:20.160->00:00:22.560] 所以說我總結了一下春風衣傳達的要領

[00:00:22.560->00:00:24.800] 大概就是一張白淨且人畜無憾的臉

[00:00:24.800->00:00:26.560] 充足的髮量 纖細的體型

[00:00:26.560->00:00:28.800] 當然身上的春風衣還得是駱駝的

Whisper took 10.647091300000284 seconds

Running whisper from 00:58.080 to 01:04.752 , duration: 6.672000000000011 expanded: 0.0 prompt: 充足的髮量 纖細的體型 當然身上的春風衣還得是駱駝的 language: zh

[00:00:00.000->00:00:02.000] 去年在戶外用品界最頂流的

[00:00:02.000->00:00:03.000] 既不是鳥像鼠

[00:00:03.000->00:00:04.600] 也不是有校服之稱的北面

[00:00:04.600->00:00:06.080] 或者老牌頂流哥倫比亞

[00:00:06.080->00:00:06.600] 而是駱駝

Whisper took 2.689283200000318 seconds

Running whisper from 01:04.752 to 02:00.033 , duration: 55.28099999999999 expanded: 0.0 prompt: 也不是有校服之稱的北面 或者老牌頂流哥倫比亞 而是駱駝 language: zh

[00:00:00.000->00:00:02.320] 雙十一 駱駝在天貓戶外服飾品類

[00:00:02.320->00:00:05.320] 拿下銷售額和銷量雙料冠軍 銷量達到百萬級

[00:00:05.320->00:00:08.520] 在抖音 駱駝銷售同比增幅高達296%

[00:00:08.520->00:00:11.240] 旗下主打的三合一高性價比春風衣成為爆品

[00:00:11.240->00:00:13.320] 哪怕不看雙十一 隨手一搜

[00:00:13.320->00:00:16.400] 駱駝在春風衣的七日銷售榜上都是圖榜的存在

[00:00:16.400->00:00:19.520] 這是線上的銷售表現 至於線下還是網友總結的好

[00:00:19.520->00:00:22.040] 如今在南方街頭的駱駝比沙漠裡的都多

[00:00:22.080->00:00:25.200] 塔克化山 滿山的駱駝 隨便逛個街撞山了

[00:00:25.200->00:00:27.120] 至於駱駝為啥這麼火 便宜啊

[00:00:27.120->00:00:30.680] 拿賣得最好的丁真同款 幻影黑三合一春風衣舉個例子

[00:00:30.680->00:00:34.320] 線下買 標牌價格2198 但是跑到網上看一下

[00:00:34.320->00:00:37.560] 標價就變成了699 至於折扣 日常也都是有的

[00:00:37.560->00:00:40.360] 400出頭就能買到 甚至有時候能低到300價

[00:00:40.360->00:00:43.560] 要是你還嫌貴 路上還有200塊出頭的單層春風衣

[00:00:43.560->00:00:46.920] 就這個價格 割上海恐怕還不夠兩次Citywalk的報名費

[00:00:46.920->00:00:48.880] 看了這個價格 再對比一下北面

[00:00:48.960->00:00:51.320] 1000塊錢起步 你就能理解為啥北面

[00:00:51.320->00:00:53.560] 這麼快就被大學生踢出了校服序列了

[00:00:53.560->00:00:55.280] 我不知道現在大學生每個月生活費

Whisper took 23.000137900000027 seconds

Running whisper from 02:00.033 to 04:00.049 , duration: 120.016 expanded: 0.0 prompt: 這麼快就被大學生踢出了校服序列了 我不知道現在大學生每個月生活費 language: zh

[00:00:00.000->00:00:02.000] 反正按照我上學時候的生活費

[00:00:02.000->00:00:03.000] 一個月不吃不喝

[00:00:03.000->00:00:04.000] 也就買得起兩袖子

[00:00:04.000->00:00:05.000] 加一個帽子

[00:00:05.000->00:00:06.000] 難怪當年全是假北面

[00:00:06.000->00:00:07.000] 現在都是真駱駝

[00:00:07.000->00:00:08.000] 至少人家是正品啊

[00:00:08.000->00:00:10.000] 我翻了一下社交媒體

[00:00:10.000->00:00:11.000] 發現對駱駝的吐槽

[00:00:11.000->00:00:12.000] 和買了駱駝的

[00:00:12.000->00:00:13.000] 基本上是1比1的比例

[00:00:13.000->00:00:14.000] 吐槽最多的就是

[00:00:14.000->00:00:15.000] 衣服會掉色

[00:00:15.000->00:00:16.000] 還會串色

[00:00:16.000->00:00:17.000] 比如圖層洗個幾次

[00:00:17.000->00:00:18.000] 穿個兩天就掉光了

[00:00:18.000->00:00:19.000] 比如不同倉庫發的貨

[00:00:19.000->00:00:20.000] 質量參差不齊

[00:00:20.000->00:00:21.000] 買衣服還得看戶口

[00:00:21.000->00:00:22.000] 拼出身

[00:00:22.000->00:00:23.000] 至於什麼做工比較差

[00:00:23.000->00:00:24.000] 內檔薄

[00:00:24.000->00:00:25.000] 走線糙

[00:00:25.000->00:00:26.000] 不防水之類的

[00:00:26.000->00:00:27.000] 就更多了

[00:00:27.000->00:00:28.000] 但是這些吐槽

[00:00:28.000->00:00:29.000] 並不意味著會影響

[00:00:29.000->00:00:30.000] 駱駝的銷量

[00:00:30.000->00:00:31.000] 甚至還會有不少自來水表示

[00:00:31.000->00:00:32.000] 就這價格

[00:00:32.000->00:00:33.000] 要啥自行車

[00:00:33.000->00:00:34.000] 所謂性價比性價比

[00:00:34.000->00:00:35.000] 脫離價位談性質

[00:00:35.000->00:00:37.000] 這就不符合消費者的需求嘛

[00:00:37.000->00:00:38.000] 無數次價格戰告訴我們

[00:00:38.000->00:00:39.000] 只要肯降價

[00:00:39.000->00:00:41.000] 就沒有賣不出去的產品

[00:00:41.000->00:00:42.000] 一件春風衣

[00:00:42.000->00:00:43.000] 1000多

[00:00:43.000->00:00:44.000] 你覺得平平無奇

[00:00:44.000->00:00:45.000] 500多你覺得差點意思

[00:00:45.000->00:00:46.000] 200塊你就秒下單了

[00:00:46.000->00:00:47.000] 到99

[00:00:47.000->00:00:48.000] 恐怕就要拼點手速了

[00:00:48.000->00:00:49.000] 像春風衣這個品類

[00:00:49.000->00:00:50.000] 本來價格跨度就大

[00:00:51.000->00:00:52.000] 北面最便宜的

[00:00:52.000->00:00:53.000] GORE-TEX春風衣

[00:00:53.000->00:00:54.000] 價格3000起步

[00:00:54.000->00:00:55.000] 大概是同品牌

[00:00:55.000->00:00:56.000] 最便宜春風衣的三倍價格

[00:00:56.000->00:00:57.000] 至於十足鳥

[00:00:57.000->00:00:58.000] 搭載了GORE-TEX的

[00:00:58.000->00:00:59.000] 硬殼起步價

[00:00:59.000->00:01:00.000] 就要到4500

[00:01:00.000->00:01:01.000] 而且同樣是GORE-TEX

[00:01:01.000->00:01:03.000] 內部也有不同的系列和檔次

[00:01:03.000->00:01:04.000] 做成衣服

[00:01:04.000->00:01:05.000] 中間的插架

[00:01:05.000->00:01:06.000] 恐怕就夠買兩件駱駝了

[00:01:06.000->00:01:07.000] 至於智能控溫

[00:01:07.000->00:01:08.000] 防水拉鍊

[00:01:08.000->00:01:09.000] 全壓膠

[00:01:09.000->00:01:10.000] 更加不可能出現在駱駝這裡了

[00:01:10.000->00:01:11.000] 至少不會是

[00:01:11.000->00:01:12.000] 三四百的駱駝身上會有的

[00:01:12.000->00:01:13.000] 有的價位的衣服

[00:01:14.000->00:01:16.000] 吃到肚子裡的科技魚很活

[00:01:16.000->00:01:17.000] 是能給你省錢的

[00:01:17.000->00:01:18.000] 穿在身上的科技魚很活

[00:01:18.000->00:01:20.000] 裝裝件件都是要加錢的

[00:01:20.000->00:01:21.000] 所以正如羅曼羅蘭所說

[00:01:21.000->00:01:23.000] 這世界上只有一種英雄主義

[00:01:23.000->00:01:25.000] 就是在認清了駱駝的本質以後

[00:01:25.000->00:01:26.000] 依然選擇買駱駝

[00:01:26.000->00:01:27.000] 關於駱駝的火爆

[00:01:27.000->00:01:28.000] 我有一些小小的看法

[00:01:28.000->00:01:29.000] 駱駝這個東西

[00:01:29.000->00:01:30.000] 它其實就是個潮牌

[00:01:30.000->00:01:32.000] 看看它的營銷方式就知道了

[00:01:32.000->00:01:33.000] 現在打開小紅書

[00:01:33.000->00:01:35.000] 日常可以看到駱駝穿搭是這樣的

[00:01:35.000->00:01:36.000] 加一點氛圍感

[00:01:36.000->00:01:37.000] 是這樣的

[00:01:37.000->00:01:38.000] 對比一下

[00:01:38.000->00:01:39.000] 其他品牌的風格是這樣的

[00:01:39.000->00:01:40.000] 這樣的

[00:01:40.000->00:01:41.000] 其實對比一下就知道了

[00:01:41.000->00:01:42.000] 其他品牌突出一個時程

[00:01:42.000->00:01:44.000] 能防風就一定要講防風

[00:01:44.000->00:01:46.000] 能扛凍就一定要講扛凍

[00:01:46.000->00:01:47.000] 但駱駝在營銷的時候

[00:01:47.000->00:01:49.000] 主打的就是一個城市戶外風

[00:01:49.000->00:01:50.000] 雖然造型是春風衣

[00:01:50.000->00:01:52.000] 但場景往往是在城市裏

[00:01:52.000->00:01:53.000] 哪怕在野外

[00:01:53.000->00:01:54.000] 也要突出一個風和日麗

[00:01:54.000->00:01:55.000] 陽光明媚

[00:01:55.000->00:01:56.000] 至少不會在明顯的

[00:01:56.000->00:01:57.000] 嚴寒 高海拔

[00:01:57.000->00:01:58.000] 或是惡劣氣候下

[00:01:58.000->00:01:59.000] 如果用一個詞

[00:01:59.000->00:02:00.000] 形容駱駝的營銷風格

Whisper took 52.58393429999933 seconds

Running whisper from 04:00.049 to 06:00.033 , duration: 119.98400000000001 expanded: 0.0 prompt: 或是惡劣氣候下 如果用一個詞 形容駱駝的營銷風格 language: zh

[00:00:00.000->00:00:01.000] 那就是欣喜

[00:00:01.000->00:00:03.000] 或者說他很理解自己的消費者是誰

[00:00:03.000->00:00:04.000] 需要什麼產品

[00:00:04.000->00:00:05.200] 從使用場景來說

[00:00:05.200->00:00:06.600] 駱駝的消費者買春風衣

[00:00:06.600->00:00:08.700] 不是真的有什麼大風大雨要去應對

[00:00:08.700->00:00:10.900] 春風衣的作用是下雨沒帶傘的時候

[00:00:10.900->00:00:12.000] 臨時頂個幾分鐘

[00:00:12.000->00:00:13.600] 讓你能圖書館跑回宿舍

[00:00:13.600->00:00:14.900] 或者是冬天騎電動車

[00:00:14.900->00:00:16.200] 被風吹的不行的時候

[00:00:16.200->00:00:17.200] 稍微扛一下風

[00:00:17.200->00:00:18.400] 不至於體感太冷

[00:00:18.400->00:00:19.700] 當然他們也會出門

[00:00:19.700->00:00:21.800] 但大部分時候也都是去別的城市

[00:00:21.800->00:00:24.000] 或者在城市周邊搞搞簡單的徒步

[00:00:24.000->00:00:24.800] 這種情況下

[00:00:24.800->00:00:25.900] 穿個駱駝已經夠了

[00:00:25.900->00:00:27.100] 從購買動機來說

[00:00:27.100->00:00:29.300] 駱駝就更沒有必要上那些硬核科技了

[00:00:29.300->00:00:30.900] 消費者買駱駝買的是個什麼呢

[00:00:30.900->00:00:32.200] 不是春風衣的功能性

[00:00:32.200->00:00:33.500] 而是春風衣的造型

[00:00:33.500->00:00:34.300] 寬鬆的版型

[00:00:34.300->00:00:36.300] 能精準遮住微微隆起的小肚子

[00:00:36.300->00:00:37.400] 棱角分明的質感

[00:00:37.400->00:00:39.500] 能隱藏一切不完美的整體線條

[00:00:39.500->00:00:41.300] 選秀的副作用就是顯年輕

[00:00:41.300->00:00:42.600] 再配上一條牛仔褲

[00:00:42.600->00:00:43.700] 配上一雙大黃靴

[00:00:43.700->00:00:45.200] 大學生的氣質就出來了

[00:00:45.200->00:00:46.100] 要是自拍的時候

[00:00:46.100->00:00:47.700] 再配上大學宿舍洗漱臺

[00:00:47.700->00:00:49.300] 那永遠擦不乾淨的鏡子

[00:00:49.300->00:00:50.700] 瞬間青春無敵了

[00:00:50.700->00:00:51.700] 說得更直白一點

[00:00:51.700->00:00:53.300] 人家買的是個減齡神器

[00:00:53.300->00:00:53.800] 所以說

[00:00:53.800->00:00:55.900] 吐槽穿駱駝都是假戶外愛好者的人

[00:00:55.900->00:00:57.600] 其實並沒有理解駱駝的定位

[00:00:57.600->00:00:59.800] 駱駝其實是給了想要入門山西穿搭

[00:00:59.800->00:01:01.700] 想要追逐流行的人一個最平價

[00:01:01.700->00:01:03.100] 決策成本最低的選擇

[00:01:03.100->00:01:04.900] 至於那些真正的硬核戶外愛好者

[00:01:04.900->00:01:05.800] 駱駝既沒有能力

[00:01:05.800->00:01:07.200] 也沒有打算觸打他們

[00:01:07.200->00:01:07.900] 反過來說

[00:01:07.900->00:01:09.500] 那些自駕穿越邊疆國道

[00:01:09.500->00:01:11.800] 或者去阿爾卑斯山區登山探險的人

[00:01:11.800->00:01:13.700] 也不太可能在戶外服飾上省錢

[00:01:13.700->00:01:14.900] 畢竟光是交通住宿

[00:01:14.900->00:01:15.600] 請假出行

[00:01:15.600->00:01:16.600] 成本就不低了

[00:01:16.600->00:01:17.300] 對他們來說

[00:01:17.300->00:01:19.100] 戶外裝備很多時候是保命用的

[00:01:19.100->00:01:21.100] 也就不存在跟風凹造型的必要了

[00:01:21.100->00:01:22.400] 最後我再說個題外話

[00:01:22.400->00:01:23.400] 年輕人追捧駱駝

[00:01:23.400->00:01:24.300] 一個隱藏的原因

[00:01:24.300->00:01:25.900] 其實是羽絨服越來越貴了

[00:01:25.900->00:01:26.700] 有媒體統計

[00:01:26.700->00:01:28.400] 現在國產羽絨服的平均售價

[00:01:28.400->00:01:30.100] 已經高達881元

[00:01:30.100->00:01:31.200] 波斯登均價最高

[00:01:31.200->00:01:32.000] 接近2000元

[00:01:32.000->00:01:32.900] 而且過去幾年

[00:01:32.900->00:01:34.900] 國產羽絨服品牌都在轉向高端化

[00:01:34.900->00:01:37.200] 羽絨服市場分為8000元以上的奢侈級

[00:01:37.200->00:01:38.600] 2000元以下的大眾級

[00:01:38.600->00:01:39.800] 而在中間的高端級

[00:01:39.800->00:01:41.300] 國產品牌一直沒有存在感

[00:01:41.300->00:01:42.100] 所以過去幾年

[00:01:42.100->00:01:43.600] 波斯登天空人這些品牌

[00:01:43.600->00:01:45.200] 都把2000元到8000元這個市場

[00:01:45.200->00:01:46.700] 當成未來的發展趨勢

[00:01:46.700->00:01:48.000] 東新證券研報顯示

[00:01:48.000->00:01:49.600] 從2018到2021年

[00:01:49.600->00:01:52.200] 波斯登均價4年漲幅達到60%以上

[00:01:52.200->00:01:53.100] 過去5個財年

[00:01:53.100->00:01:54.200] 這個品牌的營銷開支

[00:01:54.200->00:01:56.000] 從20多億漲到了60多億

[00:01:56.000->00:01:57.200] 羽絨服價格往上走

[00:01:57.200->00:01:59.200] 年輕消費者就開始拋棄羽絨服

[00:01:59.200->00:02:00.000] 購買平價衝刺

Whisper took 51.417154000000664 seconds

Running whisper from 06:00.033 to 07:02.470 , duration: 62.43700000000001 expanded: 0 prompt: 羽絨服價格往上走 年輕消費者就開始拋棄羽絨服 購買平價衝刺 language: zh

[00:00:00.000->00:00:03.000] 裡面再穿個普通價位的搖立絨或者羽絨小夾克

[00:00:03.000->00:00:05.000] 也不比大幾千的羽絨服差多少

[00:00:05.000->00:00:07.000] 說到底 現在消費社會發達了

[00:00:07.000->00:00:09.000] 沒有什麼需求是一定要某種特定的解決方案

[00:00:09.000->00:00:11.000] 特定價位的商品才能實現的

[00:00:11.000->00:00:13.000] 要保暖 羽絨服固然很好

[00:00:13.000->00:00:15.000] 但春風衣加一些內搭也很暖和

[00:00:15.000->00:00:18.000] 要時尚 大幾千塊錢的設計師品牌非常不錯

[00:00:18.000->00:00:20.000] 但三五十的拼多多服飾搭得好也能出彩

[00:00:20.000->00:00:23.000] 要去野外徒步 花五六千買鳥也可以

[00:00:23.000->00:00:25.000] 但迪卡儂也足以應付大多數狀況

[00:00:25.000->00:00:27.000] 所以說 花高價買春風衣當然也OK

[00:00:27.000->00:00:29.000] 三四百買件駱駝也是可以接受的選擇

[00:00:29.000->00:00:31.000] 何況駱駝也多多少少有一些功能性

[00:00:31.000->00:00:33.000] 畢竟它再怎麼樣還是個春風衣

[00:00:33.000->00:00:36.000] 理解了這個事情就很容易分辨什麼是智商稅的

[00:00:36.000->00:00:38.000] 那些向你灌輸非某個品牌不用

[00:00:38.000->00:00:41.000] 告訴你某個需求只有某個產品才能滿足

[00:00:41.000->00:00:44.000] 某個品牌就是某個品類絕對的鄙視鏈頂端

[00:00:44.000->00:00:46.000] 這類行銷的智商稅含量必然是很高的

[00:00:46.000->00:00:48.000] 它的目的是剝奪你選擇的權利

[00:00:48.000->00:00:51.000] 讓你主動放棄比價和尋找平T的想法

[00:00:51.000->00:00:53.000] 從而避免與其他品牌競爭

[00:00:53.000->00:00:56.000] 而沒有競爭的市場才是智商稅含量最高的市場

[00:00:56.000->00:00:58.000] 消費 商業動線盡在IC實驗室

[00:00:58.000->00:01:00.000] 我是館長 我們下期再見

Whisper took 23.861680400000296 seconds

Parallel transcription took 217.61452729999974 seconds

Max line width 40

Closing parallel contexts

Closing pool of 1 processes

Closing pool of 8 processes

c:\faster-whisper-webui>
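The segment timestamps printed on the console restart at `00:00:00.000` for every chunk, while each `Running whisper from ... to ...` line gives the chunk's position in the file. The sketch below maps a printed timestamp back to file time, assuming the printed values are offsets from the chunk start. That is an inference from the log, not something checked against the web UI's source, and `parse_hms`, `format_hms`, and `to_absolute` are hypothetical names.

```python
def parse_hms(stamp):
    """Convert 'HH:MM:SS.mmm' to seconds."""
    hours, minutes, seconds = stamp.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)


def format_hms(total_seconds):
    """Convert seconds to 'HH:MM:SS.mmm', rounding to the nearest millisecond."""
    millis = round(total_seconds * 1000)
    hours, rem = divmod(millis, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    seconds, millis = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}.{millis:03d}"


def to_absolute(stamp, chunk_start_seconds):
    """Shift a chunk-relative timestamp by the chunk's start time in the file."""
    return format_hms(parse_hms(stamp) + chunk_start_seconds)


if __name__ == "__main__":
    # Second chunk in the log starts at 29.52 s; its segment at 00:00:02.800
    # would then sit about 32.3 s into the file.
    print(to_absolute("00:00:02.800", 29.52))
```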