This note shows how to clamp tensor values with maximum/minimum, clip_by_value, and clip_by_norm.

import tensorflow as tf

import numpy as np

tf.__version__

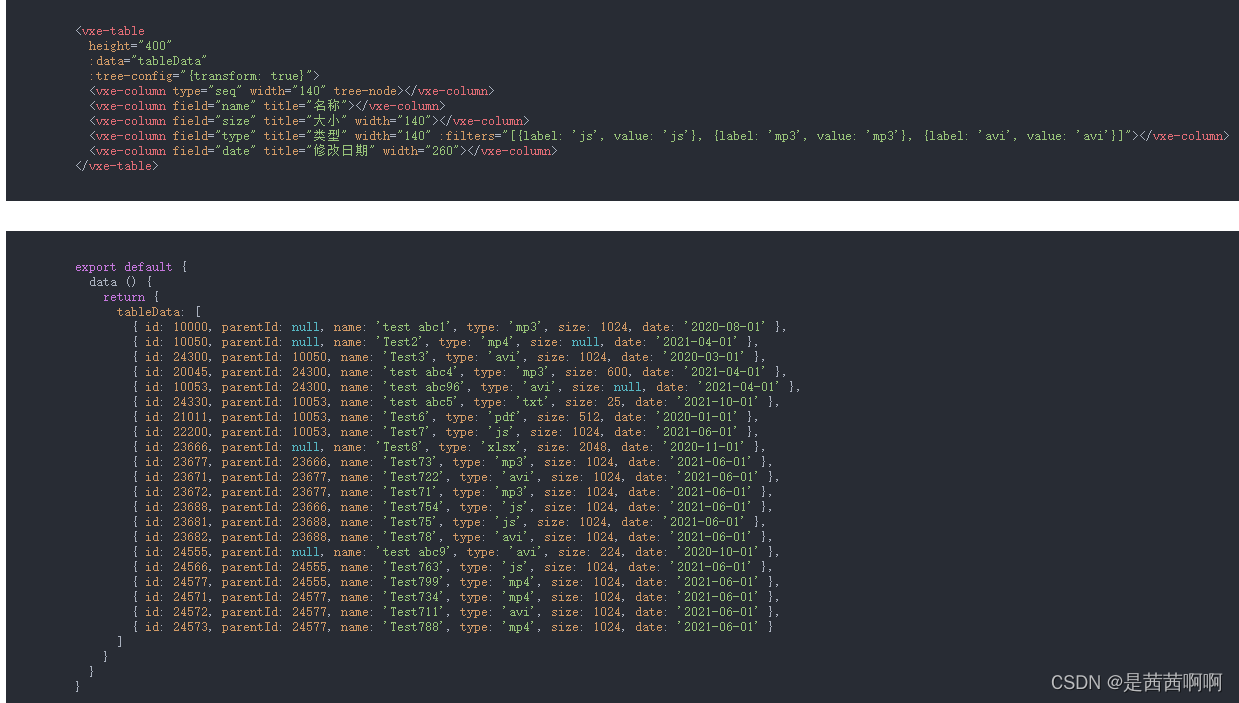

# Use maximum/minimum to enforce a lower or upper bound

tensor = tf.random.shuffle(tf.range(10))

print(tensor)

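# maximum(x, y, name=None)

# Compares x and y element-wise and keeps the larger value; useful for guaranteeing that no element falls below a given value (a lower bound)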

#https://blog.csdn.net/qq_36379719/article/details/104321914

print("=====tf.maximum(tensor, 5):\n", tf.maximum(tensor, 5).numpy())

print("=====tf.maximum(tensor, [6,6,6,5,5,5,4,4,4,3]):\n", tf.maximum(tensor, [6,6,6,5,5,5,4,4,4,3]).numpy())

# minimum does the opposite of maximum; useful for guaranteeing that no element exceeds a given value (an upper bound)

print("=====tf.minimum(tensor, 5):\n", tf.minimum(tensor, 5).numpy())

print("=====tf.minimum(tensor, [6,6,6,5,5,5,4,4,4,3]):\n", tf.minimum(tensor, [6,6,6,5,5,5,4,4,4,3]).numpy())

# clip_by_value takes both a lower and an upper bound

# The same result can be obtained by combining maximum and minimum

tensor = tf.random.shuffle(tf.range(10))

print(tensor)

# Clamp the elements of tensor to the interval [2, 5]

print("=====tf.clip_by_value(tensor,2,5):\n", tf.clip_by_value(tensor, 2, 5).numpy())

# Multi-dimensional tensor

tensor = tf.random.uniform([2,3,3], maxval=10, dtype=tf.int32)

print(tensor)

print("=====tf.clip_by_value(tensor,2,5):\n", tf.clip_by_value(tensor, 2, 5).numpy())

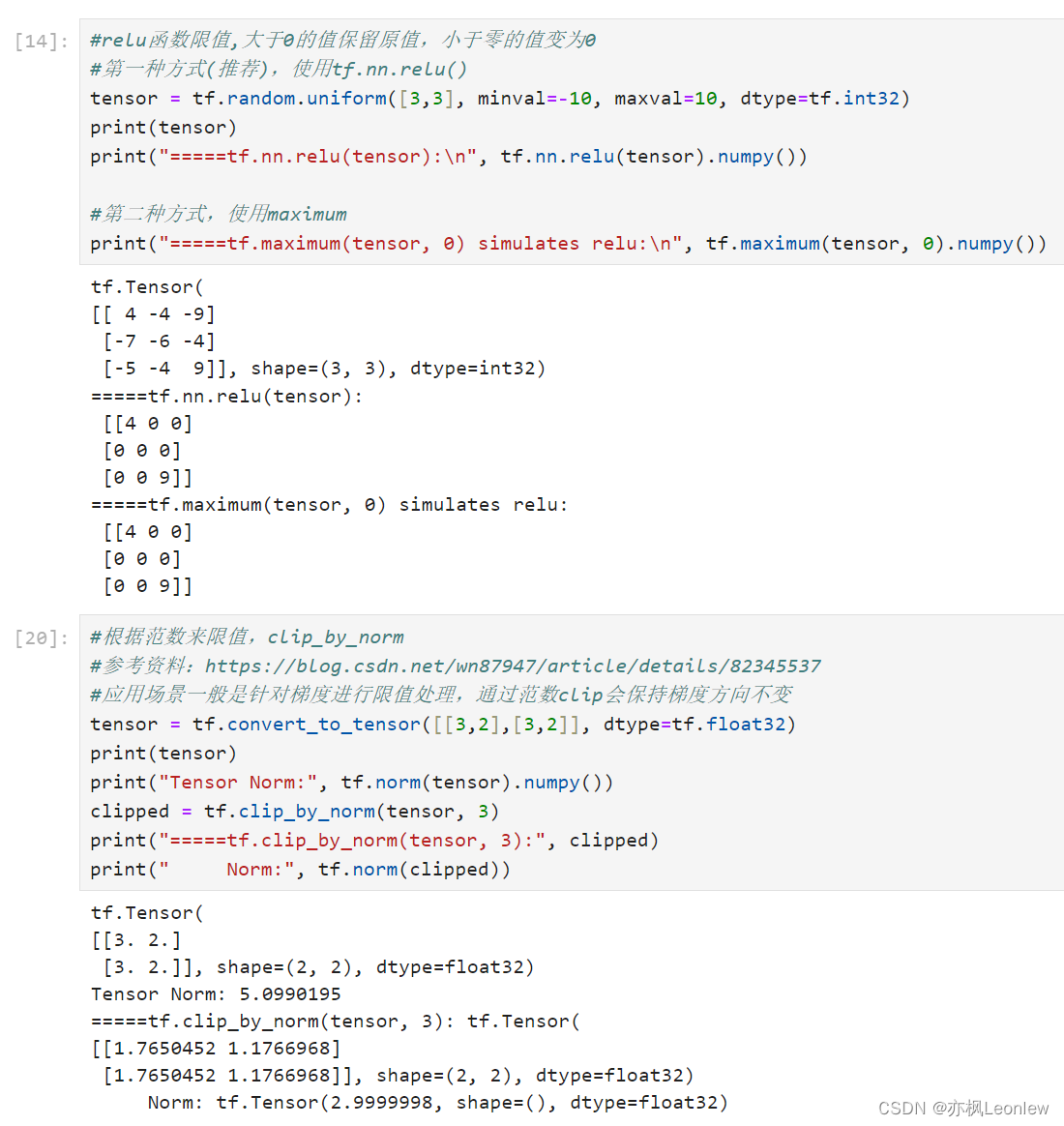
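The comment above notes that clip_by_value can be built from maximum and minimum. A minimal sketch of that equivalence follows, using a fixed tensor instead of a shuffled one so the result is reproducible. The variable names `x`, `composed`, and `clipped` are chosen here for illustration only.

```python
import tensorflow as tf

# Fixed input (an assumption for reproducibility; the note itself uses a shuffled range).
x = tf.constant([0, 1, 2, 3, 4, 5, 6, 7, 8, 9])

# tf.maximum(x, 2) raises every element below 2 up to 2 (lower bound).
# tf.minimum(..., 5) then lowers every element above 5 down to 5 (upper bound).
composed = tf.minimum(tf.maximum(x, 2), 5)

# The built-in op applies both bounds in one call.
clipped = tf.clip_by_value(x, 2, 5)

print(composed.numpy())  # [2 2 2 3 4 5 5 5 5 5]
print(clipped.numpy())   # [2 2 2 3 4 5 5 5 5 5]
```

Both lines print the same values, which is why clip_by_value is usually the more readable choice when both bounds are needed.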

# Clamping with relu: values greater than 0 are kept unchanged, values less than 0 become 0

# Option 1 (recommended): use tf.nn.relu()

tensor = tf.random.uniform([3,3], minval=-10, maxval=10, dtype=tf.int32)

print(tensor)

print("=====tf.nn.relu(tensor):\n", tf.nn.relu(tensor).numpy())

# Option 2: use maximum

print("=====tf.maximum(tensor, 0) simulates relu:\n", tf.maximum(tensor, 0).numpy())

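# Clamping by norm: clip_by_norm

# Reference: https://blog.csdn.net/wn87947/article/details/82345537

# Typically used to clip gradients; clipping by norm rescales the whole tensor, so the gradient direction is unchanged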

tensor = tf.convert_to_tensor([[3,2],[3,2]], dtype=tf.float32)

print(tensor)

print("Tensor Norm:", tf.norm(tensor).numpy())

clipped = tf.clip_by_norm(tensor, 3)

print("=====tf.clip_by_norm(tensor, 3):", clipped)

print(" Norm:", tf.norm(clipped))

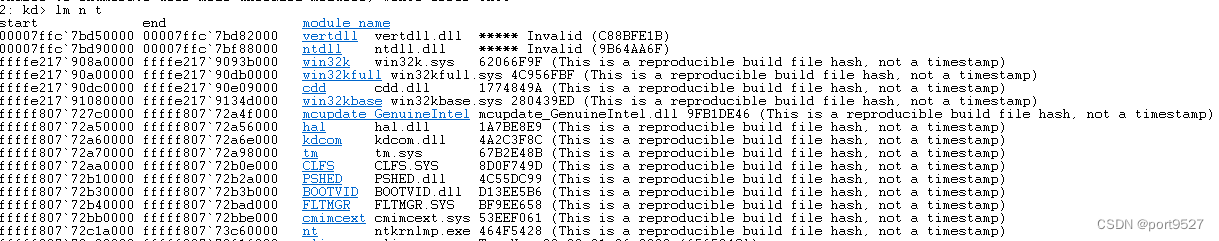
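To make the "direction is unchanged" point concrete, here is a rough sketch that reproduces clip_by_norm by hand and then applies it to a gradient. The helper `manual_clip_by_norm`, the variable `w`, and the toy loss are hypothetical, introduced only for this illustration. The sketch assumes the rescaling rule `t * clip_norm / max(norm(t), clip_norm)` over the whole tensor, which matches the documented behavior when no `axes` argument is passed.

```python
import tensorflow as tf

def manual_clip_by_norm(t, clip_norm):
    """Hypothetical helper: rescale t so that its L2 norm is at most clip_norm."""
    norm = tf.norm(t)
    # The scale factor is 1 when norm <= clip_norm, otherwise clip_norm / norm.
    return t * clip_norm / tf.maximum(norm, clip_norm)

tensor = tf.constant([[3.0, 2.0], [3.0, 2.0]])  # L2 norm is sqrt(26), about 5.099
manual = manual_clip_by_norm(tensor, 3.0)
builtin = tf.clip_by_norm(tensor, 3.0)

# Cosine similarity between the original and the clipped tensor;
# a value of 1 means the direction did not change.
cosine = tf.reduce_sum(tensor * builtin) / (tf.norm(tensor) * tf.norm(builtin))

print("manual :", manual.numpy())
print("builtin:", builtin.numpy())
print("cosine :", cosine.numpy())

# Applying the same idea to a gradient (toy loss, chosen only for illustration).
w = tf.Variable([[3.0, 2.0], [3.0, 2.0]])
with tf.GradientTape() as tape:
    loss = tf.reduce_sum(tf.square(w))  # d(loss)/dw = 2 * w
grad = tape.gradient(loss, w)
clipped_grad = tf.clip_by_norm(grad, 3.0)

print("grad norm before:", tf.norm(grad).numpy())
print("grad norm after :", tf.norm(clipped_grad).numpy())
```

Because every element is multiplied by the same scalar, the ratio between elements is preserved. This is the difference from clip_by_value, which clamps each element independently and can therefore change the direction.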

Output: