强化学习原理python篇06(拓展)——DQN拓展

- n-steps

- 代码

- 结果

- Double-DQN

- 代码

- 结果

- Dueling-DQN

- 代码

- 结果

- Ref

拓展篇参考赵世钰老师的教材和Maxim Lapan 深度学习强化学习实践(第二版),请各位结合阅读,本合集只专注于数学概念的代码实现。

n-steps

假设在训练开始时,顺序地完成前面的更新,前两个更新是没有用的,因为当前Q(s2, a)和Q(s2, a)是不对的,并且只包含初始的随机值。唯一有用的更新是第3个更新,它将奖励r3正确地赋给终结状态前的状态s3。

现在来完成一次又一次的更新。在第2次迭代,正确的值被赋给了Q(s2, a),但是Q(s1, a)的更新还是不对的。只有在第3次迭代时才能给所有的Q赋上正确的值。所以,即使在1步的情况下,它也需要3步才能将正确的值传播给所有的状态。

为此,修改第四步

4)将转移过程(s, a, r, s’)存储在回放缓冲区中 r 用 n 步合计展示。

代码

修改ReplayBuffer和DQN中的calculate_y_hat_and_y实现

class ReplayBuffer:

def __init__(self, episode_size, replay_time):

# 存取 queue episode

self.queue = []

self.queue_size = episode_size

self.replay_time = replay_time

def get_batch_queue(self, env, action_trigger, batch_size, epsilon):

def insert_sample_to_queue(env):

state, info = env.reset()

stop = 0

episode = []

while True:

if np.random.uniform(0, 1, 1) > epsilon:

action = env.action_space.sample()

else:

action = action_trigger(state)

next_state, reward, terminated, truncated, info = env.step(action)

episode.append([state, action, next_state, reward, terminated])

state = next_state

if terminated:

state, info = env.reset()

self.queue.append(episode)

episode = []

stop += 1

continue

if stop >= replay_time:

self.queue.append(episode)

episode = []

break

def init_queue(env):

while True:

insert_sample_to_queue(env)

if len(self.queue) >= self.queue_size:

break

init_queue(env)

insert_sample_to_queue(env)

self.queue = self.queue[-self.queue_size :]

return random.sample(self.queue, batch_size)

class DQN:

def __init__(self, env, obs_size, hidden_size, q_table_size):

self.env = env

self.net = Net(obs_size, hidden_size, q_table_size)

self.tgt_net = Net(obs_size, hidden_size, q_table_size)

# 更新net参数

def update_net_parameters(self, update=True):

self.net.load_state_dict(self.tgt_net.state_dict())

def get_action_trigger(self, state):

state = torch.Tensor(state)

action = int(torch.argmax(self.tgt_net(state).detach()))

return action

# 计算y_hat_and_y

def calculate_y_hat_and_y(self, batch, gamma):

# n_step

state_space = []

action_spcae = []

y = []

for episode in batch:

random_n = int(np.random.uniform(0, len(episode), 1))

episode = episode[-random_n:]

state, action, next_state, reward, terminated = episode[-1]

q_table_net = dqn.net(torch.Tensor(next_state)).detach()

reward = reward + (1 - terminated) * gamma * float(torch.max(q_table_net))

episode[-1] = state, action, next_state, reward, terminated

reward_space = [_[3] for _ in episode]

r_n_steps = discount_reward(reward_space, gamma)

y.append(r_n_steps)

state, action, next_state, reward, terminated = episode[0]

state_space.append(state)

action_spcae.append(action)

y_hat = self.tgt_net(torch.Tensor(np.array(state_space)))

y_hat = y_hat.gather(1, torch.LongTensor(action_spcae).reshape(-1, 1))

return y_hat.reshape(-1), torch.tensor(y)

def predict_reward(self):

state, info = env.reset()

step = 0

reward_space = []

while True:

step += 1

state = torch.Tensor(state)

action = int(torch.argmax(self.net(state).detach()))

next_state, reward, terminated, truncated, info = env.step(action)

reward_space.append(reward)

state = next_state

if terminated:

state, info = env.reset()

continue

if step >= 100:

break

return float(np.mean(reward_space))

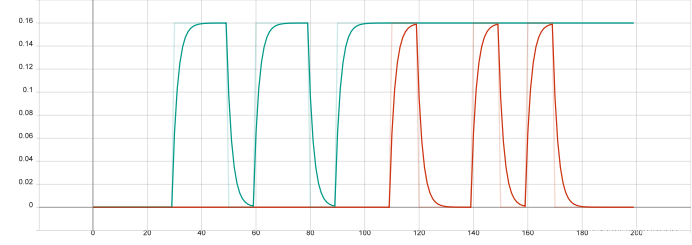

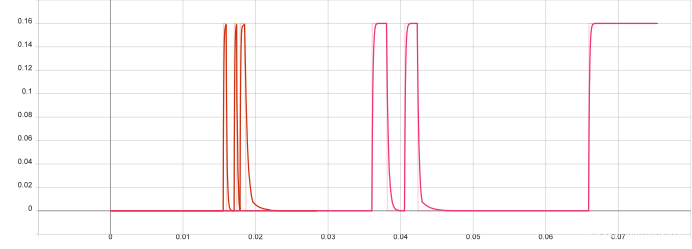

结果

以相同的参数进行训练

绿色的线为n-steps DQN,发现比普通DQN收敛速度显著提高。

Double-DQN

由于普通DQN是一种boostrap方法来更新自己的值,在

6)对于回放缓冲区中的每个转移过程,如果片段在此步结束,则计算目标 y = r y=r y=r,否则计算 y = r + γ m a x Q ^ ( s , a , w ) y=r+\gamma max \hat Q(s, a, w) y=r+γmaxQ^(s,a,w)

过程中max步骤,又扩大了该高估的误差影响,为了解决该问题,Deep Reinforcement Learning with Double Q-Learning论文的作者建议使用训练网络来选择动作,但是使用目标网络的Q值。所以新的目标Q值为

Q ( s t , a t ) = r t + γ Q ′ ( s t + 1 , arg max a Q ( s t + 1 , a ) ) Q(s_t,a_t) = r_t+\gamma Q'(s_{t+1}, \argmax \limits_{a} Q(s_{t+1}, a)) Q(st,at)=rt+γQ′(st+1,aargmaxQ(st+1,a))

代码

修改第六步

class DQN:

def __init__(self, env, obs_size, hidden_size, q_table_size):

self.env = env

self.net = Net(obs_size, hidden_size, q_table_size)

self.tgt_net = Net(obs_size, hidden_size, q_table_size)

# 更新net参数

def update_net_parameters(self, update=True):

self.net.load_state_dict(self.tgt_net.state_dict())

def get_action_trigger(self, state):

state = torch.Tensor(state)

action = int(torch.argmax(self.tgt_net(state).detach()))

return action

# 计算y_hat_and_y

def calculate_y_hat_and_y(self, batch, gamma):

y = []

action_sapce = []

state_sapce = []

##

for state, action, next_state, reward, terminated in batch:

q_table_net = self.net(torch.Tensor(next_state)).detach()

## double DQN

tgt_net_action = self.get_action_trigger(next_state)

y.append(reward + (1 - terminated) * gamma * float(q_table_net[tgt_net_action]))

action_sapce.append(action)

state_sapce.append(state)

y_hat = self.tgt_net(torch.Tensor(np.array(state_sapce)))

y_hat = y_hat.gather(1, torch.LongTensor(action_sapce).reshape(-1, 1))

return y_hat.reshape(-1), torch.tensor(y)

def predict_reward(self):

state, info = env.reset()

step = 0

reward_space = []

while True:

step += 1

state = torch.Tensor(state)

action = int(torch.argmax(self.net(state).detach()))

next_state, reward, terminated, truncated, info = env.step(action)

reward_space.append(reward)

state = next_state

if terminated:

state, info = env.reset()

continue

if step >= 100:

break

return float(np.mean(reward_space))

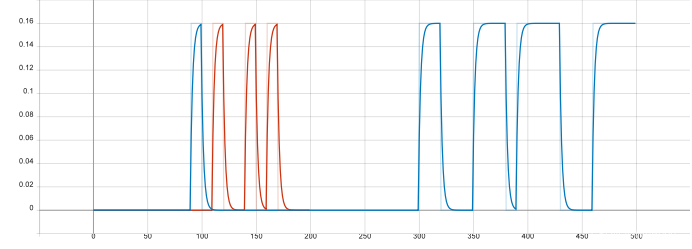

结果

Dueling-DQN

这个对DQN的改进是在2015年的“Dueling Network Architectures for Deep Reinforcement Learning”论文中提出的。

该论文的核心发现是,神经网络所试图逼近的Q值Q(s, a)可以被分成两个量:状态的价值V(s),以及这个状态下的动作优势A(s, a)。

在同一个状态下,所有动作的优势值之和为,因为所有动作的动作价值的期望就是这个状态的状态价值。

这种约束可以通过几种方法来实施,例如,通过损失函数。但是在论文中,作者提出一个非常巧妙的解决方案,就是从神经网络的Q表达式中减去优势值的平均值,它有效地将优势值的平均值趋于0。

Q ( s , a ) = V ( a ) + A ( s , a ) − 1 n ∑ a ′ A ( s , a ′ ) Q(s,a) = V(a)+A(s,a)-\frac{1}{n}\sum _{a'}A(s,a') Q(s,a)=V(a)+A(s,a)−n1a′∑A(s,a′)

这使得对基础DQN的改动变得很

简单:为了将其转换成Dueling DQN,只需要改变神经网络的结构,而

不需要影响其他部分的实现。

代码

class DuelingNet(nn.Module):

def __init__(self, obs_size, hidden_size, q_table_size):

super().__init__()

# 动作优势A(s, a)

self.a_net = nn.Sequential(

nn.Linear(obs_size, hidden_size),

nn.ReLU(),

nn.Linear(hidden_size, q_table_size),

)

# 价值V(s)

self.v_net = nn.Sequential(

nn.Linear(obs_size, hidden_size),

nn.ReLU(),

nn.Linear(hidden_size, 1),

)

def forward(self, state):

if len(torch.Tensor(state).size())==1:

state = state.reshape(1,-1)

v = self.v_net(state)

a = self.a_net(state)

mean_a = a.mean(dim=1,keepdim=True)

# torch.mean(a, axis=1).reshape(-1, 1)

return v + a - mean_a

结果

Ref

[1] Mathematical Foundations of Reinforcement Learning,Shiyu Zhao

[2] 深度学习强化学习实践(第二版),Maxim Lapan