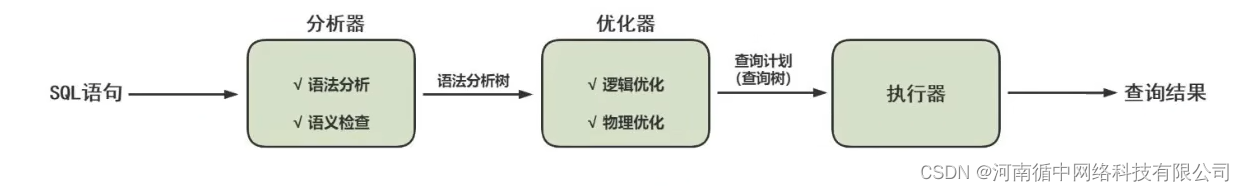

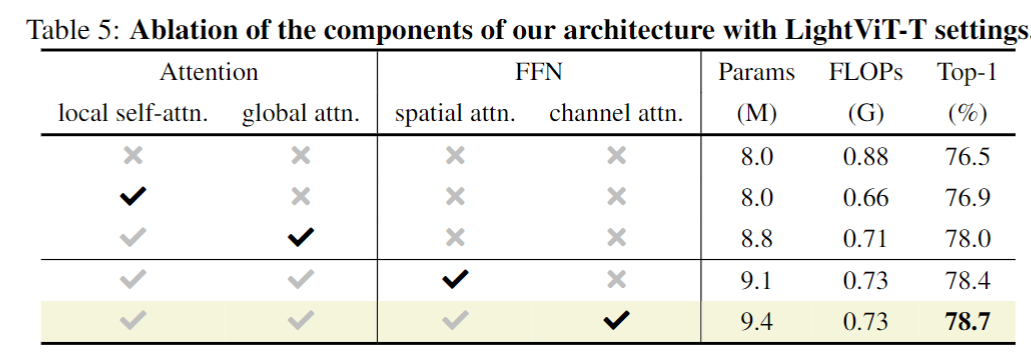

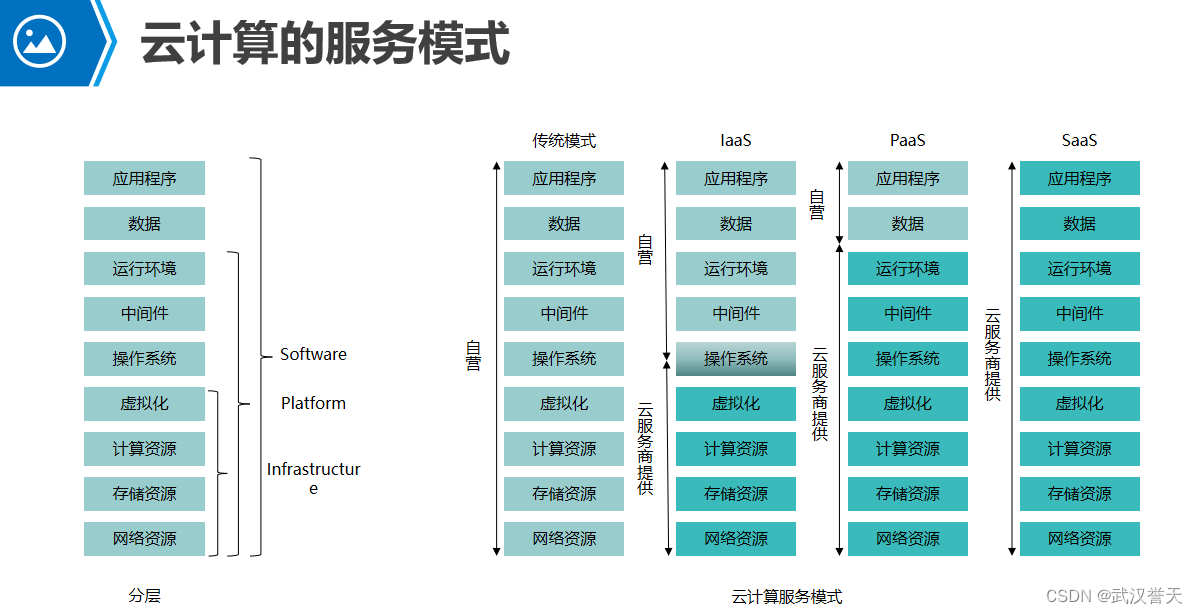

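Some basic concepts:

Socket(s): the physical CPU sockets on the motherboard.

Core(s): each physical CPU contains multiple cores, reported as Core(s) per socket (the host examined below has 12).

Thread(s): each core can run multiple hardware threads in parallel, reported as Thread(s) per core. A thread is an independent execution context. Threads on the same core compete for that core's shared resources, such as execution units and caches.

NUMA node(s): a socket can be divided into multiple NUMA nodes. The host examined below has 2 sockets and 2 NUMA nodes.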
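The following Python sketch makes the hierarchy concrete by deriving the three counts from per-CPU topology records. It assumes each logical CPU is described by a `(cpu, package_id, core_id)` tuple. On Linux these values can be read from `/sys/devices/system/cpu/cpuN/topology/physical_package_id` and `core_id`. The function names and the sample records are hypothetical, and the sketch assumes a symmetric topology.

```python
import glob
import os
from collections import defaultdict


def read_sysfs_records(root="/sys/devices/system/cpu"):
    """Collect (cpu, package_id, core_id) for every CPU that exposes topology on Linux."""
    records = []
    for path in glob.glob(os.path.join(root, "cpu[0-9]*")):
        topo = os.path.join(path, "topology")
        if not os.path.isdir(topo):  # offline CPUs may not expose topology
            continue
        cpu = int(os.path.basename(path)[3:])
        with open(os.path.join(topo, "physical_package_id")) as f:
            package_id = int(f.read())
        with open(os.path.join(topo, "core_id")) as f:
            core_id = int(f.read())
        records.append((cpu, package_id, core_id))
    return records


def summarize_topology(records):
    """Return (sockets, cores_per_socket, threads_per_core).

    core_id is only unique within a socket, so cores are keyed by
    (package_id, core_id). Assumes every socket and core look alike.
    """
    threads_by_core = defaultdict(list)  # (package_id, core_id) -> logical CPUs
    for cpu, package_id, core_id in records:
        threads_by_core[(package_id, core_id)].append(cpu)

    sockets = {package_id for package_id, _ in threads_by_core}
    cores_per_socket = len(threads_by_core) // len(sockets)
    threads_per_core = max(len(cpus) for cpus in threads_by_core.values())
    return len(sockets), cores_per_socket, threads_per_core


# Hypothetical host: 2 sockets x 2 cores x 2 threads = 8 logical CPUs.
# This sample assumes that CPU n and n+4 share a core.
sample = [
    (0, 0, 0), (1, 0, 1), (2, 1, 0), (3, 1, 1),
    (4, 0, 0), (5, 0, 1), (6, 1, 0), (7, 1, 1),
]
print(summarize_topology(sample))  # (2, 2, 2)
```

On a Linux host, `summarize_topology(read_sysfs_records())` should agree with the Socket(s), Core(s) per socket and Thread(s) per core fields that `lscpu` prints.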

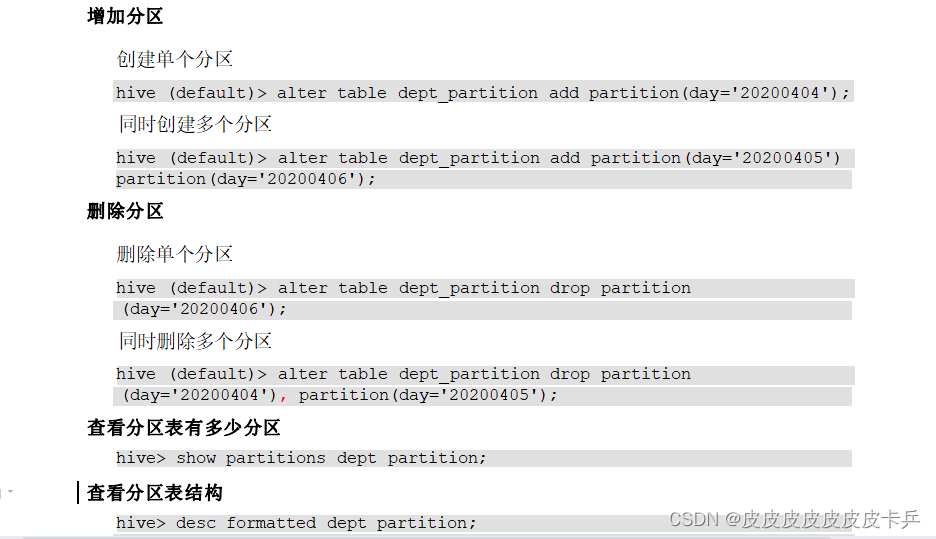

Illustration:

An example of a two-node NUMA system and the way the CPU cores and memory pages are made available.

NOTE

Remote memory available via the interconnect is accessed only if VM1 from NUMA node 0 has a CPU core in NUMA node 1. In this case, the memory of NUMA node 1 acts as local memory for the third CPU core of VM1 (for example, if VM1 is allocated CPU 4 in the diagram above). At the same time, it acts as remote memory for the other CPU cores of the same VM.
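The rule in the note can be expressed as a small lookup. A memory access is local when the memory's NUMA node matches the node of the host CPU making the access. This sketch reuses the node layout of the host examined later in this article (node 0: CPUs 0-11,24-35; node 1: CPUs 12-23,36-47). The VM's pinned CPU set and the function name `classify_access` are hypothetical.

```python
# Node -> host CPUs, taken from the lscpu output of the example host.
NODE_CPUS = {
    0: set(range(0, 12)) | set(range(24, 36)),
    1: set(range(12, 24)) | set(range(36, 48)),
}
CPU_TO_NODE = {cpu: node for node, cpus in NODE_CPUS.items() for cpu in cpus}


def classify_access(vm_cpus, memory_node):
    """Label each host CPU of a VM as 'local' or 'remote' for memory on memory_node."""
    return {
        cpu: "local" if CPU_TO_NODE[cpu] == memory_node else "remote"
        for cpu in vm_cpus
    }


# Hypothetical VM1 pinned to two CPUs on node 0 and one CPU on node 1.
vm1 = [2, 3, 14]
print(classify_access(vm1, memory_node=1))
# {2: 'remote', 3: 'remote', 14: 'local'}
```

This shows the effect the note describes. The same node-1 memory is local for one vCPU and remote for the others.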

WARNING

At present, it is impossible to migrate an instance which has been configured to use CPU pinning.


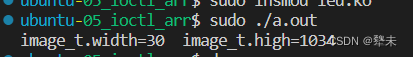

Checking the NUMA layout of the host:

```
XXX:~ # lscpu
Architecture: x86_64
CPU op-mode(s): 32-bit, 64-bit
Byte Order: Little Endian
CPU(s): 48
On-line CPU(s) list: 0-47
Thread(s) per core: 2
Core(s) per socket: 12
Socket(s): 2
NUMA node(s): 2
Vendor ID: GenuineIntel
CPU family: 6
Model: 63
Stepping: 2
CPU MHz: 2200.200
BogoMIPS: 4399.95
Virtualization: VT-x
L1d cache: 32K
L1i cache: 32K
L2 cache: 256K
L3 cache: 30720K
NUMA node0 CPU(s): 0-11,24-35
NUMA node1 CPU(s): 12-23,36-47
```
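The `NUMA nodeN CPU(s)` lines in the `lscpu` output above use a comma-separated list of CPU numbers and ranges. The sketch below expands that simple range form. It then checks that the two nodes are disjoint and together cover all 48 CPUs, which is Socket(s) × Core(s) per socket × Thread(s) per core = 2 × 12 × 2. The helper name `parse_cpu_list` is hypothetical.

```python
def parse_cpu_list(text):
    """Expand a CPU list such as '0-11,24-35' into a sorted list of CPU numbers."""
    cpus = set()
    for part in text.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            start, end = part.split("-")
            cpus.update(range(int(start), int(end) + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)


node0 = parse_cpu_list("0-11,24-35")
node1 = parse_cpu_list("12-23,36-47")

assert not set(node0) & set(node1)            # no CPU belongs to both nodes
print(len(node0), len(node1))                 # 24 24
print(len(node0) + len(node1) == 2 * 12 * 2)  # True
```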

XXX:~ # numactl -H

available: 2 nodes (0-1)

node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 24 25 26 27 28 29 30 31 32 33 34 35

node 0 size: 97901 MB

node 0 free: 85588 MB

node 1 cpus: 12 13 14 15 16 17 18 19 20 21 22 23 36 37 38 39 40 41 42 43 44 45 46 47

node 1 size: 98304 MB

node 1 free: 95340 MB

node distances:

node 0 1

0: 10 21

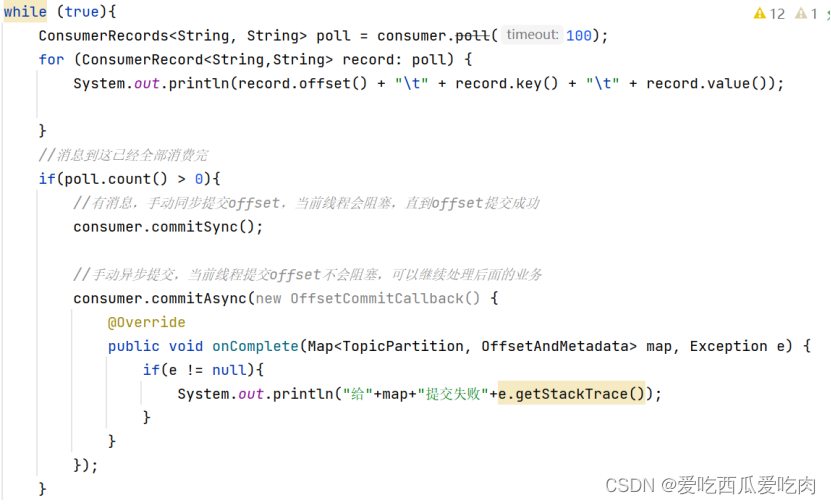

1: 21 10The NUMA topology and CPU pinning features in OpenStack provide high-level control over how instances run on hypervisor CPUs and the topology of virtual CPUs available to instances. These features help minimize latency and maximize performance.

SMP, NUMA, and SMT

Symmetric multiprocessing (SMP)

SMP is a design found in many modern multi-core systems. In an SMP system, two or more CPUs are connected by an interconnect. This gives every CPU equal access to system resources such as memory and input/output ports.

Non-uniform memory access (NUMA)

NUMA is a derivative of the SMP design that is found in many multi-socket systems. In a NUMA system, system memory is divided into cells or nodes that are associated with particular CPUs. Requests for memory on other nodes are possible through an interconnect bus. However, bandwidth across this shared bus is limited. As a result, competition for this resource can incur performance penalties.
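The `node distances` table in the `numactl -H` output above quantifies this penalty. The values are relative, and by convention a node's distance to itself is 10. A distance of 21 therefore means that remote access is reported as roughly 2.1 times the cost of local access. This is a firmware-provided estimate, not a measured latency. The sketch below parses the table as printed above. The function name `parse_distances` is hypothetical.

```python
def parse_distances(lines):
    """Parse numactl's 'node distances' rows into {from_node: {to_node: distance}}."""
    header, *rows = lines
    nodes = [int(n) for n in header.split()[1:]]  # skip the leading 'node' label
    table = {}
    for row in rows:
        label, *values = row.split()
        src = int(label.rstrip(":"))
        table[src] = {dst: int(v) for dst, v in zip(nodes, values)}
    return table


# Rows copied from the numactl -H output of the example host.
dist = parse_distances(["node 0 1", "0: 10 21", "1: 21 10"])
for src, row in dist.items():
    for dst, d in row.items():
        ratio = d / dist[src][src]  # relative to local access from the same node
        print(f"node {src} -> node {dst}: distance {d}, {ratio:.1f}x local")
```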

Simultaneous Multi-Threading (SMT)

SMT is a design complementary to SMP. Whereas CPUs in SMP systems share a bus and some memory, CPUs in SMT systems share many more components. CPUs that share components are known as thread siblings. All CPUs appear as usable CPUs on the system and can execute workloads in parallel. However, as with NUMA, threads compete for shared resources.
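On Linux, each CPU's thread siblings are listed in `/sys/devices/system/cpu/cpuN/topology/thread_siblings_list`, using the same CPU-list format as `lscpu`. The sketch below collapses such per-CPU strings into the distinct sibling groups, which is the information needed to avoid placing two busy workloads on the same physical core. The sample data and the function names are hypothetical.

```python
def expand(cpu_list):
    """Expand a CPU list such as '0,2' or '0-3' into a frozenset of CPU numbers."""
    cpus = set()
    for part in cpu_list.split(","):
        if "-" in part:
            lo, hi = map(int, part.split("-"))
            cpus.update(range(lo, hi + 1))
        else:
            cpus.add(int(part))
    return frozenset(cpus)


def sibling_groups(siblings_by_cpu):
    """Collapse {cpu: thread_siblings_list text} into the distinct sibling sets."""
    return {expand(text.strip()) for text in siblings_by_cpu.values()}


# Hypothetical thread_siblings_list contents for a 2-core, 2-thread host.
sample = {0: "0,2", 1: "1,3", 2: "0,2", 3: "1,3"}
groups = sibling_groups(sample)
print(sorted(sorted(g) for g in groups))  # [[0, 2], [1, 3]]
```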

In OpenStack, SMP CPUs are known as cores, NUMA cells or nodes are known as sockets, and SMT CPUs are known as threads. For example, a quad-socket, eight-core system with Hyper-Threading would have four sockets, eight cores per socket, and two threads per core, for a total of 64 CPUs.
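The CPU count in this example is the product of the three levels. The same formula reproduces the 48 CPUs that `lscpu` reports for the example host (2 sockets, 12 cores per socket, 2 threads per core). The function names are illustrative only.

```python
def total_cpus(sockets, cores_per_socket, threads_per_core):
    """Logical CPUs visible to the OS: sockets x cores per socket x threads per core."""
    return sockets * cores_per_socket * threads_per_core


def physical_cores(sockets, cores_per_socket):
    """Physical cores, ignoring SMT threads."""
    return sockets * cores_per_socket


# Quad-socket, eight-core system with Hyper-Threading.
print(total_cpus(4, 8, 2), physical_cores(4, 8))    # 64 32
# The example host from the lscpu output.
print(total_cpus(2, 12, 2), physical_cores(2, 12))  # 48 24
```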

How to customize the NUMA configuration in OpenStack

TODO:

References:

VirtDriverGuestCPUMemoryPlacement - OpenStack

OpenStack Docs: CPU topologies

Chapter 7. Configure CPU Pinning with NUMA

9.3. libvirt NUMA Tuning

https://zh.wikipedia.org/wiki/%

https://zhuanlan.zhihu.com/p/30585038