1. Camera

1.1 Previewing with SurfaceView

1.1.1 Layout

<?xml version="1.0" encoding="utf-8"?>

<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent"

android:orientation="vertical"

tools:context=".CameraActivity">

<SurfaceView

android:id="@+id/preview"

android:layout_width="match_parent"

android:layout_height="0dp"

android:layout_weight="1"/>

</LinearLayout>

1.1.2 Implementing the preview

mBinding.preview.getHolder().addCallback(new SurfaceHolder.Callback2() {

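@Override

public void surfaceRedrawNeeded(@NonNull SurfaceHolder holder) {

}

@Override

public void surfaceCreated(@NonNull SurfaceHolder holder) {

// Open the camera once, when the Surface is created. surfaceRedrawNeeded() can be called

// repeatedly, and opening a camera that is already open typically throws a RuntimeException

mCamera = Camera.open();

try {

mCamera.setPreviewDisplay(holder);

mCamera.startPreview();

} catch (IOException e) {

throw new RuntimeException(e);

}

}

@Override

public void surfaceChanged(@NonNull SurfaceHolder holder, int format, int width, int height) {

}

@Override

public void surfaceDestroyed(@NonNull SurfaceHolder holder) {

// Release the camera when the Surface goes away so it can be opened again later

if (mCamera != null) {

mCamera.stopPreview();

mCamera.release();

mCamera = null;

}

}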

});

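- Camera.open(): opens the camera (the first back-facing camera on the device)

- setPreviewDisplay: sets the Surface on which the preview is shown

- startPreview: starts the preview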

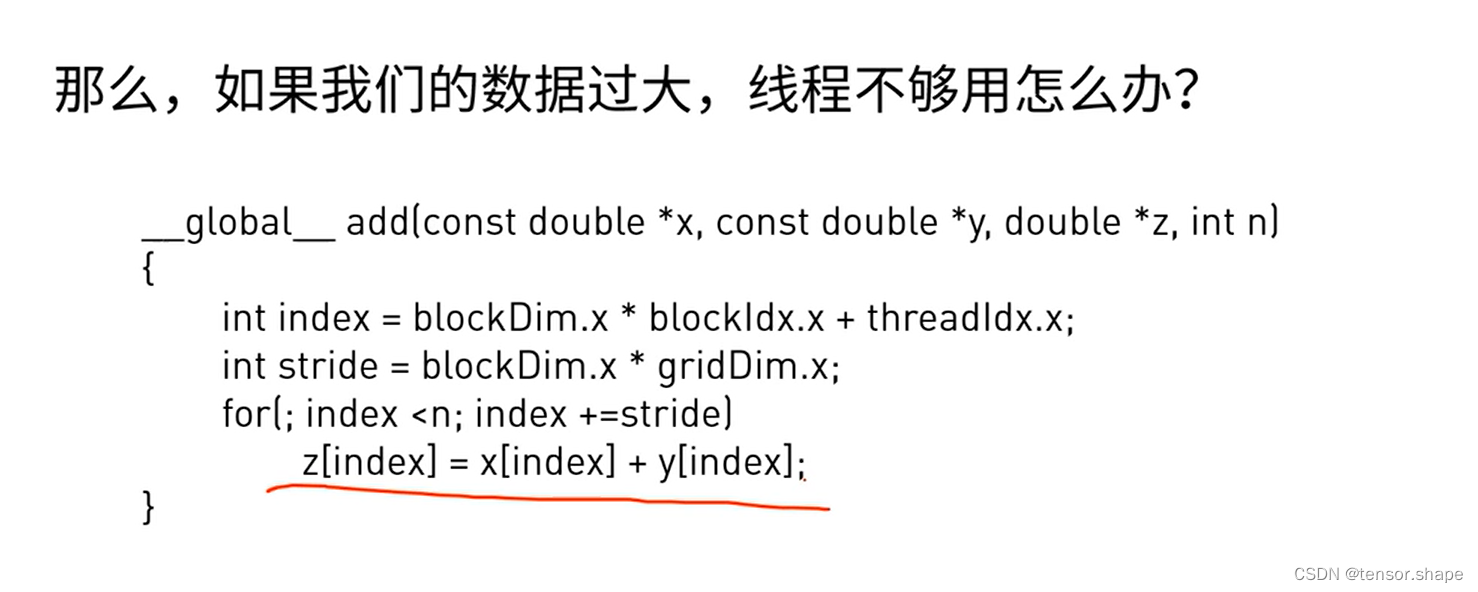

The preview turns out to be sideways (landscape), so setDisplayOrientation is needed to rotate the preview image.
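Hard-coding 90 matches the common case of a back camera whose sensor is mounted at 90 degrees, on a phone held in portrait. More generally, the Camera.setDisplayOrientation documentation derives the value from three inputs: the sensor orientation (Camera.CameraInfo.orientation), the lens facing, and the current display rotation. The sketch below restates that rule as a plain Java function. The class and method names are hypothetical. In an app, the display rotation would first be converted from Surface.ROTATION_* to degrees.

```java
/** Hypothetical helper: the value to pass to Camera.setDisplayOrientation(). */
final class DisplayOrientation {

    private DisplayOrientation() {}

    /**
     * @param sensorOrientation      Camera.CameraInfo.orientation (0, 90, 180 or 270)
     * @param displayRotationDegrees current display rotation in degrees (0, 90, 180 or 270)
     * @param frontFacing            true for a front-facing camera
     */
    static int compute(int sensorOrientation, int displayRotationDegrees, boolean frontFacing) {
        int result;
        if (frontFacing) {
            result = (sensorOrientation + displayRotationDegrees) % 360;
            // The front camera preview is mirrored, so compensate for the mirror
            result = (360 - result) % 360;
        } else {
            result = (sensorOrientation - displayRotationDegrees + 360) % 360;
        }
        return result;
    }
}
```

With sensorOrientation = 90 and the phone in portrait (rotation 0), compute(90, 0, false) returns 90. That is the value passed to setDisplayOrientation in the next listing.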

1.1.3 Getting the camera's raw data

mBinding.preview.getHolder().addCallback(new SurfaceHolder.Callback2() {

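@Override

public void surfaceRedrawNeeded(@NonNull SurfaceHolder holder) {

}

@Override

public void surfaceCreated(@NonNull SurfaceHolder holder) {

// Open the camera once, when the Surface is created (surfaceRedrawNeeded() can run many times)

mCamera = Camera.open();

try {

mCamera.setPreviewDisplay(holder);

// Rotate the on-screen preview to portrait

mCamera.setDisplayOrientation(90);

// Receive every preview frame in PreviewCallBack.onPreviewFrame()

mCamera.setPreviewCallback(new PreviewCallBack());

mCamera.startPreview();

} catch (IOException e) {

throw new RuntimeException(e);

}

}

@Override

public void surfaceChanged(@NonNull SurfaceHolder holder, int format, int width, int height) {

}

@Override

public void surfaceDestroyed(@NonNull SurfaceHolder holder) {

// Stop the frame callbacks and free the camera when the Surface goes away

if (mCamera != null) {

mCamera.setPreviewCallback(null);

mCamera.stopPreview();

mCamera.release();

mCamera = null;

}

}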

});

private class PreviewCallBack implements Camera.PreviewCallback {

@Override

public void onPreviewFrame(byte[] data, Camera camera) {

try {

bos.write(data);

Camera.Size previewSize = camera.getParameters().getPreviewSize();

Log.e(TAG, "onPreviewFrame:" + previewSize.width + "x" + previewSize.height);

Log.e(TAG, "Image format:" + camera.getParameters().getPictureFormat());

} catch (IOException e) {

throw new RuntimeException(e);

}

}

}

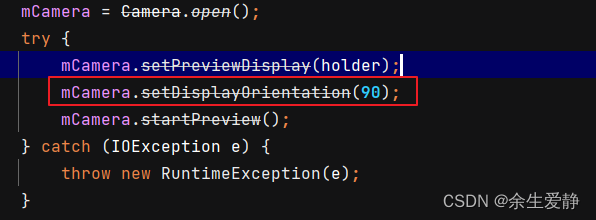

- setPreviewCallback

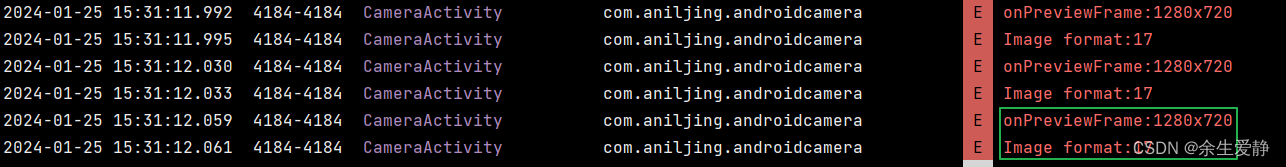

设置预览数据的回调 - 2560*1440

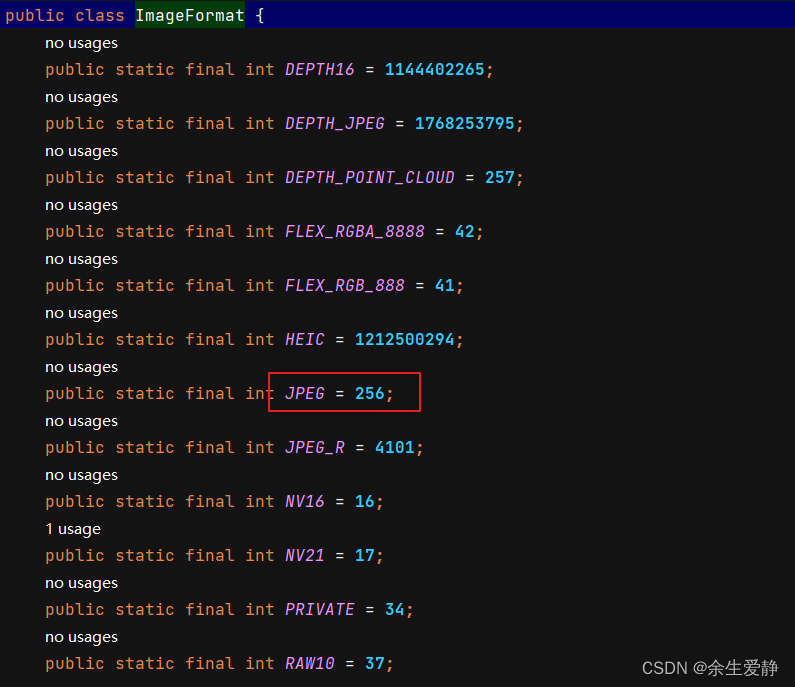

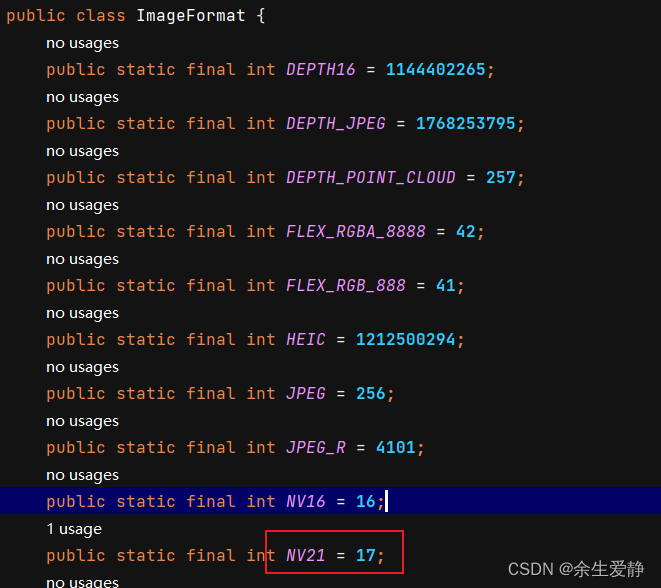

默认返回图像的分辨率 - Image format:256

默认返回图像的数据格式是JPEG的

1.1.4 Adjusting the resolution and format of the camera data

Both the resolution and the format are set through Camera.Parameters:

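// Returns the supported preview size closest to the desired one (smallest sum of width and height differences)

private Camera.Size getBestPreviewSize(Camera.Parameters parameters, int desiredWidth, int desiredHeight) {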

List<Camera.Size> supportedSizes = parameters.getSupportedPreviewSizes();

Camera.Size bestSize = null;

int bestDiff = Integer.MAX_VALUE;

for (Camera.Size size : supportedSizes) {

int diff = Math.abs(size.width - desiredWidth) + Math.abs(size.height - desiredHeight);

if (diff < bestDiff) {

bestSize = size;

bestDiff = diff;

}

}

return bestSize;

}

mCamera = Camera.open();

try {

mCamera.setPreviewDisplay(holder);

Camera.Parameters parameters = mCamera.getParameters();

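// Preview size

int desiredWidth = 1280; // desired width

int desiredHeight = 720; // desired height

Camera.Size bestSize = getBestPreviewSize(parameters, desiredWidth, desiredHeight);

parameters.setPreviewSize(bestSize.width, bestSize.height);

// Only set a focus mode the camera supports; setParameters() throws on unsupported values

if (parameters.getSupportedFocusModes().contains(Camera.Parameters.FOCUS_MODE_AUTO)) {

parameters.setFocusMode(Camera.Parameters.FOCUS_MODE_AUTO);

}

// Set the format of the preview-callback data to NV21

// (setPictureFormat() would only affect still pictures taken with takePicture())

parameters.setPreviewFormat(ImageFormat.NV21);

mCamera.setParameters(parameters);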

mCamera.setPreviewCallback(new PreviewCallBack());

mCamera.setDisplayOrientation(90);

mCamera.startPreview();

} catch (IOException e) {

throw new RuntimeException(e);

}

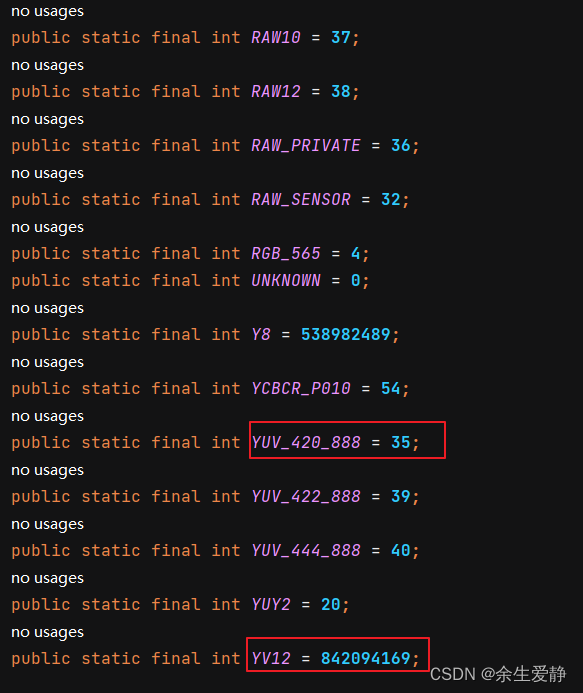

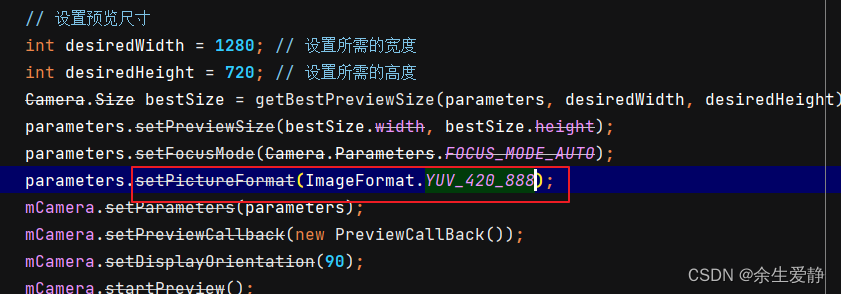

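ImageFormat also defines YUV_420_888 (YUV 4:2:0) and YV12. Can we use those?

With YUV_420_888, the app throws an exception as soon as it runs (tested on a vivo X20 with Android 8.1), so the camera output cannot be set to YUV_420_888. This is not specific to that phone. The old Camera API only accepts the preview formats returned by getSupportedPreviewFormats(): NV21 is always supported, and YV12 has always been supported since API level 12. YUV_420_888 is the flexible YUV format intended for Camera2 and ImageReader.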

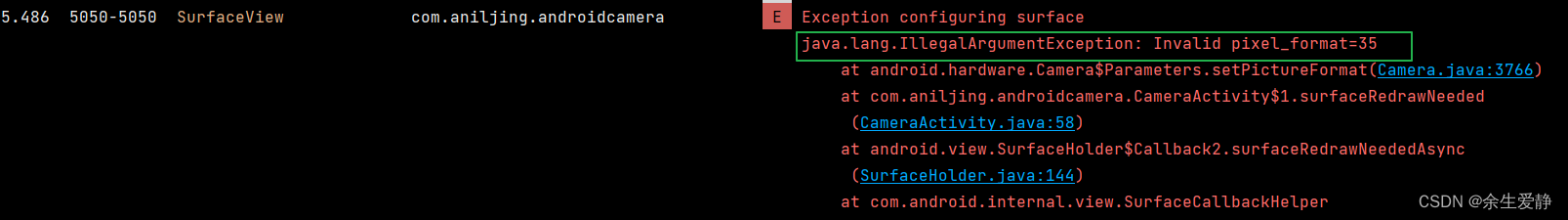

1.1.5 Saving the NV21 data output by the camera

package com.aniljing.androidcamera;

import android.content.Context;

import android.graphics.ImageFormat;

import android.hardware.Camera;

import android.os.Bundle;

import android.os.Environment;

import android.util.Log;

import android.view.SurfaceHolder;

import com.aniljing.androidcamera.databinding.ActivityCameraBinding;

import java.io.BufferedOutputStream;

import java.io.File;

import java.io.FileNotFoundException;

import java.io.FileOutputStream;

import java.io.IOException;

import java.util.List;

import androidx.annotation.NonNull;

import androidx.appcompat.app.AppCompatActivity;

public class CameraActivity extends AppCompatActivity {

private final String TAG = CameraActivity.class.getSimpleName();

private Context mContext;

private ActivityCameraBinding mBinding;

private Camera mCamera;

private File mFile = new File(Environment.getExternalStorageDirectory(), "nv21.yuv");

private BufferedOutputStream bos;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

mContext = this;

mBinding = ActivityCameraBinding.inflate(getLayoutInflater());

setContentView(mBinding.getRoot());

try {

if (mFile.exists()) {

mFile.delete();

}

bos = new BufferedOutputStream(new FileOutputStream(mFile));

} catch (FileNotFoundException e) {

throw new RuntimeException(e);

}

mBinding.preview.getHolder().addCallback(new SurfaceHolder.Callback2() {

@Override

public void surfaceRedrawNeeded(@NonNull SurfaceHolder holder) {

}

@Override

public void surfaceCreated(@NonNull SurfaceHolder holder) {

// Open and configure the camera once per Surface (surfaceRedrawNeeded() can run many times)

mCamera = Camera.open();

try {

mCamera.setPreviewDisplay(holder);

Camera.Parameters parameters = mCamera.getParameters();

// Preview size

int desiredWidth = 1280; // desired width

int desiredHeight = 720; // desired height

Camera.Size bestSize = getBestPreviewSize(parameters, desiredWidth, desiredHeight);

parameters.setPreviewSize(bestSize.width, bestSize.height);

if (parameters.getSupportedFocusModes().contains(Camera.Parameters.FOCUS_MODE_AUTO)) {

parameters.setFocusMode(Camera.Parameters.FOCUS_MODE_AUTO);

}

// Format of the data delivered to onPreviewFrame()

parameters.setPreviewFormat(ImageFormat.NV21);

mCamera.setParameters(parameters);

mCamera.setPreviewCallback(new PreviewCallBack());

mCamera.setDisplayOrientation(90);

mCamera.startPreview();

} catch (IOException e) {

throw new RuntimeException(e);

}

}

@Override

public void surfaceChanged(@NonNull SurfaceHolder holder, int format, int width, int height) {

}

@Override

public void surfaceDestroyed(@NonNull SurfaceHolder holder) {

// Stop the frame callbacks and free the camera when the Surface goes away

releaseCamera();

}

});

}

private class PreviewCallBack implements Camera.PreviewCallback {

@Override

public void onPreviewFrame(byte[] data, Camera camera) {

try {

// Append the raw NV21 frame to the file

bos.write(data);

Camera.Size previewSize = camera.getParameters().getPreviewSize();

Log.e(TAG, "onPreviewFrame:" + previewSize.width + "x" + previewSize.height);

Log.e(TAG, "Image format:" + camera.getParameters().getPreviewFormat());

} catch (IOException e) {

throw new RuntimeException(e);

}

}

}

private void releaseCamera() {

if (mCamera != null) {

mCamera.setPreviewCallback(null);

mCamera.stopPreview();

mCamera.release();

mCamera = null;

}

}

@Override

protected void onDestroy() {

super.onDestroy();

// The camera may already have been released in surfaceDestroyed()

releaseCamera();

try {

bos.flush();

bos.close();

bos = null;

} catch (IOException e) {

throw new RuntimeException(e);

}

}

private Camera.Size getBestPreviewSize(Camera.Parameters parameters, int desiredWidth, int desiredHeight) {

List<Camera.Size> supportedSizes = parameters.getSupportedPreviewSizes();

Camera.Size bestSize = null;

int bestDiff = Integer.MAX_VALUE;

for (Camera.Size size : supportedSizes) {

int diff = Math.abs(size.width - desiredWidth) + Math.abs(size.height - desiredHeight);

if (diff < bestDiff) {

bestSize = size;

bestDiff = diff;

}

}

return bestSize;

}

}

-

保存为yuv格式的文件

-

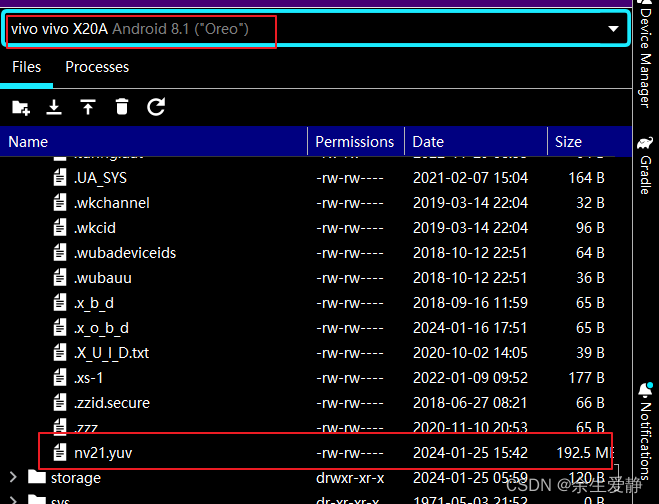

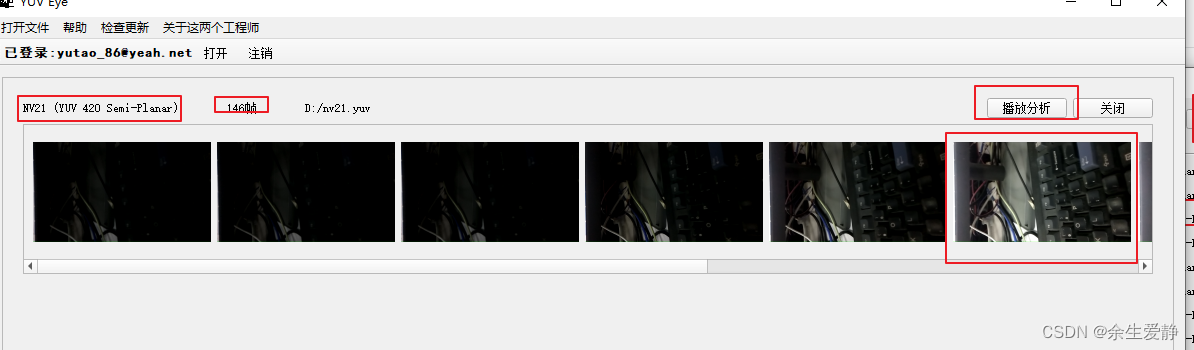

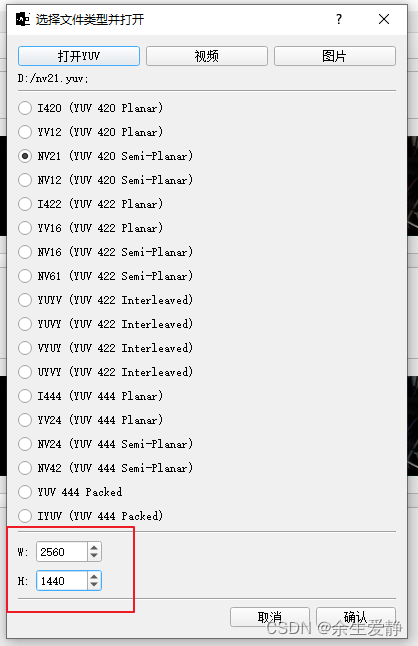

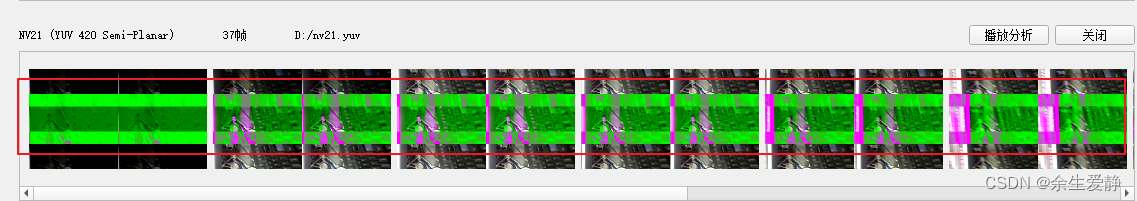

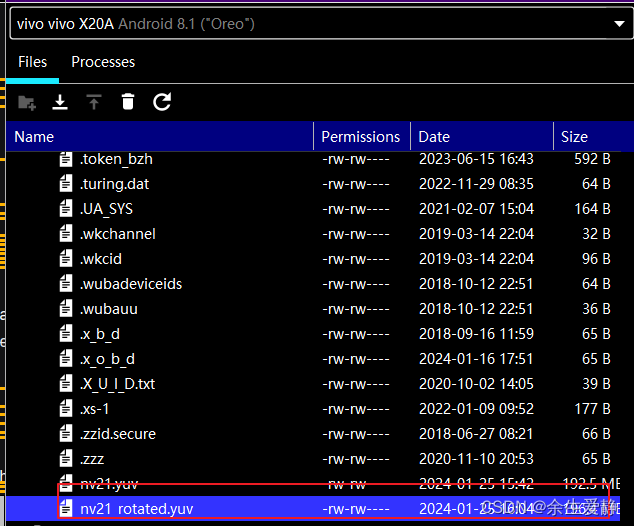

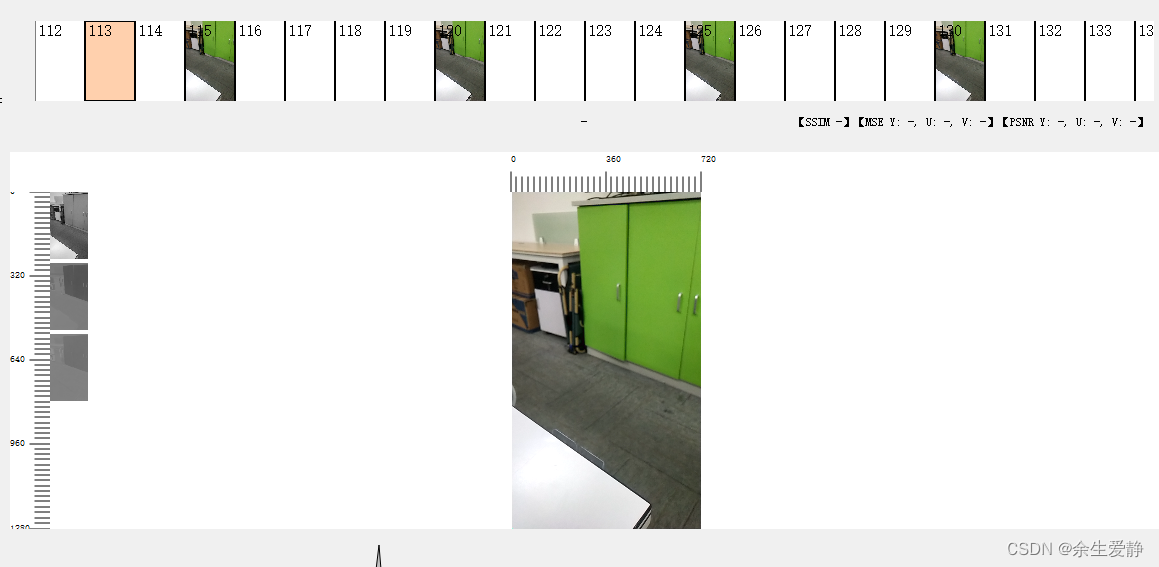

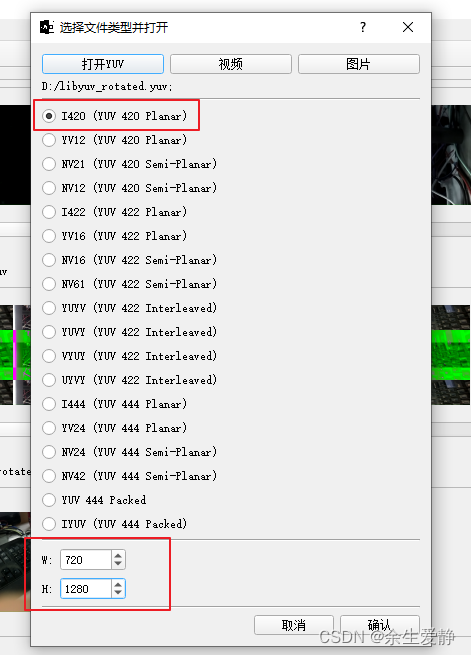

使用YuvEye工具打开nv21.yuv文件

-

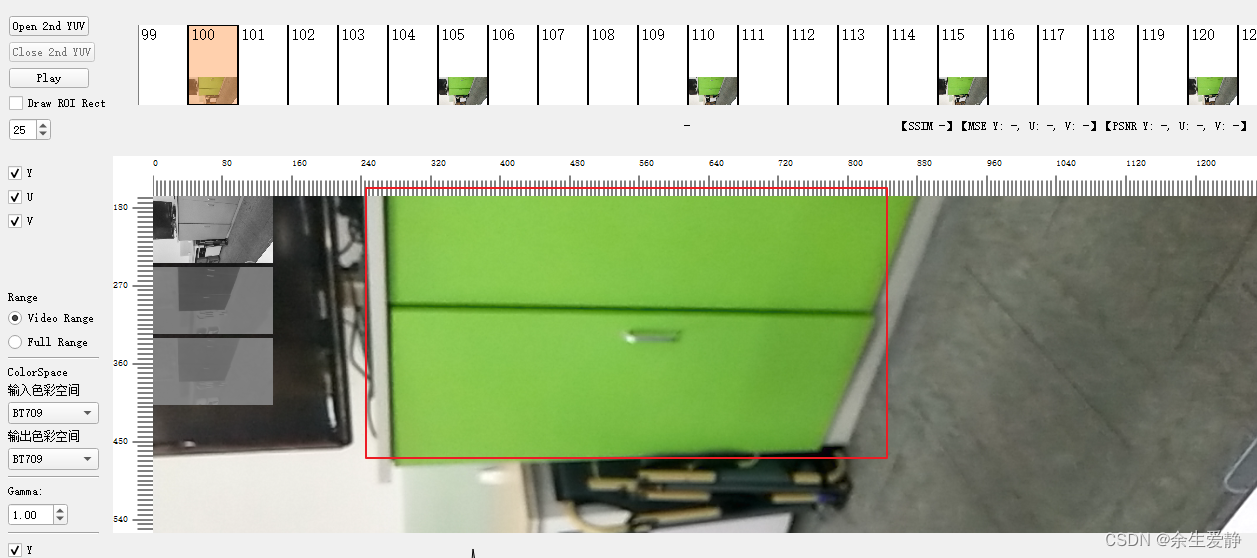

可以看出,虽然预览已经可以竖屏显示视频了,但是我们实际保存的数据却还是横屏的,所以就需要我们手动的调整

-

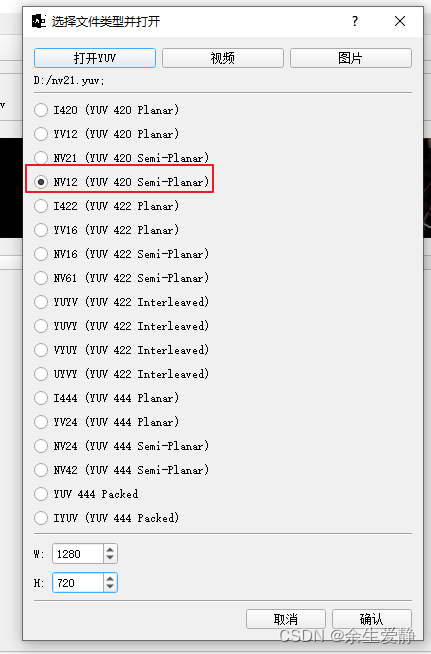

如果我们选择其他格式的,使用工具是否能够正常打开呢?

我们会发现,柜子的颜色和使用正确的格式有很明显的差别的 -

如果我们输入错误的分辨率,又是什么效果呢?
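A .yuv dump is just frames written back to back with no header. YuvEye can therefore only interpret it when given the exact width, height and format. The minimal sketch below shows the arithmetic involved; the class and method names are hypothetical. An NV21 or I420 frame takes width × height × 3 / 2 bytes, because ImageFormat.getBitsPerPixel(ImageFormat.NV21) is 12 bits per pixel. With the right resolution, the file length should be a whole number of frames.

```java
/** Hypothetical helper for the geometry of a raw NV21/I420 dump. */
final class YuvFileMath {

    private YuvFileMath() {}

    /** Bytes in one 4:2:0 frame: a full-size Y plane plus two quarter-size chroma planes. */
    static int frameBytes(int width, int height) {
        return width * height * 3 / 2;
    }

    /** Number of whole frames in a dump of the given length. */
    static long frameCount(long fileLength, int width, int height) {
        return fileLength / frameBytes(width, height);
    }

    /** False when the length is not a multiple of the frame size, i.e. the resolution is likely wrong. */
    static boolean isWholeNumberOfFrames(long fileLength, int width, int height) {
        return fileLength % frameBytes(width, height) == 0;
    }
}
```

For example, a 1280x720 frame is 1,382,400 bytes. A remainder after dividing the file length by the frame size is a strong hint that the assumed resolution is wrong. A wrong guess can still occasionally divide evenly, though; 720x1280 and 1280x720 give the same frame size.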

1.1.6 Rotating the data

In Java:

private void nv21_rotate_to_90(byte[] nv21_data, byte[] nv21_rotated, int width, int height) {

int y_size = width * height;

int buffser_size = y_size * 3 / 2;

// Rotate the Y luma

int i = 0;

int startPos = (height - 1) * width;

for (int x = 0; x < width; x++) {

int offset = startPos;

for (int y = height - 1; y >= 0; y--) {

nv21_rotated[i] = nv21_data[offset + x];

i++;

offset -= width;

}

}

// Rotate the U and V color components

i = buffser_size - 1;

for (int x = width - 1; x > 0; x = x - 2) {

int offset = y_size;

for (int y = 0; y < height / 2; y++) {

nv21_rotated[i] = nv21_data[offset + x];

i--;

nv21_rotated[i] = nv21_data[offset + (x - 1)];

i--;

offset += width;

}

}

}

package com.aniljing.androidcamera;

import android.content.Context;

import android.graphics.ImageFormat;

import android.hardware.Camera;

import android.os.Bundle;

import android.os.Environment;

import android.util.Log;

import android.view.SurfaceHolder;

import com.aniljing.androidcamera.databinding.ActivityCameraBinding;

import java.io.BufferedOutputStream;

import java.io.File;

import java.io.FileNotFoundException;

import java.io.FileOutputStream;

import java.io.IOException;

import java.util.List;

import androidx.annotation.NonNull;

import androidx.appcompat.app.AppCompatActivity;

public class CameraActivity extends AppCompatActivity {

private final String TAG = CameraActivity.class.getSimpleName();

private Context mContext;

private ActivityCameraBinding mBinding;

private Camera mCamera;

private File mFile = new File(Environment.getExternalStorageDirectory(), "nv21_rotated.yuv");

private BufferedOutputStream bos;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

mContext = this;

mBinding = ActivityCameraBinding.inflate(getLayoutInflater());

setContentView(mBinding.getRoot());

try {

if (mFile.exists()) {

mFile.delete();

}

bos = new BufferedOutputStream(new FileOutputStream(mFile));

} catch (FileNotFoundException e) {

throw new RuntimeException(e);

}

mBinding.preview.getHolder().addCallback(new SurfaceHolder.Callback2() {

@Override

public void surfaceRedrawNeeded(@NonNull SurfaceHolder holder) {

}

@Override

public void surfaceCreated(@NonNull SurfaceHolder holder) {

// Open and configure the camera once per Surface (surfaceRedrawNeeded() can run many times)

mCamera = Camera.open();

try {

mCamera.setPreviewDisplay(holder);

Camera.Parameters parameters = mCamera.getParameters();

// Preview size

int desiredWidth = 1280; // desired width

int desiredHeight = 720; // desired height

Camera.Size bestSize = getBestPreviewSize(parameters, desiredWidth, desiredHeight);

parameters.setPreviewSize(bestSize.width, bestSize.height);

if (parameters.getSupportedFocusModes().contains(Camera.Parameters.FOCUS_MODE_AUTO)) {

parameters.setFocusMode(Camera.Parameters.FOCUS_MODE_AUTO);

}

// Format of the data delivered to onPreviewFrame()

parameters.setPreviewFormat(ImageFormat.NV21);

mCamera.setParameters(parameters);

mCamera.setPreviewCallback(new PreviewCallBack());

mCamera.setDisplayOrientation(90);

mCamera.startPreview();

} catch (IOException e) {

throw new RuntimeException(e);

}

}

@Override

public void surfaceChanged(@NonNull SurfaceHolder holder, int format, int width, int height) {

}

@Override

public void surfaceDestroyed(@NonNull SurfaceHolder holder) {

// Stop the frame callbacks and free the camera when the Surface goes away

releaseCamera();

}

});

}

private class PreviewCallBack implements Camera.PreviewCallback {

@Override

public void onPreviewFrame(byte[] data, Camera camera) {

try {

Camera.Size previewSize = camera.getParameters().getPreviewSize();

byte[] nv21_rotated = new byte[data.length];

// Rotate 90° clockwise so the saved frames are portrait, like the preview

nv21_rotate_to_90(data, nv21_rotated, previewSize.width, previewSize.height);

bos.write(nv21_rotated);

Log.e(TAG, "onPreviewFrame:" + previewSize.width + "x" + previewSize.height);

Log.e(TAG, "Image format:" + camera.getParameters().getPreviewFormat());

} catch (IOException e) {

throw new RuntimeException(e);

}

}

}

private void releaseCamera() {

if (mCamera != null) {

mCamera.setPreviewCallback(null);

mCamera.stopPreview();

mCamera.release();

mCamera = null;

}

}

@Override

protected void onDestroy() {

super.onDestroy();

// The camera may already have been released in surfaceDestroyed()

releaseCamera();

try {

bos.flush();

bos.close();

bos = null;

} catch (IOException e) {

throw new RuntimeException(e);

}

}

private Camera.Size getBestPreviewSize(Camera.Parameters parameters, int desiredWidth, int desiredHeight) {

List<Camera.Size> supportedSizes = parameters.getSupportedPreviewSizes();

Camera.Size bestSize = null;

int bestDiff = Integer.MAX_VALUE;

for (Camera.Size size : supportedSizes) {

int diff = Math.abs(size.width - desiredWidth) + Math.abs(size.height - desiredHeight);

if (diff < bestDiff) {

bestSize = size;

bestDiff = diff;

}

}

return bestSize;

}

private void nv21_rotate_to_90(byte[] nv21_data, byte[] nv21_rotated, int width, int height) {

int y_size = width * height;

int buffser_size = y_size * 3 / 2;

// Rotate the Y luma

int i = 0;

int startPos = (height - 1) * width;

for (int x = 0; x < width; x++) {

int offset = startPos;

for (int y = height - 1; y >= 0; y--) {

nv21_rotated[i] = nv21_data[offset + x];

i++;

offset -= width;

}

}

// Rotate the U and V color components

i = buffser_size - 1;

for (int x = width - 1; x > 0; x = x - 2) {

int offset = y_size;

for (int y = 0; y < height / 2; y++) {

nv21_rotated[i] = nv21_data[offset + x];

i--;

nv21_rotated[i] = nv21_data[offset + (x - 1)];

i--;

offset += width;

}

}

}

}

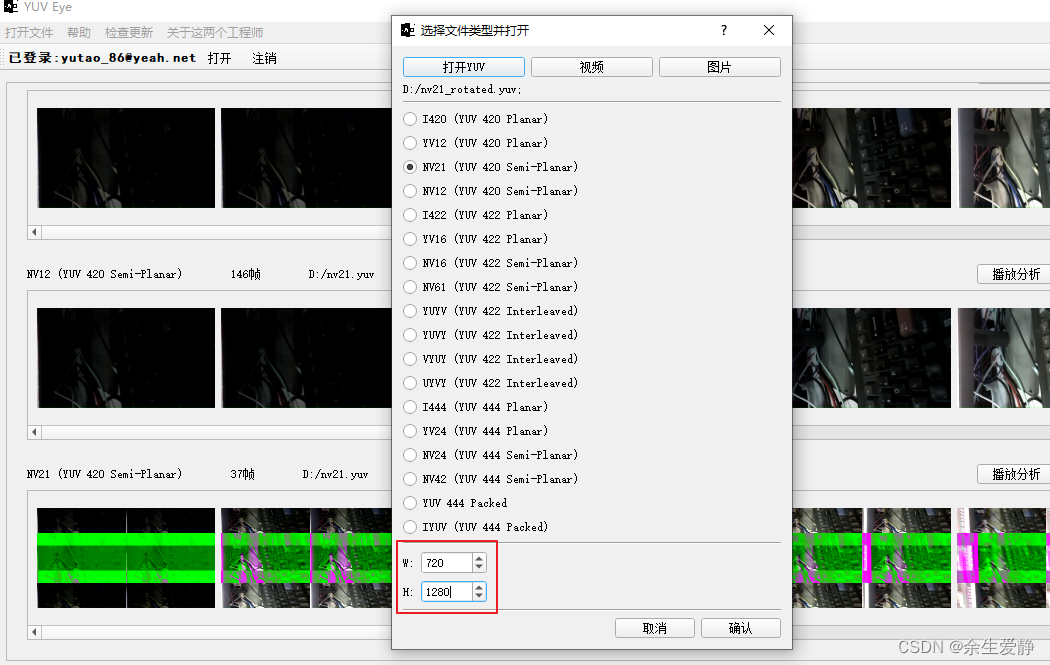

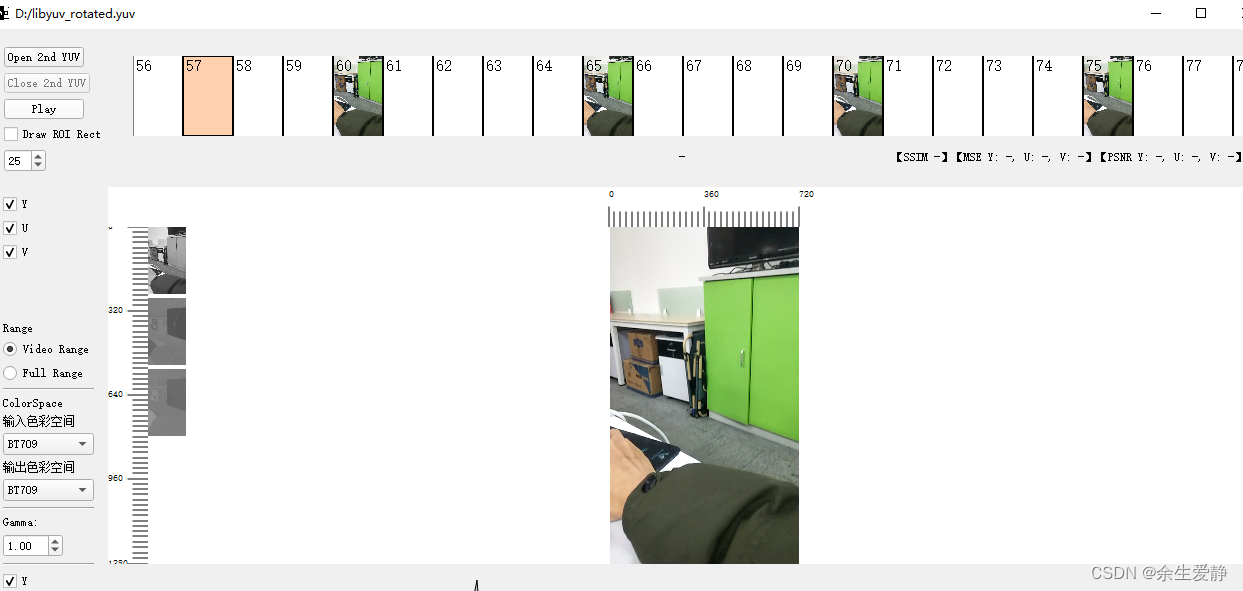

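- Because we rotated the data 90° clockwise, the width and height of the rotated image are swapped (for example, a 1280x720 frame becomes 720x1280). The swapped resolution therefore has to be entered when viewing the file.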
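The rotation logic can be checked without a device. The sketch below restates the article's nv21_rotate_to_90 as plain Java and runs it on a 4x2 frame. The loops are the same; the only change is that the Y index is written as y * width + x instead of a decreasing offset. It is a test sketch with a hypothetical class name, not part of the app.

```java
import java.util.Arrays;

/** Hypothetical test harness: the clockwise NV21 rotation, checked on a tiny frame. */
final class Nv21Rotate {

    private Nv21Rotate() {}

    static void rotate90(byte[] src, byte[] dst, int width, int height) {
        int ySize = width * height;
        int bufferSize = ySize * 3 / 2;
        // Y plane: output row x is input column x, read from the bottom row up
        int i = 0;
        for (int x = 0; x < width; x++) {
            for (int y = height - 1; y >= 0; y--) {
                dst[i++] = src[y * width + x];
            }
        }
        // VU plane: filled from the end backwards, moving whole V/U pairs
        i = bufferSize - 1;
        for (int x = width - 1; x > 0; x -= 2) {
            for (int y = 0; y < height / 2; y++) {
                int offset = ySize + y * width;
                dst[i--] = src[offset + x];     // U
                dst[i--] = src[offset + x - 1]; // V
            }
        }
    }

    public static void main(String[] args) {
        // 4x2 frame: Y = 0..7, then VU pairs V0 U0 V1 U1 = 10 11 12 13
        byte[] src = {0, 1, 2, 3, 4, 5, 6, 7, 10, 11, 12, 13};
        byte[] dst = new byte[src.length];
        rotate90(src, dst, 4, 2);
        // The result is a 2x4 frame
        System.out.println(Arrays.toString(dst)); // [4, 0, 5, 1, 6, 2, 7, 3, 10, 11, 12, 13]
    }
}
```

The Y rows of the 2x4 result are (4, 0), (5, 1), (6, 2) and (7, 3), which is the 4x2 input turned clockwise. The two VU pairs each become a row of their own.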

Using the libyuv library (to build libyuv on Ubuntu, see http://t.csdnimg.cn/W24Pr):

CMakeLists.txt

cmake_minimum_required(VERSION 3.22.1)

project("androidcamera")

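# Header directory

set(INCLUDE_DIR ${CMAKE_SOURCE_DIR}/../jniLibs/include)

# Library directory

set(LIB_DIR ${CMAKE_SOURCE_DIR}/../jniLibs/lib)

# libyuv

set(YUV_LIB yuv)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=gnu++11")

# Let the linker find libyuv for the ABI being built (must come before add_library)

link_directories(${LIB_DIR}/${ANDROID_ABI})

# Add the header directory

include_directories(${INCLUDE_DIR})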

add_library(${CMAKE_PROJECT_NAME} SHARED

native-lib.cpp

YuvUtil.cpp)

target_link_libraries(${CMAKE_PROJECT_NAME}

# Link against libyuv

${YUV_LIB}

android

log)

YuvUtil.h

//

// Created by company on 2024-01-25.

//

#ifndef ANDROIDCAMERA_YUVUTIL_H

#define ANDROIDCAMERA_YUVUTIL_H

#include <jni.h>

#include <libyuv.h>

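/**

* Converts an NV21 frame (Y plane followed by interleaved VU) to I420 (Y, then U, then V)

* @param src_nv21_data source NV21 data, width * height * 3 / 2 bytes

* @param width frame width

* @param height frame height

* @param dst_i420_data destination buffer, same size as the source

*/

void nv21ToI420(jbyte *src_nv21_data,jint width,jint height,jbyte* dst_i420_data);

/**

* Rotates an I420 frame

* @param src_i420_data source I420 data

* @param width source width

* @param height source height

* @param dst_i420_data destination buffer, same size as the source

* @param degree rotation angle: 0, 90, 180 or 270 (the values of libyuv::RotationMode)

*/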

void rotateI420(jbyte*src_i420_data,jint width,jint height,jbyte*dst_i420_data,jint degree);

#endif //ANDROIDCAMERA_YUVUTIL_H

YuvUtil.cpp

//

// Created by company on 2024-01-25.

//

#include "YuvUtil.h"

void nv21ToI420(jbyte *src_nv21_data, jint width, jint height, jbyte *dst_i420_data) {

jint src_y_size = width * height;

jint src_u_size = (width >> 1) * (height >> 1);

jbyte *src_nv21_y_data = src_nv21_data;

jbyte *src_nv21_vu_data = src_nv21_data + src_y_size;

jbyte *dst_i420_y_data = dst_i420_data;

jbyte *dst_i420_u_data = dst_i420_data + src_y_size;

jbyte *dst_i420_v_data = dst_i420_data + src_y_size + src_u_size;

libyuv::NV21ToI420((const uint8_t *) src_nv21_y_data, width,

(const uint8_t *) src_nv21_vu_data, width,

(uint8_t *) dst_i420_y_data, width,

(uint8_t *) dst_i420_u_data, width >> 1,

(uint8_t *) dst_i420_v_data, width >> 1,

width, height);

}

void rotateI420(jbyte *src_i420_data, jint width, jint height, jbyte *dst_i420_data, jint degree) {

jint src_i420_y_size = width * height;

jint src_i420_u_size = (width >> 1) * (height >> 1);

jbyte *src_i420_y_data = src_i420_data;

jbyte *src_i420_u_data = src_i420_data + src_i420_y_size;

jbyte *src_i420_v_data = src_i420_data + src_i420_y_size + src_i420_u_size;

jbyte *dst_i420_y_data = dst_i420_data;

jbyte *dst_i420_u_data = dst_i420_data + src_i420_y_size;

jbyte *dst_i420_v_data = dst_i420_data + src_i420_y_size + src_i420_u_size;

if (degree == libyuv::kRotate90 || degree == libyuv::kRotate270) {

// For 90 and 270 degrees the width and height are swapped after rotation, so the destination strides are based on height

libyuv::I420Rotate((const uint8_t *) src_i420_y_data, width,

(const uint8_t *) src_i420_u_data, width >> 1,

(const uint8_t *) src_i420_v_data, width >> 1,

(uint8_t *) dst_i420_y_data, height,

(uint8_t *) dst_i420_u_data, height >> 1,

(uint8_t *) dst_i420_v_data, height >> 1,

width, height, (libyuv::RotationMode) degree);

} else {

libyuv::I420Rotate((const uint8_t *) src_i420_y_data, width,

(const uint8_t *) src_i420_u_data, width >> 1,

(const uint8_t *) src_i420_v_data, width >> 1,

(uint8_t *) dst_i420_y_data, width,

(uint8_t *) dst_i420_u_data, width >> 1,

(uint8_t *) dst_i420_v_data, width >> 1,

width, height, (libyuv::RotationMode) degree);

}

}

native-lib.cpp

#include <jni.h>

#include <string>

#include "YuvUtil.h"

extern "C"

JNIEXPORT void JNICALL

Java_com_aniljing_androidcamera_YuvUtil_nv21ToI420(JNIEnv *env, jobject thiz,

jbyteArray src_nv21_array, jint width,

jint height, jbyteArray dst_i420_array) {

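// nullptr: we do not need to know whether the VM made a copy

jbyte *src_nv21_data = env->GetByteArrayElements(src_nv21_array, nullptr);

jbyte *dst_i420_data = env->GetByteArrayElements(dst_i420_array, nullptr);

nv21ToI420(src_nv21_data, width, height, dst_i420_data);

// JNI_ABORT: the source was not modified, so there is nothing to copy back

env->ReleaseByteArrayElements(src_nv21_array, src_nv21_data, JNI_ABORT);

// 0: copy the converted data back into the Java array and free the buffer

env->ReleaseByteArrayElements(dst_i420_array, dst_i420_data, 0);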

}

extern "C"

JNIEXPORT void JNICALL

Java_com_aniljing_androidcamera_YuvUtil_i420Rotate(JNIEnv *env, jobject thiz,

jbyteArray src_i420_array, jint width,

jint height, jbyteArray dst_i420_array,

jint degree) {

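// nullptr: we do not need to know whether the VM made a copy

jbyte *src_i420_data = env->GetByteArrayElements(src_i420_array, nullptr);

jbyte *dst_i420_data = env->GetByteArrayElements(dst_i420_array, nullptr);

rotateI420(src_i420_data, width, height, dst_i420_data, degree);

// JNI_ABORT: the source was not modified, so there is nothing to copy back

env->ReleaseByteArrayElements(src_i420_array, src_i420_data, JNI_ABORT);

// 0: copy the rotated data back into the Java array and free the buffer

env->ReleaseByteArrayElements(dst_i420_array, dst_i420_data, 0);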

}

YuvUtil.java

package com.aniljing.androidcamera;

public class YuvUtil {

public native void nv21ToI420(byte[] src_nv21_data, int width, int height, byte[] dst_i420_data);

public native void i420Rotate(byte[] src_i420_data, int width, int height, byte[] dst_i420_data, int degree);

static {

System.loadLibrary("androidcamera");

}

}

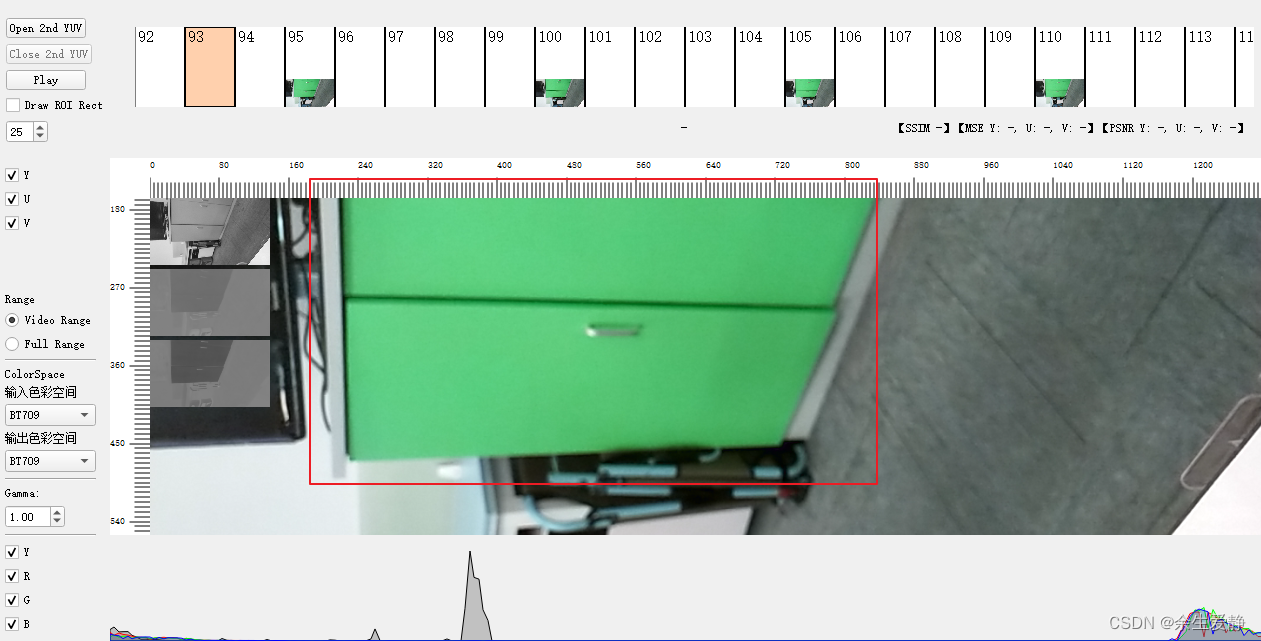

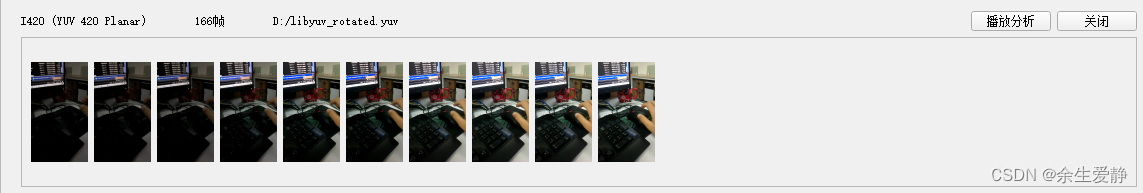

- Select I420 as the format

- Set the resolution to 720*1280
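When checking the native output on small test frames, it helps to know what libyuv::NV21ToI420 should produce. The sketch below assumes tightly packed buffers (stride equal to width) and an even width and height. Under those assumptions the conversion only copies the Y plane and splits the interleaved VU pairs into a U plane followed by a V plane. It is a pure-Java reference with a hypothetical class name, not libyuv's implementation.

```java
import java.util.Arrays;

/** Hypothetical pure-Java reference for NV21 -> I420 with stride == width. */
final class Nv21ToI420Reference {

    private Nv21ToI420Reference() {}

    static byte[] convert(byte[] nv21, int width, int height) {
        int ySize = width * height;
        int chromaSize = (width / 2) * (height / 2);
        byte[] i420 = new byte[ySize + 2 * chromaSize];
        // The Y plane is identical in both layouts
        System.arraycopy(nv21, 0, i420, 0, ySize);
        // NV21 stores interleaved V,U pairs; I420 stores all U samples, then all V samples
        for (int k = 0; k < chromaSize; k++) {
            i420[ySize + k] = nv21[ySize + 2 * k + 1];          // U
            i420[ySize + chromaSize + k] = nv21[ySize + 2 * k]; // V
        }
        return i420;
    }

    public static void main(String[] args) {
        // 4x2 frame: Y = 0..7, then VU pairs V0 U0 V1 U1 = 10 11 12 13
        byte[] nv21 = {0, 1, 2, 3, 4, 5, 6, 7, 10, 11, 12, 13};
        System.out.println(Arrays.toString(convert(nv21, 4, 2))); // [0, 1, 2, 3, 4, 5, 6, 7, 11, 13, 10, 12]
    }
}
```

Feeding the same small frame to YuvUtil.nv21ToI420 on a device and comparing the arrays is a quick way to catch swapped plane offsets or strides in the JNI layer.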

2. Camera2

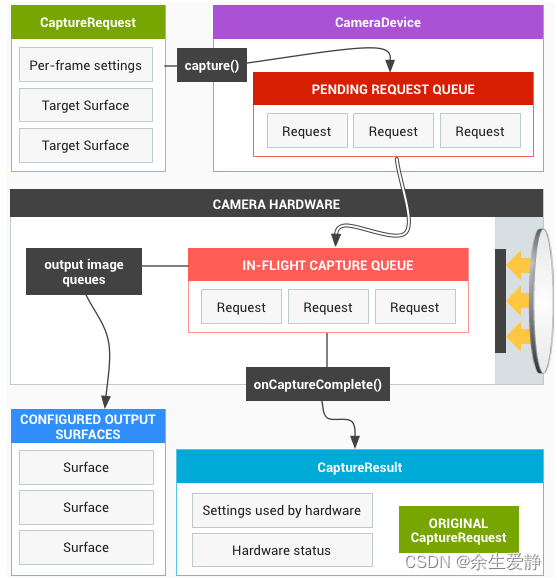

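Camera2 (android.hardware.camera2) was introduced in Android 5.0 Lollipop (API level 21), which also deprecated the old camera framework, Camera1 (android.hardware.Camera).

Compared with Camera1, Camera2 has a different architecture and its API is harder to use. Camera2 models the camera device as a pipeline: it processes the request for each frame in order and returns the results to the client.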

2.1 Core classes

CameraManager

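The camera system service, used to manage and connect to camera devices

CameraDevice

Represents a camera device; the counterpart of the Camera class in Camera1

CameraCharacteristics

Used mainly to query camera information. It carries a large amount of information, including which way the lens faces (LENS_FACING), the AE modes and the AF modes. It plays a role similar to Camera.Parameters in Camera1

CaptureRequest

A request holding the settings for capturing an image, including sensor, lens and flash settings

CaptureRequest.Builder

Builder for CaptureRequest; the builder pattern makes setting parameters more convenient

CameraCaptureSession

The session through which frames are requested from the camera. Creating a session sets up a pipeline: its source is the camera and its other end is one or more targets, such as a preview Surface or an ImageReader. A CameraDevice can have only one active CameraCaptureSession at a time.

ImageReader

Reads raw image data in the required format from the pipeline opened by the camera; several ImageReaders can be used.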

2.2 Workflow

2.2.1 Getting the CameraManager

CameraManager cameraManager = (CameraManager) mContext.getSystemService(Context.CAMERA_SERVICE);

2.2.2 Getting camera information and opening the camera

try {

String[] cameraIds = cameraManager.getCameraIdList();

for (int i = 0; i < cameraIds.length; i++) {

// Properties that describe this camera device

CameraCharacteristics characteristics = cameraManager.getCameraCharacteristics(cameraIds[i]);

Range<Integer>[] allFpsRanges = characteristics.get(CameraCharacteristics.CONTROL_AE_AVAILABLE_TARGET_FPS_RANGES);

// Takes the last entry; this assumes it is the range we want, so check the list on your device

fpsRanges = allFpsRanges[allFpsRanges.length - 1];

// Front or back camera?

Integer facing = characteristics.get(CameraCharacteristics.LENS_FACING);

// Use the back camera

if (facing != null && facing == CameraCharacteristics.LENS_FACING_BACK) {

StreamConfigurationMap map = characteristics.get(CameraCharacteristics.SCALER_STREAM_CONFIGURATION_MAP);

// Check map before using it, otherwise getOutputSizes() throws a NullPointerException

if (map == null) {

continue;

}

mCameraId = cameraIds[i];

// Find the most suitable size (ideally an exact match)

mPreviewSize = getBestSupportedSize(new ArrayList<Size>(Arrays.asList(map.getOutputSizes(SurfaceTexture.class))));

orientation = characteristics.get(CameraCharacteristics.SENSOR_ORIENTATION);

// maxImages is the number of Images that can be acquired from this reader at the same time;

// the on-screen preview goes through the TextureView Surface, not through this reader

mImageReader = ImageReader.newInstance(mPreviewSize.getWidth(), mPreviewSize.getHeight(),

ImageFormat.YUV_420_888, 2);

mImageReader.setOnImageAvailableListener(mOnImageAvailableListener, mCameraHandler);

cameraManager.openCamera(mCameraId, mCameraDeviceStateCallback, mCameraHandler);

// Open only the first back camera found

break;

}

}

} catch (CameraAccessException r) {

Log.e(TAG, "openCamera:" + r);

Log.e(TAG, "openCamera getMessage:" + r.getMessage());

Log.e(TAG, "openCamera getLocalizedMessage:" + r.getLocalizedMessage());

}

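- ImageReader

ImageReader is the main way to get at image data: it can deliver images in different formats, such as JPEG, YUV and RAW. An ImageReader is created with ImageReader.newInstance(int width, int height, int format, int maxImages), which takes four parameters:

- width: width of the images

- height: height of the images

- format: format of the images, for example ImageFormat.JPEG or ImageFormat.YUV_420_888

- maxImages: the maximum number of Images that can be acquired from the ImageReader at the same time, i.e. the size of its Image pool. Each Image holds a buffer, so a large value can exhaust memory (OOM); set it to the smallest value your use case needs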

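Other ImageReader methods and callbacks:

- ImageReader.OnImageAvailableListener: called when a new image is available

- acquireLatestImage(): acquires the latest Image from the ImageReader's queue and drops the older ones; returns null if no new Image is available

- acquireNextImage(): acquires the next Image from the queue; returns null if no new Image is available

- close(): frees all resources associated with this ImageReader

- getSurface(): gets the Surface that produces Images for this ImageReader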
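With YUV_420_888, each Image has three planes: planes[0] is Y, planes[1] is U (Cb) and planes[2] is V (Cr). Each plane reports two strides, so a plane cannot always be copied in one block:

- rowStride: the number of bytes from one row to the next, which may include padding.
- pixelStride: the number of bytes between neighboring samples in a row, which is 2 when U and V are interleaved in memory.

Below is a hedged sketch of a stride-aware copy. It uses only java.nio.ByteBuffer and a hypothetical PlaneCopy class. Its main method simulates an interleaved U plane with row padding instead of reading a real Image.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

/** Hypothetical helper: copies one YUV_420_888 plane into a tightly packed array. */
final class PlaneCopy {

    private PlaneCopy() {}

    /**
     * @param buffer      plane data, as returned by Image.Plane.getBuffer()
     * @param rowStride   Image.Plane.getRowStride(): bytes between the starts of two rows
     * @param pixelStride Image.Plane.getPixelStride(): bytes between two samples in a row
     * @param width       samples per row in this plane (w for Y, w / 2 for U and V)
     * @param height      rows in this plane (h for Y, h / 2 for U and V)
     * @return the offset just past the last byte written to out
     */
    static int copy(ByteBuffer buffer, int rowStride, int pixelStride,
                    int width, int height, byte[] out, int offset) {
        for (int row = 0; row < height; row++) {
            for (int col = 0; col < width; col++) {
                // Absolute get: reads by index and leaves the buffer position untouched
                out[offset++] = buffer.get(row * rowStride + col * pixelStride);
            }
        }
        return offset;
    }

    public static void main(String[] args) {
        // Simulated U plane: 2x2 samples, pixelStride 2 (interleaved with V), rowStride 6 (padding).
        // The last row is shorter than rowStride, which real devices also do.
        ByteBuffer u = ByteBuffer.wrap(new byte[] {1, 0, 2, 0, 0, 0, 3, 0, 4});
        byte[] out = new byte[4];
        copy(u, 6, 2, 2, 2, out, 0);
        System.out.println(Arrays.toString(out)); // [1, 2, 3, 4]
    }
}
```

Indexing each sample as row * rowStride + col * pixelStride works for both planar (pixelStride 1) and semi-planar (pixelStride 2) devices. This matters because the layout is chosen by the device, not the app.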

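- Opening the camera device

cameraManager.openCamera(@NonNull String cameraId, @NonNull final CameraDevice.StateCallback callback, @Nullable Handler handler) takes three parameters:

- cameraId: the unique identifier of the camera

- callback: callback for changes in the device's connection state

- handler: the Handler on which the callback is invoked; if null, the current thread's Looper is used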

2.2.3 Creating the CaptureRequest

private final CameraDevice.StateCallback mCameraDeviceStateCallback = new CameraDevice.StateCallback() {

@Override

public void onOpened(CameraDevice camera) {

try {

mCameraDevice = camera;

SurfaceTexture surfaceTexture = mTextureView.getSurfaceTexture();

// Use a size from getOutputSizes(SurfaceTexture.class) (mPreviewSize) rather than a hard-coded 1280x720

surfaceTexture.setDefaultBufferSize(mPreviewSize.getWidth(), mPreviewSize.getHeight());

// This Surface renders the preview

Surface previewSurface = new Surface(surfaceTexture);

// Create the request

mPreviewBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);

mPreviewBuilder.set(CaptureRequest.CONTROL_AE_TARGET_FPS_RANGE, fpsRanges);

mPreviewBuilder.set(CaptureRequest.CONTROL_AF_MODE, CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_PICTURE);

mPreviewBuilder.addTarget(previewSurface);

mPreviewBuilder.addTarget(mImageReader.getSurface());

// Create the session; every target Surface of the request must be among its outputs

mCameraDevice.createCaptureSession(Arrays.asList(previewSurface, mImageReader.getSurface()), mCaptureSessionStateCallBack, mCameraHandler);

} catch (CameraAccessException e) {

e.printStackTrace();

Log.e(TAG, "onOpened:" + e.getMessage());

Log.e(TAG, "onOpened:" + e.getLocalizedMessage());

Log.e(TAG, "onOpened:" + e);

}

}

@Override

public void onDisconnected(CameraDevice camera) {

Log.e(TAG, "onDisconnected");

camera.close();

mCameraDevice = null;

}

@Override

public void onError(CameraDevice camera, int error) {

Log.e(TAG, "onError:" + error);

camera.close();

mCameraDevice = null;

}

};

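This code runs in the onOpened() callback of CameraDevice.StateCallback. Its core call is mCameraDevice.createCaptureSession(), which creates the capture session and takes three parameters:

- outputs: the list of Surfaces that receive image data; here it contains two Surfaces, the preview Surface and the ImageReader's Surface

- callback: a CameraCaptureSession.StateCallback that listens for session state changes

- handler: the Handler on which the CameraCaptureSession.StateCallback is invoked; if null, the current thread's Looper is used

The CaptureRequest.Builder is created with CameraDevice.createCaptureRequest(), which takes a templateType parameter specifying which template to build the request from. Values of templateType include:

- TEMPLATE_PREVIEW: preview

- TEMPLATE_STILL_CAPTURE: still capture (taking photos)

- TEMPLATE_RECORD: video recording

- TEMPLATE_VIDEO_SNAPSHOT: taking a still image while recording video

- TEMPLATE_MANUAL: manual control of the capture parameters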

2.2.4 Preview

private final CameraCaptureSession.StateCallback mCaptureSessionStateCallBack = new CameraCaptureSession.StateCallback() {

@Override

public void onConfigured(CameraCaptureSession session) {

try {

mCaptureSession = session;

CaptureRequest request = mPreviewBuilder.build();

// Finally, we start displaying the camera preview.

session.setRepeatingRequest(request, null, mCameraHandler);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

@Override

public void onConfigureFailed(CameraCaptureSession session) {

Log.e(TAG, "onConfigureFailed:");

}

};

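In CameraCaptureSession.StateCallback, Camera2 implements the preview by issuing the same capture request over and over. Each capture delivers a frame to the request's target Surfaces, which is how the preview appears on screen. The repeating capture is started with mCaptureSession.setRepeatingRequest(mPreviewRequest, mCaptureCallback, mBackgroundHandler), which takes three parameters:

- request: the CaptureRequest to repeat

- listener: a callback that tracks the state of each capture (the code above passes null)

- handler: the Handler on which the CameraCaptureSession.CaptureCallback is invoked; if null, the current thread's Looper is used

To stop the preview, call mCaptureSession.stopRepeating().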

2.3 Complete implementation

package com.aniljing.androidcamera;

import android.annotation.SuppressLint;

import android.app.Activity;

import android.content.Context;

import android.graphics.ImageFormat;

import android.graphics.Point;

import android.graphics.Rect;

import android.graphics.SurfaceTexture;

import android.hardware.camera2.CameraAccessException;

import android.hardware.camera2.CameraCaptureSession;

import android.hardware.camera2.CameraCharacteristics;

import android.hardware.camera2.CameraDevice;

import android.hardware.camera2.CameraManager;

import android.hardware.camera2.CaptureRequest;

import android.hardware.camera2.params.StreamConfigurationMap;

import android.media.Image;

import android.media.ImageReader;

import android.os.Handler;

import android.os.HandlerThread;

import android.util.Log;

import android.util.Range;

import android.util.Size;

import android.view.Surface;

import android.view.TextureView;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.List;

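/**

* @ClassName Camera2WithYUV

* Camera2 with two outputs:

* 1. a TextureView preview, displayed directly;

* 2. an ImageReader producing ImageFormat.YUV_420_888 frames, which the Java side repacks as NV21 and then converts to I420 with libyuv (YuvUtil)

*/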

public class Camera2WithYUV {

private static final String TAG = Camera2WithYUV.class.getSimpleName();

private Activity mContext;

private String mCameraId;

private HandlerThread handlerThread;

private Handler mCameraHandler;

private CameraDevice mCameraDevice;

private TextureView mTextureView;

private CaptureRequest.Builder mPreviewBuilder;

private CameraCaptureSession mCaptureSession;

private ImageReader mImageReader;

private Size mPreviewSize;

private final Point previewViewSize;

private Range<Integer> fpsRanges;

private byte[] i420;

private YUVDataCallBack mYUVDataCallBack;

private int orientation;

private YuvUtil mYuvUtil;

public Camera2WithYUV(Activity mContext) {

this.mContext = mContext;

handlerThread = new HandlerThread("camera");

handlerThread.start();

mCameraHandler = new Handler(handlerThread.getLooper());

previewViewSize = new Point();

previewViewSize.x = 640;

previewViewSize.y = 480;

mYuvUtil=new YuvUtil();

}

public void initTexture(TextureView textureView) {

Log.e(TAG, "initTexture:" + textureView);

mTextureView = textureView;

mTextureView.setSurfaceTextureListener(new TextureView.SurfaceTextureListener() {

@Override

public void onSurfaceTextureAvailable(SurfaceTexture surface, int width, int height) {

openCamera();

}

@Override

public void onSurfaceTextureSizeChanged(SurfaceTexture surface, int width, int height) {

}

@Override

public boolean onSurfaceTextureDestroyed(SurfaceTexture surface) {

return true;

}

@Override

public void onSurfaceTextureUpdated(SurfaceTexture surface) {

}

});

}

@SuppressLint("MissingPermission")

private void openCamera() {

CameraManager cameraManager = (CameraManager) mContext.getSystemService(Context.CAMERA_SERVICE);

try {

String[] cameraIds = cameraManager.getCameraIdList();

for (int i = 0; i < cameraIds.length; i++) {

// Properties that describe this camera device

CameraCharacteristics characteristics = cameraManager.getCameraCharacteristics(cameraIds[i]);

Range<Integer>[] allFpsRanges = characteristics.get(CameraCharacteristics.CONTROL_AE_AVAILABLE_TARGET_FPS_RANGES);

// Takes the last entry; this assumes it is the range we want, so check the list on your device

fpsRanges = allFpsRanges[allFpsRanges.length - 1];

// Front or back camera?

Integer facing = characteristics.get(CameraCharacteristics.LENS_FACING);

// Use the back camera

if (facing != null && facing == CameraCharacteristics.LENS_FACING_BACK) {

StreamConfigurationMap map = characteristics.get(CameraCharacteristics.SCALER_STREAM_CONFIGURATION_MAP);

// Check map before using it, otherwise getOutputSizes() throws a NullPointerException

if (map == null) {

continue;

}

mCameraId = cameraIds[i];

// Find the most suitable size (ideally an exact match)

mPreviewSize = getBestSupportedSize(new ArrayList<Size>(Arrays.asList(map.getOutputSizes(SurfaceTexture.class))));

orientation = characteristics.get(CameraCharacteristics.SENSOR_ORIENTATION);

// maxImages is the number of Images that can be acquired from this reader at the same time;

// the on-screen preview goes through the TextureView Surface, not through this reader

mImageReader = ImageReader.newInstance(mPreviewSize.getWidth(), mPreviewSize.getHeight(),

ImageFormat.YUV_420_888, 2);

mImageReader.setOnImageAvailableListener(mOnImageAvailableListener, mCameraHandler);

cameraManager.openCamera(mCameraId, mCameraDeviceStateCallback, mCameraHandler);

// Open only the first back camera found

break;

}

}

} catch (CameraAccessException r) {

Log.e(TAG, "openCamera:" + r);

Log.e(TAG, "openCamera getMessage:" + r.getMessage());

Log.e(TAG, "openCamera getLocalizedMessage:" + r.getLocalizedMessage());

}

}

private final CameraDevice.StateCallback mCameraDeviceStateCallback = new CameraDevice.StateCallback() {

@Override

public void onOpened(CameraDevice camera) {

try {

mCameraDevice = camera;

SurfaceTexture surfaceTexture = mTextureView.getSurfaceTexture();

// Use a size from getOutputSizes(SurfaceTexture.class) (mPreviewSize) rather than a hard-coded 1280x720

surfaceTexture.setDefaultBufferSize(mPreviewSize.getWidth(), mPreviewSize.getHeight());

// This Surface renders the preview

Surface previewSurface = new Surface(surfaceTexture);

// Create the request

mPreviewBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);

mPreviewBuilder.set(CaptureRequest.CONTROL_AE_TARGET_FPS_RANGE, fpsRanges);

mPreviewBuilder.set(CaptureRequest.CONTROL_AF_MODE, CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_PICTURE);

mPreviewBuilder.addTarget(previewSurface);

mPreviewBuilder.addTarget(mImageReader.getSurface());

// Create the session; every target Surface of the request must be among its outputs

mCameraDevice.createCaptureSession(Arrays.asList(previewSurface, mImageReader.getSurface()), mCaptureSessionStateCallBack, mCameraHandler);

} catch (CameraAccessException e) {

e.printStackTrace();

Log.e(TAG, "onOpened:" + e.getMessage());

Log.e(TAG, "onOpened:" + e.getLocalizedMessage());

Log.e(TAG, "onOpened:" + e);

}

}

@Override

public void onDisconnected(CameraDevice camera) {

Log.e(TAG, "onDisconnected");

camera.close();

mCameraDevice = null;

}

@Override

public void onError(CameraDevice camera, int error) {

Log.e(TAG, "onError:" + error);

camera.close();

mCameraDevice = null;

}

};

private final ImageReader.OnImageAvailableListener mOnImageAvailableListener = new ImageReader.OnImageAvailableListener() {

@SuppressLint("LongLogTag")

@Override

public void onImageAvailable(ImageReader reader) {

Image image = reader.acquireNextImage();

if (image == null) {

return;

}

try {

// YUV_420_888 is 4:2:0 subsampled: Y : U : V = 4 : 1 : 1, i.e. width * height * 3 / 2 bytes per frame

if (mYUVDataCallBack != null && image.getFormat() == ImageFormat.YUV_420_888) {

// getI420() works on the full image size, so report the same size to the callback

int width = image.getWidth();

int height = image.getHeight();

i420 = getI420(image);

if (i420 != null) {

mYUVDataCallBack.yuvData(i420, width, height, orientation);

}

}

} finally {

// Always return the Image; once maxImages Images are held, acquireNextImage() throws IllegalStateException

image.close();

}

}

};

private final CameraCaptureSession.StateCallback mCaptureSessionStateCallBack = new CameraCaptureSession.StateCallback() {

@Override

public void onConfigured(CameraCaptureSession session) {

try {

mCaptureSession = session;

CaptureRequest request = mPreviewBuilder.build();

// Finally, we start displaying the camera preview.

session.setRepeatingRequest(request, null, mCameraHandler);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

@Override

public void onConfigureFailed(CameraCaptureSession session) {

Log.e(TAG, "onConfigureFailed:");

}

};

public void releaseCamera() {

Log.e(TAG, "releaseCamera");

if (mCaptureSession != null) {

mCaptureSession.close();

mCaptureSession = null;

}

if (mCameraDevice != null) {

mCameraDevice.close();

mCameraDevice = null;

}

if (mPreviewBuilder != null) {

mPreviewBuilder = null;

}

if (mImageReader != null) {

mImageReader.setOnImageAvailableListener(null, null);

mImageReader.close();

mImageReader = null;

}

if (handlerThread != null && !handlerThread.isInterrupted()) {

handlerThread.quit();

handlerThread.interrupt();

handlerThread = null;

}

if (mCameraHandler != null) {

mCameraHandler = null;

}

}

public interface YUVDataCallBack {

void yuvData(byte[] data, int width, int height, int orientation);

}

public void setYUVDataCallBack(YUVDataCallBack YUVDataCallBack) {

mYUVDataCallBack = YUVDataCallBack;

}

private Size getBestSupportedSize(List<Size> sizes) {

Point maxPreviewSize = new Point(640, 480);

Point minPreviewSize = new Point(480, 320);

Size defaultSize = sizes.get(0);

Size[] tempSizes = sizes.toArray(new Size[0]);

// Sort by width, then height, both descending (a consistent comparator, as Arrays.sort requires)

Arrays.sort(tempSizes, (o1, o2) -> {

if (o1.getWidth() != o2.getWidth()) {

return Integer.compare(o2.getWidth(), o1.getWidth());

}

return Integer.compare(o2.getHeight(), o1.getHeight());

});

sizes = new ArrayList<>(Arrays.asList(tempSizes));

for (int i = sizes.size() - 1; i >= 0; i--) {

if (maxPreviewSize != null) {

if (sizes.get(i).getWidth() > maxPreviewSize.x || sizes.get(i).getHeight() > maxPreviewSize.y) {

sizes.remove(i);

continue;

}

}

if (minPreviewSize != null) {

if (sizes.get(i).getWidth() < minPreviewSize.x || sizes.get(i).getHeight() < minPreviewSize.y) {

sizes.remove(i);

}

}

}

if (sizes.size() == 0) {

return defaultSize;

}

Size bestSize = sizes.get(0);

float previewViewRatio;

if (previewViewSize != null) {

previewViewRatio = (float) previewViewSize.x / (float) previewViewSize.y;

} else {

previewViewRatio = (float) bestSize.getWidth() / (float) bestSize.getHeight();

}

if (previewViewRatio > 1) {

previewViewRatio = 1 / previewViewRatio;

}

for (Size s : sizes) {

if (Math.abs((s.getHeight() / (float) s.getWidth()) - previewViewRatio) < Math.abs(bestSize.getHeight() / (float) bestSize.getWidth() - previewViewRatio)) {

bestSize = s;

}

}

return bestSize;

}

private byte[] getI420(Image image) {

try {

int w = image.getWidth(), h = image.getHeight();

// 4:2:0 frame: Y is w * h bytes, U and V are w * h / 4 bytes each, so the total is w * h * 3 / 2

// (ImageFormat.getBitsPerPixel(ImageFormat.YUV_420_888) returns 12)

int i420Size = w * h * 3 / 2;

Image.Plane[] planes = image.getPlanes();

byte[] nv21 = new byte[i420Size];

// 1. Y plane (planes[0], whose pixelStride is always 1): copy row by row,

// skipping the padding at the end of each row when rowStride > w

java.nio.ByteBuffer yBuffer = planes[0].getBuffer();

int yRowStride = planes[0].getRowStride();

for (int row = 0; row < h; row++) {

yBuffer.position(row * yRowStride);

yBuffer.get(nv21, row * w, w);

}

// 2. Chroma: planes[1] is U and planes[2] is V. They share the same rowStride and pixelStride,

// and pixelStride may be 1 (planar) or 2 (interleaved), so read every sample by absolute index

// and write them in NV21's interleaved V,U order

java.nio.ByteBuffer uBuffer = planes[1].getBuffer();

java.nio.ByteBuffer vBuffer = planes[2].getBuffer();

int uvRowStride = planes[1].getRowStride();

int uvPixelStride = planes[1].getPixelStride();

int pos = w * h;

for (int row = 0; row < h / 2; row++) {

for (int col = 0; col < w / 2; col++) {

int index = row * uvRowStride + col * uvPixelStride;

nv21[pos++] = vBuffer.get(index);

nv21[pos++] = uBuffer.get(index);

}

}

// 3. NV21 -> I420 with libyuv

byte[] i420 = new byte[nv21.length];

mYuvUtil.nv21ToI420(nv21, w, h, i420);

return i420;

} catch (Exception e) {

e.printStackTrace();

}

return null;

}

}

Source code