Here's the background:

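You have some Sidekiq jobs with unique_for set, and then the system crashes. The uniqueness lock is still sitting in your Redis, but the job itself is gone, so later copies of the job can't be pushed either. At that point you need to manually get around this uniqueness lock.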

Official docs: Ent Unique Jobs · mperham/sidekiq Wiki · GitHub

1. First, let's look at how unique_for is set:

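```ruby
class MyJob
  include Sidekiq::Job

  # 10.minutes needs ActiveSupport (Rails); outside Rails, 600 (seconds) works too
  sidekiq_options unique_for: 10.minutes

  def perform(...)
  end
end
```

Official explanation: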

This means that a second job can be pushed to Redis after 10 minutes or after the first job has successfully processed. If your job retries for a while, 10 minutes can pass, thus allowing another copy of the same job to be pushed to Redis. Design your jobs so that uniqueness is considered best effort, not a 100% guarantee. A time limit is mandatory so that if a process crashes, any locks it is holding won't last forever.

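Put simply: with 10 minutes set, only one copy of this job can be in the system at a time. A second copy can't be pushed until the first one has processed successfully or the 10 minutes are up.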
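To make these rules concrete, here is a minimal pure-Ruby simulation. It assumes an in-memory hash standing in for the Redis lock and time passed in explicitly as seconds; the class `FakeUniqueLock` and the lock key format are hypothetical, and this is not how Sidekiq Enterprise is implemented. It only mimics the behavior in the quote above: a duplicate push is rejected until the first job succeeds or `unique_for` expires. The last two pushes mirror the crash from the background: nothing ever releases the lock, so it only disappears once it expires.

```ruby
# Hypothetical in-memory stand-in for a Redis lock with a TTL.
# NOT Sidekiq Enterprise's implementation; it only mimics the documented behavior.
class FakeUniqueLock
  def initialize
    @expires_at = {} # lock key => time (in seconds) when the lock expires
  end

  # Try to take the lock for `key`; returns true if the push may go ahead.
  def acquire(key, ttl, now)
    expiry = @expires_at[key]
    return false if expiry && now < expiry # lock still held

    @expires_at[key] = now + ttl
    true
  end

  # Called when the job finishes successfully.
  def release(key)
    @expires_at.delete(key)
  end
end

UNIQUE_FOR = 600 # 10 minutes, in seconds
lock = FakeUniqueLock.new
key = "MyJob:[1, 2, 3]" # hypothetical key: job class plus arguments

results = []
results << lock.acquire(key, UNIQUE_FOR, 0)   # first push: accepted
results << lock.acquire(key, UNIQUE_FOR, 60)  # duplicate within 10 min: rejected
lock.release(key)                             # first job succeeded, lock released
results << lock.acquire(key, UNIQUE_FOR, 120) # accepted again

# "Crash": the job pushed at t=120 never finishes, so nothing releases the lock.
results << lock.acquire(key, UNIQUE_FOR, 500) # still locked: rejected
results << lock.acquire(key, UNIQUE_FOR, 720) # 120 + 600 has passed: accepted

p results # => [true, false, true, false, true]
```

The expiry check is what keeps a crashed process from blocking the job forever, which is why the docs make the time limit mandatory.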

2. As for bypassing or disabling this setting, the official docs say:

If you declare unique_for in the Worker's class-level sidekiq_options but want to push a one-off job that bypasses the uniqueness check, use set to dynamically override the unique_for option:

```ruby
# disable uniqueness
MyJob.set(unique_for: false).perform_async(1, 2, 3)

# set a custom unique time (in seconds)
MyJob.set(unique_for: 300).perform_async(1, 2, 3)
```

3. Let's look at a real example

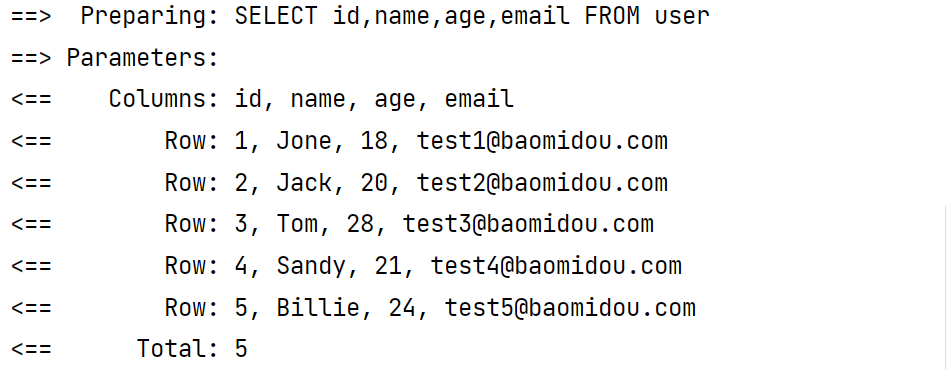

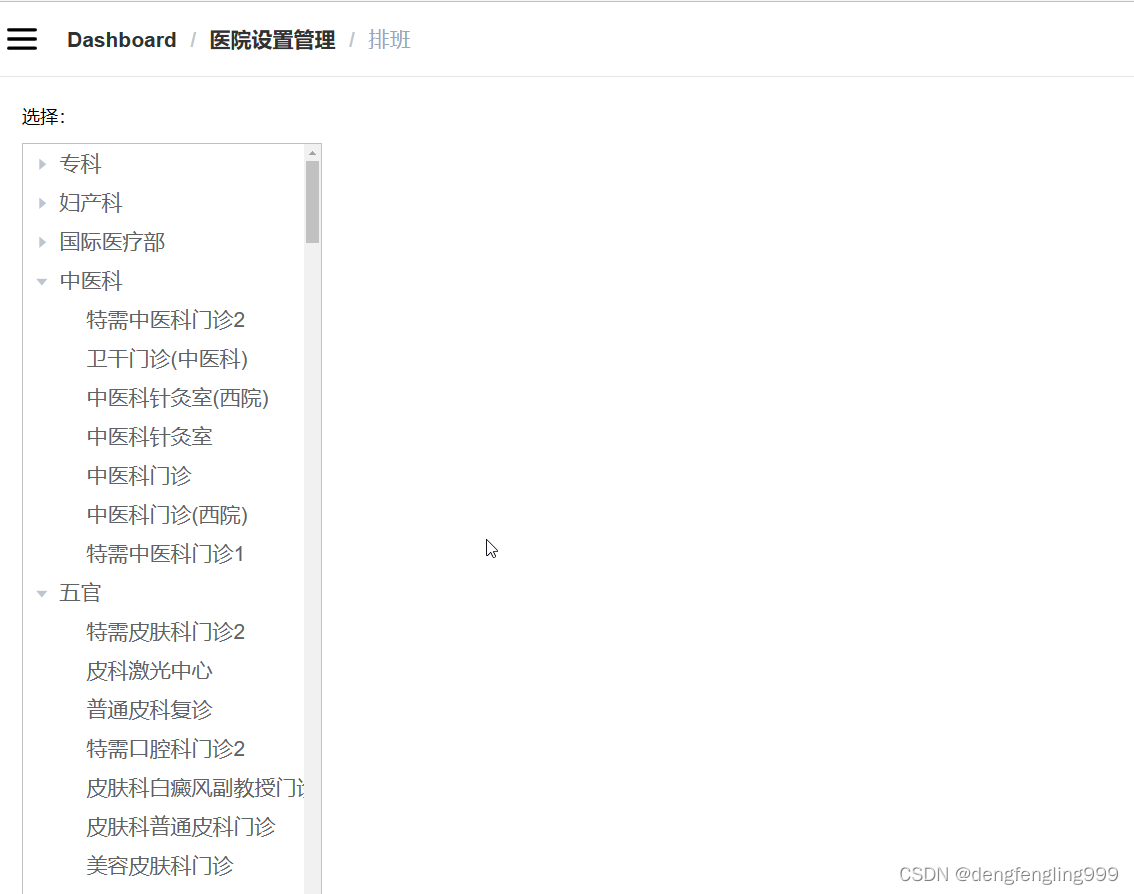

Under normal operation, you can see only one job within a given time window.

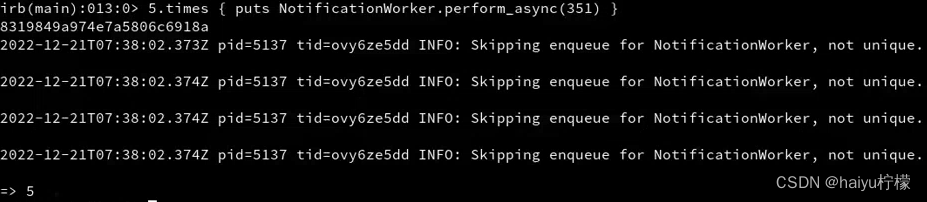

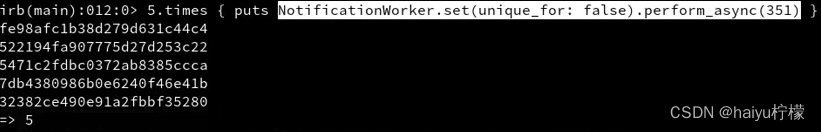

With uniqueness manually disabled, you can see a new job every time.
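The contrast between the two sets of screenshots can be reproduced with a small pure-Ruby simulation. It assumes a hypothetical in-memory `FakeUniqueLock` standing in for the Redis lock, one push attempt per minute for 10 minutes, and that no job finishes within that window (so nothing releases the lock early). `simulate_push` is made up for illustration: its `unique_for:` keyword plays the role of the per-push value passed through `set` in the real API. This is a sketch, not Sidekiq Enterprise's code.

```ruby
# Hypothetical in-memory stand-in for a Redis lock with a TTL (not Sidekiq's code).
class FakeUniqueLock
  def initialize
    @expires_at = {} # lock key => expiry time in seconds
  end

  def acquire(key, ttl, now)
    expiry = @expires_at[key]
    return false if expiry && now < expiry # lock still held

    @expires_at[key] = now + ttl
    true
  end
end

CLASS_UNIQUE_FOR = 600 # class-level default, like sidekiq_options unique_for: 10.minutes

# Hypothetical helper: returns true if the job would be pushed.
# unique_for: false skips the lock check entirely, like MyJob.set(unique_for: false).
def simulate_push(lock, args, now, unique_for: CLASS_UNIQUE_FOR)
  return true if unique_for == false

  lock.acquire("MyJob:#{args.inspect}", unique_for, now)
end

times = (0...10).map { |i| i * 60 } # one push attempt per minute for 10 minutes

normal = FakeUniqueLock.new
normal_pushed = times.count { |t| simulate_push(normal, [1, 2, 3], t) }

bypassed = FakeUniqueLock.new
bypassed_pushed = times.count { |t| simulate_push(bypassed, [1, 2, 3], t, unique_for: false) }

custom = FakeUniqueLock.new
custom_pushed = times.count { |t| simulate_push(custom, [1, 2, 3], t, unique_for: 300) }

p [normal_pushed, bypassed_pushed, custom_pushed] # => [1, 10, 2]
```

With the 600-second default only the first push gets through; with `unique_for: false` every attempt is accepted, in line with the "new job every time" observation; a custom 300-second window lets one more copy in after 5 minutes.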

And that's it, lesson learned.