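Preface:

Security is a topic that cannot be avoided, and in the cloud-native world it matters even more inside Kubernetes. Nobody wants a project riddled with holes, and appropriate security measures help keep a project or platform running stably and efficiently.

Security is a problem that never goes away, no matter how long a technology has been around. We should therefore always harden Kubernetes clusters with kube-bench, kube-hunter, and the CIS benchmarks. The more secure our clusters are, the less prone they are to downtime and attacks.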

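1. What is CIS?

To be clear, the CIS mentioned here is a technical security organization, not the Commonwealth of Independent States; it has nothing to do with politics.

The Center for Internet Security (CIS) is a long-established global security organization. It created its Kubernetes benchmark as "objective, consensus-driven security guidelines" that provide industry-standard recommendations for configuring cluster components and hardening the Kubernetes security posture.

Level 1 recommendations are relatively simple to implement, deliver the main benefits, and usually do not affect business functionality. Level 2, or "defense in depth", recommendations are meant for mission-critical environments that need a more comprehensive strategy.

CIS also provides tools that check whether cluster resources comply with the benchmark and raise alerts for non-compliant components. The CIS framework applies to all Kubernetes distributions.

- Pros: a rigorous and widely accepted blueprint for configuration settings.

- Cons: not every recommendation is relevant to every organization, so each one must be evaluated accordingly.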

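2. The current state of Kubernetes cluster security

There are reasons Kubernetes (K8s) has become the world's leading container orchestration platform: 74% of IT companies now use it for containerized workloads in production. It is often the simplest way to handle container configuration, deployment, and management at scale.

However, although Kubernetes makes containers easier to use, it also adds complexity when it comes to security. Clusters tend to grow as the business grows, there may be several of them, each one is made up of many components, and high availability may be required as well. All of this makes clusters more complex.

The default Kubernetes configuration does not always provide the best security for every deployed workload and microservice. On top of that, you are now responsible not only for protecting your environment against malicious attacks but also for meeting a range of compliance requirements. Put plainly, tools such as kubeadm make deployment easy, but the clusters they produce are not optimally secured.

Compliance has become key to ensuring business continuity, preventing reputational damage, and determining the risk level of each application. Compliance frameworks aim to address security and privacy concerns through easily monitored controls, team-level accountability, and vulnerability assessment, all of which pose unique challenges in a K8s environment.

Fully securing Kubernetes takes a multi-pronged approach: clean code, full observability, preventing information exchange with untrusted services, and digital signatures. You also have to consider network, supply chain, and CI/CD pipeline security, resource protection, architectural best practices, secrets management and protection, vulnerability scanning, and container runtime protection.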

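3. What is kube-bench?

kube-bench is a security checking tool for Kubernetes. At its core it is an application written in Go that helps practitioners run security checks against a deployed Kubernetes cluster.

So what exactly does kube-bench check in a Kubernetes cluster?

Mainly, it inspects the configuration files of each cluster component and checks whether they meet the security baseline.

Take the kubelet configuration file as an example:

The anonymous setting can be true or false. By default anonymous-auth is true, which allows anonymous authentication: requests to the kubelet API that present no credentials are handled as anonymous and use the default anonymous user "system:anonymous" and the default group "system:unauthenticated". This is clearly an insecure configuration, so the file below explicitly sets it to false, which effectively improves cluster security.

Checking every cluster's configuration by hand like this is clearly impractical.

kube-bench automatically finds the settings in each component that violate the security baseline and produces a detailed report. In other words, kube-bench is an automated security checking tool.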

[root@k8s-master cfg]# cat /var/lib/kubelet/config.yaml

apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.crt
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 0s
    cacheUnauthorizedTTL: 0s
cgroupDriver: systemd
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
cpuManagerReconcilePeriod: 0s
evictionPressureTransitionPeriod: 0s
fileCheckFrequency: 0s
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 0s
imageMinimumGCAge: 0s
kind: KubeletConfiguration
logging:
  flushFrequency: 0
  options:
    json:
      infoBufferSize: "0"
  verbosity: 0
memorySwap: {}
nodeStatusReportFrequency: 0s
nodeStatusUpdateFrequency: 0s
rotateCertificates: true
runtimeRequestTimeout: 0s
shutdownGracePeriod: 0s
shutdownGracePeriodCriticalPods: 0s
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 0s
syncFrequency: 0s
volumeStatsAggPeriod: 0s
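To make the idea of a baseline check concrete, here is a minimal Python sketch of the kind of test described above. It is not how kube-bench itself is implemented; it assumes the kubelet configuration has already been parsed into a dictionary (for example with a YAML library, which is not shown), and the function name `anonymous_auth_disabled` and the trimmed `sample_config` are made up for illustration.

```python
from typing import Any, Mapping


def anonymous_auth_disabled(kubelet_config: Mapping[str, Any]) -> bool:
    """Return True only if authentication.anonymous.enabled is explicitly false."""
    anonymous = kubelet_config.get("authentication", {}).get("anonymous", {})
    # A missing key counts as "not disabled", because the text above notes that
    # anonymous auth defaults to true when it is not set explicitly.
    return anonymous.get("enabled", True) is False


# Hypothetical, trimmed-down stand-in for the parsed config.yaml shown above.
sample_config = {
    "authentication": {
        "anonymous": {"enabled": False},
        "webhook": {"enabled": True},
    },
    "authorization": {"mode": "Webhook"},
}

print(anonymous_auth_disabled(sample_config))
```

A real benchmark run applies many checks of this shape to many files, which is exactly the repetitive work kube-bench automates.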

4. Deploying kube-bench

There are broadly three ways to deploy kube-bench.

Option 1:

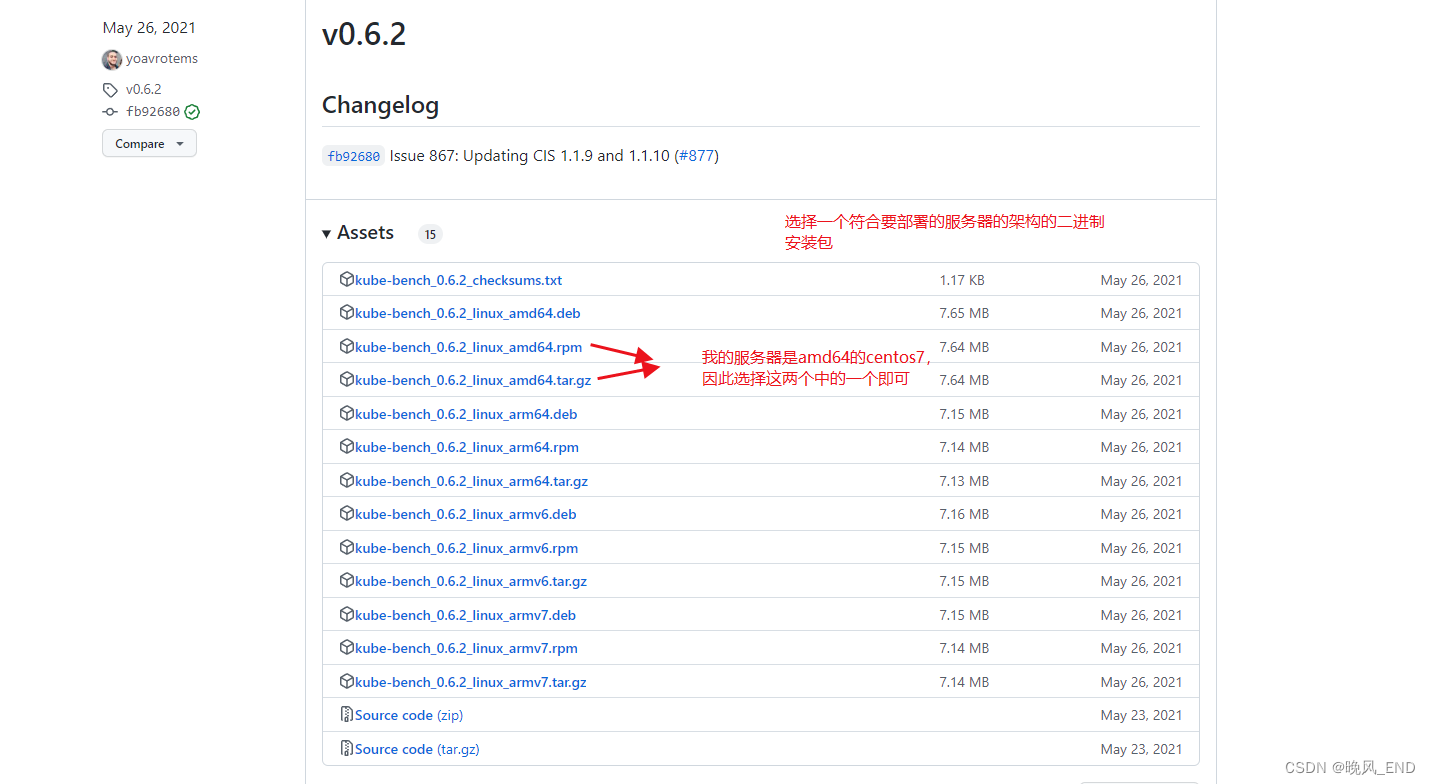

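Binary deployment

Download the binary release from: Releases · aquasecurity/kube-bench · GitHub

Upload it to the master node of the Kubernetes cluster and extract it. This yields an executable, kube-bench, and a configuration directory, cfg: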

[root@k8s-master ~]# ll

total 25248

drwxr-xr-x 13 root root 193 Jan 4 05:10 cfg

-rwxr-xr-x 1 1001 116 17838153 May 26 2021 kube-bench

-rw-r--r-- 1 root root 8009592 Jan 4 05:09 kube-bench_0.6.2_linux_amd64.tar.gz

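Run this command to start the security check:

./kube-bench run --targets master --config-dir ./cfg --config ./cfg/config.yaml

This checks the master node. The output is fairly long, so it is not discussed here; the results are analyzed in a later section, and this section only demonstrates deployment.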
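If the check needs to be driven from a script, the command above can be wrapped as in the following sketch. The flags are the ones used in the command line above; the function names, the default paths, and joining several targets with commas are assumptions made for illustration.

```python
import subprocess
from typing import List, Sequence


def build_kube_bench_command(
    targets: Sequence[str],
    binary: str = "./kube-bench",
    config_dir: str = "./cfg",
) -> List[str]:
    """Build the argument list for the binary deployment shown above."""
    return [
        binary,
        "run",
        "--targets",
        ",".join(targets),  # assumption: multiple targets are comma-separated
        "--config-dir",
        config_dir,
        "--config",
        f"{config_dir}/config.yaml",
    ]


def run_kube_bench(targets: Sequence[str]) -> str:
    """Run kube-bench and return its text report (requires the binary on disk)."""
    completed = subprocess.run(
        build_kube_bench_command(targets),
        capture_output=True,
        text=True,
        check=False,  # the caller decides how to treat a non-zero exit code
    )
    return completed.stdout
```

Calling `run_kube_bench(["master"])` from the directory that holds the extracted binary and cfg folder would return the same report that the command prints to the terminal.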

Option 2:

Running kube-bench from a container

Run a container that installs the kube-bench command onto the local host:

docker run --rm -v `pwd`:/host docker.io/aquasec/kube-bench:latest install

The command logs the following:

Unable to find image 'aquasec/kube-bench:latest' locally

latest: Pulling from aquasec/kube-bench

a0d0a0d46f8b: Pull complete

de518435ffef: Pull complete

c9e72fdf9efa: Pull complete

cfa016c18c39: Pull complete

629f37d57c12: Pull complete

04166caf0b52: Pull complete

fc9451058e82: Pull complete

8fe3a63c04b4: Pull complete

7e2fab6be223: Pull complete

b5eba067eb85: Pull complete

5d793ab25bac: Pull complete

db448d0d5d19: Pull complete

Digest: sha256:efaf4ba0d1c98d798c15baff83d0c15d56b4253d7e778a734a6eccc9c555626e

Status: Downloaded newer image for aquasec/kube-bench:latest

===============================================

kube-bench is now installed on your host

Run ./kube-bench to perform a security check

===============================================

Start the security check:

[root@k8s-master ~]# ./kube-bench run --targets=master

[INFO] 1 Master Node Security Configuration

[INFO] 1.1 Master Node Configuration Files

[PASS] 1.1.1 Ensure that the API server pod specification file permissions are set to 644 or more restrictive (Automated)

[PASS] 1.1.2 Ensure that the API server pod specification file ownership is set to root:root (Automated)

[PASS] 1.1.3 Ensure that the controller manager pod specification file permissions are set to 644 or more restrictive (Automated)

[PASS] 1.1.4 Ensure that the controller manager pod specification file ownership is set to root:root (Automated)

[PASS] 1.1.5 Ensure that the scheduler pod specification file permissions are set to 644 or more restrictive (Automated)

[PASS] 1.1.6 Ensure that the scheduler pod specification file ownership is set to root:root (Automated)

[PASS] 1.1.7 Ensure that the etcd pod specification file permissions are set to 644 or more restrictive (Automated)

[PASS] 1.1.8 Ensure that the etcd pod specification file ownership is set to root:root (Automated)

[WARN] 1.1.9 Ensure that the Container Network Interface file permissions are set to 644 or more restrictive (Manual)

[PASS] 1.1.10 Ensure that the Container Network Interface file ownership is set to root:root (Manual)

[PASS] 1.1.11 Ensure that the etcd data directory permissions are set to 700 or more restrictive (Automated)

[FAIL] 1.1.12 Ensure that the etcd data directory ownership is set to etcd:etcd (Automated)

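......

Option 3:

Running kube-bench inside the cluster as a Job pod

This requires downloading the source archive from GitHub to get the YAML files; I downloaded kube-bench-0.6.6.zip.

Upload the archive to the server, extract it, and enter the extracted directory. It contains a number of YAML files: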

[root@k8s-master kube-bench-0.6.6]# ls

cfg cmd CONTRIBUTING.md docs go.mod hack integration job-ack.yaml job-eks-asff.yaml job-gke.yaml job-master.yaml job.yaml main.go mkdocs.yml OWNERS

check codecov.yml Dockerfile entrypoint.sh go.sum hooks internal job-aks.yaml job-eks.yaml job-iks.yaml job-node.yaml LICENSE makefile NOTICE README.md

Pick job.yaml and apply it:

[root@k8s-master kube-bench-0.6.6]# kubectl apply -f job.yaml

job.batch/kube-bench created

Wait for the Job to finish and the pod status to become Completed:

[root@k8s-master kube-bench-0.6.6]# kubectl get po

NAME READY STATUS RESTARTS AGE

kube-bench-rn9dg 0/1 Completed 0 62s
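When this step is scripted, the table printed by kubectl get po can be turned into a name-to-status mapping so the script knows when the logs are ready. The sketch below only parses text that has already been captured; the function name `pod_statuses` is made up for illustration, and the sample is the output shown above.

```python
from typing import Dict


def pod_statuses(kubectl_output: str) -> Dict[str, str]:
    """Map pod name to STATUS from the plain-text table of `kubectl get po`."""
    lines = [line for line in kubectl_output.splitlines() if line.strip()]
    if not lines:
        return {}
    header = lines[0].split()
    name_col = header.index("NAME")
    status_col = header.index("STATUS")
    statuses = {}
    for line in lines[1:]:
        columns = line.split()
        statuses[columns[name_col]] = columns[status_col]
    return statuses


sample_output = """NAME READY STATUS RESTARTS AGE
kube-bench-rn9dg 0/1 Completed 0 62s
"""

for name, status in pod_statuses(sample_output).items():
    print(name, status)
```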

View the pod's logs; they contain the security check results:

[root@k8s-master kube-bench-0.6.6]# kubectl logs kube-bench-rn9dg

[INFO] 4 Worker Node Security Configuration

[INFO] 4.1 Worker Node Configuration Files

[PASS] 4.1.1 Ensure that the kubelet service file permissions are set to 644 or more restrictive (Automated)

[PASS] 4.1.2 Ensure that the kubelet service file ownership is set to root:root (Automated)

[PASS] 4.1.3 If proxy kubeconfig file exists ensure permissions are set to 644 or more restrictive (Manual)

[PASS] 4.1.4 Ensure that the proxy kubeconfig file ownership is set to root:root (Manual)

[PASS] 4.1.5 Ensure that the --kubeconfig kubelet.conf file permissions are set to 644 or more restrictive (Automated)

[PASS] 4.1.6 Ensure that the --kubeconfig kubelet.conf file ownership is set to root:root (Manual)

......

To check only the master, apply job-master.yaml instead. Its contents are:

[root@k8s-master kube-bench-0.6.6]# cat job-master.yaml

---
apiVersion: batch/v1
kind: Job
metadata:
  name: kube-bench-master
spec:
  template:
    spec:
      hostPID: true
      nodeSelector:
        node-role.kubernetes.io/master: ""
      tolerations:
        - key: node-role.kubernetes.io/master
          operator: Exists
          effect: NoSchedule
      containers:
        - name: kube-bench
          image: aquasec/kube-bench:latest
          command: ["kube-bench", "run", "--targets", "master"]
          volumeMounts:
            - name: var-lib-etcd
              mountPath: /var/lib/etcd
              readOnly: true
            - name: var-lib-kubelet
              mountPath: /var/lib/kubelet
              readOnly: true
            - name: var-lib-kube-scheduler
              mountPath: /var/lib/kube-scheduler
              readOnly: true
            - name: var-lib-kube-controller-manager
              mountPath: /var/lib/kube-controller-manager
              readOnly: true
            - name: etc-systemd
              mountPath: /etc/systemd
              readOnly: true
            - name: lib-systemd
              mountPath: /lib/systemd/
              readOnly: true
            - name: srv-kubernetes
              mountPath: /srv/kubernetes/
              readOnly: true
            - name: etc-kubernetes
              mountPath: /etc/kubernetes
              readOnly: true
            # /usr/local/mount-from-host/bin is mounted to access kubectl / kubelet, for auto-detecting the Kubernetes version.
            # You can omit this mount if you specify --version as part of the command.
            - name: usr-bin
              mountPath: /usr/local/mount-from-host/bin
              readOnly: true
            - name: etc-cni-netd
              mountPath: /etc/cni/net.d/
              readOnly: true
            - name: opt-cni-bin
              mountPath: /opt/cni/bin/
              readOnly: true
            - name: etc-passwd
              mountPath: /etc/passwd
              readOnly: true
            - name: etc-group
              mountPath: /etc/group
              readOnly: true
      restartPolicy: Never
      volumes:
        - name: var-lib-etcd
          hostPath:
            path: "/var/lib/etcd"
        - name: var-lib-kubelet
          hostPath:
            path: "/var/lib/kubelet"
        - name: var-lib-kube-scheduler
          hostPath:
            path: "/var/lib/kube-scheduler"
        - name: var-lib-kube-controller-manager
          hostPath:
            path: "/var/lib/kube-controller-manager"
        - name: etc-systemd
          hostPath:
            path: "/etc/systemd"
        - name: lib-systemd
          hostPath:
            path: "/lib/systemd"
        - name: srv-kubernetes
          hostPath:
            path: "/srv/kubernetes"
        - name: etc-kubernetes
          hostPath:
            path: "/etc/kubernetes"
        - name: usr-bin
          hostPath:
            path: "/usr/bin"
        - name: etc-cni-netd
          hostPath:
            path: "/etc/cni/net.d/"
        - name: opt-cni-bin
          hostPath:
            path: "/opt/cni/bin/"
        - name: etc-passwd
          hostPath:
            path: "/etc/passwd"
        - name: etc-group
          hostPath:
            path: "/etc/group"

View the logs of this Job's pod; they contain the master check results:

[root@k8s-master kube-bench-0.6.6]# kubectl logs kube-bench-master-mkgcd

[INFO] 1 Master Node Security Configuration

[INFO] 1.1 Master Node Configuration Files

[PASS] 1.1.1 Ensure that the API server pod specification file permissions are set to 644 or more restrictive (Automated)

[PASS] 1.1.2 Ensure that the API server pod specification file ownership is set to root:root (Automated)

[PASS] 1.1.3 Ensure that the controller manager pod specification file permissions are set to 644 or more restrictive (Automated)

[PASS] 1.1.4 Ensure that the controller manager pod specification file ownership is set to root:root (Automated)

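......

5. Analyzing kube-bench results and a remediation example

Before starting the actual check, I should describe the state of this Kubernetes cluster: it is a fresh kubeadm installation with all default settings, one master node, and two worker nodes.

kube-bench is deployed from the binary.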

[root@k8s-master mnt]# kubectl get no

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane,master 33h v1.23.15

k8s-node1 Ready <none> 33h v1.23.15

k8s-node2 Ready <none> 33h v1.23.15

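Let's check the master node first.

The valid values for the --targets parameter can be read from this error message, produced by passing an invalid target:

The specified --targets "policyers" are not configured for the CIS Benchmark cis-1.6\n Valid targets [master node controlplane etcd policies]

Based on this error, we use the master target to check the master node.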
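A wrapper script can catch this kind of typo before kube-bench is invoked. The sketch below hard-codes the target list from the error message above, which applies to the cis-1.6 benchmark used here; the function names are made up, and the suggestion relies on the standard library's difflib.

```python
import difflib
from typing import List, Optional, Sequence

# Valid targets as reported by the error message above (CIS Benchmark cis-1.6).
VALID_TARGETS = ("master", "node", "controlplane", "etcd", "policies")


def invalid_targets(requested: Sequence[str]) -> List[str]:
    """Return the requested targets that the benchmark does not define."""
    return [target for target in requested if target not in VALID_TARGETS]


def suggest_target(typo: str) -> Optional[str]:
    """Return the closest valid target name, or None if nothing is similar."""
    matches = difflib.get_close_matches(typo, VALID_TARGETS, n=1)
    return matches[0] if matches else None


for bad in invalid_targets(["master", "policyers"]):
    print(f"unknown target {bad!r}, did you mean {suggest_target(bad)!r}?")
```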

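Note:

Every check has an ID. For example, 1.1.1 refers to Ensure that the API server pod specification file permissions are set to 644 or more restrictive (Automated)

If a check is reported as FAIL or WARN, the section after == Remediations master == explains how to fix it. For example, the first WARN is 1.1.9, and its remediation is: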

1.1.9 Run the below command (based on the file location on your system) on the master node.

For example,

chmod 644 <path/to/cni/files>
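The phrase "644 or more restrictive" means that no permission bit may be set beyond those that 644 grants. The following sketch expresses that rule in Python; it illustrates the rule only and is not kube-bench's own code, and the function names are made up.

```python
import os
import stat


def mode_within_limit(mode: int, limit: int = 0o644) -> bool:
    """True if `mode` grants no permission bit that `limit` does not grant."""
    return (stat.S_IMODE(mode) & ~limit) == 0


def file_within_limit(path: str, limit: int = 0o644) -> bool:
    """Apply the same rule to a file on disk."""
    return mode_within_limit(os.stat(path).st_mode, limit)


# 600 and 644 satisfy "644 or more restrictive"; 664 and 755 do not.
for candidate in (0o600, 0o644, 0o664, 0o755):
    print(oct(candidate), mode_within_limit(candidate))
```

The same comparison with a limit of 0o700 matches the wording of check 1.1.11 for the etcd data directory.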

[root@k8s-master mnt]# ./kube-bench run --targets=master --config-dir ./cfg --config ./cfg/config.yaml

[INFO] 1 Master Node Security Configuration

[INFO] 1.1 Master Node Configuration Files

[PASS] 1.1.1 Ensure that the API server pod specification file permissions are set to 644 or more restrictive (Automated)

[PASS] 1.1.2 Ensure that the API server pod specification file ownership is set to root:root (Automated)

[PASS] 1.1.3 Ensure that the controller manager pod specification file permissions are set to 644 or more restrictive (Automated)

[PASS] 1.1.4 Ensure that the controller manager pod specification file ownership is set to root:root (Automated)

[PASS] 1.1.5 Ensure that the scheduler pod specification file permissions are set to 644 or more restrictive (Automated)

[PASS] 1.1.6 Ensure that the scheduler pod specification file ownership is set to root:root (Automated)

[PASS] 1.1.7 Ensure that the etcd pod specification file permissions are set to 644 or more restrictive (Automated)

[PASS] 1.1.8 Ensure that the etcd pod specification file ownership is set to root:root (Automated)

[WARN] 1.1.9 Ensure that the Container Network Interface file permissions are set to 644 or more restrictive (Manual)

[WARN] 1.1.10 Ensure that the Container Network Interface file ownership is set to root:root (Manual)

[PASS] 1.1.11 Ensure that the etcd data directory permissions are set to 700 or more restrictive (Automated)

[FAIL] 1.1.12 Ensure that the etcd data directory ownership is set to etcd:etcd (Automated)

[PASS] 1.1.13 Ensure that the admin.conf file permissions are set to 644 or more restrictive (Automated)

[PASS] 1.1.14 Ensure that the admin.conf file ownership is set to root:root (Automated)

[PASS] 1.1.15 Ensure that the scheduler.conf file permissions are set to 644 or more restrictive (Automated)

[PASS] 1.1.16 Ensure that the scheduler.conf file ownership is set to root:root (Automated)

[PASS] 1.1.17 Ensure that the controller-manager.conf file permissions are set to 644 or more restrictive (Automated)

[PASS] 1.1.18 Ensure that the controller-manager.conf file ownership is set to root:root (Automated)

[PASS] 1.1.19 Ensure that the Kubernetes PKI directory and file ownership is set to root:root (Automated)

[PASS] 1.1.20 Ensure that the Kubernetes PKI certificate file permissions are set to 644 or more restrictive (Manual)

[PASS] 1.1.21 Ensure that the Kubernetes PKI key file permissions are set to 600 (Manual)

[INFO] 1.2 API Server

[WARN] 1.2.1 Ensure that the --anonymous-auth argument is set to false (Manual)

[PASS] 1.2.2 Ensure that the --basic-auth-file argument is not set (Automated)

[PASS] 1.2.3 Ensure that the --token-auth-file parameter is not set (Automated)

[PASS] 1.2.4 Ensure that the --kubelet-https argument is set to true (Automated)

[PASS] 1.2.5 Ensure that the --kubelet-client-certificate and --kubelet-client-key arguments are set as appropriate (Automated)

[FAIL] 1.2.6 Ensure that the --kubelet-certificate-authority argument is set as appropriate (Automated)

[PASS] 1.2.7 Ensure that the --authorization-mode argument is not set to AlwaysAllow (Automated)

[PASS] 1.2.8 Ensure that the --authorization-mode argument includes Node (Automated)

[PASS] 1.2.9 Ensure that the --authorization-mode argument includes RBAC (Automated)

[WARN] 1.2.10 Ensure that the admission control plugin EventRateLimit is set (Manual)

[PASS] 1.2.11 Ensure that the admission control plugin AlwaysAdmit is not set (Automated)

[WARN] 1.2.12 Ensure that the admission control plugin AlwaysPullImages is set (Manual)

[WARN] 1.2.13 Ensure that the admission control plugin SecurityContextDeny is set if PodSecurityPolicy is not used (Manual)

[PASS] 1.2.14 Ensure that the admission control plugin ServiceAccount is set (Automated)

[PASS] 1.2.15 Ensure that the admission control plugin NamespaceLifecycle is set (Automated)

[FAIL] 1.2.16 Ensure that the admission control plugin PodSecurityPolicy is set (Automated)

[PASS] 1.2.17 Ensure that the admission control plugin NodeRestriction is set (Automated)

[PASS] 1.2.18 Ensure that the --insecure-bind-address argument is not set (Automated)

[FAIL] 1.2.19 Ensure that the --insecure-port argument is set to 0 (Automated)

[PASS] 1.2.20 Ensure that the --secure-port argument is not set to 0 (Automated)

[FAIL] 1.2.21 Ensure that the --profiling argument is set to false (Automated)

[FAIL] 1.2.22 Ensure that the --audit-log-path argument is set (Automated)

[FAIL] 1.2.23 Ensure that the --audit-log-maxage argument is set to 30 or as appropriate (Automated)

[FAIL] 1.2.24 Ensure that the --audit-log-maxbackup argument is set to 10 or as appropriate (Automated)

[FAIL] 1.2.25 Ensure that the --audit-log-maxsize argument is set to 100 or as appropriate (Automated)

[WARN] 1.2.26 Ensure that the --request-timeout argument is set as appropriate (Automated)

[PASS] 1.2.27 Ensure that the --service-account-lookup argument is set to true (Automated)

[PASS] 1.2.28 Ensure that the --service-account-key-file argument is set as appropriate (Automated)

[PASS] 1.2.29 Ensure that the --etcd-certfile and --etcd-keyfile arguments are set as appropriate (Automated)

[PASS] 1.2.30 Ensure that the --tls-cert-file and --tls-private-key-file arguments are set as appropriate (Automated)

[PASS] 1.2.31 Ensure that the --client-ca-file argument is set as appropriate (Automated)

[PASS] 1.2.32 Ensure that the --etcd-cafile argument is set as appropriate (Automated)

[WARN] 1.2.33 Ensure that the --encryption-provider-config argument is set as appropriate (Manual)

[WARN] 1.2.34 Ensure that encryption providers are appropriately configured (Manual)

[WARN] 1.2.35 Ensure that the API Server only makes use of Strong Cryptographic Ciphers (Manual)

[INFO] 1.3 Controller Manager

[WARN] 1.3.1 Ensure that the --terminated-pod-gc-threshold argument is set as appropriate (Manual)

[FAIL] 1.3.2 Ensure that the --profiling argument is set to false (Automated)

[PASS] 1.3.3 Ensure that the --use-service-account-credentials argument is set to true (Automated)

[PASS] 1.3.4 Ensure that the --service-account-private-key-file argument is set as appropriate (Automated)

[PASS] 1.3.5 Ensure that the --root-ca-file argument is set as appropriate (Automated)

[PASS] 1.3.6 Ensure that the RotateKubeletServerCertificate argument is set to true (Automated)

[PASS] 1.3.7 Ensure that the --bind-address argument is set to 127.0.0.1 (Automated)

[INFO] 1.4 Scheduler

[FAIL] 1.4.1 Ensure that the --profiling argument is set to false (Automated)

[PASS] 1.4.2 Ensure that the --bind-address argument is set to 127.0.0.1 (Automated)

== Remediations master ==

1.1.9 Run the below command (based on the file location on your system) on the master node.

For example,

chmod 644 <path/to/cni/files>

1.1.10 Run the below command (based on the file location on your system) on the master node.

For example,

chown root:root <path/to/cni/files>

1.1.12 On the etcd server node, get the etcd data directory, passed as an argument --data-dir,

from the below command:

ps -ef | grep etcd

Run the below command (based on the etcd data directory found above).

For example, chown etcd:etcd /var/lib/etcd

1.2.1 Edit the API server pod specification file /etc/kubernetes/manifests/kube-apiserver.yaml

on the master node and set the below parameter.

--anonymous-auth=false

1.2.6 Follow the Kubernetes documentation and setup the TLS connection between

the apiserver and kubelets. Then, edit the API server pod specification file

/etc/kubernetes/manifests/kube-apiserver.yaml on the master node and set the

--kubelet-certificate-authority parameter to the path to the cert file for the certificate authority.

--kubelet-certificate-authority=<ca-string>

1.2.10 Follow the Kubernetes documentation and set the desired limits in a configuration file.

Then, edit the API server pod specification file /etc/kubernetes/manifests/kube-apiserver.yaml

and set the below parameters.

--enable-admission-plugins=...,EventRateLimit,...

--admission-control-config-file=<path/to/configuration/file>

1.2.12 Edit the API server pod specification file /etc/kubernetes/manifests/kube-apiserver.yaml

on the master node and set the --enable-admission-plugins parameter to include

AlwaysPullImages.

--enable-admission-plugins=...,AlwaysPullImages,...

1.2.13 Edit the API server pod specification file /etc/kubernetes/manifests/kube-apiserver.yaml

on the master node and set the --enable-admission-plugins parameter to include

SecurityContextDeny, unless PodSecurityPolicy is already in place.

--enable-admission-plugins=...,SecurityContextDeny,...

1.2.16 Follow the documentation and create Pod Security Policy objects as per your environment.

Then, edit the API server pod specification file /etc/kubernetes/manifests/kube-apiserver.yaml

on the master node and set the --enable-admission-plugins parameter to a

value that includes PodSecurityPolicy:

--enable-admission-plugins=...,PodSecurityPolicy,...

Then restart the API Server.

1.2.19 Edit the API server pod specification file /etc/kubernetes/manifests/kube-apiserver.yaml

on the master node and set the below parameter.

--insecure-port=0

1.2.21 Edit the API server pod specification file /etc/kubernetes/manifests/kube-apiserver.yaml

on the master node and set the below parameter.

--profiling=false

1.2.22 Edit the API server pod specification file /etc/kubernetes/manifests/kube-apiserver.yaml

on the master node and set the --audit-log-path parameter to a suitable path and

file where you would like audit logs to be written, for example:

--audit-log-path=/var/log/apiserver/audit.log

1.2.23 Edit the API server pod specification file /etc/kubernetes/manifests/kube-apiserver.yaml

on the master node and set the --audit-log-maxage parameter to 30 or as an appropriate number of days:

--audit-log-maxage=30

1.2.24 Edit the API server pod specification file /etc/kubernetes/manifests/kube-apiserver.yaml

on the master node and set the --audit-log-maxbackup parameter to 10 or to an appropriate

value.

--audit-log-maxbackup=10

1.2.25 Edit the API server pod specification file /etc/kubernetes/manifests/kube-apiserver.yaml

on the master node and set the --audit-log-maxsize parameter to an appropriate size in MB.

For example, to set it as 100 MB:

--audit-log-maxsize=100

1.2.26 Edit the API server pod specification file /etc/kubernetes/manifests/kube-apiserver.yaml

and set the below parameter as appropriate and if needed.

For example,

--request-timeout=300s

1.2.33 Follow the Kubernetes documentation and configure a EncryptionConfig file.

Then, edit the API server pod specification file /etc/kubernetes/manifests/kube-apiserver.yaml

on the master node and set the --encryption-provider-config parameter to the path of that file: --encryption-provider-config=</path/to/EncryptionConfig/File>

1.2.34 Follow the Kubernetes documentation and configure a EncryptionConfig file.

In this file, choose aescbc, kms or secretbox as the encryption provider.

1.2.35 Edit the API server pod specification file /etc/kubernetes/manifests/kube-apiserver.yaml

on the master node and set the below parameter.

--tls-cipher-suites=TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_128_GCM

_SHA256,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_RSA_WITH_AES_256_GCM

_SHA384,TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_ECDSA_WITH_AES_256_GCM

_SHA384

1.3.1 Edit the Controller Manager pod specification file /etc/kubernetes/manifests/kube-controller-manager.yaml

on the master node and set the --terminated-pod-gc-threshold to an appropriate threshold,

for example:

--terminated-pod-gc-threshold=10

1.3.2 Edit the Controller Manager pod specification file /etc/kubernetes/manifests/kube-controller-manager.yaml

on the master node and set the below parameter.

--profiling=false

1.4.1 Edit the Scheduler pod specification file /etc/kubernetes/manifests/kube-scheduler.yaml file

on the master node and set the below parameter.

--profiling=false

== Summary master ==

43 checks PASS

11 checks FAIL

11 checks WARN

0 checks INFO

== Summary total ==

43 checks PASS

11 checks FAIL

11 checks WARN

0 checks INFO

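The output falls into three parts: the check results, the remediation advice after the == Remediations master == line, and the summary of the check results.

- [PASS]: the check passed

- [FAIL]: the check failed; pay close attention, and remediation advice is given in the output

- [WARN]: a warning that needs further attention, typically a check that has to be verified manually

- [INFO]: informational, exactly what it says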
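Because every result line starts with a status tag followed by a check ID, the text report is easy to post-process. The sketch below assumes that individual checks have three-part IDs such as 1.1.12, while shorter IDs such as 1.1 are section headings; this matches the output shown in this article but is an assumption about the format in general. The function names are made up.

```python
import re
from collections import Counter
from typing import List, Tuple

# Matches lines such as "[FAIL] 1.1.12 Ensure that ...".
CHECK_LINE = re.compile(r"^\[(PASS|FAIL|WARN|INFO)\]\s+(\d+\.\d+\.\d+)\s+(.*)$")


def parse_checks(report: str) -> List[Tuple[str, str, str]]:
    """Return (check_id, status, description) for every check line."""
    checks = []
    for line in report.splitlines():
        match = CHECK_LINE.match(line.strip())
        if match:
            status, check_id, description = match.groups()
            checks.append((check_id, status, description))
    return checks


def count_by_status(report: str) -> Counter:
    """Count checks per status, ignoring section headings."""
    return Counter(status for _, status, _ in parse_checks(report))


sample_report = """[INFO] 1 Master Node Security Configuration
[INFO] 1.1 Master Node Configuration Files
[PASS] 1.1.1 Ensure that the API server pod specification file permissions are set to 644 or more restrictive (Automated)
[WARN] 1.1.9 Ensure that the Container Network Interface file permissions are set to 644 or more restrictive (Manual)
[FAIL] 1.1.12 Ensure that the etcd data directory ownership is set to etcd:etcd (Automated)
"""

failed = [check_id for check_id, status, _ in parse_checks(sample_report) if status == "FAIL"]
print(count_by_status(sample_report), failed)
```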

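Since the output is long, the report can be redirected to a file; here I redirect it to a file named result:

./kube-bench run --targets=master --config-dir ./cfg --config ./cfg/config.yaml >result

One of the FAIL entries in the output above is:

[FAIL] 1.1.12 Ensure that the etcd data directory ownership is set to etcd:etcd (Automated)

This means the etcd data directory is not owned by the etcd user. Let's change the owner of the data directory and see whether the failure goes away.

Create an etcd user that cannot log in to the system, then make it the owner of the etcd data directory: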

[root@k8s-master mnt]# useradd etcd -s /sbin/nologin

[root@k8s-master mnt]# id etcd

uid=1000(etcd) gid=1000(etcd) groups=1000(etcd)

[root@k8s-master mnt]# chown -Rf etcd. /var/lib/etcd/
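The ownership change can also be confirmed directly, independent of kube-bench. This is a small sketch using only the Python standard library on Linux; the function names are made up, and looking up "etcd" assumes the user and group created above exist on the host.

```python
import grp
import os
import pwd
from typing import Tuple


def owner_ids(path: str) -> Tuple[int, int]:
    """Return the (uid, gid) that own `path`."""
    info = os.stat(path)
    return info.st_uid, info.st_gid


def ids_for(user: str, group: str) -> Tuple[int, int]:
    """Resolve a user and group name to numeric ids (raises KeyError if absent)."""
    return pwd.getpwnam(user).pw_uid, grp.getgrnam(group).gr_gid


def is_owned_by(path: str, uid: int, gid: int) -> bool:
    """True if `path` is owned by exactly this uid and gid."""
    return owner_ids(path) == (uid, gid)


# Example for the host above (not executed here, since it needs the etcd user):
# is_owned_by("/var/lib/etcd", *ids_for("etcd", "etcd"))
```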

Check again:

./kube-bench run --targets=master --config-dir ./cfg --config ./cfg/config.yaml >result

The finding is gone:

[root@k8s-master mnt]# tail -f result

10 checks FAIL

11 checks WARN

0 checks INFO

== Summary total ==

44 checks PASS

10 checks FAIL

11 checks WARN

0 checks INFO
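Comparing the summary before and after a fix is a quick way to confirm progress. The sketch below parses summary lines of the form shown above and reports which counters moved; the numbers are the ones from the two runs in this article, and the function names are made up.

```python
import re
from typing import Dict

SUMMARY_LINE = re.compile(r"^(\d+) checks (PASS|FAIL|WARN|INFO)$")


def parse_summary(text: str) -> Dict[str, int]:
    """Read lines such as "44 checks PASS" into a status-to-count mapping."""
    totals = {}
    for line in text.splitlines():
        match = SUMMARY_LINE.match(line.strip())
        if match:
            totals[match.group(2)] = int(match.group(1))
    return totals


def summary_delta(before: Dict[str, int], after: Dict[str, int]) -> Dict[str, int]:
    """Return only the statuses whose counts changed, as after minus before."""
    delta = {}
    for status in sorted(set(before) | set(after)):
        change = after.get(status, 0) - before.get(status, 0)
        if change:
            delta[status] = change
    return delta


before_fix = parse_summary("43 checks PASS\n11 checks FAIL\n11 checks WARN\n0 checks INFO")
after_fix = parse_summary("44 checks PASS\n10 checks FAIL\n11 checks WARN\n0 checks INFO")
print(summary_delta(before_fix, after_fix))
```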

Now check the worker node. There is one FAIL:

./kube-bench run --targets=node --config-dir ./cfg --config ./cfg/config.yaml >result

[FAIL] 4.2.6 Ensure that the --protect-kernel-defaults argument is set to true (Automated)

The remediation advice is:

== Remediations node ==

4.2.6 If using a Kubelet config file, edit the file to set protectKernelDefaults: true.

If using command line arguments, edit the kubelet service file

/lib/systemd/system/kubelet.service on each worker node and

set the below parameter in KUBELET_SYSTEM_PODS_ARGS variable.

--protect-kernel-defaults=true

Based on your system, restart the kubelet service. For example:

systemctl daemon-reload

systemctl restart kubelet.service

We accept this advice. Log in to the worker node and edit the kubelet drop-in file /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf (make the change on all three nodes):

[root@k8s-node1 ~]# cat /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf

# Note: This dropin only works with kubeadm and kubelet v1.11+

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"

Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"

# The next line is newly added

Environment="KUBELET_SYSTEM_PODS_ARGS=--protect-kernel-defaults=true"

# This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically

EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env

# This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use

# the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.

EnvironmentFile=-/etc/sysconfig/kubelet

ExecStart=

# The next line was also changed: $KUBELET_SYSTEM_PODS_ARGS is appended at the end

ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS --system-reserved=cpu=1,memory=2Gi,ephemeral-storage=5Gi $KUBELET_SYSTEM_PODS_ARGS

Restart the kubelet service:

systemctl daemon-reload && systemctl restart kubelet

Run kube-bench against the node target again. Check 4.2.6 now passes, so the --protect-kernel-defaults=true parameter has taken effect and no FAIL remains:

[root@k8s-master mnt]# ./kube-bench run --targets=node --config-dir ./cfg --config ./cfg/config.yaml

[INFO] 4 Worker Node Security Configuration

[INFO] 4.1 Worker Node Configuration Files

[PASS] 4.1.1 Ensure that the kubelet service file permissions are set to 644 or more restrictive (Automated)

[PASS] 4.1.2 Ensure that the kubelet service file ownership is set to root:root (Automated)

[PASS] 4.1.3 If proxy kubeconfig file exists ensure permissions are set to 644 or more restrictive (Manual)

[PASS] 4.1.4 Ensure that the proxy kubeconfig file ownership is set to root:root (Manual)

[PASS] 4.1.5 Ensure that the --kubeconfig kubelet.conf file permissions are set to 644 or more restrictive (Automated)

[PASS] 4.1.6 Ensure that the --kubeconfig kubelet.conf file ownership is set to root:root (Manual)

[PASS] 4.1.7 Ensure that the certificate authorities file permissions are set to 644 or more restrictive (Manual)

[PASS] 4.1.8 Ensure that the client certificate authorities file ownership is set to root:root (Manual)

[PASS] 4.1.9 Ensure that the kubelet --config configuration file has permissions set to 644 or more restrictive (Automated)

[PASS] 4.1.10 Ensure that the kubelet --config configuration file ownership is set to root:root (Automated)

[INFO] 4.2 Kubelet

[PASS] 4.2.1 Ensure that the anonymous-auth argument is set to false (Automated)

[PASS] 4.2.2 Ensure that the --authorization-mode argument is not set to AlwaysAllow (Automated)

[PASS] 4.2.3 Ensure that the --client-ca-file argument is set as appropriate (Automated)

[PASS] 4.2.4 Ensure that the --read-only-port argument is set to 0 (Manual)

[PASS] 4.2.5 Ensure that the --streaming-connection-idle-timeout argument is not set to 0 (Manual)

[PASS] 4.2.6 Ensure that the --protect-kernel-defaults argument is set to true (Automated)

[PASS] 4.2.7 Ensure that the --make-iptables-util-chains argument is set to true (Automated)

[PASS] 4.2.8 Ensure that the --hostname-override argument is not set (Manual)

[WARN] 4.2.9 Ensure that the --event-qps argument is set to 0 or a level which ensures appropriate event capture (Manual)

[WARN] 4.2.10 Ensure that the --tls-cert-file and --tls-private-key-file arguments are set as appropriate (Manual)

[PASS] 4.2.11 Ensure that the --rotate-certificates argument is not set to false (Manual)

[PASS] 4.2.12 Verify that the RotateKubeletServerCertificate argument is set to true (Manual)

[WARN] 4.2.13 Ensure that the Kubelet only makes use of Strong Cryptographic Ciphers (Manual)

......
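Besides re-running kube-bench, the flag can be looked up in the kubelet's expanded command line, for example a line copied from ps -ef output. The sketch below is a simple parser for --flag=value arguments; the function name and the sample command line are illustrative, with the sample assembled from the values shown in the drop-in file above.

```python
import shlex
from typing import Optional


def flag_value(command_line: str, flag: str) -> Optional[str]:
    """Return the value of `--flag=value`, "true" for a bare flag, else None."""
    value = None
    for token in shlex.split(command_line):
        if token == flag:
            value = "true"  # a bare boolean flag means true
        elif token.startswith(flag + "="):
            value = token.split("=", 1)[1]  # keep any further "=" in the value
    return value


# Illustrative command line built from the drop-in file shown earlier.
sample_command = (
    "/usr/bin/kubelet --config=/var/lib/kubelet/config.yaml "
    "--system-reserved=cpu=1,memory=2Gi,ephemeral-storage=5Gi "
    "--protect-kernel-defaults=true"
)

print(flag_value(sample_command, "--protect-kernel-defaults"))
```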