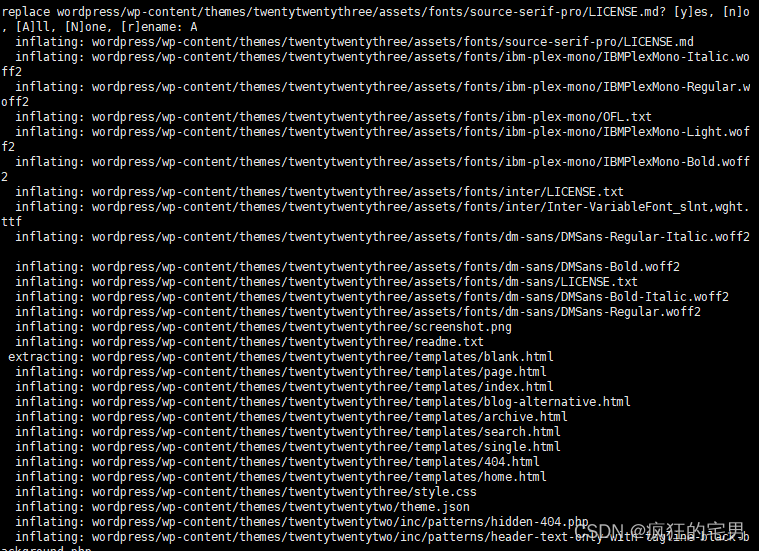

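The main steps for implementing VSM (variance shadow map) shadows in forward real-time rendering:

1. Encode the depth data and store it in an RTT (render-to-texture target).

2. Run a blur filter over the occlusion information in a vertical pass and then a horizontal pass, storing each result in its own RTT.

3. Apply the result (RTT) of the previous step to materials that can receive shadows.

For the details, see the implementation source code at the end of this article.

GitHub address of the source code for the current example: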

https://github.com/vilyLei/voxwebgpu/blob/feature/rendering/src/voxgpu/sample/BaseVSMShadowTest.ts

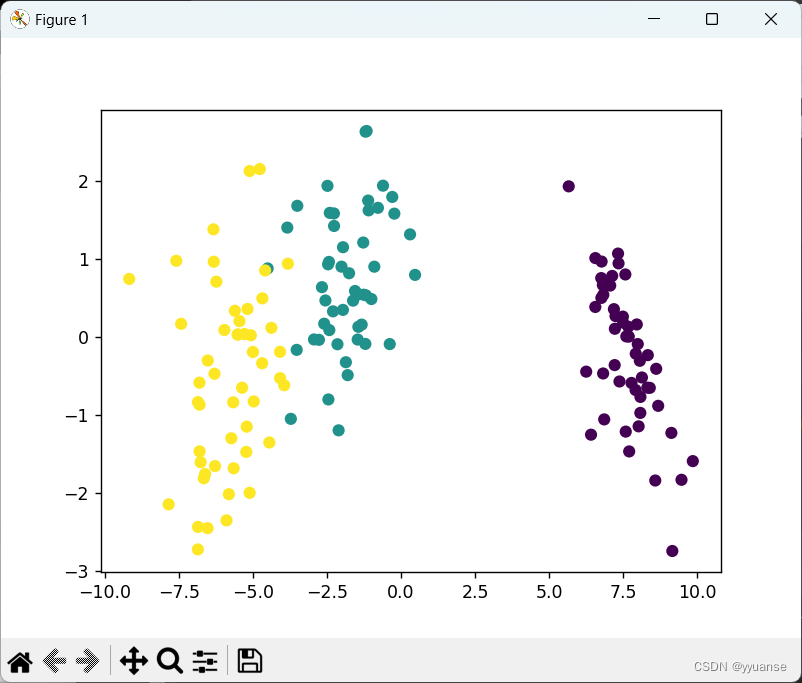

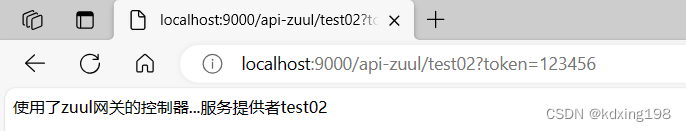

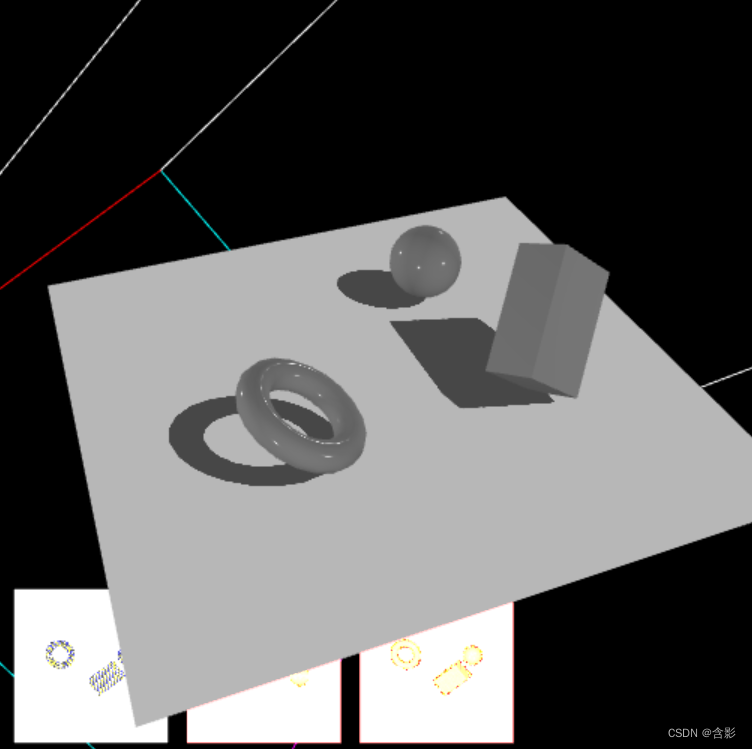

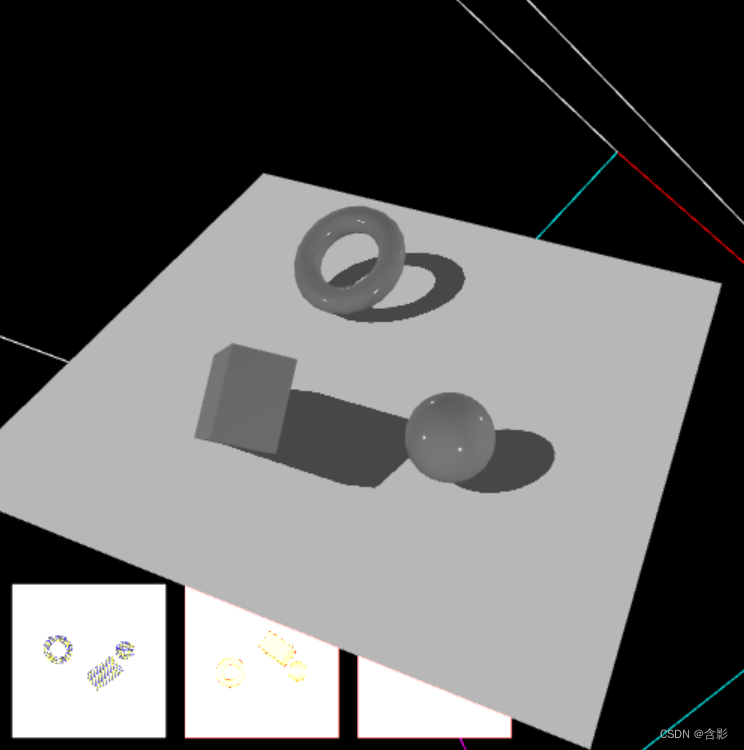

The images above show the current example running.

The main WGSL shader code:

Encoding depth:
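Before the listing, a minimal CPU-side sketch in TypeScript may help show what the packing does: it splits a depth value in [0, 1) into four base-256 digits so that an 8-bit RGBA render target can hold it. The function names mirror the WGSL, but this port, the `quantize8` helper, and the choice to keep the raw lowest-order fraction in the first channel are assumptions made for illustration, not the example's own code.

```typescript
type RGBA = [number, number, number, number];

const PACK_UPSCALE = 256 / 255;     // fraction -> 0..1 (including 1)
const UNPACK_DOWNSCALE = 255 / 256; // 0..1 -> fraction (excluding 1)
const PACK_FACTORS = [256 * 256 * 256, 256 * 256, 256];
const SHIFT_RIGHT_8 = 1 / 256;

const fract = (x: number): number => x - Math.floor(x);

// Split a depth in [0, 1) into four channels, lowest-order digit first.
function packDepthToRGBA(v: number): RGBA {
  const r0 = fract(v * PACK_FACTORS[0]);
  const r1 = fract(v * PACK_FACTORS[1]);
  const r2 = fract(v * PACK_FACTORS[2]);
  return [
    r0 * PACK_UPSCALE,
    (r1 - r0 * SHIFT_RIGHT_8) * PACK_UPSCALE, // remove what the lower channel already carries
    (r2 - r1 * SHIFT_RIGHT_8) * PACK_UPSCALE,
    (v - r2 * SHIFT_RIGHT_8) * PACK_UPSCALE,
  ];
}

// Inverse of packDepthToRGBA: a weighted sum of the four channels.
function unpackRGBAToDepth(c: RGBA): number {
  return UNPACK_DOWNSCALE * (
    c[0] / PACK_FACTORS[0] + c[1] / PACK_FACTORS[1] + c[2] / PACK_FACTORS[2] + c[3]
  );
}

// Hypothetical helper: emulate storage in an 8-bit-per-channel texture.
function quantize8(c: RGBA): RGBA {
  const q = (x: number): number => Math.min(255, Math.max(0, Math.round(x * 255))) / 255;
  return [q(c[0]), q(c[1]), q(c[2]), q(c[3])];
}

const depth = 0.123456;
const stored = quantize8(packDepthToRGBA(depth));
const restored = unpackRGBAToDepth(stored);
console.log(depth, restored, Math.abs(restored - depth));
```

Because the three higher-order channels come out as whole multiples of 1/255, 8-bit storage leaves them intact and only the lowest-order channel is rounded. The WGSL shader for this pass follows.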

struct VertexOutput {

@builtin(position) Position: vec4<f32>,

@location(0) projPos: vec4<f32>,

@location(1) objPos: vec4<f32>

}

@vertex

fn vertMain(

@location(0) position: vec3<f32>

) -> VertexOutput {

let objPos = vec4(position.xyz, 1.0);

let wpos = objMat * objPos;

var output: VertexOutput;

let projPos = projMat * viewMat * wpos;

output.Position = projPos;

output.projPos = projPos;

output.objPos = objPos;

return output;

}

const PackUpscale = 256. / 255.; // fraction -> 0..1 (including 1)

const UnpackDownscale = 255. / 256.; // 0..1 -> fraction (excluding 1)

const PackFactors = vec3<f32>(256. * 256. * 256., 256. * 256., 256.);

const UnpackFactors = UnpackDownscale / vec4<f32>(PackFactors, 1.0);

const ShiftRight8 = 1. / 256.;

fn packDepthToRGBA(v: f32) -> vec4<f32> {

var r = vec4<f32>(fract(v * PackFactors), v);

let v3 = r.yzw - (r.xyz * ShiftRight8);

r = vec4<f32>(r.x, v3); // keep the lowest-order fraction in x; only yzw are overflow-corrected

return r * PackUpscale;

}

@fragment

fn fragMain(

@location(0) projPos: vec4<f32>,

@location(1) objPos: vec4<f32>

) -> @location(0) vec4<f32> {

let fragCoordZ = 0.5 * projPos[2] / projPos[3] + 0.5;

var color4 = packDepthToRGBA( fragCoordZ );

return color4;

}

Blur filter sampling of the occlusion information, in a vertical and a horizontal pass:
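As a rough guide to the blur shader, the TypeScript sketch below reproduces its arithmetic on plain arrays. The first pass averages raw depths into a mean and a standard deviation; the second pass averages taps that are already (mean, standard deviation) pairs, rebuilding each tap's second moment as stdDev² + mean². All names here (`Moments`, `tapOffsets`, `momentsFromDepths`, `momentsFromMoments`) are hypothetical, and the `Math.max` guard is an added safeguard that the shader does not have.

```typescript
interface Moments {
  mean: number;
  stdDev: number;
}

const SAMPLE_RATE = 0.25;

// Tap offsets in texels: -radius, -0.75 * radius, ..., 0.75 * radius (8 taps).
function tapOffsets(radius: number): number[] {
  const offsets: number[] = [];
  for (let i = -1.0; i < 1.0; i += SAMPLE_RATE) {
    offsets.push(i * radius);
  }
  return offsets;
}

// Turn the averaged first and second moments into (mean, stdDev).
function finish(sum: number, squaredSum: number, count: number): Moments {
  const mean = sum / count;
  const squaredMean = squaredSum / count;
  // Guard against a tiny negative variance caused by rounding (added safeguard).
  return { mean, stdDev: Math.sqrt(Math.max(0, squaredMean - mean * mean)) };
}

// First pass: taps are raw depths.
function momentsFromDepths(depths: number[]): Moments {
  let sum = 0;
  let squaredSum = 0;
  for (const d of depths) {
    sum += d;
    squaredSum += d * d;
  }
  return finish(sum, squaredSum, depths.length);
}

// Second pass: taps are (mean, stdDev) pairs, so E[x^2] = stdDev^2 + mean^2.
function momentsFromMoments(taps: Moments[]): Moments {
  let sum = 0;
  let squaredSum = 0;
  for (const t of taps) {
    sum += t.mean;
    squaredSum += t.stdDev * t.stdDev + t.mean * t.mean;
  }
  return finish(sum, squaredSum, taps.length);
}

// Half the taps hit an occluder at depth 0.2, half hit the floor at depth 0.8.
const edge = momentsFromDepths([0.2, 0.2, 0.2, 0.2, 0.8, 0.8, 0.8, 0.8]);
console.log(tapOffsets(2.0), edge);
```

The loop from -1 to 1 in steps of `SAMPLE_RATE` yields eight taps, which is why the shader normalizes with `HALF_SAMPLE_RATE = 0.125`. The WGSL for both passes follows; the horizontal variant is selected with `USE_HORIZONAL_PASS`.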

struct VertexOutput {

@builtin(position) Position: vec4<f32>,

@location(0) uv: vec2<f32>

}

@vertex

fn vertMain(

@location(0) position: vec3<f32>,

@location(1) uv: vec2<f32>

) -> VertexOutput {

var output: VertexOutput;

output.Position = vec4(position.xyz, 1.0);

output.uv = uv;

return output;

}

const PackUpscale = 256. / 255.; // fraction -> 0..1 (including 1)

const UnpackDownscale = 255. / 256.; // 0..1 -> fraction (excluding 1)

const PackFactors = vec3<f32>(256. * 256. * 256., 256. * 256., 256.);

const UnpackFactors = UnpackDownscale / vec4<f32>(PackFactors, 1.0);

const ShiftRight8 = 1. / 256.;

fn packDepthToRGBA(v: f32) -> vec4<f32> {

var r = vec4<f32>(fract(v * PackFactors), v);

let v3 = r.yzw - (r.xyz * ShiftRight8);

return vec4<f32>(r.x, v3) * PackUpscale; // keep the lowest-order fraction in x

}

fn unpackRGBAToDepth( v: vec4<f32> ) -> f32 {

return dot( v, UnpackFactors );

}

fn pack2HalfToRGBA( v: vec2<f32> ) -> vec4<f32> {

let r = vec4( v.x, fract( v.x * 255.0 ), v.y, fract( v.y * 255.0 ));

return vec4<f32>( r.x - r.y / 255.0, r.y, r.z - r.w / 255.0, r.w);

}

fn unpackRGBATo2Half( v: vec4<f32> ) -> vec2<f32> {

return vec2<f32>( v.x + ( v.y / 255.0 ), v.z + ( v.w / 255.0 ) );

}

const SAMPLE_RATE = 0.25;

const HALF_SAMPLE_RATE = 0.125;

@fragment

fn fragMain(

@location(0) uv: vec2<f32>,

) -> @location(0) vec4<f32> {

var mean = 0.0;

var squared_mean = 0.0;

let resolution = viewParam.zw;

let fragCoord = resolution * uv;

let radius = param[3];

let c4 = textureSample(shadowDepthTexture, shadowDepthSampler, uv);

var depth = unpackRGBAToDepth( c4 );

for ( var i = -1.0; i < 1.0 ; i += SAMPLE_RATE) {

#ifdef USE_HORIZONAL_PASS

let distribution = unpackRGBATo2Half( textureSample(shadowDepthTexture, shadowDepthSampler, ( fragCoord.xy + vec2( i, 0.0 ) * radius ) / resolution ) );

mean += distribution.x;

squared_mean += distribution.y * distribution.y + distribution.x * distribution.x;

#else

depth = unpackRGBAToDepth( textureSample(shadowDepthTexture, shadowDepthSampler, ( fragCoord.xy + vec2( 0.0, i ) * radius ) / resolution ) );

mean += depth;

squared_mean += depth * depth;

#endif

}

mean = mean * HALF_SAMPLE_RATE;

squared_mean = squared_mean * HALF_SAMPLE_RATE;

let std_dev = sqrt( squared_mean - mean * mean );

var color4 = pack2HalfToRGBA( vec2<f32>( mean, std_dev ) );

return color4;

}

Applying the result to a material that can receive shadows (example usage):
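The visibility test in this shader rests on the one-sided Chebyshev inequality: for occluder depths with mean μ and variance σ², the fraction lying at or beyond a receiver depth t > μ is at most σ² / (σ² + (t − μ)²). The TypeScript sketch below is a CPU port of the `VSMShadow` function for experimenting with the numbers; the port itself and the `clamp` helper are illustrative assumptions, while the constants 0.3 and 0.95 are the ones used in the shader.

```typescript
const clamp = (x: number, lo: number, hi: number): number => Math.min(hi, Math.max(lo, x));

// Returns 1 for fully lit and 0 for fully shadowed.
// mean and stdDev come from the blurred shadow map; compare is the receiver depth.
function vsmShadow(mean: number, stdDev: number, compare: number): number {
  // step(compare, mean): lit when the receiver is not behind the mean occluder depth.
  const hardShadow = mean >= compare ? 1.0 : 0.0;
  if (hardShadow === 1.0) {
    return 1.0;
  }
  const distance = compare - mean;
  const variance = Math.max(0.0, stdDev * stdDev);
  // Upper bound on the fraction of the filter region that does not occlude the receiver.
  let softness = variance / (variance + distance * distance);
  // Remap [0.3, 0.95] to [0, 1]; cutting off the low end reduces light bleeding.
  softness = clamp((softness - 0.3) / (0.95 - 0.3), 0.0, 1.0);
  return clamp(Math.max(hardShadow, softness), 0.0, 1.0);
}

console.log(vsmShadow(0.5, 0.1, 0.4)); // receiver in front of the occluders
console.log(vsmShadow(0.5, 0.0, 0.6)); // receiver behind a uniform occluder
console.log(vsmShadow(0.5, 0.3, 0.6)); // receiver near a shadow edge (high variance)
```

A large variance marks a depth discontinuity inside the blur footprint, which is where the penumbra appears. The WGSL for the receiving material follows.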

struct VertexOutput {

@builtin(position) Position: vec4<f32>,

@location(0) uv: vec2<f32>,

@location(1) worldNormal: vec3<f32>,

@location(2) svPos: vec4<f32>

}

@vertex

fn vertMain(

@location(0) position: vec3<f32>,

@location(1) uv: vec2<f32>,

@location(2) normal: vec3<f32>

) -> VertexOutput {

let objPos = vec4(position.xyz, 1.0);

let wpos = objMat * objPos;

var output: VertexOutput;

let projPos = projMat * viewMat * wpos;

output.Position = projPos;

// output.normal = normal;

let invMat33 = inverseM33(m44ToM33(objMat));

output.uv = uv;

output.worldNormal = normalize(normal * invMat33);

output.svPos = shadowMatrix * wpos;

return output;

}

fn pack2HalfToRGBA( v: vec2<f32> ) -> vec4<f32> {

let r = vec4( v.x, fract( v.x * 255.0 ), v.y, fract( v.y * 255.0 ));

return vec4<f32>( r.x - r.y / 255.0, r.y, r.z - r.w / 255.0, r.w);

}

fn unpackRGBATo2Half( v: vec4<f32> ) -> vec2<f32> {

return vec2<f32>( v.x + ( v.y / 255.0 ), v.z + ( v.w / 255.0 ) );

}

fn texture2DDistribution( uv: vec2<f32> ) -> vec2<f32> {

let v4 = textureSample(shadowDepthTexture, shadowDepthSampler, uv );

return unpackRGBATo2Half( v4 );

}

fn VSMShadow (uv: vec2<f32>, compare: f32 ) -> f32 {

var occlusion = 1.0;

let distribution = texture2DDistribution( uv );

let hard_shadow = step( compare , distribution.x ); // Hard Shadow

if (hard_shadow != 1.0 ) {

let distance = compare - distribution.x ;

let variance = max( 0.00000, distribution.y * distribution.y );

var softness_probability = variance / (variance + distance * distance ); // Chebyshev's inequality

softness_probability = clamp( ( softness_probability - 0.3 ) / ( 0.95 - 0.3 ), 0.0, 1.0 ); // 0.3 reduces light bleed

occlusion = clamp( max( hard_shadow, softness_probability ), 0.0, 1.0 );

}

return occlusion;

}

fn getVSMShadow( shadowMapSize: vec2<f32>, shadowBias: f32, shadowRadius: f32, shadowCoordP: vec4<f32> ) -> f32 {

var shadowCoord = shadowCoordP.xyz / shadowCoordP.w;

shadowCoord.z += shadowBias; // apply the bias to the depth that is actually compared below

let inFrustumVec = vec4<bool> ( shadowCoord.x >= 0.0, shadowCoord.x <= 1.0, shadowCoord.y >= 0.0, shadowCoord.y <= 1.0 );

let inFrustum = all( inFrustumVec );

let frustumTestVec = vec2<bool>( inFrustum, shadowCoord.z <= 1.0 );

var shadow = VSMShadow( shadowCoord.xy, shadowCoord.z );

if ( !all( frustumTestVec ) ) {

shadow = 1.0;

}

return shadow;

}

@fragment

fn fragMain(

@location(0) uv: vec2<f32>,

@location(1) worldNormal: vec3<f32>,

@location(2) svPos: vec4<f32>

) -> @location(0) vec4<f32> {

var color = vec4<f32>(1.0);

var shadow = getVSMShadow(params[1].xy, params[0].x, params[0].z, svPos );

let shadowIntensity = 1.0 - params[0].w;

shadow = clamp(shadow, 0.0, 1.0) * (1.0 - shadowIntensity) + shadowIntensity;

var f = clamp(dot(worldNormal, params[2].xyz),0.0,1.0);

if(f > 0.0001) {

f = min(shadow,clamp(f, shadowIntensity,1.0));

}else {

f = shadowIntensity;

}

var color4 = vec4<f32>(color.xyz * vec3(f * 0.9 + 0.1), 1.0);

return color4;

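}

This example is built on this rendering system (the voxwebgpu repository linked above). The TypeScript source code of the current example is as follows: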

export class BaseVSMShadowTest {

private mRscene = new RendererScene();

private mShadowCamera: Camera;

private mDebug = false;

initialize(): void {

this.mRscene.initialize({

canvasWith: 512,

canvasHeight: 512,

rpassparam: { multisampleEnabled: true }

});

this.initScene();

this.initEvent();

}

private mEntities: Entity3D[] = [];

private initScene(): void {

let rc = this.mRscene;

this.buildShadowCam();

let sph = new SphereEntity({

radius: 80,

transform: {

position: [-230.0, 100.0, -200.0]

}

});

this.mEntities.push(sph);

rc.addEntity(sph);

let box = new BoxEntity({

minPos: [-30, -30, -30],

maxPos: [130, 230, 80],

transform: {

position: [160.0, 100.0, -210.0],

rotation: [50, 130, 80]

}

});

this.mEntities.push(box);

rc.addEntity(box);

let torus = new TorusEntity({

transform: {

position: [160.0, 100.0, 210.0],

rotation: [50, 30, 80]

}

});

this.mEntities.push(torus);

rc.addEntity(torus);

if (!this.mDebug) {

this.applyShadow();

}

}

private mShadowDepthRTT = { uuid: "rtt-shadow-depth", rttTexture: {}, shdVarName: 'shadowDepth' };

private mOccVRTT = { uuid: "rtt--occV", rttTexture: {}, shdVarName: 'shadowDepth' };

private mOccHRTT = { uuid: "rtt--occH", rttTexture: {}, shdVarName: 'shadowDepth' };

private applyShadowDepthRTT(): void {

let rc = this.mRscene;

// rtt texture proxy descriptor

let rttTex = this.mShadowDepthRTT;

// define color attachment 0 of the rtt pass

let colorAttachments = [

{

texture: rttTex,

// white clear color

clearValue: { r: 1, g: 1, b: 1, a: 1.0 },

loadOp: "clear",

storeOp: "store"

}

];

// create a separate rtt rendering pass

let rPass = rc.createRTTPass({ colorAttachments });

rPass.node.camera = this.mShadowCamera;

let extent = [-0.5, -0.5, 0.8, 0.8];

const shadowDepthShdSrc = {

shaderSrc: { code: shadowDepthWGSL, uuid: "shadowDepthShdSrc" }

};

let material = this.createDepthMaterial(shadowDepthShdSrc);

let es = this.createDepthEntities([material], false);

for (let i = 0; i < es.length; ++i) {

rPass.addEntity(es[i]);

}

// display the render result

extent = [-0.95, -0.95, 0.4, 0.4];

let entity = new FixScreenPlaneEntity({ extent, flipY: true, textures: [{ diffuse: rttTex }] });

rc.addEntity(entity);

}

private applyBuildDepthOccVRTT(): void {

let rc = this.mRscene;

// rtt texture proxy descriptor

let rttTex = this.mOccVRTT;

// define color attachment 0 of the rtt pass

let colorAttachments = [

{

texture: rttTex,

// white clear color

clearValue: { r: 1, g: 1, b: 1, a: 1.0 },

loadOp: "clear",

storeOp: "store"

}

];

// create a separate rtt rendering pass

let rPass = rc.createRTTPass({ colorAttachments });

let material = new ShadowOccBlurMaterial();

let ppt = material.property;

ppt.setShadowRadius(this.mShadowRadius);

ppt.setViewSize(this.mShadowMapW, this.mShadowMapH);

material.addTextures([this.mShadowDepthRTT]);

let extent = [-1, -1, 2, 2];

let rttEntity = new FixScreenPlaneEntity({ extent, materials: [material] });

rPass.addEntity(rttEntity);

// display the render result

extent = [-0.5, -0.95, 0.4, 0.4];

let entity = new FixScreenPlaneEntity({ extent, flipY: true, textures: [{ diffuse: rttTex }] });

rc.addEntity(entity);

}

private applyBuildDepthOccHRTT(): void {

let rc = this.mRscene;

// rtt texture proxy descriptor

let rttTex = this.mOccHRTT;

// define color attachment 0 of the rtt pass

let colorAttachments = [

{

texture: rttTex,

// white clear color

clearValue: { r: 1, g: 1, b: 1, a: 1.0 },

loadOp: "clear",

storeOp: "store"

}

];

// create a separate rtt rendering pass

let rPass = rc.createRTTPass({ colorAttachments });

let material = new ShadowOccBlurMaterial();

let ppt = material.property;

ppt.setShadowRadius(this.mShadowRadius);

ppt.setViewSize(this.mShadowMapW, this.mShadowMapH);

material.property.toHorizonalBlur();

material.addTextures([this.mOccVRTT]);

let extent = [-1, -1, 2, 2];

let rttEntity = new FixScreenPlaneEntity({ extent, materials: [material] });

rPass.addEntity(rttEntity);

// display the render result

extent = [-0.05, -0.95, 0.4, 0.4];

let entity = new FixScreenPlaneEntity({ extent, flipY: true, textures: [{ diffuse: rttTex }] });

rc.addEntity(entity);

}

private createDepthMaterial(shaderSrc: WGRShderSrcType, faceCullMode = "none"): WGMaterial {

let pipelineDefParam = {

depthWriteEnabled: true,

faceCullMode,

blendModes: [] as string[]

};

const material = new WGMaterial({

shadinguuid: "shadow-depth_material",

shaderSrc,

pipelineDefParam

});

return material;

}

private createDepthEntities(materials: WGMaterial[], flag = false): Entity3D[] {

const rc = this.mRscene;

let entities = [];

let ls = this.mEntities;

let tot = ls.length;

for (let i = 0; i < tot; ++i) {

let et = ls[i];

let entity = new Entity3D({ transform: et.transform });

entity.materials = materials;

entity.geometry = et.geometry;

entities.push(entity);

if (flag) {

rc.addEntity(entity);

}

}

return entities;

}

private mShadowBias = -0.0005;

private mShadowRadius = 2.0;

private mShadowMapW = 512;

private mShadowMapH = 512;

private mShadowViewW = 1300;

private mShadowViewH = 1300;

private buildShadowCam(): void {

const cam = new Camera({

eye: [600.0, 800.0, -600.0],

near: 0.1,

far: 1900,

perspective: false,

viewWidth: this.mShadowViewW,

viewHeight: this.mShadowViewH

});

cam.update();

this.mShadowCamera = cam;

const rsc = this.mRscene;

let frameColors = [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0], [1.0, 0.0, 0.0], [0.0, 1.0, 1.0]];

let boxFrame = new BoundsFrameEntity({ vertices8: cam.frustum.vertices, frameColors });

rsc.addEntity(boxFrame);

}

private initEvent(): void {

const rc = this.mRscene;

rc.addEventListener(MouseEvent.MOUSE_DOWN, this.mouseDown);

new MouseInteraction().initialize(rc, 0, false).setAutoRunning(true);

}

private mFlag = -1;

private buildShadowReceiveEntity(): void {

let cam = this.mShadowCamera;

let transMatrix = new Matrix4();

transMatrix.setScaleXYZ(0.5, -0.5, 0.5);

transMatrix.setTranslationXYZ(0.5, 0.5, 0.5);

let shadowMat = new Matrix4();

shadowMat.copyFrom(cam.viewProjMatrix);

shadowMat.append(transMatrix);

let material = new ShadowReceiveMaterial();

let ppt = material.property;

ppt.setShadowRadius(this.mShadowRadius);

ppt.setShadowBias(this.mShadowBias);

ppt.setShadowSize(this.mShadowMapW, this.mShadowMapH);

ppt.setShadowMatrix(shadowMat);

ppt.setDirec(cam.nv);

material.addTextures([this.mOccHRTT]);

const rc = this.mRscene;

let plane = new PlaneEntity({

axisType: 1,

extent: [-600, -600, 1200, 1200],

transform: {

position: [0, -1, 0]

},

materials: [material]

});

rc.addEntity(plane);

}

private applyShadow(): void {

this.applyShadowDepthRTT();

this.applyBuildDepthOccVRTT();

this.applyBuildDepthOccHRTT();

this.buildShadowReceiveEntity();

}

private mouseDown = (evt: MouseEvent): void => {

this.mFlag++;

if (this.mDebug) {

if (this.mFlag == 0) {

this.applyShadowDepthRTT();

} else if (this.mFlag == 1) {

this.applyBuildDepthOccVRTT();

} else if (this.mFlag == 2) {

this.applyBuildDepthOccHRTT();

} else if (this.mFlag == 3) {

this.buildShadowReceiveEntity();

}

}

};

run(): void {

this.mRscene.run();

}

}
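A note on `buildShadowReceiveEntity`: the scale (0.5, -0.5, 0.5) and translation (0.5, 0.5, 0.5) appended to the shadow camera's view-projection matrix appear to remap normalized device coordinates from [-1, 1] into the [0, 1] range used for texture lookups, flipping y on the way, and the same 0.5 * z + 0.5 mapping is what the depth-encoding shader writes. The TypeScript sketch below shows that remap and the frustum test from `getVSMShadow`. It assumes w = 1, which holds for the orthographic shadow camera in the example (`perspective: false`); `ndcToShadowCoord` and `inShadowFrustum` are hypothetical names.

```typescript
type Vec3 = [number, number, number];

// Scale by (0.5, -0.5, 0.5), then translate by (0.5, 0.5, 0.5).
function ndcToShadowCoord(ndc: Vec3): Vec3 {
  return [0.5 * ndc[0] + 0.5, -0.5 * ndc[1] + 0.5, 0.5 * ndc[2] + 0.5];
}

// Mirrors the frustum test: uv inside [0, 1] and depth not beyond 1.
function inShadowFrustum(c: Vec3): boolean {
  const inside = c[0] >= 0.0 && c[0] <= 1.0 && c[1] >= 0.0 && c[1] <= 1.0;
  return inside && c[2] <= 1.0;
}

// The top-left corner of the shadow camera's view maps to uv (0, 0).
const corner = ndcToShadowCoord([-1, 1, 0]);
console.log(corner, inShadowFrustum(corner));

// A point outside the shadow camera's view is treated as fully lit by the shader.
const outside = ndcToShadowCoord([1.4, 0, 0]);
console.log(outside, inShadowFrustum(outside));
```

Fragments that fail this test get `shadow = 1.0` in the shader, so geometry outside the shadow camera's view is never darkened.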