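Table of contents

- Basic steps
- Example
- Generating the 1st decision tree
- Generating the 2nd decision tree
- Generating the T-th decision tree
- Weighted voting
- sklearn implementation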

Basic steps

First, initialize the weight distribution $D_1$ over the training data. Given $m$ training samples, every sample starts with the same weight $w_{1i} = \frac{1}{m}$, so the initial weight distribution $D_1$ of the training set is:

$$D_1 = w_1 = (w_{11}, \cdots, w_{1m}) = \left(\frac{1}{m}, \cdots, \frac{1}{m}\right)$$

Then iterate for $t = 1, \cdots, T$:

Select the weak classifier $h_t: X \rightarrow \{-1, 1\}$ with the lowest error under the current distribution as the $t$-th base classifier, and compute its classification error rate on the distribution $D_t$:

$$\epsilon_t = P(h_t(x_i) \neq y_i) = \sum_{i=1}^{m} w_{ti} \, I(h_t(x_i) \neq y_i)$$

where

$$I(h_t(x_i) \neq y_i) = \begin{cases} 1 & h_t(x_i) \neq y_i \\ 0 & h_t(x_i) = y_i \end{cases}$$

The classification error rate should satisfy $0 < \epsilon_t < 0.5$.
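The weighted error rate is simply the total weight of the misclassified samples. A minimal sketch, assuming plain Python lists; the function name `weighted_error` and the four-sample toy data are illustrative and not from the original article:

```python
def weighted_error(weights, y_true, y_pred):
    """Sum the weights of the samples whose prediction differs from the label."""
    return sum(w for w, y, p in zip(weights, y_true, y_pred) if y != p)


# Hypothetical toy data: four samples with uniform weights, one mistake (third sample).
weights = [0.25, 0.25, 0.25, 0.25]
y_true = [1, -1, 1, -1]
y_pred = [1, -1, -1, -1]

eps = weighted_error(weights, y_true, y_pred)
print(eps)  # 0.25
```

With uniform weights this reduces to the ordinary misclassification rate; once the weights are skewed, mistakes on heavily weighted samples cost more.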

The weight coefficient of the $t$-th weak classifier $h_t$ is:

$$\alpha_t = \frac{1}{2} \log\left(\frac{1 - \epsilon_t}{\epsilon_t}\right)$$
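To get a feel for how the coefficient reacts to the error rate, the formula can be evaluated for a few values. This is a sketch that assumes the natural logarithm, which is the usual convention for AdaBoost; the function name `classifier_weight` is illustrative:

```python
import math


def classifier_weight(eps):
    """alpha = 0.5 * ln((1 - eps) / eps), defined for 0 < eps < 0.5."""
    if not 0.0 < eps < 0.5:
        raise ValueError("eps must satisfy 0 < eps < 0.5")
    return 0.5 * math.log((1.0 - eps) / eps)


# The smaller the error rate, the larger the say of the weak classifier.
for eps in (0.1, 0.3, 0.45):
    print(eps, round(classifier_weight(eps), 4))
```

As the error rate approaches 0.5 the coefficient approaches 0, so a classifier that is barely better than guessing contributes almost nothing to the final vote.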

Next, compute the new weights $w_{t+1} = (w_{t+1,1}, \cdots, w_{t+1,m})$, where:

$$w_{t+1,i} = w_{ti} \, e^{-\alpha_t y_i h_t(x_i)} = \begin{cases} w_{ti} \, e^{\alpha_t} & h_t(x_i) \neq y_i \\ w_{ti} \, e^{-\alpha_t} & h_t(x_i) = y_i \end{cases}$$

Normalize the new weights, where $Z_t = \sum_{i=1}^{m} w_{t+1,i}$ is the normalization constant, to obtain the weight distribution $D_{t+1}$ of the training samples:

$$D_{t+1} = \frac{w_{t+1}}{Z_t}$$

The whole update can be written compactly as:

$$\begin{aligned} D_{t+1}(i) &= \frac{D_t(i)}{Z_t} \times \begin{cases} e^{\alpha_t} & h_t(x_i) \neq y_i \\ e^{-\alpha_t} & h_t(x_i) = y_i \end{cases} \\ &= \frac{D_t(i) \, e^{-\alpha_t y_i h_t(x_i)}}{Z_t} \end{aligned}$$
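The update and normalization steps fit in a few lines. The sketch below assumes labels and predictions in $\{-1, 1\}$ and a natural-log $\alpha_t$; the function name `update_distribution` and the toy data are illustrative:

```python
import math


def update_distribution(weights, y_true, y_pred, alpha):
    """Apply w * exp(-alpha * y * h(x)) to every sample, then divide by Z."""
    unnormalized = [w * math.exp(-alpha * y * p)
                    for w, y, p in zip(weights, y_true, y_pred)]
    z = sum(unnormalized)  # normalization constant Z_t
    return [w / z for w in unnormalized]


# Hypothetical toy data: uniform weights, the third sample is misclassified.
weights = [0.25, 0.25, 0.25, 0.25]
y_true = [1, -1, 1, -1]
y_pred = [1, -1, -1, -1]
alpha = 0.5 * math.log((1 - 0.25) / 0.25)  # error rate is 0.25

new_weights = update_distribution(weights, y_true, y_pred, alpha)
print([round(w, 4) for w in new_weights])
```

With the natural-log coefficient, the misclassified samples end up holding exactly half of the total weight after normalization, which is why the same weak classifier is no better than chance on the next distribution.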

The last step is the combination strategy. AdaBoost classification uses weighted voting, building a linear combination of the base classifiers:

$$f(x) = \sum_{t=1}^{T} \alpha_t h_t(x)$$

Applying the sign function to $f(x)$ gives the final strong classifier:

$$H(x) = \operatorname{sign}(f(x)) = \operatorname{sign}\left(\sum_{t=1}^{T} \alpha_t h_t(x)\right)$$
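Putting the steps together, one possible end-to-end sketch looks like this. It is not the article's implementation: it assumes decision stumps (single-threshold trees) as weak classifiers, searches them exhaustively, uses the natural logarithm, and stops early when $0 < \epsilon_t < 0.5$ no longer holds. All function names are illustrative, and the data is the ten-sample set used in the example section.

```python
import math

X = [[0, 0], [0.5, 0.9], [1, 1.2], [1.2, 0.7], [1.4, 0.6],
     [1.6, 0.2], [1.7, 0.4], [2, 0], [2.2, 0.1], [2.5, 1]]
y = [1, 1, -1, -1, 1, -1, 1, 1, -1, -1]


def stump_predict(x, feature, threshold, polarity):
    """Return polarity if x[feature] <= threshold, otherwise -polarity."""
    return polarity if x[feature] <= threshold else -polarity


def best_stump(X, y, D):
    """Search all (feature, threshold, polarity) stumps for the lowest weighted error."""
    best = None
    for feature in range(len(X[0])):
        for threshold in sorted({row[feature] for row in X}):
            for polarity in (1, -1):
                err = sum(d for row, label, d in zip(X, y, D)
                          if stump_predict(row, feature, threshold, polarity) != label)
                if best is None or err < best[0]:
                    best = (err, feature, threshold, polarity)
    return best


def adaboost_fit(X, y, T):
    """Run up to T rounds; return a list of (alpha, feature, threshold, polarity)."""
    m = len(X)
    D = [1.0 / m] * m  # D_1: uniform weights
    model = []
    for _ in range(T):
        eps, feature, threshold, polarity = best_stump(X, y, D)
        if eps <= 0.0 or eps >= 0.5:  # assumption: stop when 0 < eps < 0.5 fails
            break
        alpha = 0.5 * math.log((1.0 - eps) / eps)
        model.append((alpha, feature, threshold, polarity))
        w = [d * math.exp(-alpha * label * stump_predict(row, feature, threshold, polarity))
             for row, label, d in zip(X, y, D)]
        Z = sum(w)
        D = [wi / Z for wi in w]
    return model


def adaboost_predict(model, x):
    """H(x) = sign(sum_t alpha_t * h_t(x)); a zero score is mapped to +1 here."""
    score = sum(a * stump_predict(x, f, t, p) for a, f, t, p in model)
    return 1 if score >= 0 else -1


model = adaboost_fit(X, y, T=10)
predictions = [adaboost_predict(model, row) for row in X]
accuracy = sum(p == label for p, label in zip(predictions, y)) / len(y)
print(len(model), accuracy)
```

The exhaustive stump search is only practical for tiny data sets like this one; it is used here to keep the sketch dependency-free.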

Example

Consider the following classification data set:

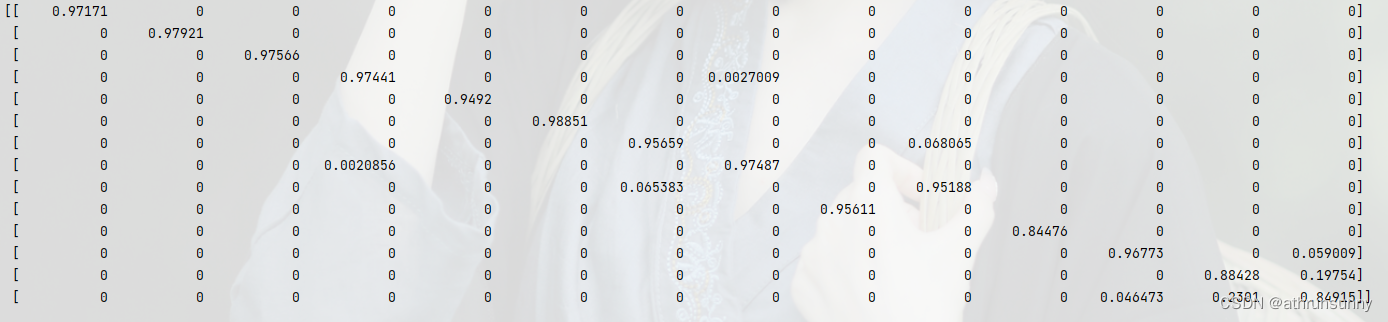

| No. | $X_1$ | $X_2$ | $Y$ |

|---|---|---|---|

| 1 | 0 | 0 | 1 |

| 2 | 0.5 | 0.9 | 1 |

| 3 | 1 | 1.2 | -1 |

| 4 | 1.2 | 0.7 | -1 |

| 5 | 1.4 | 0.6 | 1 |

| 6 | 1.6 | 0.2 | -1 |

| 7 | 1.7 | 0.4 | 1 |

| 8 | 2 | 0 | 1 |

| 9 | 2.2 | 0.1 | -1 |

| 10 | 2.5 | 1 | -1 |

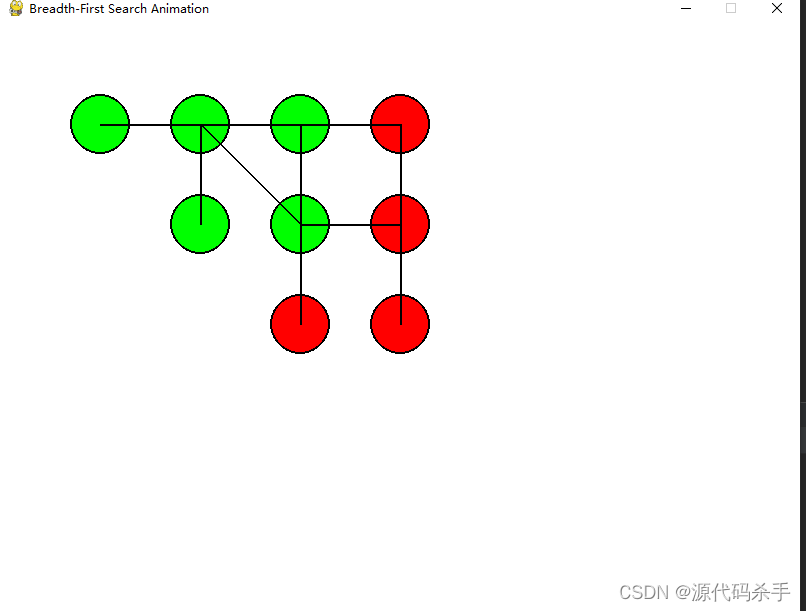

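Generating the 1st decision tree

(Randomly) choose the condition $x_2 \le 0.65$ to generate the 1st decision tree: it predicts $\hat y = 1$ when the condition holds and $\hat y = -1$ otherwise.

Under the distribution $D_1 = (0.1, \cdots, 0.1)^T$ the classification error rate is $\epsilon_1 = 0.3$, which gives the weight coefficient $\alpha_1$:

$$\alpha_1 = \frac{1}{2} \log\left(\frac{1 - \epsilon_1}{\epsilon_1}\right) = 0.184$$

Note that 0.184 corresponds to a base-10 logarithm; with the natural logarithm the same formula gives $\alpha_1 \approx 0.424$. The rest of this example keeps the value 0.184.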

Then compute the new weights $w_2 = (w_{2,1}, \cdots, w_{2,10})$, where:

$$w_{2,i} = \begin{cases} w_{1i} \, e^{\alpha_1} & \text{if } y_i \neq \hat y_i \\ w_{1i} \, e^{-\alpha_1} & \text{if } y_i = \hat y_i \end{cases}$$

Normalizing the new weights gives the weight distribution $D_2$:

| $X_1$ | $X_2$ | $Y$ | $\hat Y$ | $D_1$ | $w_2$ | $D_2$ |

|---|---|---|---|---|---|---|

| 0 | 0 | 1 | 1 | 0.1 | 0.083 | 0.088 |

| 0.5 | 0.9 | 1 | -1 | 0.1 | 0.12 | 0.128 |

| 1 | 1.2 | -1 | -1 | 0.1 | 0.083 | 0.088 |

| 1.2 | 0.7 | -1 | -1 | 0.1 | 0.083 | 0.088 |

| 1.4 | 0.6 | 1 | 1 | 0.1 | 0.083 | 0.088 |

| 1.6 | 0.2 | -1 | 1 | 0.1 | 0.12 | 0.128 |

| 1.7 | 0.4 | 1 | 1 | 0.1 | 0.083 | 0.088 |

| 2 | 0 | 1 | 1 | 0.1 | 0.083 | 0.088 |

| 2.2 | 0.1 | -1 | 1 | 0.1 | 0.12 | 0.128 |

| 2.5 | 1 | -1 | -1 | 0.1 | 0.083 | 0.088 |
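The numbers in this table can be re-derived with a few lines of Python. This is a sketch under the assumption that tree 1 predicts $+1$ when $x_2 \le 0.65$ and $-1$ otherwise, as the $\hat Y$ column suggests; it uses the base-10 logarithm to match the value 0.184 and also computes the natural-log variant for comparison. Results agree with the table up to rounding of intermediate values.

```python
import math

X = [[0, 0], [0.5, 0.9], [1, 1.2], [1.2, 0.7], [1.4, 0.6],
     [1.6, 0.2], [1.7, 0.4], [2, 0], [2.2, 0.1], [2.5, 1]]
y = [1, 1, -1, -1, 1, -1, 1, 1, -1, -1]
D1 = [0.1] * 10

# Tree 1: predict +1 when x2 <= 0.65, otherwise -1.
y_hat = [1 if x2 <= 0.65 else -1 for _, x2 in X]

# Weighted error rate under D1.
eps1 = sum(d for d, label, pred in zip(D1, y, y_hat) if label != pred)

alpha1_log10 = 0.5 * math.log10((1 - eps1) / eps1)  # the value used in this example
alpha1_ln = 0.5 * math.log((1 - eps1) / eps1)       # natural-log variant

# Unnormalized weights w2, then the normalized distribution D2.
w2 = [d * math.exp(-alpha1_log10 * label * pred)
      for d, label, pred in zip(D1, y, y_hat)]
Z1 = sum(w2)
D2 = [w / Z1 for w in w2]

print(round(eps1, 3), round(alpha1_log10, 3), round(alpha1_ln, 3))
print([round(w, 3) for w in w2])
print([round(d, 3) for d in D2])
```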

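Generating the 2nd decision tree

Randomly choose the condition $x_1 \le 1.5$ to generate the 2nd decision tree: it predicts $\hat y = 1$ when the condition holds and $\hat y = -1$ otherwise.

Under the distribution $D_2 = (0.088, 0.128, \cdots, 0.088)^T$ the classification error rate is $\epsilon_2 = 0.352$, which gives the weight coefficient $\alpha_2$:

$$\alpha_2 = \frac{1}{2} \log\left(\frac{1 - \epsilon_2}{\epsilon_2}\right) = 0.133$$

As before, 0.133 corresponds to a base-10 logarithm; the natural logarithm would give $\alpha_2 \approx 0.305$.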

Then compute the new weights $w_3$ and normalize them to obtain the weight distribution $D_3$:

| $X_1$ | $X_2$ | $Y$ | $\hat Y$ | $D_2$ | $w_3$ | $D_3$ |

|---|---|---|---|---|---|---|

| 0 | 0 | 1 | 1 | 0.088 | 0.077 | 0.079 |

| 0.5 | 0.9 | 1 | 1 | 0.128 | 0.112 | 0.115 |

| 1 | 1.2 | -1 | 1 | 0.088 | 0.101 | 0.104 |

| 1.2 | 0.7 | -1 | 1 | 0.088 | 0.101 | 0.104 |

| 1.4 | 0.6 | 1 | 1 | 0.088 | 0.077 | 0.079 |

| 1.6 | 0.2 | -1 | -1 | 0.128 | 0.112 | 0.115 |

| 1.7 | 0.4 | 1 | -1 | 0.088 | 0.101 | 0.104 |

| 2 | 0 | 1 | -1 | 0.088 | 0.101 | 0.104 |

| 2.2 | 0.1 | -1 | -1 | 0.128 | 0.112 | 0.115 |

| 2.5 | 1 | -1 | -1 | 0.088 | 0.077 | 0.079 |
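The second round follows the same recipe, so it can be wrapped in a small helper. The sketch below is illustrative: the name `boost_round` is made up, the logarithm defaults to base 10 to match the figures in this example, and $D_2$ is taken from the rounded table values, so the results match the table only up to rounding.

```python
import math


def boost_round(D, y, y_hat, log=math.log10):
    """One boosting round: return (eps, alpha, unnormalized weights, new distribution)."""
    eps = sum(d for d, label, pred in zip(D, y, y_hat) if label != pred)
    alpha = 0.5 * log((1 - eps) / eps)
    w = [d * math.exp(-alpha * label * pred) for d, label, pred in zip(D, y, y_hat)]
    Z = sum(w)
    return eps, alpha, w, [wi / Z for wi in w]


x1 = [0, 0.5, 1, 1.2, 1.4, 1.6, 1.7, 2, 2.2, 2.5]
y = [1, 1, -1, -1, 1, -1, 1, 1, -1, -1]
D2 = [0.088, 0.128, 0.088, 0.088, 0.088, 0.128, 0.088, 0.088, 0.128, 0.088]

# Tree 2: predict +1 when x1 <= 1.5, otherwise -1.
y_hat = [1 if v <= 1.5 else -1 for v in x1]

eps2, alpha2, w3, D3 = boost_round(D2, y, y_hat)
print(round(eps2, 3), round(alpha2, 3))
print([round(w, 3) for w in w3])
print([round(d, 3) for d in D3])
```

Passing `log=math.log` instead switches the helper to the natural-log convention.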

Generating the T-th decision tree

Repeat this procedure until $T$ decision trees have been generated.

Weighted voting

The ensemble classifier is obtained by weighted voting:

$$\begin{aligned} F(x) &= \alpha_1 Tree_1 + \alpha_2 Tree_2 + \cdots + \alpha_T Tree_T \\ &= 0.184 \, I(X_2 \le 0.65) + 0.133 \, I(X_1 \le 1.5) + \cdots + \alpha_T Tree_T \end{aligned}$$

Here $I(\cdot)$ denotes the output of the corresponding tree, which per the $\hat Y$ columns above is $+1$ when the condition holds and $-1$ otherwise; the predicted class is the sign of $F(x)$.
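As a quick check, the vote can be evaluated with only the two trees built so far. This is a sketch, assuming each tree outputs $+1$ when its condition holds and $-1$ otherwise, and mapping a zero score to $+1$; the function names are illustrative:

```python
X = [[0, 0], [0.5, 0.9], [1, 1.2], [1.2, 0.7], [1.4, 0.6],
     [1.6, 0.2], [1.7, 0.4], [2, 0], [2.2, 0.1], [2.5, 1]]
y = [1, 1, -1, -1, 1, -1, 1, 1, -1, -1]
alphas = [0.184, 0.133]  # coefficients from the two rounds above


def tree_1(x):
    """+1 when x2 <= 0.65, otherwise -1."""
    return 1 if x[1] <= 0.65 else -1


def tree_2(x):
    """+1 when x1 <= 1.5, otherwise -1."""
    return 1 if x[0] <= 1.5 else -1


def F(x):
    """Weighted vote of the first two trees."""
    return alphas[0] * tree_1(x) + alphas[1] * tree_2(x)


def H(x):
    """Final class: the sign of the weighted vote."""
    return 1 if F(x) >= 0 else -1


predictions = [H(x) for x in X]
accuracy = sum(p == label for p, label in zip(predictions, y)) / len(y)
print(predictions, accuracy)
```

Because $\alpha_1 > \alpha_2$, the sign of the two-tree vote always follows tree 1, so the training accuracy is still 0.7 at this point; further trees are needed before the vote can overrule it.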

sklearn implementation

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.tree import DecisionTreeRegressor
from sklearn.ensemble import AdaBoostRegressor

# Create the dataset (the ten samples from the example above)
X = np.array([[0, 0], [0.5, 0.9], [1, 1.2], [1.2, 0.7], [1.4, 0.6],
              [1.6, 0.2], [1.7, 0.4], [2, 0], [2.2, 0.1], [2.5, 1]])
y = np.array([1, 1, -1, -1, 1, -1, 1, 1, -1, -1])

# Fit a single regression tree and an AdaBoost ensemble of such trees.
# Note: regressors are fitted to the +1/-1 labels here, so score() is R^2, not accuracy.
regr_1 = DecisionTreeRegressor(max_depth=3)
regr_2 = AdaBoostRegressor(regr_1, n_estimators=10, random_state=20)
regr_1.fit(X, y)
regr_2.fit(X, y)

# Score on the training data
score_1 = regr_1.score(X, y)
score_2 = regr_2.score(X, y)
print("Decision Tree score : %f" % score_1)
print("AdaBoost score : %f" % score_2)

# Predict
y_1 = regr_1.predict(X)
y_2 = regr_2.predict(X)

# Plot the results against the sample index
x = range(10)
plt.figure()
plt.scatter(x, y, c="k", label="training samples")
plt.plot(x, y_1, c="g", label="n_estimators=1", linewidth=2)
plt.plot(x, y_2, c="r", label="n_estimators=10", linewidth=2)
plt.xlabel("data")
plt.ylabel("target")
plt.title("Boosted Decision Tree Regression")
plt.legend()
plt.show()

# Output:
# Decision Tree score : 0.733333
# AdaBoost score : 1.000000
```
