Kafka consumer

多线程消费

kafka 消费者对象 - KafkaConsumer是非线程安全的。与KafkaProducer不同,KafkaProducer是线程安全的,因为开发者可以在多个线程中放心地使用同一个KafkaProducer实例。

但是对于消费者而言,由于它是非线程安全的,因此用户无法直接在多个线程中直接共享同一个KafkaConsumer实例。对应kafka 多线程消费给出两种解决方案:

-

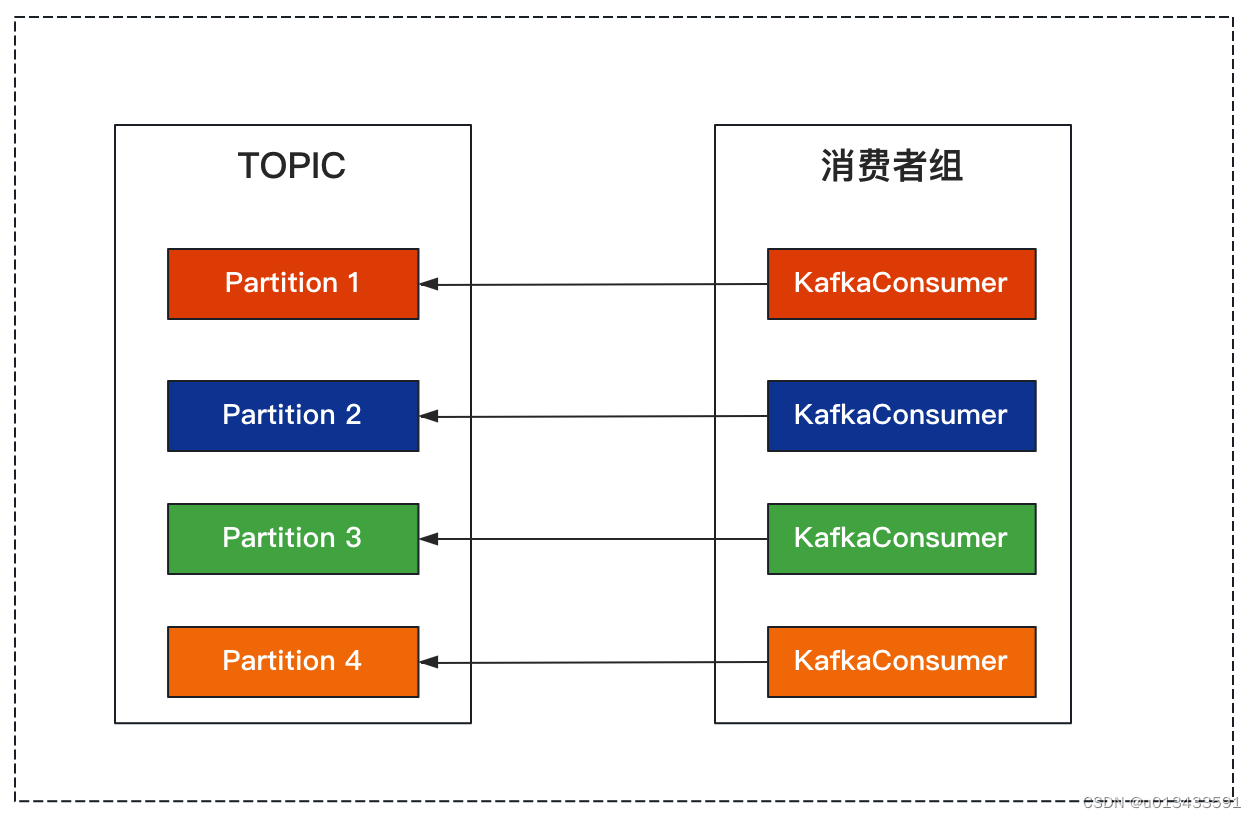

每个线程维护一个KafkaConsumer,每个KafkaConsumer消费一个topic分区

-

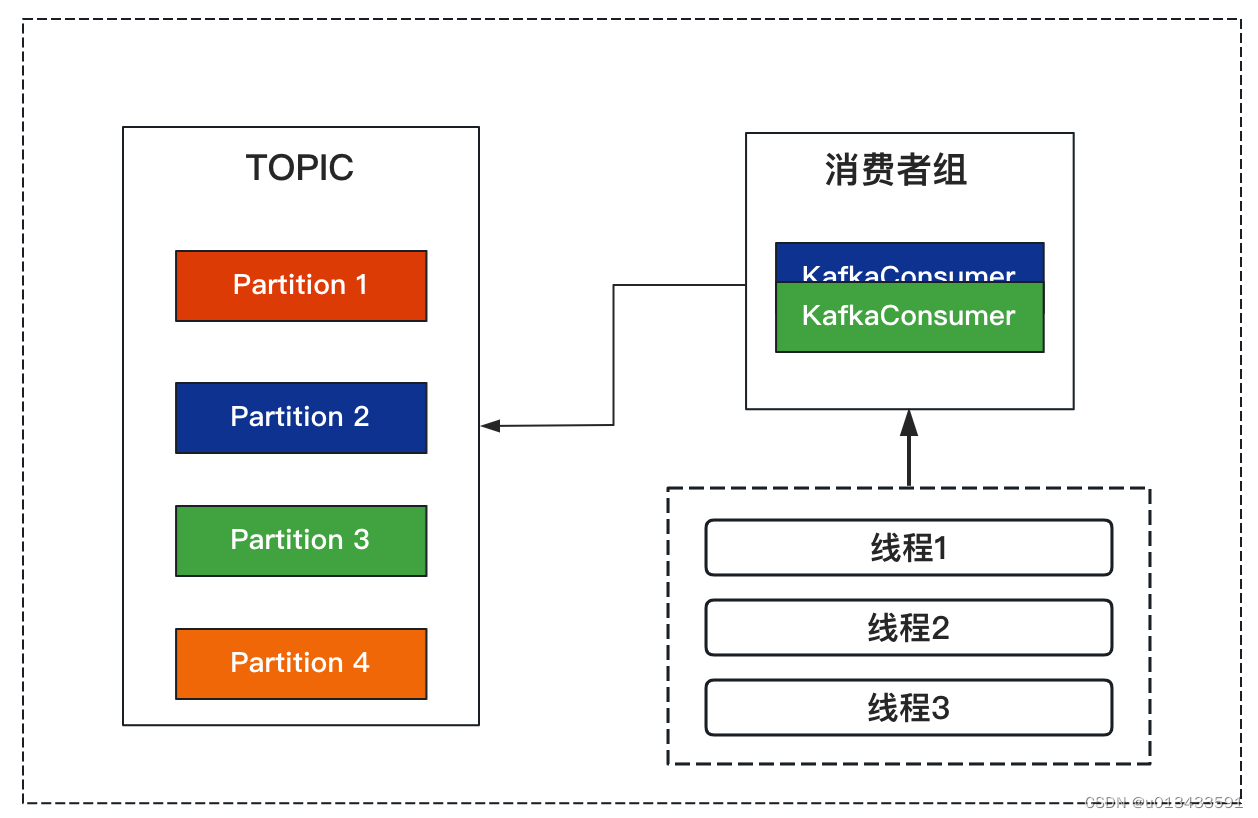

单个KafkaConsumer实例统一拉取数据,交给多个worker线程进行处理

多Consumer

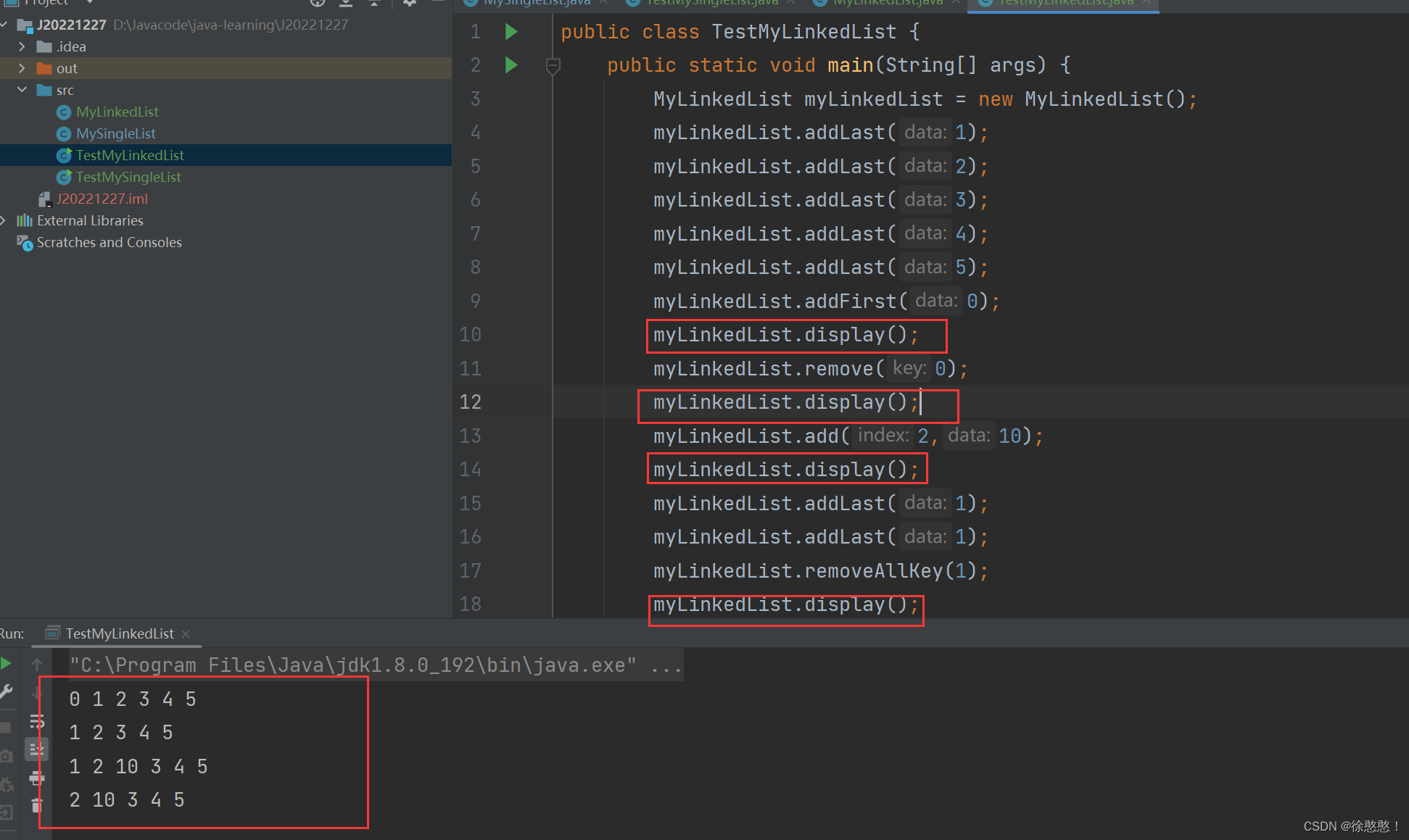

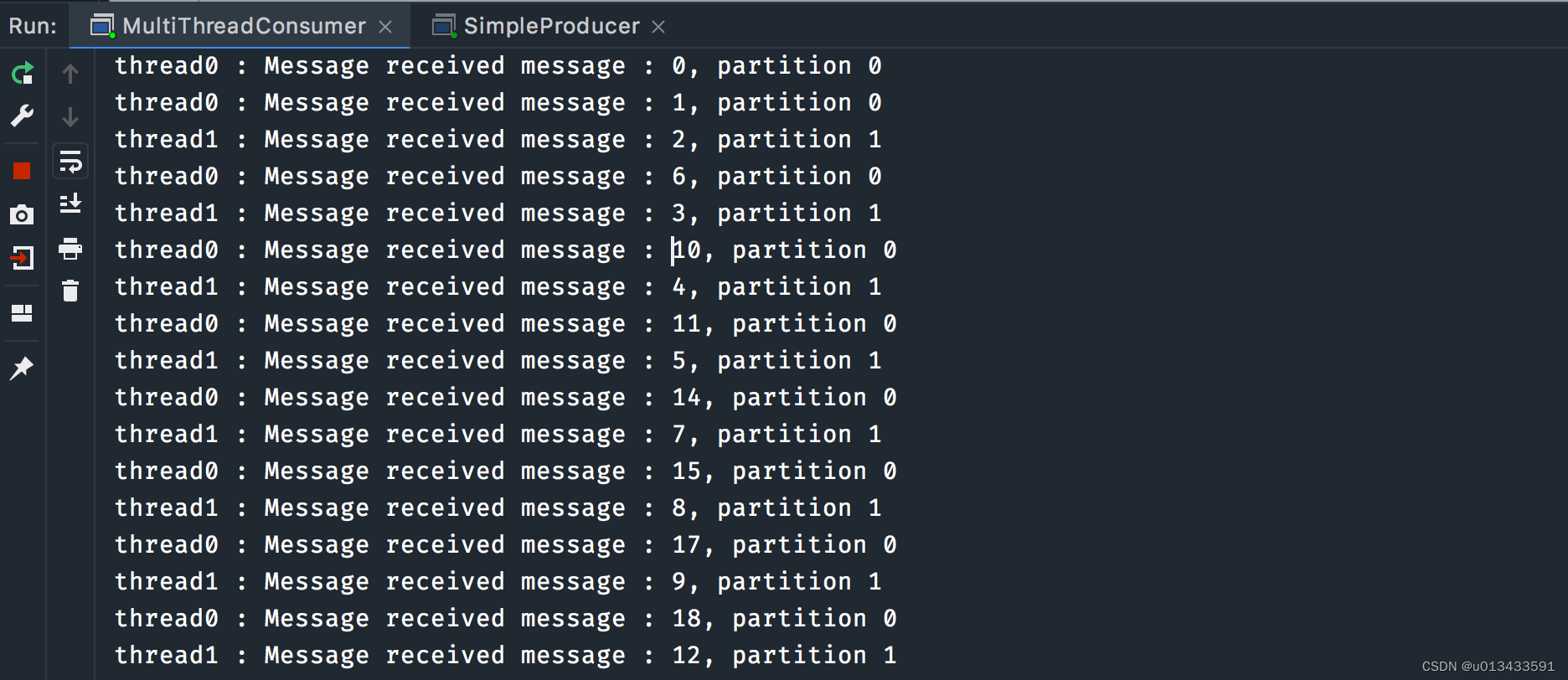

- 程序代码

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.clients.consumer.ConsumerRecords;

import org.apache.kafka.clients.consumer.KafkaConsumer;

import org.apache.kafka.common.serialization.StringDeserializer;

import java.time.Duration;

import java.util.Arrays;

import java.util.Properties;

public class MultiThreadConsumer {

public static void main(String[] args) {

String brokers = "localhost:9092";

String topic = "topic_t40";

String groupId = "app_q";

int consumers = 2;

for(int i = 0;i < consumers;i++){

final ConsumerRunnable consumer = new ConsumerRunnable(brokers, groupId, topic, "thread" + i);

new Thread(consumer).start();

}

}

static class ConsumerRunnable implements Runnable{

private final KafkaConsumer<String,String> consumer;

private volatile boolean isRunning = true;

private String threadName ;

public ConsumerRunnable(String brokers,String groupId,String topic,String threadName) {

Properties props = new Properties();

props.put("bootstrap.servers", brokers);

props.put(ConsumerConfig.GROUP_ID_CONFIG, groupId);

props.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "latest");

props.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());

props.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());

consumer = new KafkaConsumer<>(props);

consumer.subscribe(Arrays.asList(topic));

this.threadName = threadName;

}

@Override

public void run() {

try {

while (isRunning) {

ConsumerRecords<String, String> records = consumer.poll(Duration.ofSeconds(1));

records.forEach(record -> {

System.out.println(this.threadName + " : Message received " + record.value() + ", partition " + record.partition());

});

}

}finally {

consumer.close();

}

}

}

}

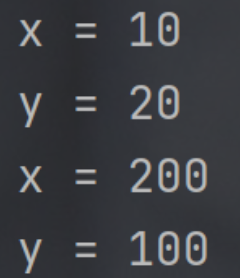

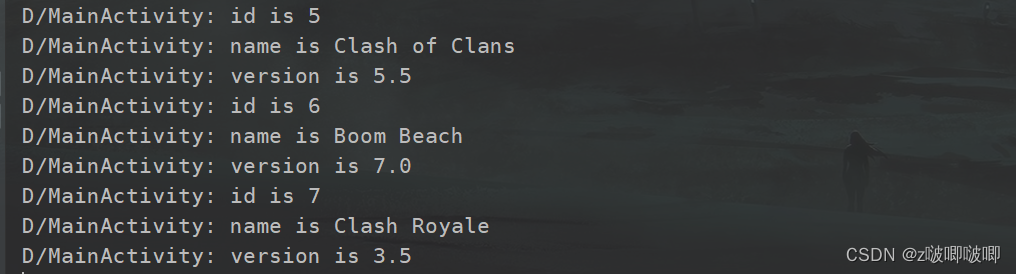

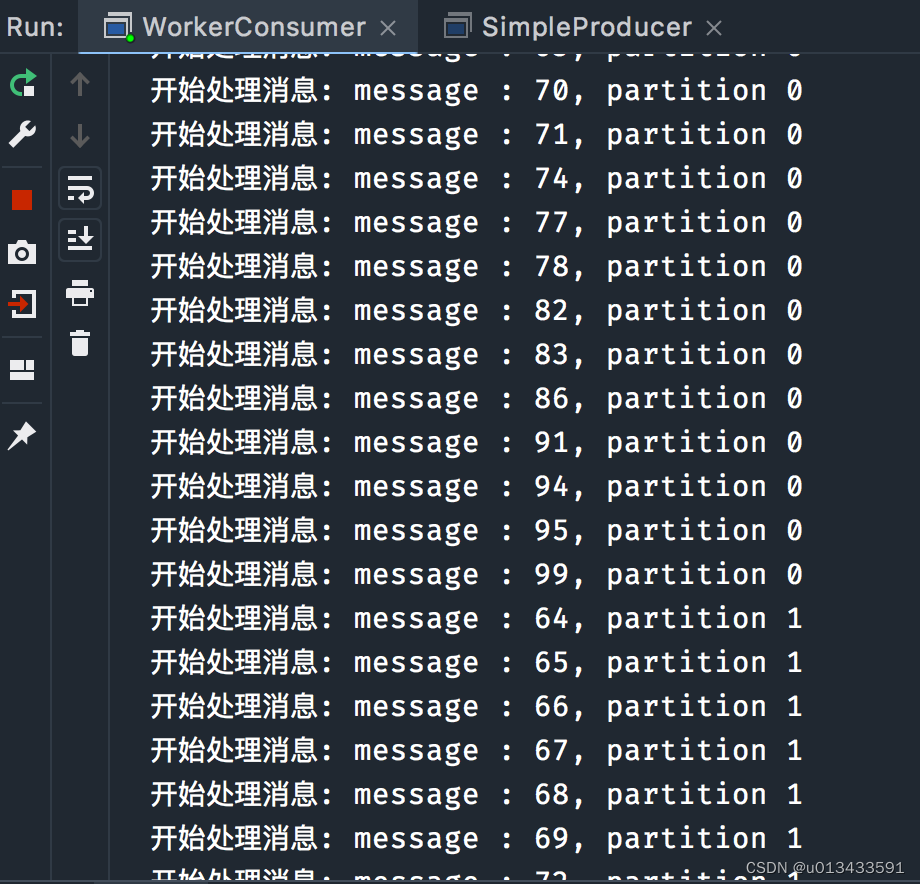

- 测试结果

多Work线程

-

程序代码

public class WorkerConsumer { private static ExecutorService executor = Executors.newFixedThreadPool(100); public static void main(String[] args){ String topicName = "topic_t40"; Properties props = new Properties(); props.put("bootstrap.servers", "localhost:9092"); props.put(ConsumerConfig.GROUP_ID_CONFIG, "app_w"); props.put("client.id", "client_02"); props.put("enable.auto.commit", true); props.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "latest"); props.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName()); props.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName()); KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props); consumer.subscribe(Arrays.asList(topicName)); try { while (true) { ConsumerRecords<String, String> records = consumer.poll(Duration.ofSeconds(1)); if(!records.isEmpty()){ executor.execute(new MessageHandler(records)); } } }finally { consumer.close(); } } static class MessageHandler implements Runnable{ private ConsumerRecords<String, String> records; public MessageHandler(ConsumerRecords<String, String> records) { this.records = records; } @Override public void run() { records.forEach(record -> { System.out.println(" 开始处理消息: " + record.value() + ", partition " + record.partition()); }); } } } -

测试结果

方法对比

多Consumer

- 优势 - 实现简单;速度较快 无线程切换,方便位移管理,易于维护分区间消息消费顺序

- 缺点 - socket连接大;consumer的数量受限于topic的分区数,扩展性差;

多Work线程

- 优势 - 消息获取与消息处理解耦;可独立扩展消费者数量和工作线程数量,伸缩性好

- 缺点 - 难以维护分区消息处理的有序性,位移管理困难

__consumer_offsets

之前提到过,消费者通过拉取模式从broker中拉取数据,每次消费成功后,消费者记录自身消费位移,当服务重启后,默认从最后的位移位置开始拉取最新的数据。那么消费者是如何记录自身的位移的呢?

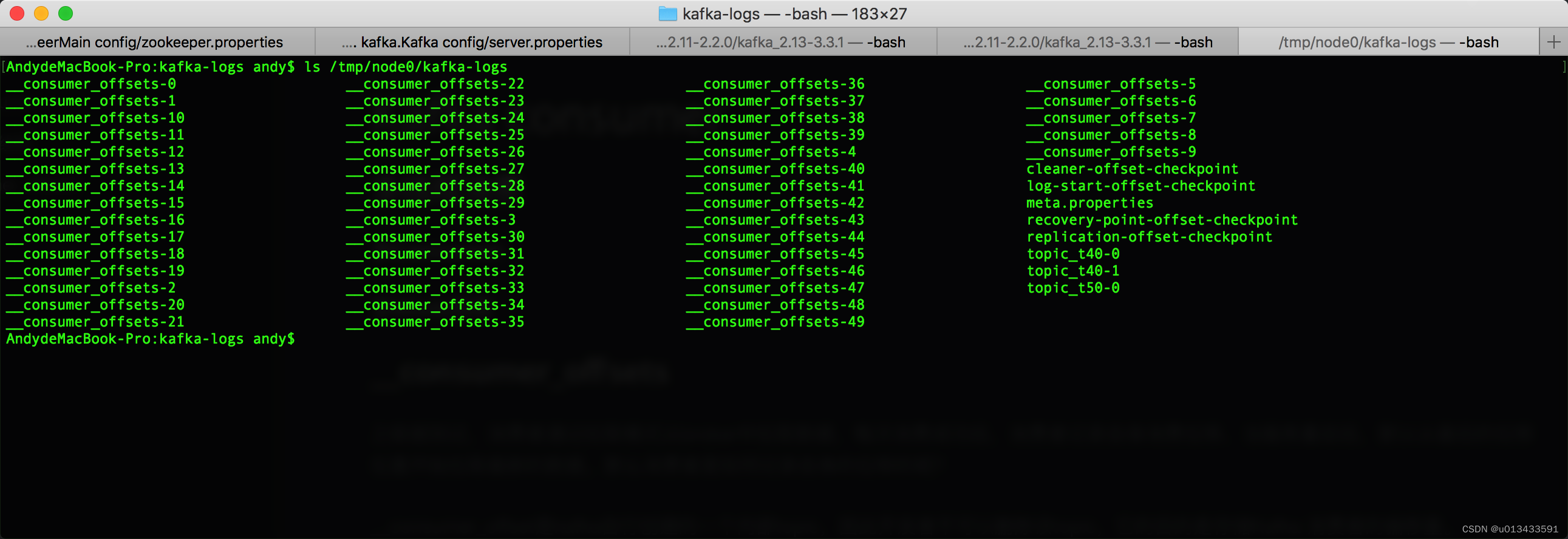

__consumer_offset是kafka自行创建的一个内部topic,因此开发者不可以删除该topic,它的目的是存储Kafka 消费者的偏移量。consumer_offset是管理所有消费者的偏移量的一个主题。

# 查看kafka配置文件日志路径

more config/server.properties | grep log.dirs

log.dirs=/tmp/node0/kafka-logs

在kafka的日志目录中,可以看到由**__consumer_offsets**开头的带数字序号的50个文件夹,表示该topic有50个分区。进入任意文件夹,发现他跟正常的topic文件差不多,里面至少包含了2个index索引文件,一个日志文件

ls -ll /tmp/node0/kafka-logs/__consumer_offsets-1

total 16

-rw-r--r-- 1 andy wheel 10485760 12 27 21:07 00000000000000000000.index

-rw-r--r-- 1 andy wheel 0 12 26 19:49 00000000000000000000.log

-rw-r--r-- 1 andy wheel 10485756 12 27 21:07 00000000000000000000.timeindex

-rw-r--r-- 1 andy wheel 8 12 27 21:07 leader-epoch-checkpoint

-rw-r--r-- 1 andy wheel 43 12 26 19:49 partition.metadata00000000000000000000.log 00000000000000000000.timeindex leader-epoch-checkpoint partition.metadata

当多个consumer 或 consumer group需要同时提交位移信息时,kafka会根据每个消费者的group.id 做hash取模运算,从而将位移数据负载到不同的分区上。

订阅主题

kafka 消费者处理支持常规的topic列表进行订阅之外,还支持基于正则表达式订阅topic,代码实现分别如下:

- Topic列表订阅

consumer.subscribe(Arrays.asList("hello","world"));

- 基于正则表达是订阅

consumer.subscribe(Pattern.compile("topic_*"));